[k8s] kubeadm: labs.play-with-k8s.com, a free Kubernetes lab platform for testing k8s online

Kubernetes lab

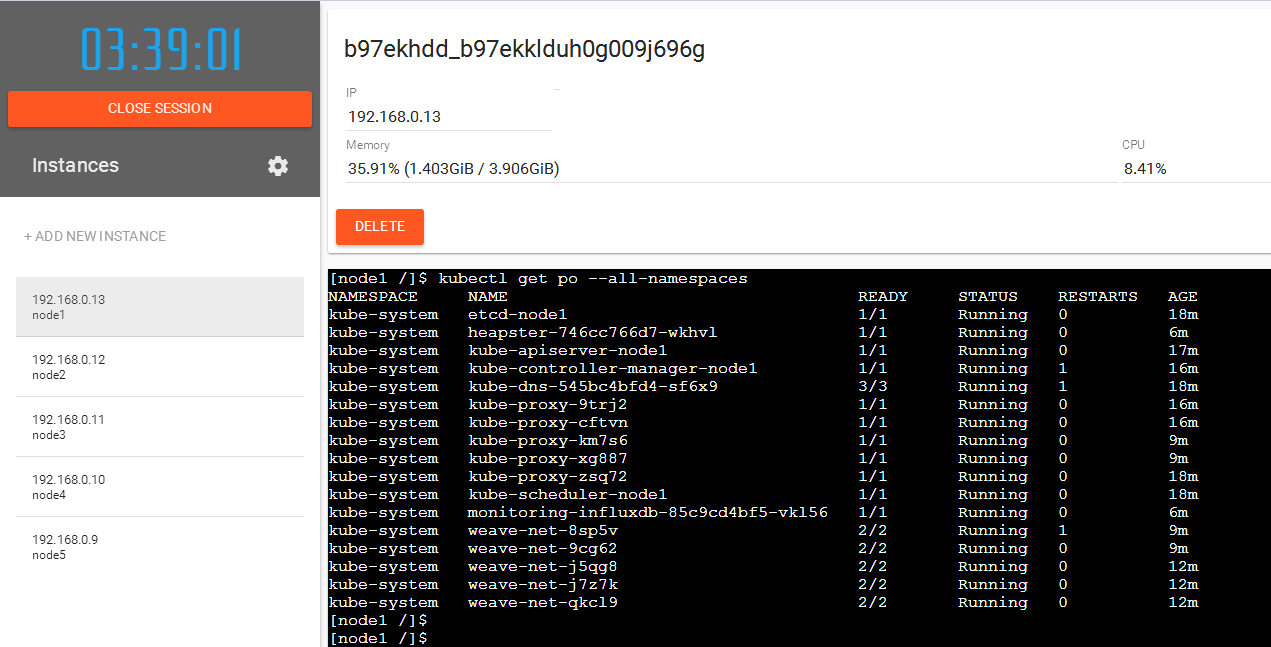

Features of labs.play-with-k8s.com

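- It lets you log in with GitHub or Docker Hub.

- After you log in, a countdown starts and you get 4 hours of practice time.

- It deploys the cluster with kubeadm (and has you use the Weave network).

- It provides 5 CentOS 7 (7.4.1708) machines with a 4.x kernel (4.4.0-101-generic) and Docker 17 (17.09.0-ce).

- Each machine has 4 cores and 32 GB of memory.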

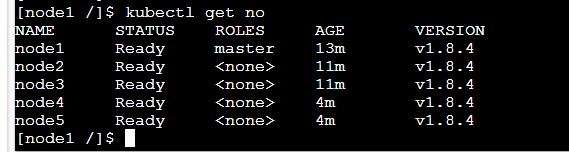

Building a 5-node kubeadm cluster

Just follow the on-screen prompts.

It has you use kubeadm:

1. Initialize the cluster master node:

```
kubeadm init --apiserver-advertise-address $(hostname -i)
```

It then has you deploy the Weave network; without it, nodes show NotReady after they join.

2. Initialize cluster networking:

```
kubectl apply -n kube-system -f \
    "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"
```

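The `$(kubectl version | base64 | tr -d '\n')` part packs the whole `kubectl version` output into a single query parameter (`base64` wraps its output every 76 characters, and `tr` strips those line breaks), which lets the server tailor the manifest to your cluster version. Below is a minimal Python sketch of the same URL construction, assuming you already have the version text as a string; `weave_net_url` is a hypothetical helper, not part of any Weave tooling.

```python
import base64

WEAVE_BASE = "https://cloud.weave.works/k8s/net"


def weave_net_url(kubectl_version_output: str) -> str:
    """Mirror `kubectl version | base64 | tr -d '\\n'` and build the manifest URL."""
    # b64encode never inserts line breaks, so no `tr -d '\n'` step is needed.
    encoded = base64.b64encode(kubectl_version_output.encode("utf-8")).decode("ascii")
    # Like the shell version, the value is not URL-escaped; '+', '/' and '=' pass through.
    return f"{WEAVE_BASE}?k8s-version={encoded}"


if __name__ == "__main__":
    # Hypothetical sample; the real text comes from running `kubectl version`.
    sample = 'Client Version: version.Info{Major:"1", Minor:"8"}\n'
    print(weave_net_url(sample))
```

Note that the trailing newline printed by `kubectl version` ends up inside the encoded value in both versions.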
It also has you deploy nginx to try things out:

3. (Optional) Create an nginx deployment:

```
kubectl apply -f https://k8s.io/docs/user-guide//nginx-app.yaml
```

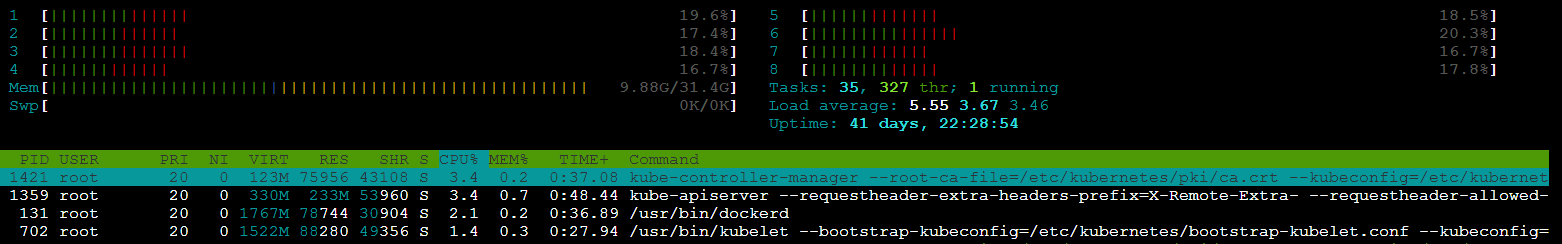

- How its resources are laid out

Startup parameters of the components

kube-apiserver

```
kube-apiserver \
  --requestheader-extra-headers-prefix=X-Remote-Extra- \
  --requestheader-allowed-names=front-proxy-client \
  --advertise-address=192.168.0.13 \
  --requestheader-username-headers=X-Remote-User \
  --service-account-key-file=/etc/kubernetes/pki/sa.pub \
  --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt \
  --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key \
  --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key \
  --admission-control=Initializers,NamespaceLifecycle,LimitRanger,ServiceAccount,PersistentVolumeLabel,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \
  --client-ca-file=/etc/kubernetes/pki/ca.crt \
  --tls-cert-file=/etc/kubernetes/pki/apiserver.crt \
  --tls-private-key-file=/etc/kubernetes/pki/apiserver.key \
  --secure-port=6443 \
  --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt \
  --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt \
  --insecure-port=0 \
  --allow-privileged=true \
  --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
  --requestheader-group-headers=X-Remote-Group \
  --service-cluster-ip-range=10.96.0.0/12 \
  --enable-bootstrap-token-auth=true \
  --authorization-mode=Node,RBAC \
  --etcd-servers=http://127.0.0.1:2379 \
  --token-auth-file=/etc/pki/tokens.csv
```
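To check a flag quickly (say, confirm that `--insecure-port=0` is set or that `NodeRestriction` is in the admission list), it helps to turn the flag list into a dict. A minimal Python sketch, assuming the arguments are already split into a list (for example from the manifest's `command` field); `parse_flags` is a hypothetical helper that only handles `--key=value` and bare boolean flags, not the space-separated `--key value` form.

```python
from typing import Dict, List


def parse_flags(args: List[str]) -> Dict[str, str]:
    """Map ['--key=value', '--bool-flag'] to {'key': 'value', 'bool-flag': 'true'}."""
    flags: Dict[str, str] = {}
    for arg in args:
        if not arg.startswith("--"):
            continue  # skip the binary name and anything that is not a flag
        key, sep, value = arg[2:].partition("=")  # split on the first '=' only
        flags[key] = value if sep else "true"  # bare flags such as --masquerade-all
    return flags


# A subset of the kube-apiserver flags listed above.
APISERVER_ARGS = [
    "kube-apiserver",
    "--insecure-port=0",
    "--secure-port=6443",
    "--authorization-mode=Node,RBAC",
    "--admission-control=Initializers,NamespaceLifecycle,LimitRanger,ServiceAccount,"
    "PersistentVolumeLabel,DefaultStorageClass,DefaultTolerationSeconds,"
    "NodeRestriction,ResourceQuota",
]

if __name__ == "__main__":
    flags = parse_flags(APISERVER_ARGS)
    print(flags["insecure-port"])
    print(flags["admission-control"].split(","))
```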

The etcd static Pod

```
[node1 kubernetes]$ cat manifests/etcd.yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    scheduler.alpha.kubernetes.io/critical-pod: ""
  creationTimestamp: null
  labels:
    component: etcd
    tier: control-plane
  name: etcd
  namespace: kube-system
spec:
  containers:
  - command:
    - etcd
    - --advertise-client-urls=http://127.0.0.1:2379
    - --data-dir=/var/lib/etcd
    - --listen-client-urls=http://127.0.0.1:2379
    image: gcr.io/google_containers/etcd-amd64:3.0.17
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 127.0.0.1
        path: /health
        port: 2379
        scheme: HTTP
      initialDelaySeconds: 15
      timeoutSeconds: 15
    name: etcd
    resources: {}
    volumeMounts:
    - mountPath: /var/lib/etcd
      name: etcd
  hostNetwork: true
  volumes:
  - hostPath:
      path: /var/lib/etcd
      type: DirectoryOrCreate
    name: etcd
status: {}
```
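etcd (like the three master components) is a static Pod: the kubelet reads it straight from `--pod-manifest-path=/etc/kubernetes/manifests` (see the kubelet flags further down) and creates a read-only mirror Pod in the API server named after the Pod with `-<node name>` appended, so on this node `kubectl -n kube-system get pods` should list `etcd-node1`. A small Python sketch that predicts those names from the manifest directory, assuming each file name matches the Pod's `metadata.name` (true for the kubeadm files here, but not something the kubelet requires); `expected_mirror_pods` is a hypothetical helper.

```python
import tempfile
from pathlib import Path
from typing import List


def expected_mirror_pods(manifest_dir: str, node_name: str) -> List[str]:
    """Predict mirror Pod names (<pod name>-<node name>) for a static Pod directory.

    Assumption: each manifest's file stem equals its metadata.name, as with the
    kubeadm-generated files in /etc/kubernetes/manifests.
    """
    return [f"{p.stem}-{node_name}" for p in sorted(Path(manifest_dir).glob("*.yaml"))]


if __name__ == "__main__":
    # Recreate the four manifest file names in a throwaway directory.
    with tempfile.TemporaryDirectory() as d:
        for name in ("etcd", "kube-apiserver", "kube-controller-manager", "kube-scheduler"):
            Path(d, name + ".yaml").write_text("kind: Pod\n")
        print(expected_mirror_pods(d, "node1"))
```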

YAML manifests of the three master components

```
cat manifests/kube-apiserver.yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    scheduler.alpha.kubernetes.io/critical-pod: ""
  creationTimestamp: null
  labels:
    component: kube-apiserver
    tier: control-plane
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-apiserver
    - --requestheader-extra-headers-prefix=X-Remote-Extra-
    - --requestheader-allowed-names=front-proxy-client
    - --advertise-address=192.168.0.13
    - --requestheader-username-headers=X-Remote-User
    - --service-account-key-file=/etc/kubernetes/pki/sa.pub
    - --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt
    - --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key
    - --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key
    - --admission-control=Initializers,NamespaceLifecycle,LimitRanger,ServiceAccount,PersistentVolumeLabel,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota
    - --client-ca-file=/etc/kubernetes/pki/ca.crt
    - --tls-cert-file=/etc/kubernetes/pki/apiserver.crt
    - --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
    - --secure-port=6443
    - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
    - --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt
    - --insecure-port=0
    - --allow-privileged=true
    - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
    - --requestheader-group-headers=X-Remote-Group
    - --service-cluster-ip-range=10.96.0.0/12
    - --enable-bootstrap-token-auth=true
    - --authorization-mode=Node,RBAC
    - --etcd-servers=http://127.0.0.1:2379
    - --token-auth-file=/etc/pki/tokens.csv
    image: gcr.io/google_containers/kube-apiserver-amd64:v1.8.6
    imagePullPolicy: IfNotPresent
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 127.0.0.1
        path: /healthz
        port: 6443
        scheme: HTTPS
      initialDelaySeconds: 15
      periodSeconds: 10
      successThreshold: 1
      timeoutSeconds: 15
    name: kube-apiserver
    resources:
      requests:
        cpu: 250m
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /etc/kubernetes/pki
      name: k8s-certs
      readOnly: true
    - mountPath: /etc/ssl/certs
      name: ca-certs
      readOnly: true
    - mountPath: /etc/pki
      name: ca-certs-etc-pki
      readOnly: true
  dnsPolicy: ClusterFirst
  hostNetwork: true
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  terminationGracePeriodSeconds: 30
  volumes:
  - hostPath:
      path: /etc/kubernetes/pki
      type: DirectoryOrCreate
    name: k8s-certs
  - hostPath:
      path: /etc/ssl/certs
      type: DirectoryOrCreate
    name: ca-certs
  - hostPath:
      path: /etc/pki
      type: DirectoryOrCreate
    name: ca-certs-etc-pki
status: {}
```
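Because `--insecure-port=0` turns off the plain-HTTP port, the liveness probe here targets `https://127.0.0.1:6443/healthz`, and the kubelet does not verify the serving certificate for HTTPS probes. A minimal Python sketch of a similar manual check, run on the master itself. It assumes anonymous requests to `/healthz` are allowed (the kubelet probe sends no credentials either) and treats status 200-399 as healthy, as the kubelet does. `probe_context` and `apiserver_healthy` are hypothetical names.

```python
import ssl
import urllib.request


def probe_context() -> ssl.SSLContext:
    """TLS context that, like a kubelet HTTPS probe, skips certificate verification."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False  # has to be turned off before CERT_NONE is allowed
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def apiserver_healthy(url: str = "https://127.0.0.1:6443/healthz", timeout: float = 15.0) -> bool:
    """True if the endpoint answers with a status in 200-399 (the kubelet's success range)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout, context=probe_context()) as resp:
            return 200 <= resp.status < 400
    except OSError:  # connection refused, TLS error, timeout, or HTTP 4xx/5xx (HTTPError)
        return False


if __name__ == "__main__":
    print(apiserver_healthy())
```

The 15-second default timeout simply mirrors `timeoutSeconds: 15` from the manifest.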

```
cat manifests/kube-controller-manager.yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    scheduler.alpha.kubernetes.io/critical-pod: ""
  creationTimestamp: null
  labels:
    component: kube-controller-manager
    tier: control-plane
  name: kube-controller-manager
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-controller-manager
    - --root-ca-file=/etc/kubernetes/pki/ca.crt
    - --kubeconfig=/etc/kubernetes/controller-manager.conf
    - --service-account-private-key-file=/etc/kubernetes/pki/sa.key
    - --cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt
    - --cluster-signing-key-file=/etc/kubernetes/pki/ca.key
    - --address=127.0.0.1
    - --leader-elect=true
    - --use-service-account-credentials=true
    - --controllers=*,bootstrapsigner,tokencleaner
    image: gcr.io/google_containers/kube-controller-manager-amd64:v1.8.6
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 127.0.0.1
        path: /healthz
        port: 10252
        scheme: HTTP
      initialDelaySeconds: 15
      timeoutSeconds: 15
    name: kube-controller-manager
    resources:
      requests:
        cpu: 200m
    volumeMounts:
    - mountPath: /etc/kubernetes/pki
      name: k8s-certs
      readOnly: true
    - mountPath: /etc/ssl/certs
      name: ca-certs
      readOnly: true
    - mountPath: /etc/kubernetes/controller-manager.conf
      name: kubeconfig
      readOnly: true
    - mountPath: /usr/libexec/kubernetes/kubelet-plugins/volume/exec
      name: flexvolume-dir
    - mountPath: /etc/pki
      name: ca-certs-etc-pki
      readOnly: true
  hostNetwork: true
  volumes:
  - hostPath:
      path: /etc/kubernetes/pki
      type: DirectoryOrCreate
    name: k8s-certs
  - hostPath:
      path: /etc/ssl/certs
      type: DirectoryOrCreate
    name: ca-certs
  - hostPath:
      path: /etc/kubernetes/controller-manager.conf
      type: FileOrCreate
    name: kubeconfig
  - hostPath:
      path: /usr/libexec/kubernetes/kubelet-plugins/volume/exec
      type: DirectoryOrCreate
    name: flexvolume-dir
  - hostPath:
      path: /etc/pki
      type: DirectoryOrCreate
    name: ca-certs-etc-pki
status: {}
```

```
cat manifests/kube-scheduler.yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    scheduler.alpha.kubernetes.io/critical-pod: ""
  creationTimestamp: null
  labels:
    component: kube-scheduler
    tier: control-plane
  name: kube-scheduler
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-scheduler
    - --address=127.0.0.1
    - --leader-elect=true
    - --kubeconfig=/etc/kubernetes/scheduler.conf
    image: gcr.io/google_containers/kube-scheduler-amd64:v1.8.6
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 127.0.0.1
        path: /healthz
        port: 10251
        scheme: HTTP
      initialDelaySeconds: 15
      timeoutSeconds: 15
    name: kube-scheduler
    resources:
      requests:
        cpu: 100m
    volumeMounts:
    - mountPath: /etc/kubernetes/scheduler.conf
      name: kubeconfig
      readOnly: true
  hostNetwork: true
  volumes:
  - hostPath:
      path: /etc/kubernetes/scheduler.conf
      type: FileOrCreate
    name: kubeconfig
status: {}
```
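Both the controller manager and the scheduler run with `--leader-elect=true`, so with several masters only one copy of each is active at a time. In the 1.8 era the default lock is an Endpoints object in `kube-system` (`kube-controller-manager` or `kube-scheduler`) carrying a JSON record in the `control-plane.alpha.kubernetes.io/leader` annotation, viewable with `kubectl -n kube-system get endpoints kube-scheduler -o yaml`. The Python sketch below reads such a record and reports the holder if its lease is still fresh. The sample values are made up, and the expiry rule is a simplification of what the real leader-election client does; `current_leader` is a hypothetical helper.

```python
import json
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, Optional

LEADER_ANNOTATION = "control-plane.alpha.kubernetes.io/leader"


def current_leader(endpoints: Dict[str, Any], now: datetime) -> Optional[str]:
    """Return holderIdentity if the lease was renewed within leaseDurationSeconds."""
    annotations = endpoints.get("metadata", {}).get("annotations", {})
    raw = annotations.get(LEADER_ANNOTATION)
    if raw is None:
        return None  # no election record yet
    record = json.loads(raw)
    # renewTime is an RFC 3339 timestamp in UTC, e.g. 2018-01-10T08:00:10Z.
    renewed = datetime.strptime(record["renewTime"], "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    if now - renewed > timedelta(seconds=record["leaseDurationSeconds"]):
        return None  # lease expired; another candidate may take over
    return record.get("holderIdentity") or None


# Hypothetical Endpoints object; real values come from the cluster.
SAMPLE_ENDPOINTS = {
    "metadata": {
        "name": "kube-scheduler",
        "namespace": "kube-system",
        "annotations": {
            LEADER_ANNOTATION: json.dumps({
                "holderIdentity": "node1",
                "leaseDurationSeconds": 15,
                "acquireTime": "2018-01-10T08:00:00Z",
                "renewTime": "2018-01-10T08:00:10Z",
                "leaderTransitions": 0,
            })
        },
    }
}

if __name__ == "__main__":
    now = datetime(2018, 1, 10, 8, 0, 20, tzinfo=timezone.utc)
    print(current_leader(SAMPLE_ENDPOINTS, now))
```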

```
[node1 kubernetes]$ cat kubelet.conf
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: xxx
  name: kubernetes
contexts:
- context:
  name: system:node:node1@kubernetes
current-context: system:node:node1@kubernetes
kind: Config
preferences: {}
users:
- name: system:node:node1
  user:
    client-certificate-data: xxx
    client-key-data: xxx
[node1 kubernetes]$ ls
admin.conf controller-manager.conf kubelet.conf manifests pki scheduler.conf
[node1 kubernetes]$ cat controller-manager.conf
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: xxx
    server: https://192.168.0.13:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: system:kube-controller-manager
  name: system:kube-controller-manager@kubernetes
current-context: system:kube-controller-manager@kubernetes
kind: Config
preferences: {}
users:
- name: system:kube-controller-manager
  user:
    client-certificate-data: xxx
    client-key-data: xxx
[node1 kubernetes]$ cat admin.conf
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: xxx
    server: https://192.168.0.13:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
current-context: kubernetes-admin@kubernetes
kind: Config
preferences: {}
users:
- name: kubernetes-admin
  user:
    client-certificate-data: xxx
    client-key-data: xxx
```

kubelet flags

```
/usr/bin/kubelet \
  --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf \
  --kubeconfig=/etc/kubernetes/kubelet.conf \
  --pod-manifest-path=/etc/kubernetes/manifests \
  --allow-privileged=true \
  --network-plugin=cni \
  --cni-conf-dir=/etc/cni/net.d \
  --cni-bin-dir=/opt/cni/bin \
  --cluster-dns=10.96.0.10 \
  --cluster-domain=cluster.local \
  --authorization-mode=Webhook \
  --client-ca-file=/etc/kubernetes/pki/ca.crt \
  --cadvisor-port=0 \
  --cgroup-driver=cgroupfs \
  --fail-swap-on=false
```
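`--cluster-dns=10.96.0.10` has to fall inside the apiserver's `--service-cluster-ip-range=10.96.0.0/12`, since it is the ClusterIP of the cluster DNS Service. kubeadm picks it as the 10th address of the service range, while the first one, 10.96.0.1, goes to the `kubernetes` Service. A quick check with Python's `ipaddress` module; `indexed_ip` is a hypothetical helper.

```python
import ipaddress

SERVICE_CIDR = ipaddress.ip_network("10.96.0.0/12")  # kube-apiserver --service-cluster-ip-range
CLUSTER_DNS = ipaddress.ip_address("10.96.0.10")     # kubelet --cluster-dns


def indexed_ip(network: ipaddress.IPv4Network, index: int) -> ipaddress.IPv4Address:
    """Return the address `index` positions after the network address."""
    if not 0 <= index < network.num_addresses:
        raise ValueError(f"index {index} is outside {network}")
    return network.network_address + index


if __name__ == "__main__":
    print(CLUSTER_DNS in SERVICE_CIDR)   # the DNS IP must be a valid service IP
    print(indexed_ip(SERVICE_CIDR, 1))   # first address: the `kubernetes` Service
    print(indexed_ip(SERVICE_CIDR, 10))  # 10th address: what kubeadm uses for cluster DNS
```

A /12 is a big range: 2^20 addresses, from 10.96.0.0 to 10.111.255.255.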

kube-proxy

```
/usr/local/bin/kube-proxy \
  --kubeconfig=/var/lib/kube-proxy/kubeconfig.conf \
  --cluster-cidr=172.17.0.1/16 \
  --masquerade-all \
  --conntrack-max=0 \
  --conntrack-max-per-core=0
```
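`--cluster-cidr=172.17.0.1/16` has host bits set. Parsers such as Go's `net.ParseCIDR` accept this and mask the host bits off, so the effective pod range is 172.17.0.0/16. kube-proxy uses it to tell pod traffic from outside traffic when deciding what to masquerade (with `--masquerade-all` it SNATs all service traffic anyway), and the two `--conntrack-*=0` flags tell it to leave the host's conntrack limits as they are. A small Python sketch that normalizes the CIDR the same way and checks that it does not collide with the service range; `is_network_address` is a hypothetical helper.

```python
import ipaddress

# Values copied from the flags in this post.
POD_CIDR = ipaddress.ip_network("172.17.0.1/16", strict=False)  # kube-proxy --cluster-cidr, host bits masked
SERVICE_CIDR = ipaddress.ip_network("10.96.0.0/12")             # kube-apiserver --service-cluster-ip-range


def is_network_address(cidr: str) -> bool:
    """True if the CIDR has no host bits set, i.e. strict parsing accepts it."""
    try:
        ipaddress.ip_network(cidr)  # strict=True by default
    except ValueError:
        return False
    return True


if __name__ == "__main__":
    print(POD_CIDR)                         # the normalized pod range
    print(POD_CIDR.overlaps(SERVICE_CIDR))  # pod and service ranges must not overlap
    print(is_network_address("172.17.0.1/16"))
```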

kube-controller-manager

```
kube-controller-manager \
  --root-ca-file=/etc/kubernetes/pki/ca.crt \
  --kubeconfig=/etc/kubernetes/controller-manager.conf \
  --service-account-private-key-file=/etc/kubernetes/pki/sa.key \
  --cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt \
  --cluster-signing-key-file=/etc/kubernetes/pki/ca.key \
  --address=127.0.0.1 \
  --leader-elect=true \
  --use-service-account-credentials=true \
  --controllers=*,bootstrapsigner,tokencleaner
```

kube-scheduler

```
kube-scheduler \
  --address=127.0.0.1 \
  --leader-elect=true \
  --kubeconfig=/etc/kubernetes/scheduler.conf
```
