Learning Spark: ShutdownHookManager, the JVM Shutdown Hook Manager

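Java programs often hit situations where the process dies before some state has been saved properly. In those cases you need code that cleans up when the JVM shuts down.

Java's shutdown hooks are a good fit for this.

The JDK provides the method java.lang.Runtime.addShutdownHook(Thread hook), which registers a hook to run when the JVM shuts down. The hook is invoked in the following scenarios: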

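1. The program exits normally

2. System.exit() is called

3. The process is interrupted from the terminal with Ctrl+C

4. The system shuts down

5. The program dies from an OutOfMemoryError

6. The process is killed with kill pid (note: the hook is not invoked for kill -9 pid)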
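To make the "save state on shutdown" idea concrete, here is a minimal sketch. The class StateSaver, its counter, and the temp file are all made up for illustration; only Runtime.addShutdownHook and Runtime.removeShutdownHook come from the JDK. The demo() method calls save() directly, which is the same work the hook would do when the JVM exits.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical example class: keeps a counter and writes it to a file at JVM shutdown.
public class StateSaver {
    private final AtomicLong processed = new AtomicLong();
    private final Path stateFile;
    private final Thread hook;

    StateSaver(Path stateFile) {
        this.stateFile = stateFile;
        // The hook thread is created but not started; the JVM starts it at shutdown.
        this.hook = new Thread(this::save, "state-saver-hook");
        Runtime.getRuntime().addShutdownHook(hook);
    }

    void recordOne() {
        processed.incrementAndGet();
    }

    /** Writes the current counter to the state file; this is the cleanup work. */
    void save() {
        try {
            Files.write(stateFile, Long.toString(processed.get()).getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            System.err.println("could not save state: " + e);
        }
    }

    /** Deregisters the hook; returns false if it was not registered. */
    boolean close() {
        return Runtime.getRuntime().removeShutdownHook(hook);
    }

    /** Runs the save step directly (as the hook would at shutdown) and returns the file content. */
    static String demo() {
        try {
            Path file = Files.createTempFile("state", ".txt");
            StateSaver saver = new StateSaver(file);
            saver.recordOne();
            saver.recordOne();
            saver.recordOne();
            saver.save();   // same work the hook performs at shutdown
            saver.close();  // deregister so nothing runs later
            String content = new String(Files.readAllBytes(file), StandardCharsets.UTF_8);
            Files.delete(file);
            return content;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("saved state: " + demo());
    }
}
```

In a real program you would leave the hook registered and let the JVM run it; the direct call here only makes the effect visible without exiting.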

Below is the definition of the hook method from the JDK 1.7 documentation:

public void addShutdownHook(Thread hook)

Parameters:

hook - An initialized but unstarted Thread object

Throws:

IllegalArgumentException - If the specified hook has already been registered, or if it can be determined that the hook is already running or has already been run

IllegalStateException - If the virtual machine is already in the process of shutting down

SecurityException - If a security manager is present and it denies RuntimePermission("shutdownHooks")

Since:

1.3

See also:

removeShutdownHook(java.lang.Thread), halt(int), exit(int)
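The exceptions listed above are easy to observe. The following is a minimal sketch; the class HookRegistrationDemo and its two helper methods are made-up names for illustration. It shows that registering the same Thread twice raises IllegalArgumentException, and that an add-then-remove probe can tell whether the JVM is already shutting down, because addShutdownHook would throw IllegalStateException in that case.

```java
// Hypothetical demo class; only the Runtime calls are real JDK API.
public class HookRegistrationDemo {

    /** Returns true if registering the same hook a second time is rejected. */
    public static boolean duplicateIsRejected() {
        Thread hook = new Thread(() -> System.out.println("never started in this demo"));
        Runtime.getRuntime().addShutdownHook(hook);
        try {
            Runtime.getRuntime().addShutdownHook(hook); // same Thread object again
            return false;
        } catch (IllegalArgumentException e) {
            return true;
        } finally {
            // Deregister so the demo leaves no hook behind.
            Runtime.getRuntime().removeShutdownHook(hook);
        }
    }

    /** Returns true if the JVM is already shutting down (probe: add a no-op hook, then remove it). */
    public static boolean inShutdown() {
        Thread probe = new Thread(() -> { });
        try {
            Runtime.getRuntime().addShutdownHook(probe);
            Runtime.getRuntime().removeShutdownHook(probe);
            return false;
        } catch (IllegalStateException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println("duplicate rejected: " + duplicateIsRejected());
        System.out.println("in shutdown: " + inShutdown());
        // Removing a hook that was never registered simply returns false.
        boolean removed = Runtime.getRuntime().removeShutdownHook(new Thread(() -> { }));
        System.out.println("removed unknown hook: " + removed);
    }
}
```

Called from ordinary code, inShutdown() returns false; it can only return true when called while the JVM is shutting down, for example from inside another shutdown hook.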

First, let's test the first case, where the program exits normally:

    package com.hook;

    import java.util.concurrent.TimeUnit;

    public class HookTest {

        // Register a shutdown hook that prints a message when the JVM exits.
        public void start() {
            Runtime.getRuntime().addShutdownHook(new Thread(new Runnable() {
                @Override
                public void run() {
                    System.out.println("Execute Hook.....");
                }
            }));
        }

        public static void main(String[] args) {
            new HookTest().start();
            System.out.println("The Application is doing something");
            try {
                // Wait 5000 ms, then let main return so the JVM exits normally.
                TimeUnit.MILLISECONDS.sleep(5000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }
    }

Output:

The Application is doing something

Execute Hook.....

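As shown above, the shutdown hook is invoked once the main thread has finished.

Next, let's test the fifth case (the order is a bit jumbled, but never mind that):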

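    package com.hook;

    import java.util.concurrent.TimeUnit;

    public class HookTest2 {

        // Register a shutdown hook that prints a message when the JVM exits.
        public void start() {
            Runtime.getRuntime().addShutdownHook(new Thread(new Runnable() {
                @Override
                public void run() {
                    System.out.println("Execute Hook.....");
                }
            }));
        }

        public static void main(String[] args) {
            new HookTest2().start();
            System.out.println("The Application is doing something");
            // The array size was lost from the original listing; 100 MB is an assumed
            // value, chosen only so that it exceeds the 20 MB heap limit.
            byte[] b = new byte[100 * 1024 * 1024];
            try {
                // Never reached: the allocation above throws first. The duration was
                // also lost; 5000 ms is assumed, matching the 5000 ms wait of the first test.
                TimeUnit.MILLISECONDS.sleep(5000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }
    }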

Run it with the JVM option -Xmx20M, which guarantees that an OutOfMemoryError occurs.

Output:

The Application is doing something

Exception in thread "main" java.lang.OutOfMemoryError: Java heap space

at com.hook.HookTest2.main(HookTest2.java:)

Execute Hook.....

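As you can see, the shutdown hook runs right after the program hits the out-of-memory error. This differs from the first case, where the program waits 5000 ms, finishes, and only then runs the hook on exit.

Next, let's test the third case: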

    package com.hook;

    import java.util.concurrent.TimeUnit;

    public class HookTest3 {

        // Register a shutdown hook that prints a message when the JVM exits.
        public void start() {
            Runtime.getRuntime().addShutdownHook(new Thread(new Runnable() {
                @Override
                public void run() {
                    System.out.println("Execute Hook.....");
                }
            }));
        }

        public static void main(String[] args) {
            new HookTest3().start();
            // A non-daemon thread that loops forever keeps the JVM alive until Ctrl+C.
            Thread thread = new Thread(new Runnable() {
                @Override
                public void run() {
                    while (true) {
                        System.out.println("thread is running....");
                        try {
                            // The interval was lost from the original listing; 100 ms is assumed.
                            TimeUnit.MILLISECONDS.sleep(100);
                        } catch (InterruptedException e) {
                            e.printStackTrace();
                        }
                    }
                }
            });
            thread.start();
        }
    }

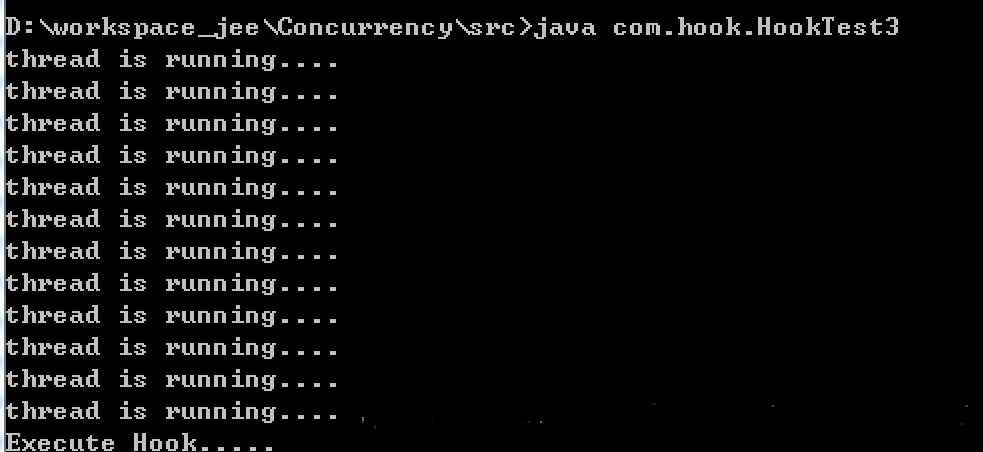

在命令行中编译:javac com/hook/HookTest3.java

在命令行中运行:Java com.hook.HookTest3 (之后按下Ctrl+C)

运行结果:

That covers plain Java. Now let's look at Spark's ShutdownHookManager.

SparkContext registers a hook with ShutdownHookManager so that some cleanup work gets done when the Spark program dies:

    // Register a hook with ShutdownHookManager so that cleanup runs when the Spark program dies
    _shutdownHookRef = ShutdownHookManager.addShutdownHook(
      ShutdownHookManager.SPARK_CONTEXT_SHUTDOWN_PRIORITY) { () =>
      logInfo("Invoking stop() from shutdown hook")
      // Calls stop() to shut down the SparkContext (I don't fully understand this part)
      stop()
    }

Here is the full source:

    package org.apache.spark.util

    import java.io.File
    import java.util.PriorityQueue

    import scala.util.Try

    import org.apache.hadoop.fs.FileSystem

    import org.apache.spark.internal.Logging

    /**
     * Various utility methods used by Spark.
     */

    private[spark] object ShutdownHookManager extends Logging {
      // Default priority for shutdown hooks
      val DEFAULT_SHUTDOWN_PRIORITY = 100

      /**
       * The shutdown priority of the SparkContext instance. This is lower than the default
       * priority, so that by default hooks are run before the context is shut down.
       */
      val SPARK_CONTEXT_SHUTDOWN_PRIORITY = 50

      /**
       * The shutdown priority of temp directory must be lower than the SparkContext shutdown
       * priority. Otherwise cleaning the temp directories while Spark jobs are running can
       * throw undesirable errors at the time of shutdown.
       */
      val TEMP_DIR_SHUTDOWN_PRIORITY = 25

      // Lazily initialized on first use

      private lazy val shutdownHooks = {
        val manager = new SparkShutdownHookManager()
        // install() registers one task with Hadoop's ShutdownHookManager; that task
        // runs all hooks added to this manager when the JVM shuts down
        manager.install()
        manager
      }

      private val shutdownDeletePaths = new scala.collection.mutable.HashSet[String]()

      // Add a shutdown hook to delete the temp dirs when the JVM exits
      logDebug("Adding shutdown hook") // force eager creation of logger
      addShutdownHook(TEMP_DIR_SHUTDOWN_PRIORITY) { () =>
        logInfo("Shutdown hook called")
        // we need to materialize the paths to delete because deleteRecursively removes items from
        // shutdownDeletePaths as we are traversing through it.
        shutdownDeletePaths.toArray.foreach { dirPath =>
          try {
            logInfo("Deleting directory " + dirPath)
            // Recursively delete a file or directory and its contents; throws if deletion fails
            Utils.deleteRecursively(new File(dirPath))
          } catch {
            case e: Exception => logError(s"Exception while deleting Spark temp dir: $dirPath", e)
          }
        }
      }

      // Register the path to be deleted via shutdown hook

      def registerShutdownDeleteDir(file: File) {
        // Get the file's absolute path
        val absolutePath = file.getAbsolutePath()
        // Add it to the set of paths to delete
        shutdownDeletePaths.synchronized {
          shutdownDeletePaths += absolutePath
        }
      }

      // Remove the path to be deleted via shutdown hook
      def removeShutdownDeleteDir(file: File) {
        val absolutePath = file.getAbsolutePath()
        // Unregister the path (the file itself is not deleted here)
        shutdownDeletePaths.synchronized {
          shutdownDeletePaths.remove(absolutePath)
        }
      }

      // Is the path already registered to be deleted via a shutdown hook ?
      // Returns true if shutdownDeletePaths contains the given path, false otherwise
      def hasShutdownDeleteDir(file: File): Boolean = {
        val absolutePath = file.getAbsolutePath()
        shutdownDeletePaths.synchronized {
          shutdownDeletePaths.contains(absolutePath)
        }
      }

      // Note: if file is child of some registered path, while not equal to it, then return true;
      // else false. This is to ensure that two shutdown hooks do not try to delete each others
      // paths - resulting in IOException and incomplete cleanup.
      def hasRootAsShutdownDeleteDir(file: File): Boolean = {
        val absolutePath = file.getAbsolutePath()
        val retval = shutdownDeletePaths.synchronized {
          shutdownDeletePaths.exists { path =>
            !absolutePath.equals(path) && absolutePath.startsWith(path)
          }
        }
        if (retval) {
          logInfo("path = " + file + ", already present as root for deletion.")
        }
        retval
      }

      /**

       * Detect whether this thread might be executing a shutdown hook. Will always return true if
       * the current thread is a running a shutdown hook but may spuriously return true otherwise (e.g.
       * if System.exit was just called by a concurrent thread).
       *
       * Currently, this detects whether the JVM is shutting down by Runtime#addShutdownHook throwing
       * an IllegalStateException.
       */
      def inShutdown(): Boolean = {
        try {
          val hook = new Thread {
            override def run() {}
          }
          // Why add the hook and then remove it right away? The pair is only a probe: the no-op
          // hook is never meant to run. If the JVM is already shutting down, addShutdownHook
          // throws IllegalStateException, which is caught below.
          // scalastyle:off runtimeaddshutdownhook
          Runtime.getRuntime.addShutdownHook(hook)
          // scalastyle:on runtimeaddshutdownhook
          Runtime.getRuntime.removeShutdownHook(hook)
        } catch {
          case ise: IllegalStateException => return true
        }
        false
      }

      /**

       * Adds a shutdown hook with default priority.
       *
       * @param hook The code to run during shutdown.
       * @return A handle that can be used to unregister the shutdown hook.
       */
      def addShutdownHook(hook: () => Unit): AnyRef = {
        addShutdownHook(DEFAULT_SHUTDOWN_PRIORITY)(hook)
      }

      /**
       * Adds a shutdown hook with the given priority. Hooks with higher priority values run
       * first.
       *
       * @param hook The code to run during shutdown.
       * @return A handle that can be used to unregister the shutdown hook.
       */
      def addShutdownHook(priority: Int)(hook: () => Unit): AnyRef = {
        shutdownHooks.add(priority, hook)
      }

      /**
       * Remove a previously installed shutdown hook.
       *
       * @param ref A handle returned by `addShutdownHook`.
       * @return Whether the hook was removed.
       */
      def removeShutdownHook(ref: AnyRef): Boolean = {
        shutdownHooks.remove(ref)
      }
    }

    private [util] class SparkShutdownHookManager {

      // Priority queue of registered hooks

      private val hooks = new PriorityQueue[SparkShutdownHook]()
      @volatile private var shuttingDown = false

      /**
       * Install a hook to run at shutdown and run all registered hooks in order.
       */
      def install(): Unit = {
        val hookTask = new Runnable() {
          override def run(): Unit = runAll()
        }
        org.apache.hadoop.util.ShutdownHookManager.get().addShutdownHook(
          hookTask, FileSystem.SHUTDOWN_HOOK_PRIORITY + 30)
      }

      def runAll(): Unit = {
        shuttingDown = true
        var nextHook: SparkShutdownHook = null
        while ({ nextHook = hooks.synchronized { hooks.poll() }; nextHook != null }) {
          Try(Utils.logUncaughtExceptions(nextHook.run()))
        }
      }

      def add(priority: Int, hook: () => Unit): AnyRef = {
        hooks.synchronized {
          if (shuttingDown) {
            throw new IllegalStateException("Shutdown hooks cannot be modified during shutdown.")
          }
          val hookRef = new SparkShutdownHook(priority, hook)
          hooks.add(hookRef)
          hookRef
        }
      }

      def remove(ref: AnyRef): Boolean = {
        hooks.synchronized { hooks.remove(ref) }
      }
    }

    private class SparkShutdownHook(private val priority: Int, hook: () => Unit)
      extends Comparable[SparkShutdownHook] {

      // Reversed comparison: java.util.PriorityQueue polls its smallest element first,
      // so hooks with higher priority values run first
      override def compareTo(other: SparkShutdownHook): Int = {
        other.priority - priority
      }

      def run(): Unit = hook()
    }
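The ordering rule is easy to get backwards, so here is a small Java sketch of the same idea. It is not Spark's code: the class PriorityHookDemo, the nested Hook type, and the priority values 100, 50 and 25 are all illustrative. What it shares with the listing above is the reversed compareTo on top of java.util.PriorityQueue, which is what makes hooks with higher priority values run first.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;

// Hypothetical sketch of a priority-ordered hook manager.
public class PriorityHookDemo {

    /** A hook plus its priority; ordered so that higher priorities are polled first. */
    static final class Hook implements Comparable<Hook> {
        final int priority;
        final Runnable body;

        Hook(int priority, Runnable body) {
            this.priority = priority;
            this.body = body;
        }

        @Override
        public int compareTo(Hook other) {
            // Reversed comparison: PriorityQueue is a min-heap, so this puts the
            // largest priority at the head. Integer.compare also avoids the overflow
            // that plain subtraction could hit with extreme values.
            return Integer.compare(other.priority, priority);
        }
    }

    private final PriorityQueue<Hook> hooks = new PriorityQueue<>();
    private boolean shuttingDown = false;

    /** Registers a hook and returns a handle that can be passed to remove(). */
    synchronized Object add(int priority, Runnable body) {
        if (shuttingDown) {
            throw new IllegalStateException("Shutdown hooks cannot be modified during shutdown.");
        }
        Hook hook = new Hook(priority, body);
        hooks.add(hook);
        return hook;
    }

    synchronized boolean remove(Object ref) {
        return hooks.remove(ref);
    }

    private synchronized Hook poll() {
        return hooks.poll();
    }

    /** Runs every registered hook, highest priority first; one failing hook does not stop the rest. */
    void runAll() {
        synchronized (this) {
            shuttingDown = true;
        }
        Hook next;
        while ((next = poll()) != null) {
            try {
                next.body.run();
            } catch (RuntimeException e) {
                System.err.println("hook failed: " + e);
            }
        }
    }

    /** Registers three hooks out of order and returns the order in which they ran. */
    public static List<String> demoOrder() {
        PriorityHookDemo manager = new PriorityHookDemo();
        List<String> order = new ArrayList<>();
        manager.add(25, () -> order.add("temp-dir"));
        manager.add(100, () -> order.add("default"));
        manager.add(50, () -> order.add("spark-context"));
        manager.runAll();
        return order;
    }

    public static void main(String[] args) {
        System.out.println(demoOrder()); // prints [default, spark-context, temp-dir]
    }
}
```

With this ordering, ordinary hooks run before the context is stopped, and temp directories are cleaned up last, which is the behavior the comments on the priority constants describe.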
