OHDSI: Data Standardization


Data Standardization

Data standardization is the critical process of bringing data into a common format that allows for collaborative research, large-scale analytics, and sharing of sophisticated tools and methodologies. Why is it so important?

Healthcare data can vary greatly from one organization to the next. Data are collected for different purposes, such as provider reimbursement, clinical research, and direct patient care. These data may be stored in different formats using different database systems and information models. And despite the growing use of standard terminologies in healthcare, the same concept (e.g., blood glucose) may be represented in a variety of ways from one setting to the next.

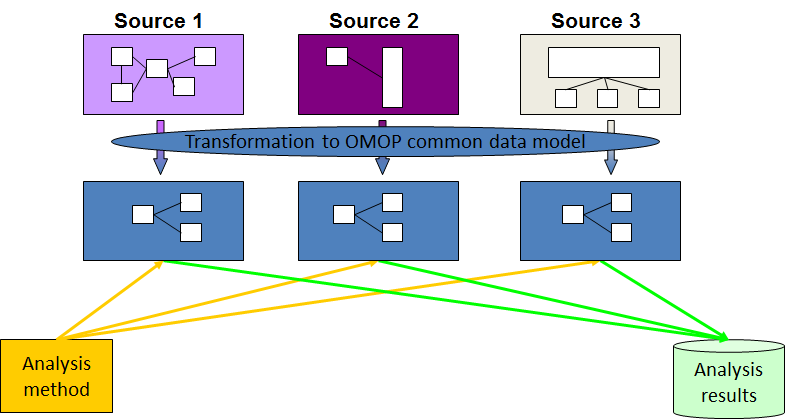

We at OHDSI are deeply involved in the evolution and adoption of a Common Data Model known as the OMOP Common Data Model. We provide resources to convert a wide variety of datasets into the CDM, as well as a plethora of tools to take advantage of your data once it is in CDM format.

Most importantly, we have an active community whose members have done many data conversions (often called ETLs) and are eager to help you with your CDM conversion and maintenance.

OMOP Common Data Model

What is the OMOP Common Data Model (CDM)?

The OMOP Common Data Model allows for the systematic analysis of disparate observational databases. The concept behind this approach is to transform data contained within those databases into a common format (data model) as well as a common representation (terminologies, vocabularies, coding schemes), and then perform systematic analyses using a library of standard analytic routines that have been written based on the common format.
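To make the idea of "a common format plus routines written against it" concrete, here is a minimal Python sketch; it is not OHDSI code. The `ConditionRecord` shape, the two source layouts, and the `count_persons_with` routine are all hypothetical and far simpler than the real CDM tables. The sketch also assumes the source codes have already been mapped to one shared coding scheme, which is the vocabulary half of the problem.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ConditionRecord:
    """Hypothetical common record shape; field names are illustrative, not the CDM spec."""
    person_id: int
    condition_code: str  # assumed to be in one shared coding scheme already
    start_date: date


def from_ehr(row: dict) -> ConditionRecord:
    """Source A (hypothetical EHR extract): dicts with ISO-formatted dates."""
    return ConditionRecord(
        person_id=int(row["patient_id"]),
        condition_code=row["dx_code"],
        start_date=date.fromisoformat(row["encounter_date"]),
    )


def from_claims(row: tuple) -> ConditionRecord:
    """Source B (hypothetical claims extract): (member_id, 'YYYYMMDD', code) tuples."""
    member_id, claim_date, code = row
    return ConditionRecord(
        person_id=int(member_id),
        condition_code=code,
        start_date=date(int(claim_date[:4]), int(claim_date[4:6]), int(claim_date[6:8])),
    )


def count_persons_with(records, code: str) -> int:
    """A 'standard analytic routine' written once against the common format."""
    return len({r.person_id for r in records if r.condition_code == code})


ehr_rows = [{"patient_id": "1", "dx_code": "X1", "encounter_date": "2020-03-01"}]
claims_rows = [("2", "20200415", "X1"), ("3", "20200501", "Y2")]

records = [from_ehr(r) for r in ehr_rows] + [from_claims(r) for r in claims_rows]
print(count_persons_with(records, "X1"))  # 2
```

The design point is that `count_persons_with` never needs to know which source a record came from: adding a third source means writing one more converter, not rewriting the analysis.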

Why do we need a CDM?

Observational databases differ in both purpose and design. Electronic Medical Records (EMRs) are aimed at supporting clinical practice at the point of care, while administrative claims data are built for insurance reimbursement processes. Each has been collected for a different purpose, resulting in different logical organizations and physical formats, and the terminologies used to describe medicinal products and clinical conditions vary from source to source.

The CDM can accommodate both administrative claims and EHR data, allowing users to generate evidence from a wide variety of sources. It is also designed to support collaborative research across data sources both within and outside the United States, while remaining manageable for data owners and useful for data users.

Why use the OMOP CDM?

The Observational Medical Outcomes Partnership (OMOP) CDM, now in version 5.0.1, offers a solution unlike any other. OMOP found that disparate coding systems can be harmonized to a standardized vocabulary with minimal information loss.
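Harmonizing codes to a standardized vocabulary is, at its core, a lookup. The Python sketch below uses tiny in-memory stand-ins for the vocabulary: in the OMOP Standardized Vocabularies, source codes are rows in the CONCEPT table and their standard equivalents are linked through CONCEPT_RELATIONSHIP rows with `relationship_id` 'Maps to'. Every vocabulary name, code, and ID here is invented for illustration, echoing the blood glucose example above. Codes without a mapping get `concept_id` 0 (the CDM convention for "no matching concept") and are tallied, so the information loss stays visible instead of silent.

```python
from collections import Counter

# Hypothetical stand-ins for vocabulary tables; all names, codes, and IDs are invented.
# (vocabulary_id, source code) -> source concept_id, playing the role of CONCEPT rows
SOURCE_CONCEPTS = {
    ("LAB_SYSTEM_A", "GLU"): 1001,
    ("LAB_SYSTEM_B", "BLD-GLUC"): 1002,
    ("LAB_SYSTEM_B", "HBA1C"): 1003,
}
# source concept_id -> standard concept_id, playing the role of 'Maps to' relationships
MAPS_TO = {
    1001: 5001,  # two local glucose codes ...
    1002: 5001,  # ... harmonized to one hypothetical standard concept
}
NO_MATCHING_CONCEPT = 0  # CDM convention for values that cannot be mapped


def to_standard(vocabulary_id: str, code: str, unmapped: Counter) -> int:
    """Map a source code to a standard concept_id, tallying anything that cannot be mapped."""
    source_id = SOURCE_CONCEPTS.get((vocabulary_id, code))
    standard_id = MAPS_TO.get(source_id) if source_id is not None else None
    if standard_id is None:
        unmapped[(vocabulary_id, code)] += 1  # record what is lost
        return NO_MATCHING_CONCEPT
    return standard_id


unmapped = Counter()
rows = [
    ("LAB_SYSTEM_A", "GLU"),
    ("LAB_SYSTEM_B", "BLD-GLUC"),
    ("LAB_SYSTEM_B", "HBA1C"),  # known source code, but no mapping yet
    ("LAB_SYSTEM_B", "XYZ"),    # code missing from the vocabulary entirely
]
ids = [to_standard(v, c, unmapped) for v, c in rows]
print(ids)  # [5001, 5001, 0, 0]
print(sorted(unmapped))  # [('LAB_SYSTEM_B', 'HBA1C'), ('LAB_SYSTEM_B', 'XYZ')]
```

The `unmapped` tally doubles as a worklist: it shows which source codes still need mappings before the conversion can be called complete.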

Once a database has been converted to the OMOP CDM, evidence can be generated using standardized analytics tools. We at OHDSI are currently developing open-source tools for data quality and characterization, medical product safety surveillance, comparative effectiveness, quality of care, and patient-level predictive modeling; there are also other sources of such tools, some of them commercial.

For more information about the CDM, please read the documentation, download the DDL for various database dialects, and learn about the Standardized Vocabularies. If you have questions, post them on the OHDSI Forum.

Vocabulary Resources

The Standard Vocabulary is a foundational tool initially developed by some of us at OMOP that enables transparent and consistent content across disparate observational databases, and serves to support the OHDSI research community in conducting efficient and reproducible observational research.

To download the standard vocabularies, please visit our Athena download site:

Building your CDM

Building your CDM is a process that necessitates proper planning and execution, and we are here to help. Successful use of an observational data network requires a collaborative, interdisciplinary approach that includes:

- Local knowledge of the source data: the underlying data capture process and its role in the healthcare system

- Clinical understanding of medical products and disease

- Domain expertise in the analytical use cases: epidemiology, pharmacovigilance, health economics and outcomes research

- Command of advanced statistical techniques for large-scale modeling and exploratory analysis

- Informatics experience with ontology management and leveraging standard terminologies for analysis

- Technical/programming skills to implement the design and develop a scalable solution

Getting Started

Ready to get started on the conversion (ETL) process? Here are some recommended steps for an effective process:

- Train on OMOP CDM and Vocabulary

- Discuss analysis opportunities (Why are we doing this? What do you want to be able to do once the CDM is done?)

- Evaluate technology requirements and infrastructure

- Discuss the data dictionary and documentation for the raw database

- Perform a systematic scan of the raw database

- Draft Business Logic

a. Table level

b. Variable level

c. Value level (mapping)

d. Record what will not be captured (lost) in the transformation

- Create a data sample to allow initial development

- DON’T START IMPLEMENTING UNTIL THE DESIGN IS COMPLETE

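A systematic scan of the raw database mostly means profiling every column before writing any mapping logic. OHDSI's WhiteRabbit tool produces this kind of scan report in practice; the Python sketch below only illustrates the idea, using an in-memory SQLite table with a hypothetical `raw_visits` layout. For each column it reports the row count, nulls, distinct values, and most frequent values.

```python
import sqlite3
from collections import Counter


def scan_table(conn: sqlite3.Connection, table: str, top_n: int = 3) -> dict:
    """Profile every column of `table`: rows, nulls, distinct values, most common values."""
    # Table names cannot be bound as SQL parameters; `table` is assumed to be trusted.
    cur = conn.execute(f"SELECT * FROM {table}")
    columns = [d[0] for d in cur.description]
    rows = cur.fetchall()
    report = {}
    for i, col in enumerate(columns):
        values = [row[i] for row in rows]
        non_null = [v for v in values if v is not None]
        report[col] = {
            "rows": len(values),
            "nulls": len(values) - len(non_null),
            "distinct": len(set(non_null)),
            "top": Counter(non_null).most_common(top_n),
        }
    return report


# Hypothetical raw table standing in for a source extract.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_visits (patient_id TEXT, visit_type TEXT, visit_date TEXT)")
conn.executemany(
    "INSERT INTO raw_visits VALUES (?, ?, ?)",
    [("1", "IP", "2020-01-02"), ("1", "OP", "2020-02-10"),
     ("2", "OP", None), ("3", "ER?", "2020-13-01")],
)
for col, stats in scan_table(conn, "raw_visits").items():
    print(col, stats)
```

Even on four rows, the report surfaces things documentation tends to miss: a missing visit date, an undocumented visit type `ER?`, and an impossible date `2020-13-01`.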

Helpful Hints

Having gone through the ETL process with several databases over the past few years, we know that there will be obstacles to overcome and challenges to solve. Here are some helpful hints and lessons learned from the OHDSI collaborative:

- A successful ETL takes a village; don't make one person try to be the hero and do it all themselves

- Team design

- Team implementation

- Team testing

- Document early and often; the more detail, the better

- Data quality checking is required at every step of the process

- Don’t make assumptions about source data based on documentation; verify by looking at the data

- Good design and comprehensive specifications should save unnecessary iterations and thrash during implementation

- ETL design/documentation/implementation is a living process. It will never be done and it can always be better. But don’t let the perfect be the enemy of the good
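Two of the hints above, verifying documentation against the data and checking quality at every step, translate naturally into small automated checks. The Python sketch below is hypothetical: the documented gender codes, the row counts, and the notion of a dropped-rows log are illustrative assumptions, not part of any OHDSI tool. The first check lists values that appear in the data but not in the documentation; the second confirms that every source row is accounted for after a transformation step.

```python
# Hypothetical documentation claim: the source codes gender only as "M" or "F".
DOCUMENTED_GENDER_CODES = {"M", "F"}


def undocumented_values(observed, documented):
    """Values present in the data but absent from the documentation."""
    return sorted(set(observed) - set(documented))


def rows_reconcile(source_count: int, target_count: int, dropped_count: int) -> bool:
    """True when every source row landed in the target or in a (hypothetical) dropped-rows log."""
    return source_count == target_count + dropped_count


source_genders = ["M", "F", "U", "F", "9"]
print(undocumented_values(source_genders, DOCUMENTED_GENDER_CODES))  # ['9', 'U']
print(rows_reconcile(source_count=1000, target_count=990, dropped_count=10))  # True
print(rows_reconcile(source_count=1000, target_count=990, dropped_count=5))   # False
```

A failed reconciliation does not say what went wrong, only that rows went missing without being logged, which is exactly the kind of problem that is cheaper to catch at each step than at the end.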

For more information, check out the documentation on our wiki page: www.ohdsi.org/web/wiki

And remember, the OHDSI community is here to help! Contact us at contact@ohdsi.org.

A 100k sample of CMS SynPUF data in CDM Version 5.2 is available to download on LTS Computing LLC’s download site:
