Logistic Regression – Geometric Intuition

Logistic Regression – Geometric Intuition

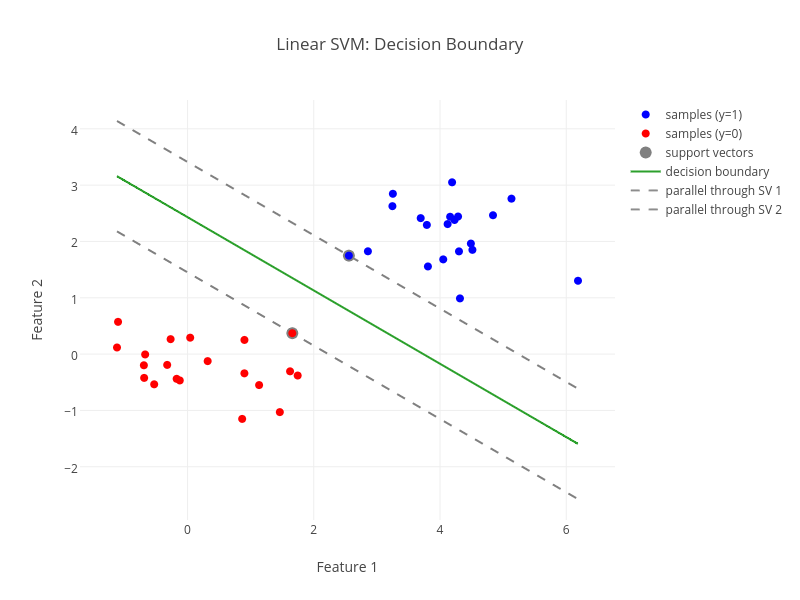

Everybody who has taken a machine learning course probably knows the geometric intuition behind a support vector machine (SVM, great book): A SVM is a large margin classifier. In other words, it maximizes the geometric distance between the decision boundary and the classes of samples. Often you’ll find plots similar to this one:

But what about logistic regression? What is the geometric intuition behind it and how does it compare to linear SVMs? Let’s find out.

Geometric intuition behind logistic regression

First, a quick reminder about the definition of the logistic function, given  features:

features:

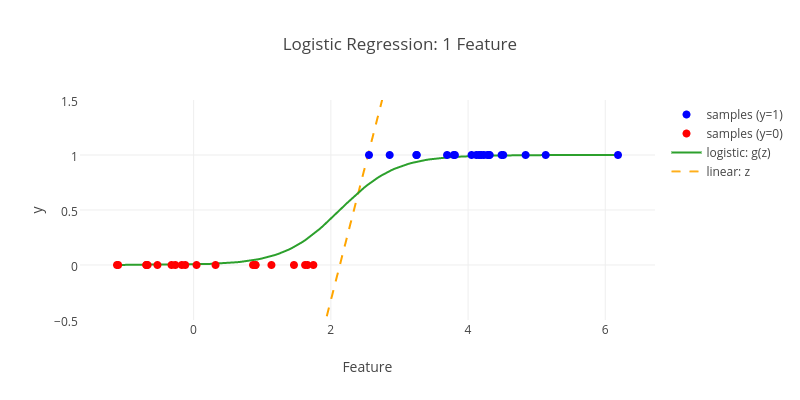

With that out of the way, let’s dive into the geometric aspects of logistic regression, starting with a toy example of only one feature:

The blue samples of our feature data belong to class  , while the red dots are supposed to get assigned to

, while the red dots are supposed to get assigned to  . As we can see in the figure above, our data is fully separable and the logistic regression is able to demonstrate this property. From a geometric perspective, the algorithm essentially adds a new dimension for our dependent variable

. As we can see in the figure above, our data is fully separable and the logistic regression is able to demonstrate this property. From a geometric perspective, the algorithm essentially adds a new dimension for our dependent variable  and fits a logistic function

and fits a logistic function  to the arising two-dimensional data in such a way that it separates the samples as good as possible within the range of

to the arising two-dimensional data in such a way that it separates the samples as good as possible within the range of  . Once we have such a fitted curve, the usual method of prediction is to assign

. Once we have such a fitted curve, the usual method of prediction is to assign  to everything

to everything  and vice versa.

and vice versa.

When thinking about logistic regression, I usually have its tight connection to linear regression somewhere in the back of my head (no surprise, both have “regression” as part of their names). Therefore, I was wondering what the line  would look like and added it to the plot. As we can easily see,

would look like and added it to the plot. As we can easily see,  differs significantly from the potential result of a linear regression for the given input data. While that was to be expected, I think it’s still nice to confirm it visually.

differs significantly from the potential result of a linear regression for the given input data. While that was to be expected, I think it’s still nice to confirm it visually.

Note two details of the plot: For one, the logistic curve is not as steep as you might expect at first. This is due to the use of regularization, which logistic regression basically can’t live without. Second,  for

for  and not, as my intuition initially suggested, for

and not, as my intuition initially suggested, for  . Not sure if this is particularly helpful, but I find it interesting nonetheless.

. Not sure if this is particularly helpful, but I find it interesting nonetheless.

So far, so good. But what happens when we introduce an additional dimension? Let’s have a look at such a two feature scenario (feel free to pinch and zoom the plot):

What we see is the surface of the fitted logistic function  for a classification task with two features. This time, instead of transforming a line to a curve, the equivalent happened for a plane. To make it easier to understand how to carry out predictions given such a model, the following plot visualizes the data points projected onto the surface of the logistic regression function:

for a classification task with two features. This time, instead of transforming a line to a curve, the equivalent happened for a plane. To make it easier to understand how to carry out predictions given such a model, the following plot visualizes the data points projected onto the surface of the logistic regression function:

Now we can again make decisions for our class  based on a threshold

based on a threshold  , which usually is

, which usually is  :

:

So much about the geometric intuition behind logistic regression. Let’s see how that compares to linear SVMs.

Comparison of logistic regression to linear SVM

First of all, notice that with logistic regression we can use the  value of a sample as a probability estimate for

value of a sample as a probability estimate for  . The responsive 3D-plots above created with the help of plotly’s fantastic library illustrate this property of logistic regression very well. I have yet to come across a real-world classification task where such probability estimates are not at least somewhat useful. Alas, SVMs (in their original version) aren’t able to provide them. Due to the way the model works, all it can tell you is whether a sample belongs to class

. The responsive 3D-plots above created with the help of plotly’s fantastic library illustrate this property of logistic regression very well. I have yet to come across a real-world classification task where such probability estimates are not at least somewhat useful. Alas, SVMs (in their original version) aren’t able to provide them. Due to the way the model works, all it can tell you is whether a sample belongs to class  or not.

or not.

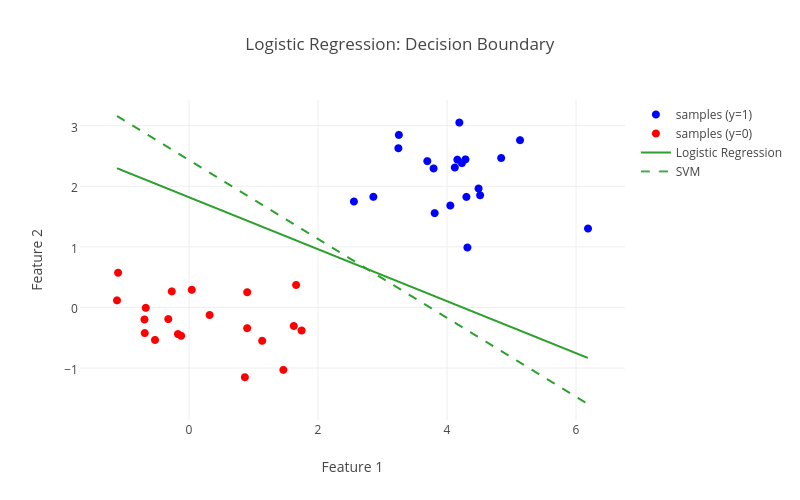

I think at this point the most effective way of comparing logistic regression to linear SVM geometrically, is to add the decision boundary of logistic regression to the initial figure of the post. To make this happen, we need to project the decision boundary  of the three-dimensional plot above onto the two-dimensional feature space. Doing so, we end up with the following figure:

of the three-dimensional plot above onto the two-dimensional feature space. Doing so, we end up with the following figure:

As we can see, the logistic regression does not follow the same large margin characteristic as a linear SVM. I’ll leave it to you to decide which is the better fit for the given data.

If you’d like to know further differences between logistic regression and linear SVMs aside from the geometrically motivated ones mentioned here, have a look at the excellent answers on quora.

The code for generating the plots of this post can be found on github.

Logistic Regression – Geometric Intuition的更多相关文章

- 课程一(Neural Networks and Deep Learning),第二周(Basics of Neural Network programming)—— 4、Logistic Regression with a Neural Network mindset

Logistic Regression with a Neural Network mindset Welcome to the first (required) programming exerci ...

- 机器学习 (三) 逻辑回归 Logistic Regression

文章内容均来自斯坦福大学的Andrew Ng教授讲解的Machine Learning课程,本文是针对该课程的个人学习笔记,如有疏漏,请以原课程所讲述内容为准.感谢博主Rachel Zhang 的个人 ...

- 逻辑回归 Logistic Regression

逻辑回归(Logistic Regression)是广义线性回归的一种.逻辑回归是用来做分类任务的常用算法.分类任务的目标是找一个函数,把观测值匹配到相关的类和标签上.比如一个人有没有病,又因为噪声的 ...

- logistic regression与SVM

Logistic模型和SVM都是用于二分类,现在大概说一下两者的区别 ① 寻找最优超平面的方法不同 形象点说,Logistic模型找的那个超平面,是尽量让所有点都远离它,而SVM寻找的那个超平面,是只 ...

- Logistic Regression - Formula Deduction

Sigmoid Function \[ \sigma(z)=\frac{1}{1+e^{(-z)}} \] feature: axial symmetry: \[ \sigma(z)+ \sigma( ...

- SparkMLlib之 logistic regression源码分析

最近在研究机器学习,使用的工具是spark,本文是针对spar最新的源码Spark1.6.0的MLlib中的logistic regression, linear regression进行源码分析,其 ...

- [OpenCV] Samples 06: [ML] logistic regression

logistic regression,这个算法只能解决简单的线性二分类,在众多的机器学习分类算法中并不出众,但它能被改进为多分类,并换了另外一个名字softmax, 这可是深度学习中响当当的分类算法 ...

- Stanford机器学习笔记-2.Logistic Regression

Content: 2 Logistic Regression. 2.1 Classification. 2.2 Hypothesis representation. 2.2.1 Interpretin ...

- Logistic Regression vs Decision Trees vs SVM: Part II

This is the 2nd part of the series. Read the first part here: Logistic Regression Vs Decision Trees ...

随机推荐

- Js_封装JQ库为插件

//在jQuery匿名函数中,采用jQuery.extend();方法创建jQuery插件 //在jQuery匿名函数中, 采用对象.属性 = 函数的方式创建jQuery插件 (function ($ ...

- PAT甲题题解-1117. Eddington Number(25)-(大么个大水题~)

如题,大水题...贴个代码完事,就这么任性~~ #include <iostream> #include <cstdio> #include <algorithm> ...

- THE LAST ONE!! 2017《面向对象程序设计》课程作业八

THE LAST ONE!! 2017<面向对象程序设计>课程作业八 031602230 卢恺翔 GitHub传送门 题目描述 1.时间匆匆,本学期的博客作业就要结束了,是否有点不舍,是否 ...

- ios UnitTest 学习笔记

一.运行第一个单元测试: 1.在Xcode 5中新建一个工程默认自带一个单元测试的文件夹,IDE自动生成了一个实现XCTestCase的.m文件,里面有一个失败测试(早期版本中实现的是SenTestC ...

- 作业6小学生四则运算测试APP的NABCD模型

小学生四则运算测试NABCD模型 组员:李新,朱浩龙,叶煜稳,陈俊金,林德麟 (1) N (Need 需求) 需求分析: 四则运算是小学生学习数学的核心和基础,必须经过大量的练习才能熟练掌握,但是一 ...

- 关于Sample的分析报告

首先,这是一个典型的sample Table box: 一. Stbl box中常见的子box: stts:Decoding Time to Sample Box时间戳和Sample映射表. ...

- python项目离线环境配置指南

参考文献: http://blog.csdn.net/candcplusplus/article/details/52156324 https://www.cnblogs.com/michael-xi ...

- 圆桌的项目Alpha冲刺——测试

测试工作安排 作为一个测试计划来讲,核心的三个要素是时间,资源,范围.时间就是什么时候做以及要花多久做,资源就是你要调用的人力.机器等资源,范围是你要测试的东西以及测试重点. 时间:每天完成相应的模块 ...

- Python入门:for循环、while循环

Python中有两种循环,分别为:for循环和while循环. 1. for循环 for循环可以用来遍历某一对象(遍历:通俗点说,就是把这个循环中的第一个元素到最后一个元素依次访问一次).for循环的 ...

- pixi.js v5 快速了解

pixi.js 追求简单, 性能,高价值. pixi.js v5将是一交比较大的升级,代码更加精简,性能更加强悍,功能更加丰富,扩展更加高效 pixi.js一步一脚印,版本持续稳定的更新, 深入学习 ...