ZooKeeper pseudo-cluster installation and zkui management UI configuration

#======================= [VM host, binary installation]

# Installation environment

# OS = Linux CNT7XZKPD02 4.4.190-1.el7.elrepo.x86_64 #1 SMP Sun Aug 25 07:32:44 EDT 2019 x86_64 x86_64 x86_64 GNU/Linux

# JDK = java version "1.8.0_231" / Java HotSpot(TM) 64-Bit Server VM (build 25.231-b11, mixed mode)

# zookeeper = zookeeper-3.6.1-x64

# zkui = zkui-2.0. Note: Main.java was patched to fix a bug in how the path of config.cfg is resolved.

# https://github.com/tiandong19860806/zkui

# https://github.com/DeemOpen/zkui/issues/81

#========================install zookeeper========================================================================

# step: set the size of the system swap space, using the following rule:

# RAM / Swap Space

# Between 1 GB and 2 GB / 1.5 times the size of the RAM

# Between 2 GB and 16 GB / Equal to the size of the RAM

# More than 16 GB / 16 GB
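The sizing rule in the table can be written as a small shell helper. This is a sketch under stated assumptions. The function names `recommended_swap_mb` and `swap_dd_count` are made up for illustration, and all sizes are in MiB. RAM below 1 GB, and RAM of exactly 2 GB, is treated as the 1.5x case because the table leaves those boundaries open.

```shell
#!/bin/sh
# Recommended swap size in MiB for a given RAM size in MiB, following the table above.
# Assumption: the 1.5x band also covers RAM below 1 GB and exactly 2 GB.
recommended_swap_mb() {
    if [ "$1" -le 2048 ]; then
        echo $(( $1 * 3 / 2 ))      # up to 2 GB: 1.5 x RAM
    elif [ "$1" -le 16384 ]; then
        echo "$1"                   # 2 GB to 16 GB: same as RAM
    else
        echo 16384                  # more than 16 GB: 16 GB
    fi
}

# Number of dd blocks for a swap file of $1 MiB using a block size of $2 bytes.
swap_dd_count() {
    echo $(( $1 * 1024 * 1024 / $2 ))
}

# Example: read MemTotal (reported in KiB) and print the suggested dd parameters.
if [ -r /proc/meminfo ]; then
    ram_mb=$(awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo)
    swap_mb=$(recommended_swap_mb "$ram_mb")
    echo "RAM ${ram_mb} MiB -> swap ${swap_mb} MiB: dd bs=1024 count=$(swap_dd_count "$swap_mb" 1024)"
fi
```

For a 4 GB swap file written with `bs=1024`, `swap_dd_count 4096 1024` prints 4194304, which is the `count` value to pass to dd.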

# Run the following commands

# First, check whether the swap line in /etc/fstab is commented out; if it is, enable it again

cat /etc/fstab
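You can filter the file with awk to see at a glance whether each swap entry in /etc/fstab is active or commented out. This is a minimal sketch. `swap_entries` is a hypothetical helper name, and it only checks the third fstab field (the filesystem type).

```shell
#!/bin/sh
# List the swap entries of an fstab-style file and mark each one active or commented out.
# The file defaults to /etc/fstab; any copy can be passed as the first argument.
swap_entries() {
    awk '
    {
        line = $0
        commented = (line ~ /^[ \t]*#/)
        # On commented lines, drop the leading "#" characters to look at the entry underneath.
        sub(/^[ \t]*#+/, "", line)
        # Default awk splitting: fields are separated by runs of blanks.
        n = split(line, f)
        # fstab fields: device, mount point, type, options, dump, pass.
        if (n >= 3 && f[3] == "swap") {
            status = commented ? "commented" : "active"
            print status ": " f[1]
        }
    }
    ' "${1:-/etc/fstab}"
}

# Usage: swap_entries            # inspect /etc/fstab
```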

# Show the total swap size:

free -m

# Show the swap areas in use (file(s)/partition(s)):

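swapon -s

# Show the free space on the target disk path

df -h /home

# Turn off all swap space

swapoff -a

# Create the new swap file: bs is the size of each block (1024 bytes) and count is the number of blocks, so the total size is bs*count = 4 GB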

dd if=/dev/zero of=/home/system-swap bs=1024 count=4194304

# Output:

# 4194304+0 records in

# 4194304+0 records out

# 4294967296 bytes (4.3 GB) copied, 29.991 s, 143 MB/s

# Set the permissions of the swap file to 600

chmod 600 /home/system-swap

# Turn the new file into a swap area

/sbin/mkswap /home/system-swap

# Output:

# Setting up swapspace version 1, size = 4194300 KiB

# no label, UUID=941e36a8-d389--ad7d-07387e1da776

# Activate the new swap file

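# Note: a swap file enabled only with swapon is inactive again after a reboot; it stays enabled permanently only after the /etc/fstab change below.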

/sbin/swapon /home/system-swap

# Keep the swap file enabled after a reboot

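# Edit the following file: comment out the old swap line and add one for the new swap file /home/system-swap

vi /etc/fstab

# Resulting swap lines (old entry commented out, new entry added):

# #/dev/mapper/centos-swap swap swap defaults 0 0

# /home/system-swap swap swap defaults 0 0

# Disable SELinux by setting SELINUX=disabled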

vi /etc/selinux/config

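# Change the setting as follows (SELINUX is the on/off switch; SELINUXTYPE only selects the policy, e.g. targeted, and stays as it is):

# # SELINUX=enforcing

SELINUX=disabled

# The file is only read at boot; to stop enforcing right away, also run:

setenforce 0

# =============================================================================================================

# step: install system dependencies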

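# Optionally switch yum to a mirror in China (Aliyun). This depends on the situation: the domestic mirror is not always complete, and in that case you have to switch back to the official repositories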

# cp /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup-linux && \

# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo && \

yum clean all && \

yum makecache

# Clean out stale repo metadata

yum --enablerepo=base clean metadata

# Install the dependencies

yum install binutils -y && \

yum install compat-libstdc++-33 -y && \

yum install gcc -y && \

yum install gcc-c++ -y && \

yum install glibc -y && \

yum install glibc-devel -y && \

yum install libgcc -y && \

yum install libstdc++ -y && \

yum install libstdc++-devel -y && \

yum install libaio -y && \

yum install libaio-devel -y && \

yum install libXext -y && \

yum install libXtst -y && \

yum install libX11 -y && \

yum install libXau -y && \

yum install libxcb -y && \

yum install libXi -y && \

yum install make -y && \

yum install sysstat -y && \

yum install zlib-devel -y && \

yum install elfutils-libelf-devel -y
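Instead of reading the whole `rpm -q` report, you can list only the packages that are still missing. This is a sketch; `missing_packages` is a hypothetical helper name. It relies only on `rpm -q --quiet`, whose exit status is non-zero when a package is not installed.

```shell
#!/bin/sh
# Print each package from the argument list that rpm reports as not installed.
missing_packages() {
    for pkg in "$@"; do
        # rpm -q exits non-zero for a package that is not installed; --quiet suppresses the message.
        rpm -q --quiet "$pkg" || echo "$pkg"
    done
}

# Example (as root): install only what is still missing from part of the list above.
# missing=$(missing_packages binutils gcc gcc-c++ glibc-devel libaio-devel sysstat)
# [ -n "$missing" ] && yum install -y $missing
```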

# Check which of the packages are installed

rpm -q --queryformat "%{name}-%{version}-%{release}-%{arch}\n" \
    compat-libstdc++-33 glibc-kernheaders glibc-headers libaio libgcc glibc-devel xorg-x11-deprecated-libs

# Output (packages that could not be downloaded and installed are reported as not installed):

# package compat-libstdc++-33 is not installed

# package glibc-kernheaders is not installed

# package glibc-headers is not installed

# libaio-0.3.-.el7-x86_64

# libgcc-4.8.-.el7-x86_64

# package glibc-devel is not installed

# package xorg-x11-deprecated-libs is not installed

# For packages that cannot be downloaded from the Aliyun mirror, switch the yum repo file back and install them again

# cp /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup-aliyun && \

# cp /etc/yum.repos.d/CentOS-Base.repo.backup-linux /etc/yum.repos.d/CentOS-Base.repo && \

yum clean all && \

yum makecache && \

yum install -y compat-libstdc++* && \

yum install -y glibc-kernheaders* && \

yum install -y glibc-headers* && \

yum install -y libaio-* && \

yum install -y libgcc-* && \

yum install -y glibc-devel* && \

yum install -y xorg-x11-deprecated-libs*

# Make sure libaio is installed; asynchronous I/O is enabled by default.

# Check whether asynchronous I/O (AIO) is in use on the system (reading /proc/slabinfo requires root)

cat /proc/slabinfo | grep kio
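Reading /proc/slabinfo needs root, and its layout varies between kernels. The kernel's AIO counters under /proc/sys/fs are a simpler check. This is a sketch. `aio_report` is a hypothetical name, and the directory argument exists only so the function can be pointed at a copy for testing.

```shell
#!/bin/sh
# Report the kernel's AIO counters: aio-nr is the number of AIO events currently allocated
# system-wide, aio-max-nr is the upper limit. Both files exist on kernels built with AIO support.
# The directory defaults to /proc/sys/fs.
aio_report() {
    dir=${1:-/proc/sys/fs}
    if [ -r "$dir/aio-max-nr" ] && [ -r "$dir/aio-nr" ]; then
        echo "aio-nr=$(cat "$dir/aio-nr") aio-max-nr=$(cat "$dir/aio-max-nr")"
    else
        echo "AIO counters not found under $dir" >&2
        return 1
    fi
}

# Usage: aio_report
```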

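# /proc/slabinfo is a report generated by the kernel, so adding lines to it does not enable AIO. Native AIO is part of the kernel itself (the kernel creates the kioctx/kiocb slab caches on its own); libaio only provides the user-space library, so there is nothing to switch on here.

# =============================================================================================================

# step: create the ZooKeeper installation directories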

mkdir -p /opt/soft/{jdk,zookeeper}

# Then upload the JDK and ZooKeeper binary packages to the software directories created above

# Create the ZooKeeper installation root

mkdir -p /app/zookeeper && \

# Create the ZooKeeper data root

mkdir -p /data/zookeeper && \

# Create the ZooKeeper log root

mkdir -p /log/zookeeper

# =============================================================================================================

# step: create the ZooKeeper install user and groups

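# Run the following as root:

# Create the ops_install group (add -g <GID> to pin a specific group ID)

groupadd ops_install

# Create the ops_admin group

groupadd ops_admin

# Create the zookeeper user (primary group ops_install, supplementary group ops_admin)

useradd -g ops_install -G ops_admin zookeeper

# Set the zookeeper user's password

echo 'password' | passwd --stdin zookeeper

# To delete the user together with its home directory and mail spool: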

# userdel -r zookeeper

# Check that the zookeeper user's groups are set correctly; the expected output is:

# id zookeeper

# [root@CNT7XZKPD02 ~]# id zookeeper

# uid=(zookeeper) gid=(ops_install) groups=(ops_install),(ops_admin)

# =============================================================================================================

# step: set up the profile environment

# Edit /etc/profile and append the following

vi /etc/profile

# -----------------------java env-----------------------------------------------------------------

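export JAVA_HOME=/env/jdk/jdk-12.0.2

export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH

# jre/, dt.jar and tools.jar exist only in JDK 8 and earlier; with JDK 12 these entries are unused but harmless

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar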

# -----------------------java env-----------------------------------------------------------------

# -----------------------zookeeper env---------------------------------------------------------------

export ZOOKEEPER_HOME=/app/zookeeper/zookeeper-

export PATH=$ZOOKEEPER_HOME/bin:$PATH

# -----------------------zookeeper env---------------------------------------------------------------

# Apply the environment variables

source /etc/profile

# Check that the variables are set

env | grep ZOOKEEPER

env | grep JAVA

# =============================================================================================================

# step: install the JDK

# Create the JDK software (upload) directory and installation directory:

mkdir -p /opt/soft/jdk/ && \

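mkdir -p /env/jdk/

# Then copy the JDK binary tarball (jdk-12.0.2 in the commands below) into the software directory with WinSCP

ls -al /opt/soft/jdk/jdk-12.0.2_linux-x64_bin.tar.gz

# Extract the JDK into the installation directory

tar -zxvf /opt/soft/jdk/jdk-12.0.2_linux-x64_bin.tar.gz -C /env/jdk/

# =============================================================================================================

# Pseudo-cluster, nodes 1/2/3

# step: create the corresponding file systems (installation directories)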

# for loop - begin

V_NODE_NUM=3

for ((i=1;i<=${V_NODE_NUM};i++))

do

mkdir -p /app/zookeeper/zookeeper-${i} && \

mkdir -p /data/zookeeper/zookeeper-${i} && \

mkdir -p /log/zookeeper/zookeeper-${i}

done

# for loop - end

ls -al /app/zookeeper

# Output:

# [root@CNT7XZKPD02 ~]# ls -al /app/zookeeper

# total

# drwxr-xr-x root root Jun : .

# drwxr-xr-x root root Jun : ..

# drwxrwxr-x zookeeper ops_install Jun : zookeeper-

# drwxrwxr-x zookeeper ops_install Jun : zookeeper-

# drwxrwxr-x zookeeper ops_install Jun : zookeeper-

ls -al /data/zookeeper

# Output:

# [root@CNT7XZKPD02 ~]# ls -al /data/zookeeper/

# total

# drwxr-xr-x root root Jun : .

# drwxr-xr-x root root Jun : ..

# drwxrwxr-x zookeeper ops_install Jun : zookeeper-

# drwxrwxr-x zookeeper ops_install Jun : zookeeper-

# drwxrwxr-x zookeeper ops_install Jun : zookeeper-

ls -al /log/zookeeper

# Output:

# [root@CNT7XZKPD02 ~]# ls -al /log/zookeeper

# total

# drwxr-xr-x root root Jun : .

# drwxr-xr-x root root Jun : ..

# drwxrwxr-x zookeeper ops_install Jun : zookeeper-

# drwxrwxr-x zookeeper ops_install Jun : zookeeper-

# drwxrwxr-x zookeeper ops_install Jun : zookeeper-

# Extract ZooKeeper into the installation root; it is copied into the three pseudo-node directories below

tar -zxvf /opt/soft/zookeeper/apache-zookeeper-3.6.1-bin.tar.gz -C /app/zookeeper/

# List the extracted ZooKeeper files

ls -al /app/zookeeper/apache-zookeeper-3.6.1-bin

# After extraction, the directory contains the following files:

# [root@CNT7XZKPD02 ~]# ls -al /app/zookeeper/apache-zookeeper-3.6.1-bin

# total

# drwxr-xr-x root root Jun : .

# drwxrwxr-x zookeeper ops_install Jun : ..

# drwxr-xr-x root root May : bin

# drwxr-xr-x root root May : conf

# drwxr-xr-x root root May : docs

# drwxr-xr-x root root Jun : lib

# -rw-r--r-- root root May : LICENSE.txt

# -rw-r--r-- root root May : NOTICE.txt

# -rw-r--r-- root root May : README.md

# -rw-r--r-- root root May : README_packaging.txt

# Rename the directory to zookeeper-3.6.1

mv /app/zookeeper/apache-zookeeper-3.6.1-bin /app/zookeeper/zookeeper-3.6.1

# Pseudo-cluster: copy the installation into the three node directories (myid = 1/2/3)

for ((i=1;i<=${V_NODE_NUM};i++))

do

cp -rf /app/zookeeper/zookeeper-3.6.1/* /app/zookeeper/zookeeper-${i}/

# Create each node's zoo.cfg from the bundled sample

cp /app/zookeeper/zookeeper-${i}/conf/zoo_sample.cfg /app/zookeeper/zookeeper-${i}/conf/zoo.cfg

done

# for loop - end

# Grant the zookeeper user access to the directories and files

V_NODE_NUM=3

for ((i=1;i<=${V_NODE_NUM};i++))

do

chmod -R 775 /app/zookeeper/zookeeper-${i} && \

chown -R zookeeper:ops_install /app/zookeeper/zookeeper-${i} && \

chmod -R 775 /data/zookeeper/zookeeper-${i} && \

chown -R zookeeper:ops_install /data/zookeeper/zookeeper-${i} && \

chmod -R 775 /log/zookeeper/zookeeper-${i} && \

chown -R zookeeper:ops_install /log/zookeeper/zookeeper-${i}

done

# for loop - end

# =============================================================================================================

# Configure the pseudo-cluster

# step 10: configure ZooKeeper's zoo.cfg

# --------------------------------------------------

# Node 1

# First, edit the configuration file

# Note: name the file zoo.cfg, the default name zkServer.sh looks for

# cp /app/zookeeper/zookeeper-1/conf/zoo_sample.cfg /app/zookeeper/zookeeper-1/conf/zoo.cfg

vi /app/zookeeper/zookeeper-1/conf/zoo.cfg

# Change the settings as follows:

# Setting 1: data directory and transaction log directory

dataDir=/data/zookeeper/zookeeper-1

dataLogDir=/log/zookeeper/zookeeper-1

# Setting 2: the server.N entries, which list the cluster members

# Note: for a pseudo-cluster (all ZooKeeper nodes deployed on one VM), give every server.N entry its own ports

# Format: server.N={IP or HOSTNAME}:{quorum port, default 2888}:{leader-election port, default 3888}

server.1=CNT7XZKPD02:2881:3881

server.2=CNT7XZKPD02:2882:3882

server.3=CNT7XZKPD02:2883:3883

# Setting 3: client port

clientPort=2181

# --------------------------------------------------

# Node 2

# First, edit the configuration file

# Note: name the file zoo.cfg, the default name zkServer.sh looks for

# cp /app/zookeeper/zookeeper-2/conf/zoo_sample.cfg /app/zookeeper/zookeeper-2/conf/zoo.cfg

vi /app/zookeeper/zookeeper-2/conf/zoo.cfg

# Change the settings as follows:

# Setting 1: data directory and transaction log directory

dataDir=/data/zookeeper/zookeeper-2

dataLogDir=/log/zookeeper/zookeeper-2

# Setting 2: the server.N entries, which list the cluster members

# Note: for a pseudo-cluster (all ZooKeeper nodes deployed on one VM), give every server.N entry its own ports

# Format: server.N={IP or HOSTNAME}:{quorum port, default 2888}:{leader-election port, default 3888}

server.1=CNT7XZKPD02:2881:3881

server.2=CNT7XZKPD02:2882:3882

server.3=CNT7XZKPD02:2883:3883

# Setting 3: client port

clientPort=2182

# --------------------------------------------------

# Node 3

# First, edit the configuration file

# Note: name the file zoo.cfg, the default name zkServer.sh looks for

# cp /app/zookeeper/zookeeper-3/conf/zoo_sample.cfg /app/zookeeper/zookeeper-3/conf/zoo.cfg

vi /app/zookeeper/zookeeper-3/conf/zoo.cfg

# Change the settings as follows:

# Setting 1: data directory and transaction log directory

dataDir=/data/zookeeper/zookeeper-3

dataLogDir=/log/zookeeper/zookeeper-3

# Setting 2: the server.N entries, which list the cluster members

# Note: for a pseudo-cluster (all ZooKeeper nodes deployed on one VM), give every server.N entry its own ports

# Format: server.N={IP or HOSTNAME}:{quorum port, default 2888}:{leader-election port, default 3888}

server.1=CNT7XZKPD02:2881:3881

server.2=CNT7XZKPD02:2882:3882

server.3=CNT7XZKPD02:2883:3883

# Setting 3: client port

clientPort=2183

# =============================================================================================================

# Configure the pseudo-cluster
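The three zoo.cfg files above differ only in dataDir, dataLogDir and clientPort, and the myid files follow the same pattern. You can therefore generate both in one loop. This is a sketch, not the procedure this guide follows:

- `gen_pseudo_cluster` is a hypothetical helper.
- The timing values are copied from zoo_sample.cfg (tickTime=2000, initLimit=10, syncLimit=5).
- The `admin.serverPort` line is an addition. Without it, every node tries to start its AdminServer on the default port 8080. That is most likely why only one java process listens on 8080 in the netstat listing further down.

```shell
#!/bin/sh
# Generate zoo.cfg and myid for N pseudo-cluster nodes on one host.
# Assumed layout (as in this guide): APP_ROOT/zookeeper-N/conf, DATA_ROOT/zookeeper-N, LOG_ROOT/zookeeper-N.
# Ports: client 2180+N, quorum 2880+N, election 3880+N, AdminServer 8079+N (the last is an addition).
gen_pseudo_cluster() {
    host=$1 nodes=$2 app_root=$3 data_root=$4 log_root=$5
    i=1
    while [ "$i" -le "$nodes" ]; do
        conf_dir="$app_root/zookeeper-$i/conf"
        data_dir="$data_root/zookeeper-$i"
        mkdir -p "$conf_dir" "$data_dir" "$log_root/zookeeper-$i"
        {
            echo "tickTime=2000"
            echo "initLimit=10"
            echo "syncLimit=5"
            echo "dataDir=$data_dir"
            echo "dataLogDir=$log_root/zookeeper-$i"
            echo "clientPort=$((2180 + i))"
            echo "admin.serverPort=$((8079 + i))"
            # Every node lists every member of the ensemble.
            j=1
            while [ "$j" -le "$nodes" ]; do
                echo "server.$j=$host:$((2880 + j)):$((3880 + j))"
                j=$((j + 1))
            done
        } > "$conf_dir/zoo.cfg"
        # myid holds only this node's number, matching server.N above.
        echo "$i" > "$data_dir/myid"
        i=$((i + 1))
    done
}
```

To use it as root, run `gen_pseudo_cluster CNT7XZKPD02 3 /app/zookeeper /data/zookeeper /log/zookeeper`. Then rerun the chown loop from earlier so the zookeeper user owns the generated files.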

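# step 11: create ZooKeeper's myid files

# Nodes 1/2/3

# Pseudo-cluster: write myid = 1/2/3 into each node's dataDir

for ((i=1;i<=${V_NODE_NUM};i++))

do

# myid holds only this node's number, matching server.N in zoo.cfg

echo "${i}" > /data/zookeeper/zookeeper-${i}/myid

done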

# for loop - end

# ==================================================================================================================================

# step 12: start ZooKeeper

# Start the services: nodes 1/2/3

/app/zookeeper/zookeeper-1/bin/zkServer.sh start /app/zookeeper/zookeeper-1/conf/zoo.cfg

/app/zookeeper/zookeeper-2/bin/zkServer.sh start /app/zookeeper/zookeeper-2/conf/zoo.cfg

/app/zookeeper/zookeeper-3/bin/zkServer.sh start /app/zookeeper/zookeeper-3/conf/zoo.cfg

# Check each node's role (leader or follower): nodes 1/2/3

/app/zookeeper/zookeeper-1/bin/zkServer.sh status /app/zookeeper/zookeeper-1/conf/zoo.cfg

/app/zookeeper/zookeeper-1/bin/zkServer.sh status /app/zookeeper/zookeeper-2/conf/zoo.cfg

/app/zookeeper/zookeeper-1/bin/zkServer.sh status /app/zookeeper/zookeeper-3/conf/zoo.cfg

# Stop the services: nodes 1/2/3

/app/zookeeper/zookeeper-1/bin/zkServer.sh stop /app/zookeeper/zookeeper-1/conf/zoo.cfg

/app/zookeeper/zookeeper-1/bin/zkServer.sh stop /app/zookeeper/zookeeper-2/conf/zoo.cfg

/app/zookeeper/zookeeper-1/bin/zkServer.sh stop /app/zookeeper/zookeeper-3/conf/zoo.cfg

# The ports of the three nodes while ZooKeeper is running:

# client_port = 2181 / 2182 / 2183

# server_port = 2881:3881 / 2882:3882 / 2883:3883
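In the listing below, the client ports 2181-2183 and the election ports 3881-3883 appear for every node. Only one quorum port (2882) is open, because only the current leader listens on its quorum port and the followers connect to it. The sketch below checks the ports every node should always have. `missing_ports` is a hypothetical helper, and its port list is hard-coded to the values from the zoo.cfg files above.

```shell
#!/bin/sh
# Read a listening-socket listing (e.g. `netstat -nlt` or `ss -ltn` output) on stdin and
# print each expected port that does not appear in it. Quorum ports 2881-2883 are not
# checked because only the leader listens on its quorum port.
missing_ports() {
    listing=$(cat)
    for port in 2181 2182 2183 3881 3882 3883; do
        # Require ":PORT" followed by whitespace so that 2181 does not match 21810.
        printf '%s\n' "$listing" | grep -Eq ":${port}[[:space:]]" || echo "$port"
    done
}

# Usage: netstat -nlt | missing_ports     # prints nothing when all expected ports listen
```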

[root@CNT7XZKPD02 ~]# netstat -nltp | grep java

tcp 0 0 0.0.0.0:8080 0.0.0.0:* LISTEN 2533/java

tcp 0 0 0.0.0.0:35581 0.0.0.0:* LISTEN 2533/java

tcp 0 0 192.168.16.32:2882 0.0.0.0:* LISTEN 2595/java

tcp 0 0 0.0.0.0:2181 0.0.0.0:* LISTEN 2533/java

tcp 0 0 0.0.0.0:2182 0.0.0.0:* LISTEN 2595/java

tcp 0 0 0.0.0.0:45062 0.0.0.0:* LISTEN 2595/java

tcp 0 0 0.0.0.0:2183 0.0.0.0:* LISTEN 2663/java

tcp 0 0 0.0.0.0:34312 0.0.0.0:* LISTEN 2663/java

tcp 0 0 192.168.16.32:3881 0.0.0.0:* LISTEN 2533/java

tcp 0 0 192.168.16.32:3882 0.0.0.0:* LISTEN 2595/java

tcp 0 0 192.168.16.32:3883 0.0.0.0:* LISTEN 2663/java

# --------------------------------------------------------------------------------------------------

# Using the ZooKeeper command line

# Connect to a server: zkCli.sh -server {server_zookeeper_ip}:{server_client_port}

zkCli.sh -server 127.0.0.1:2181

zkCli.sh -server 127.0.0.1:2182

zkCli.sh -server 127.0.0.1:2183

# or

zkCli.sh -server 192.168.16.32:2181

zkCli.sh -server 192.168.16.32:2182

zkCli.sh -server 192.168.16.32:2183

# Then enter the following commands at the zookeeper prompt:

# Create a node: path = "/data-test", value = "zookeeper"

[zk: 127.0.0.1:2182(CONNECTED) 0] create "/data-test" "zookeeper"

# Read the data

[zk: 127.0.0.1:2181(CONNECTED) 15] get "/data-test"

zookeeper

# Update the data: path = /data-test, value = "hello zookeeper"

[zk: 127.0.0.1:2182(CONNECTED) 0] set "/data-test" "hello zookeeper"

# Read the data

[zk: 127.0.0.1:2182(CONNECTED) 5] get "/data-test"

hello zookeeper

# Add a child node: path = /data-test/sub-key-01, value = "sub-value-01"

[zk: 192.168.16.32:2183(CONNECTED) 2] create "/data-test/sub-key-01" "sub-value-01"

Created /data-test/sub-key-01

# Read the data

[zk: 192.168.16.32:2183(CONNECTED) 3] get "/data-test/sub-key-01"

sub-value-01

[zk: 192.168.16.32:2183(CONNECTED) 4] get "/data-test"

hello zookeeper

[zk: 192.168.16.32:2183(CONNECTED) 5] get /data-test

hello zookeeper

# Or read the same data through another node (port 2181)

[zk: 127.0.0.1:2181(CONNECTED) 21] get "/data-test/sub-key-01"

sub-value-01

[zk: 127.0.0.1:2181(CONNECTED) 22] get "/data-test"

hello zookeeper

[zk: 127.0.0.1:2181(CONNECTED) 23] get /data-test

hello zookeeper

# List the child nodes

[zk: 192.168.16.32:2183(CONNECTED) 6] ls /

[data-test, zookeeper]

# Add another child node: path = /data-test/sub-key-02, value = "sub-value-02"

[zk: 192.168.16.32:2183(CONNECTED) 9] create "/data-test/sub-key-02" "sub-value-02"

Created /data-test/sub-key-02

[zk: 192.168.16.32:2183(CONNECTED) 10] ls "/data-test"

[sub-key-01, sub-key-02]

# Delete a single node

[zk: 127.0.0.1:2181(CONNECTED) 21] delete "/data-test/sub-key-02"

[zk: 192.168.16.32:2183(CONNECTED) 14] ls "/data-test"

[sub-key-01]

# Delete a node together with all of its children

# rmr = command in older versions (deprecated)

[zk: 127.0.0.1:2181(CONNECTED) 21] rmr "/data-test"

# or deleteall = command in newer versions

[zk: 127.0.0.1:2181(CONNECTED) 21] deleteall "/data-test"

# Check the result: /data-test and its children no longer exist

[zk: 192.168.16.32:2183(CONNECTED) 25] ls /data-test

Node does not exist: /data-test

# ====================================================================================================================================================

# step 13: start ZooKeeper at boot

# Create the zookeeper-1.service file (and likewise for nodes 2 and 3) as follows

# Switch to the root account

su root

# Nodes 1/2/3

# Pseudo-cluster: create a systemd service file for each of the three nodes (myid = 1/2/3)

V_NODE_NUM=3

for ((i=1;i<=${V_NODE_NUM};i++))

do

echo "${i}, begin the service register : zookeeper-${i}, ...."

cat > /etc/systemd/system/zookeeper-${i}.service <<EOF

[Unit]

Description=zookeeper-${i} service

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

User=zookeeper

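Type=forking

# zkServer.sh writes its PID to <dataDir>/zookeeper_server.pid

PIDFile=/data/zookeeper/zookeeper-${i}/zookeeper_server.pid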

TimeoutSec=0

Environment="JAVA_HOME=/env/jdk/jdk-12.0.2"

ExecStart=/app/zookeeper/zookeeper-${i}/bin/zkServer.sh start /app/zookeeper/zookeeper-${i}/conf/zoo.cfg

# ExecStop=/app/zookeeper/zookeeper-${i}/bin/zkServer.sh stop /app/zookeeper/zookeeper-${i}/conf/zoo.cfg

# RestartSec only takes effect together with a Restart= policy

Restart=on-failure

RestartSec=5

LimitNOFILE=1000000

[Install]

WantedBy=multi-user.target

EOF

# Reload systemd so it picks up the new unit, then register the service

systemctl daemon-reload

systemctl enable zookeeper-${i}

# start service

systemctl start zookeeper-${i}

# check service

systemctl status zookeeper-${i}

ps -ef | grep zookeeper-${i}

# netstat shows PID/program (java), not the unit name, so match the node's client port 218${i}

netstat -nltp | grep 218${i}

echo "${i}, finish the service register : zookeeper-${i}, ...."

done

# for loop - end

# ====================================================================================================================================================

# step 14: install the ZooKeeper web UI tool zkui

# 1. First, download the source code from the git repository below and build it with Maven (and Eclipse) to get the jar

# version = zkui-2.0-SNAPSHOT

# SOURCE = https://github.com/DeemOpen/zkui.git

# git clone https://github.com/DeemOpen/zkui.git

# 2. Create the zkui installation directory on the Linux server

mkdir -p /app/zkui/zkui-2.0

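# Copy the built jar zkui-2.0-SNAPSHOT-jar-with-dependencies.jar (the one started below) into this directory

ls -al /app/zkui/zkui-2.0/zkui-2.0-SNAPSHOT-jar-with-dependencies.jar

# 3. Create the zkui configuration file as follows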

# Note: zkui does not have to be installed on the same server as ZooKeeper.

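# Quote the EOF marker so the shell copies the file literally (otherwise "\\" in ldapRoleSet collapses to "\")

cat > /app/zkui/zkui-2.0/config.cfg <<'EOF'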

#Server Port

serverPort=19090

#Comma separated list of all the zookeeper servers

zkServer=CNT7XZKPD02:2181,CNT7XZKPD02:2182,CNT7XZKPD02:2183

#Http path of the repository. Ignore if you don't intend to upload files from repository.

scmRepo=http://CNT7XZKPD02:2181/@rev1=

#Path appended to the repo url. Ignore if you don't intend to upload files from repository.

scmRepoPath=//appconfig.txt

#if set to true then ldap authentication is used, else userSet is used for authentication.

ldapAuth=false

ldapDomain=mycompany,mydomain

#ldap authentication url. Ignore if using file based authentication.

ldapUrl=ldap://<ldap_host>:<ldap_port>/dc=mycom,dc=com

#Specific roles for ldap authenticated users. Ignore if using file based authentication.

ldapRoleSet={"users": [{ "username":"domain\\user1" , "role": "ADMIN" }]}

userSet = {"users": [{ "username":"admin" , "password":"password","role": "ADMIN" },{ "username":"appconfig" , "password":"password#123","role": "USER" }]}

#Set to prod in production and dev in local. Setting to dev will clear history each time.

env=prod

jdbcClass=org.h2.Driver

jdbcUrl=jdbc:h2:zkui

jdbcUser=root

jdbcPwd=password

#If you want to use mysql db to store history then comment the h2 db section.

#jdbcClass=com.mysql.jdbc.Driver

#jdbcUrl=jdbc:mysql://localhost:3306/zkui

#jdbcUser=root

#jdbcPwd=password

loginMessage=Please login using admin/manager or appconfig/appconfig.

#session timeout 5 mins/300 secs.

sessionTimeout=300

#Default 5 seconds to keep short lived zk sessions. If you have large data then the read will take more than 30 seconds so increase this accordingly.

#A bigger zkSessionTimeout means the connection will be held longer and resource consumption will be high.

zkSessionTimeout=5

#Block PWD exposure over rest call.

blockPwdOverRest=false

#ignore rest of the props below if https=false.

https=false

keystoreFile=/home/user/keystore.jks

keystorePwd=password

keystoreManagerPwd=password

# The default ACL to use for all creation of nodes. If left blank, then all nodes will be universally accessible

# Permissions are based on single character flags: c (Create), r (read), w (write), d (delete), a (admin), * (all)

# For example defaultAcl={"acls": [{"scheme":"ip", "id":"192.168.1.192", "perms":"*"}, {"scheme":"ip", "id":"192.168.1.0/24", "perms":"r"}]}

defaultAcl=

# Set X-Forwarded-For to true if zkui is behind a proxy

X-Forwarded-For=false

EOF

# 4. Give the zookeeper account permissions on the installation directory

ls -al /app/zkui/zkui-2.0/ && \

chmod -R 775 /app/zkui/zkui-2.0/ && \

chown -R zookeeper:ops_install /app/zkui/zkui-2.0/ && \

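ls -al /app/zkui/zkui-2.0/

# 5. Start zkui as follows (run it from the installation directory; upstream zkui reads config.cfg from the current working directory)

cd /app/zkui/zkui-2.0 && java -Xms128m -Xmx512m -XX:MaxMetaspaceSize=256m -jar /app/zkui/zkui-2.0/zkui-2.0-SNAPSHOT-jar-with-dependencies.jar

# 6. Start zkui at boot as follows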

cat > /etc/systemd/system/zkui.service <<EOF

[Unit]

Description=zkui-2.0 service

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

User=zookeeper

# java -jar runs in the foreground and does not fork, so the unit type is simple

Type=simple

TimeoutSec=0

Environment="ZKUI_HOME=/app/zkui/zkui-2.0/"

WorkingDirectory=/app/zkui/zkui-2.0

ExecStart=${JAVA_HOME}/bin/java -Xms128m -Xmx512m -XX:MaxMetaspaceSize=256m -jar /app/zkui/zkui-2.0/zkui-2.0-SNAPSHOT-jar-with-dependencies.jar

Restart=on-failure

RestartSec=5

LimitNOFILE=1000000

[Install]

WantedBy=multi-user.target

EOF

# Reload systemd and register the service

systemctl daemon-reload

systemctl enable zkui

# Start the service

systemctl start zkui

# Check the service

systemctl status zkui

netstat -nltp | grep 19090

ps -ef | grep zkui

# ====================================================================================================================================================
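Besides `zkServer.sh status`, you can query each node's role directly with the `srvr` four-letter command. This is the command `zkServer.sh status` itself uses, and it is whitelisted by default (see `4lw.commands.whitelist`). This is a sketch. It needs bash for the `/dev/tcp` pseudo-device, and `zk_mode` and `parse_mode` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Extract the "Mode:" value (leader / follower / standalone) from srvr output on stdin.
parse_mode() {
    sed -n 's/^Mode: //p'
}

# Ask one ZooKeeper node for its role. The subshell keeps fd 3 (the TCP socket opened
# through bash's /dev/tcp) local and closes it when the subshell exits.
zk_mode() {
    local host=$1 port=$2
    (
        exec 3<>"/dev/tcp/${host}/${port}" || exit 1
        printf 'srvr' >&3
        parse_mode <&3
    )
}

# Usage, assuming the three client ports configured above:
# for port in 2181 2182 2183; do echo "$port: $(zk_mode 127.0.0.1 "$port")"; done
```

A healthy three-node ensemble should report one leader and two followers.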

Finally, the screenshots:

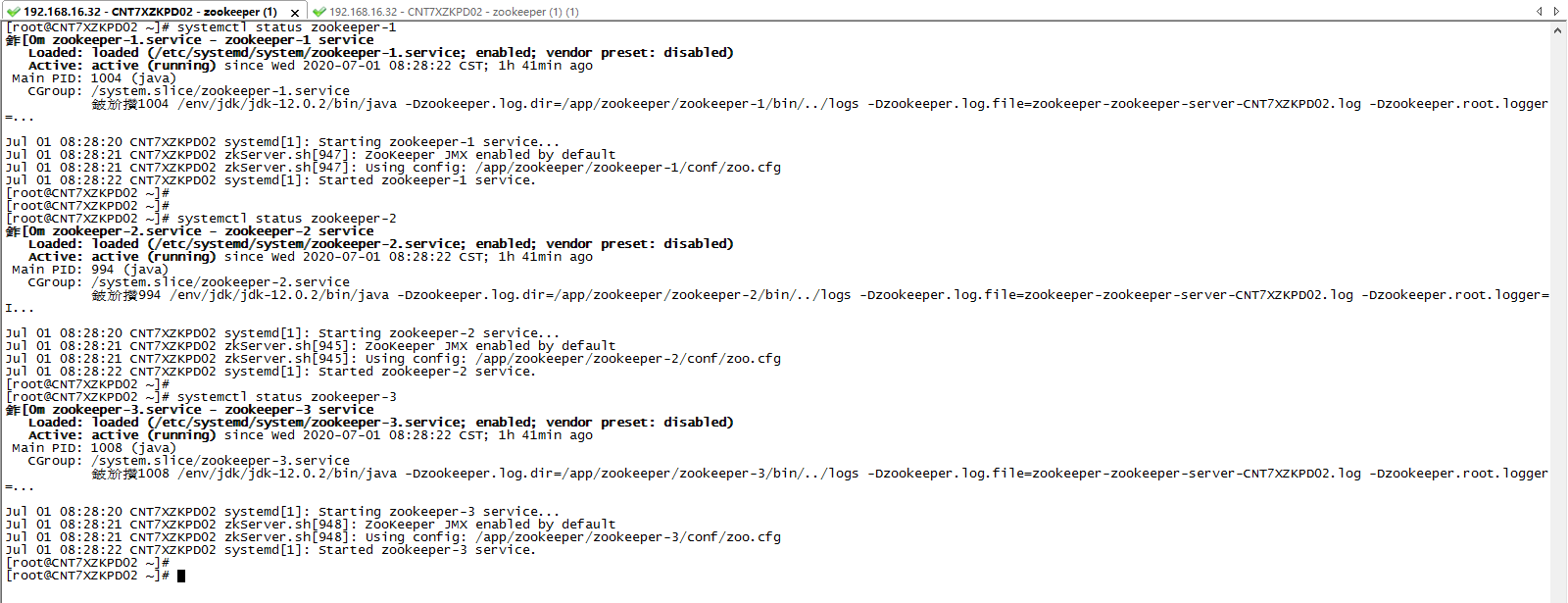

1. ZooKeeper running:

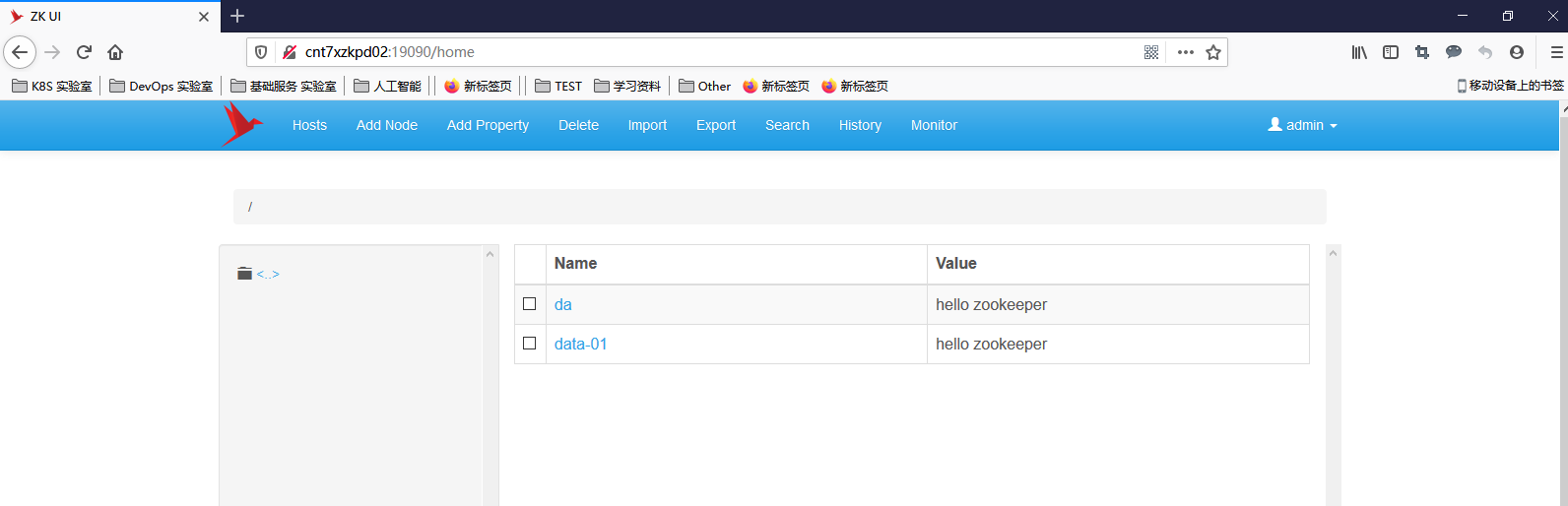

2. zkui running:
