FLink同步mysql到Iceberg丢数问题排查解决

一、问题背景

数据对比程序发现通过Flink cdc同步mysql写入Iceberg表运行一段时间后存在丢数问题,排查到丢数是由于hivemetastore钩子函数缺陷引起,由腾讯云EMR修复

钩子函数作用:在查询表时,更新hivemetastore中表的最近访问时间

产生丢数的原因:因钩子函数需要更新表的访问时间,每次有用户或程序查询iceberg表时,会查询两次表元数据信息,第一次是进入钩子时查询元数据放入本地缓存,第二次是更新元数据前查询,如果发现信息不一致时候,会用缓存中的旧元数据信息覆盖新元数据信息。

例:如果在第一次查询和第二次查询之前(1s以内)由Flink产生新的快照,则新的快照数据会丢失(包含新增和变更数据)。

解决方法:新增jar包,修改hivemetastore钩子函数实现方式。新的实现方式在每次查询iceberg表后,因稳定性问题暂时不记录iceberg表最新访问时间,解决丢失问题后再支持记录。

包名称:gaotu-2.3.7.jar

二、排查步骤

1、确定丢数范围

通过数据对比程序发现丢失的数据,根据数据中的时间字段确定丢失时间,Flink checkpoint间隔为5分钟

丢失范围:2024-11-20 15:14:21 到 2024-11-20 15:19:21

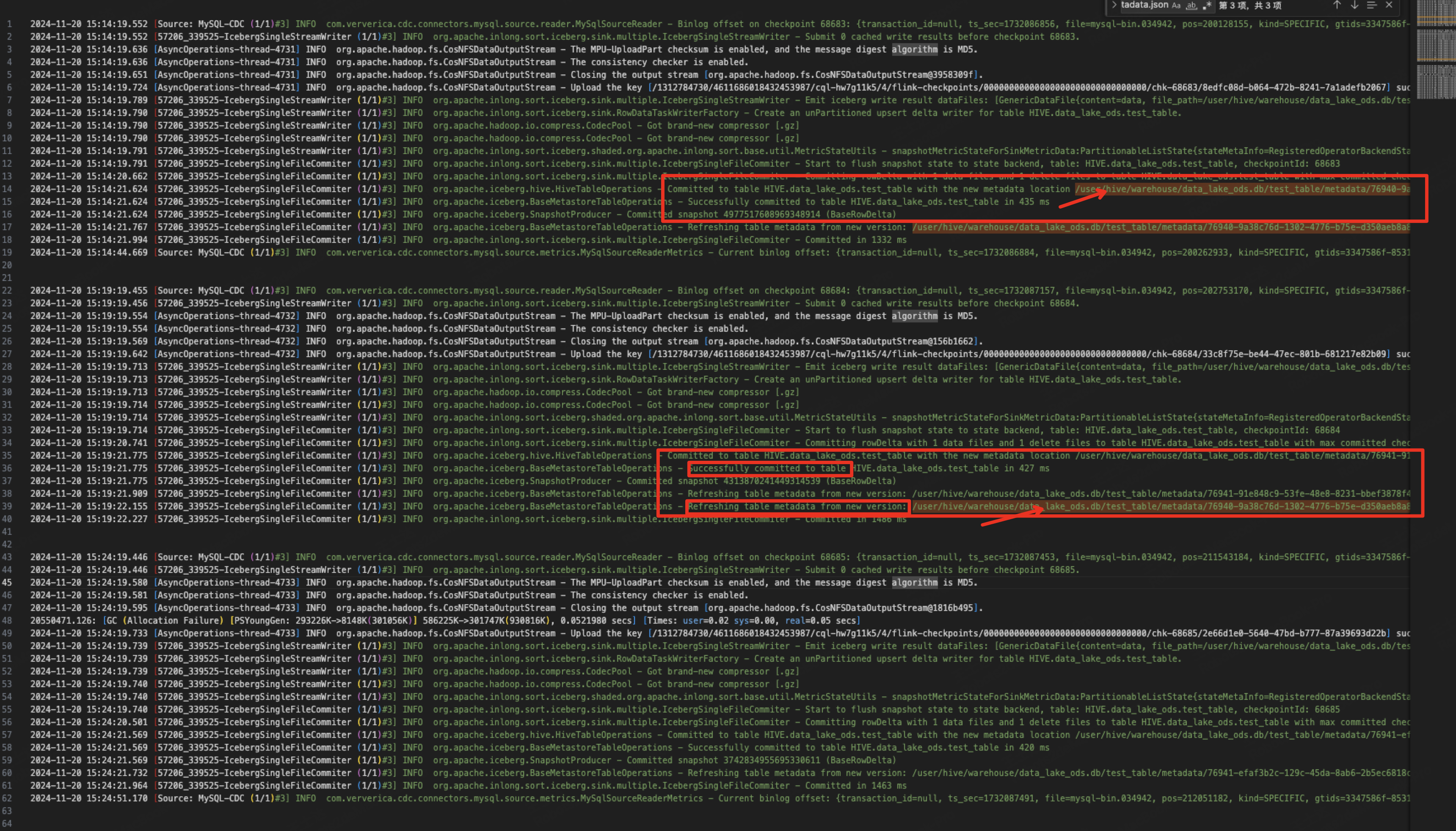

2、Flink日志

根据丢失时间查看Flink日志,查找丢失原因。因为是15:14到15:19丢失,重点查看19之后的这个snapshot为什么丢失

查看日志:2024-11-20 15:13:00 到 2024-11-20 15:26:00

发现15:14写入的快照(4977517608969348914):

/user/hive/warehouse/data_lake_ods.db/test_table/metadata/76940-9a38c76d-1302-4776-b75e-d350aeb8a8bd.metadata.json

在15:19快照写入完成(4313870241449314539)数据丢失:

/user/hive/warehouse/data_lake_ods.db/test_table/metadata/76941-91e848c9-53fe-48e8-8231-bbef3878f4f6.metadata.json

在15:19快照写入完成之后刷新时展示为旧快照信息(4977517608969348914):

/user/hive/warehouse/data_lake_ods.db/test_table/metadata/76940-9a38c76d-1302-4776-b75e-d350aeb8a8bd.metadata.json

在15:24时根据回退的快照进行更新,导致15:19快照数据丢失,注意此次快照也是76941(3742834955695330611):

/user/hive/warehouse/data_lake_ods.db/test_table/metadata/76941-efaf3b2c-129c-45da-8ab6-2b5ec6818c76.metadata.json

怀疑是版本回退导致,并且在flink代码中没看到回退代码,需要看回退的请求是由谁发起

2024-11-20 15:14:19.552 [Source: MySQL-CDC (1/1)#3] INFO com.ververica.cdc.connectors.mysql.source.reader.MySqlSourceReader - Binlog offset on checkpoint 68683: {transaction_id=null, ts_sec=1732086856, file=mysql-bin.034942, pos=200128155, kind=SPECIFIC, gtids=3347586f-8531-11eb-bd7e-0c42a18d9a58:1-4228948520, row=0, event=0, server_id=33401644}

2024-11-20 15:14:19.552 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.multiple.IcebergSingleStreamWriter - Submit 0 cached write results before checkpoint 68683.

2024-11-20 15:14:19.636 [AsyncOperations-thread-4731] INFO org.apache.hadoop.fs.CosNFSDataOutputStream - The MPU-UploadPart checksum is enabled, and the message digest algorithm is MD5.

2024-11-20 15:14:19.636 [AsyncOperations-thread-4731] INFO org.apache.hadoop.fs.CosNFSDataOutputStream - The consistency checker is enabled.

2024-11-20 15:14:19.651 [AsyncOperations-thread-4731] INFO org.apache.hadoop.fs.CosNFSDataOutputStream - Closing the output stream [org.apache.hadoop.fs.CosNFSDataOutputStream@3958309f].

2024-11-20 15:14:19.724 [AsyncOperations-thread-4731] INFO org.apache.hadoop.fs.CosNFSDataOutputStream - Upload the key [/1312784730/4611686018432453987/cql-hw7g11k5/4/flink-checkpoints/00000000000000000000000000000000/chk-68683/8edfc08d-b064-472b-8241-7a1adefb2067] successfully. check message: File verification succeeded. expected length: 2621817, real length: 2621817, expected CRC64 value: -3758786474250627517, real CRC64 value: -3758786474250627517.

2024-11-20 15:14:19.789 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.multiple.IcebergSingleStreamWriter - Emit iceberg write result dataFiles: [GenericDataFile{content=data, file_path=/user/hive/warehouse/data_lake_ods.db/test_table/data/00000-3-97858102-92d7-410c-ada6-71504c4a7dba-09472.parquet, file_format=PARQUET, spec_id=0, partition=PartitionData{}, record_count=61, file_size_in_bytes=19139, column_sizes=

2024-11-20 15:14:19.790 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.RowDataTaskWriterFactory - Create an unPartitioned upsert delta writer for table HIVE.data_lake_ods.test_table.

2024-11-20 15:14:19.790 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.hadoop.io.compress.CodecPool - Got brand-new compressor [.gz]

2024-11-20 15:14:19.790 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.hadoop.io.compress.CodecPool - Got brand-new compressor [.gz]

2024-11-20 15:14:19.791 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.inlong.sort.iceberg.shaded.org.apache.inlong.sort.base.util.MetricStateUtils - snapshotMetricStateForSinkMetricData:PartitionableListState{stateMetaInfo=RegisteredOperatorBackendStateMetaInfo{name='inlong-metric-states', assignmentMode=UNION, partitionStateSerializer=org.apache.flink.api.java.typeutils.runtime.PojoSerializer@b14a7b34}, internalList=[MetricState{subtaskIndex=0,

2024-11-20 15:14:19.791 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.multiple.IcebergSingleFileCommiter - Start to flush snapshot state to state backend, table: HIVE.data_lake_ods.test_table, checkpointId: 68683

2024-11-20 15:14:20.662 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.multiple.IcebergSingleFileCommiter - Committing rowDelta with 1 data files and 1 delete files to table HIVE.data_lake_ods.test_table with max committed checkpoint id 68683.

2024-11-20 15:14:21.624 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.iceberg.hive.HiveTableOperations - Committed to table HIVE.data_lake_ods.test_table with the new metadata location /user/hive/warehouse/data_lake_ods.db/test_table/metadata/76940-9a38c76d-1302-4776-b75e-d350aeb8a8bd.metadata.json

2024-11-20 15:14:21.624 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.iceberg.BaseMetastoreTableOperations - Successfully committed to table HIVE.data_lake_ods.test_table in 435 ms

2024-11-20 15:14:21.624 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.iceberg.SnapshotProducer - Committed snapshot 4977517608969348914 (BaseRowDelta)

2024-11-20 15:14:21.767 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.iceberg.BaseMetastoreTableOperations - Refreshing table metadata from new version: /user/hive/warehouse/data_lake_ods.db/test_table/metadata/76940-9a38c76d-1302-4776-b75e-d350aeb8a8bd.metadata.json

2024-11-20 15:14:21.994 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.multiple.IcebergSingleFileCommiter - Committed in 1332 ms

2024-11-20 15:14:44.669 [Source: MySQL-CDC (1/1)#3] INFO com.ververica.cdc.connectors.mysql.source.metrics.MySqlSourceReaderMetrics - Current binlog offset: {transaction_id=null, ts_sec=1732086884, file=mysql-bin.034942, pos=200262933, kind=SPECIFIC, gtids=3347586f-8531-11eb-bd7e-0c42a18d9a58:1-4228948661, row=1, event=2, server_id=33401644}

2024-11-20 15:19:19.455 [Source: MySQL-CDC (1/1)#3] INFO com.ververica.cdc.connectors.mysql.source.reader.MySqlSourceReader - Binlog offset on checkpoint 68684: {transaction_id=null, ts_sec=1732087157, file=mysql-bin.034942, pos=202753170, kind=SPECIFIC, gtids=3347586f-8531-11eb-bd7e-0c42a18d9a58:1-4228952439, row=0, event=0, server_id=33401644}

2024-11-20 15:19:19.456 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.multiple.IcebergSingleStreamWriter - Submit 0 cached write results before checkpoint 68684.

2024-11-20 15:19:19.554 [AsyncOperations-thread-4732] INFO org.apache.hadoop.fs.CosNFSDataOutputStream - The MPU-UploadPart checksum is enabled, and the message digest algorithm is MD5.

2024-11-20 15:19:19.554 [AsyncOperations-thread-4732] INFO org.apache.hadoop.fs.CosNFSDataOutputStream - The consistency checker is enabled.

2024-11-20 15:19:19.569 [AsyncOperations-thread-4732] INFO org.apache.hadoop.fs.CosNFSDataOutputStream - Closing the output stream [org.apache.hadoop.fs.CosNFSDataOutputStream@156b1662].

2024-11-20 15:19:19.642 [AsyncOperations-thread-4732] INFO org.apache.hadoop.fs.CosNFSDataOutputStream - Upload the key [/1312784730/4611686018432453987/cql-hw7g11k5/4/flink-checkpoints/00000000000000000000000000000000/chk-68684/33c8f75e-be44-47ec-801b-681217e82b09] successfully. check message: File verification succeeded. expected length: 2621817, real length: 2621817, expected CRC64 value: 3353706539747368092, real CRC64 value: 3353706539747368092.

2024-11-20 15:19:19.713 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.multiple.IcebergSingleStreamWriter - Emit iceberg write result dataFiles: [GenericDataFile{content=data, file_path=/user/hive/warehouse/data_lake_ods.db/test_table/data/00000-3-97858102-92d7-410c-ada6-71504c4a7dba-09474.parquet, file_format=PARQUET, spec_id=0, partition=PartitionData{}, record_count=77, file_size_in_bytes=20433, column_sizes=

2024-11-20 15:19:19.713 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.RowDataTaskWriterFactory - Create an unPartitioned upsert delta writer for table HIVE.data_lake_ods.test_table.

2024-11-20 15:19:19.713 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.hadoop.io.compress.CodecPool - Got brand-new compressor [.gz]

2024-11-20 15:19:19.714 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.hadoop.io.compress.CodecPool - Got brand-new compressor [.gz]

2024-11-20 15:19:19.714 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.inlong.sort.iceberg.shaded.org.apache.inlong.sort.base.util.MetricStateUtils - snapshotMetricStateForSinkMetricData:PartitionableListState{stateMetaInfo=RegisteredOperatorBackendStateMetaInfo{name='inlong-metric-states', assignmentMode=UNION, partitionStateSerializer=org.apache.flink.api.java.typeutils.runtime.PojoSerializer@b14a7b34}, internalList=[MetricSta

2024-11-20 15:19:19.714 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.multiple.IcebergSingleFileCommiter - Start to flush snapshot state to state backend, table: HIVE.data_lake_ods.test_table, checkpointId: 68684

2024-11-20 15:19:20.741 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.multiple.IcebergSingleFileCommiter - Committing rowDelta with 1 data files and 1 delete files to table HIVE.data_lake_ods.test_table with max committed checkpoint id 68684.

2024-11-20 15:19:21.775 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.iceberg.hive.HiveTableOperations - Committed to table HIVE.data_lake_ods.test_table with the new metadata location /user/hive/warehouse/data_lake_ods.db/test_table/metadata/76941-91e848c9-53fe-48e8-8231-bbef3878f4f6.metadata.json

2024-11-20 15:19:21.775 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.iceberg.BaseMetastoreTableOperations - Successfully committed to table HIVE.data_lake_ods.test_table in 427 ms

2024-11-20 15:19:21.775 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.iceberg.SnapshotProducer - Committed snapshot 4313870241449314539 (BaseRowDelta)

2024-11-20 15:19:21.909 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.iceberg.BaseMetastoreTableOperations - Refreshing table metadata from new version: /user/hive/warehouse/data_lake_ods.db/test_table/metadata/76941-91e848c9-53fe-48e8-8231-bbef3878f4f6.metadata.json

2024-11-20 15:19:22.155 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.iceberg.BaseMetastoreTableOperations - Refreshing table metadata from new version: /user/hive/warehouse/data_lake_ods.db/test_table/metadata/76940-9a38c76d-1302-4776-b75e-d350aeb8a8bd.metadata.json

2024-11-20 15:19:22.227 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.multiple.IcebergSingleFileCommiter - Committed in 1486 ms

2024-11-20 15:24:19.446 [Source: MySQL-CDC (1/1)#3] INFO com.ververica.cdc.connectors.mysql.source.reader.MySqlSourceReader - Binlog offset on checkpoint 68685: {transaction_id=null, ts_sec=1732087453, file=mysql-bin.034942, pos=211543184, kind=SPECIFIC, gtids=3347586f-8531-11eb-bd7e-0c42a18d9a58:1-4228954841, row=1, event=2, server_id=33401644}

2024-11-20 15:24:19.446 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.multiple.IcebergSingleStreamWriter - Submit 0 cached write results before checkpoint 68685.

2024-11-20 15:24:19.580 [AsyncOperations-thread-4733] INFO org.apache.hadoop.fs.CosNFSDataOutputStream - The MPU-UploadPart checksum is enabled, and the message digest algorithm is MD5.

2024-11-20 15:24:19.581 [AsyncOperations-thread-4733] INFO org.apache.hadoop.fs.CosNFSDataOutputStream - The consistency checker is enabled.

2024-11-20 15:24:19.595 [AsyncOperations-thread-4733] INFO org.apache.hadoop.fs.CosNFSDataOutputStream - Closing the output stream [org.apache.hadoop.fs.CosNFSDataOutputStream@1816b495].

20550471.126: [GC (Allocation Failure) [PSYoungGen: 293226K->8148K(301056K)] 586225K->301747K(930816K), 0.0521980 secs] [Times: user=0.02 sys=0.00, real=0.05 secs]

2024-11-20 15:24:19.733 [AsyncOperations-thread-4733] INFO org.apache.hadoop.fs.CosNFSDataOutputStream - Upload the key [/1312784730/4611686018432453987/cql-hw7g11k5/4/flink-checkpoints/00000000000000000000000000000000/chk-68685/2e66d1e0-5640-47bd-b777-87a39693d22b] successfully. check message: File verification succeeded. expected length: 2621817, real length: 2621817, expected CRC64 value: -5527772684804224707, real CRC64 value: -5527772684804224707.

2024-11-20 15:24:19.739 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.multiple.IcebergSingleStreamWriter - Emit iceberg write result dataFiles: [GenericDataFile{content=data, file_path=/user/hive/warehouse/data_lake_ods.db/test_table/data/00000-3-97858102-92d7-410c-ada6-71504c4a7dba-09476.parquet, file_format=PARQUET, spec_id=0, partition=PartitionData{}, record_count=88, file_size_in_bytes=20704, column_si

2024-11-20 15:24:19.739 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.RowDataTaskWriterFactory - Create an unPartitioned upsert delta writer for table HIVE.data_lake_ods.test_table.

2024-11-20 15:24:19.739 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.hadoop.io.compress.CodecPool - Got brand-new compressor [.gz]

2024-11-20 15:24:19.740 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.hadoop.io.compress.CodecPool - Got brand-new compressor [.gz]

2024-11-20 15:24:19.740 [57206_339525-IcebergSingleStreamWriter (1/1)#3] INFO org.apache.inlong.sort.iceberg.shaded.org.apache.inlong.sort.base.util.MetricStateUtils - snapshotMetricStateForSinkMetricData:PartitionableListState{stateMetaInfo=RegisteredOperatorBackendStateMetaInfo{name='inlong-metric-states', assignmentMode=UNION, partitionStateSerializer=org.apache.flink.api.java.typeutils.runtime.PojoSerializer@b14a7b34}, internalList=[Met

2024-11-20 15:24:19.740 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.multiple.IcebergSingleFileCommiter - Start to flush snapshot state to state backend, table: HIVE.data_lake_ods.test_table, checkpointId: 68685

2024-11-20 15:24:20.501 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.multiple.IcebergSingleFileCommiter - Committing rowDelta with 1 data files and 1 delete files to table HIVE.data_lake_ods.test_table with max committed checkpoint id 68685.

2024-11-20 15:24:21.569 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.iceberg.hive.HiveTableOperations - Committed to table HIVE.data_lake_ods.test_table with the new metadata location /user/hive/warehouse/data_lake_ods.db/test_table/metadata/76941-efaf3b2c-129c-45da-8ab6-2b5ec6818c76.metadata.json

2024-11-20 15:24:21.569 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.iceberg.BaseMetastoreTableOperations - Successfully committed to table HIVE.data_lake_ods.test_table in 420 ms

2024-11-20 15:24:21.569 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.iceberg.SnapshotProducer - Committed snapshot 3742834955695330611 (BaseRowDelta)

2024-11-20 15:24:21.732 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.iceberg.BaseMetastoreTableOperations - Refreshing table metadata from new version: /user/hive/warehouse/data_lake_ods.db/test_table/metadata/76941-efaf3b2c-129c-45da-8ab6-2b5ec6818c76.metadata.json

2024-11-20 15:24:21.964 [57206_339525-IcebergSingleFileCommiter (1/1)#3] INFO org.apache.inlong.sort.iceberg.sink.multiple.IcebergSingleFileCommiter - Committed in 1463 ms

2024-11-20 15:24:51.170 [Source: MySQL-CDC (1/1)#3] INFO com.ververica.cdc.connectors.mysql.source.metrics.MySqlSourceReaderMetrics - Current binlog offset: {transaction_id=null, ts_sec=1732087491, file=mysql-bin.034942, pos=212051182, kind=SPECIFIC, gtids=3347586f-8531-11eb-bd7e-0c42a18d9a58:1-4228955402, row=1, event=2, server_id=33401644}3、在hivemetastore的mysql审计日志查询请求来源

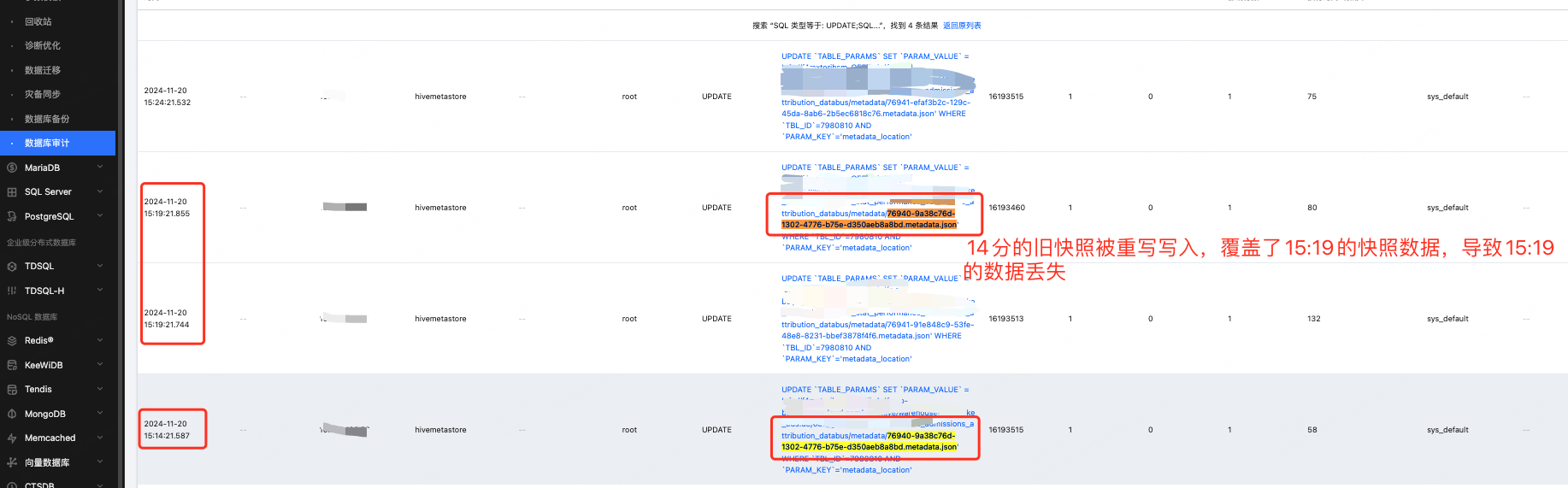

时间:2024-11-20 15:13:00 到 2024-11-20 15:26:00

15:14快照:76940-9a38c76d-1302-4776-b75e-d350aeb8a8bd.metadata.json

15:19快照:76941-91e848c9-53fe-48e8-8231-bbef3878f4f6.metadata.json

15:24快照:76941-efaf3b2c-129c-45da-8ab6-2b5ec6818c76.metadata.json

查看15:19分两次写入的来源ip,其中2024-11-20 15:19:21.744为flink程序写入,2024-11-20 15:19:21.855写入导致丢失,所以需要查询来源来定位问题

4、根据2024-11-20 15:19:21.855执行旧快照的sql,查看来源是hivemetastore地址,请求是经过hivemetastore转发而来。

所以需要登录hivemetastore服务器,根据日志来确定具体来源

由于我们集群日志记录为ERROR级别无法查看,修改EMR的hive-metastore-log4j2.properties配置文件,改成INFO级别来记录更详细信息

rootLogger.level=INFO

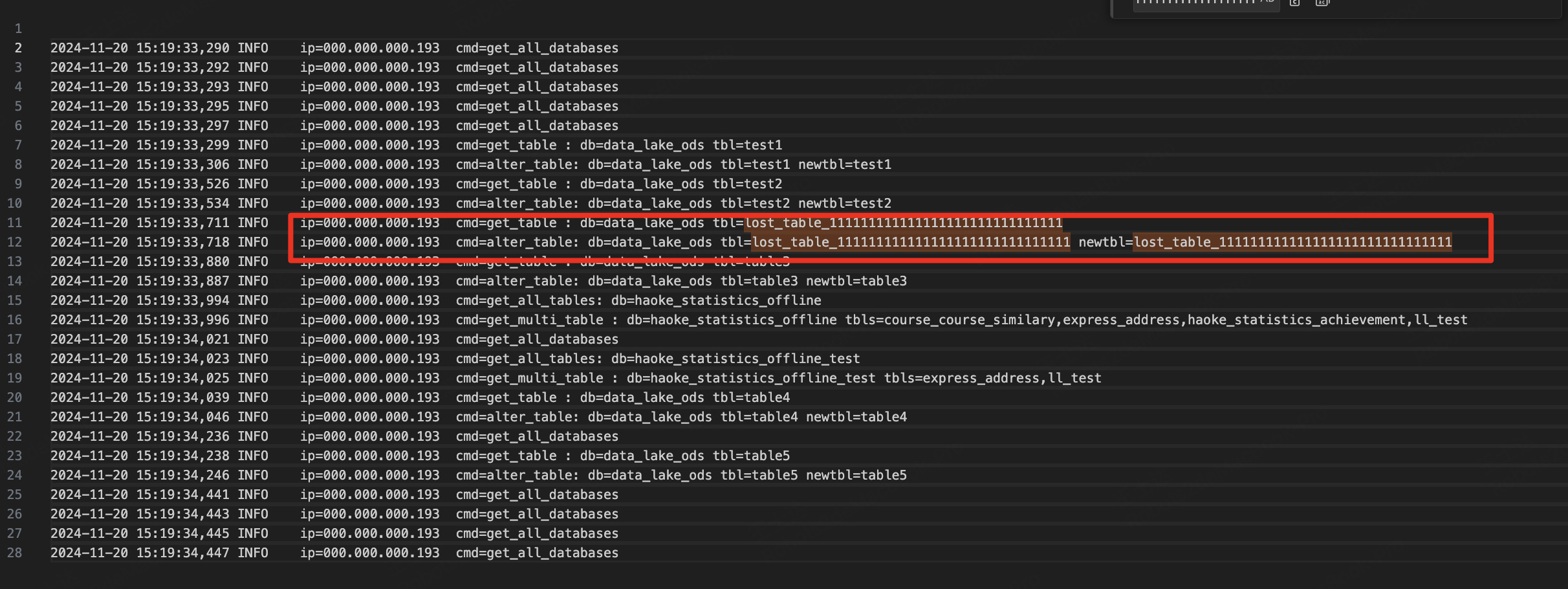

5、查看hivemetastore请求来源ip

15:14重复更新的快照文件:76940-9a38c76d-1302-4776-b75e-d350aeb8a8bd.metadata.json

cd /data/emr/hive/logs

cp 078b6846-d931-4bf5-a4dd-6328c9b97665-hadoop_hive_metastore_audit-2024-11-20.tar.gz ~/templog

tar -zxf 078b6846-d931-4bf5-a4dd-6328c9b97665-hadoop_hive_metastore_audit-2024-11-20.tar.gz

2024-11-20 15:19:33,290 INFO ip=000.000.000.193 cmd=get_all_databases

2024-11-20 15:19:33,292 INFO ip=000.000.000.193 cmd=get_all_databases

2024-11-20 15:19:33,293 INFO ip=000.000.000.193 cmd=get_all_databases

2024-11-20 15:19:33,295 INFO ip=000.000.000.193 cmd=get_all_databases

2024-11-20 15:19:33,297 INFO ip=000.000.000.193 cmd=get_all_databases

2024-11-20 15:19:33,299 INFO ip=000.000.000.193 cmd=get_table : db=data_lake_ods tbl=test1

2024-11-20 15:19:33,306 INFO ip=000.000.000.193 cmd=alter_table: db=data_lake_ods tbl=test1 newtbl=test1

2024-11-20 15:19:33,526 INFO ip=000.000.000.193 cmd=get_table : db=data_lake_ods tbl=test2

2024-11-20 15:19:33,534 INFO ip=000.000.000.193 cmd=alter_table: db=data_lake_ods tbl=test2 newtbl=test2

2024-11-20 15:19:33,711 INFO ip=000.000.000.193 cmd=get_table : db=data_lake_ods tbl=lost_table_111111111111111111111111111111

2024-11-20 15:19:33,718 INFO ip=000.000.000.193 cmd=alter_table: db=data_lake_ods tbl=lost_table_111111111111111111111111111111 newtbl=lost_table_111111111111111111111111111111

2024-11-20 15:19:33,880 INFO ip=000.000.000.193 cmd=get_table : db=data_lake_ods tbl=table3

2024-11-20 15:19:33,887 INFO ip=000.000.000.193 cmd=alter_table: db=data_lake_ods tbl=table3 newtbl=table3

2024-11-20 15:19:33,994 INFO ip=000.000.000.193 cmd=get_all_tables: db=haoke_statistics_offline

2024-11-20 15:19:33,996 INFO ip=000.000.000.193 cmd=get_multi_table : db=haoke_statistics_offline tbls=course_course_similary,express_address,haoke_statistics_achievement,ll_test

2024-11-20 15:19:34,021 INFO ip=000.000.000.193 cmd=get_all_databases

2024-11-20 15:19:34,023 INFO ip=000.000.000.193 cmd=get_all_tables: db=haoke_statistics_offline_test

2024-11-20 15:19:34,025 INFO ip=000.000.000.193 cmd=get_multi_table : db=haoke_statistics_offline_test tbls=express_address,ll_test

2024-11-20 15:19:34,039 INFO ip=000.000.000.193 cmd=get_table : db=data_lake_ods tbl=table4

2024-11-20 15:19:34,046 INFO ip=000.000.000.193 cmd=alter_table: db=data_lake_ods tbl=table4 newtbl=table4

2024-11-20 15:19:34,236 INFO ip=000.000.000.193 cmd=get_all_databases

2024-11-20 15:19:34,238 INFO ip=000.000.000.193 cmd=get_table : db=data_lake_ods tbl=table5

2024-11-20 15:19:34,246 INFO ip=000.000.000.193 cmd=alter_table: db=data_lake_ods tbl=table5 newtbl=table5

2024-11-20 15:19:34,441 INFO ip=000.000.000.193 cmd=get_all_databases

2024-11-20 15:19:34,443 INFO ip=000.000.000.193 cmd=get_all_databases

2024-11-20 15:19:34,445 INFO ip=000.000.000.193 cmd=get_all_databases

2024-11-20 15:19:34,447 INFO ip=000.000.000.193 cmd=get_all_databases 6、来源ip部署了Amoro治理服务

问题:Amoro查看代码发现并没有旧快照覆盖新快照的逻辑,并且当时这张表没有治理任务,通过日志看,当时只有查询这张表的操作。

怀疑:是select操作会不会导致元数据更新,想到可能是有hive的钩子函数会更新表的访问时间,需要确认是不是钩子函数的逻辑引起

7、hive钩子函数确认

在用户发起select时,hivemetastore包中UpdateTableAccessTimeListener钩子方法查询两次表信息,如果发现信息不一致则会更新表的hive元数据信息。

问题:其中更新元数据时有缺陷,正常应该只更新访问时间字段,其它属性不应该更新,而钩子函数对不一样的属性都做了更新。

钩子逻辑:其中进入钩子时查询一次元数据放入缓存,更新访问时间之前查询一次表元数据信息,如果在第一次和第二次查询元数据之间,由flink程序更新了表最新快照id,则会导致两次查询的元数据属性不一致,会用缓存中的旧快照覆盖新快照,导致flink写入的新快照数据丢失。

private void updateAccessTime(PreReadTableEvent event) {

try {

int lastAccessTime = (int)(System.currentTimeMillis() / 1000L);

Table table = event.getTable();

table.setLastAccessTime(lastAccessTime);

Map<String, String> parameters = table.getParameters();

String tableType = parameters.get("table_type");

if (tableType!=null && tableType.equalsIgnoreCase("ICEBERG")) {

LOG.info("skip updating table access time, db:" + table.getDbName() + ",table:" + table.getTableName() + ",access_time:" + lastAccessTime+",tableType:"+tableType);

}else {

event.getHandler().alter_table(table.getDbName(), table.getTableName(), table);

}

} catch (MetaException | InvalidOperationException ex) {

LOG.warn("Failed update table access time:" + ex);

}

}8、分析工具

介绍:可以分析运行程序某个方法中传参,来分析方法中接收到的请求内容

https://arthas.aliyun.com/doc/watch.html#%E5%90%8C%E6%97%B6%E8%A7%82%E5%AF%9F%E5%87%BD%E6%95%B0%E8%B0%83%E7%94%A8%E5%89%8D%E5%92%8C%E5%87%BD%E6%95%B0%E8%BF%94%E5%9B%9E%E5%90%8E

FLink同步mysql到Iceberg丢数问题排查解决的更多相关文章

- 使用logstash同步MySQL数据到ES

使用logstash同步MySQL数据到ES 版权声明:[分享也是一种提高]个人转载请在正文开头明显位置注明出处,未经作者同意禁止企业/组织转载,禁止私自更改原文,禁止用于商业目的. https:// ...

- MySQL服务器的线程数查看方法

mysql重启命令:/etc/init.d/mysql restart MySQL服务器的线程数需要在一个合理的范围之内,这样才能保证MySQL服务器健康平稳地运行.Threads_created表示 ...

- solr6.4.1搜索引擎同步mysql数据库

尚未成功启动solr的,请参考我的另一篇文章:http://www.cnblogs.com/zhuwenjoyce/p/6506359.html(solr6.4.1 搜索引擎启动eclipse启动) ...

- 使用Logstash来实时同步MySQL数据到ES

上篇讲到了ES和Head插件的环境搭建和配置,也简单模拟了数据作测试 本篇我们来实战从MYSQL里直接同步数据 一.首先下载和你的ES对应的logstash版本,本篇我们使用的都是6.1.1 下载后使 ...

- logstash同步mysql数据失败

问题描述 前提: 项目采用Elasticsearch提供搜索服务,Mysql提供存储服务,通过Logstash将Mysql中数据同步到Elasticsearch. 问题: 使用logstash-j ...

- solr6.4.1搜索引擎(3)增量同步mysql数据库

尚未实现首次同步mysql数据库的,请参考我的另一篇文章http://www.cnblogs.com/zhuwenjoyce/p/6512378.html(solr6.4.1搜索引擎同步mysql数据 ...

- solr6.4.1搜索引擎(2)首次同步mysql数据库

尚未成功启动solr的,请参考我的另一篇文章:http://www.cnblogs.com/zhuwenjoyce/p/6506359.html(solr6.4.1 搜索引擎启动eclipse启动) ...

- Logstash使用jdbc_input同步Mysql数据时遇到的空时间SQLException问题

今天在使用Logstash的jdbc_input插件同步Mysql数据时,本来应该能搜索出10条数据,结果在Elasticsearch中只看到了4条,终端中只给出了如下信息 [2017-08-25T1 ...

- ElasticSearch 安装 go-mysql-elasticsearch 同步mysql的数据

一.首先在Centos6.5上安装 go 语言环境 下载Golang语言包:https://studygolang.com/dl [hoojjack@localhost src]$ ls apache ...

- Logstash同步mysql数据库信息到ES

@font-face{ font-family:"Times New Roman"; } @font-face{ font-family:"宋体"; } @fo ...

随机推荐

- PHP模块之ssh2

php远程copy文件以及在远程服务器中执行命令时,所用到的模块是ssh2,以后所有的操作都依据ssh2连接句柄完成. libssh: https://www.libssh2.org/ ssh2: h ...

- nginx配置之Gzip压缩

Nginx开启Gzip压缩功能, 可以使网站的css.js .xml.html 文件在传输时进行压缩,提高访问速度, 进而优化Nginx性能! Web网站上的图片,视频等其它多媒体文件以及大文件,因 ...

- Codeforces Round 878 (Div3)

B. Binary Cafe \(1 \leq n,k \leq 10^9\) 题解:思维 考虑两种情况 第一种:钱足够多,每种咖啡都可以买的情况下,答案为\(2^k\) 第二种:钱不够多,因为任一面 ...

- elastic 7.15 集群搭建

准备三台ES 7.15 关于系统配可以参考之前的文章. https://www.cnblogs.com/yg_zhang/p/10214196.html 这里写一下 的集群配置.这里和之前配置有所不同 ...

- idea springboot 微服务批量启动

概要 在使用IDEA开发微服务的时候,微服务比较多,启动起来比较麻烦,下面介绍一下使用批量启动微服务的方法. 方法 编辑当前项目根目录下的 .idea\workspace.xml 文件. 找到 < ...

- 使用自定义 JsonConverter 解决 long 类型在前端的精度问题

问题 Javascript 的 number 类型存在精度限制,浏览器反序列化 JSON 时,无法完整保留 long 类型的精度. 在 JSON 序列化时将 long 转换为 string 进行传递就 ...

- Java8提供的Stream方式进行分组GroupingBy

有时我们需要对集合进行分组操作,这时可以使用Java8提供的Stream方式进行分组.挺好用的,此处记录下.直接贴code: Road实体: @Data @NoArgsConstructor @A ...

- Qt 在线程中invokeMethod采用QueuedConnection模式,调用带指针参数槽,实际不会调用

widgetObject有操函数Test: void Test(int *v); 在线程中调用Test,会被忽略,实际不会调用. QMetaObject::invokeMethod(widgetObj ...

- Flutter之GetX之GetBuilder

Flutter之GetX之GetBuilder GetX是Flutter的一个非常强力的三方库,包含了非常多的功能,比如状态管理.路由管理.国际化.路由中间件.主题.数据库等等 今天简单介绍一下状态管 ...

- 【Web前端】【开源分享】H5登陆界面 - 2021年12月24日

点我下载