Importing a File into Kudu (via Impala)

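Kudu is an open-source columnar storage system from Cloudera that runs on the Hadoop platform. It shares the usual technical traits of Hadoop ecosystem applications: it runs on commodity hardware, scales horizontally, and is highly available. Integrated with Impala, it supports standard SQL statements, which makes it easier to use than HBase.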

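Impala is a query system whose development is led by Cloudera. It provides SQL semantics and can query petabyte-scale data stored in Hadoop's HDFS and HBase. Hive also provides SQL semantics, but because Hive executes on the MapReduce engine it remains a batch process and struggles to deliver interactive queries. Impala's defining feature, and its main selling point, is speed: in my tests, imports reached more than 300,000 rows per second.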

Import flow: prepare the data -> upload to HDFS -> load into a temporary Impala table -> insert into the Kudu table
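The flow can be sketched as one script. This is only a minimal sketch, not the author's script: the `run` helper, the `DRY_RUN` switch, and the `import_to_kudu` function are hypothetical names introduced here, and it assumes that step 1 has already produced the CSV file and that the tables `employee_temp` and `employee_kudu` already exist. With `DRY_RUN=1` the commands are only printed, so the sequence can be reviewed without a cluster.

```shell
#!/usr/bin/env bash
# Sketch of steps 2-4 of the flow. DRY_RUN=1 (the default here) prints each
# command instead of running it.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper: print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Arguments: local CSV file, HDFS directory, impalad host (all supplied by the caller).
import_to_kudu() {
  local csv="$1" hdfs_dir="$2" impalad="$3"
  # Step 2: upload to HDFS and open up permissions so Impala can move the file.
  run hdfs dfs -mkdir -p "$hdfs_dir"
  run hdfs dfs -put "$csv" "$hdfs_dir"
  run hdfs dfs -chmod -R 777 "$hdfs_dir"
  # Step 3: load the file into the temporary Impala table.
  run impala-shell -i "$impalad" -q "load data inpath '$hdfs_dir/$(basename "$csv")' into table employee_temp"
  # Step 4: copy the rows into the Kudu table.
  run impala-shell -i "$impalad" -q "insert into employee_kudu select * from employee_temp"
}
```

For example, `import_to_kudu temp.csv /input/data/pujh hadoop02` prints the five commands; prefixing the call with `DRY_RUN=0` would execute them.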

1. Prepare the data

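app@hadoop01:/software/develop/pujh> cat genBiData.sh
#!/bin/bash
# Generate pipe-delimited test rows: i|aa<i>|aa<i><i>|aa<i><i><i>
date
# Note: echo '' writes one empty line, which later loads as a NULL row.
echo '' > data.txt
chmod 777 data.txt   # mode assumed from the later chmod 777
# Start value assumed to be 1 (lost in the original; the first loaded row is aa1).
for ((i=1; i<=20593279; i++))
do
    echo "$i|aa$i|aa$i$i|aa$i$i$i" >> data.txt
done
date

app@hadoop01:/software/develop/pujh> sed 's/|/,/g' data.txt > temp.csv

app@hadoop01:/software/develop/pujh> chmod 777 temp.csv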
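Appending one line per iteration from a bash loop is slow for some 20 million rows. As a possible alternative, the same rows can be streamed through `seq` and `awk`, writing commas directly so the `sed` pass is not needed. This is a sketch; `gen_rows` is a hypothetical helper name, not part of the original script.

```shell
#!/usr/bin/env bash
# Emit CSV rows "i,aa<i>,aa<i><i>,aa<i><i><i>" for every i in [start, end].
# Unlike the original script, no leading empty line is written.
gen_rows() {
  local start="$1" end="$2"
  seq "$start" "$end" | awk '{ print $1 ",aa" $1 ",aa" $1 $1 ",aa" $1 $1 $1 }'
}
```

For example, `gen_rows 1 20593279 > temp.csv` would produce the file used in the following steps.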

2. Upload to HDFS

su - root

su - hdfs

hadoop dfs -mkdir /input/data/pujh

hadoop dfs -chmod -R 777 /input/data/pujh

hadoop dfs -put /software/develop/pujh /input/data/pujh

hadoop dfs -ls /input/data/pujh

hdfs@hadoop01:>./hadoop dfs -ls /input/data/pujh

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

Found 5 items

-rwxrwxrwx hdfs supergroup -- : /input/data/pujh/aa.txt

-rwxrwxrwx hdfs supergroup -- : /input/data/pujh/data.txt

-rwxrwxrwx hdfs supergroup -- : /input/data/pujh/data2kw.csv

-rwxrwxrwx hdfs supergroup -- : /input/data/pujh/data_2kw.txt

-rwxrwxrwx hdfs supergroup -- : /input/data/pujh/genBiData.sh

3. Load into a temporary Impala table

Create the temporary Impala table employee_temp:

create table employee_temp ( eid int, name String,salary String, destination String) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

hdfs@hadoop02> ./impala-shell

Starting Impala Shell without Kerberos authentication

Connected to hadoop02:

Server version: impala version 2.8.0-cdh5.11.2 RELEASE (build f89269c4b96da14a841e94bdf6d4d48821b0d658)

***********************************************************************************

Welcome to the Impala shell.

(Impala Shell v2.8.0-cdh5.11.2 (f89269c) built on Fri Aug :: PDT )

The HISTORY command lists all shell commands in chronological order.

***********************************************************************************

[hadoop02:] > show databases;

Query: show databases

+------------------+----------------------------------------------+

| name | comment |

+------------------+----------------------------------------------+

| _impala_builtins | System database for Impala builtin functions |

| default | Default Hive database |

| td_test | |

+------------------+----------------------------------------------+

Fetched row(s) in .01s

[hadoop02:] > show tables;

Query: show tables

+----------------+

| name |

+----------------+

| employee |

| my_first_table |

+----------------+

Fetched row(s) in .00s

[hadoop02:] > create table employee_temp ( eid int, name String,salary String, destination String) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

Query: create table employee_temp ( eid int, name String,salary String, destination String) ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

Fetched row(s) in .32s

[hadoop02:] > show tables;

Query: show tables

+----------------+

| name |

+----------------+

| employee |

| employee_temp |

| my_first_table |

+----------------+

Fetched row(s) in .01s

Load the file from HDFS into the temporary Impala table:

load data inpath '/input/data/pujh/temp.csv' into table employee_temp;

[hadoop02:] > load data inpath '/input/data/pujh/temp.csv' into table employee_temp;

Query: load data inpath '/input/data/pujh/temp.csv' into table employee_temp

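ERROR: AnalysisException: Unable to LOAD DATA from hdfs://hadoop01:8020/input/data/pujh/temp.csv because Impala does not have WRITE permissions on its parent directory hdfs://hadoop01:8020/input/data/pujh

The first attempt fails because LOAD DATA moves the file into the table directory, so the impala user needs write permission on the source directory. Once that permission is granted (for example with the chmod -R from step 2), the same statement succeeds:

[hadoop02:] > load data inpath '/input/data/pujh/temp.csv' into table employee_temp;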

Query: load data inpath '/input/data/pujh/temp.csv' into table employee_temp

+----------------------------------------------------------+

| summary |

+----------------------------------------------------------+

| Loaded file(s). Total files in destination location: |

+----------------------------------------------------------+

Fetched row(s) in .44s

[hadoop02:] > select * from employee_temp limit 2;

Query: select * from employee_temp limit 2

Query submitted at: -- :: (Coordinator: http://hadoop02:25000)

Query progress can be monitored at: http://hadoop02:25000/query_plan?query_id=4246eaa38a3d8bbb:953ce4d300000000

+------+------+--------+-------------+

| eid | name | salary | destination |

+------+------+--------+-------------+

| NULL | NULL | | |

| 1 | aa1 | aa11 | aa111 |

+------+------+--------+-------------+

Fetched row(s) in .19s

[hadoop02:] > select * from employee_temp limit 10;

Query: select * from employee_temp limit 10

Query submitted at: -- :: (Coordinator: http://hadoop02:25000)

Query progress can be monitored at: http://hadoop02:25000/query_plan?query_id=cb4c3cf5d647c97a:75d2985f00000000

+------+------+--------+-------------+

| eid | name | salary | destination |

+------+------+--------+-------------+

| NULL | NULL | | |

| 1 | aa1 | aa11 | aa111 |

| 2 | aa2 | aa22 | aa222 |

| 3 | aa3 | aa33 | aa333 |

| 4 | aa4 | aa44 | aa444 |

| 5 | aa5 | aa55 | aa555 |

| 6 | aa6 | aa66 | aa666 |

| 7 | aa7 | aa77 | aa777 |

| 8 | aa8 | aa88 | aa888 |

| 9 | aa9 | aa99 | aa999 |

+------+------+--------+-------------+

Fetched row(s) in .02s

[hadoop02:] > select count(*) from employee_temp;

Query: select count(*) from employee_temp

Query submitted at: -- :: (Coordinator: http://hadoop02:25000)

Query progress can be monitored at: http://hadoop02:25000/query_plan?query_id=5a4c1107de118395:bfe96a1600000000

+----------+

| count(*) |

+----------+

| |

+----------+

Fetched row(s) in .65s

4. Insert from the temporary Impala table employee_temp into the Kudu table employee_kudu

Create the Kudu table:

create table employee_kudu ( eid int, name String,salary String, destination String,PRIMARY KEY(eid)) PARTITION BY HASH PARTITIONS 16 STORED AS KUDU;

[hadoop02:] > create table employee_kudu ( eid int, name String,salary String, destination String,PRIMARY KEY(eid)) PARTITION BY HASH PARTITIONS 16 STORED AS KUDU;

Query: create table employee_kudu ( eid int, name String,salary String, destination String,PRIMARY KEY(eid)) PARTITION BY HASH PARTITIONS 16 STORED AS KUDU

Fetched row(s) in .94s

[hadoop02:] > show tables;

Query: show tables

+----------------+

| name |

+----------------+

| employee |

| employee_kudu |

| employee_temp |

| my_first_table |

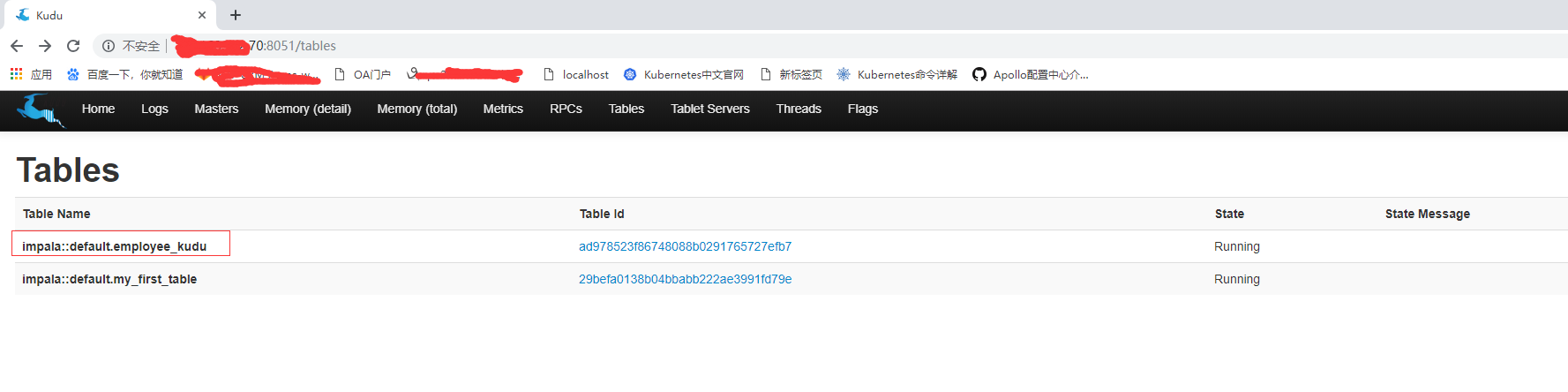

Check in the web UI that the table was created.

Insert from the temporary Impala table employee_temp into the Kudu table employee_kudu:

[hadoop02:] > insert into employee_kudu select * from employee_temp;

Query: insert into employee_kudu select * from employee_temp

Query submitted at: -- :: (Coordinator: http://hadoop02:25000)

Query progress can be monitored at: http://hadoop02:25000/query_plan?query_id=2e47536cc5c82392:ef4d552600000000

WARNINGS: Row with null value violates nullability constraint on table 'impala::default.employee_kudu'.

Modified row(s), row error(s) in .75s

[hadoop02:] > select count(*) from employee_kudu;

Query: select count(*) from employee_kudu

Query submitted at: -- :: (Coordinator: http://hadoop02:25000)

Query progress can be monitored at: http://hadoop02:25000/query_plan?query_id=6d4bad44a980f229:fd7878d00000000

+----------+

| count(*) |

+----------+

| |

+----------+

Fetched row(s) in .18s
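The nullability warning on the insert most likely comes from the empty first line written by `echo '' > data.txt`: it loads into employee_temp as a row whose eid is NULL, and Kudu rejects NULL in a primary key column. One way to avoid it, sketched here under that assumption, is to drop such rows from the CSV before uploading. `drop_bad_rows` and the output file name `temp_clean.csv` are hypothetical.

```shell
#!/usr/bin/env bash
# Keep only rows whose first comma-separated field is a non-empty integer.
# eid is the Kudu primary key, so rows with an empty or non-numeric eid
# would be rejected on insert anyway.
drop_bad_rows() {
  awk -F',' '$1 ~ /^[0-9]+$/'
}
```

For example, `drop_bad_rows < temp.csv > temp_clean.csv` reads the generated file and writes a cleaned copy to upload in its place.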
