Building a HAWQ Data Warehouse on [CentOS 7 + Ambari 2.7.0 + HDP 3.0], Part 02: Installing HDP with ambari-server

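This post records the process of installing HDP with ambari-server. Compared with installing CDH through Cloudera Manager, I have to say Ambari is noticeably less easy to use ~_~: it needs more manual intervention from the user, or, put another way, it is more customizable.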

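First, before installing, run the following command on every host to clear the yum cache. This avoids installation failures caused by stale repo data.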

yum clean all

I. Installation

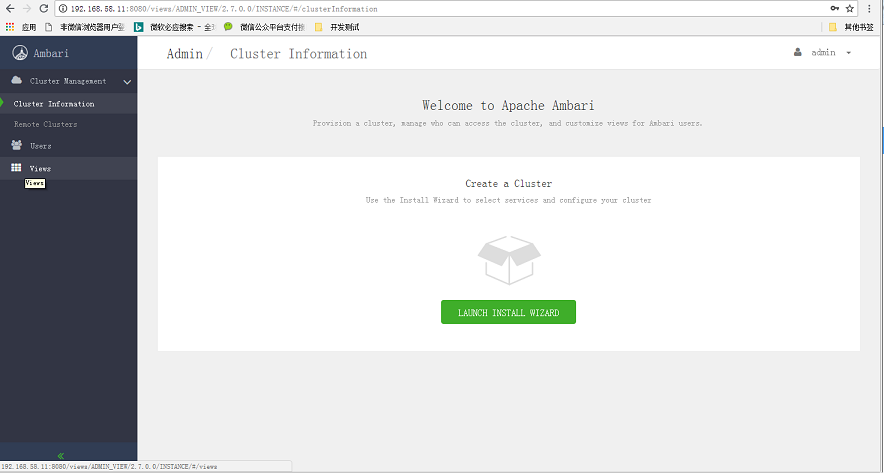

1. Log in to the ambari-server management UI by opening http://ep-bd01:8080 in a browser. The default username and password are both admin.

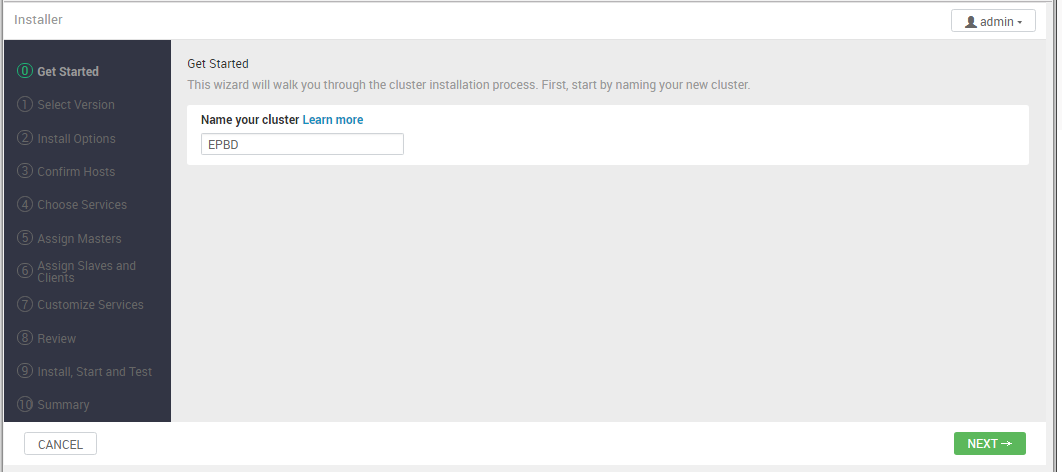

2. Click the "LAUNCH INSTALL WIZARD" button and give the cluster a name (EPBD here), then click Next.

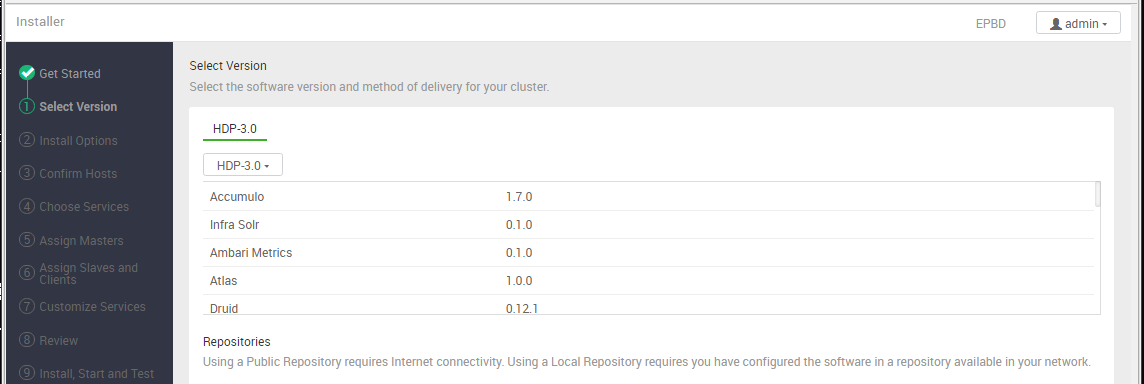

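4. Select HDP version 3.0.0.0 and configure the repository URLs.

At this step Ambari automatically lists the repo IDs of the HDP versions configured in the local repo.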

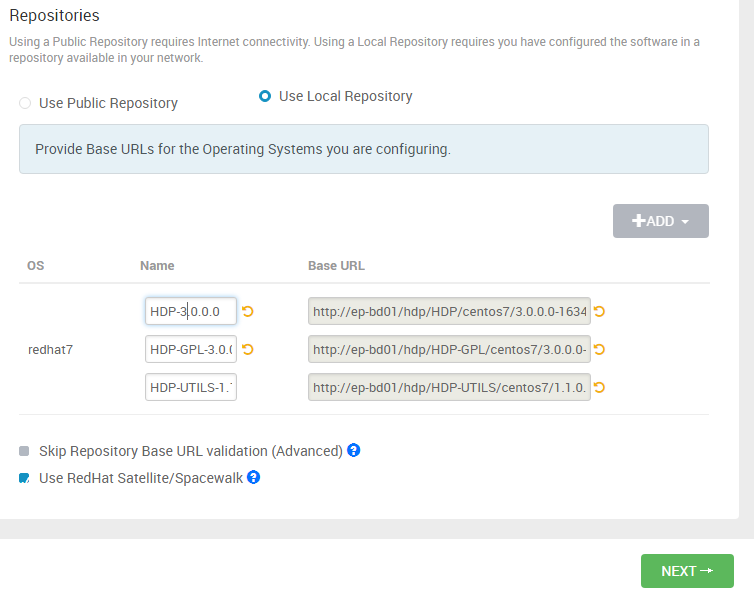

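Next come the repository settings. Choose the local repository option, remove every operating system except "Redhat7", and set the base URLs to the values from the hdp-local.repo file configured earlier:

http://ep-bd01/hdp/HDP/centos7/3.0.0.0-1634

http://ep-bd01/hdp/HDP-GPL/centos7/3.0.0.0-1634

http://ep-bd01/hdp/HDP-UTILS/centos7/1.1.0.22

Then check "Use RedHat Satellite/Spacewalk". The repository names become editable at this point; make sure they match the ones in the hdp.repo file configured earlier, then click Next.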

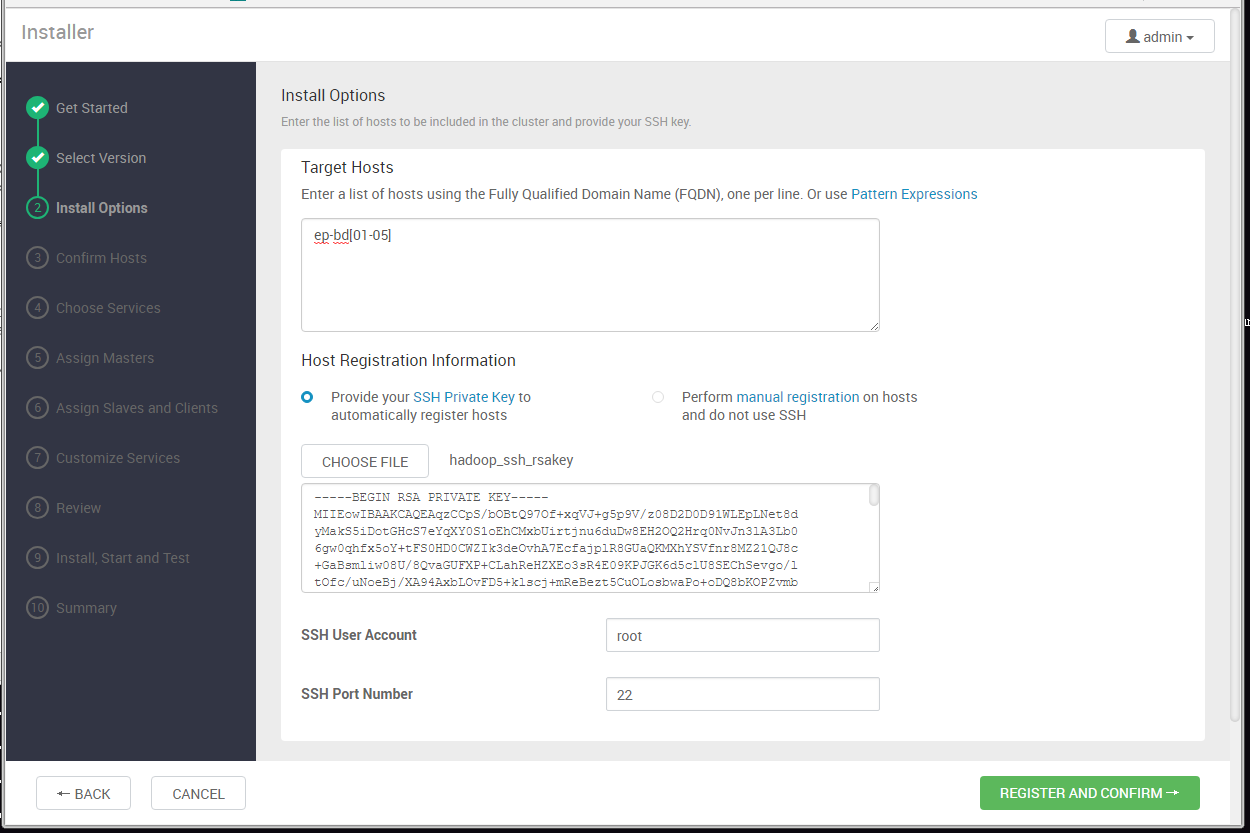

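5. [Target Hosts] Enter the list of hosts in the cluster. Host names can be written with a bracketed numeric range as a suffix; click "Pattern Expressions" for the detailed syntax.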
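To illustrate what such a range pattern stands for, the sketch below expands a prefix and a numeric range into individual host names in plain shell. The `expand_hosts` helper is hypothetical and is not part of Ambari; the zero-padded two-digit form (as in `ep-bd[01-05]`) is an assumption based on the host names used in this series.

```shell
# Hypothetical helper, not an Ambari command.
# Prints one host name per line for PREFIX followed by a zero-padded,
# two-digit number from FIRST to LAST (pass plain integers such as 1 and 5,
# without leading zeros).
expand_hosts() (
  prefix=$1
  i=$2
  last=$3
  while [ "$i" -le "$last" ]; do
    printf '%s%02d\n' "$prefix" "$i"
    i=$((i + 1))
  done
)

# The five hosts of this cluster, one per line.
expand_hosts ep-bd 1 5
```

The output can be pasted into the Target Hosts box as an explicit list if the pattern form is not wanted.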

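Hosts can be registered over SSH, which requires supplying the private key used for passwordless SSH access.

Alternatively, choose "Perform manual registration on hosts and do not use SSH". This option requires ambari-agent to be installed on every host beforehand, which is what I did in the previous post, so this is the option I chose. In my tests it registered the hosts slightly faster than the SSH method.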

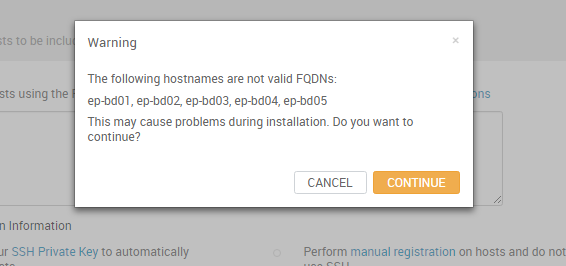

Continue to the next step, "REGISTER AND CONFIRM". Ambari may warn that the host names are not fully qualified domain names (FQDNs); ignore the warning and continue.

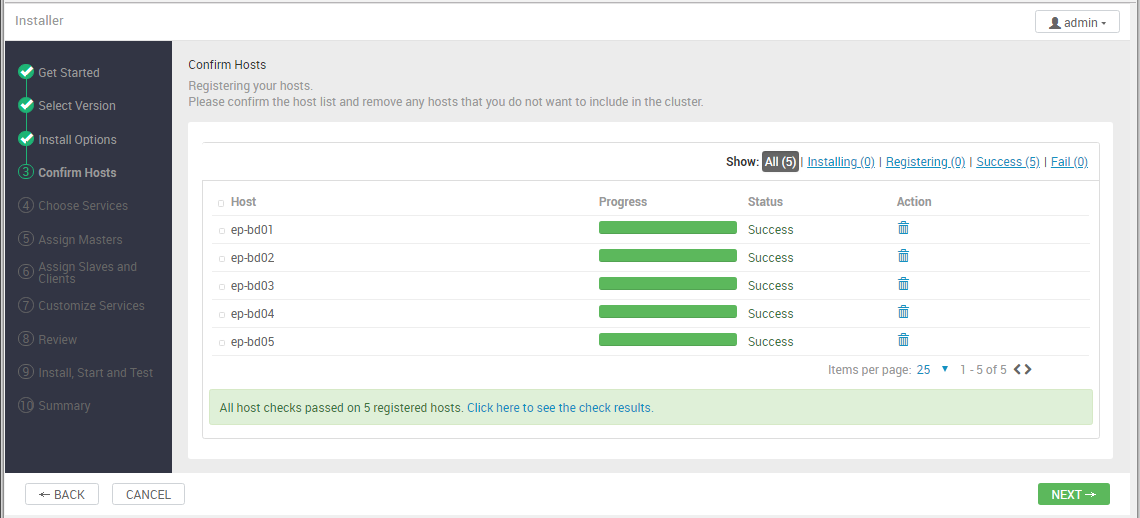

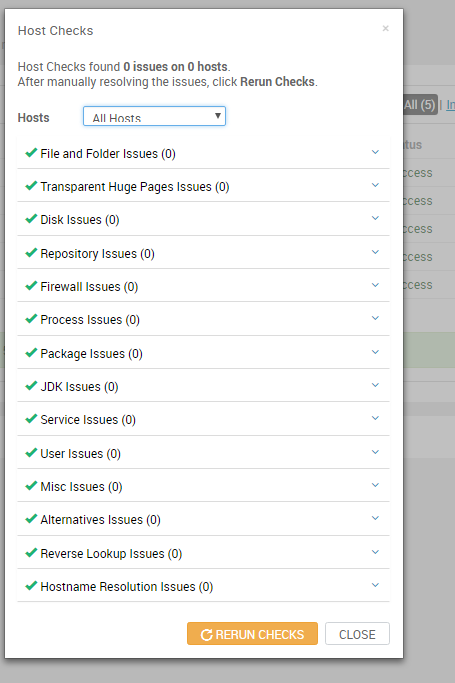

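6. Click Next to start the host checks. Once they succeed, click "Click here to see the check results" to view the results.

7. Choose the file system and services. I accepted the defaults here and clicked Next.

Note: after countless failed attempts I later deselected the Ranger and Ranger KMS services. I never found the cause. Here is the log from one of the failures: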

stderr:

-- ::, - The 'ranger-kms' component did not advertise a version. This may indicate a problem with the component packaging. However, the stack-select tool was able to report a single version installed (3.0.0.0-). This is the version that will be reported.

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/RANGER_KMS/package/scripts/kms_server.py", line , in <module>

KmsServer().execute()

File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line , in execute

method(env)

File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/RANGER_KMS/package/scripts/kms_server.py", line , in install

self.configure(env)

File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/RANGER_KMS/package/scripts/kms_server.py", line , in configure

kms.kms()

File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/RANGER_KMS/package/scripts/kms.py", line , in kms

create_parents = True

File "/usr/lib/ambari-agent/lib/resource_management/core/base.py", line , in __init__

self.env.run()

File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line , in run

self.run_action(resource, action)

File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line , in run_action

provider_action()

File "/usr/lib/ambari-agent/lib/resource_management/core/providers/system.py", line , in action_create

raise Fail("Applying %s failed, looped symbolic links found while resolving %s" % (self.resource, path))

resource_management.core.exceptions.Fail: Applying Directory['/usr/hdp/current/ranger-kms/conf'] failed, looped symbolic links found while resolving /usr/hdp/current/ranger-kms/conf

stdout:

-- ::, - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=None -> 3.0

-- ::, - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

-- ::, - Group['kms'] {}

-- ::, - Group['livy'] {}

-- ::, - Group['spark'] {}

-- ::, - Group['ranger'] {}

-- ::, - Group['hdfs'] {}

-- ::, - Group['zeppelin'] {}

-- ::, - Group['hadoop'] {}

-- ::, - Group['users'] {}

-- ::, - Group['knox'] {}

-- ::, - User['yarn-ats'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

-- ::, - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

-- ::, - User['storm'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

-- ::, - User['infra-solr'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

-- ::, - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

-- ::, - User['oozie'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'users'], 'uid': None}

-- ::, - User['atlas'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

-- ::, - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

-- ::, - User['ranger'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['ranger', 'hadoop'], 'uid': None}

-- ::, - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'users'], 'uid': None}

-- ::, - User['zeppelin'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['zeppelin', 'hadoop'], 'uid': None}

-- ::, - User['kms'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['kms', 'hadoop'], 'uid': None}

-- ::, - User['accumulo'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

-- ::, - User['livy'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['livy', 'hadoop'], 'uid': None}

-- ::, - User['druid'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

-- ::, - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['spark', 'hadoop'], 'uid': None}

-- ::, - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'users'], 'uid': None}

-- ::, - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

-- ::, - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hdfs', 'hadoop'], 'uid': None}

-- ::, - User['sqoop'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

-- ::, - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

-- ::, - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

-- ::, - User['hbase'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

-- ::, - User['knox'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'knox'], 'uid': None}

-- ::, - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': }

-- ::, - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

-- ::, - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] due to not_if

-- ::, - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'create_parents': True, 'mode': , 'cd_access': 'a'}

-- ::, - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': }

-- ::, - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': }

-- ::, - call['/var/lib/ambari-agent/tmp/changeUid.sh hbase'] {}

-- ::, - call returned (, '')

-- ::, - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase 1015'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}

-- ::, - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase 1015'] due to not_if

-- ::, - Group['hdfs'] {}

-- ::, - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': ['hdfs', 'hadoop', u'hdfs']}

-- ::, - FS Type: HDFS

-- ::, - Directory['/etc/hadoop'] {'mode': }

-- ::, - File['/usr/hdp/current/hadoop-client/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}

-- ::, - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': }

-- ::, - Repository['HDP-3.0-repo-1'] {'append_to_file': False, 'base_url': 'http://ep-bd01/hdp/HDP/centos7/3.0.0.0-1634', 'action': ['create'], 'components': [u'HDP', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'ambari-hdp-1', 'mirror_list': None}

-- ::, - File['/etc/yum.repos.d/ambari-hdp-1.repo'] {'content': '[HDP-3.0-repo-1]\nname=HDP-3.0-repo-1\nbaseurl=http://ep-bd01/hdp/HDP/centos7/3.0.0.0-1634\n\npath=/\nenabled=1\ngpgcheck=0'}

-- ::, - Writing File['/etc/yum.repos.d/ambari-hdp-1.repo'] because contents don't match

-- ::, - Repository['HDP-3.0-GPL-repo-1'] {'append_to_file': True, 'base_url': 'http://ep-bd01/hdp/HDP-GPL/centos7/3.0.0.0-1634', 'action': ['create'], 'components': [u'HDP-GPL', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'ambari-hdp-1', 'mirror_list': None}

-- ::, - File['/etc/yum.repos.d/ambari-hdp-1.repo'] {'content': '[HDP-3.0-repo-1]\nname=HDP-3.0-repo-1\nbaseurl=http://ep-bd01/hdp/HDP/centos7/3.0.0.0-1634\n\npath=/\nenabled=1\ngpgcheck=0\n[HDP-3.0-GPL-repo-1]\nname=HDP-3.0-GPL-repo-1\nbaseurl=http://ep-bd01/hdp/HDP-GPL/centos7/3.0.0.0-1634\n\npath=/\nenabled=1\ngpgcheck=0'}

-- ::, - Writing File['/etc/yum.repos.d/ambari-hdp-1.repo'] because contents don't match

-- ::, - Repository['HDP-UTILS-1.1.0.22-repo-1'] {'append_to_file': True, 'base_url': 'http://ep-bd01/hdp/HDP-UTILS/centos7/1.1.0.22', 'action': ['create'], 'components': [u'HDP-UTILS', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'ambari-hdp-1', 'mirror_list': None}

-- ::, - File['/etc/yum.repos.d/ambari-hdp-1.repo'] {'content': '[HDP-3.0-repo-1]\nname=HDP-3.0-repo-1\nbaseurl=http://ep-bd01/hdp/HDP/centos7/3.0.0.0-1634\n\npath=/\nenabled=1\ngpgcheck=0\n[HDP-3.0-GPL-repo-1]\nname=HDP-3.0-GPL-repo-1\nbaseurl=http://ep-bd01/hdp/HDP-GPL/centos7/3.0.0.0-1634\n\npath=/\nenabled=1\ngpgcheck=0\n[HDP-UTILS-1.1.0.22-repo-1]\nname=HDP-UTILS-1.1.0.22-repo-1\nbaseurl=http://ep-bd01/hdp/HDP-UTILS/centos7/1.1.0.22\n\npath=/\nenabled=1\ngpgcheck=0'}

-- ::, - Writing File['/etc/yum.repos.d/ambari-hdp-1.repo'] because contents don't match

-- ::, - Package['unzip'] {'retry_on_repo_unavailability': False, 'retry_count': }

-- ::, - Skipping installation of existing package unzip

-- ::, - Package['curl'] {'retry_on_repo_unavailability': False, 'retry_count': }

-- ::, - Skipping installation of existing package curl

-- ::, - Package['hdp-select'] {'retry_on_repo_unavailability': False, 'retry_count': }

-- ::, - Skipping installation of existing package hdp-select

-- ::, - call[('ambari-python-wrap', u'/usr/bin/hdp-select', 'versions')] {}

-- ::, - call returned (, '3.0.0.0-1634')

-- ::, - The 'ranger-kms' component did not advertise a version. This may indicate a problem with the component packaging. However, the stack-select tool was able to report a single version installed (3.0.0.0-). This is the version that will be reported.

-- ::, - Command repositories: HDP-3.0-repo-, HDP-3.0-GPL-repo-, HDP-UTILS-1.1.0.22-repo-

-- ::, - Applicable repositories: HDP-3.0-repo-, HDP-3.0-GPL-repo-, HDP-UTILS-1.1.0.22-repo-

-- ::, - Looking for matching packages in the following repositories: HDP-3.0-repo-, HDP-3.0-GPL-repo-, HDP-UTILS-1.1.0.22-repo-

-- ::, - Adding fallback repositories: HDP-UTILS-1.1.0.22, HDP-3.0-GPL, HDP-3.0

-- ::, - Package['ranger_3_0_0_0_1634-kms'] {'retry_on_repo_unavailability': False, 'retry_count': }

-- ::, - Installing package ranger_3_0_0_0_1634-kms ('/usr/bin/yum -y install ranger_3_0_0_0_1634-kms')

-- ::, - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=None -> 3.0

-- ::, - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

-- ::, - Execute[('cp', '-f', u'/usr/hdp/current/ranger-kms/install.properties', u'/usr/hdp/current/ranger-kms/install-backup.properties')] {'not_if': 'ls /usr/hdp/current/ranger-kms/install-backup.properties', 'sudo': True, 'only_if': 'ls /usr/hdp/current/ranger-kms/install.properties'}

-- ::, - Password validated

-- ::, - File['/var/lib/ambari-agent/tmp/mysql-connector-java.jar'] {'content': DownloadSource('http://ep-bd01:8080/resources/mysql-connector-java.jar'), 'mode': }

-- ::, - Not downloading the file from http://ep-bd01:8080/resources/mysql-connector-java.jar, because /var/lib/ambari-agent/tmp/mysql-connector-java.jar already exists

-- ::, - Directory['/usr/hdp/current/ranger-kms/ews/lib'] {'mode': }

-- ::, - Creating directory Directory['/usr/hdp/current/ranger-kms/ews/lib'] since it doesn't exist.

-- ::, - Execute[('cp', '--remove-destination', u'/var/lib/ambari-agent/tmp/mysql-connector-java.jar', u'/usr/hdp/current/ranger-kms/ews/webapp/lib')] {'path': ['/bin', '/usr/bin/'], 'sudo': True}

-- ::, - File['/usr/hdp/current/ranger-kms/ews/webapp/lib/mysql-connector-java.jar'] {'mode': }

-- ::, - ModifyPropertiesFile['/usr/hdp/current/ranger-kms/install.properties'] {'owner': 'kms', 'properties': ...}

-- ::, - Modifying existing properties file: /usr/hdp/current/ranger-kms/install.properties

-- ::, - File['/usr/hdp/current/ranger-kms/install.properties'] {'owner': 'kms', 'content': ..., 'group': None, 'mode': None, 'encoding': 'utf-8'}

-- ::, - Writing File['/usr/hdp/current/ranger-kms/install.properties'] because contents don't match

-- ::, - Changing owner for /usr/hdp/current/ranger-kms/install.properties from to kms

-- ::, - ModifyPropertiesFile['/usr/hdp/current/ranger-kms/install.properties'] {'owner': 'kms', 'properties': {'SQL_CONNECTOR_JAR': u'/usr/hdp/current/ranger-kms/ews/webapp/lib/mysql-connector-java.jar'}}

-- ::, - Modifying existing properties file: /usr/hdp/current/ranger-kms/install.properties

-- ::, - File['/usr/hdp/current/ranger-kms/install.properties'] {'owner': 'kms', 'content': ..., 'group': None, 'mode': None, 'encoding': 'utf-8'}

-- ::, - Setting up Ranger KMS DB and DB User

-- ::, - Execute['ambari-python-wrap /usr/hdp/current/ranger-kms/dba_script.py -q'] {'logoutput': True, 'environment': {'RANGER_KMS_HOME': u'/usr/hdp/current/ranger-kms', 'JAVA_HOME': u'/usr/java/jdk1.8.0_181-amd64'}, 'tries': , 'user': 'kms', 'try_sleep': }

-- ::, [I] Running DBA setup script. QuiteMode:True

-- ::, [I] Using Java:/usr/java/jdk1..0_181-amd64/bin/java

-- ::, [I] DB FLAVOR:MYSQL

-- ::, [I] DB Host:ep-bd01

-- ::, [I] ---------- Verifing DB root password ----------

-- ::, [I] DBA root user password validated

-- ::, [I] ---------- Verifing Ranger KMS db user password ----------

-- ::, [I] KMS user password validated

-- ::, [I] ---------- Creating Ranger KMS db user ----------

-- ::, [JISQL] /usr/java/jdk1..0_181-amd64/bin/java -cp /usr/hdp/current/ranger-kms/ews/webapp/lib/mysql-connector-java.jar:/usr/hdp/current/ranger-kms/jisql/lib/* org.apache.util.sql.Jisql -driver mysqlconj -cstring jdbc:mysql://ep-bd01/mysql -u root -p '********' -noheader -trim -c \; -query "SELECT version();"

2018-08-17 12:04:41,716 [I] Verifying user rangerkms for Host %

2018-08-17 12:04:41,716 [JISQL] /usr/java/jdk1.8.0_181-amd64/bin/java -cp /usr/hdp/current/ranger-kms/ews/webapp/lib/mysql-connector-java.jar:/usr/hdp/current/ranger-kms/jisql/lib/* org.apache.util.sql.Jisql -driver mysqlconj -cstring jdbc:mysql://ep-bd01/mysql -u root -p '********' -noheader -trim -c \; -query "select user from mysql.user where user='rangerkms' and host='%';"

2018-08-17 12:04:41,981 [I] MySQL user rangerkms already exists for host %

2018-08-17 12:04:41,981 [I] Verifying user rangerkms for Host localhost

2018-08-17 12:04:41,981 [JISQL] /usr/java/jdk1.8.0_181-amd64/bin/java -cp /usr/hdp/current/ranger-kms/ews/webapp/lib/mysql-connector-java.jar:/usr/hdp/current/ranger-kms/jisql/lib/* org.apache.util.sql.Jisql -driver mysqlconj -cstring jdbc:mysql://ep-bd01/mysql -u root -p '********' -noheader -trim -c \; -query "select user from mysql.user where user='rangerkms' and host='localhost';"

2018-08-17 12:04:42,250 [I] MySQL user rangerkms already exists for host localhost

2018-08-17 12:04:42,250 [I] Verifying user rangerkms for Host ep-bd01

2018-08-17 12:04:42,250 [JISQL] /usr/java/jdk1.8.0_181-amd64/bin/java -cp /usr/hdp/current/ranger-kms/ews/webapp/lib/mysql-connector-java.jar:/usr/hdp/current/ranger-kms/jisql/lib/* org.apache.util.sql.Jisql -driver mysqlconj -cstring jdbc:mysql://ep-bd01/mysql -u root -p '********' -noheader -trim -c \; -query "select user from mysql.user where user='rangerkms' and host='ep-bd01';"

2018-08-17 12:04:42,525 [I] MySQL user rangerkms already exists for host ep-bd01

2018-08-17 12:04:42,525 [I] ---------- Creating Ranger KMS database ----------

2018-08-17 12:04:42,525 [I] Verifying database rangerkms

2018-08-17 12:04:42,525 [JISQL] /usr/java/jdk1.8.0_181-amd64/bin/java -cp /usr/hdp/current/ranger-kms/ews/webapp/lib/mysql-connector-java.jar:/usr/hdp/current/ranger-kms/jisql/lib/* org.apache.util.sql.Jisql -driver mysqlconj -cstring jdbc:mysql://ep-bd01/mysql -u root -p '********' -noheader -trim -c \; -query "show databases like 'rangerkms';"

2018-08-17 12:04:42,788 [I] Database rangerkms already exists.

2018-08-17 12:04:42,788 [I] ---------- Granting permission to Ranger KMS db user ----------

2018-08-17 12:04:42,788 [I] ---------- Granting privileges TO user 'rangerkms'@'%' on db 'rangerkms'----------

2018-08-17 12:04:42,788 [JISQL] /usr/java/jdk1.8.0_181-amd64/bin/java -cp /usr/hdp/current/ranger-kms/ews/webapp/lib/mysql-connector-java.jar:/usr/hdp/current/ranger-kms/jisql/lib/* org.apache.util.sql.Jisql -driver mysqlconj -cstring jdbc:mysql://ep-bd01/mysql -u root -p '********' -noheader -trim -c \; -query "grant all privileges on rangerkms.* to 'rangerkms'@'%' with grant option;"

2018-08-17 12:04:43,048 [I] ---------- FLUSH PRIVILEGES ----------

2018-08-17 12:04:43,048 [JISQL] /usr/java/jdk1.8.0_181-amd64/bin/java -cp /usr/hdp/current/ranger-kms/ews/webapp/lib/mysql-connector-java.jar:/usr/hdp/current/ranger-kms/jisql/lib/* org.apache.util.sql.Jisql -driver mysqlconj -cstring jdbc:mysql://ep-bd01/mysql -u root -p '********' -noheader -trim -c \; -query "FLUSH PRIVILEGES;"

2018-08-17 12:04:43,544 [I] Privileges granted to 'rangerkms' on 'rangerkms'

2018-08-17 12:04:43,544 [I] ---------- Granting privileges TO user 'rangerkms'@'localhost' on db 'rangerkms'----------

2018-08-17 12:04:43,544 [JISQL] /usr/java/jdk1.8.0_181-amd64/bin/java -cp /usr/hdp/current/ranger-kms/ews/webapp/lib/mysql-connector-java.jar:/usr/hdp/current/ranger-kms/jisql/lib/* org.apache.util.sql.Jisql -driver mysqlconj -cstring jdbc:mysql://ep-bd01/mysql -u root -p '********' -noheader -trim -c \; -query "grant all privileges on rangerkms.* to 'rangerkms'@'localhost' with grant option;"

2018-08-17 12:04:43,810 [I] ---------- FLUSH PRIVILEGES ----------

2018-08-17 12:04:43,810 [JISQL] /usr/java/jdk1.8.0_181-amd64/bin/java -cp /usr/hdp/current/ranger-kms/ews/webapp/lib/mysql-connector-java.jar:/usr/hdp/current/ranger-kms/jisql/lib/* org.apache.util.sql.Jisql -driver mysqlconj -cstring jdbc:mysql://ep-bd01/mysql -u root -p '********' -noheader -trim -c \; -query "FLUSH PRIVILEGES;"

2018-08-17 12:04:44,080 [I] Privileges granted to 'rangerkms' on 'rangerkms'

2018-08-17 12:04:44,080 [I] ---------- Granting privileges TO user 'rangerkms'@'ep-bd01' on db 'rangerkms'----------

2018-08-17 12:04:44,080 [JISQL] /usr/java/jdk1.8.0_181-amd64/bin/java -cp /usr/hdp/current/ranger-kms/ews/webapp/lib/mysql-connector-java.jar:/usr/hdp/current/ranger-kms/jisql/lib/* org.apache.util.sql.Jisql -driver mysqlconj -cstring jdbc:mysql://ep-bd01/mysql -u root -p '********' -noheader -trim -c \; -query "grant all privileges on rangerkms.* to 'rangerkms'@'ep-bd01' with grant option;"

2018-08-17 12:04:44,353 [I] ---------- FLUSH PRIVILEGES ----------

2018-08-17 12:04:44,353 [JISQL] /usr/java/jdk1.8.0_181-amd64/bin/java -cp /usr/hdp/current/ranger-kms/ews/webapp/lib/mysql-connector-java.jar:/usr/hdp/current/ranger-kms/jisql/lib/* org.apache.util.sql.Jisql -driver mysqlconj -cstring jdbc:mysql://ep-bd01/mysql -u root -p '********' -noheader -trim -c \; -query "FLUSH PRIVILEGES;"

2018-08-17 12:04:44,619 [I] Privileges granted to 'rangerkms' on 'rangerkms'

2018-08-17 12:04:44,619 [I] ---------- Ranger KMS DB and User Creation Process Completed.. ----------

2018-08-17 12:04:44,624 - Execute['ambari-python-wrap /usr/hdp/current/ranger-kms/db_setup.py'] {'logoutput': True, 'environment': {'PATH': '/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/var/lib/ambari-agent', 'RANGER_KMS_HOME': u'/usr/hdp/current/ranger-kms', 'JAVA_HOME': u'/usr/java/jdk1.8.0_181-amd64'}, 'tries': 5, 'user': 'kms', 'try_sleep': 10}

2018-08-17 12:04:44,679 [I] DB FLAVOR :MYSQL

2018-08-17 12:04:44,679 [I] --------- Verifying Ranger DB connection ---------

2018-08-17 12:04:44,679 [I] Checking connection..

2018-08-17 12:04:44,679 [JISQL] /usr/java/jdk1.8.0_181-amd64/bin/java -cp /usr/hdp/current/ranger-kms/ews/webapp/lib/mysql-connector-java.jar:/usr/hdp/current/ranger-kms/jisql/lib/* org.apache.util.sql.Jisql -driver mysqlconj -cstring jdbc:mysql://ep-bd01/rangerkms -u 'rangerkms' -p '********' -noheader -trim -c \; -query "SELECT version();"

2018-08-17 12:04:44,947 [I] Checking connection passed.

2018-08-17 12:04:44,947 [I] --------- Verifying Ranger DB tables ---------

2018-08-17 12:04:44,947 [JISQL] /usr/java/jdk1.8.0_181-amd64/bin/java -cp /usr/hdp/current/ranger-kms/ews/webapp/lib/mysql-connector-java.jar:/usr/hdp/current/ranger-kms/jisql/lib/* org.apache.util.sql.Jisql -driver mysqlconj -cstring jdbc:mysql://ep-bd01/rangerkms -u 'rangerkms' -p '********' -noheader -trim -c \; -query "show tables like 'ranger_masterkey';"

2018-08-17 12:04:45,211 [I] Table ranger_masterkey already exists in database 'rangerkms'

2018-08-17 12:04:45,217 - Directory['/usr/hdp/current/ranger-kms/conf'] {'owner': 'kms', 'group': 'kms', 'create_parents': True}

2018-08-17 12:04:45,217 - Creating directory Directory['/usr/hdp/current/ranger-kms/conf'] since it doesn't exist.

Command failed after 1 tries

The ranger-kms installation failed.
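The traceback above ends with "looped symbolic links found while resolving /usr/hdp/current/ranger-kms/conf". One way to inspect that path on the affected host is sketched below. The `link_is_unresolvable` helper is hypothetical; it only reports that a symlink cannot be resolved and cannot tell a loop from a dangling target, so the `ls -l` output still has to be read by hand. This is a diagnostic aid, not a confirmed fix for the failure.

```shell
# Hypothetical helper: succeeds (exit 0) if PATH is a symlink that cannot be
# resolved, which covers both dangling links and symlink loops.
link_is_unresolvable() {
  [ -L "$1" ] && [ ! -e "$1" ]
}

conf=/usr/hdp/current/ranger-kms/conf
if link_is_unresolvable "$conf"; then
  echo "$conf is a symlink that does not resolve:"
  ls -l "$conf"
else
  echo "$conf resolves, is not a symlink, or does not exist"
fi
```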

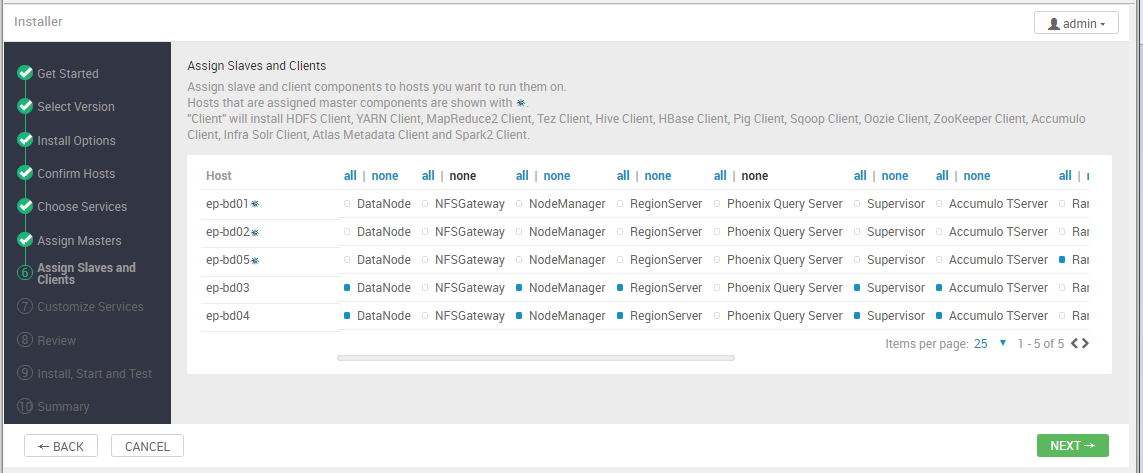

8. [Assign Masters]

9. [Assign Slaves and Clients]

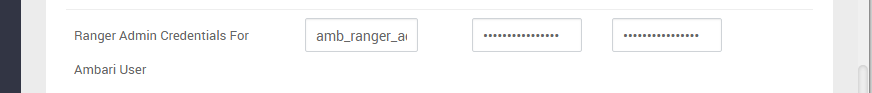

10. [CREDENTIALS] I pasted the same password into every field here, except Ranger Admin, which I left unchanged.

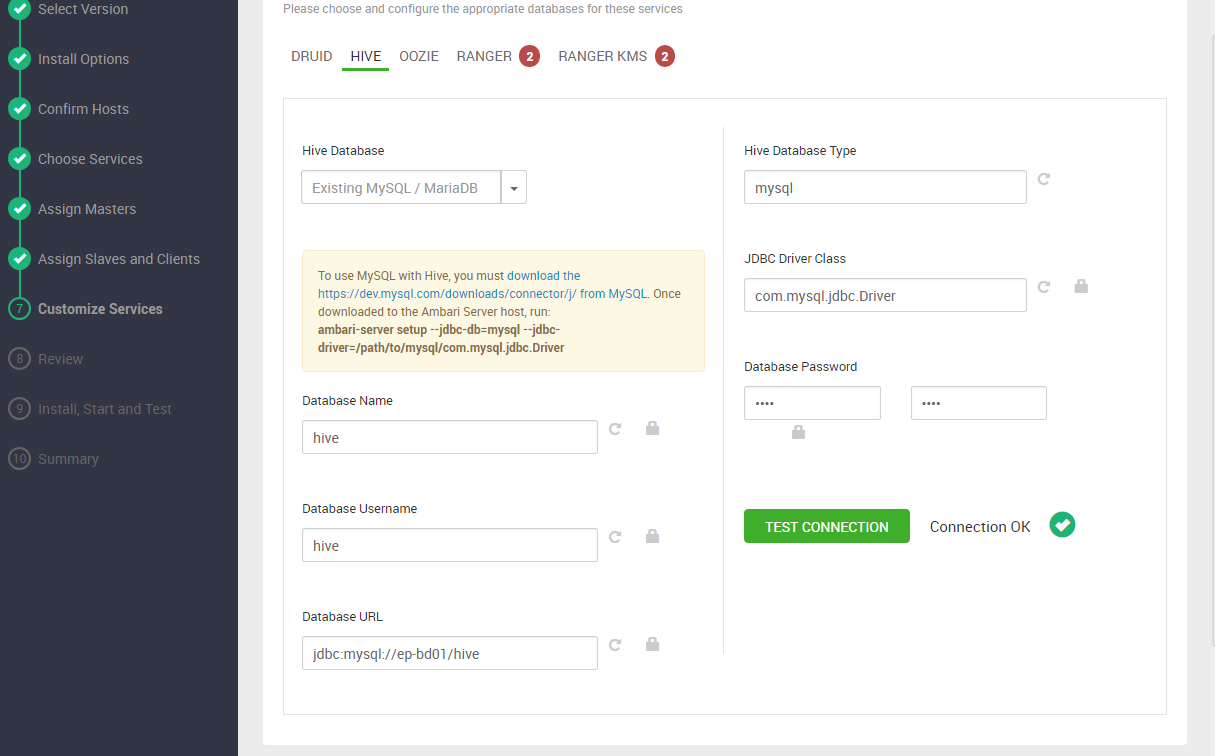

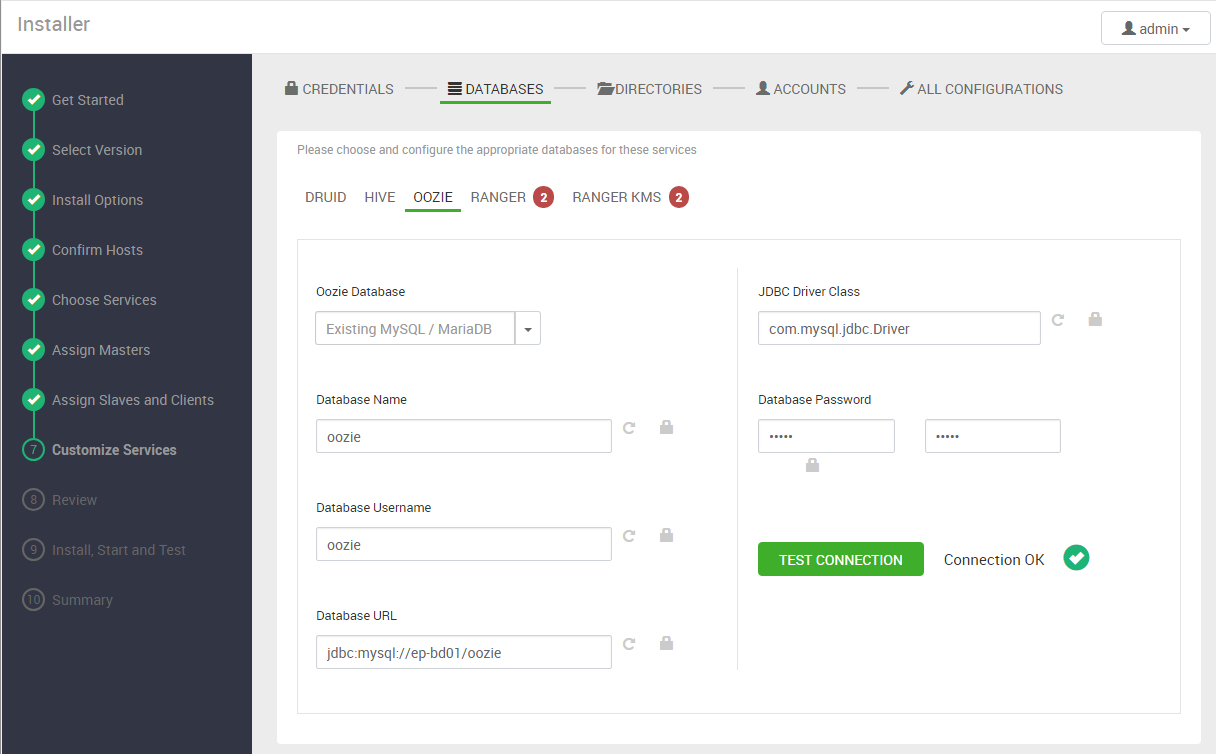

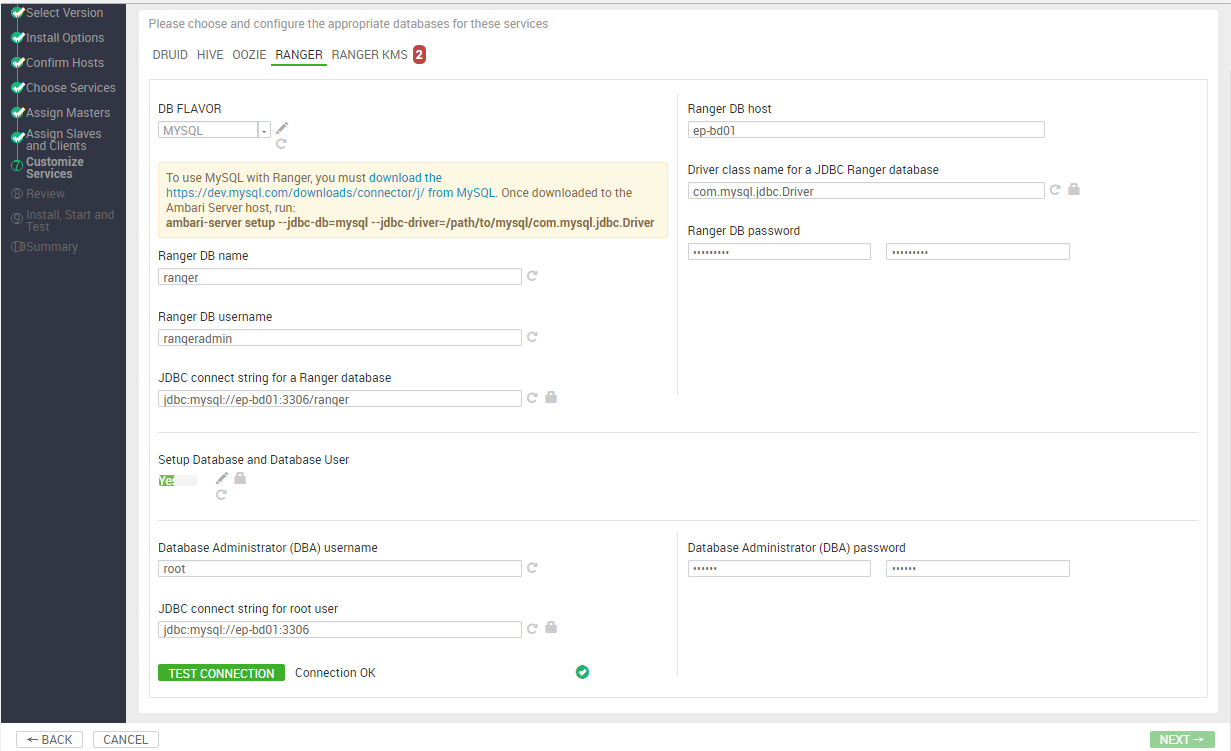

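11. [DATABASES] I used the service name as both the user name and the database name throughout.

The databases and users for Hive and Oozie must be created manually. The Druid user must also be created in advance.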

[root@ep-bd01 downloads]# mysql -uroot -phadoop

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is

Server version: 5.5.-MariaDB MariaDB Server

Copyright (c) , , Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> use mysql;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

MariaDB [mysql]> create database oozie DEFAULT CHARSET utf8 COLLATE utf8_general_ci;

Query OK, row affected (0.00 sec)

MariaDB [mysql]> grant all privileges on *.* to 'oozie'@'%' identified by 'oozie';

MariaDB [mysql]> create database hive DEFAULT CHARSET utf8 COLLATE utf8_general_ci;

Query OK, 1 row affected (0.00 sec)

MariaDB [mysql]> grant all privileges on *.* to 'hive'@'%' identified by 'hive';

MariaDB [mysql]> grant all privileges on *.* to 'druid'@'%' identified by 'druid';
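The grants in the session above use `*.*`, which gives each service account full rights on every database on the server. A narrower variant would limit each account to its own database. The sketch below only prints the SQL for review; the `service_db_sql` helper is hypothetical, the passwords equal to the service names mirror the session above and are placeholders, and this narrower form has not been tested against the Ambari installer.

```shell
# Hypothetical helper: print SQL that creates a database named after the
# service and grants the same-named user rights on that database only.
service_db_sql() (
  svc=$1
  cat <<EOF
CREATE DATABASE IF NOT EXISTS ${svc} DEFAULT CHARSET utf8 COLLATE utf8_general_ci;
GRANT ALL PRIVILEGES ON ${svc}.* TO '${svc}'@'%' IDENTIFIED BY '${svc}';
EOF
)

# Print the statements for the two services whose databases are created by hand.
for name in hive oozie; do
  service_db_sql "$name"
done
```

After checking the output, it could be piped into the client, for example `service_db_sql hive | mysql -uroot -p`.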

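For the Hive database, choose "Existing MySQL/MariaDB". Note that the password must match the user created beforehand; the default will not work. After filling in the fields, click "TEST CONNECTION" and make sure the test succeeds.

The Oozie settings are essentially the same as Hive's, and the connection test must pass there as well.

The Ranger database configuration needs the database host and the password of the database root user, and the connection test must then pass. Ranger KMS is similar but has no connection test, so fill it in carefully. One of my failed attempts was caused by a single mistyped letter in the database host name at this step.

12. [DIRECTORIES, ACCOUNTS] [ALL CONFIGURATIONS]

Accept all the defaults and go straight to the next step.

14. [REVIEW]

Nothing much to say here: click Next and wait...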

II. Pitfalls, failures, and lessons learned

(1) [Reset ambari-server and start the installation over]

1. Reset ambari-server

systemctl stop ambari-server

ambari-server reset

2. ambari-server keeps its data in a database, and it cannot reset the MariaDB tables automatically, so the ambari database has to be dropped and recreated by hand:

mysql -uroot -p

use mysql;

drop database ambari;

create database ambari;

use ambari;

source /var/lib/ambari-server/resources/Ambari-DDL-MySQL-CREATE.sql;

use mysql;

3. Restart ambari-server and the ambari-agent on every host

systemctl restart ambari-server

systemctl restart ambari-agent

ssh ep-bd02 systemctl restart ambari-agent

ssh ep-bd03 systemctl restart ambari-agent

ssh ep-bd04 systemctl restart ambari-agent

ssh ep-bd05 systemctl restart ambari-agent

4. Uninstall the packages that were already installed (leaving them in place makes the next installation fail)

yum erase -y -C ranger_3_0_0_0_1634-admin hive_3_0_0_0_1634 ambari-infra-solr-client oozie_3_0_0_0_1634-client oozie_3_0_0_0_1634-webapp oozie_3_0_0_0_1634-sharelib-sqoop hadoop_3_0_0_0_1634-libhdfs ranger_3_0_0_0_1634-kafka-plugin ranger_3_0_0_0_1634-hive-plugin druid_3_0_0_0_1634 tez_3_0_0_0_1634 oozie_3_0_0_0_1634-sharelib-pig ranger_3_0_0_0_1634-usersync ranger_3_0_0_0_1634-hbase-plugin accumulo_3_0_0_0_1634 ranger_3_0_0_0_1634-yarn-plugin oozie_3_0_0_0_1634-sharelib-distcp hive_3_0_0_0_1634-jdbc knox_3_0_0_0_1634 oozie_3_0_0_0_1634-sharelib-hcatalog hadoop_3_0_0_0_1634 phoenix_3_0_0_0_1634 atlas-metadata_3_0_0_0_1634-hbase-plugin hbase_3_0_0_0_1634 storm_3_0_0_0_1634 ranger_3_0_0_0_1634-hdfs-plugin hadoop_3_0_0_0_1634-hdfs ranger_3_0_0_0_1634-tagsync atlas-metadata_3_0_0_0_1634-storm-plugin ranger_3_0_0_0_1634-storm-plugin ranger_3_0_0_0_1634-knox-plugin ambari-metrics-grafana oozie_3_0_0_0_1634-common kafka_3_0_0_0_1634 spark2_3_0_0_0_1634-yarn-shuffle oozie_3_0_0_0_1634-sharelib-hive2 oozie_3_0_0_0_1634-sharelib-spark bigtop-jsvc oozie_3_0_0_0_1634-sharelib-mapreduce-streaming bigtop-tomcat atlas-metadata_3_0_0_0_1634 oozie_3_0_0_0_1634-sharelib atlas-metadata_3_0_0_0_1634-hive-plugin oozie_3_0_0_0_1634-sharelib-hive ambari-infra-solr hive_3_0_0_0_1634-hcatalog ambari-metrics-monitor hadoop_3_0_0_0_1634-client hadoop_3_0_0_0_1634-yarn smartsense-hst ranger_3_0_0_0_1634-atlas-plugin hadoop_3_0_0_0_1634-mapreduce hdp-select oozie_3_0_0_0_1634 zookeeper_3_0_0_0_1634 ambari-metrics-hadoop-sink zookeeper_3_0_0_0_1634-server ambari-metrics-collector atlas-metadata_3_0_0_0_1634-sqoop-plugin
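The package list above does not have to be assembled by hand. Assuming the HDP stack packages all carry the build suffix `3_0_0_0_1634` in their names, as the ones listed do, the installed names can be filtered out of `rpm -qa`. The `filter_hdp_packages` helper is hypothetical. Note that the list above also contains packages without that suffix (for example the `ambari-metrics-*`, `ambari-infra-solr*`, `smartsense-hst` and `bigtop-*` packages), which this default pattern does not catch.

```shell
# Hypothetical helper: keep only the package names on stdin that match the
# given extended regex. The default pattern is an assumption based on the
# names in the yum erase command above.
filter_hdp_packages() {
  grep -E "${1:-_3_0_0_0_1634|^hdp-select}"
}

# List candidate packages on this host; nothing is removed here.
if command -v rpm >/dev/null 2>&1; then
  rpm -qa --qf '%{NAME}\n' | filter_hdp_packages | sort
fi
```

Review the printed names before handing them to `yum erase`.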

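5. Delete the contents of the installation directory. Leaving the files behind causes errors such as package extraction failures when the packages are distributed again.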

rm -rf /usr/hdp/*

ssh ep-bd02 rm -rf /usr/hdp/*

ssh ep-bd03 rm -rf /usr/hdp/*

ssh ep-bd04 rm -rf /usr/hdp/*

ssh ep-bd05 rm -rf /usr/hdp/*

Because I had to reset so many times after failures, I put the steps above into a script for convenience:

/root/ambari-server-reset.sh

echo reset ambari server and database ......

ambari-server stop && echo yes | ambari-server reset >/dev/null 2>&1

echo Drop and recreate ambari database ......

mysql -uroot -phadoop < /root/ambari-server-db-reset.sql

echo remove all packages installed ......

ssh -t root@ep-bd01 "echo -n \"==> Removing installed packages and folders on --- \";hostname;sh /root/rm-hdp-packages.sh >/dev/null 2>&1;rm -rf /usr/hdp/*" &&

ssh -t root@ep-bd02 "echo -n \"==> Removing installed packages and folders on --- \";hostname;sh /root/rm-hdp-packages.sh >/dev/null 2>&1;rm -rf /usr/hdp/*" &&

ssh -t root@ep-bd03 "echo -n \"==> Removing installed packages and folders on --- \";hostname;sh /root/rm-hdp-packages.sh >/dev/null 2>&1;rm -rf /usr/hdp/*" &&

ssh -t root@ep-bd04 "echo -n \"==> Removing installed packages and folders on --- \";hostname;sh /root/rm-hdp-packages.sh >/dev/null 2>&1;rm -rf /usr/hdp/*" &&

ssh -t root@ep-bd05 "echo -n \"==> Removing installed packages and folders on --- \";hostname;sh /root/rm-hdp-packages.sh >/dev/null 2>&1;rm -rf /usr/hdp/*"

echo restart ambari server and all agents ......

systemctl restart ambari-server

systemctl restart ambari-agent

ssh ep-bd02 systemctl restart ambari-agent

ssh ep-bd03 systemctl restart ambari-agent

ssh ep-bd04 systemctl restart ambari-agent

ssh ep-bd05 systemctl restart ambari-agent

echo reset ambari-server done!
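The script repeats the same ssh line once per host. A loop over a host list is one way to shorten it. The sketch below only prints the commands so they can be checked first; the `per_host_cmds` helper and the `HOSTS` variable are hypothetical, and the remote command is wrapped in single quotes, so it must not contain single quotes itself.

```shell
# Host names as used in this series.
HOSTS="ep-bd01 ep-bd02 ep-bd03 ep-bd04 ep-bd05"

# Hypothetical helper: print (do not run) one ssh command per host.
per_host_cmds() {
  for h in $HOSTS; do
    printf "ssh root@%s '%s'\n" "$h" "$1"
  done
}

# Dry run: show the restart command for every host.
per_host_cmds 'systemctl restart ambari-agent'
```

Once the printed commands look right, they can be executed by piping the output to `sh`.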

(2) Supplying a version definition file (VDF) caused a failure; cause unknown

The VDF in my case is:

http://ep-bd01/hdp/HDP/centos7/3.0.0.0-1634/HDP-3.0.0.0-1634.xml

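The file was read without problems on the version selection page, but deployment then failed with "Upload Version Definition File Error". The details were: "javax.xml.stream.XMLStreamException: ParseError at [row,col]:[1,1] Message: Content is not allowed in prolog"

I did not find the cause.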
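In general, an XML parser reports "Content is not allowed in prolog" at row 1, column 1 when something other than markup comes first in the input, for example a UTF-8 byte order mark or a non-XML response body. Whether that is what happened here is not confirmed. The sketch below is one way to check a downloaded copy of the file; the `first_byte_is_lt` helper is hypothetical, and a file that starts with harmless whitespace would also be flagged.

```shell
# Hypothetical helper: succeeds only if the very first byte of the file is "<".
first_byte_is_lt() {
  [ "$(head -c 1 "$1")" = "<" ]
}

# Possible usage (left as comments so the sketch runs without network access;
# the URL is the VDF address given above, /tmp/vdf.xml is a placeholder):
#   curl -s -o /tmp/vdf.xml http://ep-bd01/hdp/HDP/centos7/3.0.0.0-1634/HDP-3.0.0.0-1634.xml
#   first_byte_is_lt /tmp/vdf.xml || head -c 64 /tmp/vdf.xml | od -c
```

If the check fails, the `od -c` dump shows which bytes precede the first tag.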

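(3) Ranger and Ranger KMS failed to install. After many attempts without finding a fix, I deselected them for now, and the installation then succeeded.

Force-uninstall oozie_3_0_0_0_1634-client-4.3.1.3.0.0.0-1634.noarch: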

yum remove oozie_3_0_0_0_1634-client-4.3.1.3.0.0.0-1634.noarch --setopt=tsflags=noscripts -y
