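Linux I/O Flush Explained

After reading this article you should have a clear picture of what fsync(), fdatasync(), sync(), O_DIRECT, O_SYNC, REQ_PREFLUSH, and REQ_FUA do and how they differ.

What are fsync(), fdatasync(), and sync()?

First of all, they are system calls.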

fsync

The fsync(int fd) system call flushes all buffered data and metadata associated with the open file descriptor fd to disk (non-volatile storage).

fsync() transfers ("flushes") all modified in-core data of (i.e., modified buffer cache pages for) the file referred to by the file descriptor fd to the disk device (or other permanent storage device) so that all changed information can be retrieved even after the system crashed or was rebooted. This includes writing through or flushing a disk cache if present. The call blocks until the device reports that the transfer has completed. It also flushes metadata information associated with the file (see stat(2)).
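
As a rough illustration of the call described above, here is a minimal user-space sketch in Python, whose os.fsync() wraps the fsync(2) system call. The helper name write_durably and the temporary file path are made up for this example; they are not from the original article.

```python
import os
import tempfile


def write_durably(path: str, payload: bytes) -> int:
    """Write payload to path and block until fsync() reports completion."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        view = memoryview(payload)
        written = 0
        while written < len(payload):
            # os.write may write fewer bytes than requested, so loop.
            written += os.write(fd, view[written:])
        # Flush this file's dirty data and metadata to the device.
        os.fsync(fd)
        return written
    finally:
        os.close(fd)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "demo.bin")  # hypothetical file name
        print(write_durably(target, b"hello fsync\n"))
```

Until os.fsync() returns, the bytes may still sit only in the page cache, which is exactly the window the system call is meant to close.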

fdatasync

fdatasync(int fd) is similar to fsync(), but it does not flush metadata unless that metadata is needed to read the data back correctly. For example, a changed modification time is not flushed, whereas a changed file size affects later reads of the file and is flushed together with the data. As a result fdatasync() is usually cheaper than fsync().

fdatasync() is similar to fsync(), but does not flush modified metadata unless that metadata is needed in order to allow a subsequent data retrieval to be correctly handled. For example, changes to st_atime or st_mtime (respectively, time of last access and time of last modification; see stat(2)) do not require flushing because they are not necessary for a subsequent data read to be handled correctly. On the other hand, a change to the file size (st_size, as made by say ftruncate(2)), would require a metadata flush. The aim of fdatasync() is to reduce disk activity for applications that do not require all metadata to be synchronized with the disk.
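
One case where the distinction above matters is overwriting bytes inside a file whose size does not change: only the data has to reach the disk for later reads to be correct. The sketch below illustrates that case with Python's os.fdatasync(), which wraps fdatasync(2) on Linux. The helper name overwrite_in_place and the file layout are assumptions made for this example.

```python
import os
import tempfile


def overwrite_in_place(path: str, offset: int, payload: bytes) -> int:
    """Overwrite bytes inside an existing file, then call fdatasync()."""
    fd = os.open(path, os.O_WRONLY)
    try:
        if offset + len(payload) > os.fstat(fd).st_size:
            raise ValueError("write would grow the file")
        done = 0
        while done < len(payload):
            # pwrite writes at an explicit offset and may be partial.
            done += os.pwrite(fd, payload[done:], offset + done)
        # The file size is unchanged, so the timestamps are the only
        # metadata that changed, and fdatasync() need not flush them.
        os.fdatasync(fd)
        return os.fstat(fd).st_size
    finally:
        os.close(fd)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        slot = os.path.join(tmp, "slot.bin")  # hypothetical file name
        with open(slot, "wb") as fh:
            fh.write(b"\0" * 4096)
        print(overwrite_in_place(slot, 100, b"xyz"))
```

If the write extended the file instead, the new size would have to be flushed as well, and the saving over fsync() would shrink.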

sync

The sync(void) system call flushes every kernel buffer that holds modified file contents (data blocks, pointer blocks, metadata, and so on) to disk.

Flush file system buffers, force changed blocks to disk, update the super block

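What are O_DIRECT, O_SYNC, REQ_PREFLUSH, and REQ_FUA?

They are all flags. Their end effect may be the same, but they operate at different layers.

O_DIRECT and O_SYNC are flags passed to the open() system call; REQ_PREFLUSH and REQ_FUA are flags on a kernel bio.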

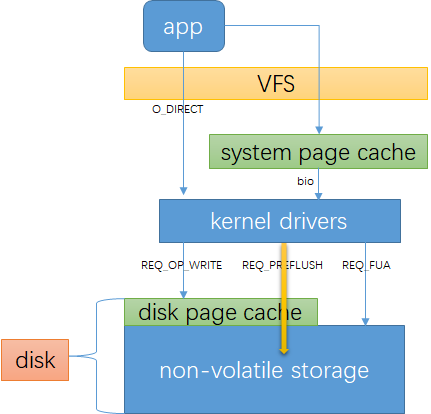

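To understand these flags you need to know about two caches:

One is in system memory: the page cache, which free -h reports as buff/cache;

the other is the volatile write cache built into the disk itself.

A simplified path for a write I/O to reach the disk looks like this:

Compare it with the Linux storage stack diagram: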

O_DIRECT

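O_DIRECT means the I/O bypasses the system page cache, which may degrade I/O performance. It makes an effort to transfer data synchronously, but it does not guarantee data safety.

Note: from here on, "data safety" means that the data has been written to the disk's non-volatile storage.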

Try to minimize cache effects of the I/O to and from this file. In general this will degrade performance, but it is useful in special situations, such as when applications do their own caching. File I/O is done directly to/from user-space buffers. The O_DIRECT flag on its own makes an effort to transfer data synchronously, but does not give the guarantees of the O_SYNC flag that data and necessary metadata are transferred. To guarantee synchronous I/O, O_SYNC must be used in addition to O_DIRECT.
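
To make the "directly to/from user-space buffers" part concrete, here is a small Python sketch that opens a file with O_DIRECT and writes one aligned block. Two things are assumptions of this example rather than statements from the article: the 4096-byte block size (the real alignment requirement depends on the device and filesystem), and the fallback to a buffered write when the filesystem rejects O_DIRECT with EINVAL. The helper names are hypothetical.

```python
import errno
import mmap
import os
import tempfile

BLOCK = 4096  # assumed alignment; the real value depends on the device


def _write_block(path: str, extra_flags: int) -> int:
    """Write one zero-filled block using the given extra open() flags."""
    flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC | extra_flags
    fd = os.open(path, flags, 0o644)
    # An anonymous mmap is page-aligned, which satisfies the buffer
    # alignment that O_DIRECT typically requires.
    buf = mmap.mmap(-1, BLOCK)
    try:
        buf.write(b"\0" * BLOCK)
        return os.write(fd, buf)
    finally:
        buf.close()
        os.close(fd)


def write_block_direct(path: str) -> bool:
    """Return True if the block was written with O_DIRECT."""
    try:
        _write_block(path, os.O_DIRECT)
        return True
    except OSError as exc:
        if exc.errno != errno.EINVAL:
            raise
        # This filesystem rejects O_DIRECT; fall back to buffered I/O.
        _write_block(path, 0)
        return False


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(write_block_direct(os.path.join(tmp, "direct.bin")))
```

Note that nothing here calls fsync() or sets O_SYNC, so, as the quoted text says, the write bypasses the page cache but carries no durability guarantee.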

The dd command shows the difference between O_DIRECT and buffered I/O clearly; watch how buff/cache changes.

Drop the caches first:

    # echo 3 > /proc/sys/vm/drop_caches
    # free -h
                  total        used        free      shared  buff/cache   available
    Mem:            62G        1.1G         61G        9.2M        440M         60G
    Swap:           31G          0B         31G

dd without direct:

    # dd if=/dev/zero of=/dev/bcache0 bs=1M count=1024
    1024+0 records in
    1024+0 records out
    1073741824 bytes (1.1 GB) copied, 27.8166 s, 38.6 MB/s
    # free -h
                  total        used        free      shared  buff/cache   available
    Mem:            62G        1.0G         60G        105M        1.5G         60G
    Swap:           31G          0B         31G

    # echo 3 > /proc/sys/vm/drop_caches
    # free -h
                  total        used        free      shared  buff/cache   available
    Mem:            62G        626M         61G        137M        337M         61G
    Swap:           31G          0B         31G

dd with direct:

    # dd if=/dev/zero of=/dev/bcache0 bs=1M count=1024 oflag=direct
    1024+0 records in
    1024+0 records out
    1073741824 bytes (1.1 GB) copied, 2.72088 s, 395 MB/s
    # free -h
                  total        used        free      shared  buff/cache   available
    Mem:            62G        628M         61G        137M        341M         61G
    Swap:           31G          0B         31G

Without oflag=direct, buff/cache grows from 440M to 1.5G because the 1 GiB that was written sits in the page cache; with oflag=direct it stays essentially flat (337M to 341M).

O_SYNC

O_SYNC is the synchronous I/O flag. It guarantees that data has been safely written to non-volatile storage by the time the write returns.

Write operations on the file will complete according to the requirements of synchronized I/O file integrity completion
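
In user space the flag is simply passed to open(). The Python sketch below shows that usage under stated assumptions: the helper name append_with_o_sync and the record contents are invented for this example, and records are assumed small enough that each os.write() call is not split.

```python
import os
import tempfile


def append_with_o_sync(path: str, records: list) -> int:
    """Append records to a file opened with O_SYNC; return bytes written."""
    flags = os.O_WRONLY | os.O_CREAT | os.O_APPEND | os.O_SYNC
    fd = os.open(path, flags, 0o644)
    try:
        total = 0
        for record in records:
            # With O_SYNC, each write() returns only after the kernel
            # has pushed this record and its metadata to the device.
            total += os.write(fd, record)
        return total
    finally:
        os.close(fd)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        log = os.path.join(tmp, "sync.log")  # hypothetical file name
        print(append_with_o_sync(log, [b"one\n", b"two\n"]))
```

No explicit fsync() appears in the loop because the flag makes every write behave as if one followed it.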

REQ_PREFLUSH

REQ_PREFLUSH is a bio request flag. It means that before this I/O starts, every I/O that completed earlier must already be on non-volatile storage.

As I understand it, REQ_PREFLUSH only guarantees that I/O completed before it has reached the non-volatile medium; the flagged I/O itself may land only in the disk's write cache, so it is not guaranteed to be safe.

REQ_PREFLUSH can also be set on an empty bio, in which case it simply flushes whatever is in the disk's write cache.

Explicit cache flushes

The REQ_PREFLUSH flag can be ORed into the r/w flags of a bio submitted from the filesystem and will make sure the volatile cache of the storage device has been flushed before the actual I/O operation is started. This explicitly guarantees that previously completed write requests are on non-volatile storage before the flagged bio starts. In addition the REQ_PREFLUSH flag can be set on an otherwise empty bio structure, which causes only an explicit cache flush without any dependent I/O. It is recommended to use the blkdev_issue_flush() helper for a pure cache flush.

REQ_FUA

REQ_FUA is a bio request flag. It means the I/O is reported complete only after its data has been safely written to non-volatile storage.

Forced Unit Access

The REQ_FUA flag can be ORed into the r/w flags of a bio submitted from the filesystem and will make sure that I/O completion for this request is only signaled after the data has been committed to non-volatile storage.

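Experimental verification

I rebuilt the bcache kernel module so that it prints bio->bi_opf. bi_opf holds the bio's operation and flags: a REQ_OP_* operation code ORed with REQ_* flags, which together determine how the I/O behaves.

Request flags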

From the Linux source, include/linux/blk_types.h:

    enum req_opf {
        /* read sectors from the device */
        REQ_OP_READ = 0,
        /* write sectors to the device */
        REQ_OP_WRITE = 1,
        /* flush the volatile write cache */
        REQ_OP_FLUSH = 2,
        /* discard sectors */
        REQ_OP_DISCARD = 3,
        /* securely erase sectors */
        REQ_OP_SECURE_ERASE = 5,
        /* reset a zone write pointer */
        REQ_OP_ZONE_RESET = 6,
        /* write the same sector many times */
        REQ_OP_WRITE_SAME = 7,
        /* reset all the zone present on the device */
        REQ_OP_ZONE_RESET_ALL = 8,
        /* write the zero filled sector many times */
        REQ_OP_WRITE_ZEROES = 9,
        /* SCSI passthrough using struct scsi_request */
        REQ_OP_SCSI_IN = 32,
        REQ_OP_SCSI_OUT = 33,
        /* Driver private requests */
        REQ_OP_DRV_IN = 34,
        REQ_OP_DRV_OUT = 35,
        REQ_OP_LAST,
    };

    enum req_flag_bits {
        __REQ_FAILFAST_DEV =        /* 8 no driver retries of device errors */
            REQ_OP_BITS,
        __REQ_FAILFAST_TRANSPORT,   /* 9 no driver retries of transport errors */
        __REQ_FAILFAST_DRIVER,      /* 10 no driver retries of driver errors */
        __REQ_SYNC,                 /* 11 request is sync (sync write or read) */
        __REQ_META,                 /* 12 metadata io request */
        __REQ_PRIO,                 /* 13 boost priority in cfq */
        __REQ_NOMERGE,              /* 14 don't touch this for merging */
        __REQ_IDLE,                 /* 15 anticipate more IO after this one */
        __REQ_INTEGRITY,            /* 16 I/O includes block integrity payload */
        __REQ_FUA,                  /* 17 forced unit access */
        __REQ_PREFLUSH,             /* 18 request for cache flush */
        __REQ_RAHEAD,               /* 19 read ahead, can fail anytime */
        __REQ_BACKGROUND,           /* 20 background IO */
        __REQ_NOWAIT,               /* 21 Don't wait if request will block */
        __REQ_NOWAIT_INLINE,        /* 22 Return would-block error inline */
        .....
    }

bio->bi_opf lookup table

The tests below refer to this table. Feel free to skip it and come back when something is unclear.

| Decimal bi_opf | Binary bi_opf | REQ flags |
|---|---|---|
| 2049 | 1000 0000 0001 | REQ_OP_WRITE \| REQ_SYNC |
| 4096 | 0001 0000 0000 0000 | REQ_OP_READ \| REQ_META |
| 34817 | 1000 1000 0000 0001 | REQ_OP_WRITE \| REQ_SYNC \| REQ_IDLE |
| 399361 | 0110 0001 1000 0000 0001 | REQ_OP_WRITE \| REQ_SYNC \| REQ_META \| REQ_FUA \| REQ_PREFLUSH |
| 264193 | 0100 0000 1000 0000 0001 | REQ_OP_WRITE \| REQ_SYNC \| REQ_PREFLUSH |
| 165889 | 0010 1000 1000 0000 0001 | REQ_OP_WRITE \| REQ_SYNC \| REQ_IDLE \| REQ_FUA |
| 1048577 | 0001 0000 0000 0000 0000 0001 | REQ_OP_WRITE \| REQ_BACKGROUND |
| 133121 | 0010 0000 1000 0000 0001 | REQ_OP_WRITE \| REQ_SYNC \| REQ_FUA |
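
The table can also be reproduced mechanically. The following Python sketch decodes a logged bi_opf value using the bit positions from the header excerpt quoted earlier (operation code in the low 8 bits, flags from bit 8 upward). The function name decode_bi_opf is made up for this example, and the bit numbers are specific to the kernel version the excerpt was taken from; other kernel versions may number the flags differently.

```python
REQ_OP_BITS = 8
REQ_OP_MASK = (1 << REQ_OP_BITS) - 1

# Operation codes, copied from the enum req_opf excerpt.
REQ_OP_NAMES = {
    0: "REQ_OP_READ",
    1: "REQ_OP_WRITE",
    2: "REQ_OP_FLUSH",
    3: "REQ_OP_DISCARD",
    5: "REQ_OP_SECURE_ERASE",
    6: "REQ_OP_ZONE_RESET",
    7: "REQ_OP_WRITE_SAME",
    8: "REQ_OP_ZONE_RESET_ALL",
    9: "REQ_OP_WRITE_ZEROES",
}

# Flag bit positions, copied from the enum req_flag_bits excerpt.
REQ_FLAG_NAMES = {
    8: "REQ_FAILFAST_DEV",
    9: "REQ_FAILFAST_TRANSPORT",
    10: "REQ_FAILFAST_DRIVER",
    11: "REQ_SYNC",
    12: "REQ_META",
    13: "REQ_PRIO",
    14: "REQ_NOMERGE",
    15: "REQ_IDLE",
    16: "REQ_INTEGRITY",
    17: "REQ_FUA",
    18: "REQ_PREFLUSH",
    19: "REQ_RAHEAD",
    20: "REQ_BACKGROUND",
    21: "REQ_NOWAIT",
    22: "REQ_NOWAIT_INLINE",
}


def decode_bi_opf(value: int) -> str:
    """Render a bi_opf value as 'REQ_OP_x | REQ_FLAG | ...'."""
    op = value & REQ_OP_MASK
    names = [REQ_OP_NAMES.get(op, f"REQ_OP_{op}")]
    for bit in range(REQ_OP_BITS, value.bit_length()):
        if value & (1 << bit):
            # Bits not listed in the excerpt are reported by number.
            names.append(REQ_FLAG_NAMES.get(bit, f"BIT_{bit}"))
    return " | ".join(names)


if __name__ == "__main__":
    for logged in (2049, 34817, 165889, 264193):
        print(logged, "=", decode_bi_opf(logged))
```

Feeding it the values that appear in the kernel log lines of the tests is a quick way to check the interpretations given there.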

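Tests

The tests run dd directly against the block device /dev/bcache0.

I confirmed from the dd source code that oflag=direct opens the file with O_DIRECT, oflag=sync opens it with O_SYNC, conv=fdatasync makes dd call fdatasync(fd) once when it finishes, and conv=fsync makes dd call fsync(fd) once when it finishes.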

- direct

      # dd if=/dev/zero of=/dev/bcache0 oflag=direct bs=8k count=1
      // messages
      kernel: bcache: cached_dev_make_request() bi_opf 34817, size 8192

bi_opf 34817, size 8192: bi_opf = REQ_OP_WRITE | REQ_SYNC | REQ_IDLE. This is a synchronous write, and nothing in its flags guarantees data safety.

- direct & sync

      # dd if=/dev/zero of=/dev/bcache0 oflag=direct,sync bs=8k count=1
      kernel: bcache: cached_dev_make_request() bi_opf 165889, size 8192
      kernel: bcache: cached_dev_make_request() bi_opf 264193, size 0

bi_opf 165889, size 8192: bi_opf = REQ_OP_WRITE | REQ_SYNC | REQ_IDLE | REQ_FUA. This is a synchronous write request whose data goes straight to the disk's non-volatile storage.

bi_opf 264193, size 0: bi_opf = REQ_OP_WRITE | REQ_SYNC | REQ_PREFLUSH with size 0. This flushes the disk's write cache so that all previously written I/O reaches non-volatile storage.

This shows why O_SYNC can guarantee data safety.

    # dd if=/dev/zero of=/dev/bcache0 oflag=direct,sync bs=8k count=2
    kernel: bcache: cached_dev_make_request() iop_opf 165889, size 8192
    kernel: bcache: cached_dev_make_request() iop_opf 264193, size 0
    kernel: bcache: cached_dev_make_request() iop_opf 165889, size 8192
    kernel: bcache: cached_dev_make_request() iop_opf 264193, size 0

Here two I/Os are written to the device. As the log shows, when the file is opened with O_SYNC every write is followed by a flush request, which hurts performance considerably. Implementations therefore usually avoid opening the file with O_SYNC and instead call fdatasync() once after a batch of I/Os has finished.
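
The batching pattern mentioned above can be sketched in user space as follows: write several records through a descriptor opened without O_SYNC, then issue a single fdatasync() for the whole batch. The helper name append_batch and the record contents are assumptions made for this example.

```python
import os
import tempfile


def append_batch(path: str, records: list) -> int:
    """Append all records, then flush once; return bytes written."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_APPEND, 0o644)
    try:
        total = 0
        for record in records:
            view = memoryview(record)
            sent = 0
            while sent < len(record):
                # No O_SYNC: each write may stop at the page cache.
                sent += os.write(fd, view[sent:])
            total += sent
        # One flush covers every record written above.
        os.fdatasync(fd)
        return total
    finally:
        os.close(fd)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        log = os.path.join(tmp, "batch.log")  # hypothetical file name
        print(append_batch(log, [b"a" * 8192, b"b" * 8192]))
```

The trade-off is that none of the records in a batch is guaranteed durable until the final fdatasync() returns, so the batch size bounds how much can be lost on a crash.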

- without direct

      # dd if=/dev/zero of=/dev/bcache0 bs=8k count=1
      kernel: bcache: cached_dev_make_request() bi_opf 2049, size 4096
      kernel: bcache: cached_dev_make_request() bi_opf 2049, size 4096

bi_opf 2049, size 4096: bi_opf = REQ_OP_WRITE | REQ_SYNC, a synchronous write request. The original 8 KiB I/O was split in the page cache into two 4 KiB I/Os on its way down, and data safety is not guaranteed.

- direct & fdatasync

      # dd if=/dev/zero of=/dev/bcache0 oflag=direct conv=fdatasync bs=8k count=1
      kernel: bcache: cached_dev_make_request() bi_opf 34817, size 8192
      kernel: bcache: cached_dev_make_request() bi_opf 264193, size 0

bi_opf 34817, size 8192: bi_opf = REQ_OP_WRITE | REQ_SYNC | REQ_IDLE, a synchronous write request that by itself does not guarantee data safety.

bi_opf 264193, size 0: bi_opf = REQ_OP_WRITE | REQ_SYNC | REQ_PREFLUSH, a request to flush the disk's write cache. This is the bio issued by fdatasync().

- direct & sync & fdatasync

      # dd if=/dev/zero of=/dev/bcache0 oflag=direct,sync conv=fdatasync bs=8k count=1
      kernel: bcache: cached_dev_make_request() bi_opf 165889, size 8192
      kernel: bcache: cached_dev_make_request() bi_opf 264193, size 0
      kernel: bcache: cached_dev_make_request() bi_opf 264193, size 0

bi_opf 165889, size 8192: bi_opf = REQ_OP_WRITE | REQ_SYNC | REQ_IDLE | REQ_FUA. This is a synchronous write request whose data goes straight to the disk's non-volatile storage.

bi_opf 264193, size 0: bi_opf = REQ_OP_WRITE | REQ_SYNC | REQ_PREFLUSH with size 0. This flushes the disk's write cache so that all previously written I/O reaches non-volatile storage.

Putting the analysis above together, these three bios are one write I/O and two flush I/Os; the flushes are triggered by O_SYNC and by fdatasync() respectively.

- direct & fsync

      # dd if=/dev/zero of=/dev/bcache0 oflag=direct conv=fsync bs=8k count=1
      kernel: bcache: cached_dev_make_request() bi_opf 34817, size 8192
      kernel: bcache: cached_dev_make_request() bi_opf 264193, size 0

The result is the same as for direct + fdatasync. Presumably this is because the target is a block device, which has no metadata (or none that changed), so fdatasync() and fsync() produce the same bios.

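Bonus

How to enable and disable the disk's write cache

Check the current state of the disk's write cache:

    # hdparm -W /dev/sda
    /dev/sda:
     write-caching = 1 (on)

Disable the disk's write cache:

    # hdparm -W 0 /dev/sda

Enable the disk's write cache:

    # hdparm -W 1 /dev/sda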
