Quickly deploying a specific Ceph version with cephadm, and handling production issues


Official documentation: https://docs.ceph.com/en/pacific/

1. VM plan: CentOS 8

| Hostname | IP | Roles |
|---|---|---|
| ceph1 | 172.30.3.61 | cephadm, mon, mgr, osd, rgw |
| ceph2 | 172.30.3.62 | mon, mgr, osd, rgw |
| ceph3 | 172.30.3.63 | mon, mgr, osd, rgw |

2. Ceph versions (to install a specific version, just specify it in the repo):

- ceph version 15.2.12 (production)

- ceph version 16.2.4 (test)

3. VM operating system:

- CentOS 8

4. Initialization (run on all three machines):

4.1 Disable the firewall:

systemctl stop firewalld && systemctl disable firewalld

4.2 Disable SELinux:

setenforce 0 && sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

[root@ceph3 ~]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of these three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted
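The `sed` above only changes a line that currently reads `SELINUX=enforcing`; a host already set to `permissive` keeps that value. A slightly more defensive sketch, assuming GNU `sed`, rewrites the `SELINUX=` line whatever its value is. `disable_selinux_config` is a hypothetical helper, and the file path is a parameter so it can be tried on a copy first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: force SELINUX=disabled in an SELinux config file.
# The pattern is anchored and needs "=" right after "SELINUX", so
# "SELINUXTYPE=..." and the "# SELINUX= ..." comment lines are untouched.
disable_selinux_config() {
  local cfg=${1:-/etc/selinux/config}
  if [ ! -f "$cfg" ]; then
    echo "no such file: $cfg" >&2
    return 1
  fi
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

On a node: `disable_selinux_config && setenforce 0`. `setenforce 0` only switches the running system to permissive mode; the `disabled` setting takes effect after the next reboot.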

4.3 Configure time synchronization (chrony, using the Aliyun NTP server):

$ cat /etc/chrony.conf

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

# pool 2.centos.pool.ntp.org iburst

server ntp.aliyun.com iburst

# Record the rate at which the system clock gains/losses time.

driftfile /var/lib/chrony/drift

# Allow the system clock to be stepped in the first three updates

# if its offset is larger than 1 second.

makestep 1.0 3

# Enable kernel synchronization of the real-time clock (RTC).

rtcsync

# Enable hardware timestamping on all interfaces that support it.

#hwtimestamp *

# Increase the minimum number of selectable sources required to adjust

# the system clock.

#minsources 2

# Allow NTP client access from local network.

#allow 192.168.0.0/16

# Serve time even if not synchronized to a time source.

#local stratum 10

# Specify file containing keys for NTP authentication.

keyfile /etc/chrony.keys

# Get TAI-UTC offset and leap seconds from the system tz database.

leapsectz right/UTC

# Specify directory for log files.

logdir /var/log/chrony

# Select which information is logged.

#log measurements statistics tracking

Restart and enable the service:

$ systemctl restart chronyd

$ systemctl enable chronyd
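The chrony change above (comment out the default `pool` line, add `server ntp.aliyun.com iburst`) can be scripted for the three nodes. A minimal sketch, assuming GNU `sed`; `use_ntp_server` is a hypothetical name, and running it twice does not add a second `server` line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point chrony at a single NTP server.
# 1. Comment out active "pool ..." lines (the distro default).
# 2. Append "server <host> iburst" unless that exact line already exists.
use_ntp_server() {
  local server=$1 cfg=${2:-/etc/chrony.conf}
  if [ -z "$server" ]; then
    echo "usage: use_ntp_server HOST [FILE]" >&2
    return 1
  fi
  sed -i 's/^pool /# pool /' "$cfg"
  grep -qxF "server $server iburst" "$cfg" || echo "server $server iburst" >> "$cfg"
}
```

Usage: `use_ntp_server ntp.aliyun.com && systemctl restart chronyd`; afterwards `chronyc sources` should list the server.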

4.4 Configure the YUM and EPEL repositories:

CentOS Linux 8 has reached end of life, so its YUM repositories must be switched to vault.centos.org:

$ cd /etc/yum.repos.d/

sed -i 's/mirrorlist/#mirrorlist/g' /etc/yum.repos.d/CentOS-*

sed -i 's|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g' /etc/yum.repos.d/CentOS-*

Build the cache:

yum makecache

# yum update -y
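Wrapped as a function, the two substitutions can be applied to every `CentOS-*` repo file in a directory. This sketch anchors both patterns with `^` (a small change from the commands above) so that a second run does not produce `##mirrorlist`; `point_repos_to_vault` is a hypothetical name and GNU `sed` is assumed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: switch CentOS 8 repo files from the retired mirrorlist
# to vault.centos.org, using the same substitutions as the commands above.
# Both patterns are anchored with ^ so a second run changes nothing further.
point_repos_to_vault() {
  local dir=${1:-/etc/yum.repos.d}
  local f found=0
  for f in "$dir"/CentOS-*; do
    [ -f "$f" ] || continue   # glob did not match anything
    found=1
    sed -i -e 's/^mirrorlist/#mirrorlist/' \
           -e 's|^#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|' "$f"
  done
  if [ "$found" -ne 1 ]; then
    echo "no CentOS-* files in $dir" >&2
    return 1
  fi
}
```

Usage: `point_repos_to_vault && yum makecache`.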

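Install the EPEL repository (it has to be installed with this command; a repo file and key written by hand in vi were not recognized):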

dnf install epel-release -y

4.5 Set the hostname (ceph1, ceph2 and ceph3 respectively):

$ hostnamectl set-hostname ceph1   # ceph2 / ceph3 on the other nodes

4.6 Edit the hosts file:

[root@ceph1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.30.3.61 ceph1

172.30.3.62 ceph2

172.30.3.63 ceph3
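To add the three entries on every node without duplicating lines on a re-run, a small idempotent sketch appends an `IP NAME` pair only if the name is not already mapped. `add_host_entry` is a hypothetical helper; the hosts file is an optional third argument so it can be tested on a copy.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME already
# appears as a hostname (column 2 or later) on a non-comment line.
add_host_entry() {
  local ip=$1 name=$2 hosts=${3:-/etc/hosts}
  if awk -v n="$name" '
       /^[ \t]*#/ { next }
       { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit !found }' "$hosts"; then
    return 0   # already present; leave the existing mapping untouched
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts"
}
```

Usage: `for e in '172.30.3.61 ceph1' '172.30.3.62 ceph2' '172.30.3.63 ceph3'; do add_host_entry $e; done` (word splitting of `$e` is intended).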

4.7 Configure the Ceph repository:

[root@ceph1 yum.repos.d]# cat ceph.repo

[Ceph]

name=Ceph packages for $basearch

baseurl=https://download.ceph.com/rpm-16.2.4/el8/$basearch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

priority=1

[Ceph-noarch]

name=Ceph noarch packages

baseurl=https://download.ceph.com/rpm-16.2.4/el8/noarch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

priority=1

[ceph-source]

name=Ceph source packages

baseurl=https://download.ceph.com/rpm-16.2.4/el8/SRPMS

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

priority=1

[root@ceph1 yum.repos.d]#

To switch to another version, open ceph.repo in vim, press ESC, enter the following substitution and press Enter:

:%s/16.2.4/15.2.12/g
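Because only the version in the three `baseurl` lines differs, the repo file can also be generated for a given release instead of edited in vim. The sketch prints a file equivalent to the `ceph.repo` shown above; `render_ceph_repo` is a hypothetical name, its version check is deliberately rough, and `\$basearch` is escaped so yum still sees the literal `$basearch`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a ceph.repo (el8) for a specific Ceph release,
# mirroring the file shown above. \$basearch stays literal for yum.
render_ceph_repo() {
  local ver=$1
  case $ver in
    [0-9]*.[0-9]*.[0-9]*) ;;   # rough X.Y.Z check
    *) echo "usage: render_ceph_repo X.Y.Z" >&2; return 1 ;;
  esac
  local base="https://download.ceph.com/rpm-$ver/el8"
  cat <<EOF
[Ceph]
name=Ceph packages for \$basearch
baseurl=$base/\$basearch
enabled=1
gpgcheck=0
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc
priority=1

[Ceph-noarch]
name=Ceph noarch packages
baseurl=$base/noarch
enabled=1
gpgcheck=0
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc
priority=1

[ceph-source]
name=Ceph source packages
baseurl=$base/SRPMS
enabled=1
gpgcheck=0
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc
priority=1
EOF
}
```

Usage: `render_ceph_repo 15.2.12 > /etc/yum.repos.d/ceph.repo && yum makecache`.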

Build the cache:

yum makecache

Install Ceph:

yum install ceph -y

Verify that Ceph is installed:

[root@node1 ~]# ceph -v

ceph version 15.2.12 (ce065eabfa5ce81323b009786bdf5bb03127cbe1) octopus (stable)

[root@node1 ~]#

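5. Install Docker (on all three machines). CentOS 8 ships Podman by default; cephadm works with either Podman or Docker.

Official documentation: https://docs.docker.com/engine/install/centos/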

5.1 Install the required package:

$ yum install -y yum-utils

5.2 Add the stable repository (Aliyun mirror):

$ yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

5.3 Build the cache:

yum makecache

5.4 Install Docker CE:

$ yum install docker-ce docker-ce-cli containerd.io docker-compose-plugin

5.5 Configure the Aliyun registry mirror:

$ mkdir -p /etc/docker

$ tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://fxt824bw.mirror.aliyuncs.com"]

}

EOF

5.6 Verify the Docker installation:

$ docker -v

Docker version 20.10.18, build b40c2f6

5.7 Start and enable Docker:

$ systemctl start docker && systemctl enable docker
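Before bootstrapping, `cephadm` checks for a container runtime (podman or docker), `lvcreate` from lvm2, `systemctl` and time synchronization, as the start of its bootstrap output shows. A quick pre-flight sketch to run on each node; `cephadm_preflight` is a hypothetical name, it only checks that the commands are on `PATH` (not that the services are running), and it uses `chronyc` as a stand-in for the time-sync check.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check mirroring the host checks cephadm prints:
# a container runtime, lvm2 (lvcreate), systemctl and chrony (chronyc).
# Prints each missing item and returns 1 if anything is missing.
cephadm_preflight() {
  local missing=0 cmd
  if ! command -v podman >/dev/null 2>&1 && ! command -v docker >/dev/null 2>&1; then
    echo "missing: podman or docker"
    missing=1
  fi
  for cmd in lvcreate systemctl chronyc; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `cephadm_preflight && echo "ready for cephadm"` on each of ceph1, ceph2 and ceph3.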

6. Install cephadm (on ceph1) and deploy the cluster:

6.1 Install cephadm:

yum install cephadm -y

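6.2 Bootstrap the new cluster:

$ cephadm bootstrap --mon-ip 172.30.3.61   # ceph1's IP

This step is slow because the container images have to be pulled from the registry.

# Output (captured on a different lab machine, hence the 192.168.150.120 address):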

Verifying podman|docker is present...

Verifying lvm2 is present...

Verifying time synchronization is in place...

Unit chronyd.service is enabled and running

Repeating the final host check...

podman|docker (/usr/bin/docker) is present

systemctl is present

lvcreate is present

Unit chronyd.service is enabled and running

Host looks OK

Cluster fsid: 09feacf4-4f13-11ed-a401-000c29d6f8f4

Verifying IP 192.168.150.120 port 3300 ...

Verifying IP 192.168.150.120 port 6789 ...

Mon IP 192.168.150.120 is in CIDR network 192.168.150.0/24

- internal network (--cluster-network) has not been provided, OSD replication will default to the public_network

Pulling container image docker.io/ceph/ceph:v16...

Ceph version: ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)

Extracting ceph user uid/gid from container image...

Creating initial keys...

Creating initial monmap...

Creating mon...

Waiting for mon to start...

Waiting for mon...

mon is available

Assimilating anything we can from ceph.conf...

Generating new minimal ceph.conf...

Restarting the monitor...

Setting mon public_network to 192.168.150.0/24

Wrote config to /etc/ceph/ceph.conf

Wrote keyring to /etc/ceph/ceph.client.admin.keyring

Creating mgr...

Verifying port 9283 ...

Waiting for mgr to start...

Waiting for mgr...

mgr not available, waiting (1/15)...

mgr not available, waiting (2/15)...

mgr not available, waiting (3/15)...

mgr not available, waiting (4/15)...

mgr is available

Enabling cephadm module...

Waiting for the mgr to restart...

Waiting for mgr epoch 5...

mgr epoch 5 is available

Setting orchestrator backend to cephadm...

Generating ssh key...

Wrote public SSH key to /etc/ceph/ceph.pub

Adding key to root@localhost authorized_keys...

Adding host ceph1...

Deploying mon service with default placement...

Deploying mgr service with default placement...

Deploying crash service with default placement...

Enabling mgr prometheus module...

Deploying prometheus service with default placement...

Deploying grafana service with default placement...

Deploying node-exporter service with default placement...

Deploying alertmanager service with default placement...

Enabling the dashboard module...

Waiting for the mgr to restart...

Waiting for mgr epoch 13...

mgr epoch 13 is available

Generating a dashboard self-signed certificate...

Creating initial admin user...

Fetching dashboard port number...

Ceph Dashboard is now available at:

URL: https://ceph1:8443/

User: admin

Password: mo5ahyp1wx

You can access the Ceph CLI with:

sudo /usr/sbin/cephadm shell --fsid 09feacf4-4f13-11ed-a401-000c29d6f8f4 -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

Please consider enabling telemetry to help improve Ceph:

ceph telemetry on

For more information see:

https://docs.ceph.com/docs/pacific/mgr/telemetry/

Bootstrap complete.

Images pulled:

[root@ceph1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

ceph/ceph-grafana 6.7.4 557c83e11646 22 months ago 486MB

ceph/ceph v16 6933c2a0b7dd 23 months ago 1.2GB

prom/prometheus v2.18.1 de242295e225 3 years ago 140MB

prom/alertmanager v0.20.0 0881eb8f169f 3 years ago 52.1MB

prom/node-exporter v0.18.1 e5a616e4b9cf 4 years ago 22.9MB

[root@ceph1 ~]#
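The bootstrap output reports 16.2.5 and the image list shows the floating `ceph/ceph:v16` tag, even though the repo was pinned to 16.2.4: the daemons run from the container image, and `cephadm` pulls its default tag unless told otherwise. To pin the daemons to an exact release, `cephadm` accepts a global `--image` option before the subcommand. The sketch below only prints such a command; the `docker.io/ceph/ceph` repository and the `vX.Y.Z` tag format are assumptions to check against the registry (newer images are published under `quay.io/ceph/ceph`), and `bootstrap_cmd` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (not run) a bootstrap command pinned to one
# container image version. The image repository and tag format are
# assumptions; check which tags actually exist in your registry first.
bootstrap_cmd() {
  local version=$1 mon_ip=$2 repo=${3:-docker.io/ceph/ceph}
  if [ -z "$version" ] || [ -z "$mon_ip" ]; then
    echo "usage: bootstrap_cmd VERSION MON_IP [IMAGE_REPO]" >&2
    return 1
  fi
  printf 'cephadm --image %s:v%s bootstrap --mon-ip %s\n' "$repo" "$version" "$mon_ip"
}
```

Review the printed line, for example from `bootstrap_cmd 15.2.12 172.30.3.61`, before running it on ceph1.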

Open the URL in a browser and log in to the dashboard with the user name and password shown above.

6.3 Add the other hosts to the cluster:

Enter the Ceph CLI:

cephadm shell

Install the cluster's public SSH key on the new hosts:

$ ceph cephadm get-pub-key > ~/ceph.pub

$ ssh-copy-id -f -i ~/ceph.pub root@ceph2

$ ssh-copy-id -f -i ~/ceph.pub root@ceph3

Add the new hosts:

$ ceph orch host add ceph2

$ ceph orch host add ceph3

If a host cannot be added by name, append its IP address:

$ ceph orch host add ceph2 172.30.3.62

$ ceph orch host add ceph3 172.30.3.63
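With more hosts, the `ceph orch host add NAME IP` commands can be derived from the `/etc/hosts` entries written in 4.6. This sketch only prints the commands for review; `host_add_cmds` is a hypothetical name, it skips comments, IPv6 and `localhost` lines, and the bootstrap host (already in the cluster) is passed as an argument so it is skipped too.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn "IP NAME" lines (hosts-file format) on stdin into
# "ceph orch host add NAME IP" commands, skipping the host given as $1.
# It only prints the commands; review them before running them.
host_add_cmds() {
  local skip=$1
  awk -v skip="$skip" '
    NF == 0 || $1 ~ /^#/ { next }                      # blank and comment lines
    $1 ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/ && $2 != "localhost" && $2 != skip {
      printf "ceph orch host add %s %s\n", $2, $1
    }
  '
}
```

Usage: `host_add_cmds ceph1 < /etc/hosts` on ceph1, then run the printed lines in `cephadm shell`.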

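Wait a while (this can take a long time, because each new host has to pull the required images and start its containers), then check the cluster status: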

$ ceph -s

cluster:

id: 09feacf4-4f13-11ed-a401-000c29d6f8f4

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 3

services:

mon: 3 daemons, quorum ceph1,ceph2,ceph3 (age 90s)

mgr: ceph1.sqfwyo(active, since 22m), standbys: ceph2.mwhuqa

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

As the status shows, mon and mgr daemons are deployed automatically when new hosts join the cluster.
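Instead of re-running `ceph -s` by hand while the new hosts pull images, a polling sketch can wait for a given health state. With no OSDs yet the cluster stays in `HEALTH_WARN` (as in the status above), so waiting for `HEALTH_OK` only makes sense once the OSDs in 6.4 are up. `ceph_health_from_status` and `wait_for_health` are hypothetical helpers; the status command is passed in so the parsing can be tested without a cluster.

```shell
#!/usr/bin/env bash
# Print the health token (HEALTH_OK / HEALTH_WARN / HEALTH_ERR) from
# `ceph -s` text on stdin. awk's default splitting ignores the indentation.
ceph_health_from_status() {
  awk '$1 == "health:" { print $2; exit }'
}

# Poll a status command until it reports the wanted health.
# Usage: wait_for_health WANT MAX_TRIES SLEEP_SECS CMD [ARGS...]
wait_for_health() {
  local want=$1 tries=$2 delay=$3 i=1 health
  shift 3
  while [ "$i" -le "$tries" ]; do
    health=$("$@" | ceph_health_from_status)
    [ "$health" = "$want" ] && return 0
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}
```

Usage: `wait_for_health HEALTH_OK 60 10 ceph -s` polls up to 60 times, 10 seconds apart, and returns non-zero if the cluster never reaches `HEALTH_OK`.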

6.4 Deploy the OSDs:

Check the disks and partitions:

$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sr0 11:0 1 10.1G 0 rom

nvme0n1 259:0 0 20G 0 disk

|-nvme0n1p1 259:1 0 1G 0 part

`-nvme0n1p2 259:2 0 19G 0 part

|-cl-root 253:0 0 17G 0 lvm /var/lib/ceph/crash

`-cl-swap 253:1 0 2G 0 lvm [SWAP]

nvme0n2 259:3 0 10G 0 disk

Add every free disk in the cluster as an OSD:

$ ceph orch daemon add osd ceph1:/dev/nvme0n2

Created osd(s) 0 on host 'ceph1'

$ ceph orch daemon add osd ceph2:/dev/nvme0n2

Created osd(s) 3 on host 'ceph2'

$ ceph orch daemon add osd ceph3:/dev/nvme0n2

Created osd(s) 6 on host 'ceph3'
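`ceph orch device ls` is the authoritative list of devices cephadm considers available (no partitions, no LVM state, not mounted, no filesystem, larger than 5 GB), and `ceph orch apply osd --all-available-devices` would consume all of them at once. As a quick cross-check on a single node, this sketch lists whole disks from `lsblk` that have nothing on top of them; `free_disks_from_lsblk` is a hypothetical name and it does not apply cephadm's other criteria.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `lsblk -rno NAME,TYPE,PKNAME` output on stdin and
# print whole disks that no other device names as its parent (PKNAME),
# i.e. disks without partitions, LVM volumes or other holders.
free_disks_from_lsblk() {
  awk '
    { type[$1] = $2; if ($3 != "") has_child[$3] = 1 }
    END { for (d in type) if (type[d] == "disk" && !(d in has_child)) print "/dev/" d }
  ' | sort
}
```

Usage: `lsblk -rno NAME,TYPE,PKNAME | free_disks_from_lsblk` prints `/dev/nvme0n2` for the layout shown above.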

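Check the cluster status (it shows 9 OSDs and 90 GiB, so the author's hosts evidently had three 10 GiB data disks each, not only the one listed above):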

$ ceph -s

cluster:

id: 09feacf4-4f13-11ed-a401-000c29d6f8f4

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph1,ceph2,ceph3 (age 23m)

mgr: ceph2.mwhuqa(active, since 13m), standbys: ceph1.sqfwyo

osd: 9 osds: 9 up (since 7m), 9 in (since 8m)

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 48 MiB used, 90 GiB / 90 GiB avail

pgs: 1 active+clean


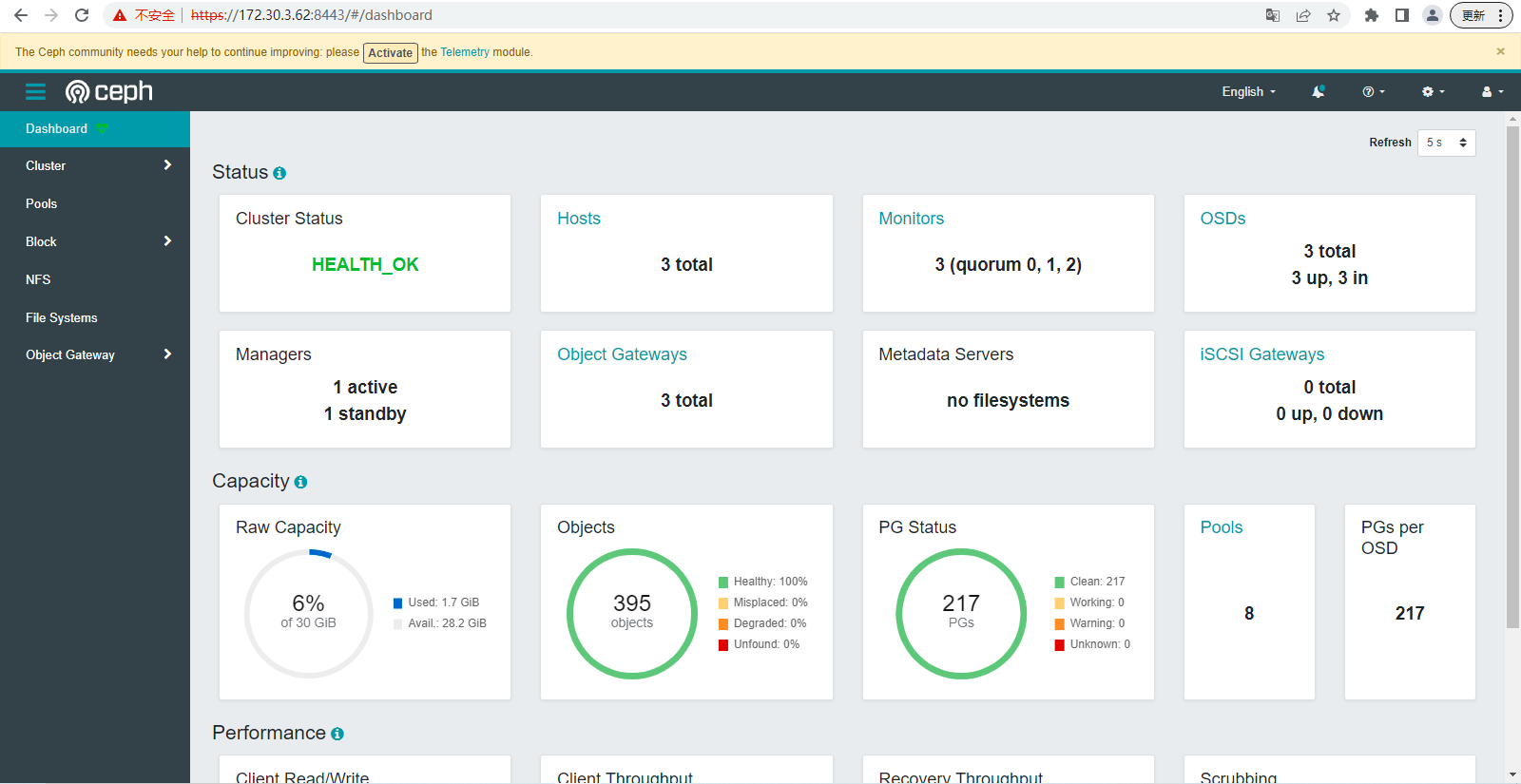

7. Verify the cluster in the dashboard:

8. Deploy RGW (S3)

8.1 Ceph version 15.2.12

8.1.1 Create the pools and set <pg_num> and <pgp_num>:

ceph osd pool create cn-beijing.rgw.buckets.index 64 64 replicated

ceph osd pool create cn-beijing.rgw.buckets.data 128 128 replicated

ceph osd pool create cn-beijing.rgw.buckets.non-ec 32 32 replicated   # the run below used 64 64

[root@node1 ~]# ceph osd pool create cn-beijing.rgw.buckets.index 64 64 replicated

pool 'cn-beijing.rgw.buckets.index' created

[root@node1 ~]# ceph osd pool create cn-beijing.rgw.buckets.data 128 128 replicated

pool 'cn-beijing.rgw.buckets.data' created

[root@node1 ~]# ceph osd pool create cn-beijing.rgw.buckets.non-ec 64 64 replicated

pool 'cn-beijing.rgw.buckets.non-ec' created
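The pg_num values above are chosen by hand (64 + 128 + 64 = 256 PGs for the three RGW pools). A common rule of thumb from the older Ceph placement-group guidance is a total of about (OSD count × 100) / replica size, rounded up to a power of two, shared across all pools in proportion to their expected data. The sketch computes that total; `pg_total_suggestion` is a hypothetical name. The `pg_autoscaler` mgr module can also manage pg_num, and `ceph osd pool autoscale-status` shows its recommendations.

```shell
#!/usr/bin/env bash
# Rule-of-thumb PG budget from the older Ceph placement-group guidance:
#   total PGs ~= (OSD count * 100) / replica size, rounded up to a power of two.
# The result is a budget shared by all pools, not a per-pool value.
pg_total_suggestion() {
  local osds=$1 size=$2 target pg=1
  if [ "${osds:-0}" -le 0 ] || [ "${size:-0}" -le 0 ]; then
    echo "usage: pg_total_suggestion OSDS SIZE" >&2
    return 1
  fi
  target=$(( (osds * 100 + size - 1) / size ))   # ceiling division
  while [ "$pg" -lt "$target" ]; do
    pg=$((pg * 2))
  done
  echo "$pg"
}
```

For the 9 OSDs and 3 replicas in this cluster, `pg_total_suggestion 9 3` prints 512.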

8.1.2 Create the realm, zonegroup and zone. First, create a new realm:

radosgw-admin realm create --rgw-realm=YOUJIVEST.COM --default

[root@ceph1 ~]# radosgw-admin realm create --rgw-realm=YOUJIVEST.COM --default

{

"id": "6ed9dc1d-8eb4-4c32-9d12-473f13e4835a",

"name": "YOUJIVEST.COM",

"current_period": "8cf9f6e2-005a-4cd2-b0ec-58f634ce66bc",

"epoch": 1

}

8.1.3 Create a new zonegroup:

radosgw-admin zonegroup create --rgw-zonegroup=cn --rgw-realm=YOUJIVEST.COM --master --default

[root@ceph1 ~]# radosgw-admin zonegroup create --rgw-zonegroup=cn --rgw-realm=YOUJIVEST.COM --master --default

{

"id": "38727586-d0ad-4df7-af2a-4bba2cbff495",

"name": "cn",

"api_name": "cn",

"is_master": "true",

"endpoints": [],

"hostnames": [],

"hostnames_s3website": [],

"master_zone": "",

"zones": [],

"placement_targets": [],

"default_placement": "",

"realm_id": "6ed9dc1d-8eb4-4c32-9d12-473f13e4835a",

"sync_policy": {

"groups": []

}

}

8.1.4 Create the zone (--rgw-zone is the name of the zone radosgw runs in):

radosgw-admin zone create --rgw-zonegroup=cn --rgw-zone=cn-beijing --master --default

[root@ceph1 ~]# radosgw-admin zone create --rgw-zonegroup=cn --rgw-zone=cn-beijing --master --default

{

"id": "cc642b93-8b41-43c8-aa10-863a057401f1",

"name": "cn-beijing",

"domain_root": "cn-beijing.rgw.meta:root",

"control_pool": "cn-beijing.rgw.control",

"gc_pool": "cn-beijing.rgw.log:gc",

"lc_pool": "cn-beijing.rgw.log:lc",

"log_pool": "cn-beijing.rgw.log",

"intent_log_pool": "cn-beijing.rgw.log:intent",

"usage_log_pool": "cn-beijing.rgw.log:usage",

"roles_pool": "cn-beijing.rgw.meta:roles",

"reshard_pool": "cn-beijing.rgw.log:reshard",

"user_keys_pool": "cn-beijing.rgw.meta:users.keys",

"user_email_pool": "cn-beijing.rgw.meta:users.email",

"user_swift_pool": "cn-beijing.rgw.meta:users.swift",

"user_uid_pool": "cn-beijing.rgw.meta:users.uid",

"otp_pool": "cn-beijing.rgw.otp",

"system_key": {

"access_key": "",

"secret_key": ""

},

"placement_pools": [

{

"key": "default-placement",

"val": {

"index_pool": "cn-beijing.rgw.buckets.index",

"storage_classes": {

"STANDARD": {

"data_pool": "cn-beijing.rgw.buckets.data"

}

},

"data_extra_pool": "cn-beijing.rgw.buckets.non-ec",

"index_type": 0

}

}

],

"realm_id": "6ed9dc1d-8eb4-4c32-9d12-473f13e4835a",

"notif_pool": "cn-beijing.rgw.log:notif"

}

8.1.5 List the zones, zonegroups and realms:

[root@ceph1 ~]# radosgw-admin zone list

{

"default_info": "cc642b93-8b41-43c8-aa10-863a057401f1",

"zones": [

"cn-beijing"

]

}

[root@ceph1 ~]# radosgw-admin zonegroup list

{

"default_info": "38727586-d0ad-4df7-af2a-4bba2cbff495",

"zonegroups": [

"cn"

]

}

[root@ceph1 ~]# radosgw-admin realm list

{

"default_info": "6ed9dc1d-8eb4-4c32-9d12-473f13e4835a",

"realms": [

"YOUJIVEST.COM"

]

}
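The Octopus cephadm guide for deploying RGW follows the realm, zonegroup and zone creation with a period commit (`radosgw-admin period update --rgw-realm=<realm> --commit`), which the steps above do not show. The sketch prints the whole single-zone sequence, including that commit, for review; `rgw_single_zone_cmds` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the radosgw-admin commands for a single-zone
# setup (realm -> zonegroup -> zone), followed by a period commit so the
# new configuration becomes the current period. Review before running.
rgw_single_zone_cmds() {
  local realm=$1 zonegroup=$2 zone=$3
  if [ -z "$realm" ] || [ -z "$zonegroup" ] || [ -z "$zone" ]; then
    echo "usage: rgw_single_zone_cmds REALM ZONEGROUP ZONE" >&2
    return 1
  fi
  cat <<EOF
radosgw-admin realm create --rgw-realm=$realm --default
radosgw-admin zonegroup create --rgw-zonegroup=$zonegroup --rgw-realm=$realm --master --default
radosgw-admin zone create --rgw-zonegroup=$zonegroup --rgw-zone=$zone --master --default
radosgw-admin period update --rgw-realm=$realm --commit
EOF
}
```

Usage: `rgw_single_zone_cmds YOUJIVEST.COM cn cn-beijing` prints the four commands for this cluster.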

8.1.6 Configure SSL for S3 (store the certificate and key in the config-key store):

[root@node1 ~]# ceph config-key set rgw/cert/YOUJIVEST.COM/cn-beijing.crt -i /etc/letsencrypt/live/s3.youjivest.com/fullchain.pem

set rgw/cert/YOUJIVEST.COM/cn-beijing.crt

[root@node1 ~]# ceph config-key set rgw/cert/YOUJIVEST.COM/cn-beijing.key -i /etc/letsencrypt/live/s3.youjivest.com/privkey.pem

set rgw/cert/YOUJIVEST.COM/cn-beijing.key

8.1.7 Enable the rgw application on the pools:

ceph osd pool application enable cn-beijing.rgw.buckets.index rgw

ceph osd pool application enable cn-beijing.rgw.buckets.data rgw

ceph osd pool application enable cn-beijing.rgw.buckets.non-ec rgw

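8.1.8 Deploy the RGW (S3) service and verify it with curl:

[root@node1 ~]# ceph orch apply rgw YOUJIVEST.COM cn-beijing --placement=3 --ssl

Variant with an explicit service name and the three hosts listed (check which form your release accepts):

ceph orch apply rgw rgw.YOUJIVEST.COM.cn-beijing --realm=YOUJIVEST.COM --zone=cn-beijing --placement="3 ceph1 ceph2 ceph3" --ssl

# curl the domain name that resolves to the RGW hosts (host:port)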

[root@node1 ~]# curl https://s3.youjivest.com

<?xml version="1.0" encoding="UTF-8"?><ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/"><Owner><ID>anonymous</ID><DisplayName></DisplayName></Owner><Buckets></Buckets></ListAllMyBucketsResult>[root@node1 ~]#

# Access from a browser

8.1.9 Create the access key and secret key for external access:

radosgw-admin user create --uid=esg --display-name='ESG User' --email=chenhu@youjivest.com

[root@node1 ~]# radosgw-admin user create --uid=esg --display-name='ESG User' --email=lvfaguo@youjivest.com

{

"user_id": "esg",

"display_name": "ESG User",

"email": "chenhu@youjivest.com",

"suspended": 0,

"max_buckets": 1000,

"subusers": [],

"keys": [

{

"user": "esg",

"access_key": "9Y3D6KQISHSURJHOUL8Z",

"secret_key": "gDk7TXLoTgAxBDwxfBAxgt4VTJrbcb82AlMvzu91"

}

],

"swift_keys": [],

"caps": [],

"op_mask": "read, write, delete",

"default_placement": "",

"default_storage_class": "",

"placement_tags": [],

"bucket_quota": {

"enabled": false,

"check_on_raw": false,

"max_size": -1,

"max_size_kb": 0,

"max_objects": -1

},

"user_quota": {

"enabled": false,

"check_on_raw": false,

"max_size": -1,

"max_size_kb": 0,

"max_objects": -1

},

"temp_url_keys": [],

"type": "rgw",

"mfa_ids": []

}

8.1.10 Grant the esg user the buckets=* capability (admin-API access to all buckets):

radosgw-admin caps add --uid=esg --caps="buckets=*"

[root@node1 ~]# radosgw-admin caps add --uid=esg --caps="buckets=*"

{

"user_id": "esg",

"display_name": "ESG User",

"email": "lvfaguo@youjivest.com",

"suspended": 0,

"max_buckets": 1000,

"subusers": [],

"keys": [

{

"user": "esg",

"access_key": "9Y3D6KQISHSURJHOUL8Z",

"secret_key": "gDk7TXLoTgAxBDwxfBAxgt4VTJrbcb82AlMvzu91"

}

],

"swift_keys": [],

"caps": [

{

"type": "buckets",

"perm": "*"

}

],

"op_mask": "read, write, delete",

"default_placement": "",

"default_storage_class": "",

"placement_tags": [],

"bucket_quota": {

"enabled": false,

"check_on_raw": false,

"max_size": -1,

"max_size_kb": 0,

"max_objects": -1

},

"user_quota": {

"enabled": false,

"check_on_raw": false,

"max_size": -1,

"max_size_kb": 0,

"max_objects": -1

},

"temp_url_keys": [],

"type": "rgw",

"mfa_ids": []

}

8.1.11 Save the user's keys for external S3 access:

[root@node1 ~]# cat key

9Y3D6KQISHSURJHOUL8Z

[root@node1 ~]# cat secret

gDk7TXLoTgAxBDwxfBAxgt4VTJrbcb82AlMvzu91

[root@node1 ~]#
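The saved key and secret can be turned into an AWS CLI credentials profile so that standard S3 clients can talk to RGW. A minimal sketch; `write_s3_profile` and the default profile name `ceph-rgw` are hypothetical, and it appends a new profile each time it runs, so run it once per profile.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn the saved key/secret files into an AWS CLI
# credentials profile. Whitespace (e.g. trailing newlines) is stripped.
write_s3_profile() {
  local key_file=$1 secret_file=$2 out=$3 profile=${4:-ceph-rgw}
  local key secret
  key=$(tr -d '[:space:]' < "$key_file") || return 1
  secret=$(tr -d '[:space:]' < "$secret_file") || return 1
  if [ -z "$key" ] || [ -z "$secret" ]; then
    echo "empty key or secret" >&2
    return 1
  fi
  mkdir -p "$(dirname "$out")"
  {
    printf '[%s]\n' "$profile"
    printf 'aws_access_key_id = %s\n' "$key"
    printf 'aws_secret_access_key = %s\n' "$secret"
  } >> "$out"
  chmod 600 "$out"   # the file holds a secret
}
```

Usage: `write_s3_profile key secret ~/.aws/credentials`, then `aws --profile ceph-rgw --endpoint-url https://s3.youjivest.com s3 ls`.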

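8.2 Ceph version 16.2.4

All other steps are the same; only the syntax for creating the RGW service differs between the versions.

# Create the rgw service: YOUJIVEST.COM.cn-beijing is the service name (shown by ceph orch ls), YOUJIVEST.COM is the realm and cn-beijing is the zone

ceph orch apply rgw YOUJIVEST.COM.cn-beijing YOUJIVEST.COM cn-beijing --placement=label:rgw --port=8000

The label:rgw placement only selects hosts that carry the rgw label, for example: ceph orch host label add ceph1 rgw (repeat for ceph2 and ceph3).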
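The same service can also be described as a cephadm service spec and applied with `ceph orch apply -i`, which keeps the settings in a file. The sketch writes such a spec; the field names (`rgw_realm`, `rgw_zone`, `rgw_frontend_port`, label placement) are believed to follow the Pacific RGW spec format but should be checked against the documentation for your release, and `write_rgw_spec` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a cephadm RGW service spec with the same
# service id, realm, zone, port and label-based placement as the command above.
write_rgw_spec() {
  local out=$1 svc_id=$2 realm=$3 zone=$4 port=${5:-8000} label=${6:-rgw}
  if [ -z "$out" ] || [ -z "$svc_id" ] || [ -z "$realm" ] || [ -z "$zone" ]; then
    echo "usage: write_rgw_spec FILE SERVICE_ID REALM ZONE [PORT] [LABEL]" >&2
    return 1
  fi
  cat > "$out" <<EOF
service_type: rgw
service_id: $svc_id
placement:
  label: $label
spec:
  rgw_realm: $realm
  rgw_zone: $zone
  rgw_frontend_port: $port
EOF
}
```

Usage: `write_rgw_spec rgw.yaml YOUJIVEST.COM.cn-beijing YOUJIVEST.COM cn-beijing 8000 && ceph orch apply -i rgw.yaml`, with the hosts labelled `rgw` beforehand.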

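9. Handling production issues

9.1 Ceph 15.2.12: what to do when the RGW SSL certificate has expired

On Ceph 15.2.12, storing a renewed RGW (object gateway) SSL certificate with ceph config-key set does not take effect automatically, even when the certificate on the server is already the new one: the RGW service has to be restarted to apply the change.

To make the new certificate take effect:

1. Store the new certificate in Ceph's config-key store with ceph config-key set, making sure the file paths are correct. If the private key was regenerated during renewal, store it as well:

ceph config-key set rgw/cert/YOUJIVEST.COM/cn-beijing.crt -i /etc/letsencrypt/live/s3.youjivest.com/fullchain.pem

ceph config-key set rgw/cert/YOUJIVEST.COM/cn-beijing.key -i /etc/letsencrypt/live/s3.youjivest.com/privkey.pem

2. Restart the RGW service through the orchestrator so that it loads the new certificate:

ceph orch restart rgw.<realm>.<zone>

Replace <realm> with the realm name and <zone> with the zone name of the RGW service; for the cluster in this article that is ceph orch restart rgw.YOUJIVEST.COM.cn-beijing. ceph orch ls lists the exact service names.

3. After the restart RGW serves the new certificate. To verify, connect with openssl s_client and inspect the certificate details:

openssl s_client -connect <rgw_host>:<rgw_port>

Replace <rgw_host> with the RGW host name or IP address and <rgw_port> with the TLS port RGW listens on (443 for an SSL endpoint unless another port was configured).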
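Steps 1 and 2 can be combined into one helper that certbot runs after each renewal: certbot's `--deploy-hook` runs a command after a successful renewal and sets `RENEWED_LINEAGE` to the live directory (for example `/etc/letsencrypt/live/s3.youjivest.com`). The sketch below is hypothetical (`refresh_rgw_cert` is not a Ceph or certbot command); it assumes the `ceph` CLI and admin keyring are available where certbot runs, and the `CEPH` variable lets the commands be echoed for a dry run.

```shell
#!/usr/bin/env bash
# Hypothetical helper for a certbot deploy hook: copy the renewed certificate
# and key into the config-key entries used in 8.1.6, then restart RGW.
# Set CEPH=echo to print the commands instead of running them.
refresh_rgw_cert() {
  local live_dir=$1 realm=$2 zone=$3 ceph=${CEPH:-ceph} f
  if [ -z "$live_dir" ] || [ -z "$realm" ] || [ -z "$zone" ]; then
    echo "usage: refresh_rgw_cert LIVE_DIR REALM ZONE" >&2
    return 1
  fi
  for f in fullchain.pem privkey.pem; do
    [ -r "$live_dir/$f" ] || { echo "cannot read $live_dir/$f" >&2; return 1; }
  done
  $ceph config-key set "rgw/cert/$realm/$zone.crt" -i "$live_dir/fullchain.pem" || return 1
  $ceph config-key set "rgw/cert/$realm/$zone.key" -i "$live_dir/privkey.pem" || return 1
  $ceph orch restart "rgw.$realm.$zone"
}
```

Save the function in a script that calls `refresh_rgw_cert "$RENEWED_LINEAGE" YOUJIVEST.COM cn-beijing` and pass that script to `certbot renew --deploy-hook`. To check the certificate RGW actually serves afterwards: `openssl s_client -connect s3.youjivest.com:443 -servername s3.youjivest.com </dev/null 2>/dev/null | openssl x509 -noout -dates`.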

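9.2 What to do when the OSDs are full

Method 1: change the pool replica count

Check a pool's replica count:

1. List the current pools:

ceph osd lspools

This prints each pool's ID and name.

2. Pick the pool you want to check and note its name (or ID).

3. Query its replica count:

ceph osd pool get <pool_name> size

Replace <pool_name> with the name of the pool; the command prints the pool's replica count.

Change the replica count:

1. Set the new replica count with:

ceph osd pool set <pool_name> size <new_size>

Replace <pool_name> with the pool to modify and <new_size> with the new number of replicas.

For example, to reduce pool "my_pool" from 3 replicas to 2, which cuts the raw space it consumes by about one third:

ceph osd pool set my_pool size 2

Changing the replica count trades redundancy for capacity: with size 2 only two copies of each object remain. Check the pool's min_size as well (ceph osd pool get <pool_name> min_size), and make sure you understand the effect on capacity and performance before making the change.

The change also triggers data movement while copies are removed or created, which takes time and adds load to the cluster; run it at a suitable time and take the affected applications and services into account.

For more detail, see the official Ceph documentation.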
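To see what a replica change buys, usable capacity can be estimated as raw capacity × full ratio / size; Ceph's default `mon_osd_full_ratio` is 0.95 (with a `HEALTH_WARN` at the default 0.85 nearfull ratio). For the 90 GiB cluster above this gives about 28 GiB at size 3 and 42 GiB at size 2. A rough sketch in integer GiB; `usable_gib` is a hypothetical name. In practice the cluster stops accepting writes as soon as the fullest OSD reaches the ratio, so uneven data placement lowers the real figure; `ceph df` reports a per-pool MAX AVAIL that accounts for this.

```shell
#!/usr/bin/env bash
# Rough usable capacity (integer GiB) of a replicated layout:
#   usable ~= raw * full_ratio / size
# full_pct defaults to 95, matching Ceph's default mon_osd_full_ratio of 0.95.
usable_gib() {
  local raw=$1 size=$2 full_pct=${3:-95}
  if [ "${size:-0}" -le 0 ]; then
    echo "size must be > 0" >&2
    return 1
  fi
  echo $(( raw * full_pct / 100 / size ))
}
```

Usage: `usable_gib 90 3` prints 28 and `usable_gib 90 2` prints 42.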

Method 2: buy more disks and expand the cluster

For detailed steps, see:

https://www.cnblogs.com/andy996/p/17448038.html
