Coursera, Deep Learning 1, Neural Networks and Deep Learning - week3, Neural Networks Basics

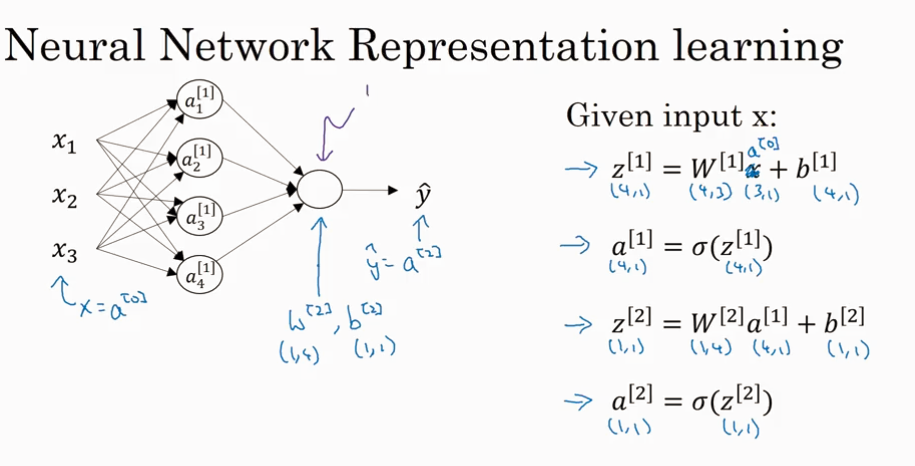

NN representation

这一课主要是讲3层神经网络

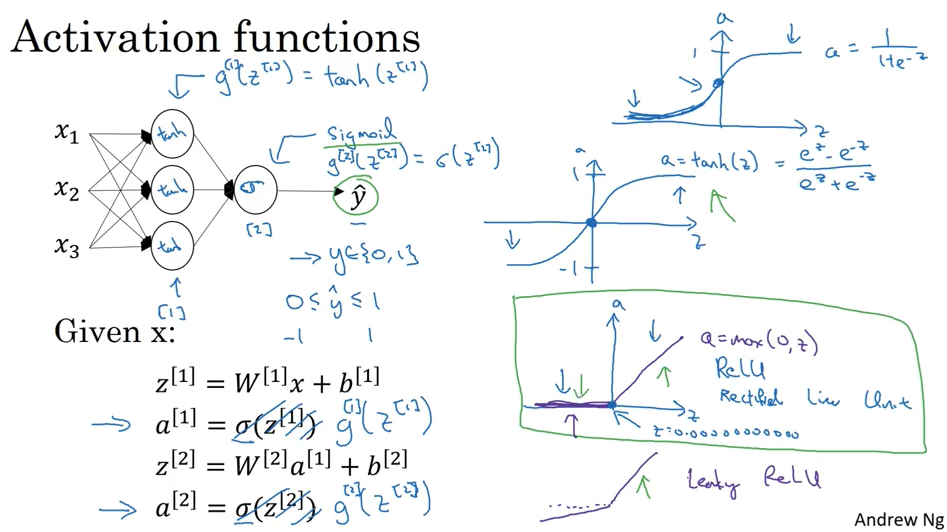

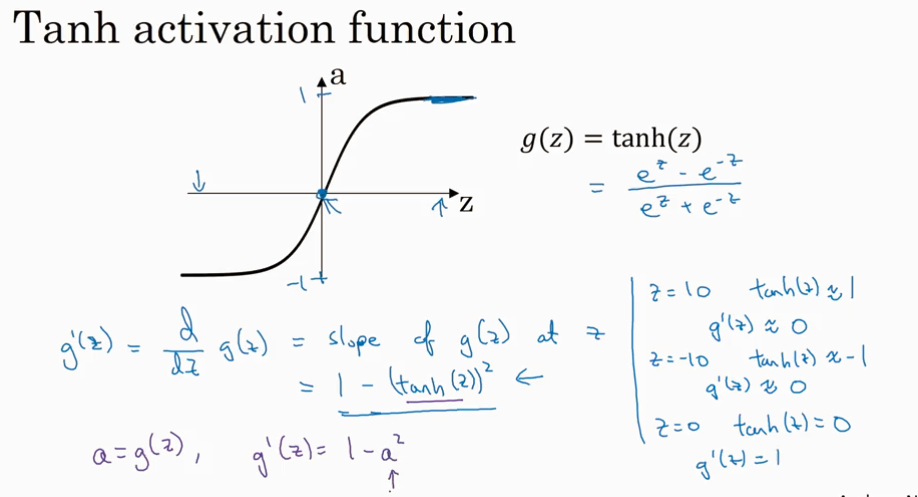

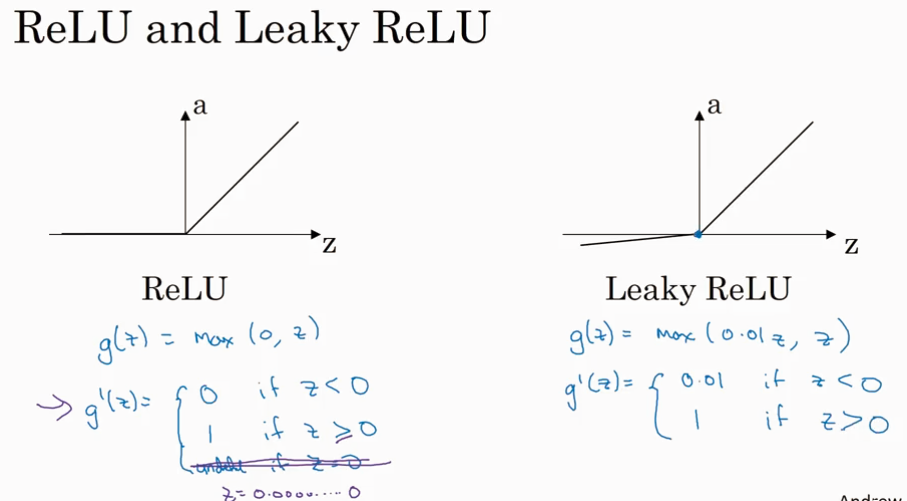

下面是常见的 activation 函数.sigmoid, tanh, ReLU, leaky ReLU.

Sigmoid 只用在输出0/1 时候的output layer, 其他情况基本不用,因为tanh 总是比sigmoid 好.

两种 ReLU 使用起来总是要比sigmoid 和 tanh 快。ReLU 是最常用的 activation.

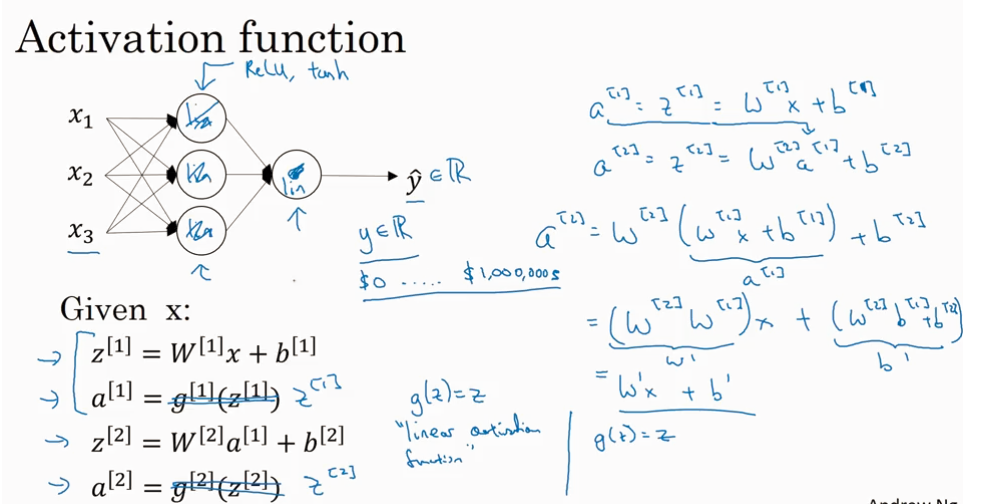

为什么Activation function 要是non-linear的?因为如下图所示如果activation 是linear的,那么最终output 只是 input 的线性函数.

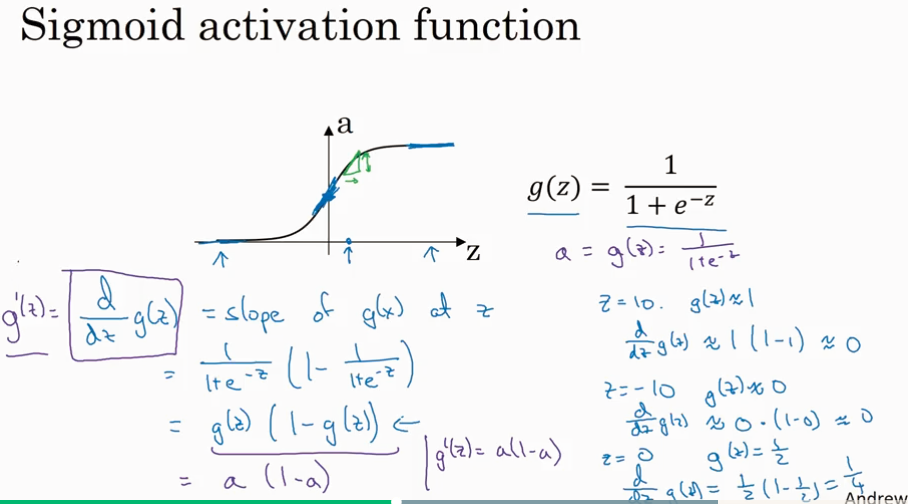

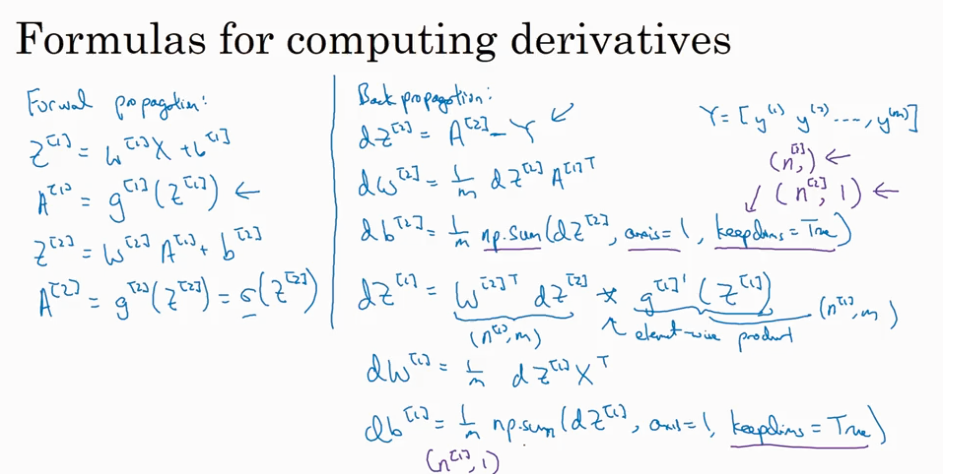

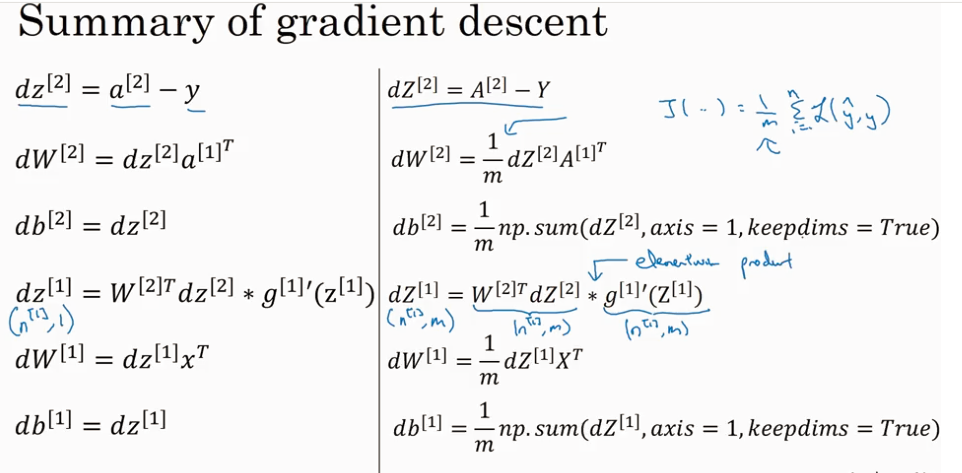

Gradient of activation function

Gredient of 2 layer NN.

Random initialization

Coursera, Deep Learning 1, Neural Networks and Deep Learning - week3, Neural Networks Basics的更多相关文章

- 【DeepLearning学习笔记】Coursera课程《Neural Networks and Deep Learning》——Week2 Neural Networks Basics课堂笔记

Coursera课程<Neural Networks and Deep Learning> deeplearning.ai Week2 Neural Networks Basics 2.1 ...

- 【DeepLearning学习笔记】Coursera课程《Neural Networks and Deep Learning》——Week1 Introduction to deep learning课堂笔记

Coursera课程<Neural Networks and Deep Learning> deeplearning.ai Week1 Introduction to deep learn ...

- 课程一(Neural Networks and Deep Learning),第四周(Deep Neural Networks) —— 3.Programming Assignments: Deep Neural Network - Application

Deep Neural Network - Application Congratulations! Welcome to the fourth programming exercise of the ...

- 课程一(Neural Networks and Deep Learning),第二周(Basics of Neural Network programming)—— 4、Logistic Regression with a Neural Network mindset

Logistic Regression with a Neural Network mindset Welcome to the first (required) programming exerci ...

- Neural Networks and Deep Learning

Neural Networks and Deep Learning This is the first course of the deep learning specialization at Co ...

- [C3] Andrew Ng - Neural Networks and Deep Learning

About this Course If you want to break into cutting-edge AI, this course will help you do so. Deep l ...

- 《Neural Networks and Deep Learning》课程笔记

Lesson 1 Neural Network and Deep Learning 这篇文章其实是 Coursera 上吴恩达老师的深度学习专业课程的第一门课程的课程笔记. 参考了其他人的笔记继续归纳 ...

- 第四节,Neural Networks and Deep Learning 一书小节(上)

最近花了半个多月把Mchiael Nielsen所写的Neural Networks and Deep Learning这本书看了一遍,受益匪浅. 该书英文原版地址地址:http://neuralne ...

- 课程四(Convolutional Neural Networks),第二 周(Deep convolutional models: case studies) —— 0.Learning Goals

Learning Goals Understand multiple foundational papers of convolutional neural networks Analyze the ...

- 课程一(Neural Networks and Deep Learning),第三周(Shallow neural networks)—— 3.Programming Assignment : Planar data classification with a hidden layer

Planar data classification with a hidden layer Welcome to the second programming exercise of the dee ...

随机推荐

- C# 中Web.config文件的读取与写入

asp.net2.0新添加了对web.config直接操作的功能.开发的时候有可能用到在web.config里设置配置文件,其实是可以通过程序来设置这些配置节的. asp.net2.0需要添加引用: ...

- (转)Java动态追踪技术探究

背景:美团的技术沙龙分享的文章都还是很不错的,通俗易懂,开阔视野,后面又机会要好好实践一番. Java动态追踪技术探究 楔子 jsp的修改 重新加载不需要重启servlet.如何在不重启jvm的情况下 ...

- JavaScript(JS)基本语法(一)

https://www.cnblogs.com/haiyan123/p/7577598.html 一.JavaScript的历史 1992年Nombas开发出C-minus-minus(C--)的嵌入 ...

- long long

1. ll a; scanf("%d",&a); 数据读入后,产生错误 2. const ll inf=1e18; 3. int * ll = ll ll * int = ...

- Day17--Python--面向对象--成员

成员 class Person: def __init__(self, name, num, gender,birthday): # 成员变量(实例变量) self.name = name self. ...

- Failed to execute goal org.apache.maven.plugins:maven-compiler-plugin:3.1:co

在pom中加入下面代码: <plugin> <groupId>org.apache.maven.plugins</groupId> <artifactId&g ...

- (贪心 map) Flying to the Mars hdu1800

Flying to the Mars Time Limit: 5000/1000 MS (Java/Others) Memory Limit: 32768/32768 K (Java/Other ...

- python异步编程之asyncio(百万并发)

前言:python由于GIL(全局锁)的存在,不能发挥多核的优势,其性能一直饱受诟病.然而在IO密集型的网络编程里,异步处理比同步处理能提升成百上千倍的效率,弥补了python性能方面的短板,如最 ...

- MySQL数据库服务器整体规划(思路与步骤)

MySQL数据库服务器整体规划(思路与步骤) 参考资料: http://blog.51cto.com/zhilight/1630611 我们在搭建MySQL数据库服务器的开始阶段就合理的规划,可以避免 ...

- 20165232 2017-2018-2《Java程序设计》课程总结

20165232 2017-2018-2<Java程序设计>课程总结 每周作业链接汇总: 我期望的师生关系 学习基础和c语言基础调查 预备作业3 Linux安装及学习 第一周学习总结 第二 ...