Coursera Deep Learning 2 Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization - week3, Hyperparameter tuning, Batch Normalization and Programming Frameworks

Tuning process

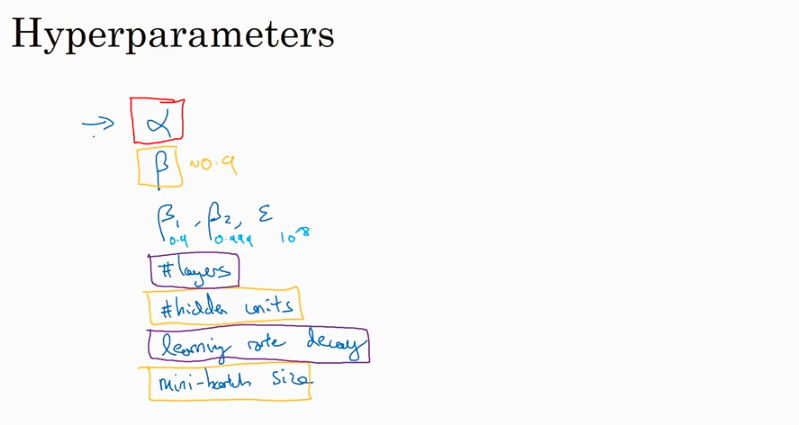

In the figure below, the hyperparameters to tune are prioritized as red > yellow > purple; the remaining ones are rarely tuned.

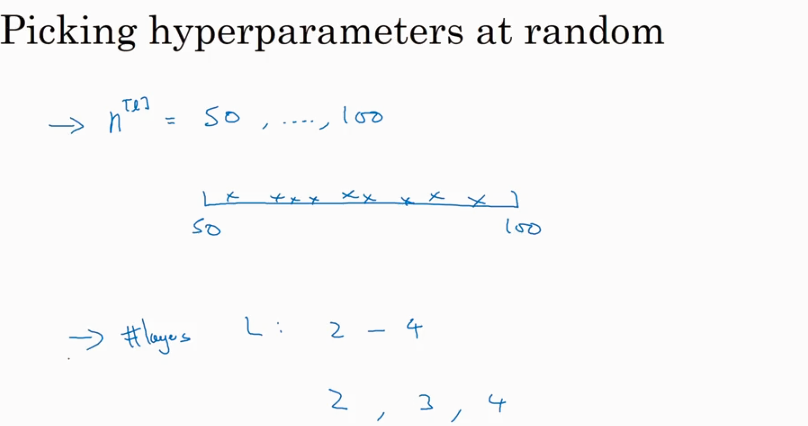

The lecture starts with how to pick hyperparameter values: sample them at random rather than on a regular grid.

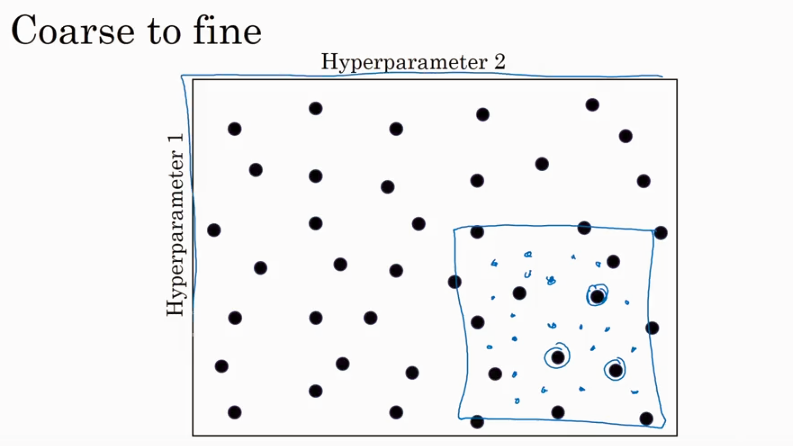

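While sampling at random, use a coarse-to-fine scheme to narrow down the values step by step.

Some hyperparameters can be sampled uniformly at random on a linear scale, for example the number of hidden units n[l].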
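The coarse-to-fine idea can be sketched as follows. This is only an illustration under stated assumptions: `toy_score` is a hypothetical stand-in for a dev-set score, the hyperparameter names `h1` and `h2` are made up, and the shrink factor of 0.25 per round is an arbitrary choice, not something from the lecture.

```python
import random

def sample_points(bounds, n, rng):
    """Draw n random points; bounds maps name -> (low, high)."""
    return [{k: rng.uniform(lo, hi) for k, (lo, hi) in bounds.items()}
            for _ in range(n)]

def zoom_in(bounds, best, shrink=0.25):
    """Return a smaller box centered on the best point, clipped to the old box."""
    new_bounds = {}
    for k, (lo, hi) in bounds.items():
        half = (hi - lo) * shrink / 2
        new_bounds[k] = (max(lo, best[k] - half), min(hi, best[k] + half))
    return new_bounds

def coarse_to_fine(score, bounds, rounds=3, n=25, seed=0):
    """Random search that re-samples inside a shrinking box around the best point."""
    rng = random.Random(seed)
    best = None
    for _ in range(rounds):
        candidates = sample_points(bounds, n, rng)
        if best is not None:
            candidates.append(best)  # keep the best point found so far
        best = max(candidates, key=score)
        bounds = zoom_in(bounds, best)
    return best, bounds

# Hypothetical score: higher is better, peak at h1=0.3, h2=0.7.
def toy_score(p):
    return -((p["h1"] - 0.3) ** 2 + (p["h2"] - 0.7) ** 2)

best, final_bounds = coarse_to_fine(toy_score, {"h1": (0.0, 1.0), "h2": (0.0, 1.0)})
print(best, final_bounds)
```

In a real search, `score` would train a model with the sampled values and return its dev-set metric, so each round costs `n` training runs.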

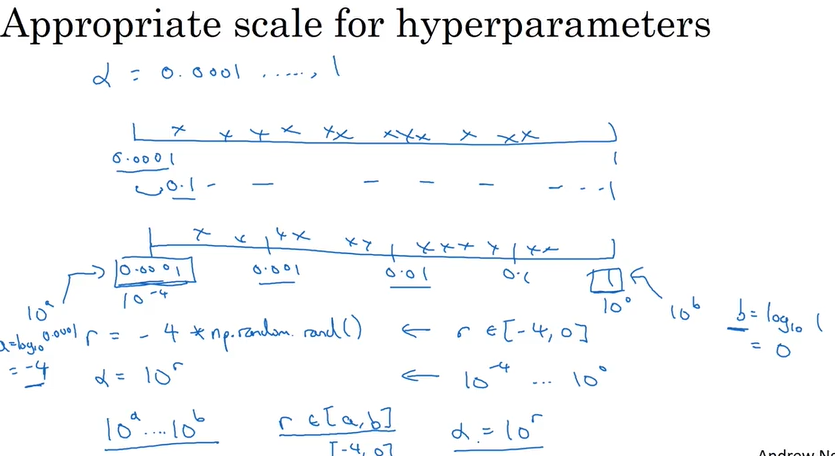

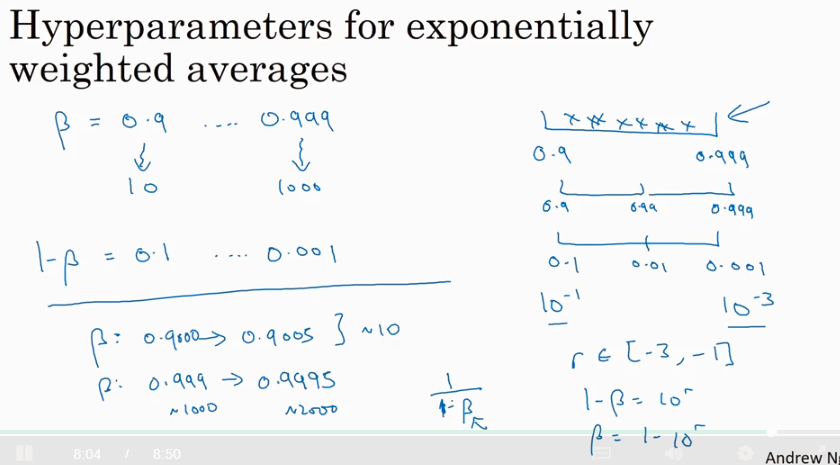

Other hyperparameters are not suited to random sampling on a linear scale, for example the learning rate α; for these, sample on a log scale instead.

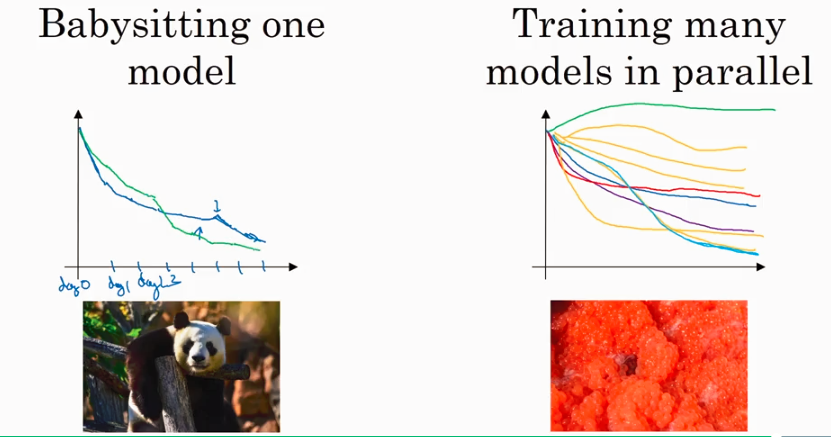
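A minimal sketch of the two sampling scales described above. The ranges (50 to 100 hidden units, α between 1e-4 and 1) and the function names are assumptions chosen for illustration; they do not appear in these notes.

```python
import math
import random

def sample_hidden_units(rng, low=50, high=100):
    """Linear scale: every integer in [low, high] is equally likely."""
    return rng.randint(low, high)

def sample_learning_rate(rng, low=1e-4, high=1.0):
    """Log scale: sample the exponent r uniformly, then return 10**r."""
    r = rng.uniform(math.log10(low), math.log10(high))
    return 10 ** r

rng = random.Random(0)
print([sample_hidden_units(rng) for _ in range(5)])
print([sample_learning_rate(rng) for _ in range(5)])
```

With the log scale, each decade (1e-4 to 1e-3, 1e-3 to 1e-2, and so on) receives roughly the same number of samples, whereas uniform sampling over [1e-4, 1] would put about 90% of the samples between 0.1 and 1.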

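Andrew humorously describes two practical ways of searching for hyperparameters: panda vs. caviar. The panda approach is typically used when you do not have enough compute and a single training run lasts several days.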

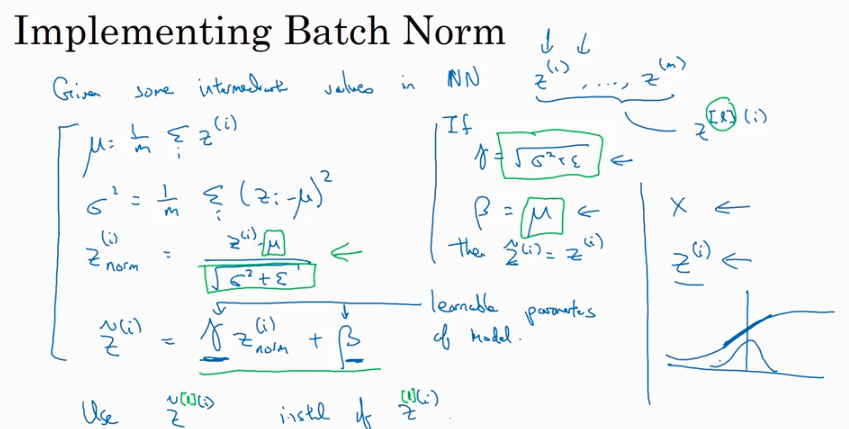

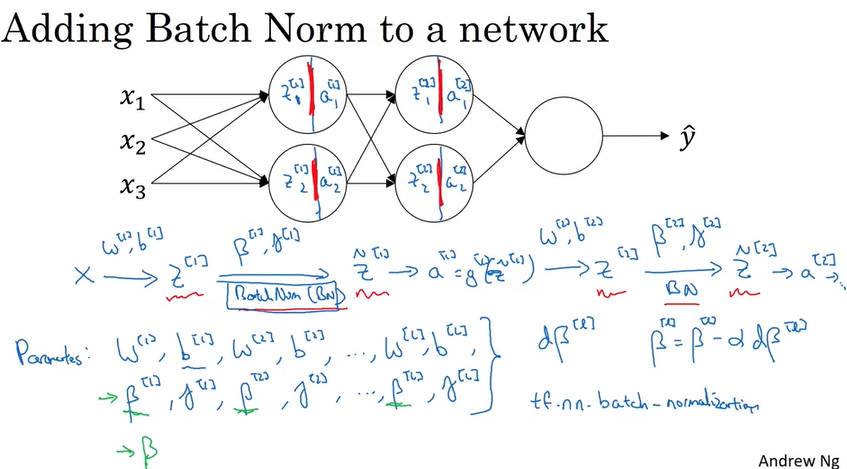

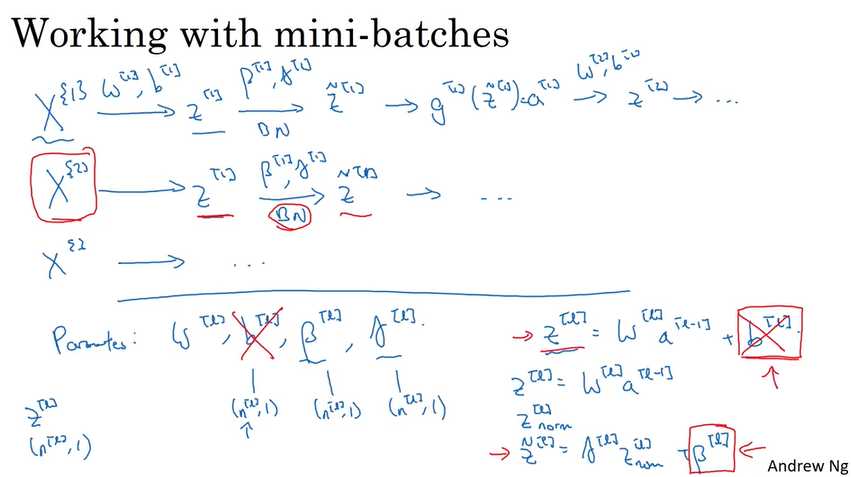

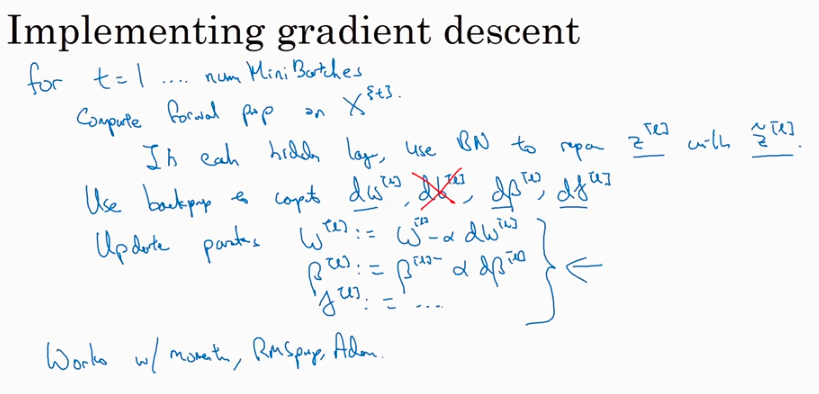

Batch norm

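We already normalize the inputs to the input layer; in fact, the inputs to every layer can be normalized as well, and that is batch norm. Some people normalize the values after the activation function and others normalize the pre-activation values z; the lecture covers the latter, normalizing z.

Why does batch norm work?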
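Normalizing z over a mini-batch can be sketched for a single hidden unit as below. The learnable scale `gamma`, the shift `beta`, and the small constant `eps` follow the usual batch norm formulation; they are not spelled out in these notes, so treat them (and the function name) as assumptions.

```python
import math

def batch_norm(z_batch, gamma=1.0, beta=0.0, eps=1e-8):
    """Normalize the pre-activations z of one unit across a mini-batch."""
    m = len(z_batch)
    mu = sum(z_batch) / m                              # mini-batch mean
    var = sum((z - mu) ** 2 for z in z_batch) / m      # mini-batch variance
    z_norm = [(z - mu) / math.sqrt(var + eps) for z in z_batch]
    z_tilde = [gamma * zn + beta for zn in z_norm]     # learnable scale and shift
    return z_tilde, mu, var

z_tilde, mu, var = batch_norm([1.0, 2.0, 3.0, 4.0])
print(z_tilde, mu, var)
```

With `gamma=1` and `beta=0` the output has mean 0 and variance close to 1; other values let the network choose a different mean and variance for z.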

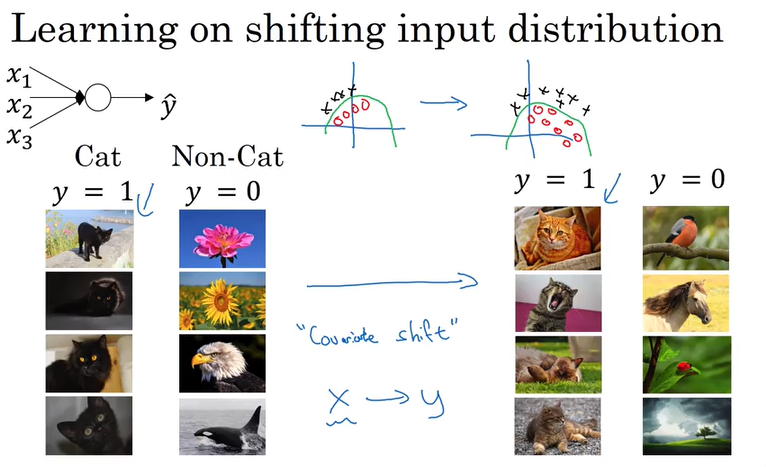

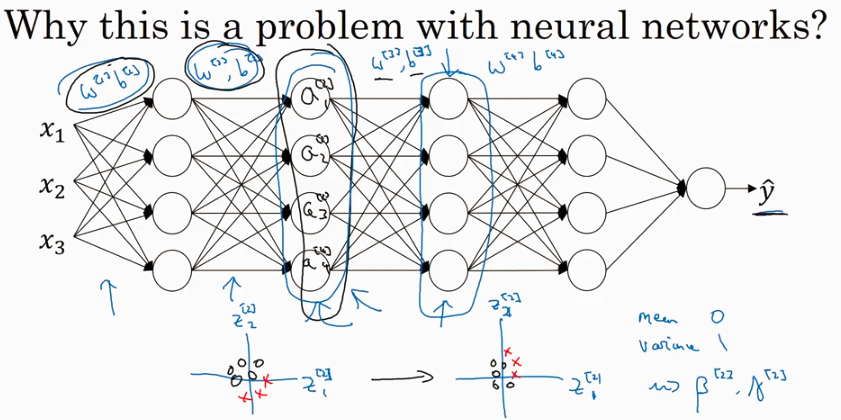

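First look at covariate shift, described in the figure below: if the distribution of the training set changes, the model should be retrained. Each layer of a neural network faces a similar problem, because the distribution of its inputs shifts as the earlier layers' parameters are updated.

Andrew explains that batch norm tries to decouple the layers from one another, so that they affect each other less and the whole network is more stable.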

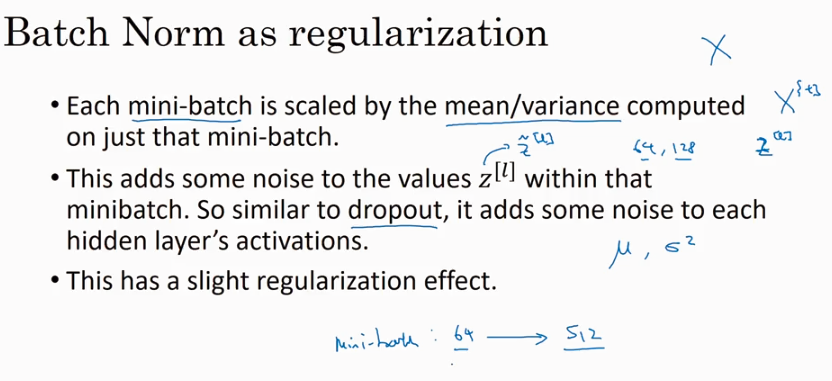

Batch norm also has a slight regularization effect, but that is not its main purpose, and using it specifically as a regularizer is not recommended.

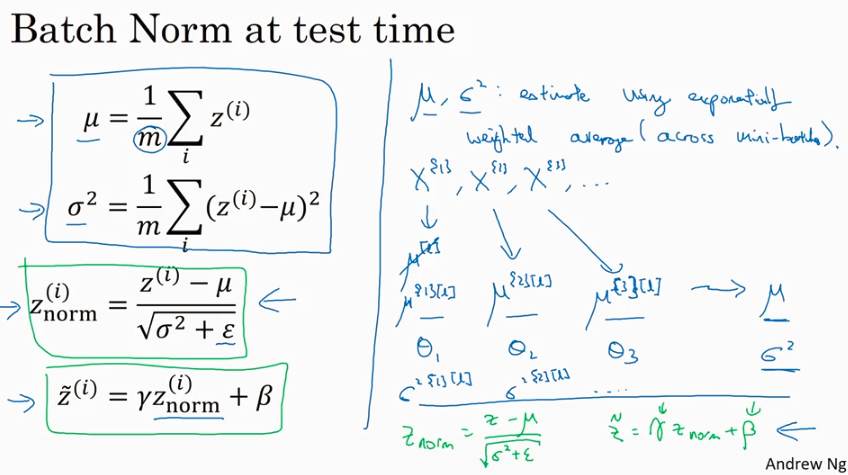

At test time, μ and σ² are obtained differently: an exponentially weighted average is taken over the μ and σ² values computed on the preceding mini-batches during training.
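A minimal sketch of tracking μ and σ² with an exponentially weighted average for one unit. The decay of 0.9, the choice to initialize the running value with the first mini-batch, and all function names are assumptions; the scale-and-shift step of batch norm is left out to keep the example short.

```python
import math

def batch_stats(z_batch):
    """Mean and variance of one unit's z values over a mini-batch."""
    m = len(z_batch)
    mu = sum(z_batch) / m
    var = sum((z - mu) ** 2 for z in z_batch) / m
    return mu, var

def ewa(running, new_value, decay=0.9):
    """One exponentially weighted average update (first value used as-is)."""
    if running is None:
        return new_value
    return decay * running + (1.0 - decay) * new_value

def track_running_stats(mini_batches, decay=0.9):
    """Accumulate running mu and var across the training mini-batches."""
    running_mu, running_var = None, None
    for z_batch in mini_batches:
        mu, var = batch_stats(z_batch)
        running_mu = ewa(running_mu, mu, decay)
        running_var = ewa(running_var, var, decay)
    return running_mu, running_var

def normalize_at_test_time(z, running_mu, running_var, eps=1e-8):
    """Normalize a single test example with the running statistics."""
    return (z - running_mu) / math.sqrt(running_var + eps)

mini_batches = [[1.0, 3.0], [2.0, 4.0], [3.0, 5.0]]
running_mu, running_var = track_running_stats(mini_batches)
print(running_mu, running_var, normalize_at_test_time(2.5, running_mu, running_var))
```

The running statistics are needed because a test example may arrive alone, so there is no mini-batch from which to compute a mean and variance.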

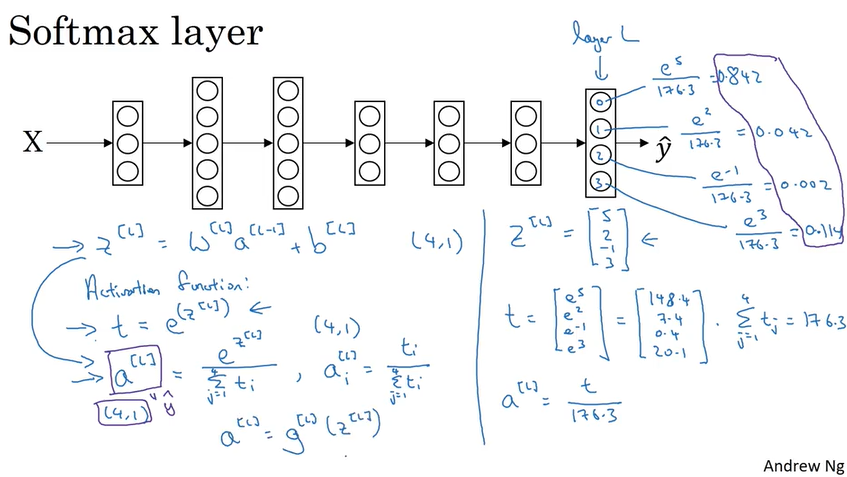

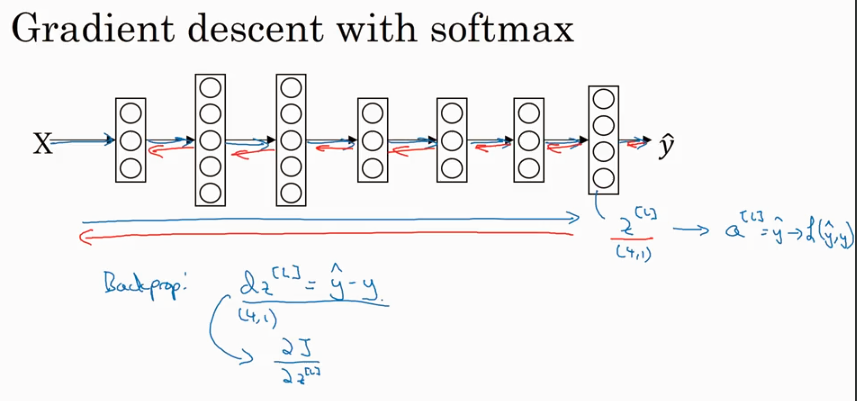

Softmax Regression

Softmax regression is the generalization of logistic regression to multi-class classification problems. As with logistic regression, its decision boundaries are linear. Making the decision boundaries nonlinear requires a deep network.
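The softmax activation itself can be sketched as below. Subtracting the maximum before exponentiating is a common numerical-stability trick and is an addition here, not something from these notes; the `sigmoid` helper is included only to show the two-class case.

```python
import math

def softmax(z):
    """Turn a list of scores into probabilities that sum to 1."""
    shift = max(z)  # subtracting the max avoids overflow, result is unchanged
    exps = [math.exp(v - shift) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(x):
    """Logistic function used by logistic regression."""
    return 1.0 / (1.0 + math.exp(-x))

probs = softmax([5.0, 2.0, -1.0, 3.0])
print([round(p, 3) for p in probs])  # [0.842, 0.042, 0.002, 0.114]

# With two classes and the second score fixed at 0, softmax reduces to sigmoid.
print(softmax([1.5, 0.0])[0], sigmoid(1.5))
```

The last line illustrates the sense in which softmax generalizes logistic regression: for two classes, only the difference between the two scores matters, and the first probability equals the sigmoid of that difference.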

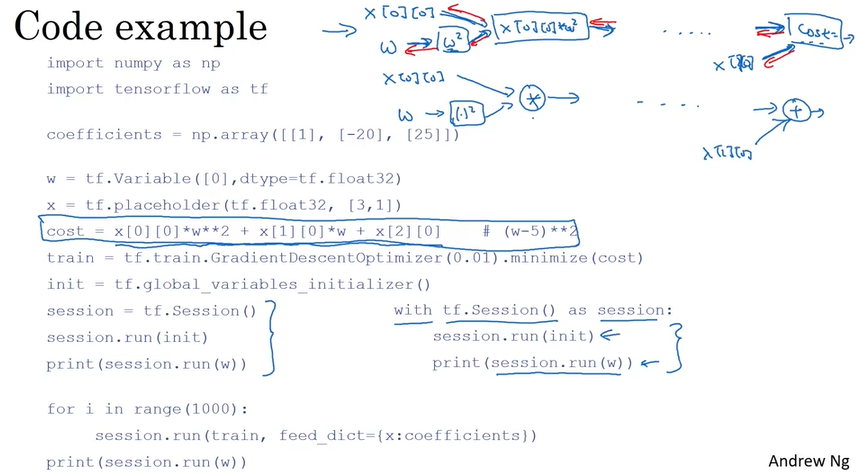

Programming framework

TensorFlow by Google, an example
