Apache Hadoop 2.9.2: An Archive (HAR) Case Study

Author: 尹正杰 (Yin Zhengjie)

Copyright notice: this is an original work. Reproduction is not permitted, and violations will be pursued legally.

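If you are reading this article, you probably already have a solid understanding of how the NameNode works. Every file is stored as blocks, and the metadata for each block is held in the NameNode's memory, so HDFS handles small files very inefficiently: a large number of small files can consume most of the NameNode's memory. Note, however, that a small file does not take more disk space than its actual contents require. For example, a 2 MB file stored with a 128 MB block size uses 2 MB of disk space, not 128 MB.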
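To make the memory cost concrete, here is a rough back-of-the-envelope sketch. It assumes the commonly quoted rule of thumb of about 150 bytes of NameNode heap per namespace object (one object per file plus one per block); that figure is an approximation and not something measured on this cluster, and the function name is made up for illustration.

```shell
#!/bin/sh
# ASSUMPTION: ~150 bytes of NameNode heap per namespace object (rule of thumb only).
BYTES_PER_OBJECT=150

# estimate_namenode_bytes FILES BLOCKS_PER_FILE
# Counts one object for each file plus one object for each of its blocks.
estimate_namenode_bytes() {
  echo $(( $1 * (1 + $2) * $BYTES_PER_OBJECT ))
}

# 10 million small files, each fitting in a single block.
estimate_namenode_bytes 10000000 1
```

Under that assumption, ten million single-block files cost on the order of 3 GB of NameNode heap regardless of how little disk space the files themselves occupy.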

I. Hadoop Archives

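A Hadoop archive, or HAR file, is a file archiving facility that packs files into HDFS blocks more efficiently. It reduces NameNode memory usage while still allowing transparent access to the archived files. In particular, a Hadoop archive can be used as input to MapReduce.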

II. Creating an Archive

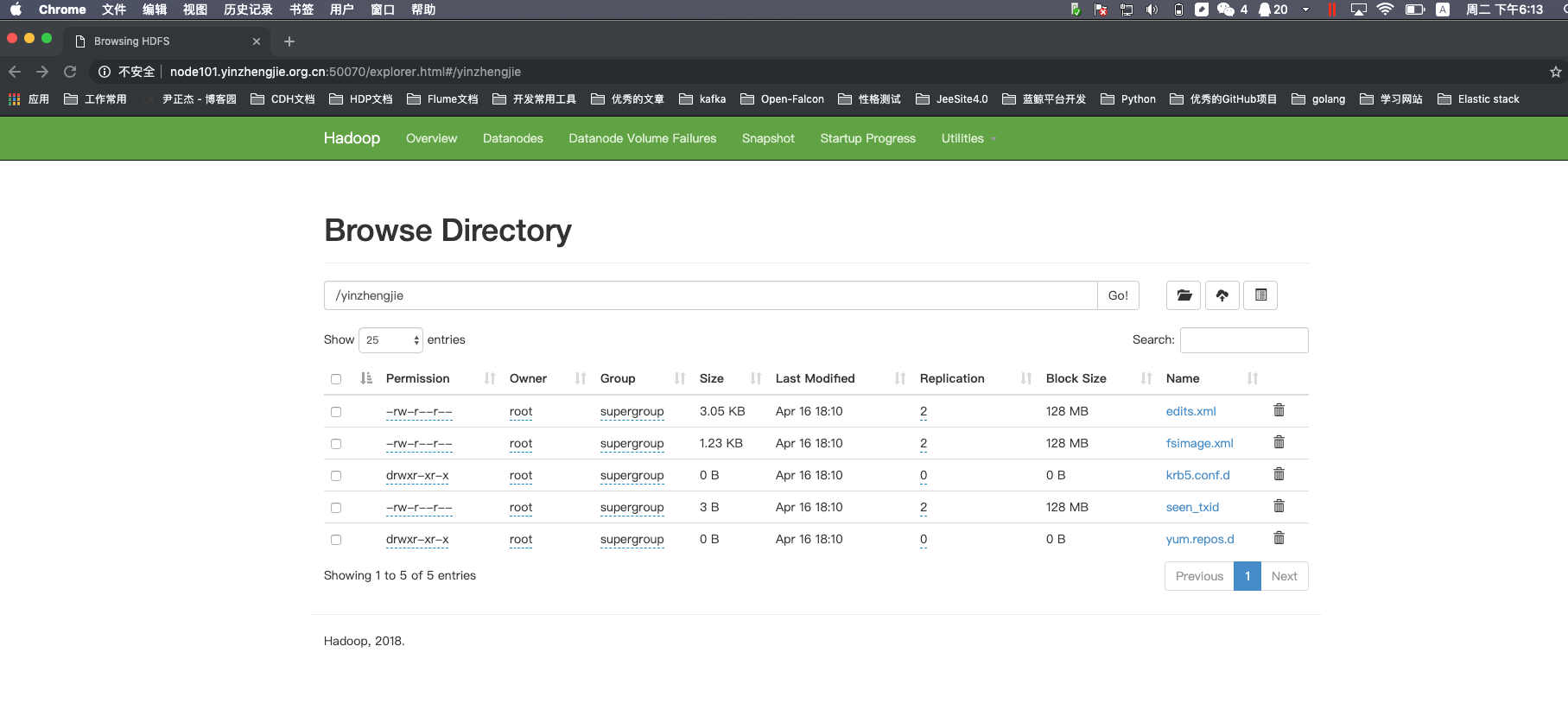

1>. Upload test files to the HDFS cluster

[root@node101.yinzhengjie.org.cn ~]# ll -R

.:

total

-rw-r--r--. root root Apr : edits.xml

-rw-r--r--. root root Apr : fsimage.xml

drwxr-xr-x root root Apr : krb5.conf.d

-rw-r--r--. root root Apr : seen_txid

drwxr-xr-x root root Apr : yum.repos.d

./krb5.conf.d:

total

-rw-r--r-- root root Apr : krb5.conf

./yum.repos.d:

total

drwxr-xr-x root root Apr : back

-rw-r--r-- root root Apr : CentOS-Base.repo

drwxr-xr-x root root Apr : default

-rw-r--r-- root root Apr : epel.repo

-rw-r--r-- root root Apr : epel-testing.repo

./yum.repos.d/back:

total

-rw-r--r-- root root Apr : CentOS-Base.repo

./yum.repos.d/default:

total

-rw-r--r-- root root Apr : CentOS-Base.repo

-rw-r--r-- root root Apr : CentOS-CR.repo

-rw-r--r-- root root Apr : CentOS-Debuginfo.repo

-rw-r--r-- root root Apr : CentOS-fasttrack.repo

-rw-r--r-- root root Apr : CentOS-Media.repo

-rw-r--r-- root root Apr : CentOS-Sources.repo

-rw-r--r-- root root Apr : CentOS-Vault.repo

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# ll

total

-rw-r--r--. root root Apr : edits.xml

-rw-r--r--. root root Apr : fsimage.xml

drwxr-xr-x root root Apr : krb5.conf.d

-rw-r--r--. root root Apr : seen_txid

drwxr-xr-x root root Apr : yum.repos.d

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# hadoop fs -mkdir /yinzhengjie

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# hdfs dfs -put ./* /yinzhengjie

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# hdfs dfs -ls /yinzhengjie

Found 5 items

-rw-r--r-- 2 root supergroup 3124 2019-04-16 18:10 /yinzhengjie/edits.xml

-rw-r--r-- 2 root supergroup 1264 2019-04-16 18:10 /yinzhengjie/fsimage.xml

drwxr-xr-x - root supergroup 0 2019-04-16 18:10 /yinzhengjie/krb5.conf.d

-rw-r--r-- 2 root supergroup 3 2019-04-16 18:10 /yinzhengjie/seen_txid

drwxr-xr-x - root supergroup 0 2019-04-16 18:10 /yinzhengjie/yum.repos.d

[root@node101.yinzhengjie.org.cn ~]#

2>. Start the YARN daemons (archiving relies on this service for resource scheduling)

[root@node101.yinzhengjie.org.cn ~]# ansible all -m shell -a 'jps'

node110.yinzhengjie.org.cn | SUCCESS | rc= >>

Jps

DataNode

node101.yinzhengjie.org.cn | SUCCESS | rc= >>

FsShell

NameNode

DataNode

SecondaryNameNode

Jps

node103.yinzhengjie.org.cn | SUCCESS | rc= >>

DataNode

Jps

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# ansible all -m shell -a 'jps' # processes running before the YARN services are started

[root@node101.yinzhengjie.org.cn ~]# start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /yinzhengjie/softwares/hadoop-2.9./logs/yarn-root-resourcemanager-node101.yinzhengjie.org.cn.out

node103.yinzhengjie.org.cn: starting nodemanager, logging to /yinzhengjie/softwares/hadoop-2.9./logs/yarn-root-nodemanager-node103.yinzhengjie.org.cn.out

node101.yinzhengjie.org.cn: starting nodemanager, logging to /yinzhengjie/softwares/hadoop-2.9./logs/yarn-root-nodemanager-node101.yinzhengjie.org.cn.out

node110.yinzhengjie.org.cn: starting nodemanager, logging to /yinzhengjie/softwares/hadoop-2.9./logs/yarn-root-nodemanager-node110.yinzhengjie.org.cn.out

node102.yinzhengjie.org.cn: ssh: connect to host node102.yinzhengjie.org.cn port : No route to host

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# ansible all -m shell -a 'jps'

node110.yinzhengjie.org.cn | SUCCESS | rc= >>

DataNode

NodeManager

Jps

node103.yinzhengjie.org.cn | SUCCESS | rc= >>

NodeManager

DataNode

Jps

node101.yinzhengjie.org.cn | SUCCESS | rc= >>

NodeManager

FsShell

NameNode

Jps

DataNode

SecondaryNameNode

ResourceManager

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# ansible all -m shell -a 'jps' # after starting YARN, check which daemons came up successfully
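Rather than scanning the `jps` output by eye, the check can be scripted. The following is a minimal sketch, not part of the original walkthrough: `missing_daemons` is a hypothetical helper that takes captured `jps` output as text and reports which required process names are absent. It matches on the last field of each line, so it works whether or not the PID column is present.

```shell
#!/bin/sh
# missing_daemons JPS_OUTPUT NAME...
# Prints every NAME that does not appear as a process name in JPS_OUTPUT.
missing_daemons() {
  jps_output=$1; shift
  for name in "$@"; do
    # jps prints "<pid> <name>"; compare the last field exactly so that
    # "NameNode" does not match "SecondaryNameNode".
    printf '%s\n' "$jps_output" \
      | awk -v n="$name" '$NF == n { found = 1 } END { exit !found }' \
      || echo "$name"
  done
}

# Example with captured text (on a real node you might pass "$(jps)" instead).
sample='1234 NameNode
2345 DataNode
3456 Jps'
missing_daemons "$sample" ResourceManager NodeManager DataNode
```

With the sample text above, the helper reports `ResourceManager` and `NodeManager` as missing, which is the state of the cluster before `start-yarn.sh` is run.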

3>. Archive multiple directories

[root@node101.yinzhengjie.org.cn ~]# hdfs dfs -ls /yinzhengjie

Found items

-rw-r--r-- root supergroup -- : /yinzhengjie/edits.xml

-rw-r--r-- root supergroup -- : /yinzhengjie/fsimage.xml

drwxr-xr-x - root supergroup -- : /yinzhengjie/krb5.conf.d

-rw-r--r-- root supergroup -- : /yinzhengjie/seen_txid

drwxr-xr-x - root supergroup -- : /yinzhengjie/yum.repos.d

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# hdfs dfs -ls /yinzhengjie # check the directory structure before archiving

[root@node101.yinzhengjie.org.cn ~]# hadoop archive -archiveName yinzhengjie-test.har -p /yinzhengjie/yum.repos.d /yinzhengjie/output

// :: INFO client.RMProxy: Connecting to ResourceManager at node101.yinzhengjie.org.cn/172.30.1.101:

// :: INFO client.RMProxy: Connecting to ResourceManager at node101.yinzhengjie.org.cn/172.30.1.101:

// :: INFO client.RMProxy: Connecting to ResourceManager at node101.yinzhengjie.org.cn/172.30.1.101:

// :: INFO mapreduce.JobSubmitter: number of splits:

// :: INFO Configuration.deprecation: yarn.resourcemanager.system-metrics-publisher.enabled is deprecated. Instead, use yarn.system-metrics-publisher.enabled

// :: INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1555408586551_0006

// :: INFO impl.YarnClientImpl: Submitted application application_1555408586551_0006

// :: INFO mapreduce.Job: The url to track the job: http://node101.yinzhengjie.org.cn:8088/proxy/application_1555408586551_0006/

// :: INFO mapreduce.Job: Running job: job_1555408586551_0006

// :: INFO mapreduce.Job: Job job_1555408586551_0006 running in uber mode : false

// :: INFO mapreduce.Job: map % reduce %

// :: INFO mapreduce.Job: map % reduce %

// :: INFO mapreduce.Job: map % reduce %

// :: INFO mapreduce.Job: Job job_1555408586551_0006 completed successfully

// :: INFO mapreduce.Job: Counters:

File System Counters

FILE: Number of bytes read=

FILE: Number of bytes written=

FILE: Number of read operations=

FILE: Number of large read operations=

FILE: Number of write operations=

HDFS: Number of bytes read=

HDFS: Number of bytes written=

HDFS: Number of read operations=

HDFS: Number of large read operations=

HDFS: Number of write operations=

Job Counters

Launched map tasks=

Launched reduce tasks=

Other local map tasks=

Total time spent by all maps in occupied slots (ms)=

Total time spent by all reduces in occupied slots (ms)=

Total time spent by all map tasks (ms)=

Total time spent by all reduce tasks (ms)=

Total vcore-milliseconds taken by all map tasks=

Total vcore-milliseconds taken by all reduce tasks=

Total megabyte-milliseconds taken by all map tasks=

Total megabyte-milliseconds taken by all reduce tasks=

Map-Reduce Framework

Map input records=

Map output records=

Map output bytes=

Map output materialized bytes=

Input split bytes=

Combine input records=

Combine output records=

Reduce input groups=

Reduce shuffle bytes=

Reduce input records=

Reduce output records=

Spilled Records=

Shuffled Maps =

Failed Shuffles=

Merged Map outputs=

GC time elapsed (ms)=

CPU time spent (ms)=

Physical memory (bytes) snapshot=

Virtual memory (bytes) snapshot=

Total committed heap usage (bytes)=

Shuffle Errors

BAD_ID=

CONNECTION=

IO_ERROR=

WRONG_LENGTH=

WRONG_MAP=

WRONG_REDUCE=

File Input Format Counters

Bytes Read=

File Output Format Counters

Bytes Written=

[root@node101.yinzhengjie.org.cn ~]#
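The general shape of the command is `hadoop archive -archiveName <name> -p <parent> [<src>...] <dest>`: source paths are given relative to the parent, and when none are listed (as in the run above) the whole parent directory is archived. The sketch below only assembles and prints the command line so the argument order is easy to see. `build_archive_cmd` is a hypothetical helper, and the second call, which names the `back` and `default` subdirectories explicitly, is an illustration that was not run in this article.

```shell
#!/bin/sh
# build_archive_cmd NAME PARENT DEST [SRC...]
# Prints a `hadoop archive` command line; SRC paths are relative to PARENT.
build_archive_cmd() {
  name=$1; parent=$2; dest=$3; shift 3
  printf 'hadoop archive -archiveName %s -p %s' "$name" "$parent"
  for src in "$@"; do
    printf ' %s' "$src"
  done
  printf ' %s\n' "$dest"
}

# The command used above: no sources, so all of yum.repos.d is archived.
build_archive_cmd yinzhengjie-test.har /yinzhengjie/yum.repos.d /yinzhengjie/output

# Illustration only: archive just two subdirectories of the parent.
build_archive_cmd yinzhengjie-test.har /yinzhengjie/yum.repos.d /yinzhengjie/output back default
```

Printing the command instead of running it keeps the sketch safe to try on a machine without a cluster; the printed line can then be pasted into a shell on a Hadoop node.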

[root@node101.yinzhengjie.org.cn ~]# hdfs dfs -ls /yinzhengjie

Found items

-rw-r--r-- root supergroup -- : /yinzhengjie/edits.xml

-rw-r--r-- root supergroup -- : /yinzhengjie/fsimage.xml

drwxr-xr-x - root supergroup -- : /yinzhengjie/krb5.conf.d

drwxr-xr-x - root supergroup -- : /yinzhengjie/output # this is the directory that holds the archive, as specified in the previous step; list it to see the name of the archive inside

-rw-r--r-- root supergroup -- : /yinzhengjie/seen_txid

drwxr-xr-x - root supergroup -- : /yinzhengjie/yum.repos.d

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# hdfs dfs -ls /yinzhengjie/output

Found items

drwxr-xr-x - root supergroup -- : /yinzhengjie/output/yinzhengjie-test.har # note the name: this is the archive directory we named with the -archiveName option

[root@node101.yinzhengjie.org.cn ~]#

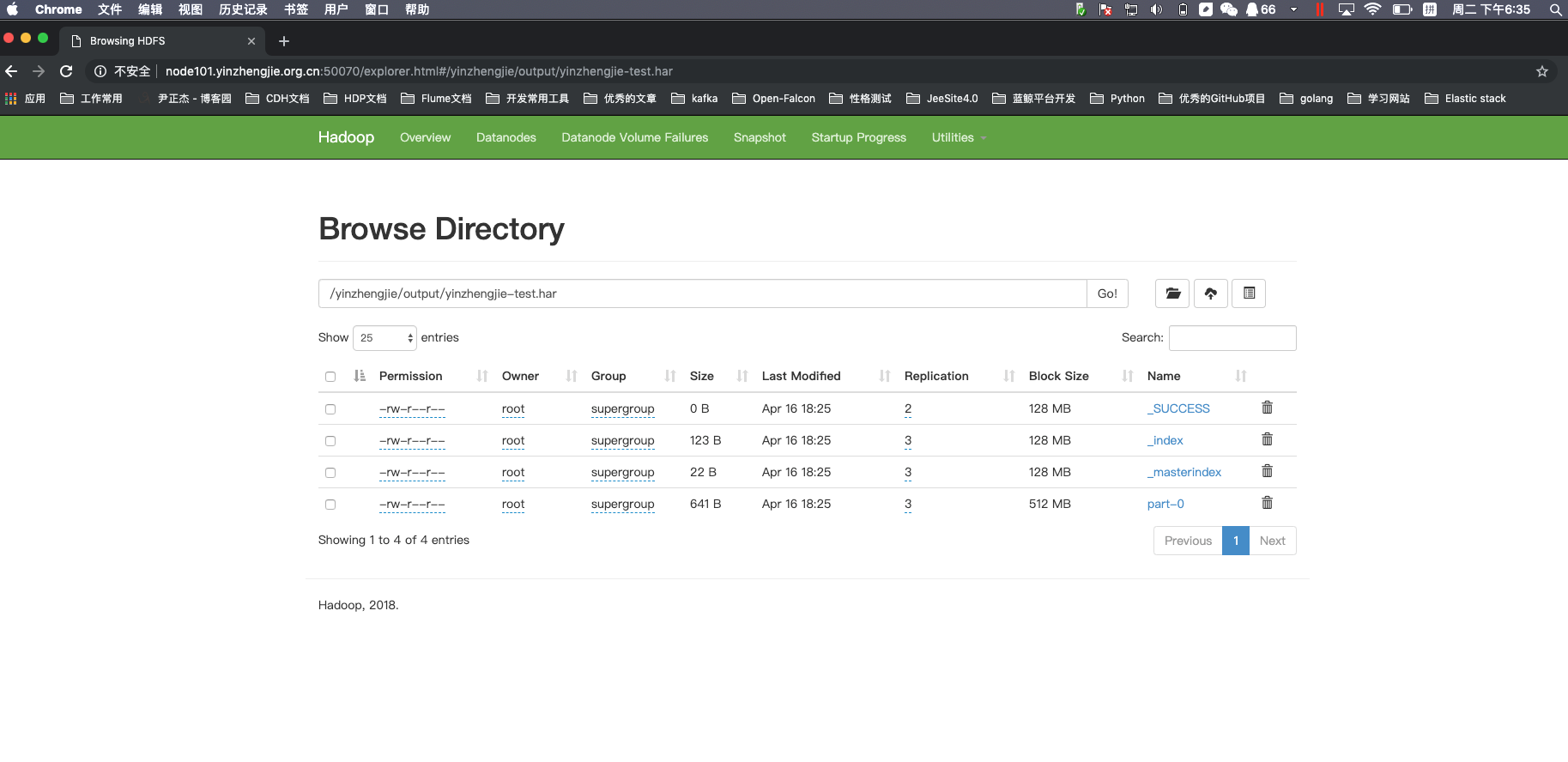

[root@node101.yinzhengjie.org.cn ~]# hdfs dfs -ls /yinzhengjie/output/yinzhengjie-test.har

Found items

-rw-r--r-- root supergroup -- : /yinzhengjie/output/yinzhengjie-test.har/_SUCCESS

-rw-r--r-- root supergroup -- : /yinzhengjie/output/yinzhengjie-test.har/_index

-rw-r--r-- root supergroup -- : /yinzhengjie/output/yinzhengjie-test.har/_masterindex

-rw-r--r-- root supergroup -- : /yinzhengjie/output/yinzhengjie-test.har/part-

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]#

4>. Inspect the archive

[root@node101.yinzhengjie.org.cn ~]# hadoop fs -ls -R /yinzhengjie/output/yinzhengjie-test.har

-rw-r--r-- root supergroup -- : /yinzhengjie/output/yinzhengjie-test.har/_SUCCESS

-rw-r--r-- root supergroup -- : /yinzhengjie/output/yinzhengjie-test.har/_index

-rw-r--r-- root supergroup -- : /yinzhengjie/output/yinzhengjie-test.har/_masterindex

-rw-r--r-- root supergroup -- : /yinzhengjie/output/yinzhengjie-test.har/part-

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# hadoop fs -ls -R har:///yinzhengjie/output/yinzhengjie-test.har

-rw-r--r-- root supergroup -- : har:///yinzhengjie/output/yinzhengjie-test.har/CentOS-Base.repo

drwxr-xr-x - root supergroup -- : har:///yinzhengjie/output/yinzhengjie-test.har/back

-rw-r--r-- root supergroup -- : har:///yinzhengjie/output/yinzhengjie-test.har/back/CentOS-Base.repo

drwxr-xr-x - root supergroup -- : har:///yinzhengjie/output/yinzhengjie-test.har/default

-rw-r--r-- root supergroup -- : har:///yinzhengjie/output/yinzhengjie-test.har/default/CentOS-Base.repo

-rw-r--r-- root supergroup -- : har:///yinzhengjie/output/yinzhengjie-test.har/default/CentOS-CR.repo

-rw-r--r-- root supergroup -- : har:///yinzhengjie/output/yinzhengjie-test.har/default/CentOS-Debuginfo.repo

-rw-r--r-- root supergroup -- : har:///yinzhengjie/output/yinzhengjie-test.har/default/CentOS-Media.repo

-rw-r--r-- root supergroup -- : har:///yinzhengjie/output/yinzhengjie-test.har/default/CentOS-Sources.repo

-rw-r--r-- root supergroup -- : har:///yinzhengjie/output/yinzhengjie-test.har/default/CentOS-Vault.repo

-rw-r--r-- root supergroup -- : har:///yinzhengjie/output/yinzhengjie-test.har/default/CentOS-fasttrack.repo

-rw-r--r-- root supergroup -- : har:///yinzhengjie/output/yinzhengjie-test.har/epel-testing.repo

-rw-r--r-- root supergroup -- : har:///yinzhengjie/output/yinzhengjie-test.har/epel.repo

[root@node101.yinzhengjie.org.cn ~]#
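The two listings above differ only in the URI scheme: a plain HDFS path shows the archive's internal files (`_index`, `_masterindex`, and the part file), while a `har://` URI shows the original directory tree. As a small sketch of how such URIs are formed on the default filesystem, the hypothetical helper `har_uri` below prefixes an archive path with `har://` and optionally appends a path inside the archive.

```shell
#!/bin/sh
# har_uri ARCHIVE_PATH [INNER_PATH]
# Builds a har:// URI on the default filesystem for an archive, or for one
# entry inside it. ARCHIVE_PATH is the absolute HDFS path of the .har directory.
har_uri() {
  if [ -n "${2:-}" ]; then
    echo "har://$1/$2"
  else
    echo "har://$1"
  fi
}

har_uri /yinzhengjie/output/yinzhengjie-test.har
har_uri /yinzhengjie/output/yinzhengjie-test.har default/CentOS-Base.repo
```

A URI built this way can be passed to ordinary filesystem commands such as `hadoop fs -ls` or `hadoop fs -cat`, which is what makes access to archived files transparent.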

[root@node101.yinzhengjie.org.cn ~]#

III. Unarchiving

1>. Check the directory before unarchiving

[root@node101.yinzhengjie.org.cn ~]# hdfs dfs -ls /yinzhengjie

Found items

-rw-r--r-- root supergroup -- : /yinzhengjie/edits.xml

-rw-r--r-- root supergroup -- : /yinzhengjie/fsimage.xml

drwxr-xr-x - root supergroup -- : /yinzhengjie/krb5.conf.d

drwxr-xr-x - root supergroup -- : /yinzhengjie/output

-rw-r--r-- root supergroup -- : /yinzhengjie/seen_txid

drwxr-xr-x - root supergroup -- : /yinzhengjie/yum.repos.d

[root@node101.yinzhengjie.org.cn ~]#

2>. Unarchive

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# hadoop fs -cp har:///yinzhengjie/output/yinzhengjie-test.har /yinzhengjie/output2019

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# hdfs dfs -ls /yinzhengjie

Found items

-rw-r--r-- root supergroup -- : /yinzhengjie/edits.xml

-rw-r--r-- root supergroup -- : /yinzhengjie/fsimage.xml

drwxr-xr-x - root supergroup -- : /yinzhengjie/krb5.conf.d

drwxr-xr-x - root supergroup -- : /yinzhengjie/output

drwxr-xr-x - root supergroup -- : /yinzhengjie/output2019

-rw-r--r-- root supergroup -- : /yinzhengjie/seen_txid

drwxr-xr-x - root supergroup -- : /yinzhengjie/yum.repos.d

[root@node101.yinzhengjie.org.cn ~]#
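Unarchiving is just a copy out of the `har://` filesystem. The `hadoop fs -cp` used above copies sequentially; for large archives the same copy can be run in parallel with `hadoop distcp`. The sketch below prints either form; `build_unarchive_cmd` and its mode names are hypothetical, and the parallel variant was not run in this article.

```shell
#!/bin/sh
# build_unarchive_cmd MODE HAR_PATH DEST
# Prints the command that copies the contents of the archive at HAR_PATH to DEST.
# MODE is "sequential" (hadoop fs -cp) or "parallel" (hadoop distcp).
build_unarchive_cmd() {
  case "$1" in
    sequential) echo "hadoop fs -cp har://$2 $3" ;;
    parallel)   echo "hadoop distcp har://$2 $3" ;;
    *)          echo "unknown mode: $1" >&2; return 1 ;;
  esac
}

# Reproduces the command used in this step.
build_unarchive_cmd sequential /yinzhengjie/output/yinzhengjie-test.har /yinzhengjie/output2019
```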

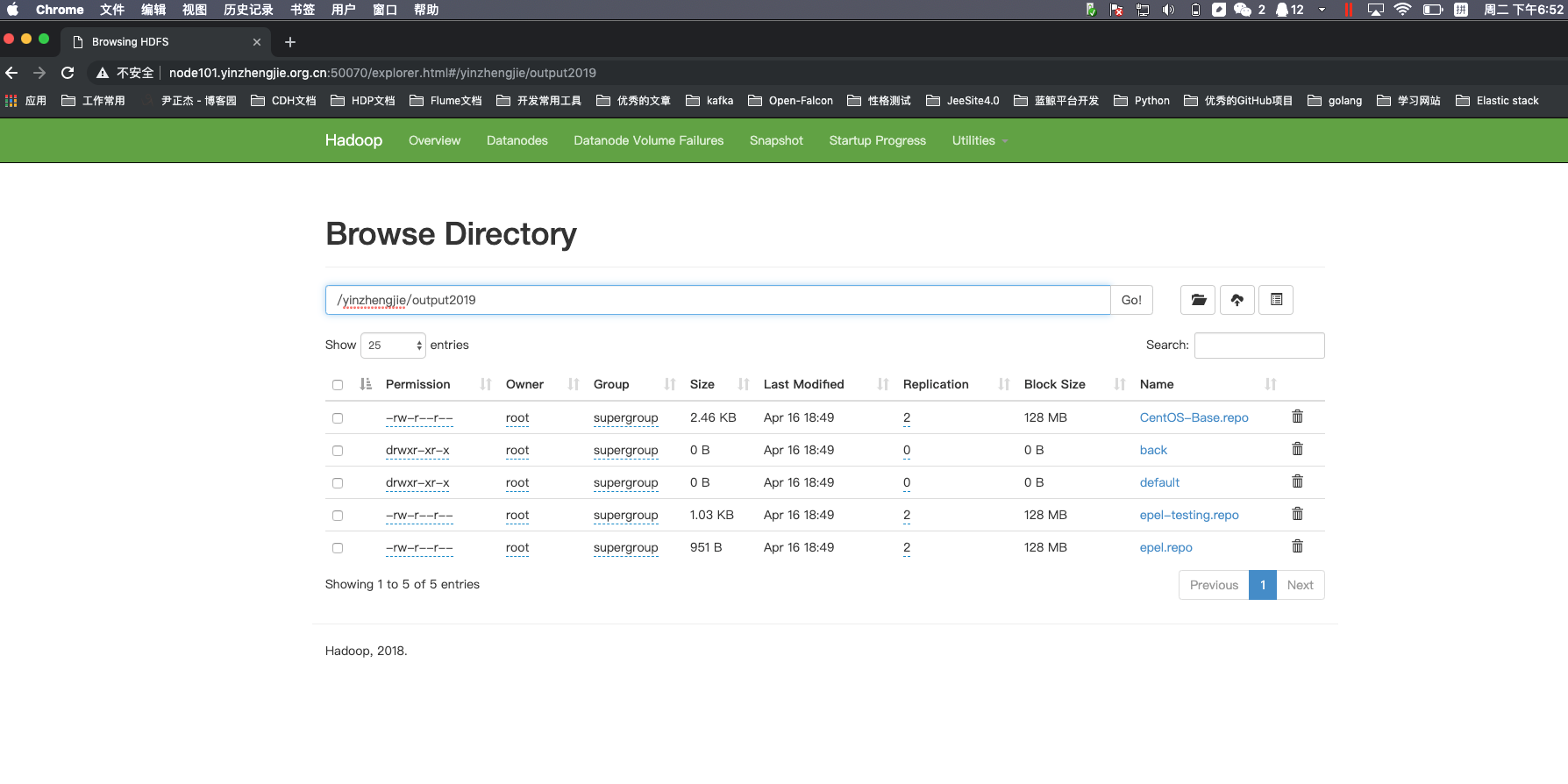

3>. Compare the data before archiving with the data after extraction

[root@node101.yinzhengjie.org.cn ~]# hdfs dfs -ls /yinzhengjie/output2019

Found items

-rw-r--r-- root supergroup -- : /yinzhengjie/output2019/CentOS-Base.repo

drwxr-xr-x - root supergroup -- : /yinzhengjie/output2019/back

drwxr-xr-x - root supergroup -- : /yinzhengjie/output2019/default

-rw-r--r-- root supergroup -- : /yinzhengjie/output2019/epel-testing.repo

-rw-r--r-- root supergroup -- : /yinzhengjie/output2019/epel.repo

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# hdfs dfs -ls /yinzhengjie/yum.repos.d

Found items

-rw-r--r-- root supergroup -- : /yinzhengjie/yum.repos.d/CentOS-Base.repo

drwxr-xr-x - root supergroup -- : /yinzhengjie/yum.repos.d/back

drwxr-xr-x - root supergroup -- : /yinzhengjie/yum.repos.d/default

-rw-r--r-- root supergroup -- : /yinzhengjie/yum.repos.d/epel-testing.repo

-rw-r--r-- root supergroup -- : /yinzhengjie/yum.repos.d/epel.repo

[root@node101.yinzhengjie.org.cn ~]#
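The two listings above only cover the top level and are compared by eye. A recursive comparison can be scripted; the sketch below is one possible approach, with hypothetical helper names. It works on captured `hdfs dfs -ls -R` output, reduces each listing to paths relative to its root, and checks that the two sets match. It compares names only (not sizes or contents) and assumes paths contain no spaces.

```shell
#!/bin/sh
# relative_paths PREFIX
# Reads `hdfs dfs -ls -R` style lines on stdin and prints, sorted, the path of
# each entry relative to PREFIX (the last field of every line is the full path).
relative_paths() {
  awk -v p="$1/" 'index($NF, p) == 1 { print substr($NF, length(p) + 1) }' \
    | LC_ALL=C sort
}

# same_tree LISTING_A PREFIX_A LISTING_B PREFIX_B
# Succeeds when both listings contain the same set of relative paths.
same_tree() {
  [ "$(printf '%s\n' "$1" | relative_paths "$2")" = \
    "$(printf '%s\n' "$3" | relative_paths "$4")" ]
}

# Usage on a cluster (left commented out so the sketch runs without Hadoop):
# a=$(hdfs dfs -ls -R /yinzhengjie/yum.repos.d)
# b=$(hdfs dfs -ls -R /yinzhengjie/output2019)
# same_tree "$a" /yinzhengjie/yum.repos.d "$b" /yinzhengjie/output2019 && echo "trees match"
```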
