附014.Kubernetes Prometheus+Grafana+EFK+Kibana+Glusterfs整合解决方案

一 glusterfs存储集群部署

1.1 架构示意

1.2 相关规划

|

主机

|

IP

|

磁盘

|

备注

|

|

k8smaster01

|

172.24.8.71

|

——

|

Kubernetes Master节点

Heketi主机

|

|

k8smaster02

|

172.24.8.72

|

——

|

Kubernetes Master节点

Heketi主机

|

|

k8smaster03

|

172.24.8.73

|

——

|

Kubernetes Master节点

Heketi主机

|

|

k8snode01

|

172.24.8.74

|

sdb

|

Kubernetes Worker节点

glusterfs 01节点

|

|

k8snode02

|

172.24.8.75

|

sdb

|

Kubernetes Worker节点

glusterfs 02节点

|

|

k8snode03

|

172.24.8.76

|

sdb

|

Kubernetes Worker节点

glusterfs 03节点

|

1.3 安装glusterfs

1.4 添加信任池

1.5 安装heketi

1.6 配置heketi

{ " "", " " " " " " " }, " " " } }, " " " " " " " " " " ], " " " " " "", " }, " " " " " " ], " } }

1.7 配置免秘钥

1.8 启动heketi

1.9 配置Heketi拓扑

{ " { " { " " " " ], " " ] }, " }, " " ] }, { " " " " ], " " ] }, " }, " " ] }, { " " " " ], " " ] }, " }, " " ] } ] } ] }

1.10 集群管理及测试

1.11 创建StorageClass

apiVersion: v1 kind: Secret metadata: namespace: heketi key: YWRtaW4xMjM= type: kubernetes.io/glusterfs

StorageClass apiVersion: storage.k8s.io/v1 kind: StorageClass metadata: parameters: resturl: " clusterid: " restauthenabled: " restuser: " secretName: " secretNamespace: " volumetype: " provisioner: kubernetes.io/glusterfs reclaimPolicy: Delete

二 集群监控Metrics

2.1 开启聚合层

2.2 获取部署文件

…… image: mirrorgooglecontainers/metrics-server-amd64:v0.3.6 command: - /metrics-server - --metric-resolution=30s - --kubelet-insecure-tls - --kubelet-preferred-address- ……

2.3 正式部署

2.4 确认验证

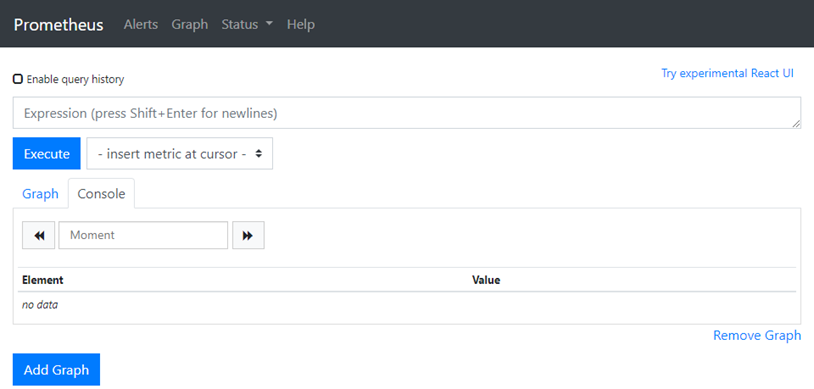

三 Prometheus部署

3.1 获取部署文件

3.2 创建命名空间

apiVersion: v1 kind: Namespace metadata:

3.3 创建RBAC

apiVersion: rbac.authorization.k8s.io/v1beta1 kind: ClusterRole metadata: rules: - apiGroups: [""] resources: - nodes - nodes/ - services - endpoints - pods verbs: [" - apiGroups: - extensions resources: - ingresses verbs: [" - nonResourceURLs: [" verbs: [" --- apiVersion: v1 kind: ServiceAccount metadata: namespace: monitoring --- apiVersion: rbac.authorization.k8s.io/v1beta1 kind: ClusterRoleBinding metadata: roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole subjects: - kind: ServiceAccount namespace: monitoring #仅需修改命名空间

3.4 创建Prometheus ConfigMap

apiVersion: v1 kind: ConfigMap metadata: labels: namespace: monitoring prometheus.yml: |- global: scrape_interval: 10s evaluation_interval: 10s scrape_configs: - job_name: 'kubernetes-apiservers' kubernetes_sd_configs: - role: endpoints scheme: https tls_config: ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token relabel_configs: - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name] action: keep regex: default;kubernetes;https - job_name: 'kubernetes-nodes' scheme: https tls_config: ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token kubernetes_sd_configs: - role: node relabel_configs: - action: labelmap regex: __meta_kubernetes_node_label_(.+) - target_label: __address__ replacement: kubernetes.default.svc:443 - source_labels: [__meta_kubernetes_node_name] regex: (.+) target_label: __metrics_path__ replacement: /api/v1/nodes/${1}/ - job_name: 'kubernetes-cadvisor' scheme: https tls_config: ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token kubernetes_sd_configs: - role: node relabel_configs: - action: labelmap regex: __meta_kubernetes_node_label_(.+) - target_label: __address__ replacement: kubernetes.default.svc:443 - source_labels: [__meta_kubernetes_node_name] regex: (.+) target_label: __metrics_path__ replacement: /api/v1/nodes/${1}/ - job_name: 'kubernetes-service-endpoints' kubernetes_sd_configs: - role: endpoints relabel_configs: - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape] action: keep regex: true - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme] action: target_label: __scheme__ regex: (https?) - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path] action: target_label: __metrics_path__ regex: (.+) - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port] action: target_label: __address__ regex: ([^:]+)(?::\d+)?;(\d+) replacement: $1:$2 - action: labelmap regex: __meta_kubernetes_service_label_(.+) - source_labels: [__meta_kubernetes_namespace] action: target_label: kubernetes_namespace - source_labels: [__meta_kubernetes_service_name] action: target_label: kubernetes_name - job_name: 'kubernetes-services' metrics_path: /probe params: kubernetes_sd_configs: - role: service relabel_configs: - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe] action: keep regex: true - source_labels: [__address__] target_label: __param_target - target_label: __address__ replacement: blackbox-exporter.example.com:9115 - source_labels: [__param_target] target_label: - action: labelmap regex: __meta_kubernetes_service_label_(.+) - source_labels: [__meta_kubernetes_namespace] target_label: kubernetes_namespace - source_labels: [__meta_kubernetes_service_name] target_label: kubernetes_name - job_name: 'kubernetes-ingresses' kubernetes_sd_configs: - role: ingress relabel_configs: - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe] action: keep regex: true - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path] regex: (.+);(.+);(.+) replacement: ${1}://${2}${3} target_label: __param_target - target_label: __address__ replacement: blackbox-exporter.example.com:9115 - source_labels: [__param_target] target_label: - action: labelmap regex: __meta_kubernetes_ingress_label_(.+) - source_labels: [__meta_kubernetes_namespace] target_label: kubernetes_namespace - source_labels: [__meta_kubernetes_ingress_name] target_label: kubernetes_name - job_name: 'kubernetes-pods' kubernetes_sd_configs: - role: pod relabel_configs: - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape] action: keep regex: true - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path] action: target_label: __metrics_path__ regex: (.+) - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port] action: regex: ([^:]+)(?::\d+)?;(\d+) replacement: $1:$2 target_label: __address__ - action: labelmap regex: __meta_kubernetes_pod_label_(.+) - source_labels: [__meta_kubernetes_namespace] action: target_label: kubernetes_namespace - source_labels: [__meta_kubernetes_pod_name] action: target_label: kubernetes_pod_name

3.5 创建持久PVC

apiVersion: v1 kind: PersistentVolumeClaim metadata: namespace: monitoring annotations: volume.beta.kubernetes.io/storage- spec: accessModes: - ReadWriteMany resources: requests: storage: 5Gi

3.6 Prometheus部署

apiVersion: apps/v1beta2 kind: Deployment metadata: labels: namespace: monitoring spec: replicas: 1 selector: matchLabels: app: prometheus-server template: metadata: labels: app: prometheus-server spec: containers: - image: prom/prometheus:v2.14.0 command: - " args: - " - " - " ports: - containerPort: 9090 protocol: TCP volumeMounts: - mountPath: /etc/prometheus/ - mountPath: /prometheus/ serviceAccountName: prometheus imagePullSecrets: - volumes: - configMap: defaultMode: 420 - persistentVolumeClaim: claimName: prometheus-pvc

3.7 创建Prometheus Service

apiVersion: v1 kind: Service metadata: labels: app: prometheus-service namespace: monitoring spec: type: NodePort selector: app: prometheus-server ports: - port: 9090 targetPort: 9090 nodePort: 30001

3.8 确认验证Prometheus

四 部署grafana

4.1 获取部署文件

4.2 创建持久PVC

apiVersion: v1 kind: PersistentVolumeClaim metadata: namespace: monitoring annotations: volume.beta.kubernetes.io/storage- spec: accessModes: - ReadWriteOnce resources: requests: storage: 5Gi

4.3 grafana部署

apiVersion: extensions/v1beta1 kind: Deployment metadata: namespace: monitoring spec: replicas: 1 template: metadata: labels: task: monitoring k8s-app: grafana spec: containers: - image: grafana/grafana:6.5.0 imagePullPolicy: IfNotPresent ports: - containerPort: 3000 protocol: TCP volumeMounts: - mountPath: /var/lib/grafana env: - value: monitoring-influxdb - value: "" - value: " - value: " - value: Admin - value: / readinessProbe: httpGet: port: 3000 volumes: - persistentVolumeClaim: claimName: grafana- nodeSelector: node-role.kubernetes.io/master: " tolerations: - key: " effect: " --- apiVersion: v1 kind: Service metadata: labels: kubernetes.io/cluster-service: 'true' kubernetes.io/ annotations: prometheus.io/scrape: 'true' prometheus.io/tcp-probe: 'true' prometheus.io/tcp-probe-port: '80' namespace: monitoring spec: type: NodePort ports: - port: 80 targetPort: 3000 nodePort: 30002 selector: k8s-app: grafana

4.4 确认验证Prometheus

4.4 grafana配置

- 添加数据源:略

- 创建用户:略

4.5 查看监控

五 日志管理

5.1 获取部署文件

5.2 修改相关源

…… - image: quay-mirror.qiniu.com/fluentd_elasticsearch/elasticsearch:v7.3.2 imagePullPolicy: IfNotPresent ……

…… image: quay-mirror.qiniu.com/fluentd_elasticsearch/fluentd:v2.7.0 imagePullPolicy: IfNotPresent ……

…… image: docker.elastic.co/kibana/kibana-oss:7.3.2 imagePullPolicy: IfNotPresent ……

5.3 创建持久PVC

apiVersion: v1 kind: PersistentVolumeClaim metadata: namespace: kube-system annotations: volume.beta.kubernetes.io/storage- spec: accessModes: - ReadWriteMany resources: requests: storage: 5Gi

apiVersion: v1

kind: ServiceAccount

metadata:

name: elasticsearch-logging

namespace: kube-system

labels:

k8s-app: elasticsearch-logging

addonmanager.kubernetes.io/mode: Reconcile

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: elasticsearch-logging

labels:

k8s-app: elasticsearch-logging

addonmanager.kubernetes.io/mode: Reconcile

rules:

- apiGroups:

- ""

resources:

- "services"

- "namespaces"

- "endpoints"

verbs:

- "get"

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

namespace: kube-system

name: elasticsearch-logging

labels:

k8s-app: elasticsearch-logging

addonmanager.kubernetes.io/mode: Reconcile

subjects:

- kind: ServiceAccount

name: elasticsearch-logging

namespace: kube-system

apiGroup: ""

roleRef:

kind: ClusterRole

name: elasticsearch-logging

apiGroup: ""

---

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: elasticsearch-logging

namespace: kube-system

labels:

k8s-app: elasticsearch-logging

version: v7.3.2

addonmanager.kubernetes.io/mode: Reconcile

spec:

serviceName: elasticsearch-logging

replicas: 1

selector:

matchLabels:

k8s-app: elasticsearch-logging

version: v7.3.2

template:

metadata:

labels:

k8s-app: elasticsearch-logging

version: v7.3.2

spec:

serviceAccountName: elasticsearch-logging

containers:

- image: quay-mirror.qiniu.com/fluentd_elasticsearch/elasticsearch:v7.3.2

name: elasticsearch-logging

imagePullPolicy: IfNotPresent

resources:

limits:

cpu: 1000m

memory: 3Gi

requests:

cpu: 100m

memory: 3Gi

ports:

- containerPort: 9200

name: db

protocol: TCP

- containerPort: 9300

name: transport

protocol: TCP

volumeMounts:

- name: elasticsearch-logging

mountPath: /data

env:

- name: "NAMESPACE"

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumes:

- name: elasticsearch-logging #挂载永久存储PVC

persistentVolumeClaim:

claimName: elasticsearch-pvc

initContainers:

- image: alpine:3.6

command: ["/sbin/sysctl", "-w", "vm.max_map_count=262144"]

name: elasticsearch-logging-init

securityContext:

privileged: true

5.5 部署Elasticsearch SVC

apiVersion: v1

kind: Service

metadata:

name: elasticsearch-logging

namespace: kube-system

labels:

k8s-app: elasticsearch-logging

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/name: "Elasticsearch"

spec:

ports:

- port: 9200

protocol: TCP

targetPort: db

selector:

k8s-app: elasticsearch-logging

5.6 部署fluentd

[root@k8smaster01 fluentd-elasticsearch]# kubectl create -f fluentd-es-ds.yaml #部署fluentd

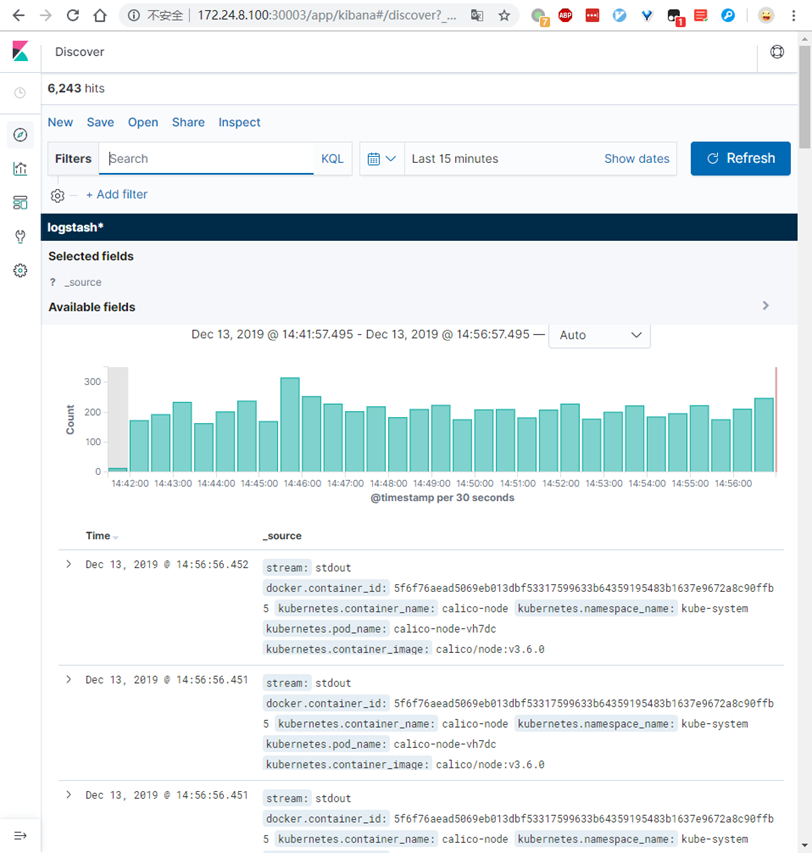

5.7 部署Kibana

apiVersion: apps/v1

kind: Deployment

metadata:

name: kibana-logging

namespace: kube-system

labels:

k8s-app: kibana-logging

addonmanager.kubernetes.io/mode: Reconcile

spec:

replicas: 1

selector:

matchLabels:

k8s-app: kibana-logging

template:

metadata:

labels:

k8s-app: kibana-logging

annotations:

seccomp.security.alpha.kubernetes.io/pod: 'docker/default'

spec:

containers:

- name: kibana-logging

image: docker.elastic.co/kibana/kibana-oss:7.3.2

imagePullPolicy: IfNotPresent

resources:

limits:

cpu: 1000m

requests:

cpu: 100m

env:

- name: ELASTICSEARCH_HOSTS

value: http://elasticsearch-logging:9200

ports:

- containerPort: 5601

name: ui

protocol: TCP

5.8 部署Kibana SVC

apiVersion: v1

kind: Service

metadata:

name: kibana-logging

namespace: kube-system

labels:

k8s-app: kibana-logging

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/name: "Kibana"

spec:

type: NodePort

ports:

- port: 5601

protocol: TCP

nodePort: 30003

targetPort: ui

selector:

k8s-app: kibana-logging

5.9 确认验证

附014.Kubernetes Prometheus+Grafana+EFK+Kibana+Glusterfs整合解决方案的更多相关文章

- 附014.Kubernetes Prometheus+Grafana+EFK+Kibana+Glusterfs整合性方案

一 glusterfs存储集群部署 注意:以下为简略步骤,详情参考<附009.Kubernetes永久存储之GlusterFS独立部署>. 1.1 架构示意 略 1.2 相关规划 主机 I ...

- Kubernetes+Prometheus+Grafana部署笔记

一.基础概念 1.1 基础概念 Kubernetes(通常写成“k8s”)Kubernetes是Google开源的容器集群管理系统.其设计目标是在主机集群之间提供一个能够自动化部署.可拓展.应用容器可 ...

- Kubernetes prometheus+grafana k8s 监控

参考: https://www.cnblogs.com/terrycy/p/10058944.html https://www.cnblogs.com/weiBlog/p/10629966.html ...

- 附024.Kubernetes全系列大总结

Kubernetes全系列总结如下,后期不定期更新.欢迎基于学习.交流目的的转载和分享,禁止任何商业盗用,同时希望能带上原文出处,尊重ITer的成果,也是尊重知识.若发现任何错误或纰漏,留言反馈或右侧 ...

- 基于Docker+Prometheus+Grafana监控SpringBoot健康信息

在微服务体系当中,监控是必不可少的.当系统环境超过指定的阀值以后,需要提醒指定的运维人员或开发人员进行有效的防范,从而降低系统宕机的风险.在CNCF云计算平台中,Prometheus+Grafana是 ...

- 使用 Prometheus + Grafana 对 Kubernetes 进行性能监控的实践

1 什么是 Kubernetes? Kubernetes 是 Google 开源的容器集群管理系统,其管理操作包括部署,调度和节点集群间扩展等. 如下图所示为目前 Kubernetes 的架构图,由 ...

- [转帖]Prometheus+Grafana监控Kubernetes

原博客的位置: https://blog.csdn.net/shenhonglei1234/article/details/80503353 感谢原作者 这里记录一下自己试验过程中遇到的问题: . 自 ...

- 附010.Kubernetes永久存储之GlusterFS超融合部署

一 前期准备 1.1 基础知识 在Kubernetes中,使用GlusterFS文件系统,操作步骤通常是: 创建brick-->创建volume-->创建PV-->创建PVC--&g ...

- [转帖]安装prometheus+grafana监控mysql redis kubernetes等

安装prometheus+grafana监控mysql redis kubernetes等 https://www.cnblogs.com/sfnz/p/6566951.html plug 的模式进行 ...

随机推荐

- mvn相关介绍和命令

1.前言 Maven,发音是[`meivin],"专家"的意思.它是一个很好的项目管理工具,很早就进入了我的必备工具行列,但是这次为了把project1项目完全迁移并应用maven ...

- WordPress快速打造个人博客

前些天用wordpress搭建了现在这个博客,所以总结了一篇文章,讲讲怎么样简单的创建一个博客.开始前这里有篇我搭建时所遇到的问题可以作为参考<WordPress建站注意事项>,首先我们要 ...

- 启动Tomcat报WEB-INF\lib\j2ee.jar jar not loaded异常的解决办法

今天加载工程时突然发现Tomcat报: 2010-7-1 12:11:38 org.apache.catalina.loader.WebappClassLoader validateJarFile 信 ...

- unittest(22)- p2p项目实战(4)-read_config

# 4. read_config.py import configparser class ReadConfig: @staticmethod def get_config(file_path, se ...

- SQL Server 2008R2各个版本,如何查看是否激活,剩余可用日期?

SELECT create_date AS 'SQL Server Installed Date', Expiry_date AS 'SQL Server Expiry Date', DATEDIFF ...

- android 应用程序与服务端交互

http://www.cnblogs.com/freeliver54/archive/2012/06/13/2547765.html 简述了Service的一些基础知识以及Service和Thread ...

- LISTAGG函数

官网进入 该函数作用是可以实现对列值得拼接: 根据官网介绍,可以对列值排序进行拼接,也可以分组拼接 1.1运行结果 1.2运行结果 2运行结果 注意该函数提供的 over( partition by ...

- JMeter接口测试-计数器

前言 在测试注册接口的时候,需要批量注册账号时,每注册一个并且需要随时去修改数据,比较繁琐,除了使用随机函数生成账号,我们还可以使用计数器来进行批量注册. 一:添加配置元件-计数器 二:注册10个账号 ...

- linux学习--2.文件管理的基本命令

文件的基本操作 前言: 看完这篇图文我应该能保证读者在Linux系统下对文件的操作能跟用Windows环境下一样流畅吧,好了下面正文 正文: 基础知识: linux里共有以下几类文件,分别为目录(di ...

- 小白学 Python 数据分析(10):Pandas (九)数据运算

人生苦短,我用 Python 前文传送门: 小白学 Python 数据分析(1):数据分析基础 小白学 Python 数据分析(2):Pandas (一)概述 小白学 Python 数据分析(3):P ...