关于 tf.nn.softmax_cross_entropy_with_logits 及 tf.clip_by_value

In order to train our model, we need to define what it means for the model to be good. Well, actually, in machine learning we typically define what it means for a model to be bad. We call this the cost, or the loss, and it represents how far off our model is from our desired outcome. We try to minimize that error, and the smaller the error margin, the better our model is.

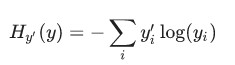

One very common, very nice function to determine the loss of a model is called "cross-entropy." Cross-entropy arises from thinking about information compressing codes in information theory but it winds up being an important idea in lots of areas, from gambling to machine learning. It's defined as:

Where y is our predicted probability distribution, and y′ is the true distribution (the one-hot vector with the digit labels). In some rough sense, the cross-entropy is measuring how inefficient our predictions are for describing the truth. Going into more detail about cross-entropy is beyond the scope of this tutorial, but it's well worthunderstanding.

To implement cross-entropy we need to first add a new placeholder to input the correct answers:

y_ = tf.placeholder(tf.float32, [None, 10])

Then we can implement the cross-entropy function,

cross_entropy = tf.reduce_mean(-tf.reduce_sum(y_ * tf.log(y), reduction_indices=[1]))

First, tf.log computes the logarithm of each element of y. Next, we multiply each element of y_ with the corresponding element of tf.log(y). Then tf.reduce_sum adds the elements in the second dimension of y, due to the reduction_indices=[1] parameter. Finally, tf.reduce_mean computes the mean over all the examples in the batch.

Note that in the source code, we don't use this formulation, because it is numerically unstable. Instead, we apply tf.nn.softmax_cross_entropy_with_logits on the unnormalized logits (e.g., we call softmax_cross_entropy_with_logits on tf.matmul(x, W) + b), because this more numerically stable function internally computes the softmax activation. In your code, consider usingtf.nn.softmax_cross_entropy_with_logits instead.

大意是:如果使用 cross_entropy = tf.reduce_mean(-tf.reduce_sum(y_ * tf.log(y), reduction_indices=[1]))

来计算交叉熵,则需要使用 tf.clip_by_value 来使某些求 log 的值,因为 log 会产生 none (如 log-3 ), 用它来限定不出现none,具体使用方式如下:

cross_entropy = -tf.reduce_sum(y_*tf.log(tf.clip_by_value(y_conv, 1e-10, 1.0)))但后来有人用了一个更好的方法来避免none:

cross_entropy = -tf.reduce_sum(y_*tf.log(y_conv + 1e-10))具体参见 http://stackoverflow.com/questions/33712178/tensorflow-nan-bug 的讨论。

而如果直接用 tf.nn.softmax_cross_entropy_with_logits 则你再没有上面的后顾之忧了,它自动解决了上面的问题。

关于 tf.nn.softmax_cross_entropy_with_logits 及 tf.clip_by_value的更多相关文章

- 【TensorFlow】tf.nn.softmax_cross_entropy_with_logits的用法

在计算loss的时候,最常见的一句话就是 tf.nn.softmax_cross_entropy_with_logits ,那么它到底是怎么做的呢? 首先明确一点,loss是代价值,也就是我们要最小化 ...

- 深度学习原理与框架-Tensorflow卷积神经网络-卷积神经网络mnist分类 1.tf.nn.conv2d(卷积操作) 2.tf.nn.max_pool(最大池化操作) 3.tf.nn.dropout(执行dropout操作) 4.tf.nn.softmax_cross_entropy_with_logits(交叉熵损失) 5.tf.truncated_normal(两个标准差内的正态分布)

1. tf.nn.conv2d(x, w, strides=[1, 1, 1, 1], padding='SAME') # 对数据进行卷积操作 参数说明:x表示输入数据,w表示卷积核, stride ...

- [TensorFlow] tf.nn.softmax_cross_entropy_with_logits的用法

在计算loss的时候,最常见的一句话就是tf.nn.softmax_cross_entropy_with_logits,那么它到底是怎么做的呢? 首先明确一点,loss是代价值,也就是我们要最小化的值 ...

- tf.nn.softmax_cross_entropy_with_logits的用法

http://blog.csdn.net/mao_xiao_feng/article/details/53382790 计算loss的时候,最常见的一句话就是tf.nn.softmax_cross_e ...

- tf.nn.softmax & tf.nn.reduce_sum & tf.nn.softmax_cross_entropy_with_logits

tf.nn.softmax softmax是神经网络的最后一层将实数空间映射到概率空间的常用方法,公式如下: \[ softmax(x)_i=\frac{exp(x_i)}{\sum_jexp(x_j ...

- tf.nn.softmax_cross_entropy_with_logits()函数的使用方法

import tensorflow as tf labels = [[0.2,0.3,0.5], [0.1,0.6,0.3]]logits = [[2,0.5,1], [0.1,1,3]] a=tf. ...

- 1、求loss:tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits, labels, name=None))

1.求loss: tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits, labels, name=None)) 第一个参数log ...

- tf.nn.softmax_cross_entropy_with_logits 分类

tf.nn.softmax_cross_entropy_with_logits(logits, labels, name=None) 参数: logits:就是神经网络最后一层的输出,如果有batch ...

- 深度学习原理与框架-图像补全(原理与代码) 1.tf.nn.moments(求平均值和标准差) 2.tf.control_dependencies(先执行内部操作) 3.tf.cond(判别执行前或后函数) 4.tf.nn.atrous_conv2d 5.tf.nn.conv2d_transpose(反卷积) 7.tf.train.get_checkpoint_state(判断sess是否存在

1. tf.nn.moments(x, axes=[0, 1, 2]) # 对前三个维度求平均值和标准差,结果为最后一个维度,即对每个feature_map求平均值和标准差 参数说明:x为输入的fe ...

随机推荐

- DMA—直接存储区访问

本章参考资料:< STM32F4xx 中文参考手册> DMA 控制器章节.学习本章时,配合< STM32F4xx 中文参考手册> DMA 控制器章节一起阅读,效果会更佳,特别是 ...

- ARM汇编(2)(指令)

一,ARM汇编语言立即数的表示方法 十六进制:前缀:0x 十进制:无前缀 二制:前缀:0b 二,常用的ARM指令(标准的ARM语法,GNU的ARM语法) 1.@M开头系列 MOV R0, #12 @R ...

- BlockingQueue(阻塞队列)分析

如果读者还有一点印象,我们在实现线程池时,用了队列这种数据结构来存储接收到的任务,在多线程环境中阻塞队列是一种非常有用的队列,在介绍BlockingQueue之前,我们先解释一下Queue接口. Qu ...

- CFontDialog学习

void CMfcFontDlgDlg::OnBtnFont() { // Show the font dialog with all the default settings. CFontDialo ...

- push certificate

developer_identity.cer <= download from Applemykey.p12 <= Your private key openssl x509 -in de ...

- CSS:层叠样式表的冲突处理

前言 重叠样式表的冲突是通过重叠过程排序,最终确定文档的显示方式的,也就是是说通过重叠排序来处理冲突问题.这过程起决定性作用的是选择器及其相关申明的特殊性,以及继承机制. 基本流程 1.找出所有相关规 ...

- AWS那些需要注意的问题

自己走过的坑,才知道 1.1 EC2实例限制 EC2实例在申请超过20台后,会有数量限制. 解决方法: AWS控制台需要提工单,进行申请解除限制.把EC2数量提高到50台或者100台 此工单审核大概要 ...

- js封装日历控件

最终效果 代码实现 <script> $(function () { $(".j-calendar").calendar({ date: '2017-08-03', c ...

- Arcgis for Js之GeometryService实现測量距离和面积

距离和面积的測量时GIS常见的功能.在本节,讲述的是通过GeometryService实现測量面积和距离.先看看实现后的效果: watermark/2/text/aHR0cDovL2Jsb2cuY3N ...

- linux DNS服务

DNS服务器的安装和配置 首先在终端输入命令#vi /etc/apt/sources.list 输入更新源 # kali repos installed by TARDIS deb http://h ...