Coursera Deep Learning 2 Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization - week2, Optimization algorithms

Gradient descent

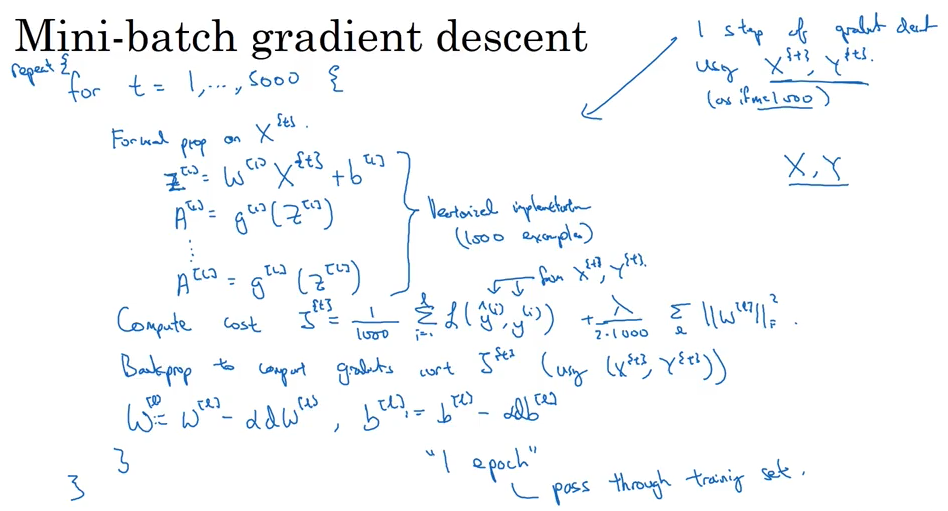

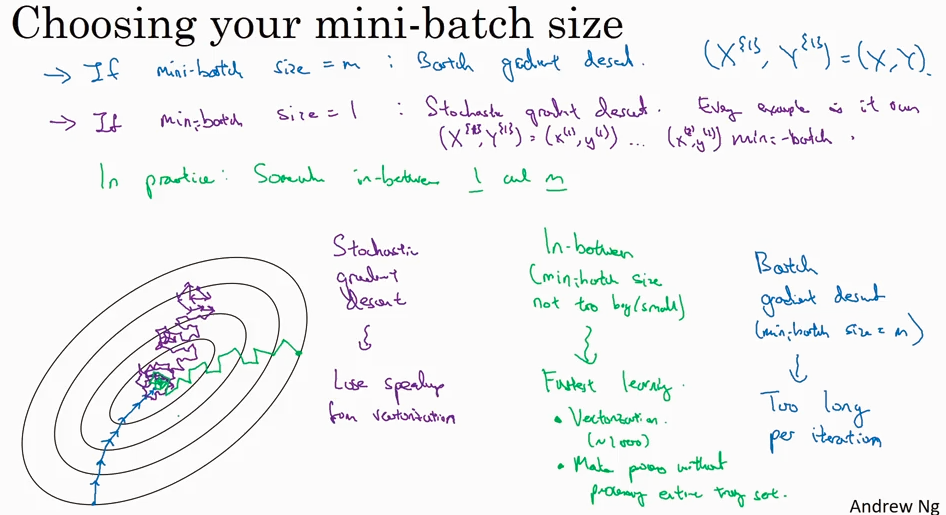

Batch gradient descent, mini-batch gradient descent, stochastic gradient descent

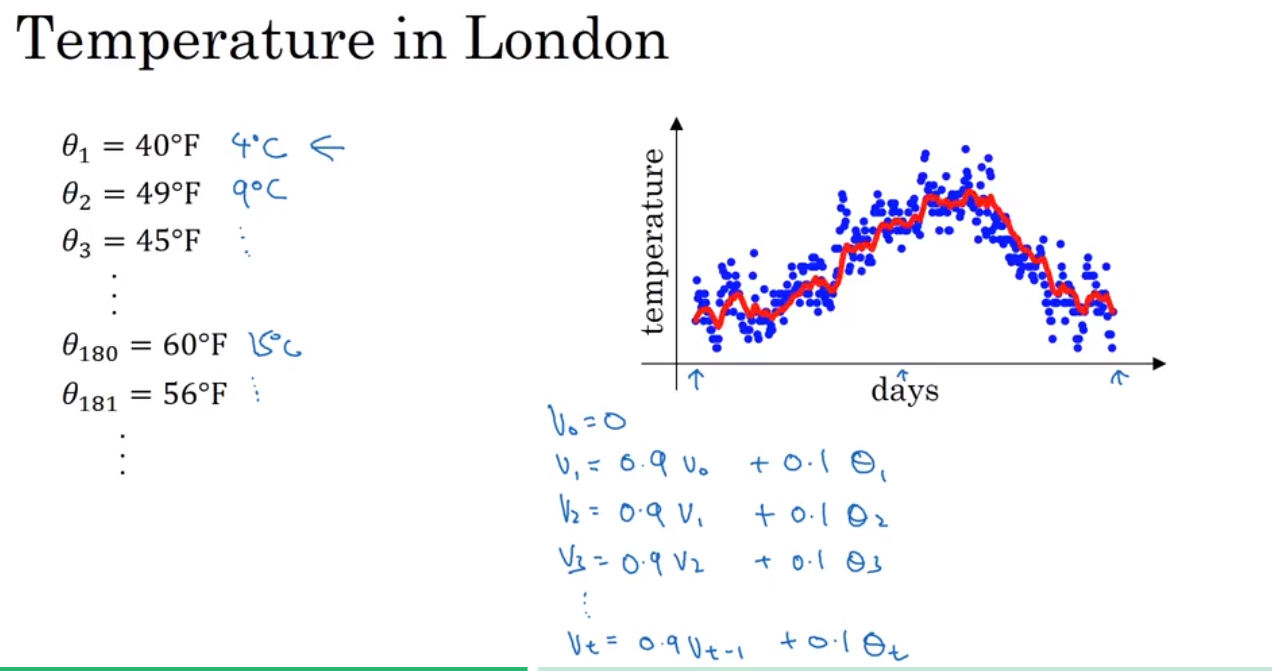

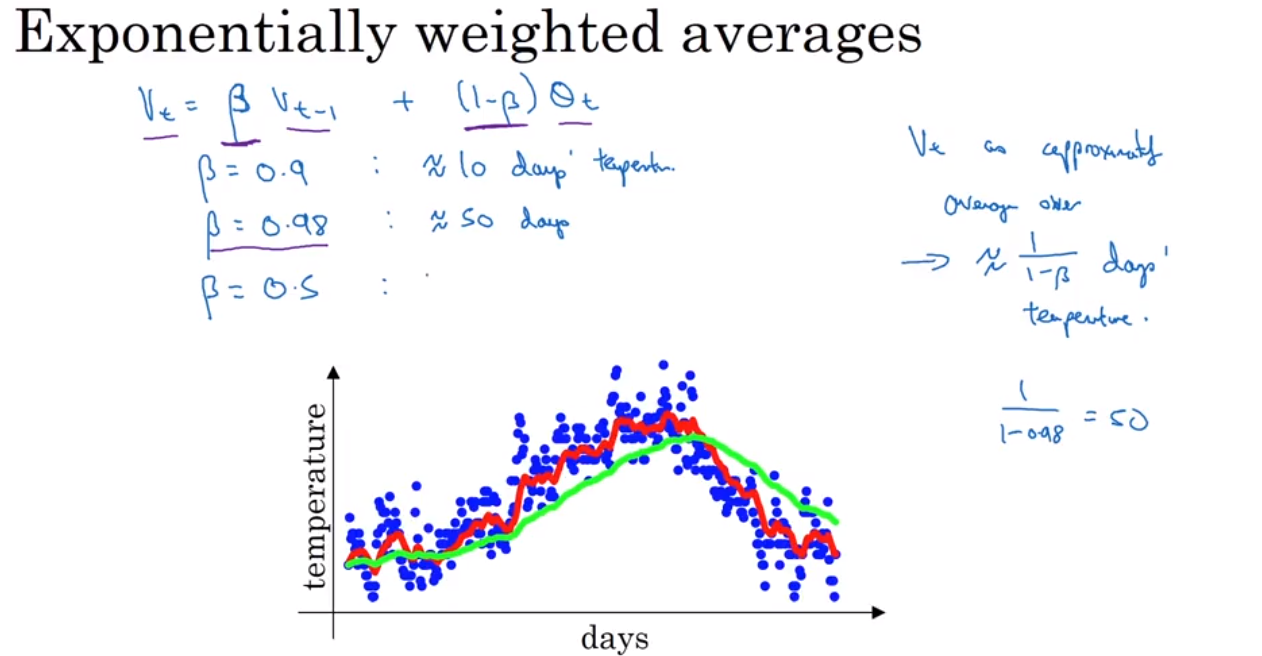
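As a rough illustration of how the three variants relate, the sketch below shuffles a dataset and cuts it into mini-batches. The helper name `make_mini_batches` and its parameters are hypothetical, not taken from the course code. With `batch_size` equal to the dataset size this reduces to batch gradient descent, and with `batch_size=1` it reduces to stochastic gradient descent.

```python
import random


def make_mini_batches(examples, batch_size, seed=0):
    """Shuffle the examples and split them into consecutive mini-batches.

    Hypothetical helper for illustration; the last batch may be smaller
    when the dataset size is not a multiple of batch_size.
    """
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    return [shuffled[i:i + batch_size]
            for i in range(0, len(shuffled), batch_size)]


# Ten toy "examples" split into batches of four.
batches = make_mini_batches(range(10), batch_size=4)
print([len(b) for b in batches])
```

One pass over all the batches is one epoch, and the parameters are updated once per batch rather than once per epoch.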

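There are many optimization algorithms that perform better than plain gradient descent. Before looking at them, you need to understand the concept of exponentially weighted averages.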

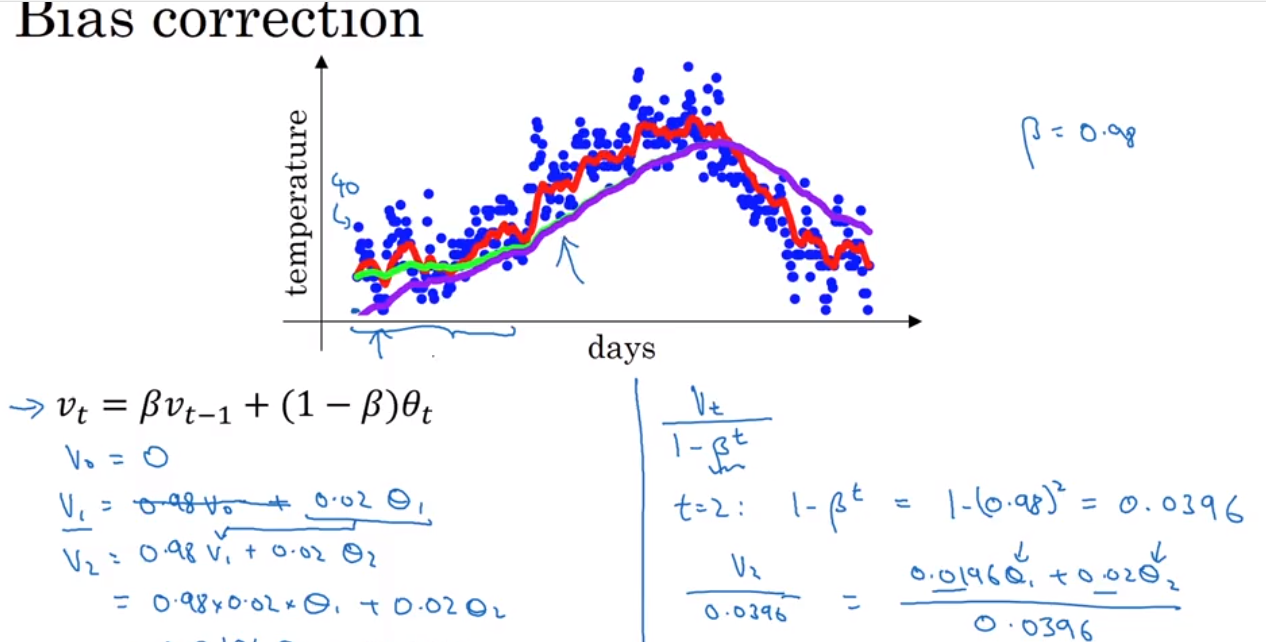

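An exponentially weighted average is a way of computing a running average that needs very little storage and memory, at the cost of some precision. This leads to the concept of bias correction, which makes the exponentially weighted average more accurate, especially during the first few steps.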

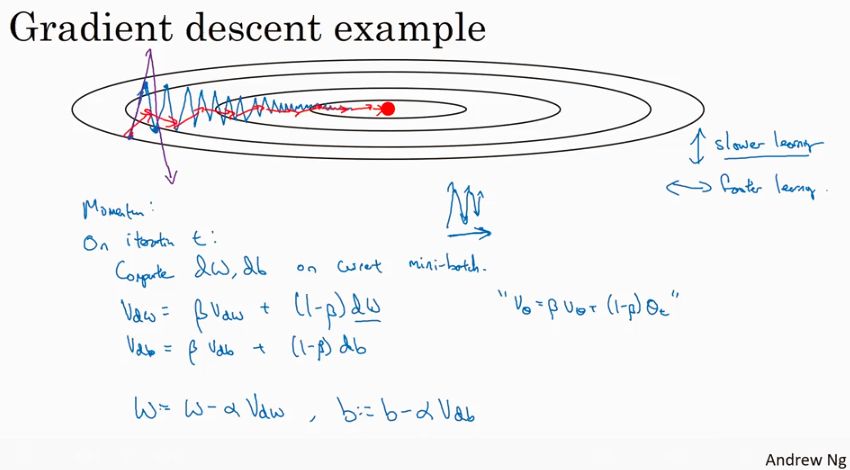

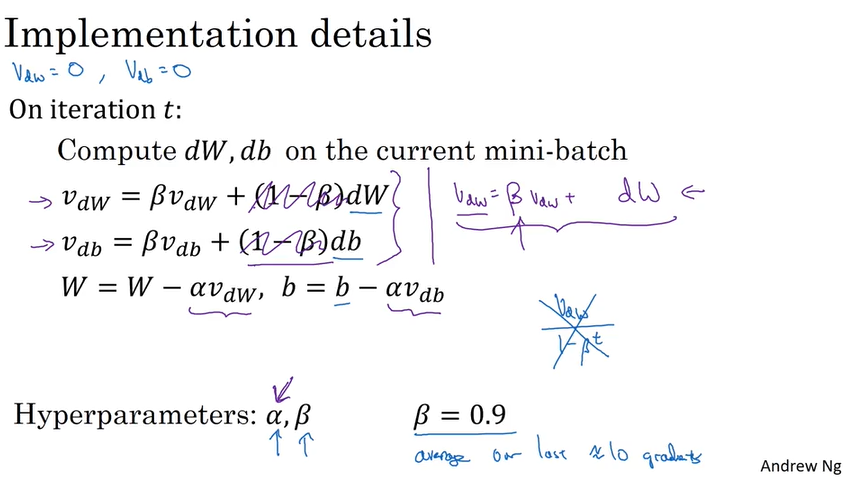
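A minimal sketch of the idea, assuming the usual recurrence v_t = beta * v_{t-1} + (1 - beta) * x_t with v_0 = 0 and the correction v_t / (1 - beta^t). The function name `ewa` is made up for this note. Only one running value is stored, which is why the method is so cheap in memory.

```python
def ewa(values, beta=0.9, bias_correction=True):
    """Exponentially weighted average of a sequence, optionally bias-corrected."""
    v = 0.0
    averaged = []
    for t, x in enumerate(values, start=1):
        # Keep a single running value instead of a window of past samples.
        v = beta * v + (1 - beta) * x
        # Starting from v = 0 biases early estimates toward zero;
        # dividing by (1 - beta**t) compensates for that.
        averaged.append(v / (1 - beta ** t) if bias_correction else v)
    return averaged


constant = [10.0] * 5
print(ewa(constant, bias_correction=False))  # early values are far below 10
print(ewa(constant, bias_correction=True))   # values stay close to 10
```

As t grows, beta^t goes to zero, so the corrected and uncorrected averages end up agreeing.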

Momentum (also called gradient descent with momentum)

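Plain gradient descent has the problem shown in the figure below: each iteration bounces back and forth instead of pointing directly at the optimum, and this is especially noticeable when feature scaling has not been done. This motivates a modified algorithm: gradient descent with momentum.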

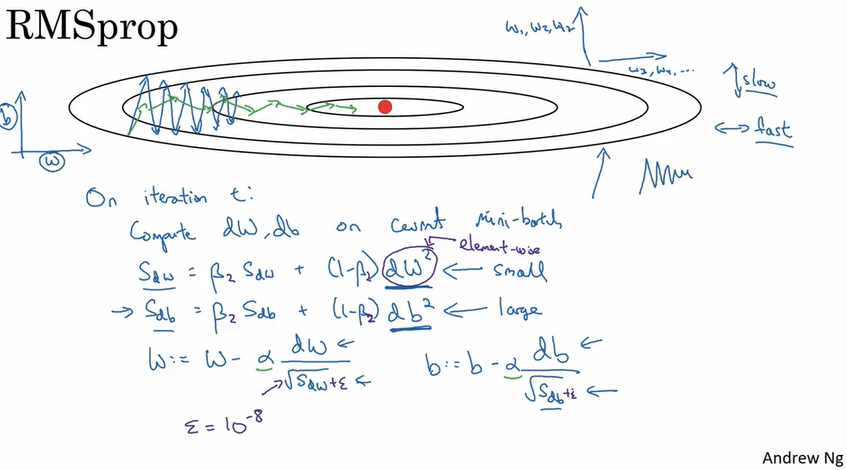
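The update can be sketched as below, assuming the form v = beta * v + (1 - beta) * grad followed by w = w - lr * v. The toy loss, the function names, and the hyperparameter values are all assumptions chosen for illustration, with the loss made much steeper in one direction to mimic unscaled features.

```python
def momentum_step(w, grad, v, lr=0.05, beta=0.9):
    """One update of gradient descent with momentum on a list of parameters."""
    # v is an exponentially weighted average of past gradients.
    v = [beta * vi + (1 - beta) * gi for vi, gi in zip(v, grad)]
    # Step along the averaged direction instead of the raw gradient.
    w = [wi - lr * vi for wi, vi in zip(w, v)]
    return w, v


def loss(w):
    # Toy elongated bowl: much steeper along w[1] than along w[0].
    return 0.5 * (w[0] ** 2 + 25.0 * w[1] ** 2)


def loss_grad(w):
    return [w[0], 25.0 * w[1]]


w, v = [1.0, 1.0], [0.0, 0.0]
for _ in range(200):
    w, v = momentum_step(w, loss_grad(w), v)
print(loss(w))
```

Averaging the gradients cancels much of the back-and-forth component while the consistent component toward the optimum accumulates.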

RMSprop

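The goal is the same as for Momentum above: make each iteration point toward the optimum as much as possible rather than bounce back and forth. The implementation is shown below. The benefits of RMSprop are faster convergence and the ability to use a larger learning rate.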

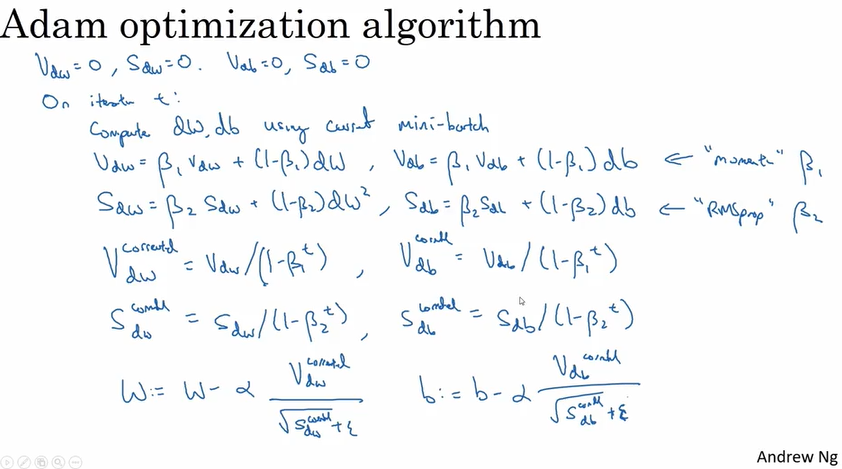

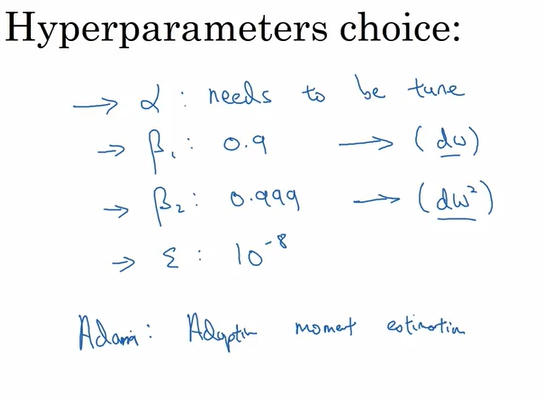
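A minimal sketch of one RMSprop update, assuming the form s = beta * s + (1 - beta) * grad^2 and w = w - lr * grad / (sqrt(s) + eps). The function name and default values are assumptions for illustration. The example uses two gradient components of very different size to show how the division evens out the step.

```python
import math


def rmsprop_step(w, grad, s, lr=0.01, beta=0.9, eps=1e-8):
    """One RMSprop update on a list of parameters."""
    # s tracks an exponentially weighted average of squared gradients.
    s = [beta * si + (1 - beta) * gi * gi for si, gi in zip(s, grad)]
    # Dividing by sqrt(s) shrinks steps in directions with large gradients;
    # eps guards against division by zero.
    w = [wi - lr * gi / (math.sqrt(si) + eps)
         for wi, gi, si in zip(w, grad, s)]
    return w, s


w_old = [0.0, 0.0]
w_new, s = rmsprop_step(w_old, grad=[1.0, 100.0], s=[0.0, 0.0])
steps = [old - new for old, new in zip(w_old, w_new)]
print(steps)
```

Both coordinates move by nearly the same amount even though one gradient is 100 times larger, which is what damps the oscillation in the steep direction and leaves room for a larger learning rate.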

Adam optimization algorithm:

Combines the Momentum and RMSprop algorithms. Adam stands for Adaptive moment estimation.

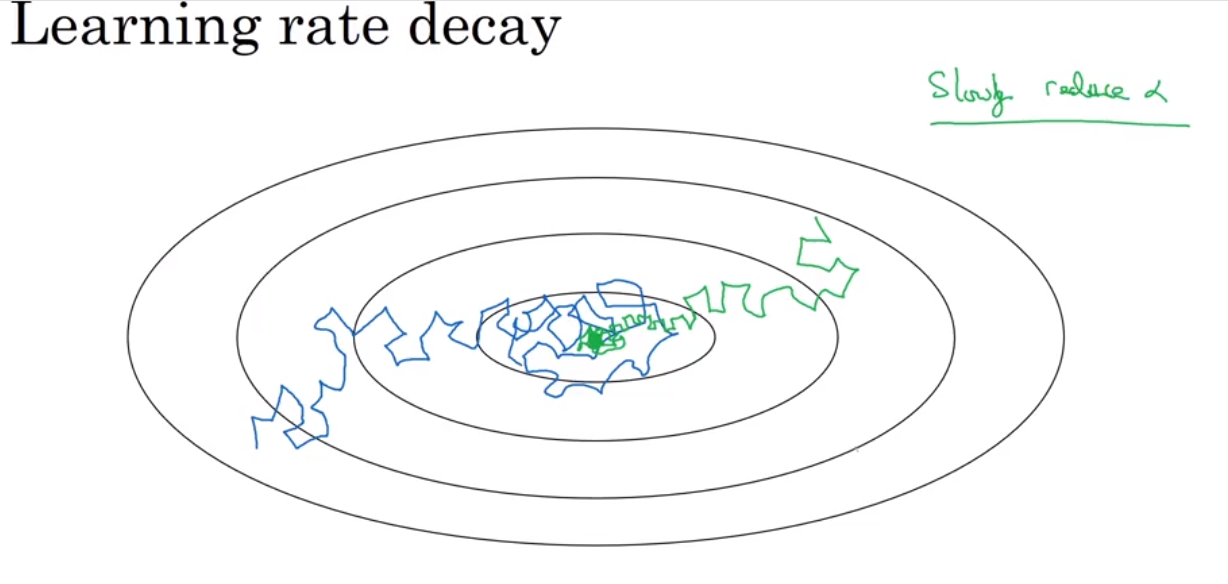

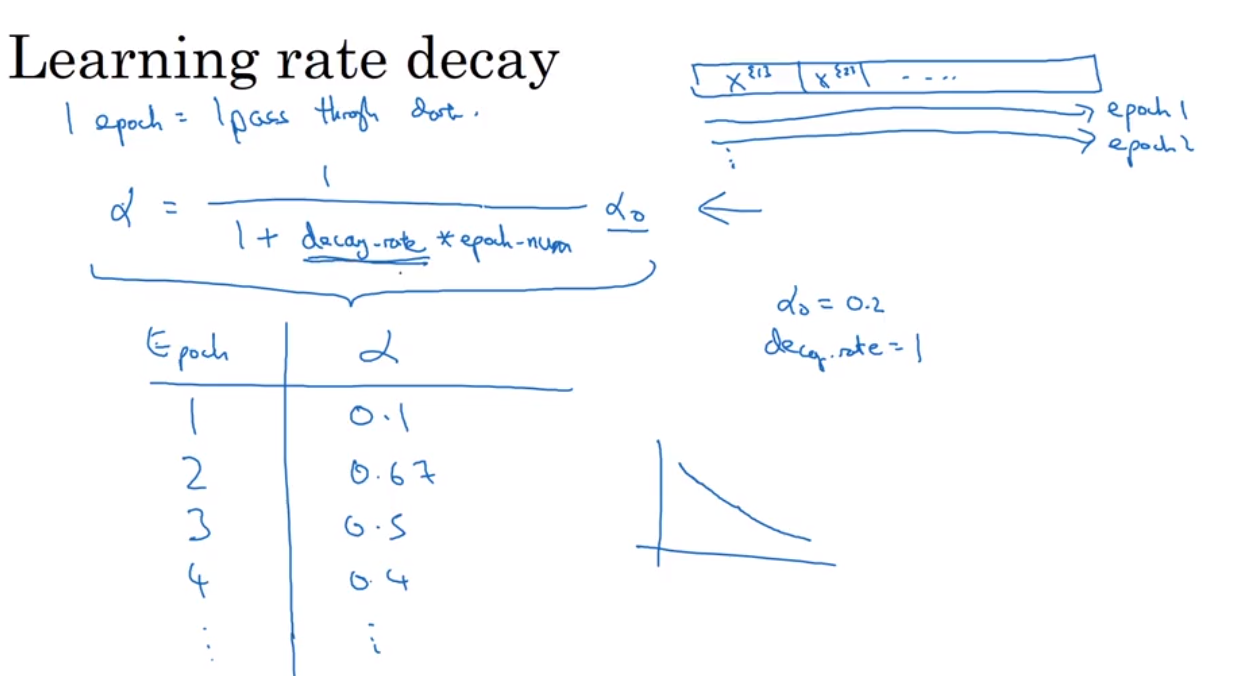

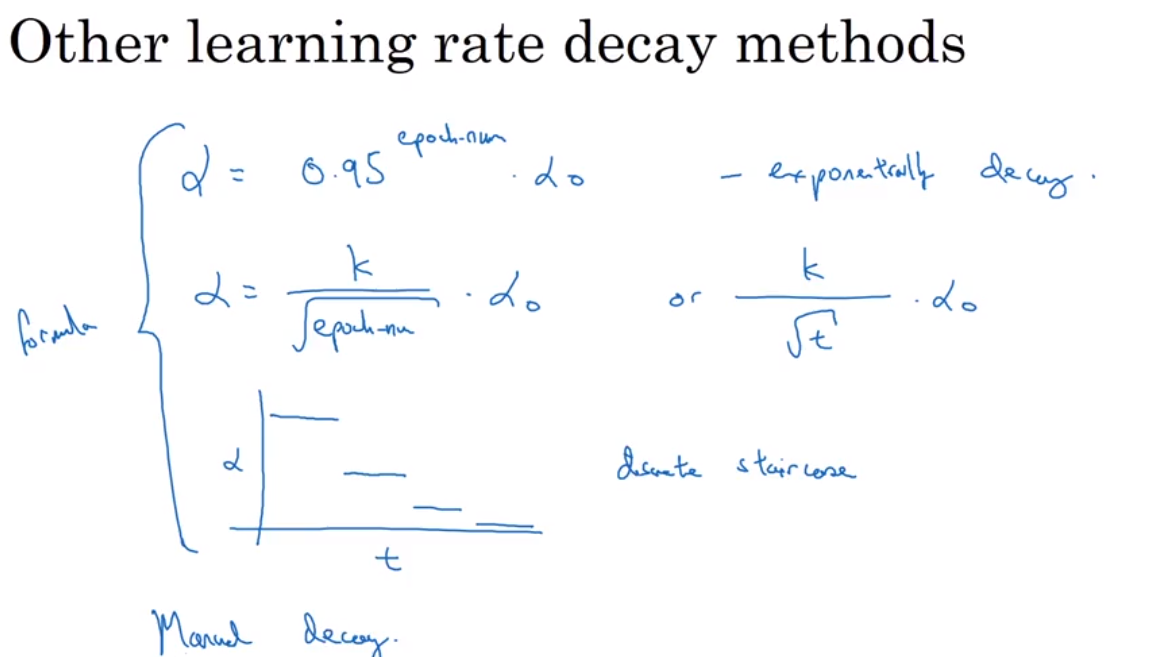
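Put together, one Adam update might look like the sketch below: a momentum-style average of gradients, an RMSprop-style average of squared gradients, bias correction on both, then the combined step. The function name is hypothetical, and the defaults (beta1 = 0.9, beta2 = 0.999, eps = 1e-8) are the commonly quoted ones rather than values confirmed by these notes.

```python
import math


def adam_step(w, grad, v, s, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update on a list of parameters; t is the step count, from 1."""
    # Momentum part: average of gradients.
    v = [beta1 * vi + (1 - beta1) * gi for vi, gi in zip(v, grad)]
    # RMSprop part: average of squared gradients.
    s = [beta2 * si + (1 - beta2) * gi * gi for si, gi in zip(s, grad)]
    # Bias correction for both averages.
    v_hat = [vi / (1 - beta1 ** t) for vi in v]
    s_hat = [si / (1 - beta2 ** t) for si in s]
    w = [wi - lr * vh / (math.sqrt(sh) + eps)
         for wi, vh, sh in zip(w, v_hat, s_hat)]
    return w, v, s


w, v, s = adam_step([1.0, -2.0], grad=[0.5, -3.0],
                    v=[0.0, 0.0], s=[0.0, 0.0], t=1)
print(w)
```

On the very first step the corrected averages equal the gradient and its square, so each parameter moves by roughly `lr` in the direction opposite to its gradient.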

Learning rate decay

Why? To reduce oscillation around the optimum.

How can it be implemented?

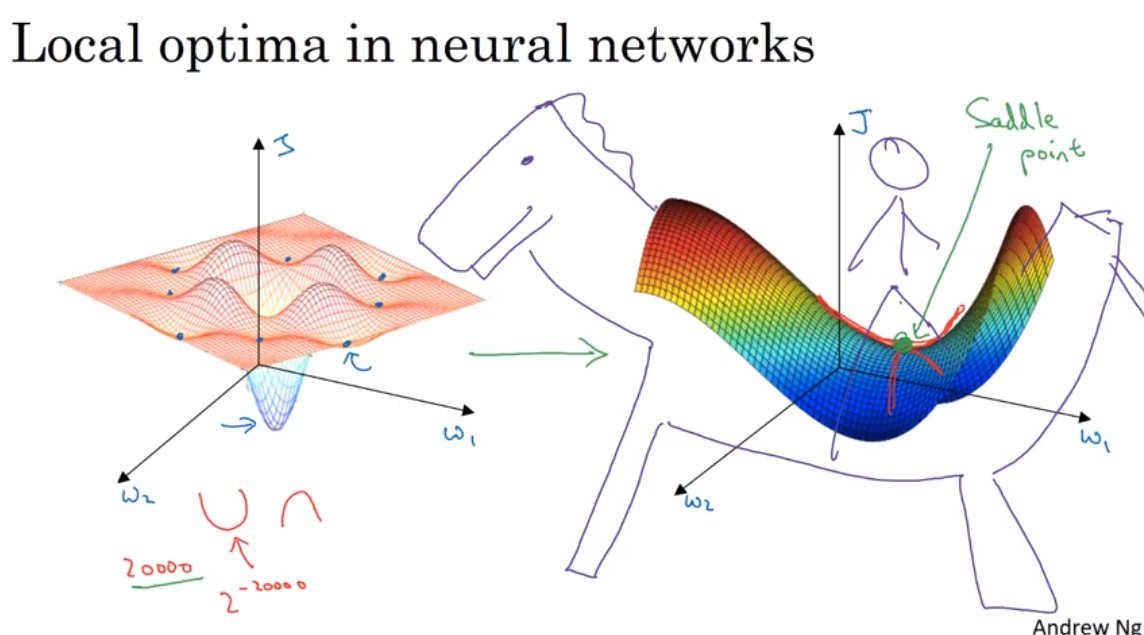

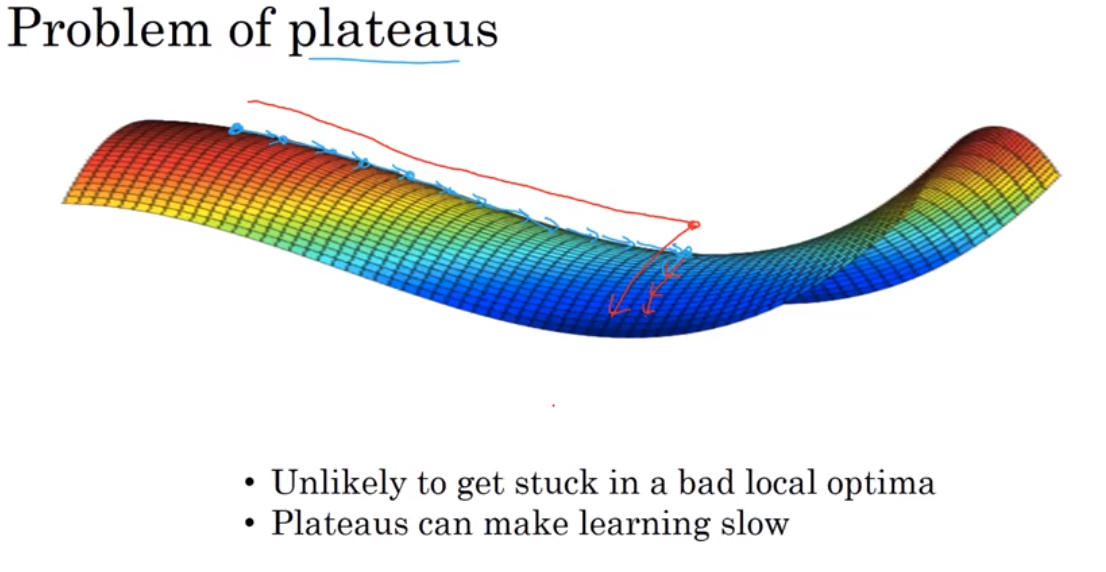
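A few commonly used schedules can be sketched as follows. The function and parameter names are made up for this note, and the formulas are the standard ones (inverse time decay, exponential decay, and decay with the square root of the epoch number), which may not match the figures above term for term.

```python
import math


def inverse_time_decay(lr0, decay_rate, epoch):
    # lr = lr0 / (1 + decay_rate * epoch)
    return lr0 / (1 + decay_rate * epoch)


def exponential_decay(lr0, base, epoch):
    # lr = lr0 * base**epoch, with 0 < base < 1 (for example 0.95)
    return lr0 * base ** epoch


def sqrt_decay(lr0, k, epoch):
    # lr = lr0 * k / sqrt(epoch); only defined for epoch >= 1
    return lr0 * k / math.sqrt(epoch)


for epoch in range(1, 5):
    print(epoch, inverse_time_decay(0.2, 1.0, epoch))
```

All of them start with large steps and shrink the step size as training goes on, so the later updates stay in a tighter region around the optimum.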

Local optima and saddle point

In large neural networks, saddle points are probably more common than local optima.
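To make the difference concrete, here is a toy sketch using f(x, y) = x^2 - y^2, a function chosen for this note rather than taken from the course. Its gradient is zero at the origin, but the origin is a minimum only along x and a maximum along y, so it is a saddle point and not a local optimum.

```python
def saddle_grad(x, y):
    # Gradient of f(x, y) = x**2 - y**2.
    return 2.0 * x, -2.0 * y


# Start almost exactly on the x axis, very close to the saddle in y.
x, y = 1.0, 1e-6
for _ in range(50):
    gx, gy = saddle_grad(x, y)
    x -= 0.1 * gx
    y -= 0.1 * gy
print(x, y)
```

Plain gradient descent is pulled toward the saddle along x but slowly drifts away along y, which hints at why saddle points and the plateaus around them tend to slow training down rather than trap it.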

Ref:

Coursera, Deep Learning, Andrew Ng
