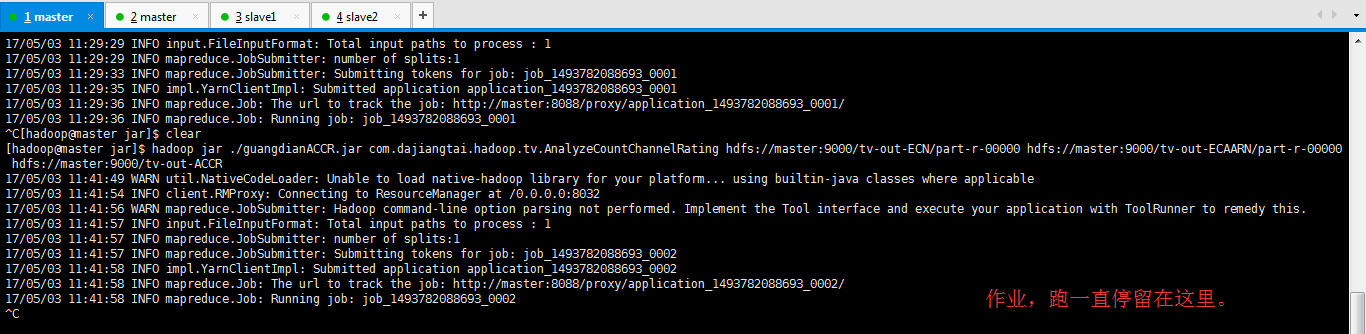

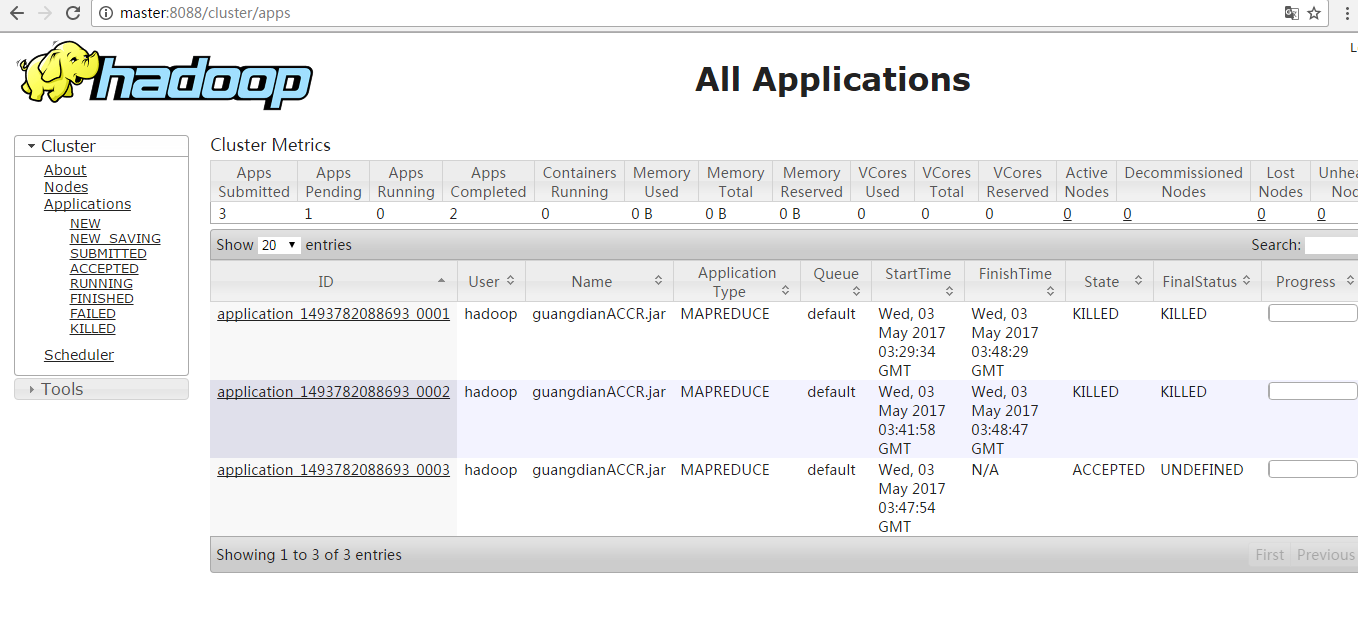

hadoop job -kill and yarn application -kill (job hangs, job submitted more than once, or a MapReduce task stuck at "running job")

Problem details

Solution

[hadoop@master ~]$ hadoop job -kill job_1493782088693_0001

DEPRECATED: Use of this script to execute mapred command is deprecated.

Instead use the mapred command for it.

// :: WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

// :: INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:

// :: INFO impl.YarnClientImpl: Killed application application_1493782088693_0001

Killed job job_1493782088693_0001

[hadoop@master ~]$ hadoop job -kill job_1493782088693_0002

DEPRECATED: Use of this script to execute mapred command is deprecated.

Instead use the mapred command for it.

// :: WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

// :: INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:

// :: INFO impl.YarnClientImpl: Killed application application_1493782088693_0001

Killed job job_1493782088693_0002

[hadoop@master ~]$ hadoop job -kill job_1493782088693_0003

DEPRECATED: Use of this script to execute mapred command is deprecated.

Instead use the mapred command for it.

// :: WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

// :: INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:

// :: INFO impl.YarnClientImpl: Killed application application_1493782088693_0001

Killed job job_1493782088693_0003

Sometimes killing the jobs this way has no effect, and you have to kill the applications again through YARN:

[hadoop@master ~]$ yarn application -kill application_1493782088693_0001

// :: INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:

// :: WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Application application_1493782088693_0001 has already finished

[hadoop@master ~]$ yarn application -kill application_1493782088693_0002

// :: INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:

// :: WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Application application_1493782088693_0002 has already finished

[hadoop@master ~]$ yarn application -kill application_1493782088693_0003

// :: INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:

// :: WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Application application_1493782088693_0003 has already finished

[hadoop@master ~]$
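The two kill steps above can be combined into one helper. This is only a minimal sketch: `job_to_app` and `kill_job` are hypothetical names, not Hadoop commands, and it assumes `mapred` and `yarn` are on the PATH. It relies on the fact, visible in the transcripts above, that a MapReduce job ID and its YARN application ID share the same numeric suffix. It uses `mapred job -kill`, the replacement that the deprecation notice recommends.

```shell
#!/usr/bin/env bash
# Sketch: kill a MapReduce job, then kill the backing YARN application as well.
# Assumption: `mapred` and `yarn` are on PATH, as in the session above.

# job_1493782088693_0001 maps to application_1493782088693_0001:
# only the prefix differs.
job_to_app() {
  local job_id="$1"
  case "$job_id" in
    job_*) printf 'application_%s\n' "${job_id#job_}" ;;
    *)     printf 'not a job ID: %s\n' "$job_id" >&2; return 1 ;;
  esac
}

# kill_job is a hypothetical helper, not part of Hadoop.
kill_job() {
  local job_id="$1" app_id
  app_id="$(job_to_app "$job_id")" || return 1
  # Non-deprecated form of `hadoop job -kill`.
  mapred job -kill "$job_id"
  # Follow up through YARN in case the first kill did not take effect.
  yarn application -kill "$app_id"
}

# Usage (not executed here): kill_job job_1493782088693_0001
```

If the application has already finished, the second command simply reports that, as in the output above.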

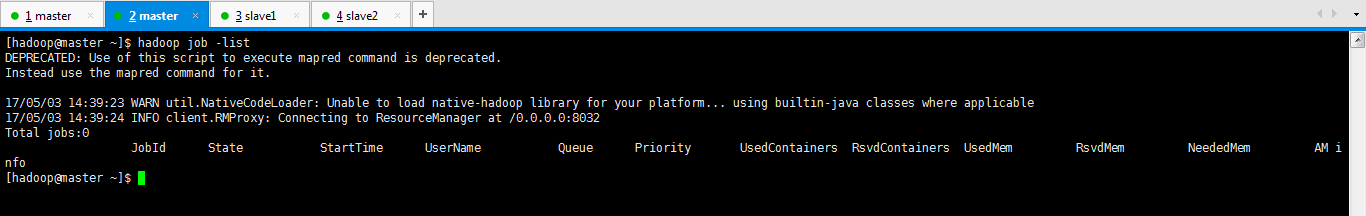

[hadoop@master ~]$ hadoop job -list

DEPRECATED: Use of this script to execute mapred command is deprecated.

Instead use the mapred command for it.

// :: WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

// :: INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:

Total jobs:

JobId State StartTime UserName Queue Priority UsedContainers RsvdContainers UsedMem RsvdMem NeededMem AM info

[hadoop@master ~]$
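The same check can be made on the YARN side. The sketch below lists the application IDs that are still running or waiting to run. `extract_app_ids` and `list_active_apps` are hypothetical helper names, and the parsing assumes that each application row printed by `yarn application -list` starts with its application ID, so treat it as illustrative rather than definitive.

```shell
#!/usr/bin/env bash
# Sketch: print the IDs of applications that YARN still considers active.
# Assumption: each data row of `yarn application -list` begins with the ID.

# Keep only rows whose first field looks like an application ID;
# header and log lines are dropped.
extract_app_ids() {
  awk '$1 ~ /^application_[0-9]+_[0-9]+$/ { print $1 }'
}

# list_active_apps is a hypothetical helper, not part of Hadoop.
list_active_apps() {
  yarn application -list -appStates RUNNING,ACCEPTED | extract_app_ids
}

# Usage (not executed here): list_active_apps
```

An empty result corresponds to the empty job table shown above.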

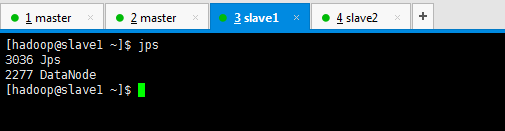

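Also check whether a daemon process has disappeared.

A process on slave1 or slave2 may have exited on its own. Note that this problem is easy to overlook.

Stop the cluster, then start it again.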
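One way to spot a vanished daemon is to run `jps` on each worker and compare the result with the daemons you expect. The sketch below makes several assumptions: passwordless SSH to the hosts slave1 and slave2, `jps` available on the remote PATH, and workers that run DataNode and NodeManager. `missing_daemons` and `check_node` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: report which expected Hadoop daemons are absent from jps output.
# Assumptions: workers run DataNode and NodeManager; passwordless ssh works.

# Reads jps output ("<pid> <name>" per line) on stdin and prints every
# expected daemon name (given as arguments) that does not appear in it.
missing_daemons() {
  local jps_output name
  jps_output="$(cat)"
  for name in "$@"; do
    if ! printf '%s\n' "$jps_output" |
         awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      printf '%s\n' "$name"
    fi
  done
}

# check_node is a hypothetical helper, not part of Hadoop.
check_node() {
  local host="$1"
  ssh "$host" jps | missing_daemons DataNode NodeManager
}

# Usage (not executed here):
#   for h in slave1 slave2; do echo "$h: $(check_node "$h")"; done
```

Any name printed for a host is a daemon that is no longer running there.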

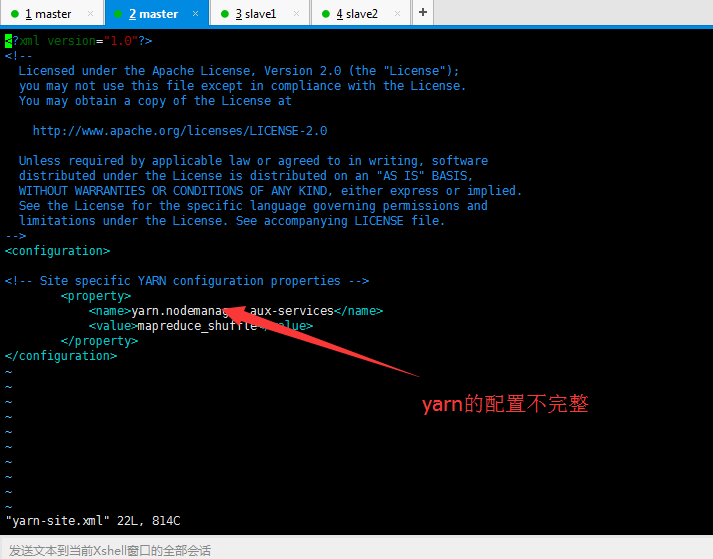

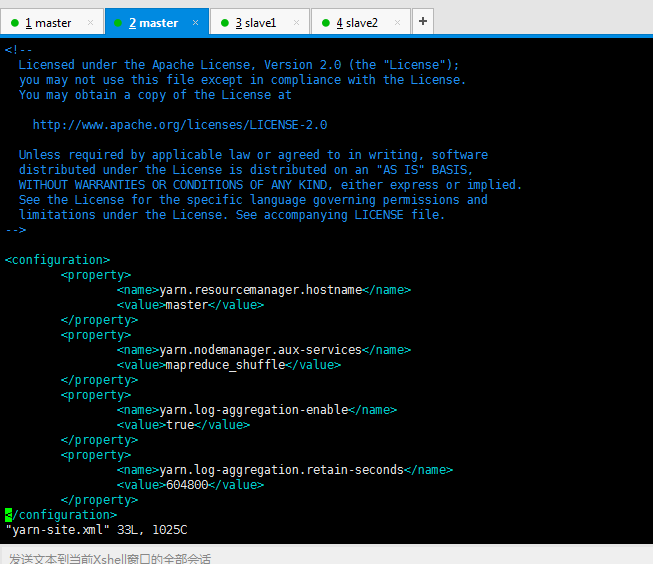

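If the problem still occurs, change the configuration.

For an explanation of the parameters configured here, see

Hadoop YARN配置参数剖析(2)—权限与日志聚集相关参数 (Hadoop YARN Configuration Parameters Explained (2): Permission and Log Aggregation Parameters)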

Note that this must be done on master, slave1, and slave2. Then run [hadoop@master hadoop-2.6.0]$ sbin/stop-all.sh

followed by [hadoop@master hadoop-2.6.0]$ sbin/start-all.sh.
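In Hadoop 2.x the all-in-one scripts report themselves as deprecated in favor of the separate HDFS and YARN scripts. A restart using those scripts could look like the sketch below. `restart_cluster` and the `RUN` dry-run variable are hypothetical, and the installation directory is passed in as an argument rather than assumed.

```shell
#!/usr/bin/env bash
# Sketch: restart HDFS and YARN with the per-service scripts.
# Set RUN=echo to print the commands instead of executing them.

# restart_cluster is a hypothetical helper, not part of Hadoop.
restart_cluster() {
  local hadoop_home="${1:?usage: restart_cluster HADOOP_HOME}"
  local run="${RUN:-}"
  # Stop YARN before HDFS, then bring them back in the reverse order.
  $run "$hadoop_home/sbin/stop-yarn.sh"
  $run "$hadoop_home/sbin/stop-dfs.sh"
  $run "$hadoop_home/sbin/start-dfs.sh"
  $run "$hadoop_home/sbin/start-yarn.sh"
}

# Usage (not executed here), run on master from the hadoop-2.6.0 directory:
#   restart_cluster "$PWD"
```

Running it with `RUN=echo` first shows exactly which scripts would be called.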

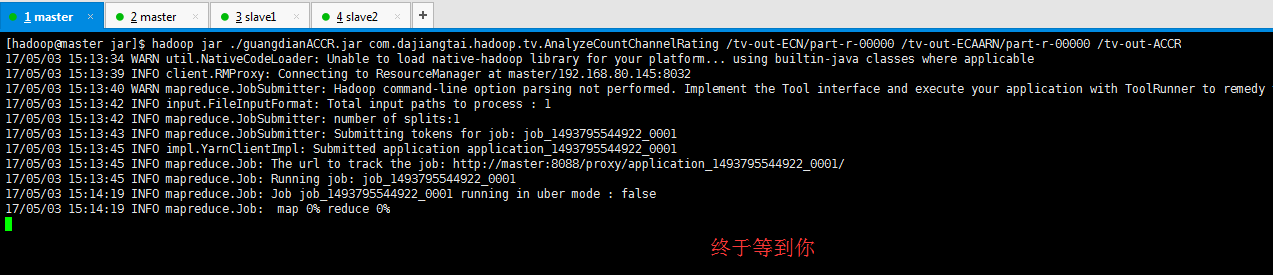

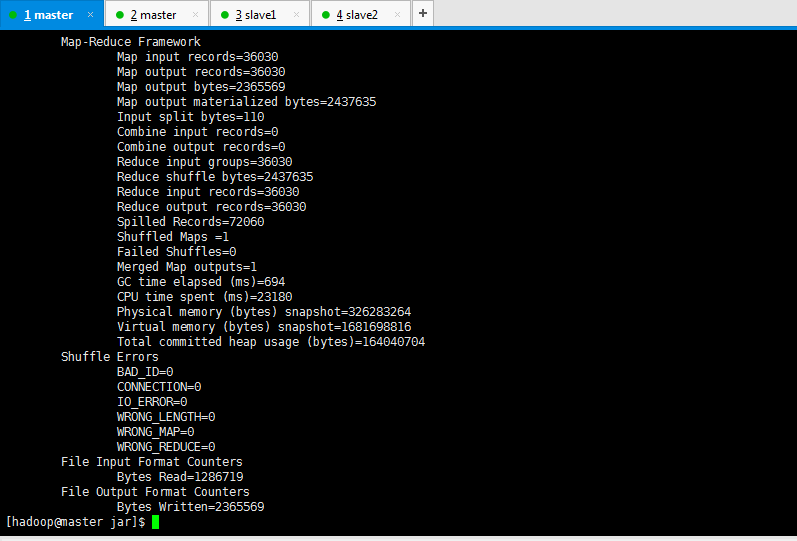

Success!
