Build an ETL Pipeline With Kafka Connect via JDBC Connectors

This article is an in-depth tutorial for using Kafka to move data from PostgreSQL to Hadoop HDFS via JDBC connections.

Read this eGuide to discover the fundamental differences between iPaaS and dPaaS and how the innovative approach of dPaaS gets to the heart of today’s most pressing integration problems, brought to you in partnership with Liaison.

Tutorial: Discover how to build a pipeline with Kafka leveraging DataDirect PostgreSQL JDBC driver to move the data from PostgreSQL to HDFS. Let’s go streaming!

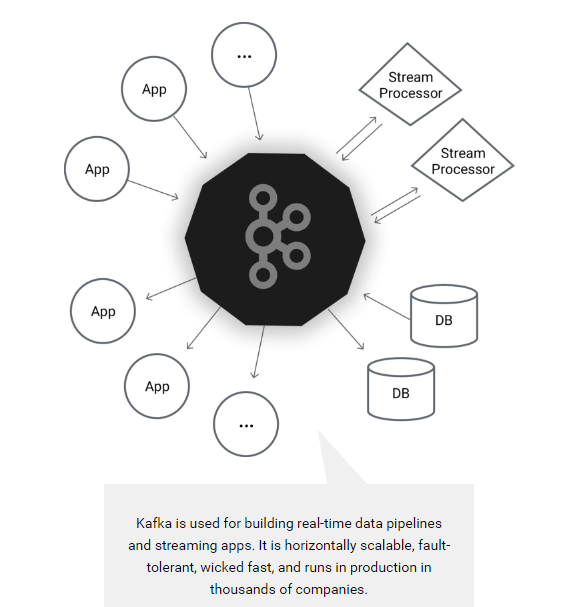

Apache Kafka is an open source distributed streaming platform which enables you to build streaming data pipelines between different applications. You can also build real-time streaming applications that interact with streams of data, focusing on providing a scalable, high throughput and low latency platform to interact with data streams.

Earlier this year, Apache Kafka announced a new tool called Kafka Connect which can helps users to easily move datasets in and out of Kafka using connectors, and it has support for JDBC connectors out of the box! One of the major benefits for DataDirect customers is that you can now easily build an ETL pipeline using Kafka leveraging your DataDirect JDBC drivers. Now you can easily connect and get the data from your data sources into Kafka and export the data from there to another data source.

Image From https://kafka.apache.org/

Environment Setup

Before proceeding any further with this tutorial, make sure that you have installed the following and are configured properly. This tutorial is written assuming you are also working on Ubuntu 16.04 LTS, you have PostgreSQL, Apache Hadoop, and Hive installed.

- Installing Apache Kafka and required toolsTo make the installation process easier for people trying this out for the first time, we will be installing Confluent Platform. This takes care of installing Apache Kafka, Schema Registry and Kafka Connect which includes connectors for moving files, JDBC connectors and HDFS connector for Hadoop.

- To begin with, install Confluent’s public key by running the command:

wget -qO -http://packages.confluent.io/deb/2.0/archive.key | sudo apt-key add - - Now add the repository to your sources.list by running the following command:

sudo add-apt-repository "deb http://packages.confluent.io/deb/2.0 stable main" - Update your package lists and then install the Confluent platform by running the following commands:

sudo apt-get updatesudo apt-get install confluent-platform-2.11.7

- To begin with, install Confluent’s public key by running the command:

- Install DataDirect PostgreSQL JDBC driver

- Download DataDirect PostgreSQL JDBC driver by visiting here.

- Install the PostgreSQL JDBC driver by running the following command:

java -jar PROGRESS_DATADIRECT_JDBC_POSTGRESQL_ALL.jar - Follow the instructions on the screen to install the driver successfully (you can install the driver in evaluation mode where you can try it for 15 days, or in license mode, if you have bought the driver)

- Configuring data sources for Kafka Connect

- Create a new file called postgres.properties, paste the following configuration and save the file. To learn more about the modes that are being used in the below configuration file, visit this page.

name=test-postgres-jdbcconnector.class=io.confluent.connect.jdbc.JdbcSourceConnectortasks.max=1connection.url=jdbc:datadirect:postgresql://<;server>:<port>;User=<user>;Password=<password>;Database=<dbname>mode=timestamp+incrementingincrementing.column.name=<id>timestamp.column.name=<modifiedtimestamp>topic.prefix=test_jdbc_table.whitelist=actor - Create another file called hdfs.properties, paste the following configuration and save the file. To learn more about HDFS connector and configuration options used, visit this page.

name=hdfs-sinkconnector.class=io.confluent.connect.hdfs.HdfsSinkConnectortasks.max=1topics=test_jdbc_actorhdfs.url=hdfs://<;server>:<port>flush.size=2hive.metastore.uris=thrift://<;server>:<port>hive.integration=trueschema.compatibility=BACKWARD - Note that postgres.properties and hdfs.properties have basically the connection configuration details and behavior of the JDBC and HDFS connectors.

- Create a symbolic link for DataDirect Postgres JDBC driver in Hive lib folder by using the following command:

ln -s /path/to/datadirect/lib/postgresql.jar /path/to/hive/lib/postgresql.jar - Also make the DataDirect Postgres JDBC driver available on Kafka Connect process’s CLASSPATH by running the following command:

export CLASSPATH=/path/to/datadirect/lib/postgresql.jar - Start the Hadoop cluster by running following commands:

cd /path/to/hadoop/sbin./start-dfs.sh./start-yarn.sh

- Create a new file called postgres.properties, paste the following configuration and save the file. To learn more about the modes that are being used in the below configuration file, visit this page.

- Configuring and running Kafka Services

- Download the configuration files for Kafka, zookeeper and schema-registry services

- Start the Zookeeper service by providing the zookeeper.properties file path as a parameter by using the command:

zookeeper-server-start /path/to/zookeeper.properties - Start the Kafka service by providing the server.properties file path as a parameter by using the command:

kafka-server-start /path/to/server.properties - Start the Schema registry service by providing the schema-registry.properties file path as a parameter by using the command:

schema-registry-start /path/to/ schema-registry.properties

Ingesting Data Into HDFS using Kafka Connect

To start ingesting data from PostgreSQL, the final thing that you have to do is start Kafka Connect. You can start Kafka Connect by running the following command:

connect-standalone /path/to/connect-avro-standalone.properties \ /path/to/postgres.properties /path/to/hdfs.properties

This will import the data from PostgreSQL to Kafka using DataDirect PostgreSQL JDBC drivers and create a topic with name test_jdbc_actor. Then the data is exported from Kafka to HDFS by reading the topic test_jdbc_actor through the HDFS connector. The data stays in Kafka, so you can reuse it to export to any other data sources.

Next Steps

We hope this tutorial helped you understand on how you can build a simple ETL pipeline using Kafka Connect leveraging DataDirect PostgreSQL JDBC drivers. This tutorial is not limited to PostgreSQL. In fact, you can create ETL pipelines leveraging any of our DataDirect JDBC drivers that we offer for Relational databases like Oracle, DB2 and SQL Server, Cloud sources likeSalesforce and Eloqua or BigData sources like CDH Hive, Spark SQL and Cassandra by following similar steps. Also, subscribe to our blog via email or RSS feed for more awesome tutorials.

Discover the unprecedented possibilities and challenges, created by today’s fast paced data climate andwhy your current integration solution is not enough, brought to you in partnership with Liaison.

Build an ETL Pipeline With Kafka Connect via JDBC Connectors的更多相关文章

- 1.3 Quick Start中 Step 7: Use Kafka Connect to import/export data官网剖析(博主推荐)

不多说,直接上干货! 一切来源于官网 http://kafka.apache.org/documentation/ Step 7: Use Kafka Connect to import/export ...

- Kafka connect快速构建数据ETL通道

摘要: 作者:Syn良子 出处:http://www.cnblogs.com/cssdongl 转载请注明出处 业余时间调研了一下Kafka connect的配置和使用,记录一些自己的理解和心得,欢迎 ...

- Kafka Connect Architecture

Kafka Connect's goal of copying data between systems has been tackled by a variety of frameworks, ma ...

- Streaming data from Oracle using Oracle GoldenGate and Kafka Connect

This is a guest blog from Robin Moffatt. Robin Moffatt is Head of R&D (Europe) at Rittman Mead, ...

- 基于Kafka Connect框架DataPipeline在实时数据集成上做了哪些提升?

在不断满足当前企业客户数据集成需求的同时,DataPipeline也基于Kafka Connect 框架做了很多非常重要的提升. 1. 系统架构层面. DataPipeline引入DataPipeli ...

- 打造实时数据集成平台——DataPipeline基于Kafka Connect的应用实践

导读:传统ETL方案让企业难以承受数据集成之重,基于Kafka Connect构建的新型实时数据集成平台被寄予厚望. 在4月21日的Kafka Beijing Meetup第四场活动上,DataPip ...

- 替代Flume——Kafka Connect简介

我们知道过去对于Kafka的定义是分布式,分区化的,带备份机制的日志提交服务.也就是一个分布式的消息队列,这也是他最常见的用法.但是Kafka不止于此,打开最新的官网. 我们看到Kafka最新的定义是 ...

- Apache Kafka Connect - 2019完整指南

今天,我们将讨论Apache Kafka Connect.此Kafka Connect文章包含有关Kafka Connector类型的信息,Kafka Connect的功能和限制.此外,我们将了解Ka ...

- SQL Server CDC配合Kafka Connect监听数据变化

写在前面 好久没更新Blog了,从CRUD Boy转型大数据开发,拉宽了不少的知识面,从今年年初开始筹备.组建.招兵买马,到现在稳定开搞中,期间踏过无数的火坑,也许除了这篇还很写上三四篇. 进入主题, ...

随机推荐

- Android Broadcast 和 iOS Notification

感觉以上2个机能有许多相似之处,作个记录,待研究!

- nginx和apache的一些比较

1.两者所用的驱动模式不同. nginx使用的是epoll的非阻塞模式事件驱动. apache使用的是select的阻塞模式事件驱动. 2.fastcgi和cgi的区别 当用户请求web服务的时候,w ...

- Selenium 模拟人输入

public static void type(WebElement e,String str) throws InterruptedException { String[] singleCharac ...

- Effective C++ -----条款28:避免返回handles指向对象内部成分

避免返回handles(包括reference.指针.迭代器)指向对象内部.遵守这个条款可增加封装性,帮助const成员函数的行为像个const,并将发生“虚吊号码牌”(dangling handle ...

- malloc原理和内存碎片

当一个进程发生缺页中断的时候,进程会陷入内核态,执行以下操作: 1.检查要访问的虚拟地址是否合法 2.查找/分配一个物理页 3.填充物理页内容(读取磁盘,或者直接置0,或者啥也不干) 4.建立映射关系 ...

- Parallels Destop软件配置

Parallels Destop个人感觉最好用的mac虚拟win软件 http://pan.baidu.com/s/1jHFwIGm 密码:ab21百度云下载(或者下载自己百度云的) 安装方法: 1. ...

- 【QT】自己生成ui加入工程

在三个月前 我就在纠结 C++ GUI Qt 4编程这本书中2.3节 快速设计对话框这一段. 按照书上的做没有办法生成能够成功运行的程序. 这两天又折腾了好久,终于成功了. 注意事项: 1. 我之前装 ...

- 在某公司时的java开发环境配置文档

1 开发环境配置 1.1. MyEclipse 配置 1.MyEclipse下载地址:\\server\共享文件\backup\MyEclipse9.0 2.修改工作空间编码为UTF-8,如下图 3 ...

- IOS - 控件的AutoresizingMask属性

在 UIView 中有一个autoresizingMask的属性,它对应的是一个枚举的值(如下),属性的意思就是自动调整子控件与父控件中间的位置,宽高. enum { UIViewAutoresi ...

- ASP.NET SignalR 与 LayIM2.0 配合轻松实现Web聊天室(五) 之 加好友,加群流程,消息管理和即时消息提示的实现

前言 前前一篇留了个小问题,在上一篇中忘了写了,就是关于LayIM已经封装好的上传文件或者图片的问题.对接好接口之后,如果上传速度慢,界面就会出现假死情况,虽然文件正在上传.于是我就简单做了个图标替代 ...