OpenCV Image Processing and Video Analysis Explained

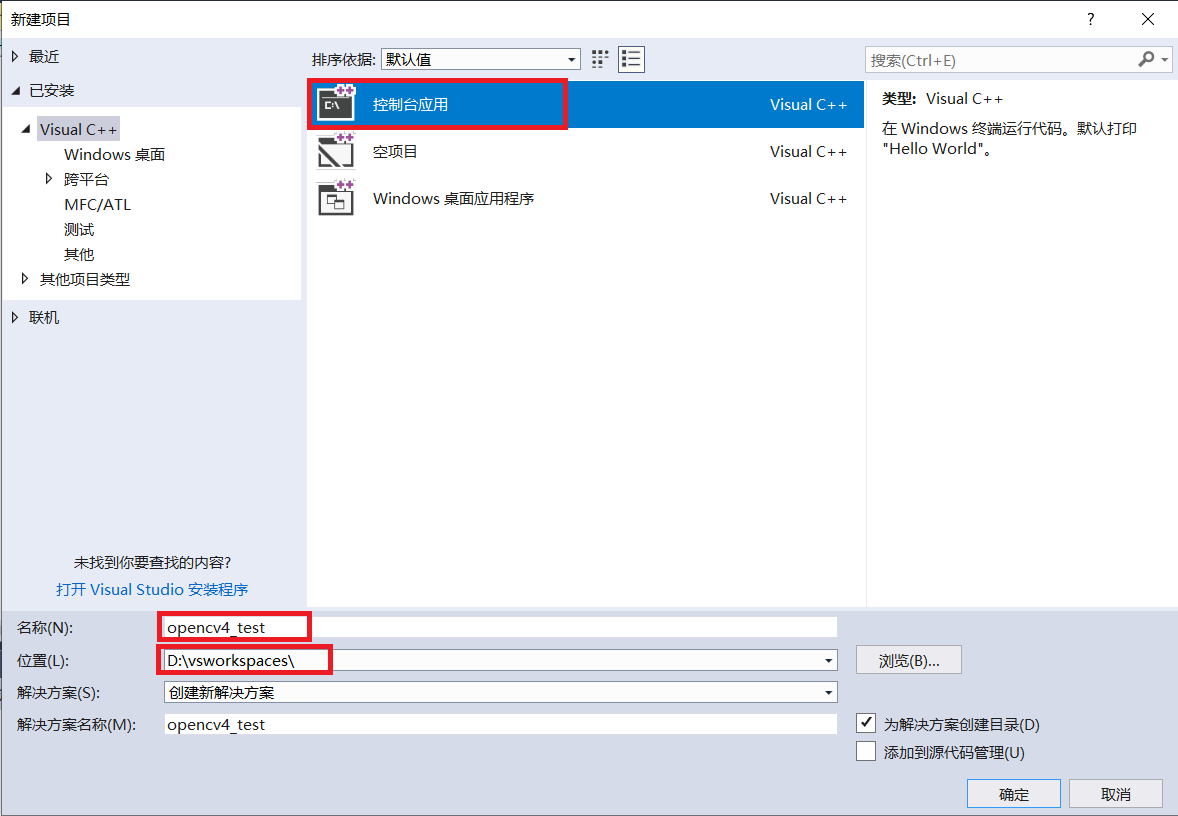

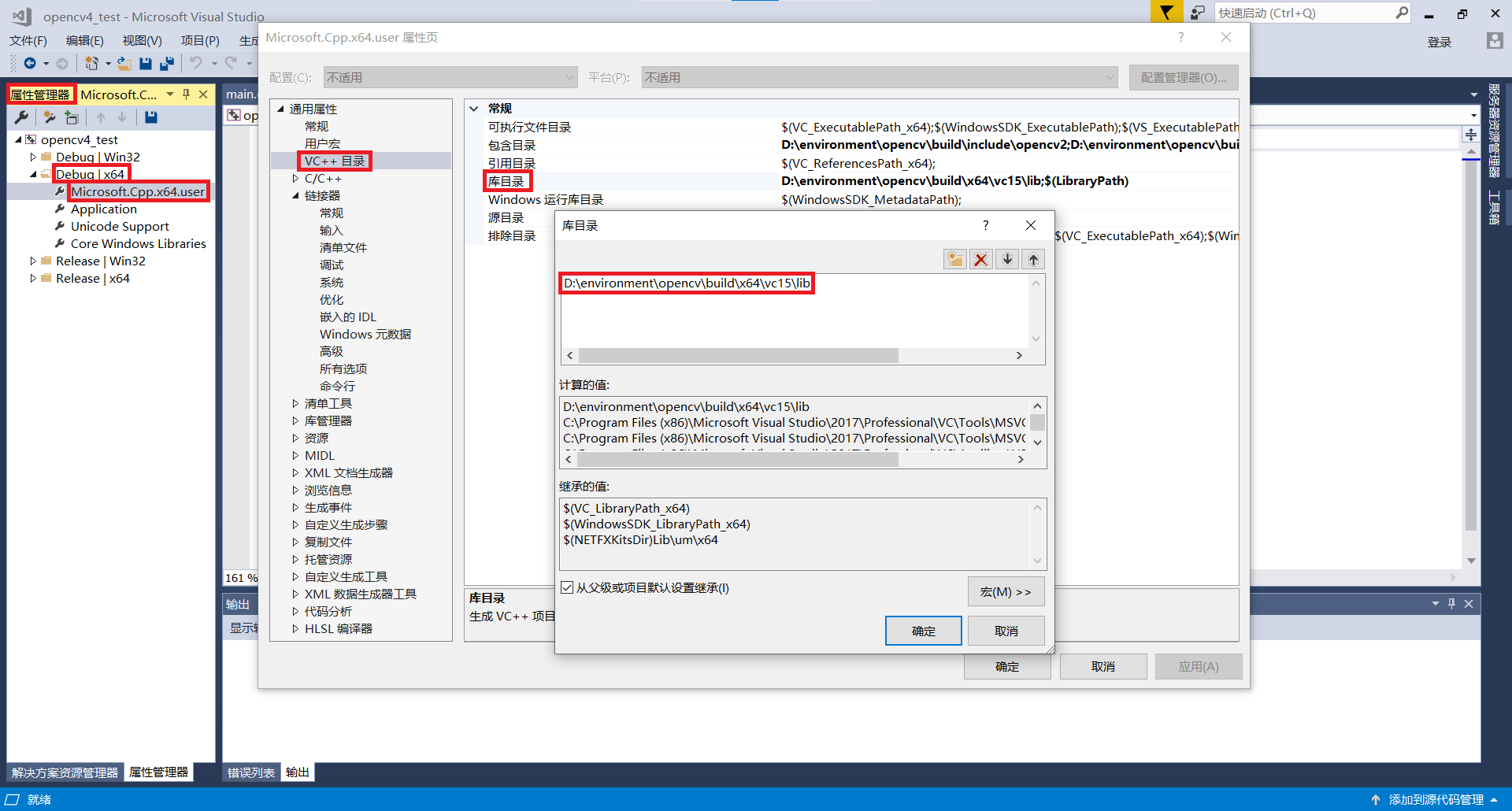

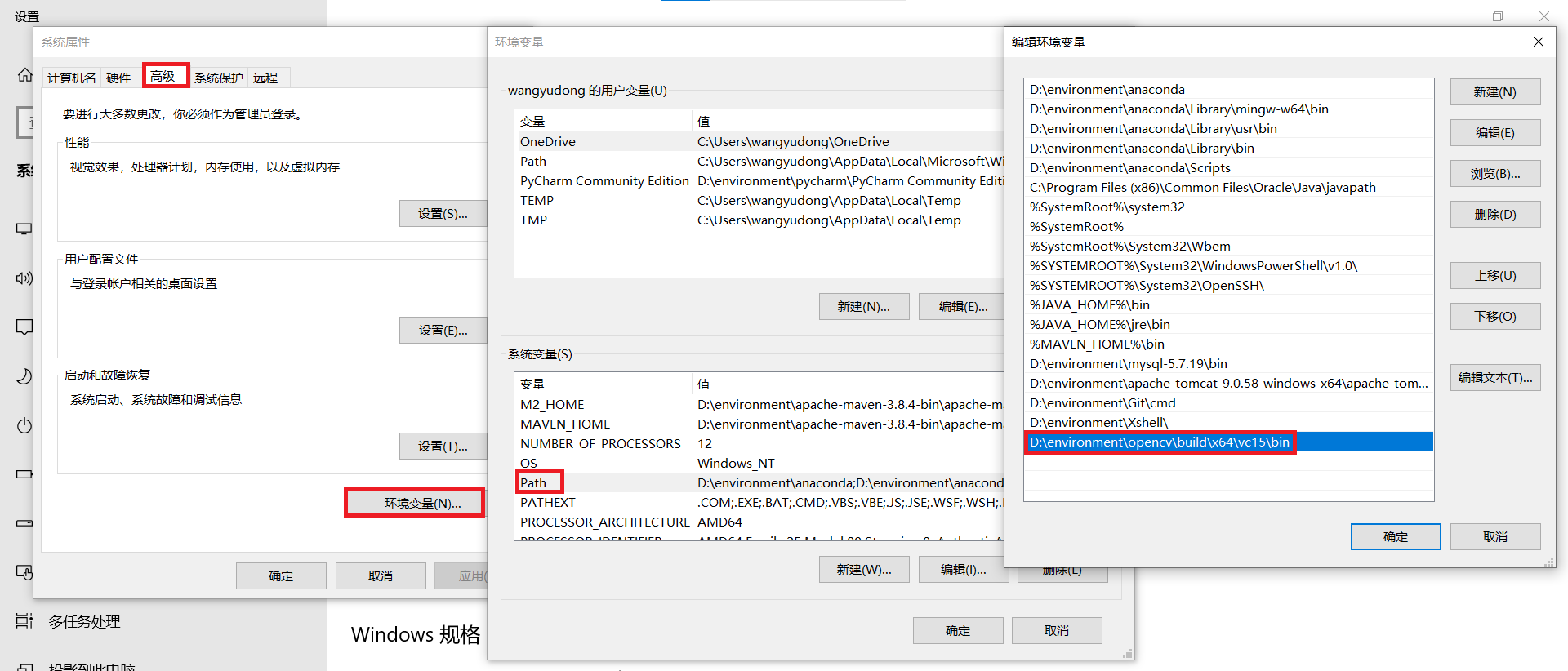

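1. OpenCV 4 Environment Setup

Create a new console project in VS2017.

Configure the include directories.

Configure the library directories.

Configure the linker inputs.

Configure the environment variables.

Restart VS2017.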

2. A First Image Display Program

main.cpp

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/lena.jpg");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

waitKey(0);

destroyAllWindows();

return 0;

}

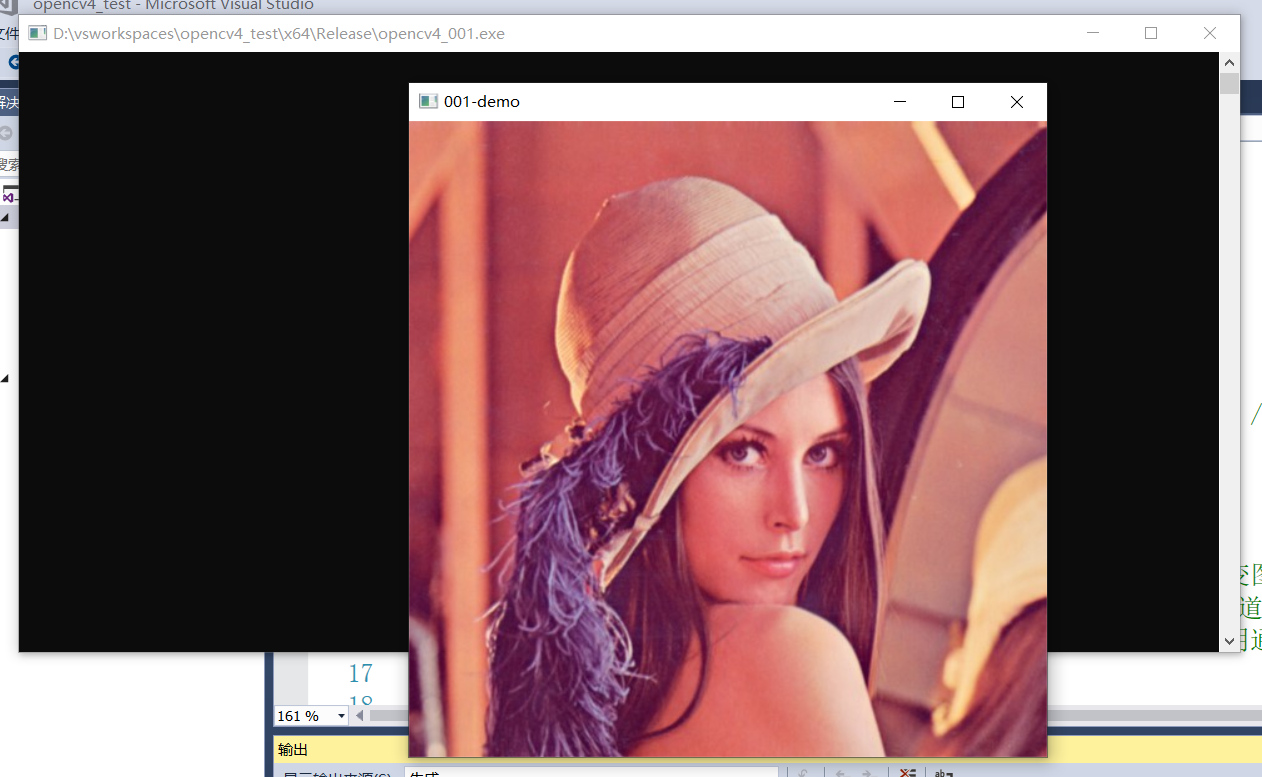

Result:

3. Loading and Saving Images

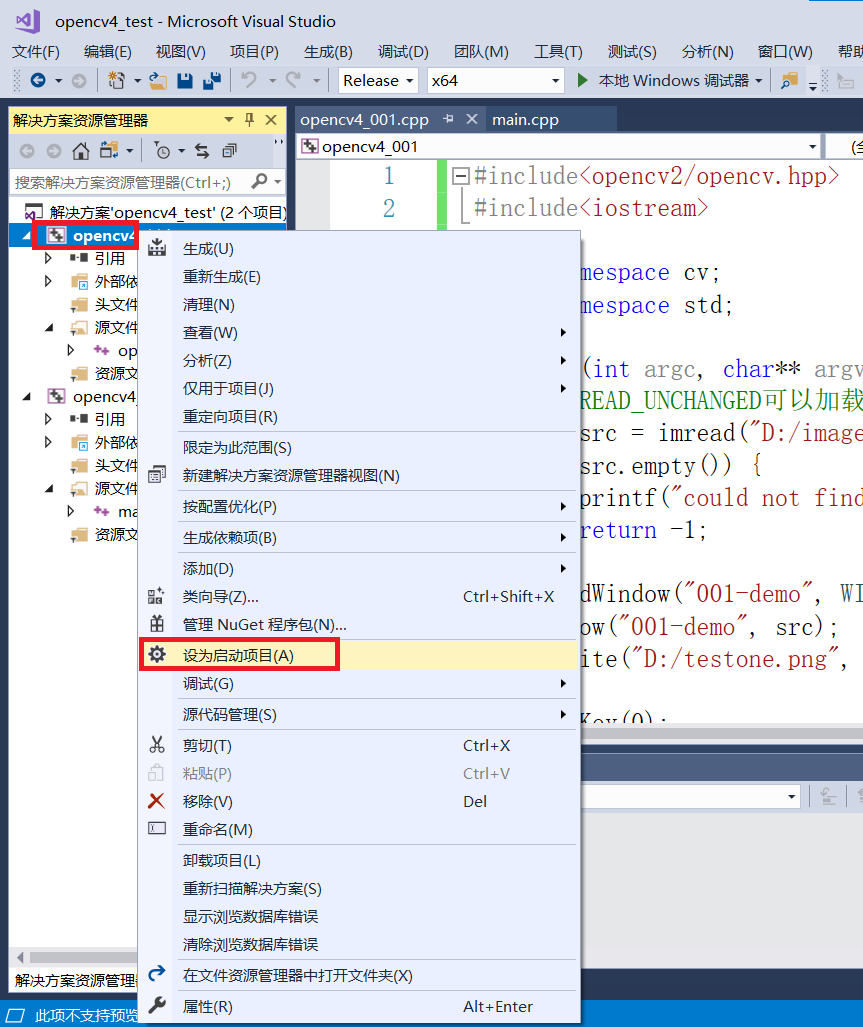

Add a project named opencv4_001.

Set it as the startup project.

opencv4_001.cpp

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

// IMREAD_UNCHANGED loads a four-channel PNG, including its alpha channel

Mat src = imread("D:/images/lena.jpg", IMREAD_ANYCOLOR); // the read flag selects the color format of the loaded image

if (src.empty()) {

printf("could not find image file");

return -1;

}

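namedWindow("001-demo", WINDOW_AUTOSIZE); // use WINDOW_FREERATIO instead to allow resizing the display window

imshow("001-demo", src); // imshow() displays color images as three channels; to inspect a four-channel image, save it to a file

imwrite("D:/testone.png", src); // PNG can store four channels, including the alpha channel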

waitKey(0);

destroyAllWindows();

return 0;

}

Result:

4. Understanding the Mat Object

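depth: the bit depth, that is, the number of bits used to store one channel value of a pixel. In OpenCV, Mat::depth() returns a number from 0 to 6, each standing for one data type: enum{ CV_8U=0,CV_8S=1,CV_16U=2,CV_16S=3,CV_32S=4,CV_32F=5,CV_64F=6 }. So 0 and 1 are 8-bit, 2 and 3 are 16-bit, 4 and 5 are 32-bit, and 6 is 64-bit. Because the depth is part of the type passed in when a Mat is created, the type alone tells you how many bits each element occupies.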

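Image depth (depth)

| Code | Depth | Data type |
|---|---|---|
| 0 | CV_8U | 8-bit unsigned integer (byte) |
| 1 | CV_8S | 8-bit signed integer (byte) |
| 2 | CV_16U | 16-bit unsigned integer |
| 3 | CV_16S | 16-bit signed integer |
| 4 | CV_32S | 32-bit signed integer |
| 5 | CV_32F | 32-bit floating point |
| 6 | CV_64F | 64-bit double-precision floating point |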

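An image type combines an image depth with a channel count. For a single-channel image the type code equals the depth code, and every additional channel adds 8 to it. For example: single-channel CV_8UC1 = 0, two-channel CV_8UC2 = CV_8UC1 + 8 = 8, and three-channel CV_8UC3 = CV_8UC1 + 8 + 8 = 16.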

Image type (type code in parentheses)

| 1 channel | 2 channels | 3 channels | 4 channels |
|---|---|---|---|
| CV_8UC1(0) | CV_8UC2(8) | CV_8UC3(16) | CV_8UC4(24) |
| CV_32SC1(4) | CV_32SC2(12) | CV_32SC3(20) | CV_32SC4(28) |
| CV_32FC1(5) | CV_32FC2(13) | CV_32FC3(21) | CV_32FC4(29) |
| CV_64FC1(6) | CV_64FC2(14) | CV_64FC3(22) | CV_64FC4(30) |
| CV_16SC1(3) | CV_16SC2(11) | CV_16SC3(19) | CV_16SC4(27) |
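The "add 8 per extra channel" rule can be checked with a few lines of arithmetic. The sketch below is a standalone illustration that does not include the OpenCV headers: `make_type`, `depth_of` and `channels_of` are hypothetical helper names, and the depth constants are copied from the enum listed above.

```cpp
#include <cassert>

// Depth codes copied from the enum in the text (illustrative constants, not the OpenCV headers).
enum Depth { D_8U = 0, D_8S = 1, D_16U = 2, D_16S = 3, D_32S = 4, D_32F = 5, D_64F = 6 };

// Hypothetical helper: type code = depth code + 8 for every channel beyond the first.
int make_type(int depth, int channels) {
    assert(depth >= 0 && depth <= 6);
    assert(channels >= 1 && channels <= 4);
    return depth + (channels - 1) * 8;
}

// Inverse mapping for the codes in the table: recover depth and channel count from a type code.
int depth_of(int type) { return type % 8; }
int channels_of(int type) { return type / 8 + 1; }
```

For instance, `make_type(D_8U, 3)` gives 16, the code of CV_8UC3 in the table, and `depth_of(21)` with `channels_of(21)` give 5 and 3, which is CV_32FC3.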

opencv4_002.cpp

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/lena.jpg");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("opencv4_002", WINDOW_AUTOSIZE);

imshow("opencv4_002", src);

int width = src.cols; // image width

int height = src.rows; // image height

int dim = src.channels(); // number of channels

int d = src.depth(); // image depth

int t = src.type(); // image type

printf("width:%d, height:%d, dim:%d, depth:%d, type:%d \n", width, height, dim, d, t);

waitKey(0);

destroyAllWindows();

return 0;

}

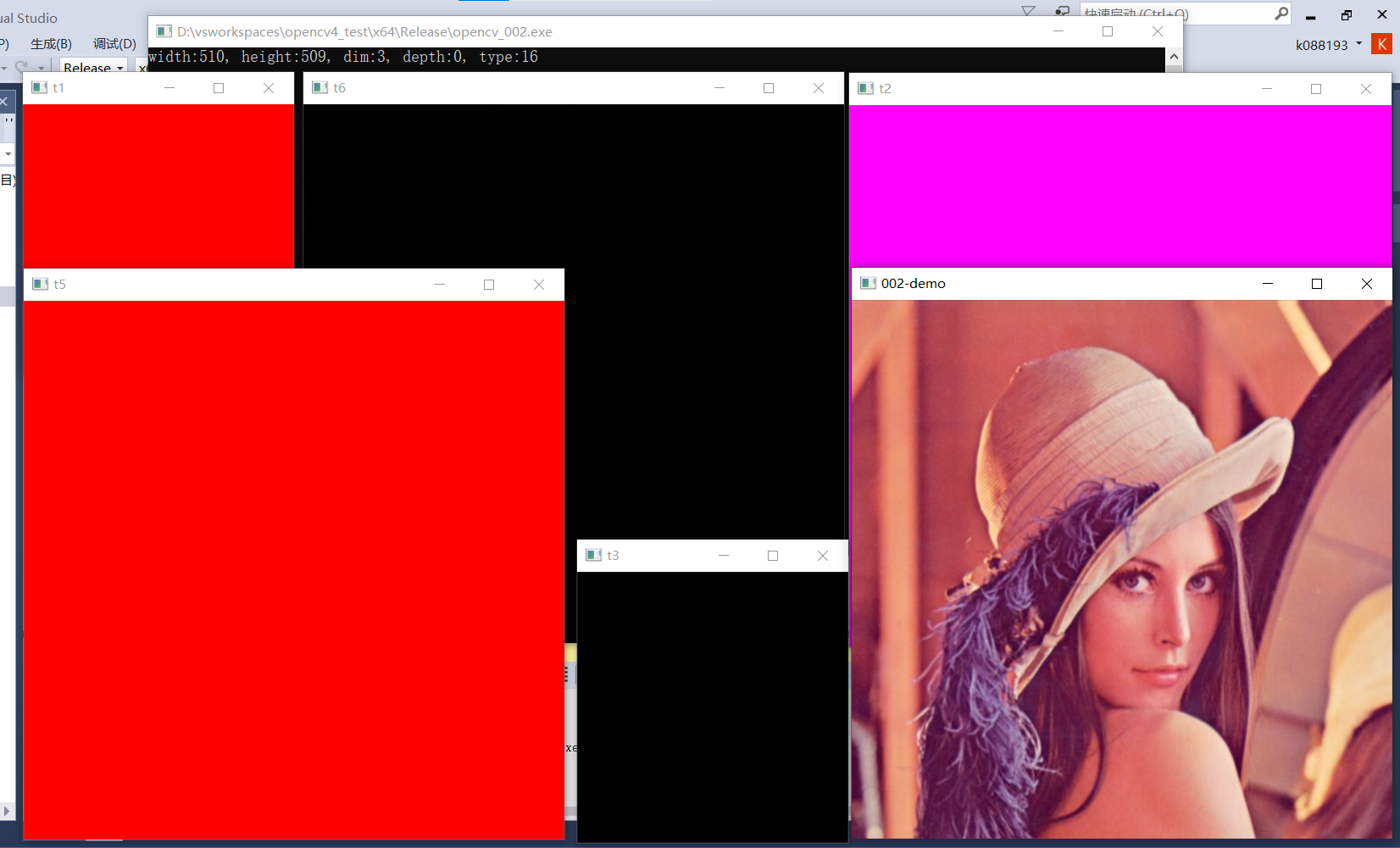

Result:

5. Creating and Using Mat Objects

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/lena.jpg");

if (src.empty()) {

printf("could not find image file");

return -1;

}

//namedWindow("opencv4_002", WINDOW_AUTOSIZE);

//imshow("opencv4_002", src);

int width = src.cols; // image width

int height = src.rows; // image height

int dim = src.channels(); // number of channels

int d = src.depth(); // image depth

int t = src.type(); // image type

if (t == CV_8UC3) {

printf("width:%d, height:%d, dim:%d, depth:%d, type:%d \n", width, height, dim, d, t);

}

//create one

Mat t1 = Mat(256, 256, CV_8UC3);

t1 = Scalar(0, 0, 255);

imshow("t1", t1);

//create two

Mat t2 = Mat(Size(512, 512), CV_8UC3);

t2 = Scalar(255, 0, 255);

imshow("t2", t2);

//create three

Mat t3 = Mat::zeros(Size(256, 256), CV_8UC3);

imshow("t3", t3);

//create from source

Mat t4 = src; // shares the pixel data with src, no copy is made

Mat t5;

//Mat t5 = src.clone();

src.copyTo(t5); // deep copy: t5 gets its own pixel data

t5 = Scalar(0, 0, 255);

imshow("t5", t5);

imshow("002-demo", src);

Mat t6 = Mat::zeros(src.size(), src.type());

imshow("t6", t6);

waitKey(0);

destroyAllWindows();

return 0;

}

Result:

6. Traversing and Accessing Every Pixel

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/lena.jpg");

if (src.empty()) {

printf("could not find image file");

return -1;

}

//namedWindow("opencv4_002", WINDOW_AUTOSIZE);

//imshow("opencv4_002", src);

int width = src.cols; // image width

int height = src.rows; // image height

int dim = src.channels(); // number of channels

int d = src.depth(); // image depth

int t = src.type(); // image type

if (t == CV_8UC3) {

printf("width:%d, height:%d, dim:%d, depth:%d, type:%d \n", width, height, dim, d, t);

}

//create one

Mat t1 = Mat(256, 256, CV_8UC3);

t1 = Scalar(0, 0, 255);

//imshow("t1", t1);

//create two

Mat t2 = Mat(Size(512, 512), CV_8UC3);

t2 = Scalar(255, 0, 255);

//imshow("t2", t2);

//create three

Mat t3 = Mat::zeros(Size(256, 256), CV_8UC3);

//imshow("t3", t3);

//create from source

Mat t4 = src;

Mat t5;

//Mat t5 = src.clone();

src.copyTo(t5);

t5 = Scalar(0, 0, 255);

//imshow("t5", t5);

imshow("002-demo", src);

Mat t6 = Mat::zeros(src.size(), src.type());

//imshow("t6", t6);

//int height = src.rows;

//int width = src.cols;

/*int ch = src.channels();

for (int row = 0; row < height; row++) { // visit every pixel of every row

for (int col = 0; col < width; col++) {

if (ch == 3) {

Vec3b pixel = src.at<Vec3b>(row, col); // use Vec3i or Vec3f for images with other element types

int blue = pixel[0];

int green = pixel[1];

int red = pixel[2];

src.at<Vec3b>(row, col)[0] = 255 - blue; // invert the BGR image

src.at<Vec3b>(row, col)[1] = 255 - green;

src.at<Vec3b>(row, col)[2] = 255 - red;

}

if (ch == 1) {

int pv = src.at<uchar>(row, col);

src.at<uchar>(row, col) = 255 - pv;

}

}

}*/

Mat result = Mat::zeros(src.size(), src.type());

for (int row = 0; row < height; row++) { // traverse with row pointers

uchar* current_row = src.ptr<uchar>(row);

uchar* result_row = result.ptr<uchar>(row); // a second pointer writes the copied pixels to the output image

for (int col = 0; col < width; col++) {

if (dim == 3) {

int blue = *current_row++;

int green = *current_row++;

int red = *current_row++;

*result_row++ = blue;

*result_row++ = green;

*result_row++ = red;

}

if (dim == 1) {

int pv = *current_row++;

*result_row++ = pv;

}

}

}

//imshow("pixel-demo", src);

imshow("pixel-demo", result);

waitKey(0);

destroyAllWindows();

return 0;

}

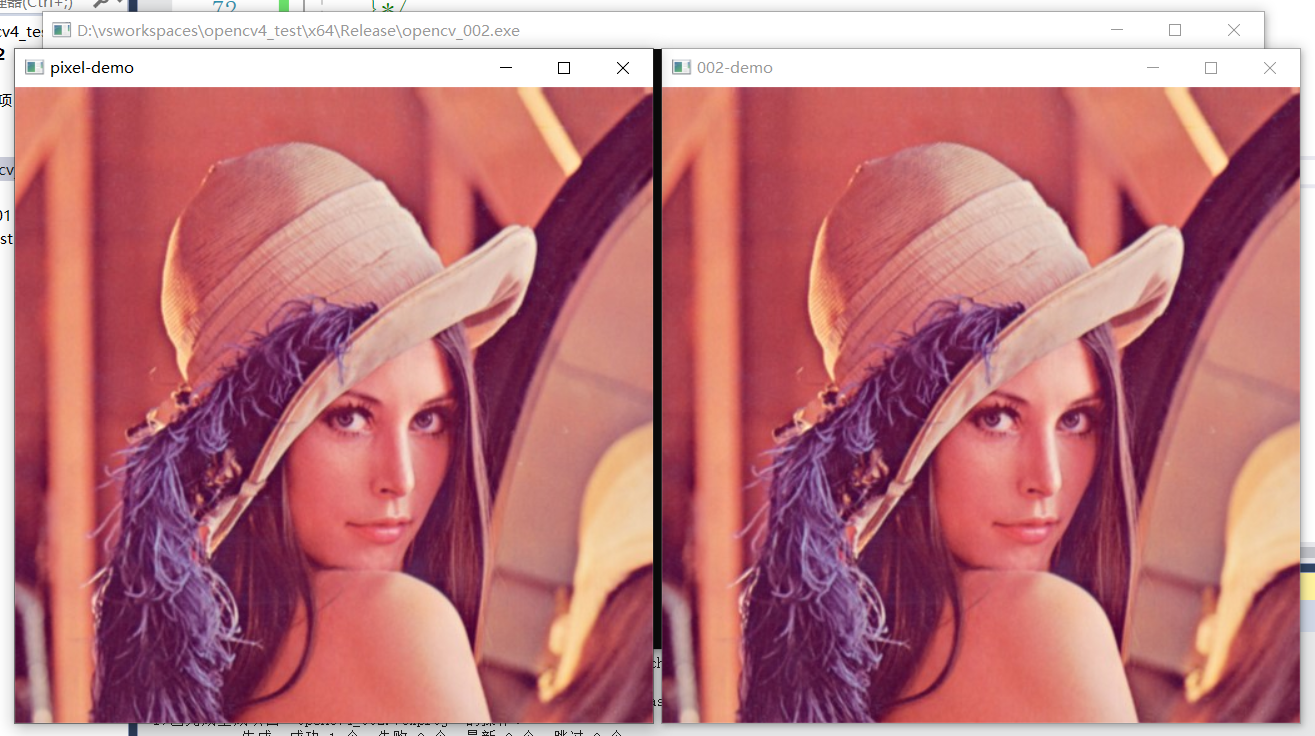

Result:

1. Traversing every pixel by row and column

2. Traversing the image with pointers

7. Image Arithmetic Operations

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src1 = imread("D:/environment/opencv/sources/samples/data/WindowsLogo.jpg");

Mat src2 = imread("D:/environment/opencv/sources/samples/data/LinuxLogo.jpg");

if (src1.empty() || src2.empty()) {

printf("could not find image file");

return -1;

}

//imshow("input1", src1);

//imshow("input2", src2);

/*

// code demo

Mat dst1;

add(src1, src2, dst1);

imshow("add-demo", dst1);

Mat dst2;

subtract(src1, src2, dst2);

imshow("subtract-demo", dst2);

Mat dst3;

multiply(src1, src2, dst3);

imshow("multiply-demo", dst3);

Mat dst4;

divide(src1, src2, dst4);

imshow("divide-demo", dst4);

*/

Mat src = imread("D:/images/lena.jpg");

imshow("input", src);

Mat black = Mat::zeros(src.size(), src.type());

black = Scalar(40, 40, 40);

Mat dst_add,dst_subtract,dst_addWeight;

add(src, black, dst_add); // raise brightness

subtract(src, black, dst_subtract); // lower brightness

addWeighted(src, 1.5, black, 0.5, 0.0, dst_addWeight); // raise contrast: addWeighted(image 1, weight 1, image 2, weight 2, added constant, output)

imshow("result_add", dst_add);

imshow("result_subtract", dst_subtract);

imshow("result_addWeight", dst_addWeight);

waitKey(0);

destroyAllWindows();

return 0;

}
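On 8-bit images the results of `add`, `subtract` and `addWeighted` are clamped to the range 0 to 255 rather than wrapping around. The sketch below mimics that per-pixel behavior with the standard library only; the helper names (`saturate_u8`, `add_u8`, `sub_u8`, `add_weighted_u8`) are hypothetical and only illustrate the arithmetic, they are not OpenCV functions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Round an intermediate result and clamp it into the 8-bit range [0, 255].
unsigned char saturate_u8(double v) {
    assert(std::isfinite(v));
    long r = std::lround(v);
    return static_cast<unsigned char>(std::min(255L, std::max(0L, r)));
}

// Per-pixel addition and subtraction with saturation.
unsigned char add_u8(unsigned char a, unsigned char b) { return saturate_u8(a + b); }
unsigned char sub_u8(unsigned char a, unsigned char b) { return saturate_u8(a - b); }

// Weighted sum a*alpha + b*beta + gamma, the per-pixel formula behind an addWeighted call.
unsigned char add_weighted_u8(unsigned char a, double alpha,
                              unsigned char b, double beta, double gamma) {
    return saturate_u8(a * alpha + b * beta + gamma);
}
```

With the values used in the listing above (a constant image of 40, weights 1.5 and 0.5), a source pixel of 100 becomes 1.5 * 100 + 0.5 * 40 = 170, while a pixel of 200 saturates at 255.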

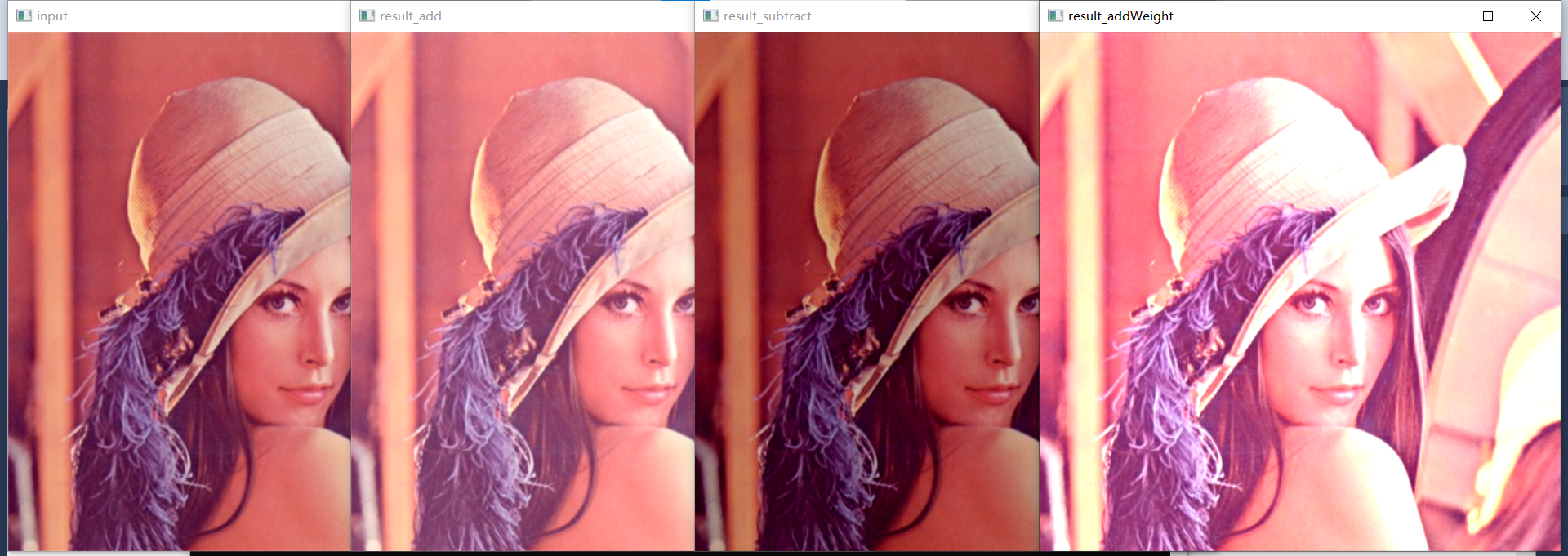

Result:

1. Addition, subtraction, multiplication and division of two different images

2. Brightness and contrast adjustment

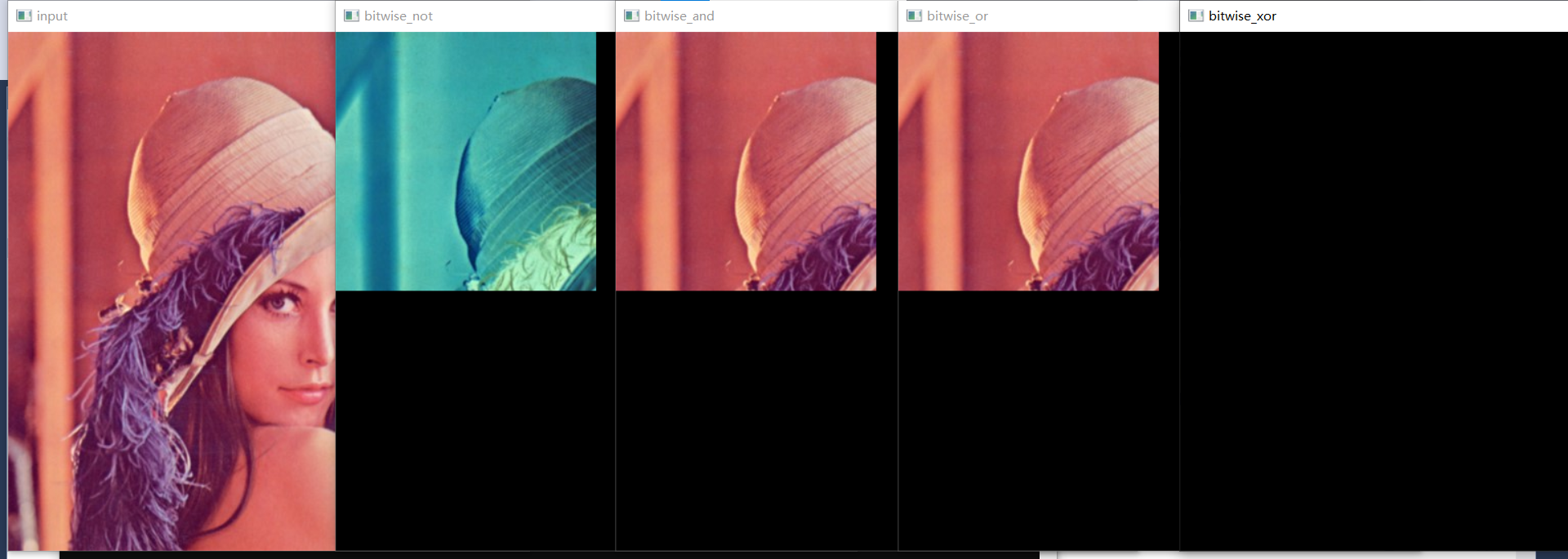

8. Image Bitwise Operations

The bitwise operations are AND, OR, NOT and XOR. They are efficient for tasks such as inverting an image.
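To make the role of a mask concrete, here is a minimal standard-library sketch that applies one bitwise operation to a byte buffer only where the mask is non-zero. `BitOp` and `bitwise_masked` are hypothetical names, and writing 0 outside the mask is an assumption of this sketch.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

enum class BitOp { And, Or, Xor, Not };

// Apply a bitwise operation to every byte whose mask entry is non-zero.
// Bytes outside the mask are set to 0 in this sketch.
std::vector<std::uint8_t> bitwise_masked(const std::vector<std::uint8_t>& a,
                                         const std::vector<std::uint8_t>& b,
                                         const std::vector<std::uint8_t>& mask,
                                         BitOp op) {
    assert(a.size() == b.size() && a.size() == mask.size());
    std::vector<std::uint8_t> out(a.size(), 0);
    for (std::size_t i = 0; i < a.size(); ++i) {
        if (mask[i] == 0) continue;  // outside the region of interest
        switch (op) {
            case BitOp::And: out[i] = static_cast<std::uint8_t>(a[i] & b[i]); break;
            case BitOp::Or:  out[i] = static_cast<std::uint8_t>(a[i] | b[i]); break;
            case BitOp::Xor: out[i] = static_cast<std::uint8_t>(a[i] ^ b[i]); break;
            case BitOp::Not: out[i] = static_cast<std::uint8_t>(~a[i]); break;  // b is ignored
        }
    }
    return out;
}
```

Passing the same buffer as both inputs shows why AND and OR of an image with itself reproduce the image inside the mask, while XOR of an image with itself is all zeros.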

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/lena.jpg");

if (src.empty()) {

printf("could not find image file");

return -1;

}

imshow("input", src);

// create a mask matrix that limits the operation to an ROI

Mat mask = Mat::zeros(src.size(), CV_8UC1);

int width = src.cols / 2;

int height = src.rows / 2;

for (int row = 0; row < height; row++) {

for (int col = 0; col < width; col++) {

mask.at<uchar>(row, col) = 127;

}

}

Mat dst; // receives the result of each operation

// mask marks the ROI

// NOT

bitwise_not(src, dst, mask); // bitwise_not(input, output, mask): inverts the input only where mask is non-zero

imshow("bitwise_not", dst);

// AND

bitwise_and(src, src, dst, mask);

imshow("bitwise_and", dst);

// OR

bitwise_or(src, src, dst, mask);

imshow("bitwise_or", dst);

// XOR: keeps the bits that differ between the two inputs (src XOR src is all zeros)

bitwise_xor(src, src, dst, mask);

imshow("bitwise_xor", dst);

waitKey(0);

destroyAllWindows();

return 0;

}

Result:

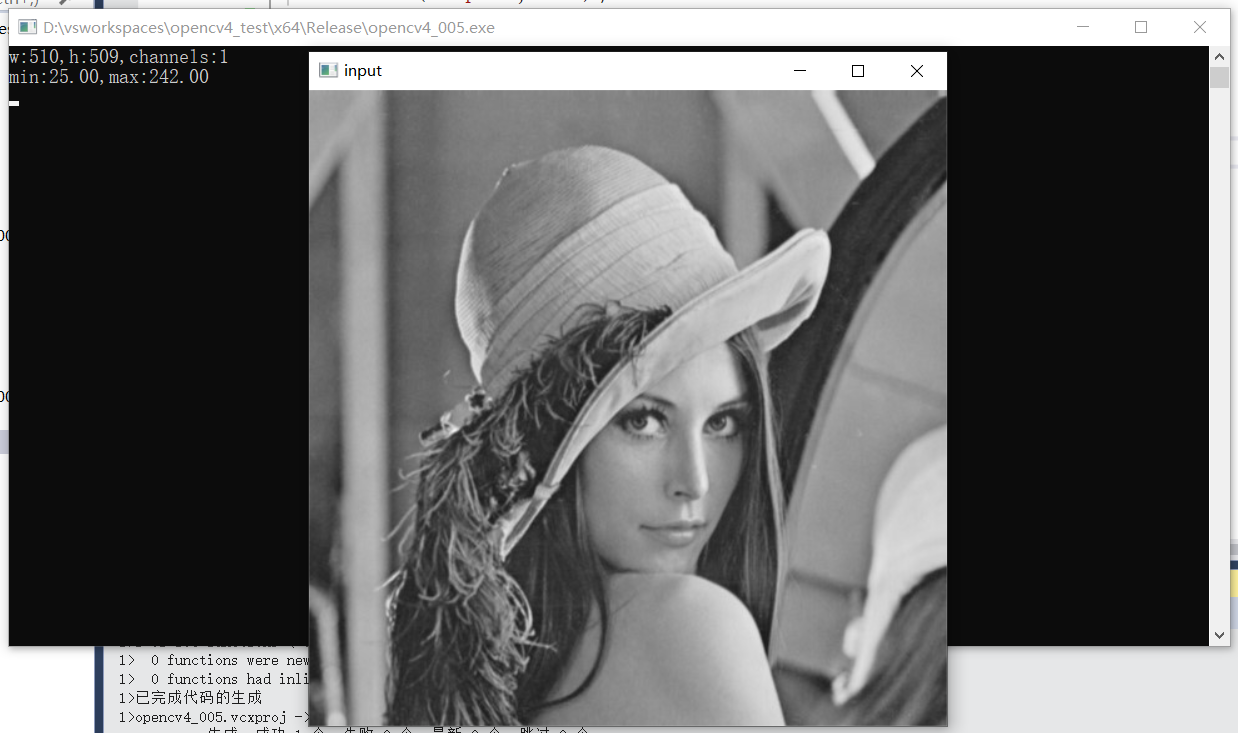

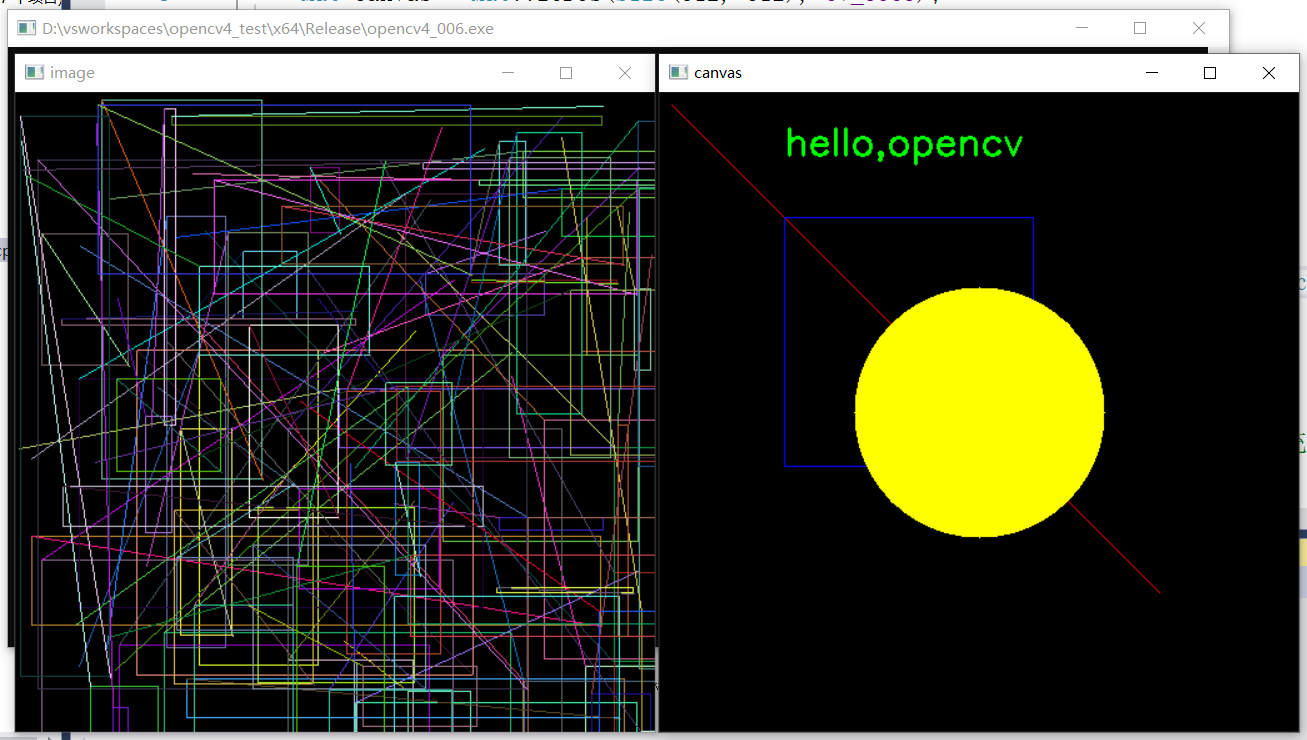

9. Pixel Statistics

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

//Mat src = imread("D:/images/lena.jpg",IMREAD_GRAYSCALE);

Mat src = imread("D:/images/lena.jpg");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

int h = src.rows;

int w = src.cols;

int ch = src.channels();

printf("w:%d,h:%d,channels:%d\n", w, h, ch);

// minimum and maximum

//double min_val;

//double max_val;

//Point minloc;

//Point maxloc;

//minMaxLoc(src, &min_val, &max_val, &minloc, &maxloc);

//printf("min:%.2f,max:%.2f\n", min_val, max_val);

// pixel value statistics: count how many pixels have each value

vector<int> hist(256);

for (int i = 0; i < 256; i++) {

hist[i] = 0;

}

for (int row = 0; row < h; row++) {

for (int col = 0; col < w; col++) {

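int pv = src.at<Vec3b>(row, col)[0]; // src has three channels here, so count the first (blue) channel; use at<uchar> only when loading with IMREAD_GRAYSCALE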

hist[pv]++;

}

}

// mean and standard deviation

Scalar s = mean(src);

printf("mean:%.2f,%.2f,%.2f\n", s[0], s[1], s[2]);

Mat mm, mstd;

meanStdDev(src, mm, mstd);

int rows = mstd.rows;

printf("rows:%d\n", rows);

printf("stddev:%.2f,%.2f,%.2f\n", mstd.at<double>(0, 0), mstd.at<double>(1, 0), mstd.at<double>(2, 0));

waitKey(0);

destroyAllWindows();

return 0;

}

Result 1 (minimum and maximum):

Result 2 (mean and standard deviation):

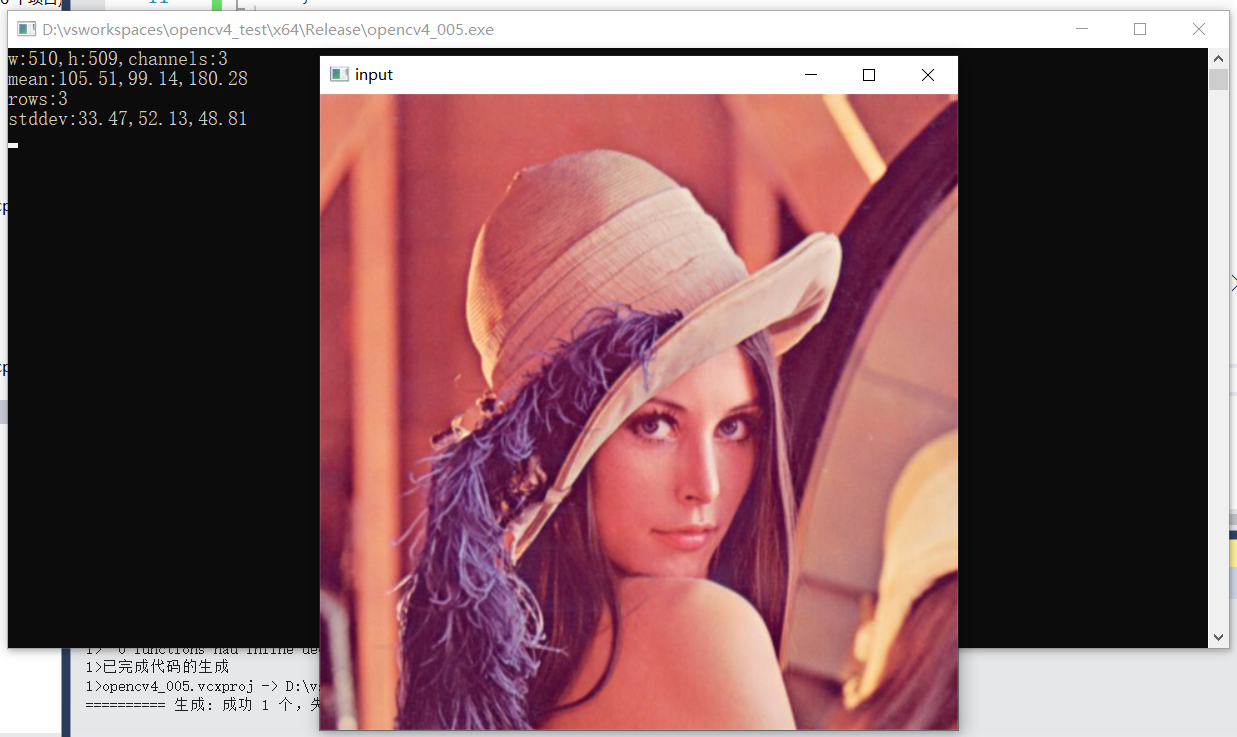

10. Drawing and Filling Shapes

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat canvas = Mat::zeros(Size(512, 512), CV_8UC3);

namedWindow("canvas", WINDOW_AUTOSIZE);

imshow("canvas", canvas);

// drawing API demo

line(canvas, Point(10, 10), Point(400, 400), Scalar(0, 0, 255), 1, LINE_8); // start and end points of the segment

Rect rect(100, 100, 200, 200); // rectangle to draw

rectangle(canvas, rect, Scalar(255, 0, 0), 1, 8);

circle(canvas, Point(256, 256), 100, Scalar(0, 255, 255), -1, 8); // draw a circle

RotatedRect rrt; // rotated bounding box of the ellipse

rrt.center = Point2f(256, 256);

rrt.angle = 45.0;

rrt.size = Size(100, 200);

ellipse(canvas, rrt, Scalar(0, 255, 255), -1, 8); // a negative thickness fills the shape; a positive value is the line width

imshow("canvas", canvas);

Mat image = Mat::zeros(Size(512, 512), CV_8UC3);

int x1 = 0, y1 = 0;

int x2 = 0, y2 = 0;

RNG rng(12345); // random number generator

while (true) {

x1 = (int)rng.uniform(0, 512); // random integer in [0, 512)

x2 = (int)rng.uniform(0, 512);

y1 = (int)rng.uniform(0, 512);

y2 = (int)rng.uniform(0, 512);

int w = abs(x2 - x1);

int h = abs(y2 - y1);

rect.x = x1; // top-left corner of the rectangle

rect.y = y1;

rect.width = w;

rect.height = h;

//image = Scalar(0, 0, 0); // clears the canvas so that only one shape is visible at a time

rectangle(image, rect, Scalar((int)rng.uniform(0, 255), (int)rng.uniform(0, 255), (int)rng.uniform(0, 255)), 1, LINE_8);

//line(image, Point(x1, y1), Point(x2, y2), Scalar((int)rng.uniform(0, 255), (int)rng.uniform(0, 255), (int)rng.uniform(0, 255)), 1, LINE_8);

imshow("image", image);

char c = waitKey(10);

if (c == 27) {

break;

}

}

waitKey(0);

destroyAllWindows();

return 0;

}

Result:

11. Splitting and Merging Image Channels

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/lena.jpg");

vector<Mat> mv; // receives the separated channels

split(src, mv);

int size = mv.size();

printf("number of channels:%d\n", size);

imshow("blue channel", mv[0]);

imshow("green channel", mv[1]);

imshow("red channel", mv[2]);

mv[1] = Scalar(0); // set every pixel of channel mv[1] to 0

Mat dst;

merge(mv, dst);

imshow("result", dst);

Rect roi; // select an ROI of the image to display

roi.x = 100;

roi.y = 100;

roi.width = 250;

roi.height = 200;

Mat sub = src(roi);

imshow("roi", sub);

waitKey(0);

destroyAllWindows();

return 0;

}

Result:

12. Image Histogram Statistics

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/lena.jpg");

vector<Mat> mv; // receives the separated channels

split(src, mv);

int size = mv.size();

// compute the histograms

int histSize = 256;

float range[] = { 0,256 }; // the upper bound is exclusive, so 256 is needed to count value 255

const float* histRanges = { range };

Mat blue_hist, green_hist, red_hist;

calcHist(&mv[0], 1, 0, Mat(), blue_hist, 1, &histSize, &histRanges, true, false); // histogram of each channel

calcHist(&mv[1], 1, 0, Mat(), green_hist, 1, &histSize, &histRanges, true, false);

calcHist(&mv[2], 1, 0, Mat(), red_hist, 1, &histSize, &histRanges, true, false);

Mat result = Mat::zeros(Size(600, 400), CV_8UC3);

int margin = 50;

int nm = result.rows - 2 * margin;

normalize(blue_hist, blue_hist, 0, nm, NORM_MINMAX, -1, Mat()); // normalize the histogram so it maps into the plot height

normalize(green_hist, green_hist, 0, nm, NORM_MINMAX, -1, Mat());

normalize(red_hist, red_hist, 0, nm, NORM_MINMAX, -1, Mat());

float step = 500.0 / 256.0;

for (int i = 0; i < 255; i++) { // connect the counts of each pair of adjacent pixel values with a line

line(result, Point(step*i + 50, 50 + nm - blue_hist.at<float>(i, 0)), Point(step*(i + 1) + 50, 50 + nm - blue_hist.at<float>(i + 1, 0)), Scalar(255, 0, 0), 2, LINE_AA, 0);

line(result, Point(step*i + 50, 50 + nm - green_hist.at<float>(i, 0)), Point(step*(i + 1) + 50, 50 + nm - green_hist.at<float>(i + 1, 0)), Scalar(0, 255, 0), 2, LINE_AA, 0);

line(result, Point(step*i + 50, 50 + nm - red_hist.at<float>(i, 0)), Point(step*(i + 1) + 50, 50 + nm - red_hist.at<float>(i + 1, 0)), Scalar(0, 0, 255), 2, 8, 0);

}

imshow("histogram-demo", result);

waitKey(0);

destroyAllWindows();

return 0;

}
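A detail that is easy to miss when setting histogram ranges: `calcHist` treats the upper bound of a range as exclusive, so the pair passed as the range describes a half-open interval. The following sketch reproduces uniform binning with the standard library to show the effect; `bin_index` and `histogram` are hypothetical helpers, not OpenCV functions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Map a value to a bin of a uniform histogram over the half-open range [lo, hi).
// Returns -1 when the value falls outside the range and would not be counted.
int bin_index(float value, float lo, float hi, int bins) {
    assert(hi > lo && bins > 0);
    if (value < lo || value >= hi) return -1;
    int idx = static_cast<int>(std::floor((value - lo) * bins / (hi - lo)));
    return std::min(idx, bins - 1);  // guard against floating-point rounding at the top end
}

// Count 8-bit values into `bins` uniform bins over [lo, hi).
std::vector<int> histogram(const std::vector<unsigned char>& pixels,
                           float lo, float hi, int bins) {
    std::vector<int> hist(bins, 0);
    for (unsigned char p : pixels) {
        int idx = bin_index(static_cast<float>(p), lo, hi, bins);
        if (idx >= 0) ++hist[idx];
    }
    return hist;
}
```

With 256 bins over [0, 256) every 8-bit value gets its own bin. With a range of [0, 255) the value 255 falls outside and is not counted.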

Result:

13. Image Histogram Equalization

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

void eh_demo();

int main(int argc, char** argv) {

eh_demo();

}

void eh_demo() {

//Mat src = imread("D:/images/box_in_scene.png");

Mat src = imread("D:/images/gray.png");

Mat gray, dst;

cvtColor(src, gray, COLOR_BGR2GRAY);

imshow("gray", gray);

equalizeHist(gray, dst);

imshow("eh-demo", dst);

// compute the histograms

int histSize = 256;

float range[] = { 0,256 }; // the upper bound is exclusive, so 256 is needed to count value 255

const float* histRanges = { range };

Mat blue_hist, green_hist; // blue_hist: original gray image, green_hist: equalized image

calcHist(&gray, 1, 0, Mat(), blue_hist, 1, &histSize, &histRanges, true, false); // histogram before equalization

calcHist(&dst, 1, 0, Mat(), green_hist, 1, &histSize, &histRanges, true, false); // histogram after equalization

Mat result = Mat::zeros(Size(600, 400), CV_8UC3);

int margin = 50;

int nm = result.rows - 2 * margin;

normalize(blue_hist, blue_hist, 0, nm, NORM_MINMAX, -1, Mat()); // normalize the histogram so it maps into the plot height

normalize(green_hist, green_hist, 0, nm, NORM_MINMAX, -1, Mat());

float step = 500.0 / 256.0;

for (int i = 0; i < 255; i++) { // connect the counts of each pair of adjacent pixel values with a line

line(result, Point(step*i + 50, 50 + nm - blue_hist.at<float>(i, 0)), Point(step*(i + 1) + 50, 50 + nm - blue_hist.at<float>(i + 1, 0)), Scalar(0, 0, 255), 2, LINE_AA, 0);

line(result, Point(step*i + 50, 50 + nm - green_hist.at<float>(i, 0)), Point(step*(i + 1) + 50, 50 + nm - green_hist.at<float>(i + 1, 0)), Scalar(0, 255, 255), 2, LINE_AA, 0);

}

imshow("result", result);

waitKey(0);

destroyAllWindows();

}
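For readers who want to see what equalization does to the pixel values, here is a minimal sketch of the textbook method: every gray level is mapped through the normalized cumulative histogram. It uses only the standard library, `equalize` is a hypothetical helper, and it is not claimed to match the output of `equalizeHist` bit for bit.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

// Textbook histogram equalization for an 8-bit grayscale buffer:
// each gray level is mapped through the normalized cumulative histogram (CDF).
std::vector<unsigned char> equalize(const std::vector<unsigned char>& gray) {
    assert(!gray.empty());
    std::array<long, 256> hist{};
    for (unsigned char p : gray) ++hist[p];

    // cdf[v] = number of pixels with value <= v.
    std::array<long, 256> cdf{};
    long running = 0;
    for (int v = 0; v < 256; ++v) { running += hist[v]; cdf[v] = running; }

    // CDF value at the darkest gray level that actually occurs.
    long cdf_min = 0;
    for (int v = 0; v < 256; ++v) {
        if (hist[v] > 0) { cdf_min = cdf[v]; break; }
    }

    const long total = static_cast<long>(gray.size());
    if (total == cdf_min) return gray;  // a single gray level: nothing to stretch

    std::vector<unsigned char> out(gray.size());
    for (std::size_t i = 0; i < gray.size(); ++i) {
        double scaled = 255.0 * (cdf[gray[i]] - cdf_min) / (total - cdf_min);
        out[i] = static_cast<unsigned char>(std::lround(scaled));
    }
    return out;
}
```

The darkest level present always maps to 0 and the brightest to 255, which is why the equalized histogram spreads across the full range.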

Result:

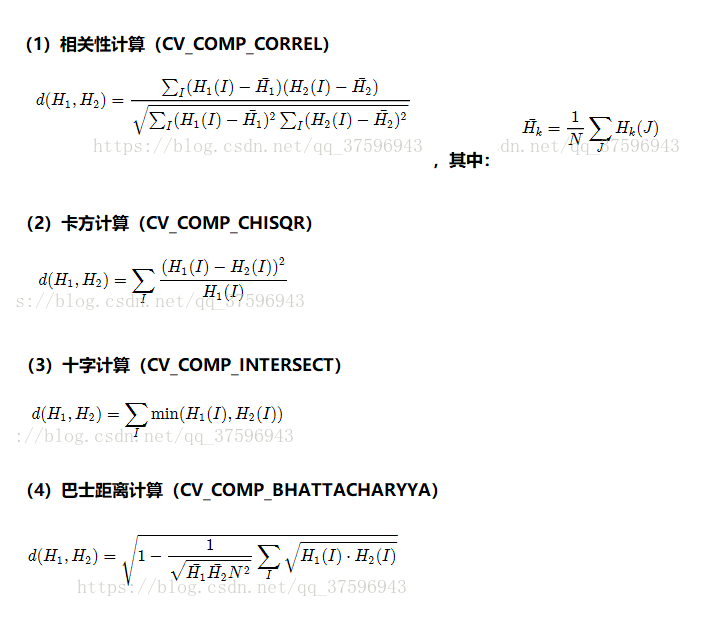

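14. Comparing Images by Histogram Similarity

Compute the histograms H1 and H2 of the two input images and normalize them to the same scale. The distance between H1 and H2 then measures how similar the histograms are, which in turn serves as a measure of how similar the images themselves are.

The four comparison methods covered here are:

Correlation

Chi-Square

Intersection

Bhattacharyya distance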
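Two of these measures can be sketched directly from their usual definitions. The code below is a standard-library illustration on small histograms stored as vectors; `hist_correlation` and `hist_bhattacharyya` are hypothetical names, and treating two flat histograms as perfectly correlated is an assumption of this sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <numeric>
#include <vector>

// Correlation: 1 means a perfect linear match, lower values mean less similar.
double hist_correlation(const std::vector<double>& h1, const std::vector<double>& h2) {
    assert(h1.size() == h2.size() && !h1.empty());
    const double n = static_cast<double>(h1.size());
    const double m1 = std::accumulate(h1.begin(), h1.end(), 0.0) / n;
    const double m2 = std::accumulate(h2.begin(), h2.end(), 0.0) / n;
    double num = 0.0, d1 = 0.0, d2 = 0.0;
    for (std::size_t i = 0; i < h1.size(); ++i) {
        num += (h1[i] - m1) * (h2[i] - m2);
        d1 += (h1[i] - m1) * (h1[i] - m1);
        d2 += (h2[i] - m2) * (h2[i] - m2);
    }
    const double denom = std::sqrt(d1 * d2);
    return denom > 0.0 ? num / denom : 1.0;  // assumption: flat histograms count as identical
}

// Bhattacharyya distance: 0 means identical, 1 means no overlap at all.
double hist_bhattacharyya(const std::vector<double>& h1, const std::vector<double>& h2) {
    assert(h1.size() == h2.size() && !h1.empty());
    const double s1 = std::accumulate(h1.begin(), h1.end(), 0.0);
    const double s2 = std::accumulate(h2.begin(), h2.end(), 0.0);
    assert(s1 > 0.0 && s2 > 0.0);
    double bc = 0.0;  // Bhattacharyya coefficient before normalization
    for (std::size_t i = 0; i < h1.size(); ++i) bc += std::sqrt(h1[i] * h2[i]);
    bc /= std::sqrt(s1 * s2);
    return std::sqrt(std::max(0.0, 1.0 - bc));
}
```

Comparing a histogram with itself gives a correlation of 1 and a Bhattacharyya distance of 0, so the two measures move in opposite directions as similarity grows.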

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

void hist_compare();

int main(int argc, char** argv) {

hist_compare();

}

void hist_compare() {

Mat src1 = imread("D:/images/gray.png");

Mat src2 = imread("D:/images/box_in_scene.png");

imshow("input1", src1);

imshow("input2", src2);

// compute the histograms

int histSize[] = { 256,256,256 };

int channels[] = { 0,1,2 };

Mat hist1, hist2;

float c1[] = { 0,256 }; // the upper bound of each range is exclusive

float c2[] = { 0,256 };

float c3[] = { 0,256 };

const float* histRanges[] = { c1,c2,c3 };

Mat blue_hist, green_hist, red_hist;

calcHist(&src1, 1, channels, Mat(), hist1, 3, histSize, histRanges, true, false); // joint 3-D histogram over the B, G and R channels

calcHist(&src2, 1, channels, Mat(), hist2, 3, histSize, histRanges, true, false);

// normalize

normalize(hist1, hist1, 0, 1.0, NORM_MINMAX, -1, Mat());

normalize(hist2, hist2, 0, 1.0, NORM_MINMAX, -1, Mat());

// Bhattacharyya distance: smaller means more similar (negatively related to similarity); the minimum is 0.00

double h12 = compareHist(hist1, hist2, HISTCMP_BHATTACHARYYA);

double h11 = compareHist(hist1, hist1, HISTCMP_BHATTACHARYYA);

printf("h12:%.2f,h11:%.2f\n", h12, h11);

// correlation: larger means more similar (positively related to similarity); the maximum is 1.00

double c12 = compareHist(hist1, hist2, HISTCMP_CORREL);

double c11 = compareHist(hist1, hist1, HISTCMP_CORREL);

printf("c12:%.2f,c11:%.2f\n", c12, c11);

waitKey(0);

destroyAllWindows();

}

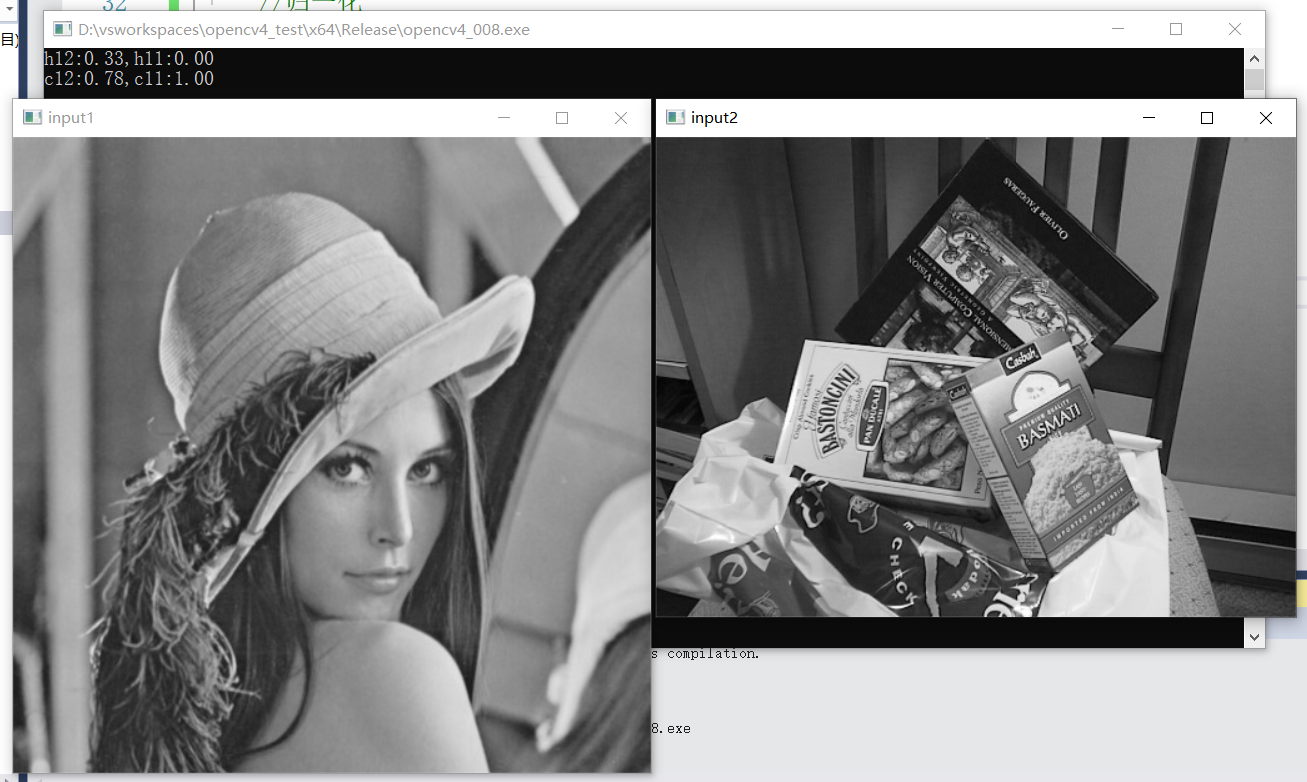

Result:

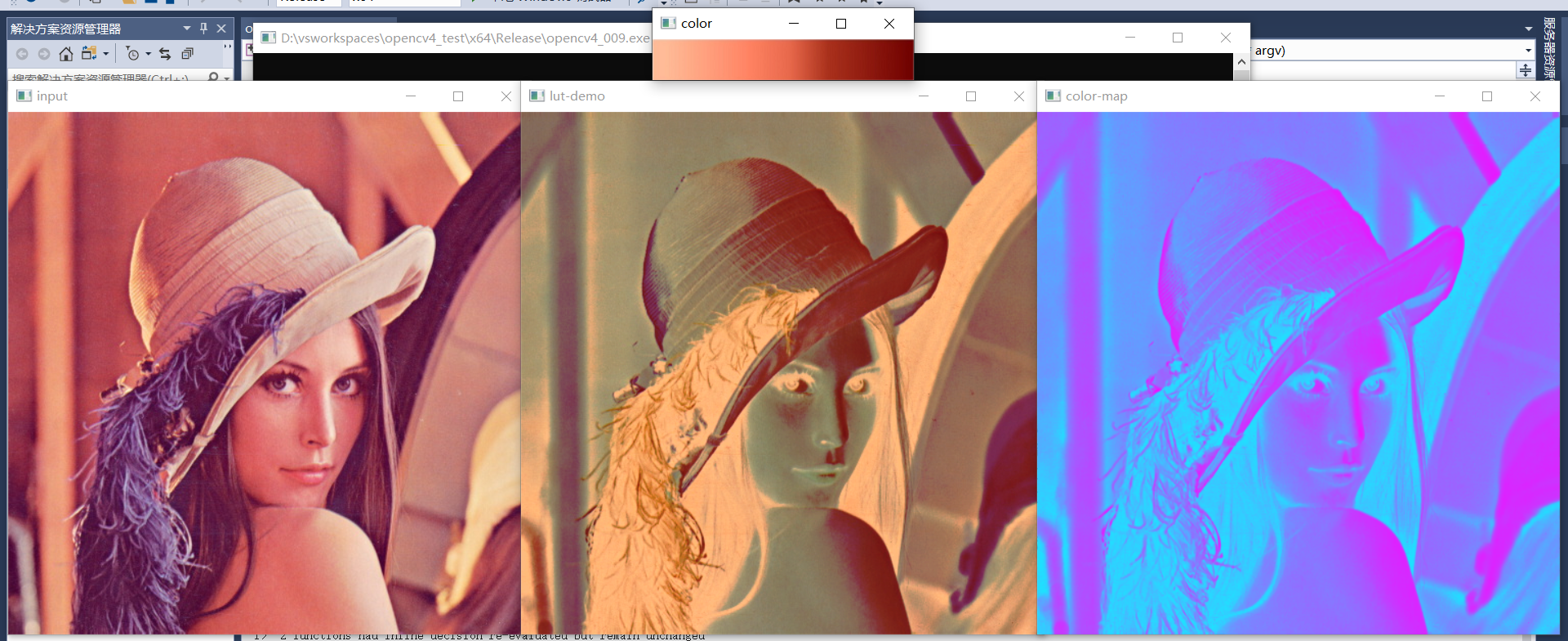

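15. Image Lookup Tables and Color Maps

LUT (Look-Up Table): the result for every possible pixel value is computed once in advance and stored in a table. During processing, each pixel is simply replaced by the table entry for its value, so the same calculation is not repeated for every pixel.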
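As a small illustration of the idea, the sketch below precomputes a gamma curve into a 256-entry table and then applies it with one array lookup per pixel. The gamma curve is just an example transform chosen for this sketch, and `Lut`, `make_gamma_lut` and `apply_lut` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Lut = std::array<unsigned char, 256>;

// Precompute a gamma curve once: 256 pow() calls in total, regardless of image size.
Lut make_gamma_lut(double gamma) {
    assert(gamma > 0.0);
    Lut lut{};
    for (int v = 0; v < 256; ++v) {
        lut[v] = static_cast<unsigned char>(std::lround(255.0 * std::pow(v / 255.0, gamma)));
    }
    return lut;
}

// Applying the table costs a single array lookup per pixel.
void apply_lut(std::vector<unsigned char>& pixels, const Lut& lut) {
    for (unsigned char& p : pixels) p = lut[p];
}
```

For a one-megapixel image this replaces a million `pow` calls with 256, which is the saving the paragraph above describes.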

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/lena.jpg");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

Mat color = imread("D:/images/lut.png");

Mat lut = Mat::zeros(256, 1, CV_8UC3); // first argument: number of rows, second argument: number of columns

for (int i = 0; i < 256; i++) {

lut.at<Vec3b>(i, 0) = color.at<Vec3b>(10, i);

}

imshow("color", color);

Mat dst;

LUT(src, lut, dst); // use the custom lookup table

imshow("lut-demo", dst);

applyColorMap(src, dst, COLORMAP_COOL); // use one of OpenCV's built-in color maps

imshow("color-map", dst);

waitKey(0);

destroyAllWindows();

return 0;

}

Result:

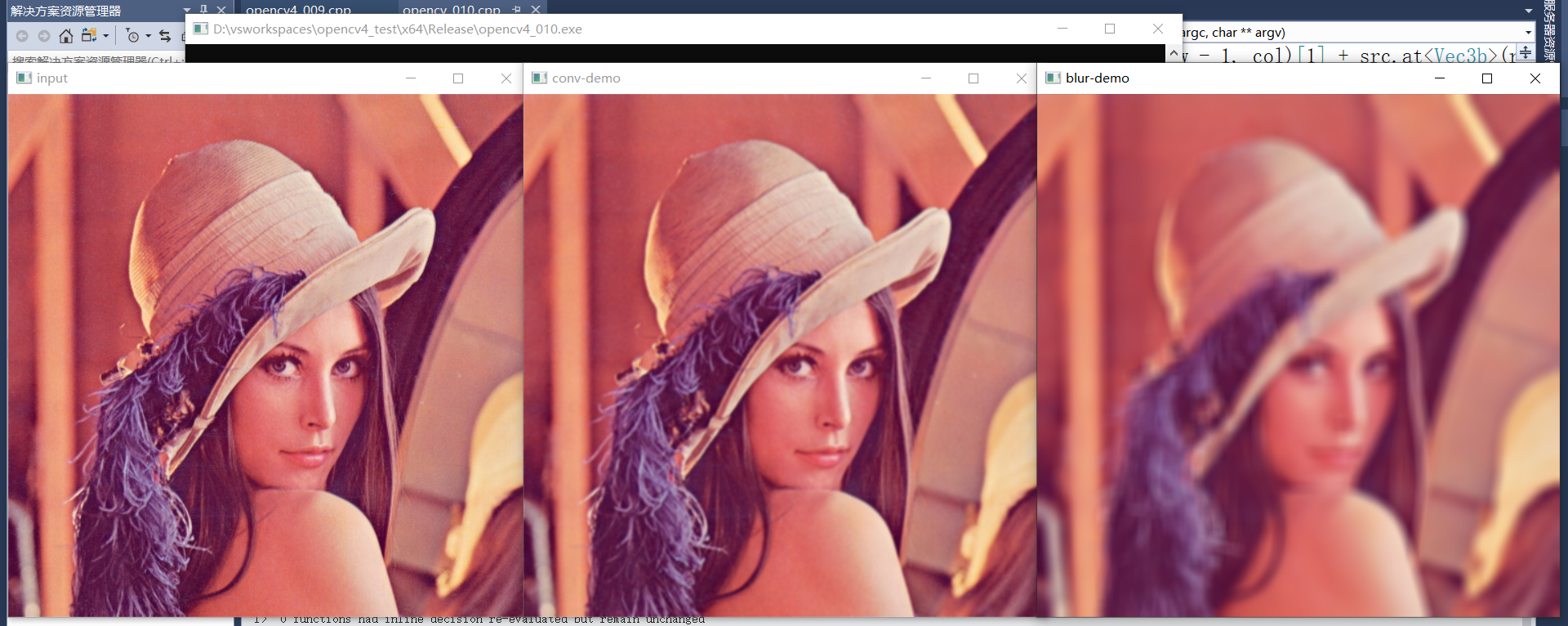

16. Image Convolution

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/lena.jpg");

Mat result = src.clone();

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

int h = src.rows;

int w = src.cols;

for (int row = 1; row < h - 1; row++) {

for (int col = 1; col < w - 1; col++) {

int sb = src.at<Vec3b>(row - 1, col - 1)[0] + src.at<Vec3b>(row - 1, col)[0] + src.at<Vec3b>(row - 1, col + 1)[0] +

src.at<Vec3b>(row, col - 1)[0] + src.at<Vec3b>(row, col)[0] + src.at<Vec3b>(row, col + 1)[0] +

src.at<Vec3b>(row + 1, col - 1)[0] + src.at<Vec3b>(row + 1, col)[0] + src.at<Vec3b>(row + 1, col + 1)[0];

int sg = src.at<Vec3b>(row - 1, col - 1)[1] + src.at<Vec3b>(row - 1, col)[1] + src.at<Vec3b>(row - 1, col + 1)[1] +

src.at<Vec3b>(row, col - 1)[1] + src.at<Vec3b>(row, col)[1] + src.at<Vec3b>(row, col + 1)[1] +

src.at<Vec3b>(row + 1, col - 1)[1] + src.at<Vec3b>(row + 1, col)[1] + src.at<Vec3b>(row + 1, col + 1)[1];

int sr = src.at<Vec3b>(row - 1, col - 1)[2] + src.at<Vec3b>(row - 1, col)[2] + src.at<Vec3b>(row - 1, col + 1)[2] +

src.at<Vec3b>(row, col - 1)[2] + src.at<Vec3b>(row, col)[2] + src.at<Vec3b>(row, col + 1)[2] +

src.at<Vec3b>(row + 1, col - 1)[2] + src.at<Vec3b>(row + 1, col)[2] + src.at<Vec3b>(row + 1, col + 1)[2];

result.at<Vec3b>(row, col)[0] = sb / 9;

result.at<Vec3b>(row, col)[1] = sg / 9;

result.at<Vec3b>(row, col)[2] = sr / 9;

}

}

imshow("conv-demo", result);

Mat dst;

blur(src, dst, Size(13, 13), Point(-1, -1), BORDER_DEFAULT); // a negative anchor means the anchor is at the kernel center

imshow("blur-demo", dst);

waitKey(0);

destroyAllWindows();

return 0;

}

Result:

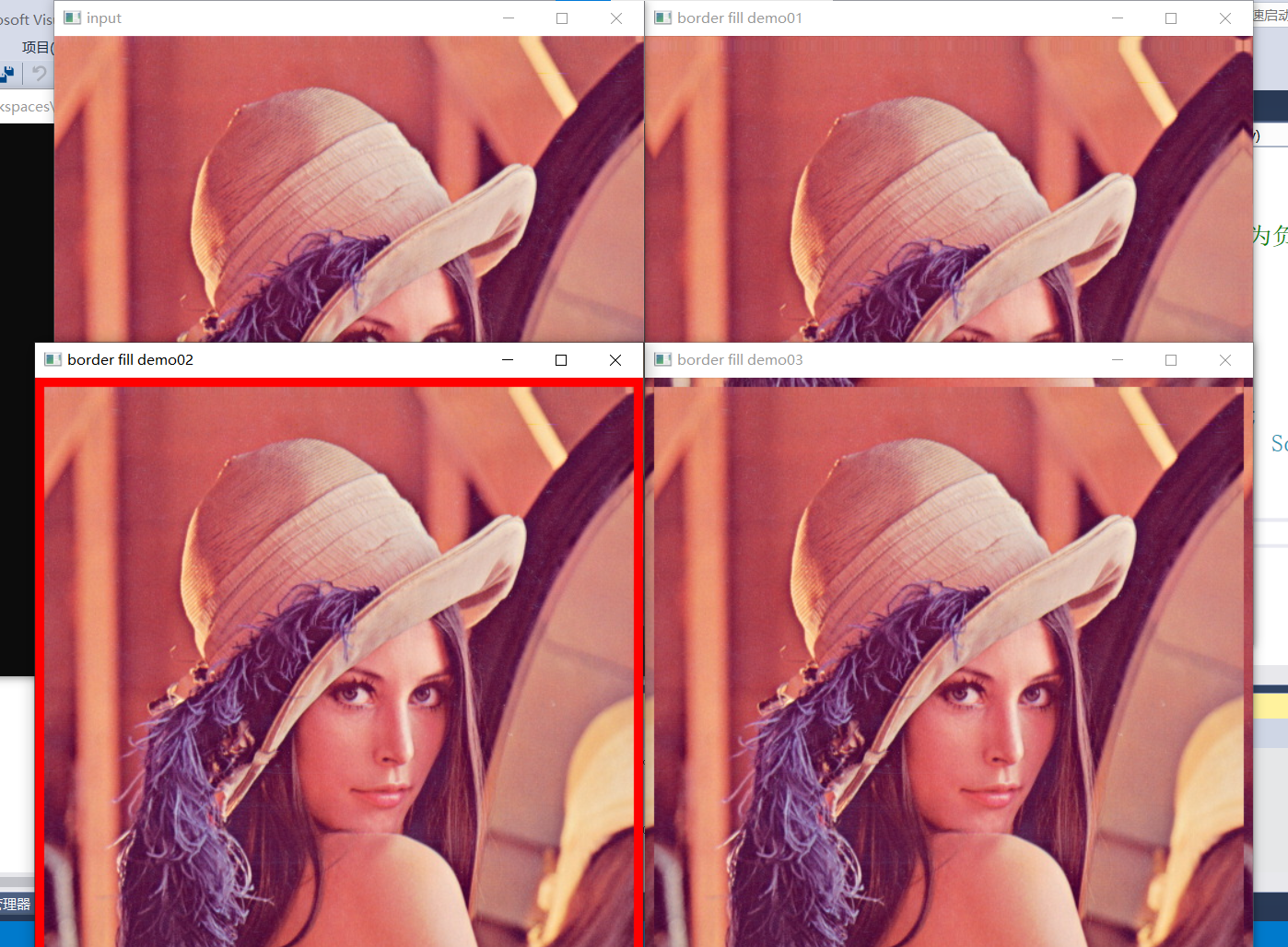

17. Border Handling in Convolution

- Ways to pad the border pixels during convolution

| Border type | Padding pattern |
|---|---|
| BORDER_CONSTANT | iiiiii\|abcdefgh\|iiiiiii (i is the specified constant) |
| BORDER_REPLICATE | aaaaaa\|abcdefgh\|hhhhhhh |
| BORDER_WRAP | cdefgh\|abcdefgh\|abcdefg |
| BORDER_REFLECT_101 (mirror reflection) | gfedcb\|abcdefgh\|gfedcba |
| BORDER_DEFAULT | gfedcb\|abcdefgh\|gfedcba |
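Each pattern in the table amounts to a rule that maps an out-of-range index back into the image. The sketch below writes those rules as plain index functions so they can be checked against the table rows; the function names are hypothetical and no OpenCV call is involved.

```cpp
#include <cassert>

// Map an out-of-range index onto [0, n) the way each border pattern in the table does.

// aaaaaa|abcdefgh|hhhhhhh: clamp to the nearest edge pixel.
int border_replicate(int i, int n) {
    assert(n > 0);
    return i < 0 ? 0 : (i >= n ? n - 1 : i);
}

// cdefgh|abcdefgh|abcdefg: continue from the opposite side.
int border_wrap(int i, int n) {
    assert(n > 0);
    int r = i % n;
    return r < 0 ? r + n : r;
}

// gfedcb|abcdefgh|gfedcba: mirror around the edge pixel without repeating it.
int border_reflect101(int i, int n) {
    assert(n > 0);
    if (n == 1) return 0;
    while (i < 0 || i >= n) {
        i = i < 0 ? -i : 2 * (n - 1) - i;
    }
    return i;
}
```

With n = 8 and the letters a to h at indices 0 to 7, index -1 maps to a (replicate), h (wrap) and b (reflect 101), matching the character immediately left of the first bar in each row.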

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/lena.jpg");

Mat result = src.clone();

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

int h = src.rows;

int w = src.cols;

for (int row = 1; row < h - 1; row++) {

for (int col = 1; col < w - 1; col++) {

int sb = src.at<Vec3b>(row - 1, col - 1)[0] + src.at<Vec3b>(row - 1, col)[0] + src.at<Vec3b>(row - 1, col + 1)[0] +

src.at<Vec3b>(row, col - 1)[0] + src.at<Vec3b>(row, col)[0] + src.at<Vec3b>(row, col + 1)[0] +

src.at<Vec3b>(row + 1, col - 1)[0] + src.at<Vec3b>(row + 1, col)[0] + src.at<Vec3b>(row + 1, col + 1)[0];

int sg = src.at<Vec3b>(row - 1, col - 1)[1] + src.at<Vec3b>(row - 1, col)[1] + src.at<Vec3b>(row - 1, col + 1)[1] +

src.at<Vec3b>(row, col - 1)[1] + src.at<Vec3b>(row, col)[1] + src.at<Vec3b>(row, col + 1)[1] +

src.at<Vec3b>(row + 1, col - 1)[1] + src.at<Vec3b>(row + 1, col)[1] + src.at<Vec3b>(row + 1, col + 1)[1];

int sr = src.at<Vec3b>(row - 1, col - 1)[2] + src.at<Vec3b>(row - 1, col)[2] + src.at<Vec3b>(row - 1, col + 1)[2] +

src.at<Vec3b>(row, col - 1)[2] + src.at<Vec3b>(row, col)[2] + src.at<Vec3b>(row, col + 1)[2] +

src.at<Vec3b>(row + 1, col - 1)[2] + src.at<Vec3b>(row + 1, col)[2] + src.at<Vec3b>(row + 1, col + 1)[2];

result.at<Vec3b>(row, col)[0] = sb / 9;

result.at<Vec3b>(row, col)[1] = sg / 9;

result.at<Vec3b>(row, col)[2] = sr / 9;

}

}

imshow("conv-demo", result);

Mat dst;

blur(src, dst, Size(13, 13), Point(-1, -1), BORDER_DEFAULT); // a negative anchor means the anchor is at the kernel center

imshow("blur-demo", dst);

// border padding

int border = 8;

Mat border_m01, border_m02, border_m03;

copyMakeBorder(src, border_m01, border, border, border, border, BORDER_DEFAULT);

copyMakeBorder(src, border_m02, border, border, border, border, BORDER_CONSTANT, Scalar(0, 0, 255));

copyMakeBorder(src, border_m03, border, border, border, border, BORDER_WRAP);

imshow("border fill demo01", border_m01);

imshow("border fill demo02", border_m02);

imshow("border fill demo03", border_m03);

waitKey(0);

destroyAllWindows();

return 0;

}

Result:

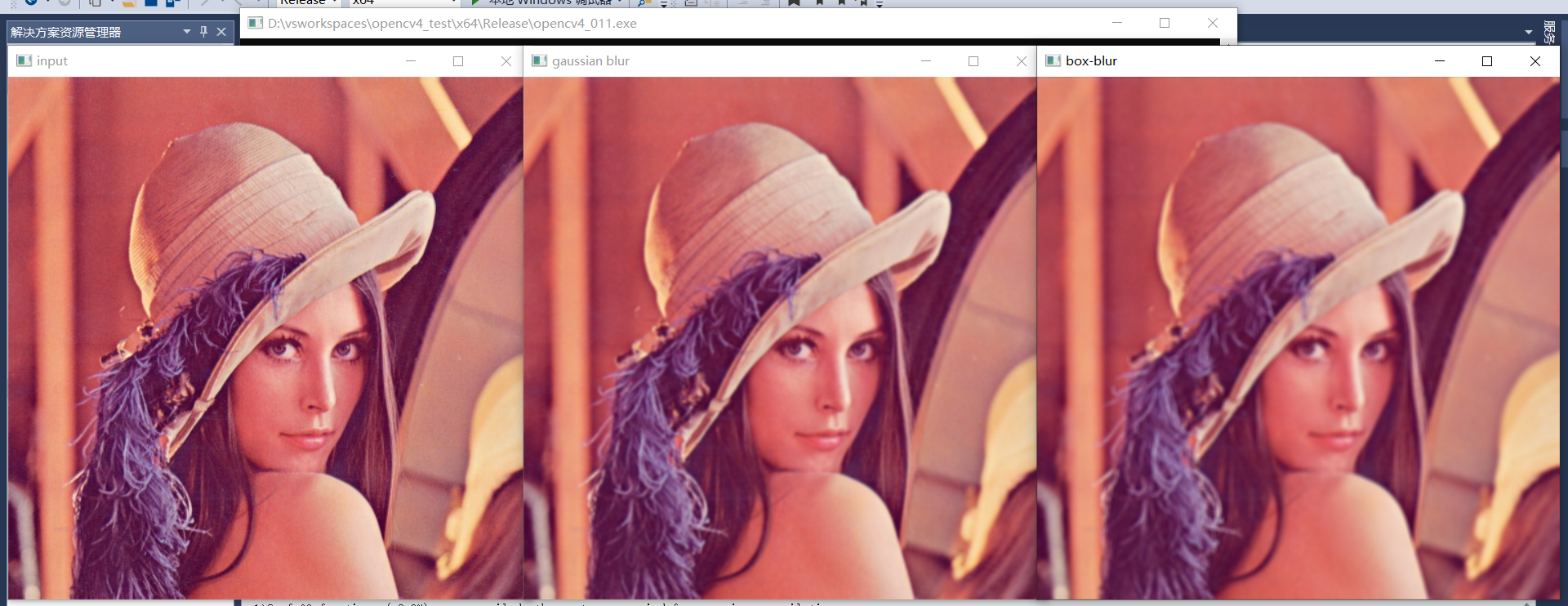

18. Image Blurring

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/lena.jpg");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

// Gaussian blur

Mat dst;

GaussianBlur(src, dst, Size(5, 5), 0);

imshow("gaussian blur", dst);

// box blur (mean blur)

Mat box_dst;

boxFilter(src, box_dst, -1, Size(5, 5), Point(-1, -1), true, BORDER_DEFAULT);

imshow("box-blur", box_dst);

waitKey(0);

destroyAllWindows();

return 0;

}

Result:

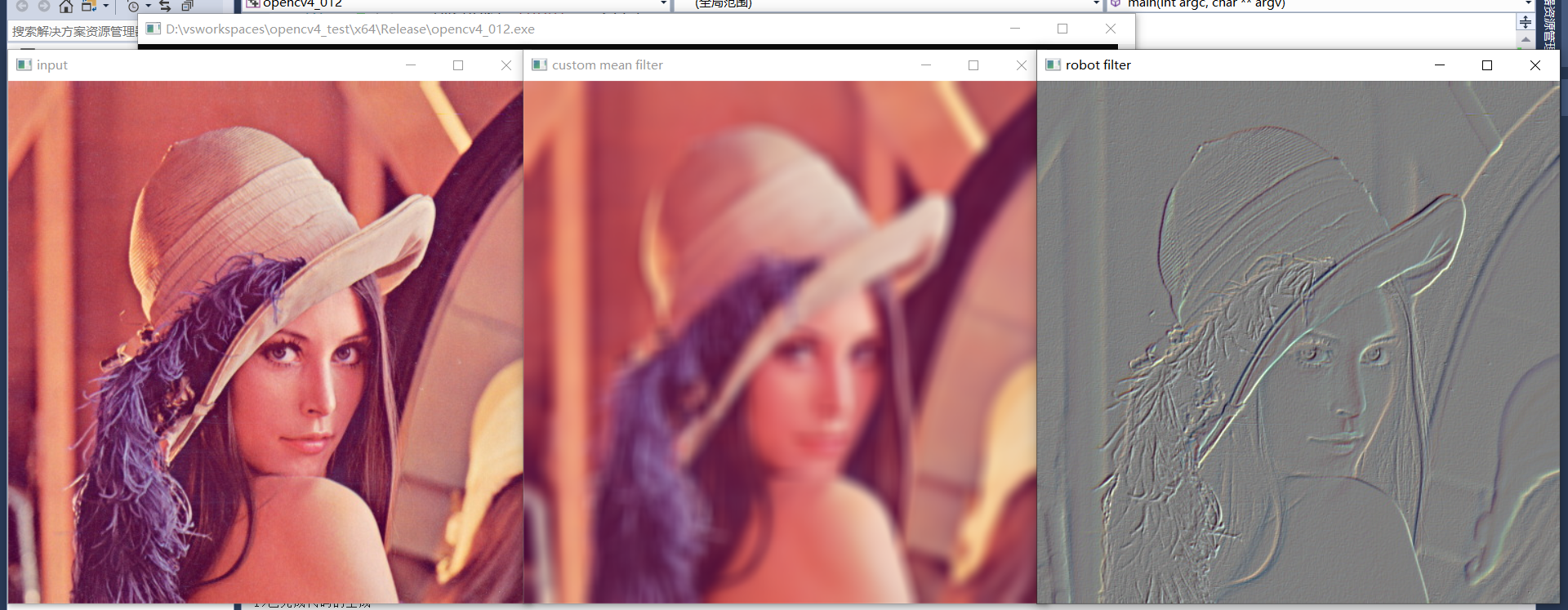

19. Custom Filters

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/lena.jpg");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

// custom filter: mean convolution

int k = 15;

Mat mkernel = Mat::ones(k, k, CV_32F) / (float)(k*k);

Mat dst;

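filter2D(src, dst, -1, mkernel, Point(-1, -1), 0, BORDER_DEFAULT); // the delta argument (0 here) can be used to raise brightness

imshow("custom mean filter", dst);

// non-mean filter: an image gradient, which makes edge information easier to see

Mat robot = (Mat_<int>(2, 2) << 1, 0, 0, -1); // Roberts kernel

Mat result;

filter2D(src, result, CV_32F, robot, Point(-1, -1), 127, BORDER_DEFAULT); // delta = 127 brightens the result so it is easier to see

convertScaleAbs(result, result); // take absolute values so that negative responses are not lost when displayed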

imshow("robot filter", result);

waitKey(0);

destroyAllWindows();

return 0;

}

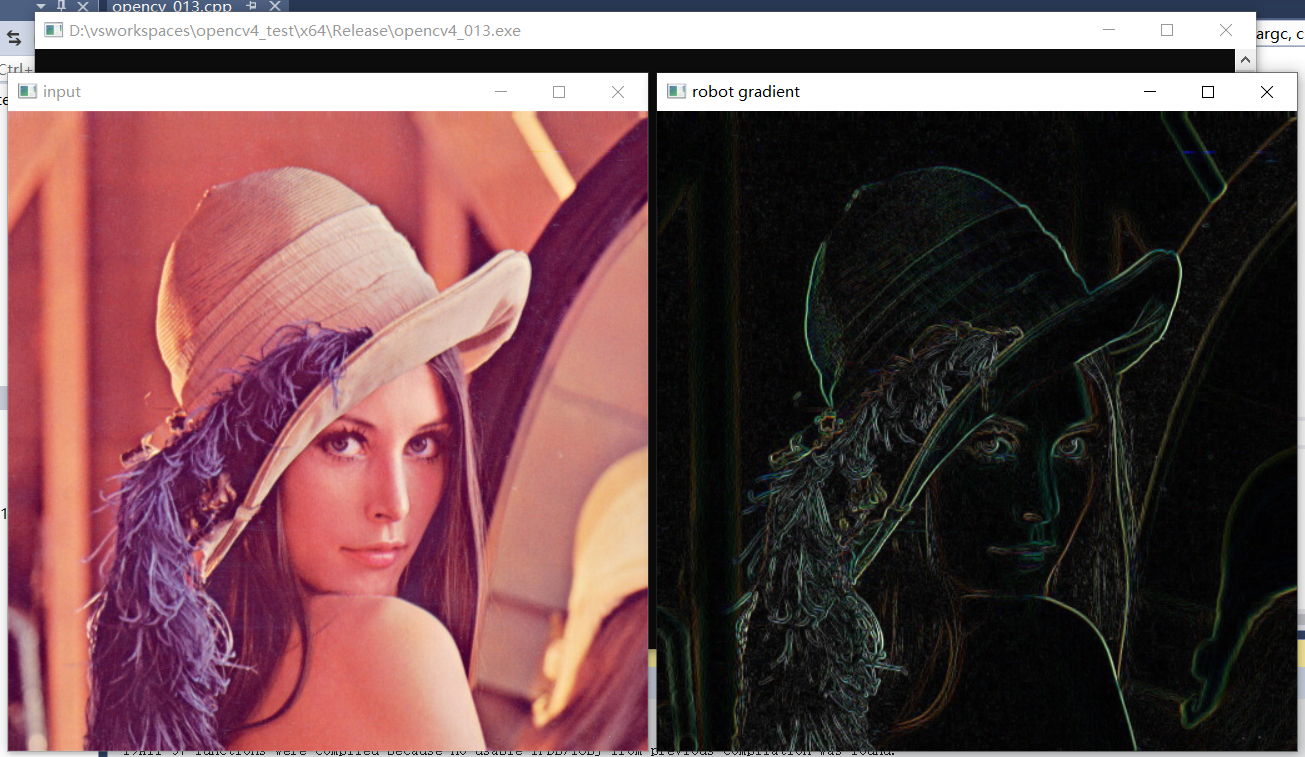

Result:

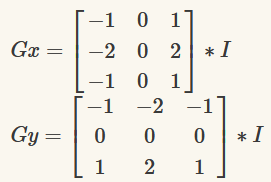

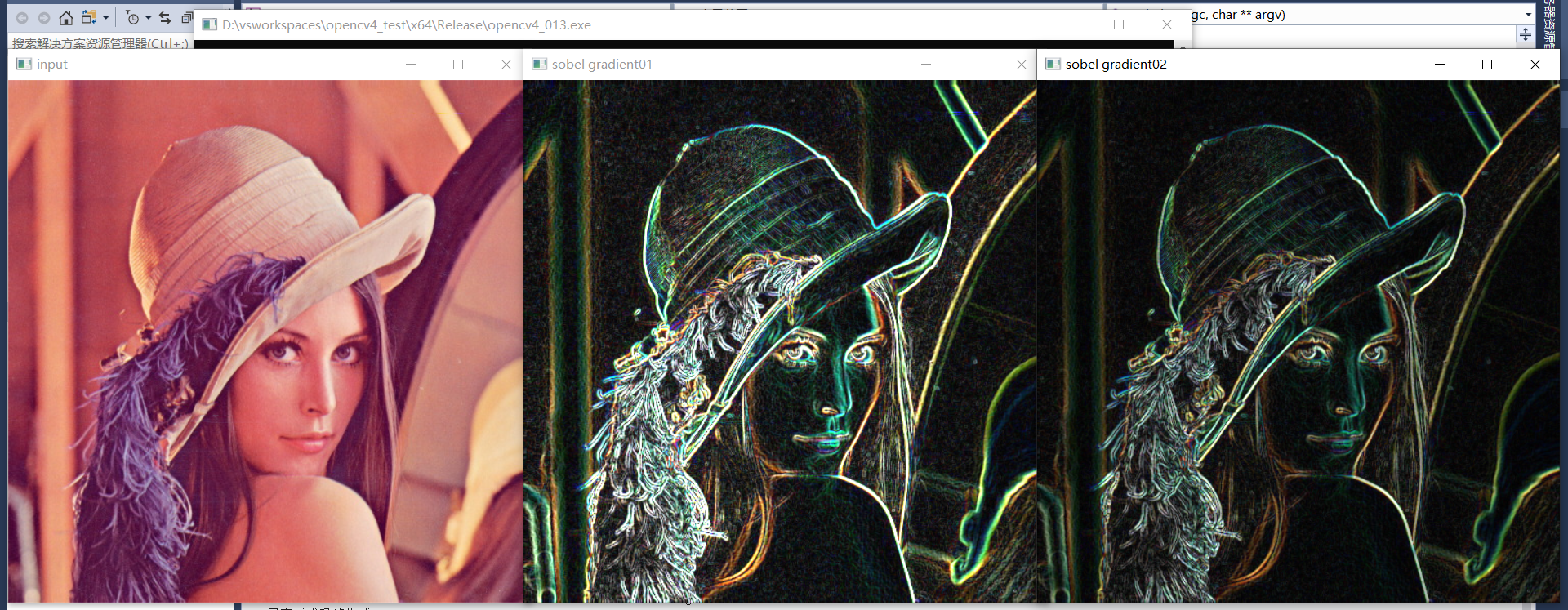

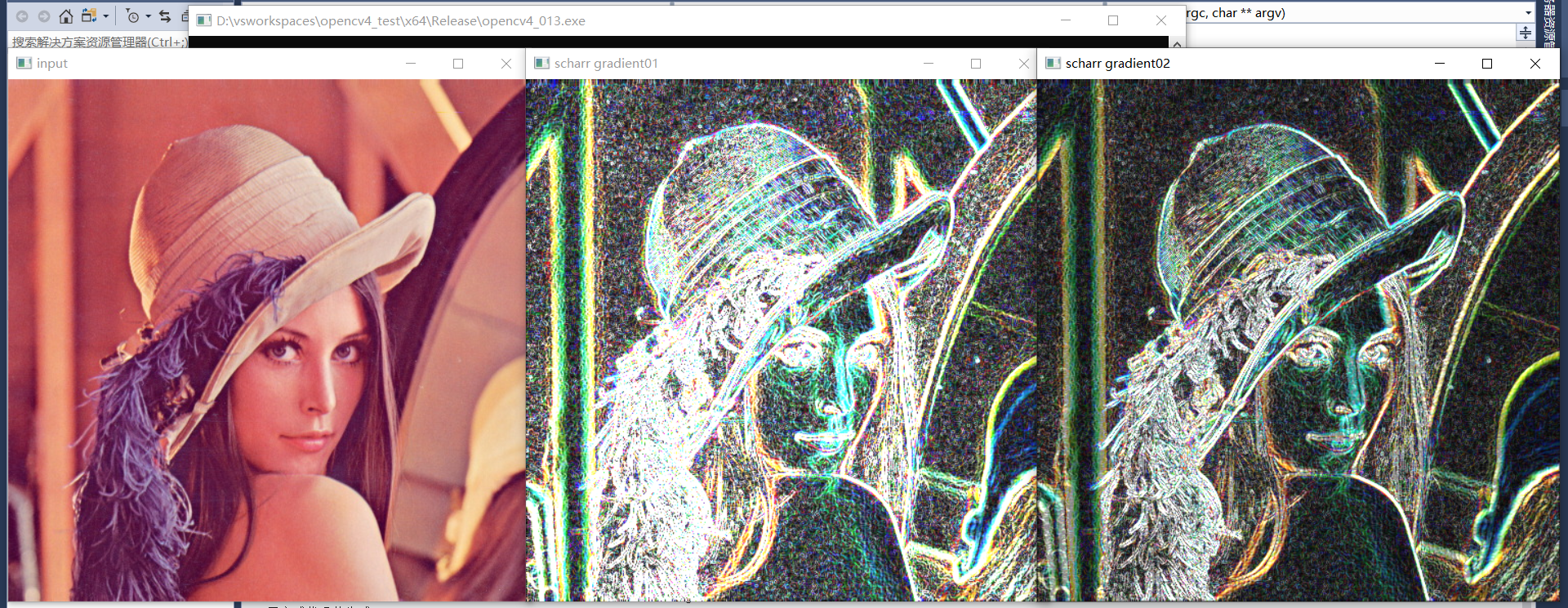

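20. Image Gradients

- Image convolution

- Blur

- Gradient: Sobel operator, Scharr operator, Roberts operator

- Edges

- Sharpening

Each operator comes as a pair: one kernel for the x direction and one for the y direction.

1. Roberts operator (named robot in the code): the simplest, but with the weakest results

2. Sobel operator: good gradient detection, but less robust to noise than the Scharr operator

3. Scharr operator: more robust to noise than the Sobel operator, but its output contains more spurious detail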
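For reference, the standard 3x3 Sobel and Scharr kernels can be written out and applied by hand. The sketch below evaluates a kernel at one interior pixel of a small grayscale grid, without flipping the kernel; `Kernel3`, `transpose` and `response_at` are hypothetical names used only for this illustration.

```cpp
#include <array>
#include <cassert>
#include <vector>

using Kernel3 = std::array<int, 9>;  // 3x3 kernel stored row by row

// Standard x-direction kernels; the y-direction kernels are their transposes.
const Kernel3 kSobelX  = {-1, 0, 1,   -2, 0, 2,   -1, 0, 1};
const Kernel3 kScharrX = {-3, 0, 3,  -10, 0, 10,  -3, 0, 3};

Kernel3 transpose(const Kernel3& k) {
    return {k[0], k[3], k[6], k[1], k[4], k[7], k[2], k[5], k[8]};
}

// Response of a 3x3 kernel centered on interior pixel (x, y) of a grayscale image.
// The kernel is applied as written, without flipping.
int response_at(const std::vector<int>& img, int width, int x, int y, const Kernel3& k) {
    assert(width > 0);
    const int height = static_cast<int>(img.size()) / width;
    assert(x >= 1 && y >= 1 && x < width - 1 && y < height - 1);
    int sum = 0;
    for (int dy = -1; dy <= 1; ++dy) {
        for (int dx = -1; dx <= 1; ++dx) {
            sum += k[(dy + 1) * 3 + (dx + 1)] * img[(y + dy) * width + (x + dx)];
        }
    }
    return sum;
}
```

On a vertical step edge (columns 0, 0, 10) the x kernels respond strongly while the y kernels give 0, and Scharr weights the same edge four times as heavily as Sobel (160 versus 40).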

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/lena.jpg");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

// Roberts gradient

Mat robot_x = (Mat_<int>(2, 2) << 1, 0, 0, -1);

Mat robot_y = (Mat_<int>(2, 2) << 0, 1, -1, 0);

Mat grad_x,grad_y;

filter2D(src, grad_x, CV_32F, robot_x, Point(-1, -1), 0, BORDER_DEFAULT);

filter2D(src, grad_y, CV_32F, robot_y, Point(-1, -1), 0, BORDER_DEFAULT);

convertScaleAbs(grad_x, grad_x); // take absolute values; otherwise negative gradients cannot be displayed and part of the result is lost

convertScaleAbs(grad_y, grad_y);

Mat result;

add(grad_x, grad_y, result);

//imshow("robot gradient", result);

// Sobel operator

Sobel(src, grad_x, CV_32F, 1, 0);

Sobel(src, grad_y, CV_32F, 0, 1);

convertScaleAbs(grad_x, grad_x);

convertScaleAbs(grad_y, grad_y);

Mat result2,result3;

add(grad_x, grad_y, result2);

addWeighted(grad_x, 0.5, grad_y, 0.5, 0, result3);

imshow("sobel gradient01", result2);

imshow("sobel gradient02", result3);

// Scharr operator

Scharr(src, grad_x, CV_32F, 1, 0);

Scharr(src, grad_y, CV_32F, 0, 1);

convertScaleAbs(grad_x, grad_x);

convertScaleAbs(grad_y, grad_y);

Mat result4, result5;

add(grad_x, grad_y, result4);

addWeighted(grad_x, 0.5, grad_y, 0.5, 0, result5);

imshow("scharr gradient01", result4);

imshow("scharr gradient02", result5);

waitKey(0);

destroyAllWindows();

return 0;

}

Result:

1. Roberts operator

2. Sobel operator

3. Scharr operator

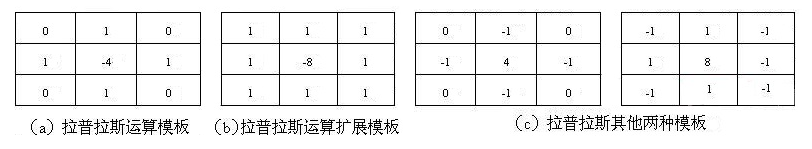

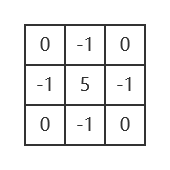

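21. Image Edge Detection

The Laplacian operator is a second-derivative operator and is sensitive to noise.

Laplacian kernel template

Image sharpening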

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/lena.jpg");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

Mat dst;

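Laplacian(src, dst, -1, 3, 1.0, 0, BORDER_DEFAULT); // the Laplacian operator is sensitive to noise

imshow("laplacian demo", dst);

// sharpening

Mat sh_op = (Mat_<int>(3, 3) << 0, -1, 0, // sharpening kernel: the original image plus a Laplacian response (center 4, neighbors -1)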

-1, 5, -1,

0, -1, 0);

Mat result;

filter2D(src, result, -1, sh_op, Point(-1, -1), 0, BORDER_DEFAULT); // keep the 8-bit depth so negative results saturate to 0 instead of being flipped to positive values

imshow("sharp filter", result);

waitKey(0);

destroyAllWindows();

return 0;

}

Result:

Laplacian edge detection:

Image sharpening:

22. USM Sharpening (Unsharp Mask)

Sharpening enhancement: sharp_image = blur - laplacian

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/lena.jpg");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

Mat blur_image, dst;

GaussianBlur(src, blur_image, Size(3, 3), 0); // apply a Gaussian filter first, then use the Laplacian operator to pick up edges and noise

Laplacian(src, dst, -1, 1, 1.0, 0, BORDER_DEFAULT);

imshow("laplacian demo", dst);

Mat usm_image;

// the weighted sum subtracts one image from the other, removing noise and strong boundaries while enhancing fine detail and small edges

addWeighted(blur_image, 1.0, dst, -1.0, 0, usm_image);

imshow("usm filter", usm_image);

waitKey(0);

destroyAllWindows();

return 0;

}

Result:

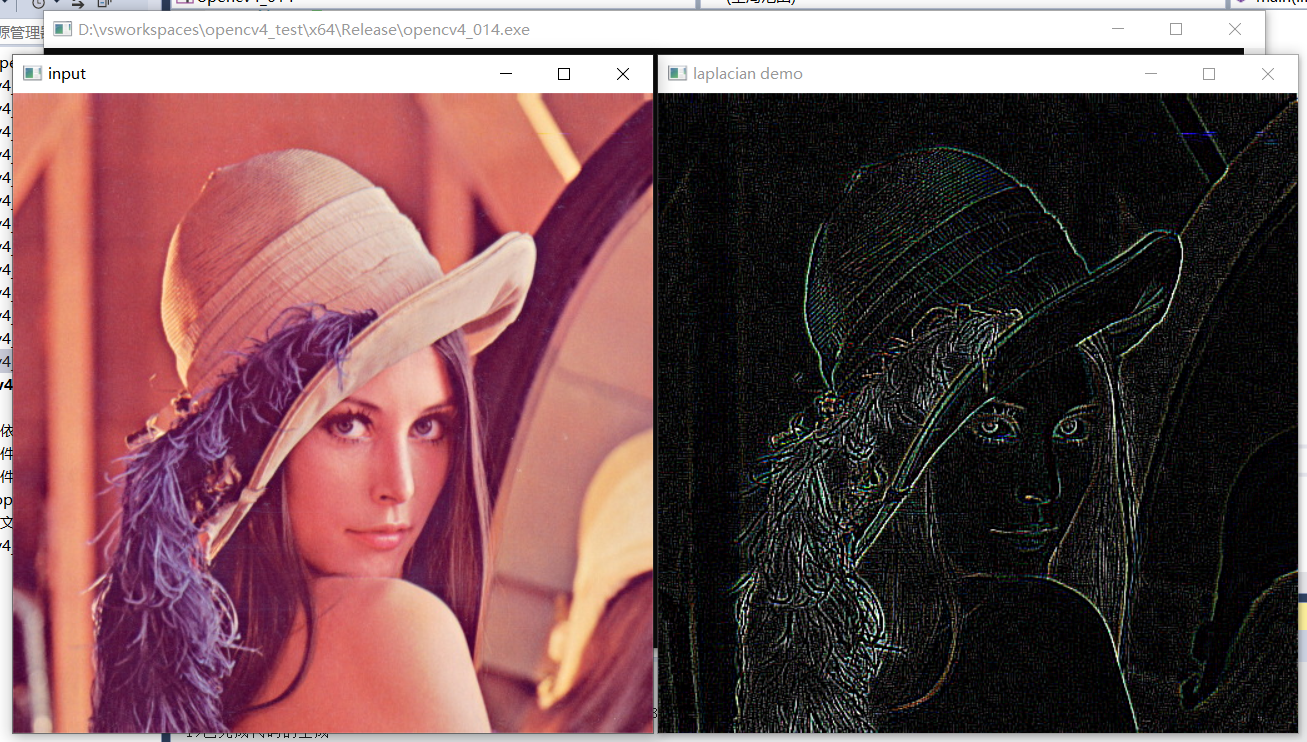

23. Image Noise

Noise types:

- Salt-and-pepper noise

- Gaussian noise

- Other noise...
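The two main noise types differ in how they change pixel values, which the following standard-library sketch makes explicit on a grayscale buffer. The function names and the use of `std::mt19937` are choices made for this illustration, and the Gaussian variant adds a signed offset before clamping, which is an assumption of the sketch.

```cpp
#include <cassert>
#include <random>
#include <vector>

// Salt-and-pepper noise: overwrite `count` random positions,
// alternating between black (0) and white (255).
void add_salt_and_pepper(std::vector<unsigned char>& gray, int count, unsigned seed) {
    assert(!gray.empty());
    std::mt19937 rng(seed);
    std::uniform_int_distribution<std::size_t> pick(0, gray.size() - 1);
    for (int i = 0; i < count; ++i) {
        gray[pick(rng)] = (i % 2 == 1) ? 255 : 0;
    }
}

// Gaussian noise: add a normally distributed offset to every pixel,
// then clamp the result to [0, 255].
void add_gaussian(std::vector<unsigned char>& gray, double mean, double stddev, unsigned seed) {
    assert(stddev > 0.0);
    std::mt19937 rng(seed);
    std::normal_distribution<double> noise(mean, stddev);
    for (unsigned char& p : gray) {
        double v = p + noise(rng);
        p = static_cast<unsigned char>(v < 0.0 ? 0.0 : (v > 255.0 ? 255.0 : v));
    }
}
```

Salt-and-pepper noise replaces a few pixels with extreme values and leaves the rest untouched, whereas Gaussian noise perturbs every pixel by a small random amount.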

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/lena.jpg");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

// salt and pepper noise: randomly placed black and white dots

RNG rng(12345);

Mat image = src.clone();

int h = src.rows;

int w = src.cols;

int nums = 10000;

for (int i = 0; i < nums; i++) {

int x = rng.uniform(0, w);

int y = rng.uniform(0, h);

if (i % 2 == 1) {

src.at<Vec3b>(y, x) = Vec3b(255, 255, 255);

}

else {

src.at<Vec3b>(y, x) = Vec3b(0, 0, 0);

}

}

imshow("salt and pepper noise", src);

// Gaussian noise: normally distributed dots of varying color

Mat noise = Mat::zeros(image.size(), image.type());

randn(noise, Scalar(25, 25, 25), Scalar(30, 30, 30));

Mat dst;

add(image, noise, dst);

imshow("gaussian noise", dst);

waitKey(0);

destroyAllWindows();

return 0;

}

Result:

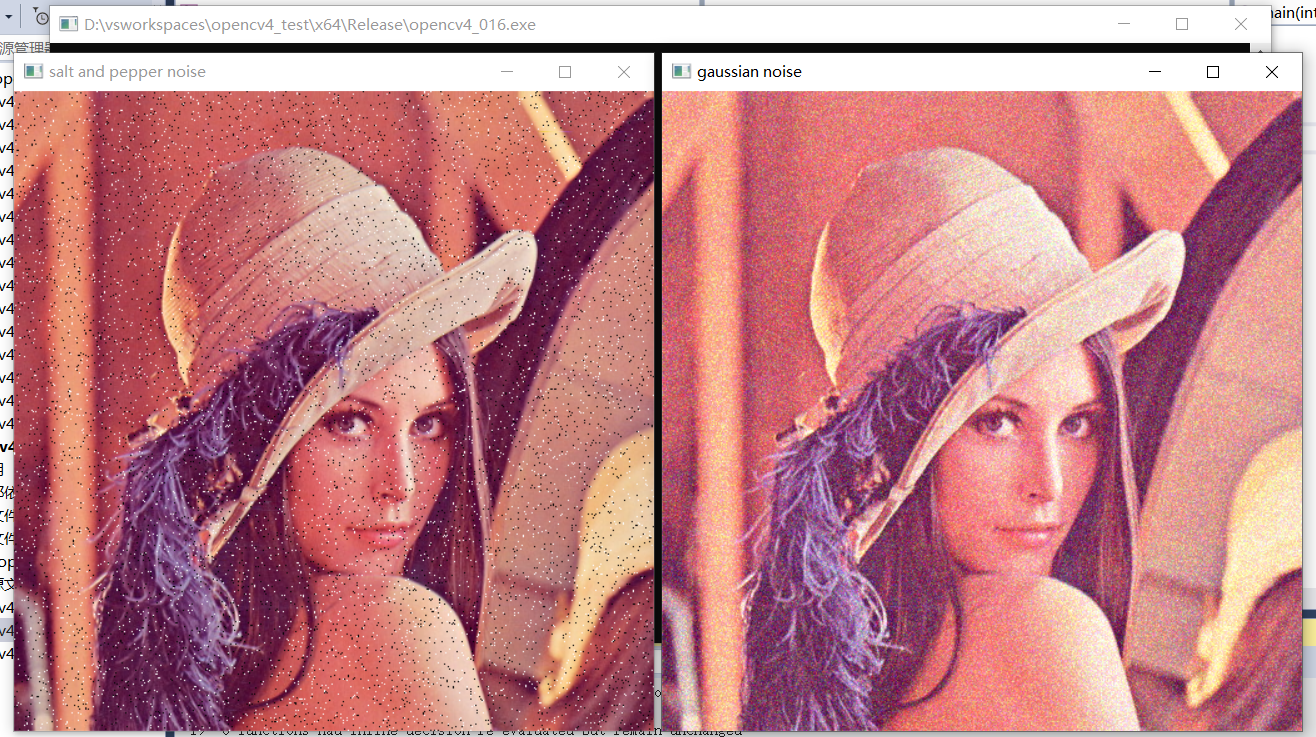

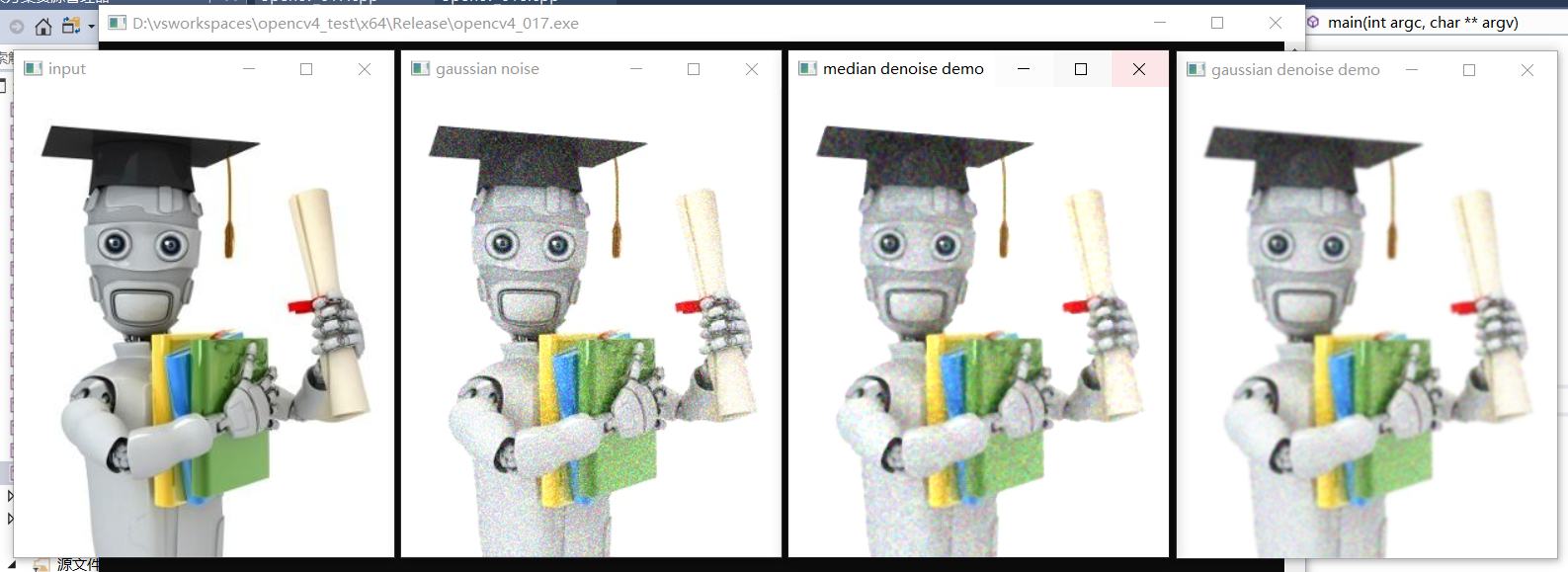

24. Image Denoising

Illustration of median filtering and mean filtering:
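The difference between the two filters shows up on a single neighborhood that contains one outlier. The sketch below computes the median and the mean of the values in a window; `window_median` and `window_mean` are hypothetical helper names.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <vector>

// Median of the values in a neighbourhood (for example the 9 values of a 3x3 window).
int window_median(std::vector<int> w) {
    assert(!w.empty());
    auto mid = w.begin() + w.size() / 2;
    std::nth_element(w.begin(), mid, w.end());
    return *mid;
}

// Mean of the same values, using integer division.
int window_mean(const std::vector<int>& w) {
    assert(!w.empty());
    return std::accumulate(w.begin(), w.end(), 0) / static_cast<int>(w.size());
}
```

For a window of eight pixels at 10 and one salt pixel at 255, the median stays at 10 while the mean rises to 37. This is why median filtering removes salt-and-pepper noise cleanly, while averaging only smears it.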

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

void add_salt_and_pepper_noise(Mat &image);

void add_gaussian_noise(Mat &image);

int main(int argc, char** argv) {

Mat src = imread("D:/images/ml.png");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

add_gaussian_noise(src);

// median filter

Mat dst;

medianBlur(src, dst, 3);

imshow("median denoise demo", dst);

// Gaussian filter

GaussianBlur(src, dst, Size(5, 5), 0);

imshow("gaussian denoise demo", dst);

waitKey(0);

destroyAllWindows();

return 0;

}

void add_salt_and_pepper_noise(Mat &image) {

// salt and pepper noise: randomly placed black and white dots

RNG rng(12345);

int h = image.rows;

int w = image.cols;

int nums = 10000;

for (int i = 0; i < nums; i++) {

int x = rng.uniform(0, w);

int y = rng.uniform(0, h);

if (i % 2 == 1) {

image.at<Vec3b>(y, x) = Vec3b(255, 255, 255);

}

else {

image.at<Vec3b>(y, x) = Vec3b(0, 0, 0);

}

}

imshow("salt and pepper noise", image);

}

void add_gaussian_noise(Mat &image) {

// Gaussian noise: normally distributed dots of varying color

Mat noise = Mat::zeros(image.size(), image.type());

randn(noise, Scalar(25, 25, 25), Scalar(30, 30, 30));

Mat dst;

add(image, noise, dst);

imshow("gaussian noise", dst);

dst.copyTo(image);

}

Result:

Salt-and-pepper noise:

Gaussian noise:

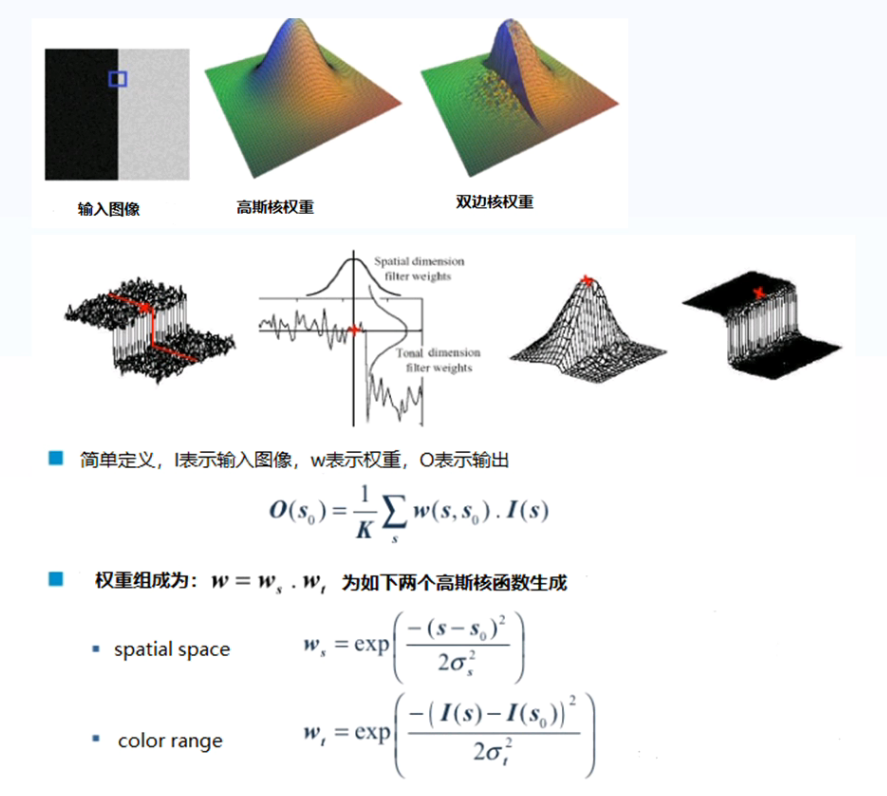

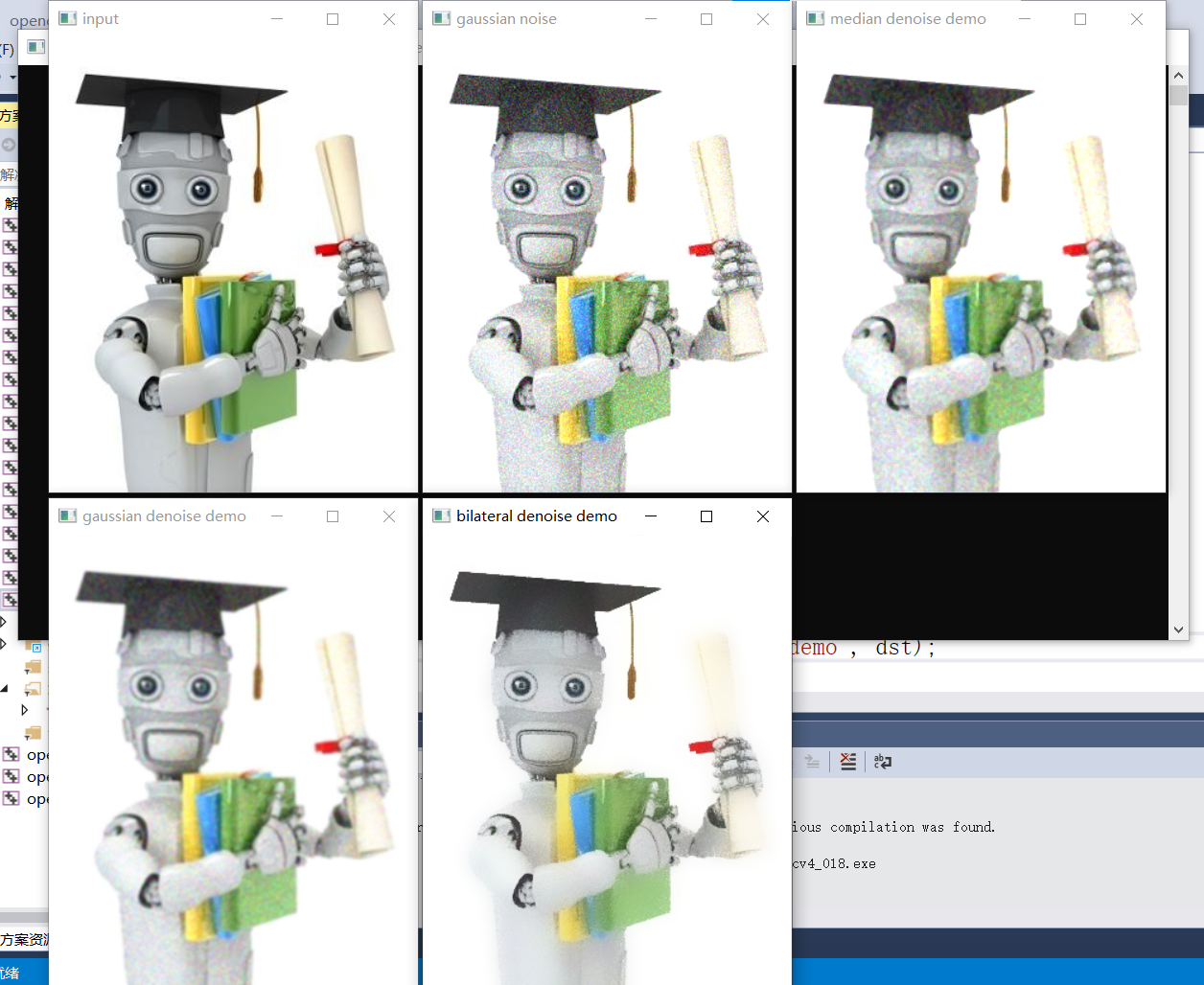

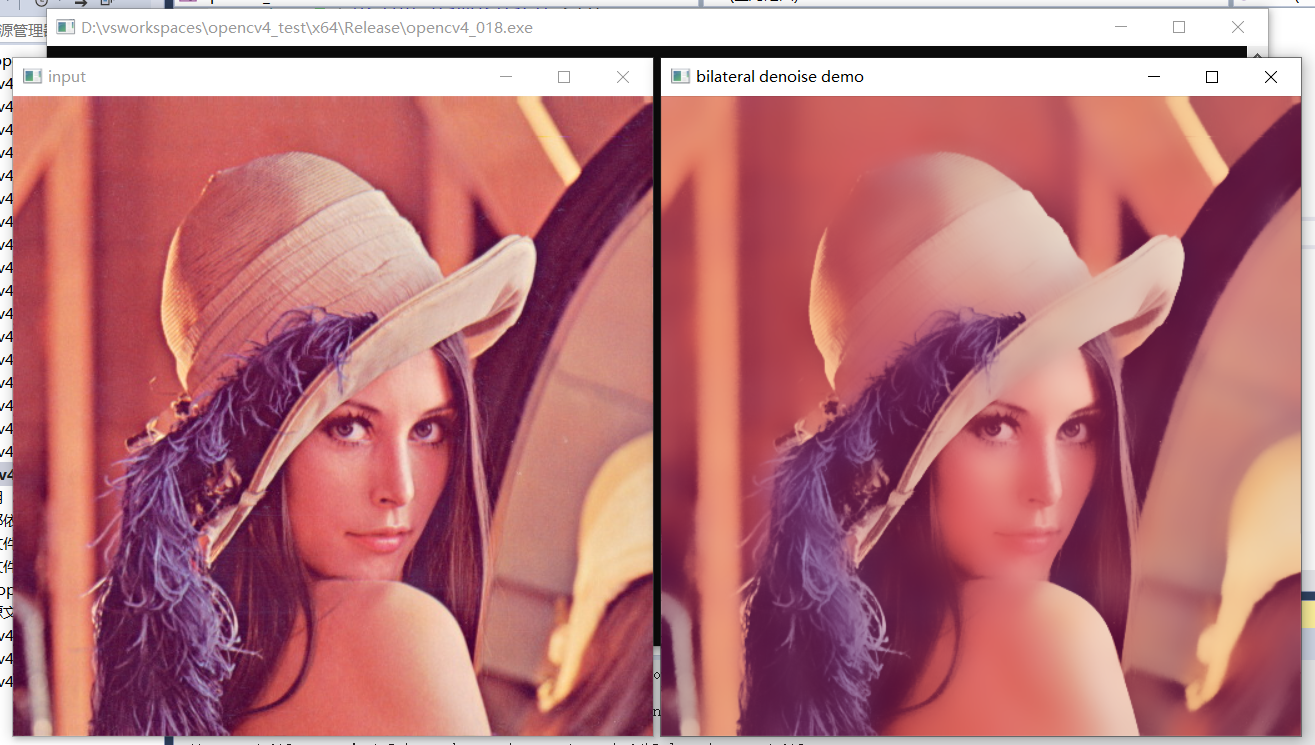

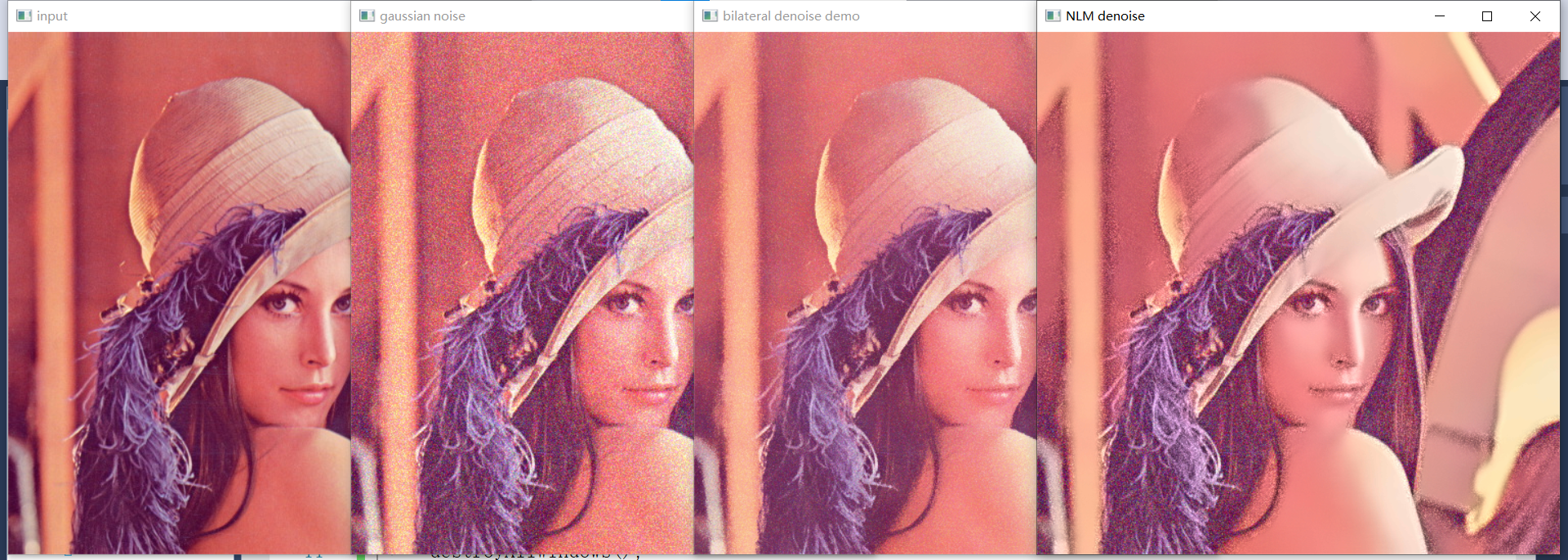

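25. Edge-Preserving Filters (EPF) on Gaussian Noise

- Gaussian bilateral filter

- In addition to the spatial kernel, it uses a second kernel based on color difference. When a neighbor's color differs greatly from the center pixel, its weight w approaches 0 and the neighbor is ignored, so Gaussian smoothing is applied only among pixels whose color is close to that of the center pixel.

- The main effect is to stop pixels on the other side of an edge from pulling the center pixel toward their values. Bright neighbors of a dark center pixel receive low weights, so the center pixel stays dark and the difference across the edge is preserved. The same holds in reverse for dark neighbors of a bright center pixel, because the weight depends only on the size of the difference.

Mean shift filtering

Non-local means filtering

- Similar pixel patches get large weights, dissimilar patches get small weights

- Good results, but computationally heavy and slow

Local mean square error filtering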
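The bilateral weighting described above can be sketched in one dimension: each neighbor's weight is the product of a spatial Gaussian and a Gaussian of the intensity difference. The code uses only the standard library, and `bilateral_weight` and `bilateral_1d` are hypothetical names for this illustration.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Weight of a neighbour: spatial Gaussian times range (intensity-difference) Gaussian.
double bilateral_weight(double distance, double diff, double sigma_space, double sigma_color) {
    assert(sigma_space > 0.0 && sigma_color > 0.0);
    return std::exp(-(distance * distance) / (2.0 * sigma_space * sigma_space))
         * std::exp(-(diff * diff) / (2.0 * sigma_color * sigma_color));
}

// 1-D bilateral filter over a signal, using neighbours within `radius` samples.
std::vector<double> bilateral_1d(const std::vector<double>& s, int radius,
                                 double sigma_space, double sigma_color) {
    const int n = static_cast<int>(s.size());
    std::vector<double> out(s.size());
    for (int i = 0; i < n; ++i) {
        double sum = 0.0, wsum = 0.0;
        for (int j = i - radius; j <= i + radius; ++j) {
            if (j < 0 || j >= n) continue;  // skip samples outside the signal
            double w = bilateral_weight(j - i, s[j] - s[i], sigma_space, sigma_color);
            sum += w * s[j];
            wsum += w;
        }
        out[i] = sum / wsum;  // wsum >= 1 because the centre sample has weight 1
    }
    return out;
}
```

On a step signal such as 10, 10, 10, 200, 200, 200 with a color sigma of 10, samples across the step receive weights close to 0, so the step stays sharp instead of being blurred as it would be by a plain Gaussian.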

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

void add_salt_and_pepper_noise(Mat &image);

void add_gaussian_noise(Mat &image);

int main(int argc, char** argv) {

//Mat src = imread("D:/images/ml.png");

Mat src = imread("D:/images/lena.jpg");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

add_gaussian_noise(src);

/*

// median filter

Mat dst;

medianBlur(src, dst, 3);

imshow("median denoise demo", dst);

// Gaussian filter

GaussianBlur(src, dst, Size(5, 5), 0);

imshow("gaussian denoise demo", dst);

*/

// bilateral filter

Mat dst;

bilateralFilter(src, dst, 0, 50, 10);

imshow("bilateral denoise demo", dst);

// non-local means filter

fastNlMeansDenoisingColored(src, dst, 7., 7., 15, 45);

imshow("NLM denoise", dst);

waitKey(0);

destroyAllWindows();

return 0;

}

void add_salt_and_pepper_noise(Mat &image) {

// salt and pepper noise: randomly placed black and white dots

RNG rng(12345);

int h = image.rows;

int w = image.cols;

int nums = 10000;

for (int i = 0; i < nums; i++) {

int x = rng.uniform(0, w);

int y = rng.uniform(0, h);

if (i % 2 == 1) {

image.at<Vec3b>(y, x) = Vec3b(255, 255, 255);

}

else {

image.at<Vec3b>(y, x) = Vec3b(0, 0, 0);

}

}

imshow("salt and pepper noise", image);

}

void add_gaussian_noise(Mat &image) {

// Gaussian noise: normally distributed dots of varying color

Mat noise = Mat::zeros(image.size(), image.type());

randn(noise, Scalar(25, 25, 25), Scalar(30, 30, 30));

Mat dst;

add(image, noise, dst);

imshow("gaussian noise", dst);

dst.copyTo(image);

}

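Result:

Median, Gaussian and bilateral filtering applied to Gaussian noise:

Bilateral filtering applied to the original image:

Bilateral filtering (after parameter tuning) and non-local means filtering applied to the original image:

Bilateral filtering and non-local means filtering applied to Gaussian noise: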

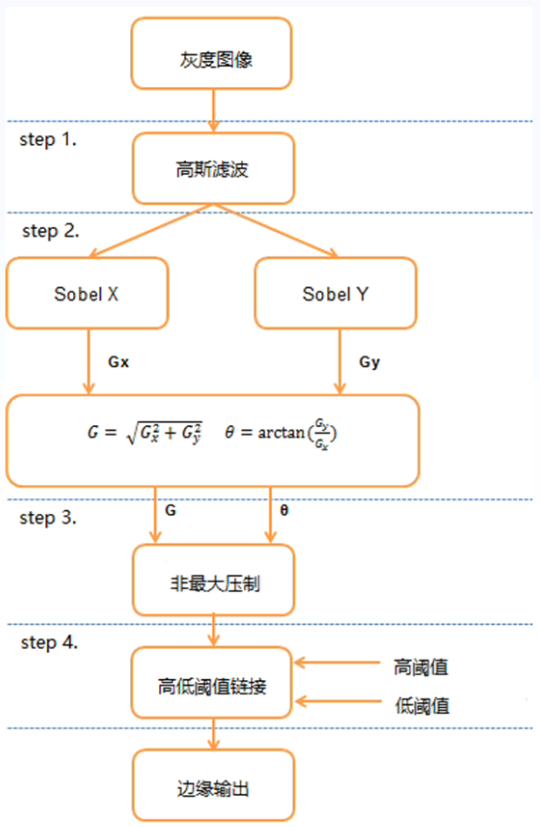

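26. Edge Extraction

- Edge normal: the unit vector in the direction along which the pixel intensity changes the most.

- Edge direction: the direction perpendicular to the edge normal.

- Edge position or center: where the edge lies in the image.

- Edge strength: related to the local image contrast along the normal; the higher the contrast, the more likely the pixel is an edge.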

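Canny edge extraction:

- Blur to suppress noise (Gaussian blur)

- Compute the gradient magnitude and direction

- Non-maximum suppression (keep a pixel only if its gradient magnitude is larger than that of both neighbors along the gradient direction)

- Hysteresis linking with a high threshold T1 and a low threshold T2, where T1/T2 = 2~3:

  - Pixels above the high threshold T1 are all kept

  - Pixels below the low threshold T2 are all discarded

  - Pixels between T2 and T1 are kept if they can be linked (8-neighborhood) to a pixel above the high threshold; otherwise they are discarded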
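The high/low threshold linking step can be sketched as a flood fill that starts from the strong pixels. The code below works on a plain grid of gradient magnitudes and uses only the standard library; `hysteresis` is a hypothetical helper and covers only this final step, not the whole Canny pipeline.

```cpp
#include <cassert>
#include <vector>

// Hysteresis thresholding on a width x height grid of gradient magnitudes.
// Returns 1 for pixels kept as edges and 0 for discarded pixels.
std::vector<int> hysteresis(const std::vector<float>& mag, int width, int height,
                            float low, float high) {
    assert(low <= high);
    assert(static_cast<int>(mag.size()) == width * height);
    std::vector<int> edge(mag.size(), 0);
    std::vector<int> stack;

    // Seeds: every pixel above the high threshold is kept.
    for (int i = 0; i < width * height; ++i) {
        if (mag[i] > high) { edge[i] = 1; stack.push_back(i); }
    }

    // Grow from the seeds through 8-connected pixels that exceed the low threshold.
    while (!stack.empty()) {
        const int idx = stack.back();
        stack.pop_back();
        const int x = idx % width, y = idx / width;
        for (int dy = -1; dy <= 1; ++dy) {
            for (int dx = -1; dx <= 1; ++dx) {
                const int nx = x + dx, ny = y + dy;
                if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
                const int n = ny * width + nx;
                if (!edge[n] && mag[n] > low) { edge[n] = 1; stack.push_back(n); }
            }
        }
    }
    return edge;
}
```

A weak pixel therefore survives only when a chain of above-low pixels connects it to at least one strong pixel; isolated weak responses, which are typically noise, are dropped.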

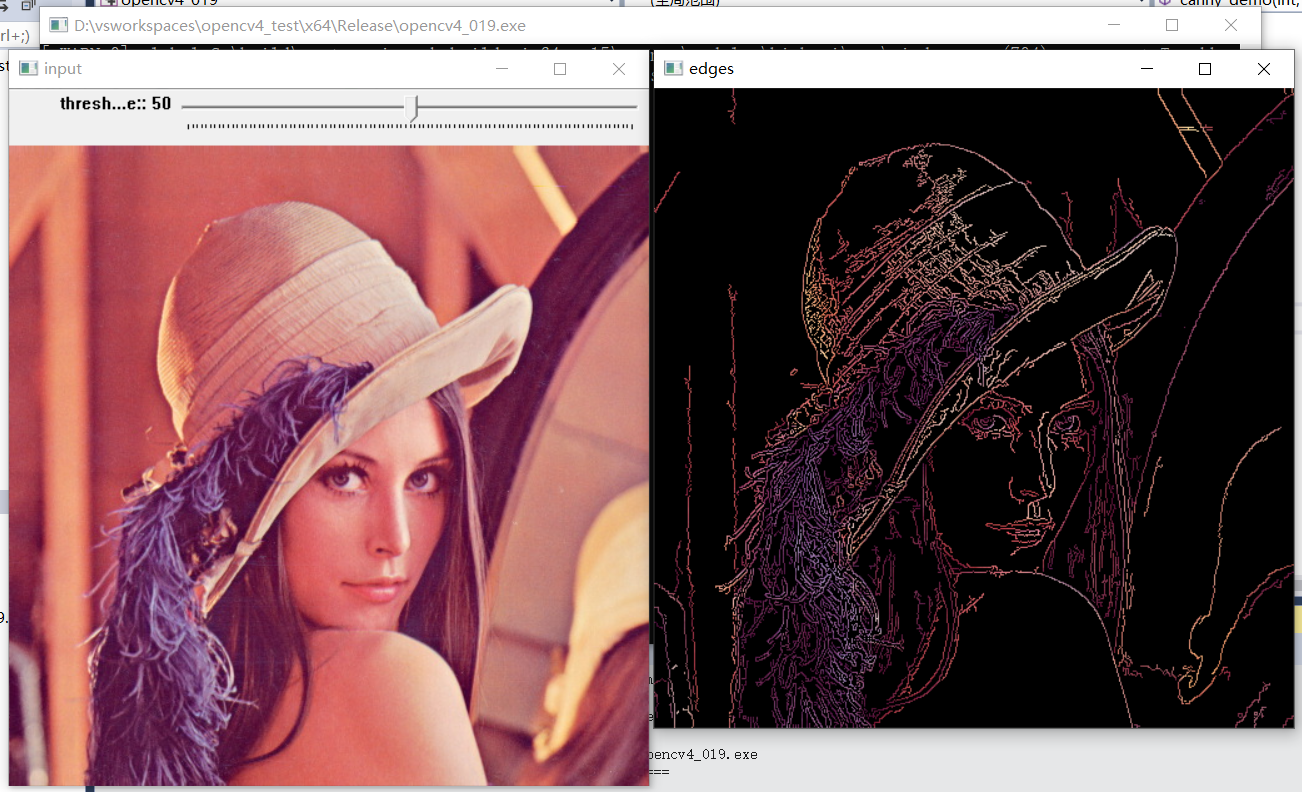

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int t1 = 50;

Mat src;

void canny_demo(int, void*) {

Mat edges, dst;

Canny(src, edges, t1, t1 * 3, 3, false);

bitwise_and(src, src, dst, edges); //use the edge map as a mask to get colored edges

imshow("edges", dst);

}

int main(int argc, char** argv) {

src = imread("D:/images/lena.jpg");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

//Canny edge extraction

createTrackbar("threshold value:", "input", &t1, 100, canny_demo);

canny_demo(0, 0); //draw once up front; otherwise nothing may be shown until the trackbar is moved

waitKey(0);

destroyAllWindows();

return 0;

}

Results:

Canny edge extraction:

Canny colored edge extraction:

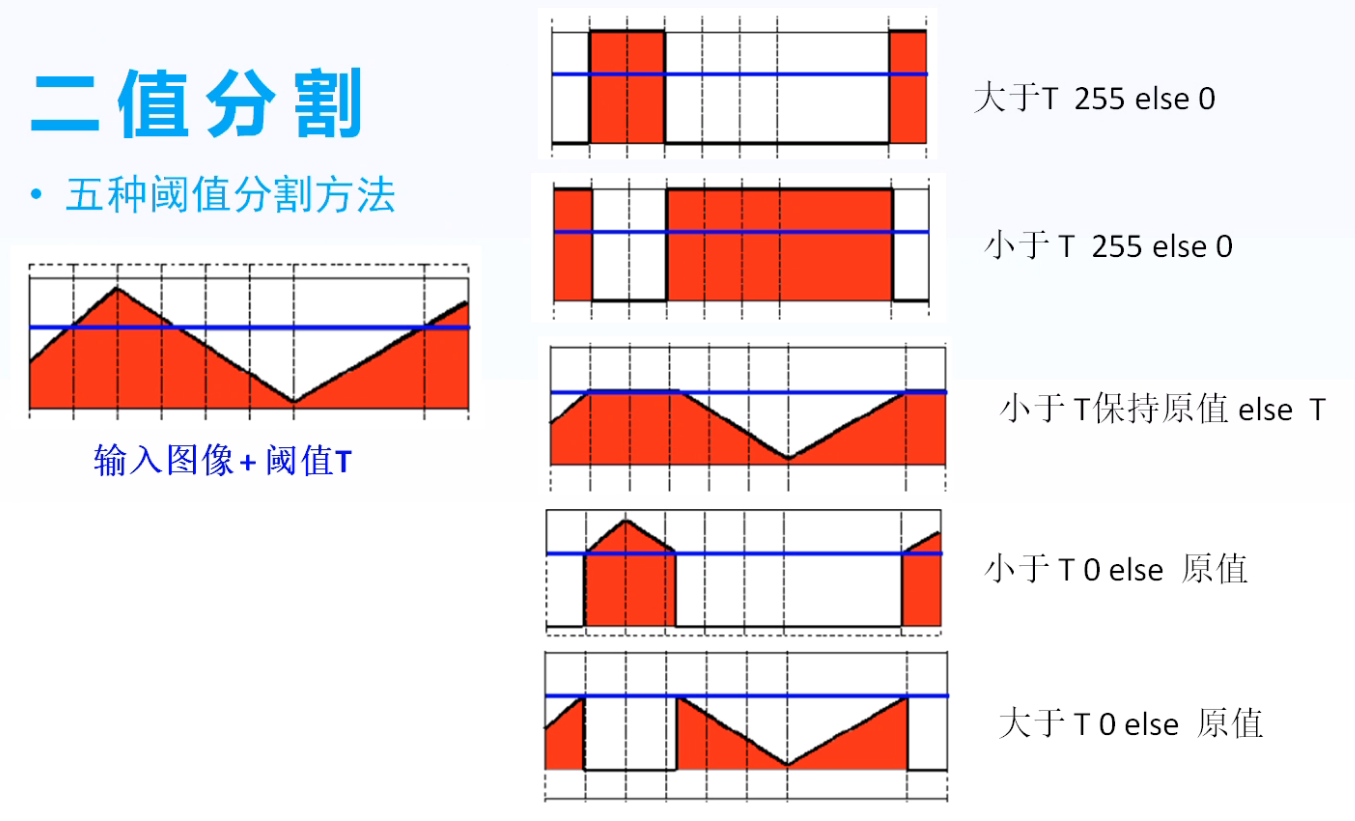

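27. Binary Image Concepts

Grayscale and binary images:

- Grayscale image: single channel, values in 0~255

- Binary image: single channel, values 0 (black) and 255 (white) only

Binary segmentation:

- Five threshold types (THRESH_BINARY, THRESH_BINARY_INV, THRESH_TRUNC, THRESH_TOZERO, THRESH_TOZERO_INV)

Binarization versus thresholding:

- Binarization sets pixels above the threshold to 255 and all other pixels to 0. Thresholding changes only one side of the threshold (truncating it to the threshold value or setting it to 0) and leaves the remaining pixels unchanged.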
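The five rules can be written out for a single gray value. The sketch below is plain C++ for illustration; the enum `ThresholdType` and the function `apply_threshold` are hypothetical names chosen here, and the rules are meant to mirror the behavior documented for the five `THRESH_*` types used in the listing that follows.

```cpp
// Hypothetical names for the five rules (not the OpenCV constants).
enum ThresholdType { BINARY, BINARY_INV, TRUNC, TOZERO, TOZERO_INV };

// Apply one threshold rule to a single 8-bit gray value v.
int apply_threshold(int v, int thresh, int maxval, ThresholdType type) {
    switch (type) {
        case BINARY:     return v > thresh ? maxval : 0;  // binarization
        case BINARY_INV: return v > thresh ? 0 : maxval;  // inverted binarization
        case TRUNC:      return v > thresh ? thresh : v;  // clip bright pixels
        case TOZERO:     return v > thresh ? v : 0;       // zero out dark pixels
        case TOZERO_INV: return v > thresh ? 0 : v;       // zero out bright pixels
    }
    return v;
}
```

Only the first two rules produce a true binary image; the other three keep part of the original gray values.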

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

//Mat src = imread("D:/images/lena.jpg");

Mat src = imread("D:/images/ml.png");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

imshow("gray", gray);

threshold(gray, binary, 127, 255, THRESH_BINARY);

imshow("threshold binary", binary);

threshold(gray, binary, 127, 255, THRESH_BINARY_INV);

imshow("threshold binary invert", binary);

threshold(gray, binary, 127, 255, THRESH_TRUNC);

imshow("threshold TRUNC", binary);

threshold(gray, binary, 127, 255, THRESH_TOZERO);

imshow("threshold to zero", binary);

threshold(gray, binary, 127, 255, THRESH_TOZERO_INV);

imshow("threshold to zero invert", binary);

waitKey(0);

destroyAllWindows();

return 0;

}

Results:

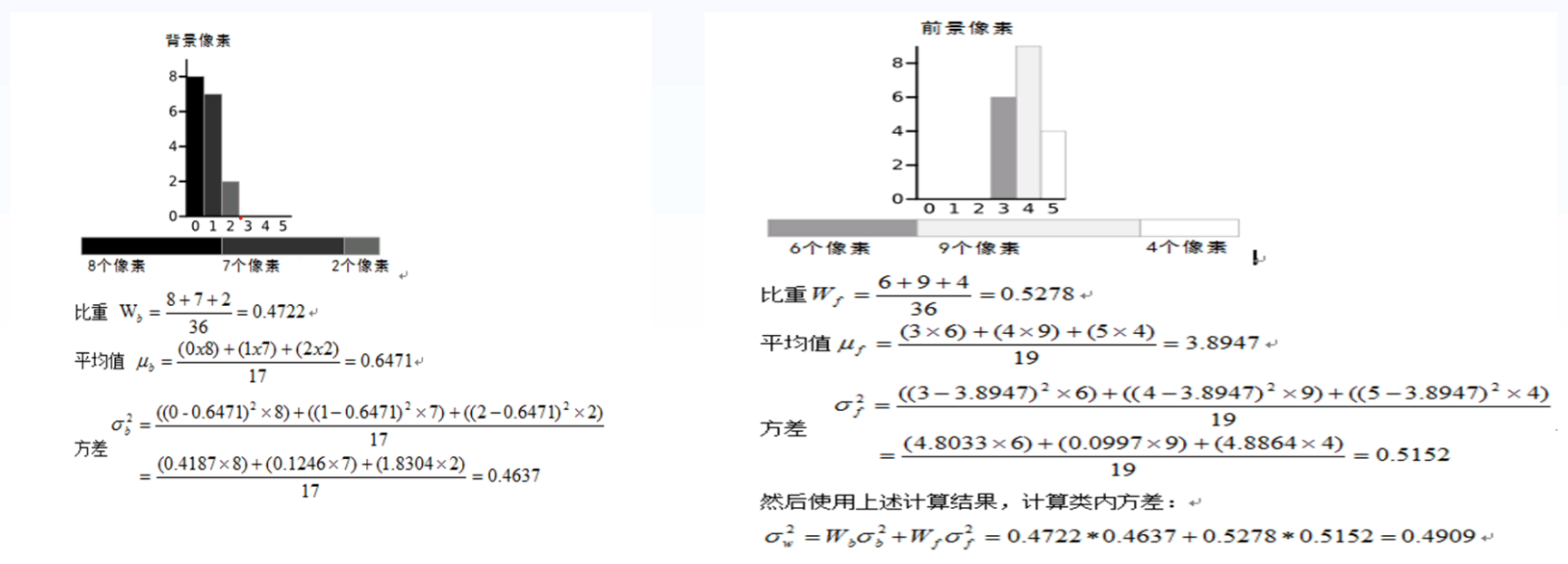

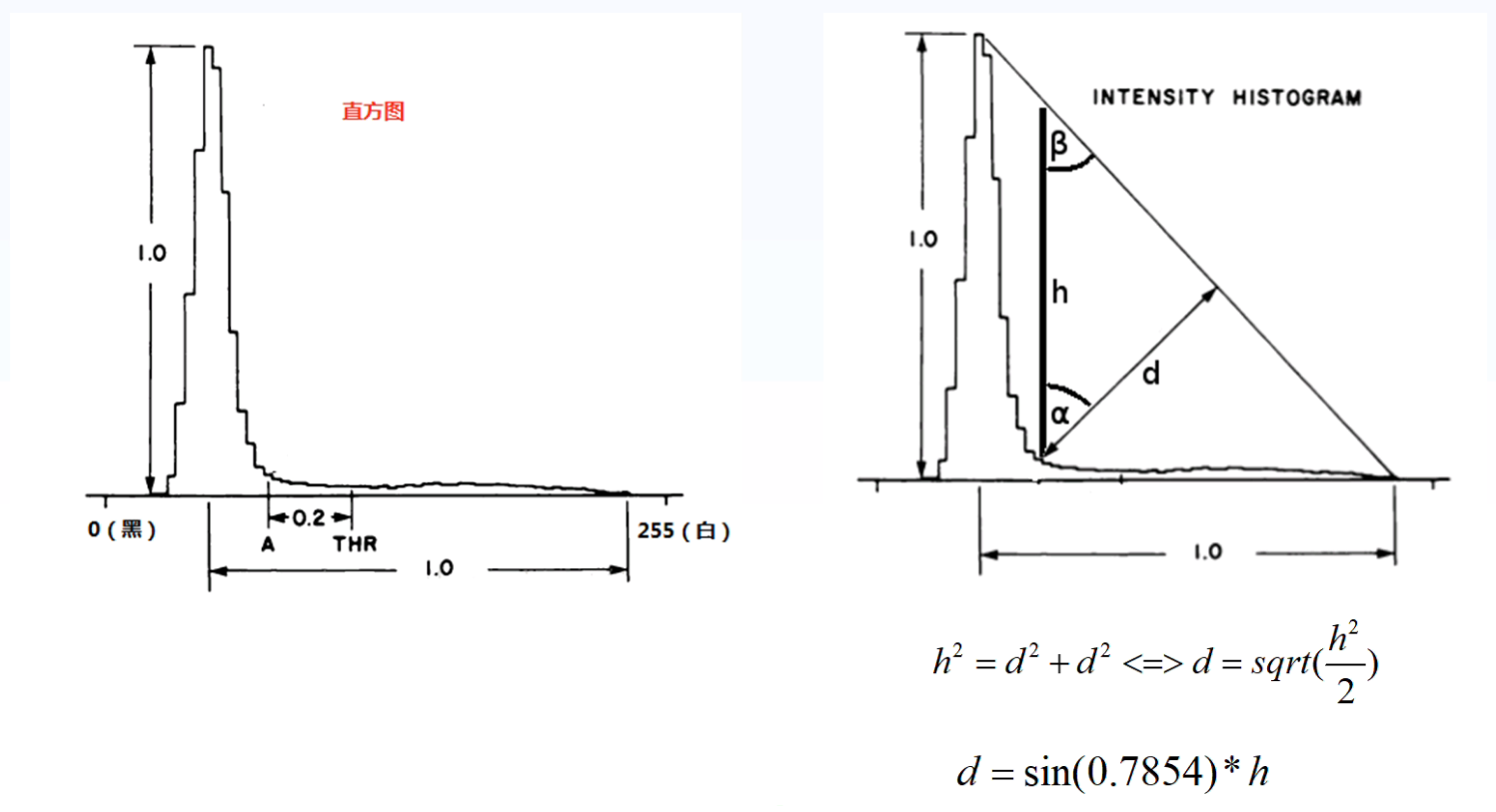

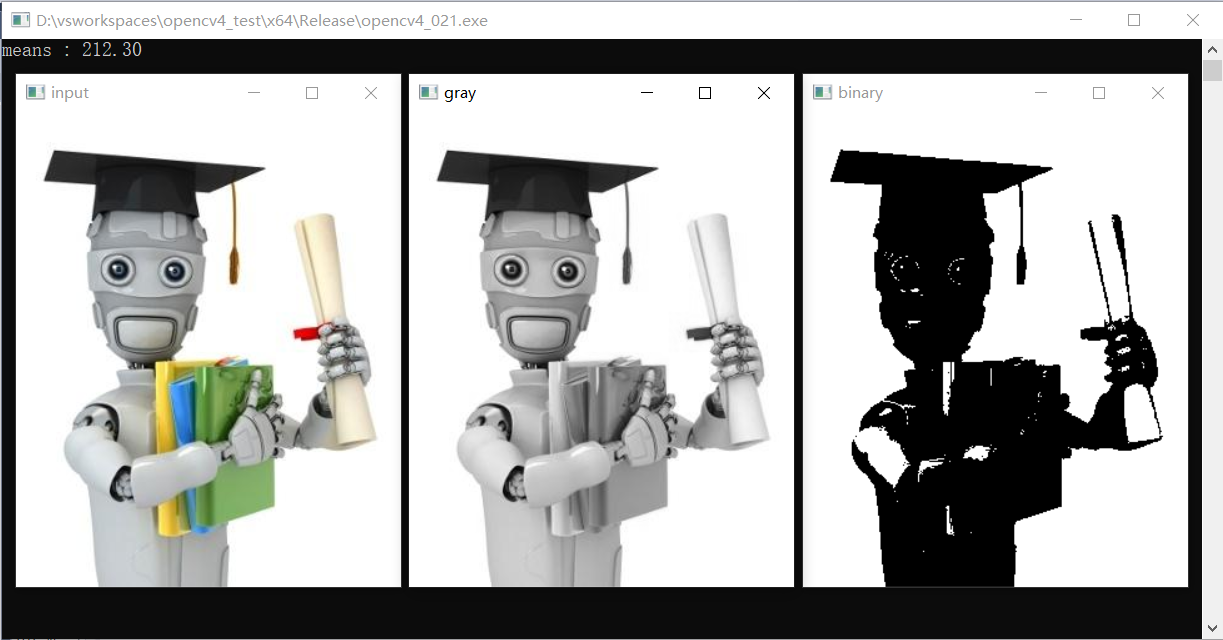

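28. Global Threshold Segmentation

- The key step in binarizing an image is computing the threshold

- There are many threshold algorithms; they fall into two groups, global and adaptive

- OTSU picks the threshold that minimizes the summed within-class variance; it works well when the histogram has more than one peak (two clear peaks is the ideal case)

- Triangle method: works well on single-peak histograms, such as many medical images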
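To make the OTSU criterion concrete, here is a minimal plain C++ sketch that searches all 256 candidate thresholds. It maximizes the between-class variance, which is equivalent to minimizing the within-class variance. The function name `otsu_threshold` and the flat pixel vector are assumptions of this sketch; it does not claim to reproduce the exact value `threshold(..., THRESH_OTSU)` returns.

```cpp
#include <cstdint>
#include <vector>

// Otsu threshold: pixels <= t form class 0, the rest class 1.
// Picks the t that maximizes w0 * w1 * (mu0 - mu1)^2.
int otsu_threshold(const std::vector<std::uint8_t>& pixels) {
    double hist[256] = {0.0};
    for (std::uint8_t p : pixels) hist[p] += 1.0;
    const double total = static_cast<double>(pixels.size());
    double sum_all = 0.0;
    for (int i = 0; i < 256; ++i) sum_all += i * hist[i];

    double w0 = 0.0, sum0 = 0.0, best_var = -1.0;
    int best_t = 0;
    for (int t = 0; t < 256; ++t) {
        w0 += hist[t];                      // pixel count of class 0
        sum0 += t * hist[t];                // gray-value sum of class 0
        const double w1 = total - w0;       // pixel count of class 1
        if (w0 == 0.0 || w1 == 0.0) continue;
        const double mu0 = sum0 / w0;
        const double mu1 = (sum_all - sum0) / w1;
        const double between = w0 * w1 * (mu0 - mu1) * (mu0 - mu1);
        if (between > best_var) { best_var = between; best_t = t; }
    }
    return best_t;
}
```

For a clearly bimodal set of gray values the returned threshold falls between the two groups.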

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/ml.png");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

imshow("gray", gray);

Scalar m = mean(gray);

printf("means : %.2f\n", m[0]);

threshold(gray, binary, m[0], 255, THRESH_BINARY);

imshow("binary", binary);

double t1 = threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("otsu binary", binary);

double t2 = threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_TRIANGLE);

imshow("triangle binary", binary);

printf("otsu threshold:%.2f,triangle threshold:%.2f\n", t1, t2);

waitKey(0);

destroyAllWindows();

return 0;

}

Results:

1. Segmentation with the mean pixel value

2. Segmentation with OTSU and Triangle

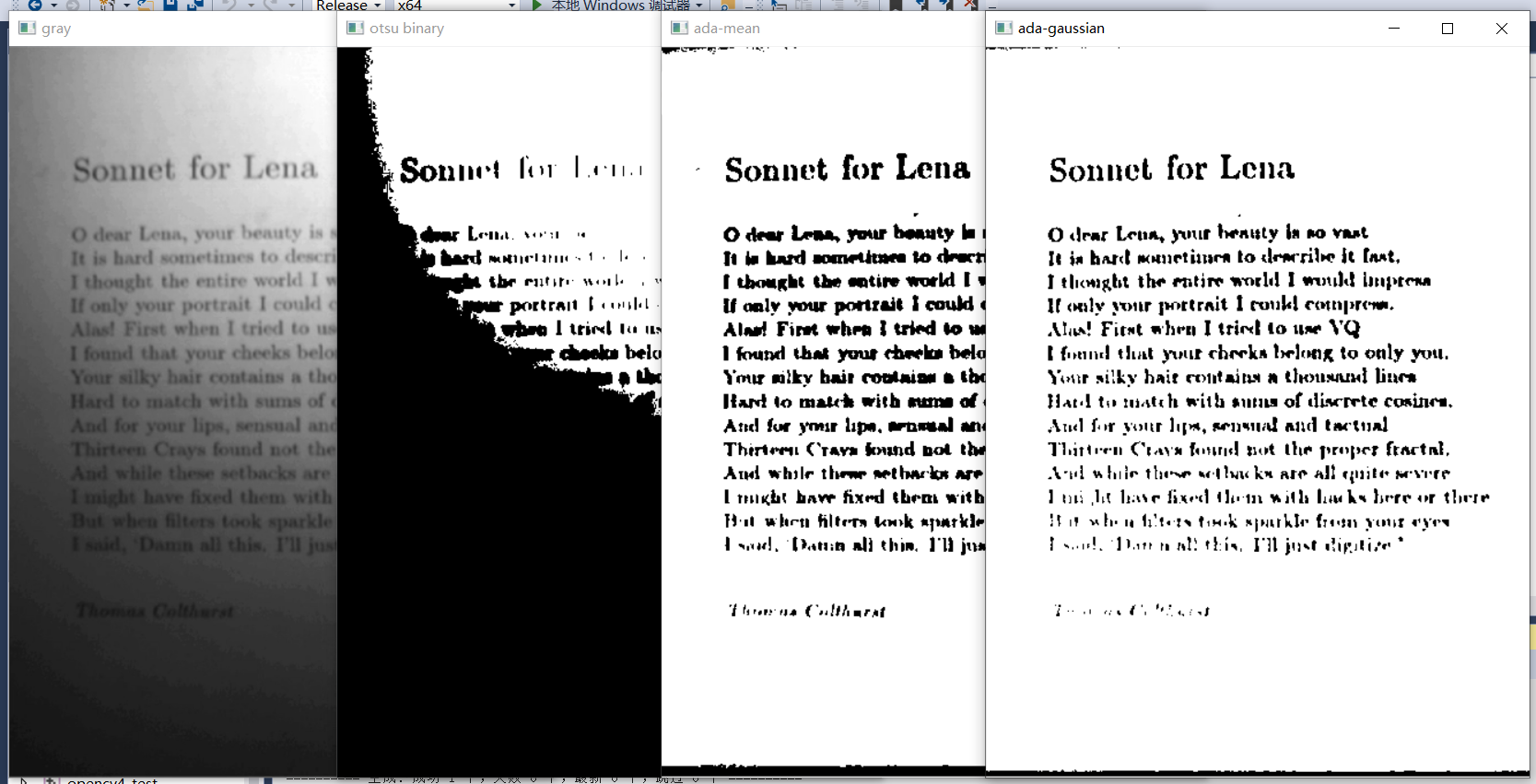

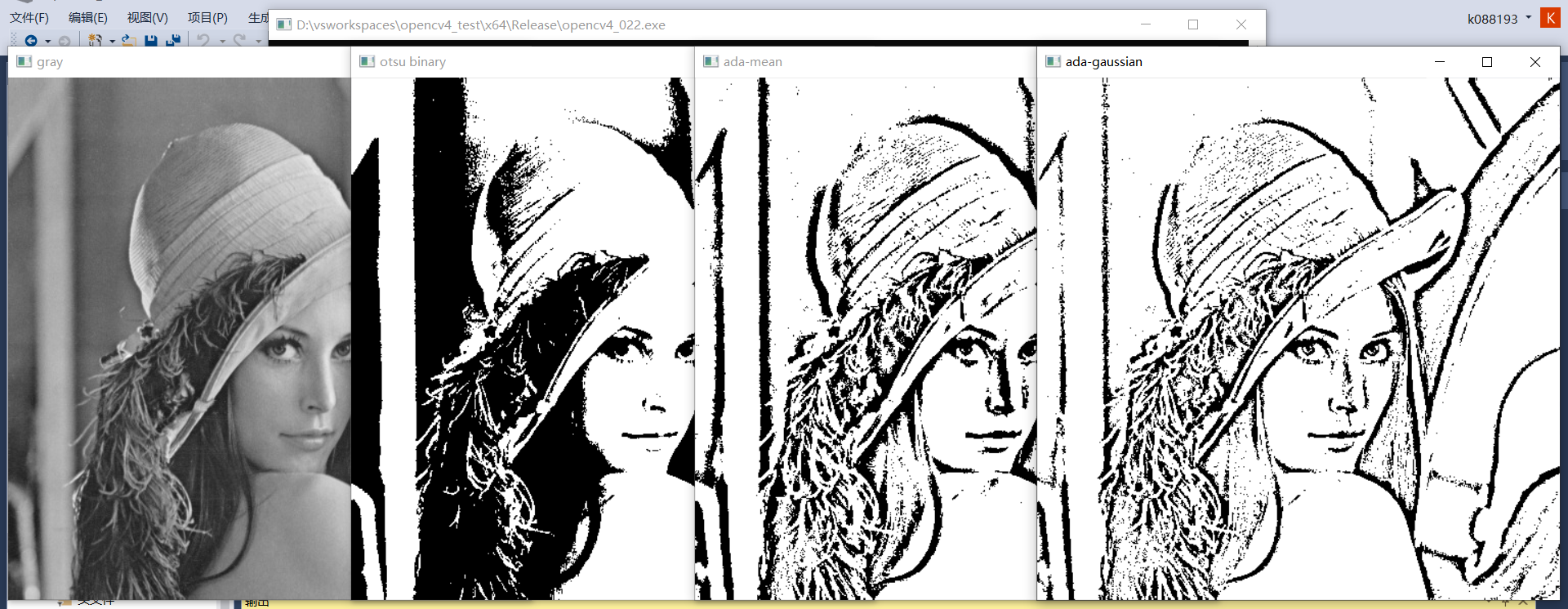

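29. Adaptive Threshold Segmentation

A global threshold has a limitation: on unevenly lit images it easily produces a wrong binary segmentation.

Adaptive thresholding blurs the image, takes the difference with the original, then binarizes.

Per-pixel rule: (source + C) - D > 0 ? 255 : 0

- Blur the image with a box (mean) or Gaussian kernel → D

- Add the offset constant C to the source → S

- Test S - D against 0 (an implementation may add 255 to the difference, T = S - D + 255, so that it can serve as a non-negative lookup-table index)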
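The per-pixel rule above can be sketched with a mean blur in plain C++. This is a simplified illustration: the function name `adaptive_mean_threshold`, the brute-force window sum, and the coordinate clamping at the border are assumptions of this sketch.

```cpp
#include <algorithm>
#include <cstdint>
#include <vector>

// Mean-based adaptive threshold on a row-major 8-bit image.
// block is the (odd) window size and C the offset constant.
std::vector<std::uint8_t> adaptive_mean_threshold(
        const std::vector<std::uint8_t>& src, int w, int h, int block, double C) {
    std::vector<std::uint8_t> dst(src.size(), 0);
    const int r = block / 2;
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            double sum = 0.0;
            for (int dy = -r; dy <= r; ++dy)
                for (int dx = -r; dx <= r; ++dx) {
                    // Clamp coordinates so border pixels are replicated.
                    const int yy = std::min(std::max(y + dy, 0), h - 1);
                    const int xx = std::min(std::max(x + dx, 0), w - 1);
                    sum += src[yy * w + xx];
                }
            const double D = sum / (block * block);  // local mean (blurred value)
            const double S = src[y * w + x] + C;     // source plus offset
            dst[y * w + x] = (S - D > 0) ? 255 : 0;
        }
    }
    return dst;
}
```

Because each pixel is compared with its own neighborhood mean, a slow change in illumination shifts D and the source value together and largely cancels out.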

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

//Mat src = imread("D:/images/sonnet for lena.png");

Mat src = imread("D:/images/lena.jpg");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

imshow("gray", gray);

double t1 = threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("otsu binary", binary);

adaptiveThreshold(gray, binary, 255, ADAPTIVE_THRESH_GAUSSIAN_C, THRESH_BINARY, 25, 10);

imshow("ada-gaussian", binary);

adaptiveThreshold(gray, binary, 255, ADAPTIVE_THRESH_MEAN_C, THRESH_BINARY, 25, 10);

imshow("ada-mean", binary);

waitKey(0);

destroyAllWindows();

return 0;

}

Results:

1. Text:

2. Other images:

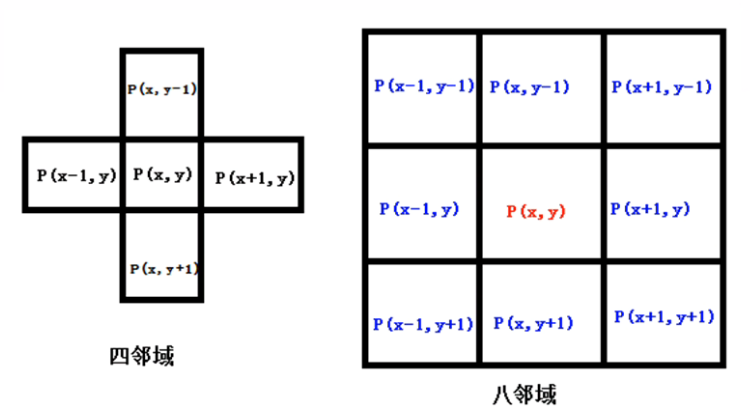

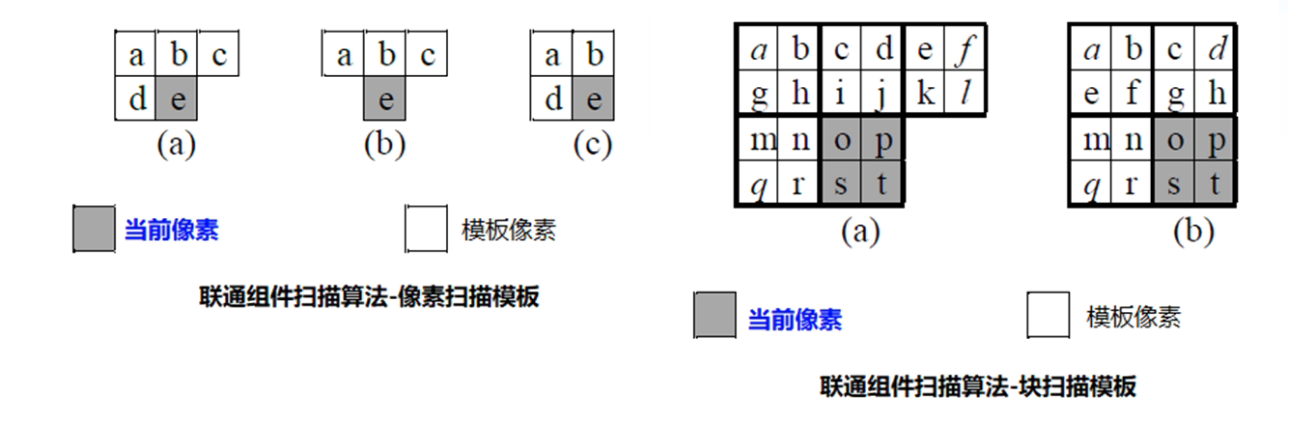

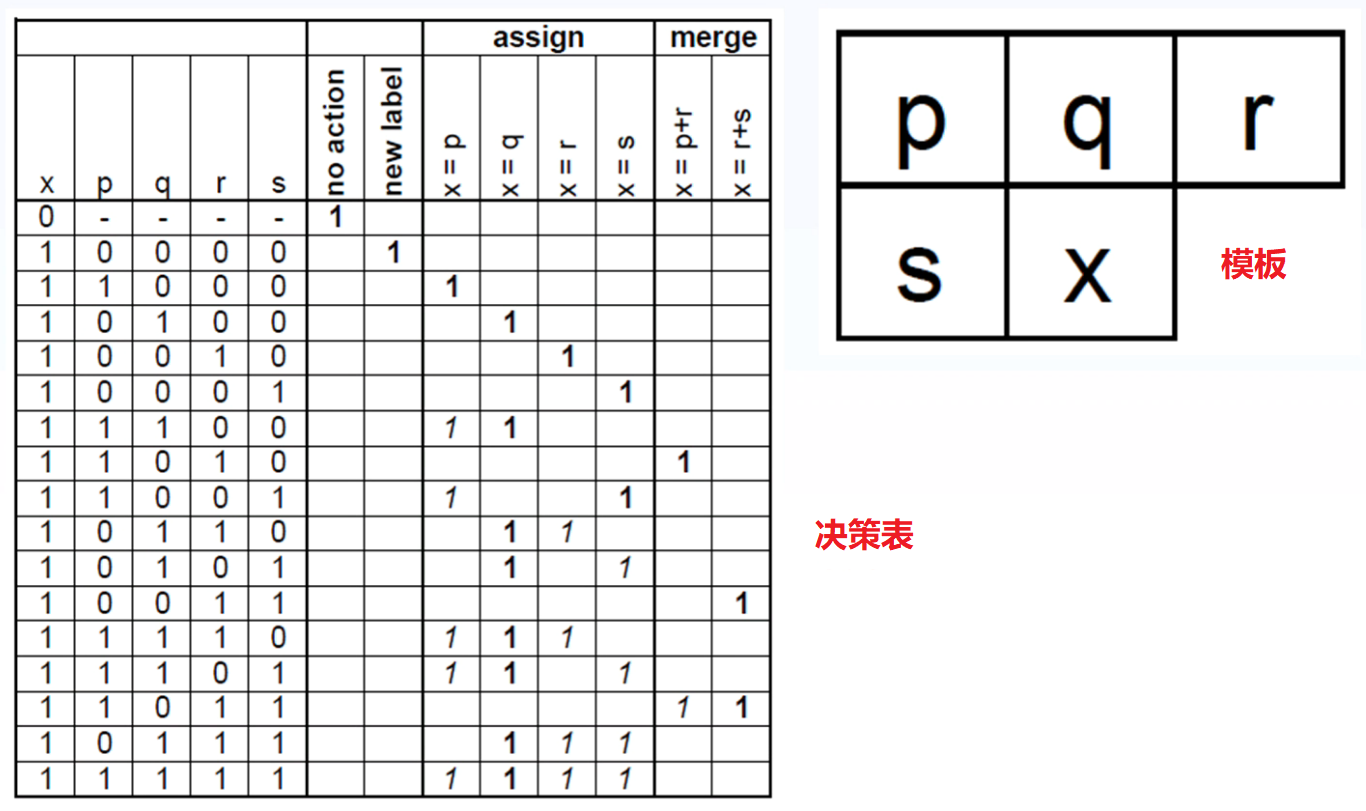

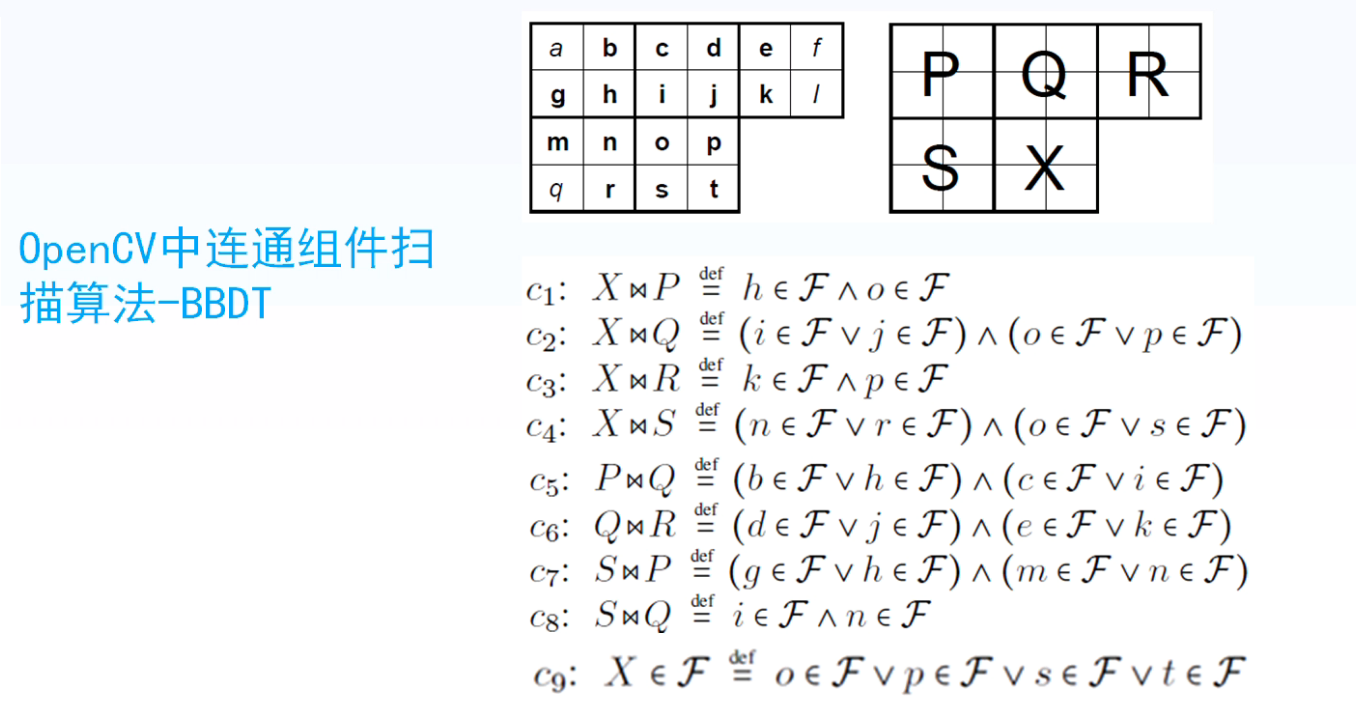

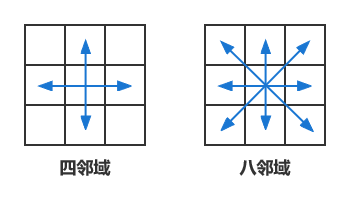

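30. Connected Component Scanning

4-neighborhood and 8-neighborhood connectivity:

Common algorithm: BBDT (Block-Based Decision Table)

Pixel-based scanning

Block-based scanning

Two-pass scanning:

- First pass: assign provisional labels and record which labels are equivalent

- Second pass: replace each provisional label with the representative of its equivalence class

Scan mask:

Decision table:

Connected component scanning in OpenCV (BBDT):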

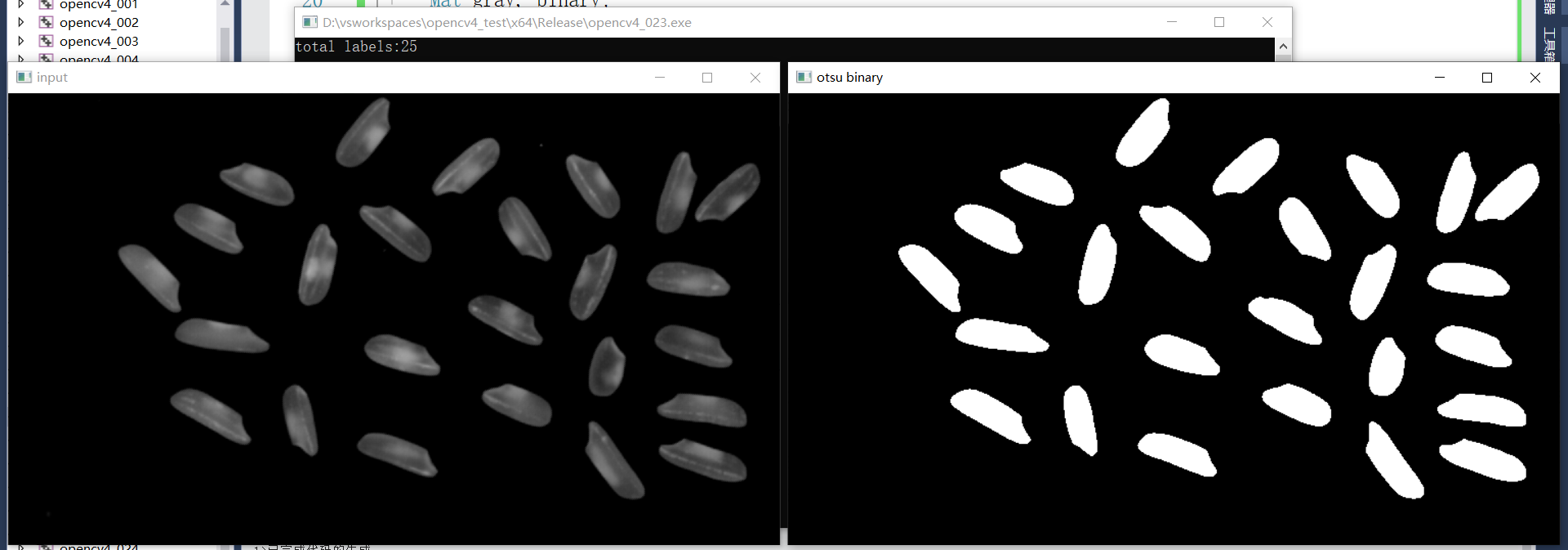

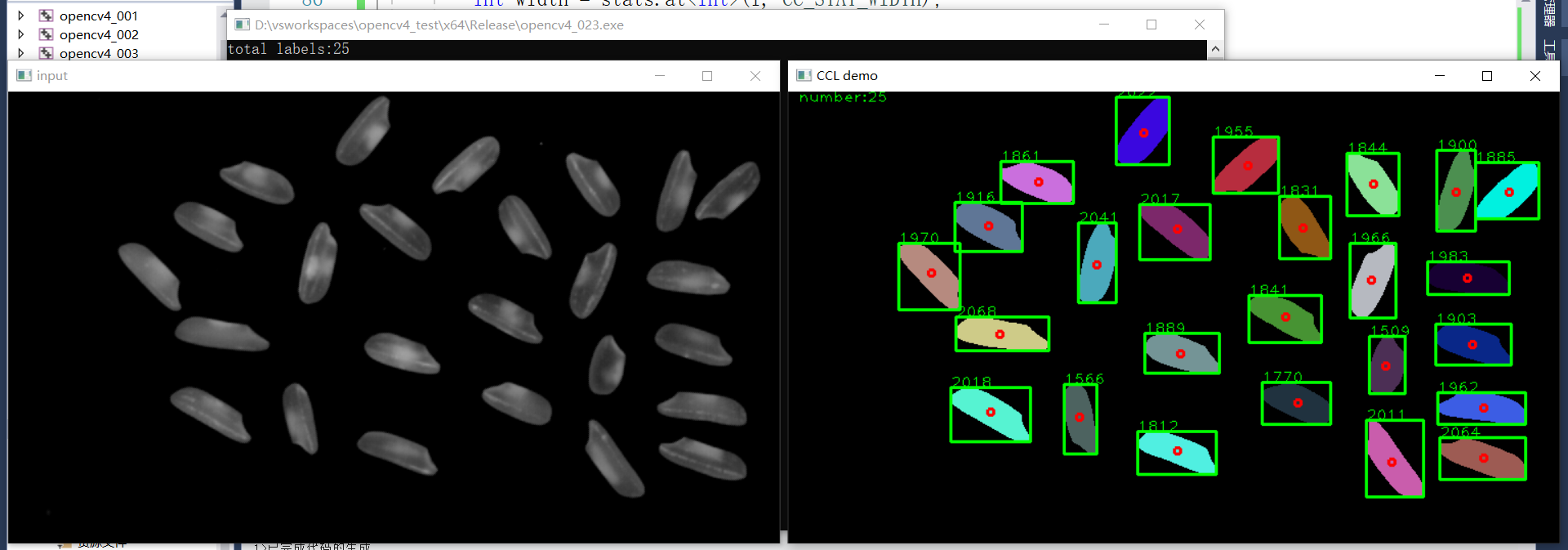
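The two-pass idea can be sketched in plain C++ with a small union-find table. This is a pixel-based illustration with 4-connectivity, not the block-based BBDT algorithm; the names `find_root` and `label_components` are hypothetical.

```cpp
#include <cstdint>
#include <vector>

// Union-find lookup with path halving over the label-equivalence table.
static int find_root(std::vector<int>& parent, int x) {
    while (parent[x] != x) {
        parent[x] = parent[parent[x]];
        x = parent[x];
    }
    return x;
}

// Two-pass labeling, 4-connectivity. Non-zero pixels are foreground.
// Fills labels (0 = background, 1..n = components) and returns n.
int label_components(const std::vector<std::uint8_t>& bin, int w, int h,
                     std::vector<int>& labels) {
    labels.assign(bin.size(), 0);
    std::vector<int> parent(1, 0);  // entry 0 stands for the background
    // Pass 1: provisional labels, recording equivalences.
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x) {
            if (!bin[y * w + x]) continue;
            const int up = (y > 0) ? labels[(y - 1) * w + x] : 0;
            const int left = (x > 0) ? labels[y * w + x - 1] : 0;
            if (!up && !left) {
                const int fresh = static_cast<int>(parent.size());
                parent.push_back(fresh);
                labels[y * w + x] = fresh;
            } else if (up && left) {
                const int a = find_root(parent, up);
                const int b = find_root(parent, left);
                if (a < b) parent[b] = a; else parent[a] = b;
                labels[y * w + x] = (a < b) ? a : b;
            } else {
                labels[y * w + x] = up ? up : left;
            }
        }
    // Pass 2: map every label to a compact id of its root.
    std::vector<int> compact(parent.size(), 0);
    int count = 0;
    for (int& l : labels) {
        if (!l) continue;
        const int r = find_root(parent, l);
        if (!compact[r]) compact[r] = ++count;
        l = compact[r];
    }
    return count;
}
```

A U-shaped region shows why the second pass is needed: its two arms receive different provisional labels and are merged only when the scan reaches the bottom row.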

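31. Connected Component Scanning with Statistics

API notes:

- connectedComponentsWithStats

- Expects a black background (label 0 is the background)

| Output | Content |
|---|---|
| 1 | Number of connected components (return value, background included) |
| 2 | Label matrix |
| 3 | Statistics matrix |
| 4 | Centroid coordinates |

Statistics matrix, indexed as [label, column]:

| Label index | Column 0 | Column 1 | Column 2 | Column 3 | Column 4 |
|---|---|---|---|---|---|
| 1 | CC_STAT_LEFT | CC_STAT_TOP | CC_STAT_WIDTH | CC_STAT_HEIGHT | CC_STAT_AREA |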

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

RNG rng(12345);

void ccl_stats_demo(Mat &image);

int main(int argc, char** argv) {

//Mat src = imread("D:/images/lena.jpg");

Mat src = imread("D:/images/rice.png");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

GaussianBlur(src, src, Size(3, 3), 0); //denoising with a Gaussian blur before segmentation is recommended

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

//adaptiveThreshold(gray, binary, 255, ADAPTIVE_THRESH_GAUSSIAN_C, THRESH_BINARY, 81, 10);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("otsu binary", binary);

ccl_stats_demo(binary); //connected components with statistics

/* connected components without statistics

Mat labels = Mat::zeros(binary.size(), CV_32S);

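int num_labels = connectedComponents(binary, labels, 8, CV_32S, CCL_DEFAULT); //with 8-connectivity CCL_DEFAULT selected BBDT (CCL_GRANA) in the OpenCV version used here; newer releases may select a different algorithm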

vector<Vec3b> colorTable(num_labels);

//background color

colorTable[0] = Vec3b(0, 0, 0);

for (int i = 1; i < num_labels; i++) { //label 0 is the background, so start from 1

colorTable[i] = Vec3b(rng.uniform(0, 256), rng.uniform(0, 256), rng.uniform(0, 256));

}

Mat result = Mat::zeros(src.size(), src.type());

int w = result.cols;

int h = result.rows;

for (int row = 0; row < h; row++) {

for (int col = 0; col < w; col++) {

int label = labels.at<int>(row, col); //label of this pixel

result.at<Vec3b>(row, col) = colorTable[label]; //color the pixel according to its label

}

}

putText(result, format("number:%d", num_labels - 1), Point(50, 50), FONT_HERSHEY_PLAIN, 1.0, Scalar(0, 255, 0), 1);

printf("total labels:%d\n", (num_labels - 1)); //num_labels includes the background; num_labels - 1 is the number of foreground objects

imshow("CCL demo", result);

*/

waitKey(0);

destroyAllWindows();

return 0;

}

void ccl_stats_demo(Mat &image) {

Mat labels = Mat::zeros(image.size(), CV_32S);

Mat stats, centroids;

int num_labels = connectedComponentsWithStats(image, labels, stats, centroids, 8, CV_32S, CCL_DEFAULT);

vector<Vec3b> colorTable(num_labels);

//background color

colorTable[0] = Vec3b(0, 0, 0);

for (int i = 1; i < num_labels; i++) { //label 0 is the background, so start from 1

colorTable[i] = Vec3b(rng.uniform(0, 256), rng.uniform(0, 256), rng.uniform(0, 256));

}

Mat result = Mat::zeros(image.size(), CV_8UC3);

int w = result.cols;

int h = result.rows;

for (int row = 0; row < h; row++) {

for (int col = 0; col < w; col++) {

int label = labels.at<int>(row, col); //label of this pixel

result.at<Vec3b>(row, col) = colorTable[label]; //color the pixel according to its label

}

}

for (int i = 1; i < num_labels; i++) {

//center

int cx = centroids.at<double>(i, 0);

int cy = centroids.at<double>(i, 1);

//rectangle and area

int x = stats.at<int>(i, CC_STAT_LEFT);

int y = stats.at<int>(i, CC_STAT_TOP);

int width = stats.at<int>(i, CC_STAT_WIDTH);

int height = stats.at<int>(i, CC_STAT_HEIGHT);

int area = stats.at<int>(i, CC_STAT_AREA);

//draw the centroid

circle(result, Point(cx, cy), 3, Scalar(0, 0, 255), 2, 8, 0);

//bounding rectangle

Rect box(x, y, width, height);

rectangle(result, box, Scalar(0, 255, 0), 2, 8);

putText(result, format("%d", area), Point(x, y), FONT_HERSHEY_PLAIN, 1.0, Scalar(0, 255, 0), 1);

}

putText(result, format("number:%d", num_labels - 1), Point(10, 10), FONT_HERSHEY_PLAIN, 1.0, Scalar(0, 255, 0), 1);

printf("total labels:%d\n", (num_labels - 1)); //num_labels includes the background; num_labels - 1 is the number of foreground objects

imshow("CCL demo", result);

}

Results:

1. OTSU binary segmentation

2. Connected components with statistics

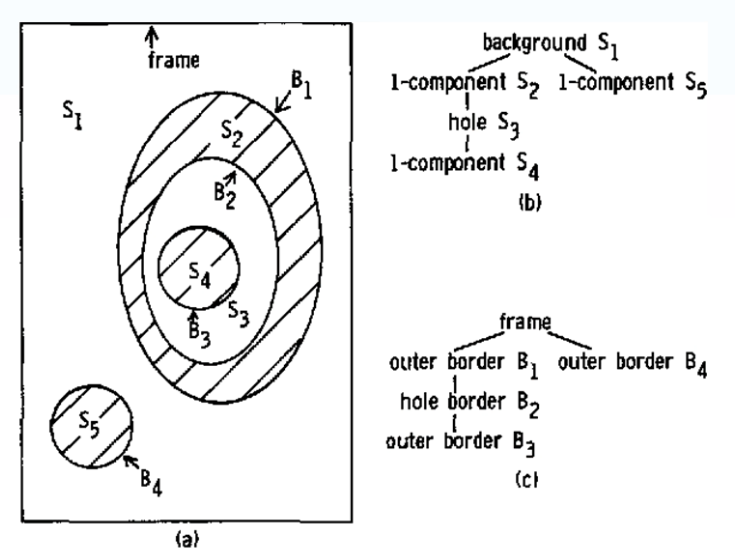

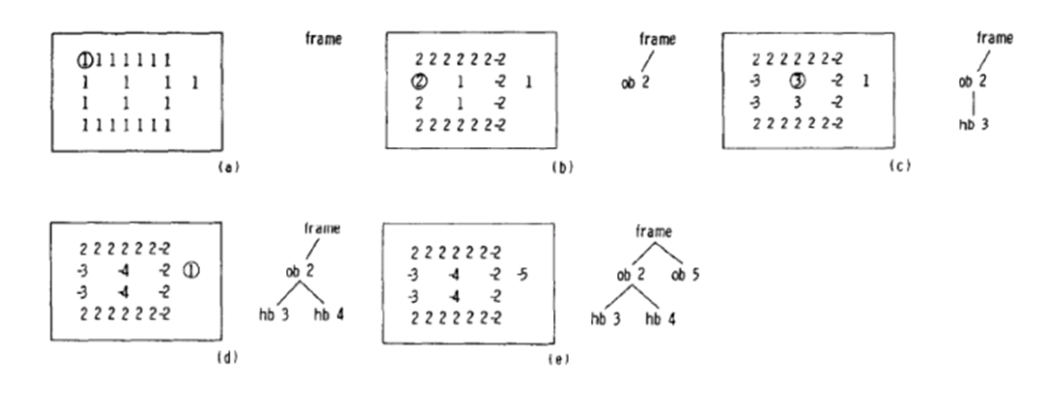

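32. Finding Image Contours

- Image contour: the boundary of a region in the image

- Mainly applies to binary images; a contour is an ordered set of points

Contour finding

- Built on connected component analysis

- Captures the topology of the image (the last pixel of a border is marked with a negative number, and pixels adjacent to it receive the same negative number)

API notes

- findContours

- drawContours

Hierarchy information: meaning of the four values of each Vec4i in the hierarchy vector (a negative value means there is no such contour):

| Next contour at the same level | Previous contour at the same level | First child contour | Parent contour |
|---|---|---|---|
| hierarchy[i][0] | hierarchy[i][1] | hierarchy[i][2] | hierarchy[i][3] |

findContours parameters, in call order:

| Parameter | Meaning |
|---|---|
| image | Binary input image |
| contours | Output contours; each contour is a sequence of points |
| hierarchy | Output topology information |
| mode | Retrieval mode (RETR_TREE, RETR_LIST, RETR_EXTERNAL) |
| method | Point encoding (approximation) method |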
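The four hierarchy indices are enough to walk the contour tree. The sketch below uses plain C++ with `std::array<int, 4>` standing in for `Vec4i`; the type alias `HierarchyEntry` and the helpers `contour_depth` and `child_count` are hypothetical names introduced for illustration.

```cpp
#include <array>
#include <vector>

// One hierarchy entry: {next, previous, first_child, parent}; -1 means "none".
typedef std::array<int, 4> HierarchyEntry;

// Nesting depth of contour i: 0 for an outermost contour, 1 for a hole in it, ...
int contour_depth(const std::vector<HierarchyEntry>& hierarchy, int i) {
    int depth = 0;
    for (int p = hierarchy[i][3]; p != -1; p = hierarchy[p][3]) ++depth;
    return depth;
}

// Number of direct children of contour i, found by following the sibling chain.
int child_count(const std::vector<HierarchyEntry>& hierarchy, int i) {
    int n = 0;
    for (int c = hierarchy[i][2]; c != -1; c = hierarchy[c][0]) ++n;
    return n;
}
```

With RETR_EXTERNAL every contour has depth 0, so these helpers are only informative with a mode that keeps the nesting, such as RETR_TREE.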

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

RNG rng(12345);

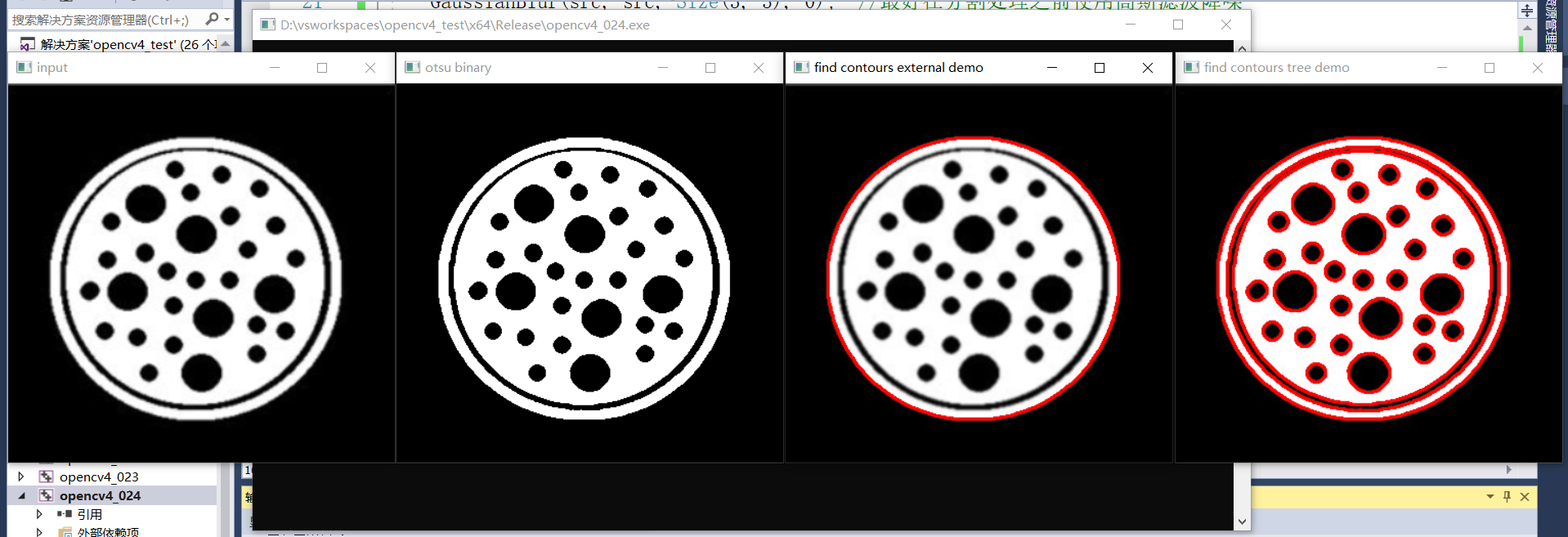

int main(int argc, char** argv) {

//Mat src = imread("D:/images/lena.jpg");

Mat src = imread("D:/images/morph3.png");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

//binarize

GaussianBlur(src, src, Size(3, 3), 0); //denoising with a Gaussian blur before segmentation is recommended

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("otsu binary", binary);

//find contours

vector<vector<Point>> contours;

vector<Vec4i> hirearchy;

//RETR_EXTERNAL retrieves only the outermost contours (contours nested inside them are skipped); RETR_TREE retrieves all contours

findContours(binary, contours, hirearchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point());

drawContours(src, contours, -1, Scalar(0, 0, 255), 2, 8);

imshow("find contours external demo", src);

findContours(binary, contours, hirearchy, RETR_TREE, CHAIN_APPROX_SIMPLE, Point()); //CHAIN_APPROX_SIMPLE keeps only the end points of straight segments, so a rectangle is stored as four points

drawContours(src, contours, -1, Scalar(0, 0, 255), 2, 8);

imshow("find contours tree demo", src);

waitKey(0);

destroyAllWindows();

return 0;

}

Results:

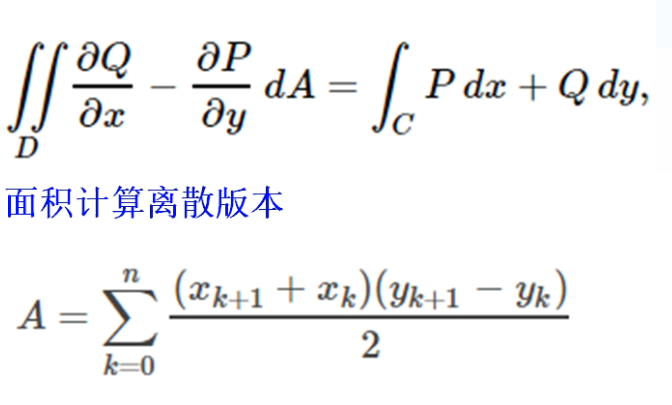

33、图像轮廓计算

轮廓面积与周长:

基于格林公式求面积

- 格林公式:

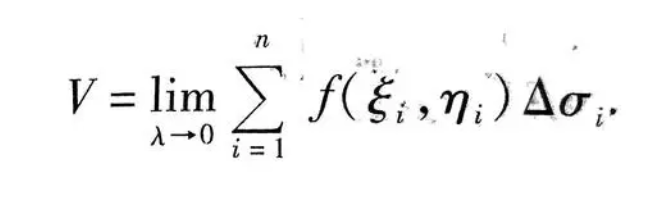

二重积分概念理解:

(1)曲顶柱体的体积

什么是曲顶柱体?设一立体,它的底是一闭合区域D,侧面是以D的边界曲线为准线而母线平行于底的柱面,顶是一个曲面。因为柱体的顶是一个曲面,所以叫曲顶柱体。

想求出这种柱体的体积该怎么办呢?一般柱体的体积=底×高,曲顶柱体显然不适合这种方法,因为顶是曲面。来回想一下不定积分的思想:把曲线分割成非常多份,每一份就相当于一个矩形,所有矩形的面积和就是曲线覆盖的面积。这种思想在这里仍然适用。如果把这个曲顶柱体分割成无穷多份,每一份就可以近似看成一个平顶柱体,这些平顶柱体体积的和就是曲顶柱体的体积。

再详细点讲,当分割的柱体直径最大值趋近0时,取上述和的极限,这个极限就是就是曲顶柱体的体积。

曲顶柱体的体积用公式表达就是这样子的。公式中f(x,y)是平顶柱体的高,Δ&就是平顶柱体的底。

(2) 平面薄片的质量

一个密度不均匀的薄片,如何计算其质量呢?可以拿称测量,总之有很多方法。这里用一个不是特别常规的方法。像上一个例子一样,把薄片分成若干份,当分的份数足够多时,每一份小薄片可以看成密度均匀的薄片,密度均匀的薄片求质量是很好算的,所有小薄片的质量的和就是整个薄片的质量。

整个薄片质量用公式来表达就是这样。公式里的f(x,y)代表在点(x,y)处薄片的面密度,Δ&代表小薄片的面积。

基于L2距离来求周长
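For a polygonal contour, Green's formula reduces to the shoelace sum $A = \frac{1}{2}\left|\sum_i (x_i y_{i+1} - x_{i+1} y_i)\right|$, and the perimeter is the sum of segment lengths. The following plain C++ sketch illustrates both; the type `Pt` and the function names are hypothetical, and the code is not claimed to match the internals of `contourArea` or `arcLength`.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { double x, y; };

// Area of a closed polygon via the discrete Green's formula (shoelace sum).
double polygon_area(const std::vector<Pt>& c) {
    double twice = 0.0;
    for (std::size_t i = 0; i < c.size(); ++i) {
        const Pt& p = c[i];
        const Pt& q = c[(i + 1) % c.size()];
        twice += p.x * q.y - q.x * p.y;
    }
    return std::fabs(twice) / 2.0;
}

// Perimeter of the closed polygon as a sum of L2 (Euclidean) distances.
double polygon_perimeter(const std::vector<Pt>& c) {
    double len = 0.0;
    for (std::size_t i = 0; i < c.size(); ++i) {
        const Pt& p = c[i];
        const Pt& q = c[(i + 1) % c.size()];
        len += std::hypot(q.x - p.x, q.y - p.y);
    }
    return len;
}
```

For a 4 by 3 rectangle given by its four corners this yields an area of 12 and a perimeter of 14.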

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

RNG rng(12345);

int main(int argc, char** argv) {

//Mat src = imread("D:/images/lena.jpg");

Mat src = imread("D:/images/morph3.png");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

//binarize

GaussianBlur(src, src, Size(3, 3), 0); //denoising with a Gaussian blur before segmentation is recommended

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("otsu binary", binary);

//find contours

vector<vector<Point>> contours;

vector<Vec4i> hirearchy;

//RETR_EXTERNAL retrieves only the outermost contours (contours nested inside them are skipped); RETR_TREE retrieves all contours

findContours(binary, contours, hirearchy, RETR_TREE, CHAIN_APPROX_SIMPLE, Point());

for (size_t t = 0; t < contours.size(); t++) {

double area = contourArea(contours[t]); //area

double length = arcLength(contours[t], true); //perimeter

if (area < 100 || length < 10) continue; //skip small contours when drawing

Rect box = boundingRect(contours[t]);

//rectangle(src, box, Scalar(0, 0, 255), 2, 8, 0); //draw the upright bounding rectangle

RotatedRect rrt = minAreaRect(contours[t]);

//ellipse(src, rrt, Scalar(255, 0, 0), 2, 8);

Point2f pts[4];

rrt.points(pts);

for (int i = 0; i < 4; i++) {

line(src, pts[i], pts[(i + 1) % 4], Scalar(0, 255, 0), 2, 8); //draw the minimum-area (rotated) bounding rectangle

}

printf("area:%.2f,contour length:%.2f,angle:%.2f\n", area, length, rrt.angle);

//drawContours(src, contours, t, Scalar(0, 0, 255), 2, 8);

}

imshow("find contours demo", src);

waitKey(0);

destroyAllWindows();

return 0;

}

Results:

1. Upright bounding rectangle, perimeter, and area

2. Minimum-area bounding rectangle, perimeter, area, and angle

34. Contour Matching

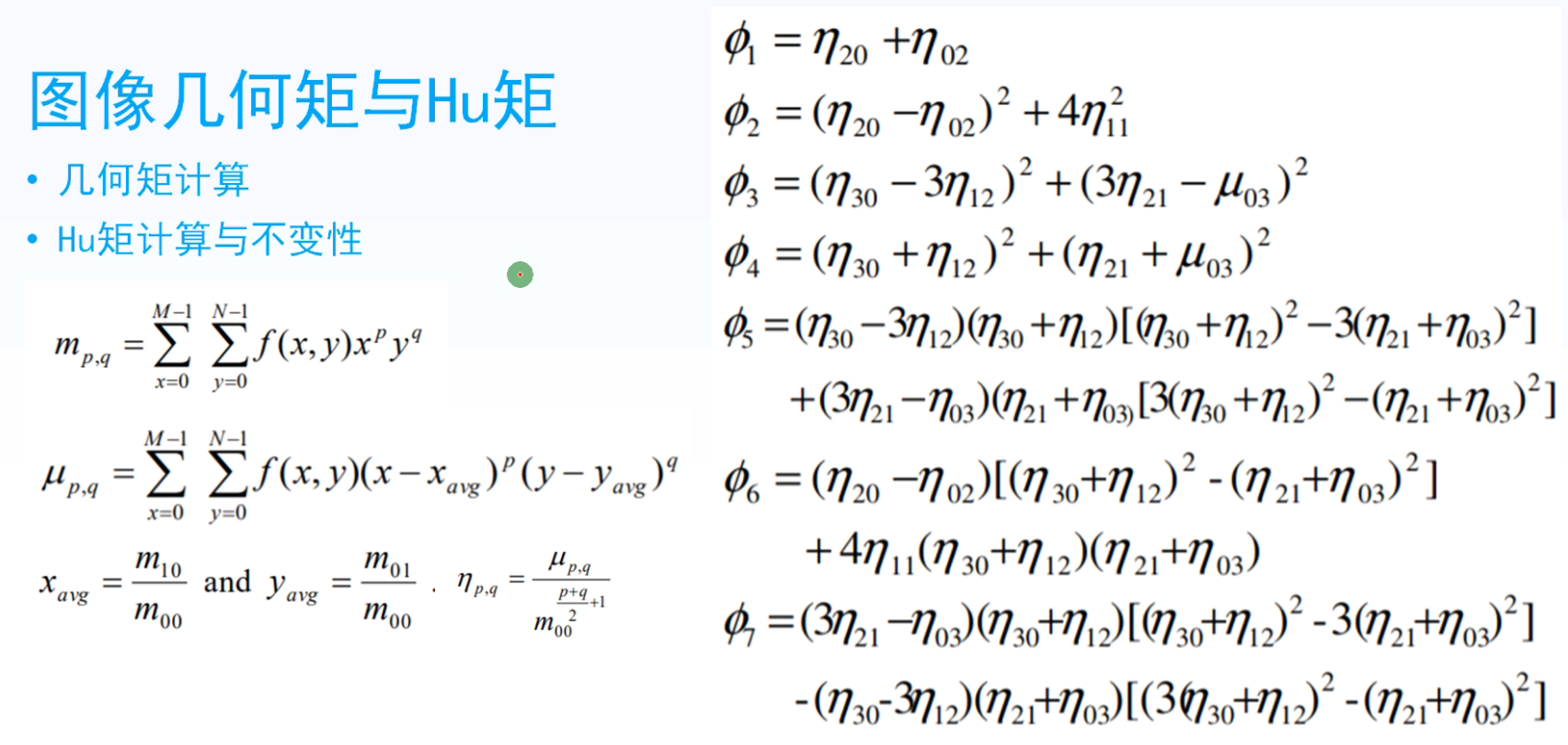

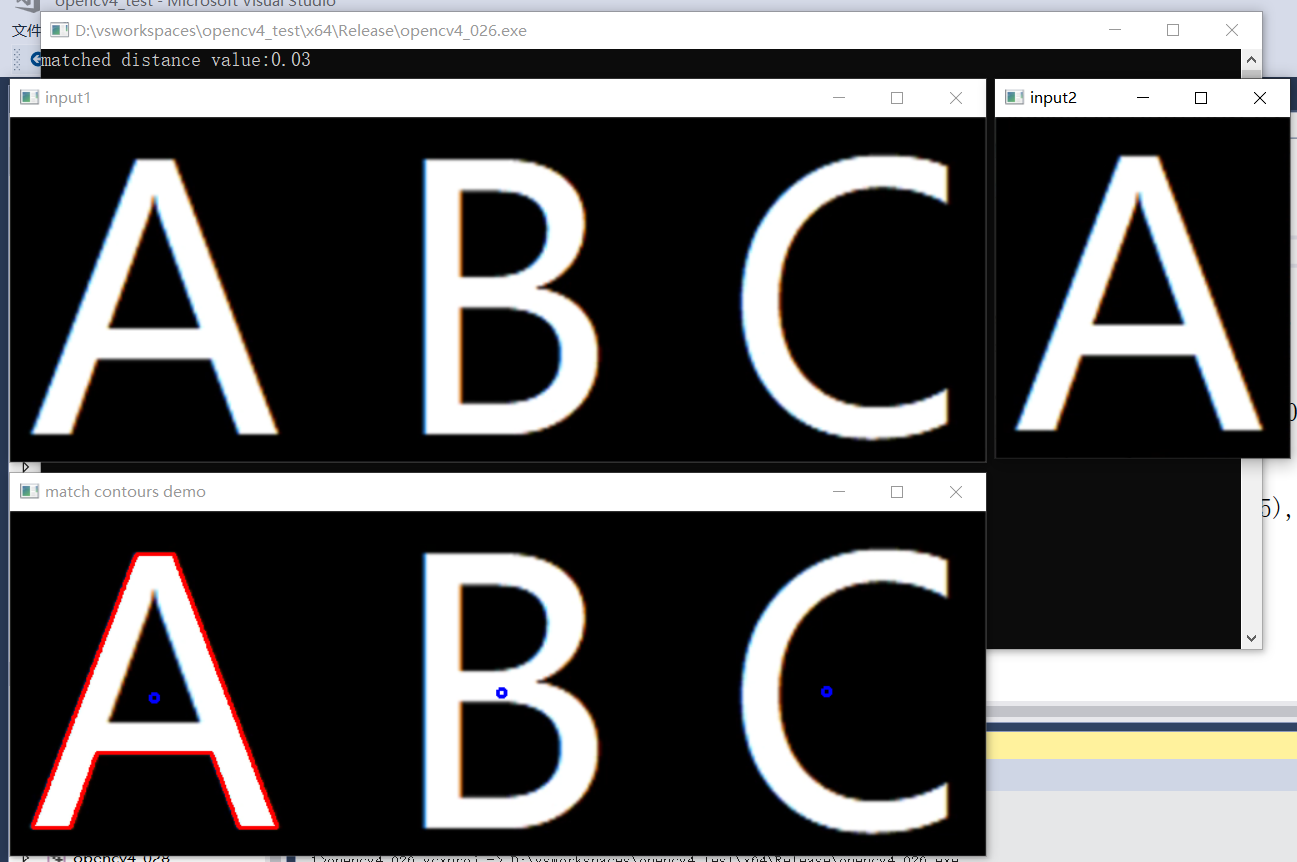

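- Geometric image moments and Hu moments (the seven resulting values are invariant to scale, rotation, and translation)

- First obtain the centroid (the mean x and mean y) from the raw moments, m10/m00 and m01/m00; then compute the central moments about the centroid, normalize them, and combine them into the Hu moments

- Contour matching based on Hu moments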
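The chain from raw moments to an invariant can be sketched for the first Hu moment, which is the sum of the normalized central moments η20 + η02. This plain C++ illustration works on a set of foreground pixel coordinates; the type `Pixel` and the function `hu1` are hypothetical names, and the input is assumed to be non-empty.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Pixel { int x, y; };

// First Hu invariant (eta20 + eta02) of a non-empty set of foreground pixels.
double hu1(const std::vector<Pixel>& px) {
    const double m00 = static_cast<double>(px.size());  // zeroth raw moment
    double m10 = 0.0, m01 = 0.0;                         // first raw moments
    for (std::size_t i = 0; i < px.size(); ++i) { m10 += px[i].x; m01 += px[i].y; }
    const double cx = m10 / m00, cy = m01 / m00;         // centroid
    double mu20 = 0.0, mu02 = 0.0;                       // central moments
    for (std::size_t i = 0; i < px.size(); ++i) {
        mu20 += (px[i].x - cx) * (px[i].x - cx);
        mu02 += (px[i].y - cy) * (px[i].y - cy);
    }
    // Normalization: eta_pq = mu_pq / m00^(1 + (p + q) / 2), here p + q = 2.
    return (mu20 + mu02) / (m00 * m00);
}
```

Shifting the shape or rotating it by 90 degrees leaves the value unchanged, which is the property contour matching relies on.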

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

RNG rng(12345);

void contour_info(Mat &image, vector<vector<Point>> &contours);

int main(int argc, char** argv) {

Mat src1 = imread("D:/images/abc.png");

Mat src2 = imread("D:/images/a.png");

if (src1.empty() || src2.empty()) {

printf("could not find image file");

return -1;

}

imshow("input1", src1);

imshow("input2", src2);

vector<vector<Point>> contours1;

vector<vector<Point>> contours2;

contour_info(src1, contours1);

contour_info(src2, contours2);

Moments mm2 = moments(contours2[0]); //geometric moments

Mat hu2;

HuMoments(mm2, hu2); //Hu moments

for (size_t t = 0; t < contours1.size(); t++) {

Moments mm = moments(contours1[t]);

double cx = mm.m10 / mm.m00; //centroid of each contour, drawn below

double cy = mm.m01 / mm.m00;

circle(src1, Point(cx, cy), 3, Scalar(255, 0, 0), 2, 8, 0);

Mat hu;

HuMoments(mm, hu);

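double dist = matchShapes(contours1[t], contours2[0], CONTOURS_MATCH_I1, 0); //matchShapes takes the contours themselves and compares their Hu moments internally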

if (dist < 1.0) {

printf("matched distance value:%.2f\n", dist);

drawContours(src1, contours1, t, Scalar(0, 0, 255), 2, 8);

}

}

imshow("match contours demo", src1);

waitKey(0);

destroyAllWindows();

return 0;

}

void contour_info(Mat &image, vector<vector<Point>> &contours) {

//binarize

Mat dst;

GaussianBlur(image, dst, Size(3, 3), 0); //denoising with a Gaussian blur before segmentation is recommended

Mat gray, binary;

cvtColor(dst, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

//find contours

vector<Vec4i> hirearchy;

//RETR_EXTERNAL retrieves only the outermost contours (contours nested inside them are skipped); RETR_TREE retrieves all contours

findContours(binary, contours, hirearchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point());

}

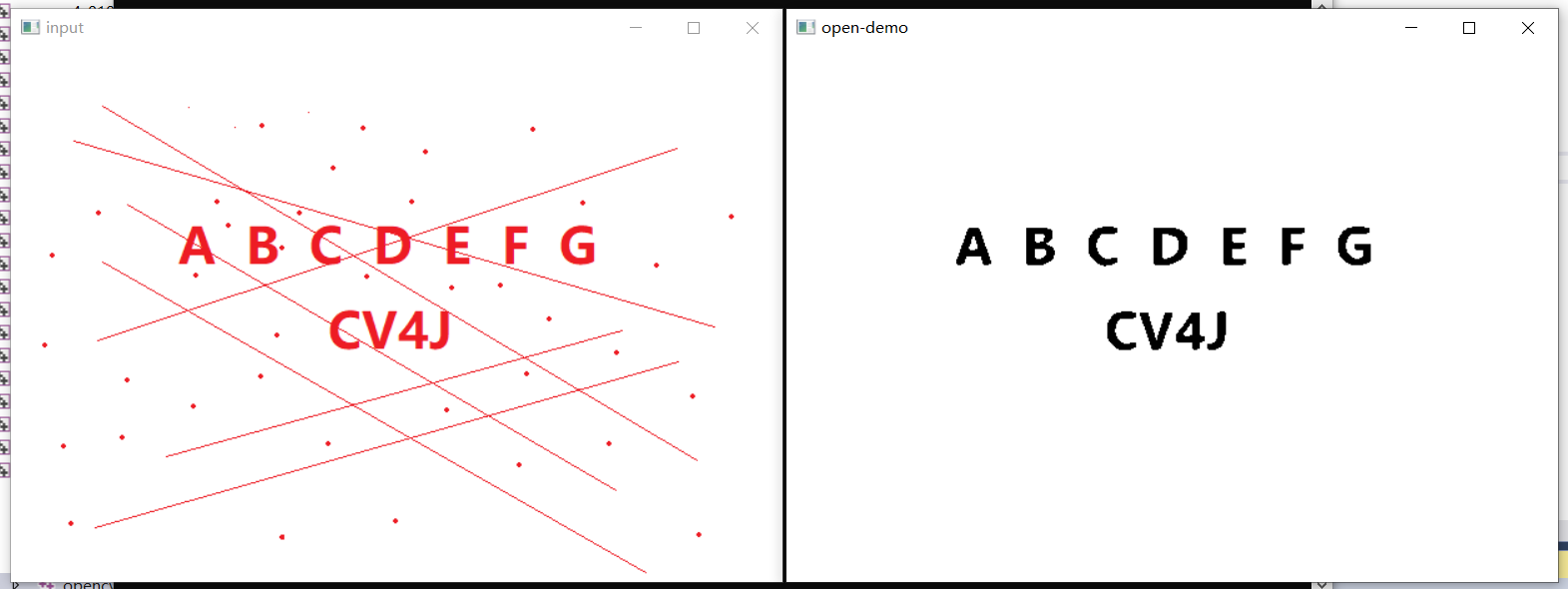

Results:

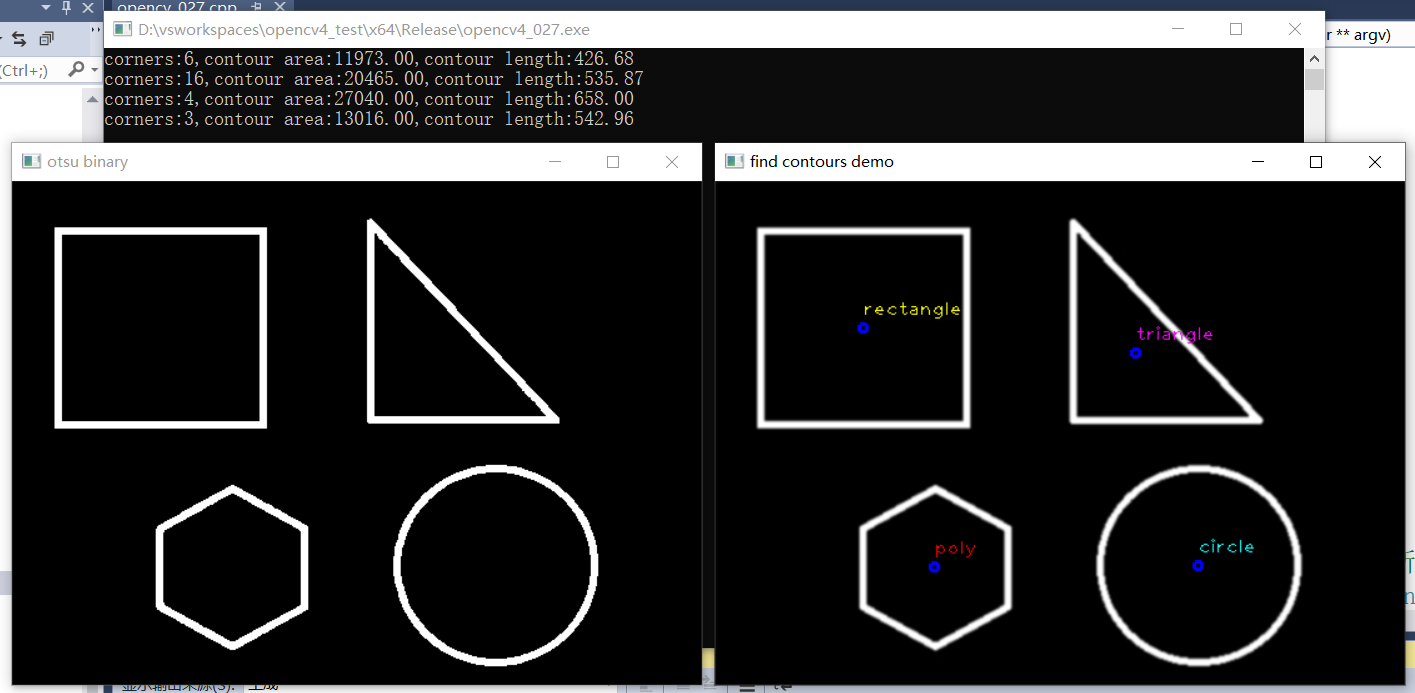

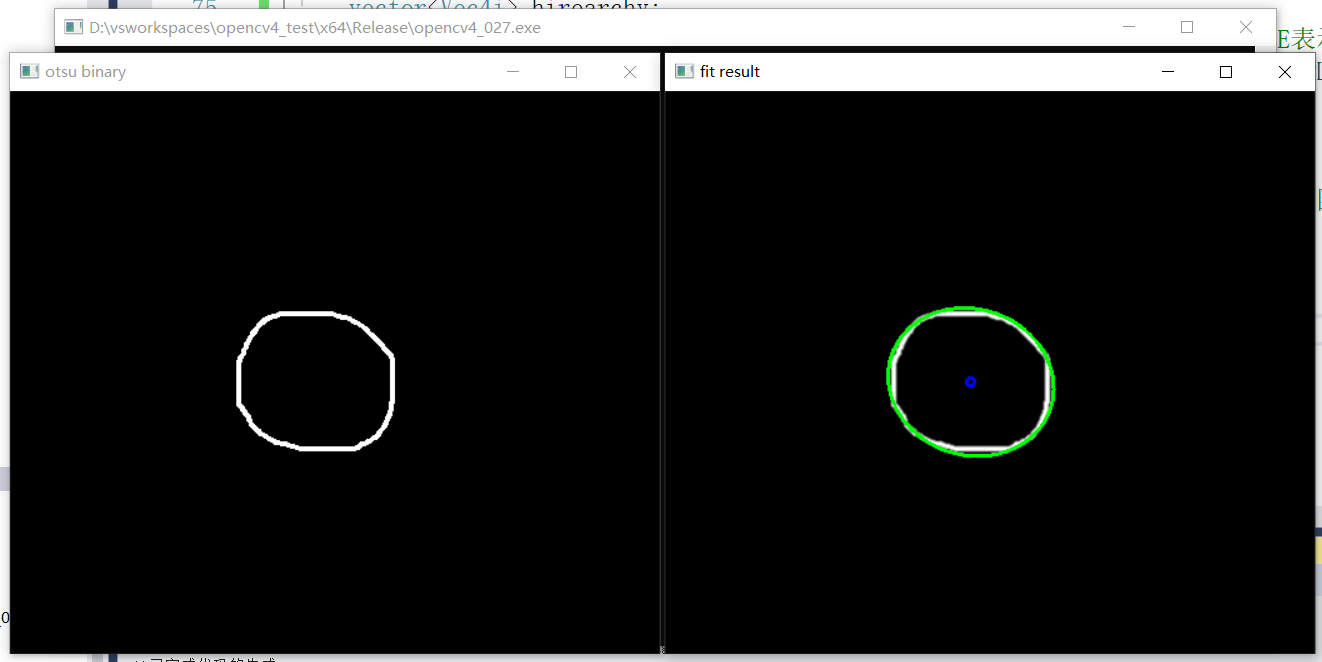

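35. Contour Approximation and Fitting

- Contour approximation: in essence, reducing the number of encoded points

- Fitting: generate the circle or ellipse that best matches the contour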
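Reducing the number of encoded points is commonly done with the Douglas-Peucker algorithm. The sketch below is a plain C++ version for an open polyline; the names `P2`, `dist_to_line`, `simplify`, and `approx_polyline` are hypothetical, and the code is an illustration rather than the implementation behind `approxPolyDP`.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct P2 { double x, y; };

// Perpendicular distance from p to the line through a and b.
static double dist_to_line(P2 p, P2 a, P2 b) {
    const double dx = b.x - a.x, dy = b.y - a.y;
    const double len = std::hypot(dx, dy);
    if (len == 0.0) return std::hypot(p.x - a.x, p.y - a.y);
    return std::fabs(dy * (p.x - a.x) - dx * (p.y - a.y)) / len;
}

// Recursive step: keep the farthest point if it deviates by more than eps.
static void simplify(const std::vector<P2>& pts, std::size_t first, std::size_t last,
                     double eps, std::vector<P2>& out) {
    double worst = 0.0;
    std::size_t idx = first;
    for (std::size_t i = first + 1; i < last; ++i) {
        const double d = dist_to_line(pts[i], pts[first], pts[last]);
        if (d > worst) { worst = d; idx = i; }
    }
    if (worst > eps) {
        simplify(pts, first, idx, eps, out);
        simplify(pts, idx, last, eps, out);
    } else {
        out.push_back(pts[last]);  // the segment is straight enough: keep its end only
    }
}

// Douglas-Peucker simplification of an open polyline.
std::vector<P2> approx_polyline(const std::vector<P2>& pts, double eps) {
    if (pts.size() < 3) return pts;
    std::vector<P2> out(1, pts.front());
    simplify(pts, 0, pts.size() - 1, eps, out);
    return out;
}
```

A larger eps removes more points, which is why the corner count reported after approximation depends on that tolerance.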

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

void fit_circle_demo(Mat &image);

int main(int argc, char** argv) {

Mat src = imread("D:/images/stuff.png");

if (src.empty()) {

printf("could not find image file");

return -1;

}

fit_circle_demo(src);

/*

//binarize

GaussianBlur(src, src, Size(3, 3), 0); //denoising with a Gaussian blur before segmentation is recommended

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("otsu binary", binary);

//find contours

vector<vector<Point>> contours;

vector<Vec4i> hirearchy;

//RETR_EXTERNAL retrieves only the outermost contours (contours nested inside them are skipped); RETR_TREE retrieves all contours

findContours(binary, contours, hirearchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point());

//polygon approximation demo

for (size_t t = 0; t < contours.size(); t++) {

Moments mm = moments(contours[t]);

double cx = mm.m10 / mm.m00; //centroid of each contour, drawn below

double cy = mm.m01 / mm.m00;

circle(src, Point(cx, cy), 3, Scalar(255, 0, 0), 2, 8, 0);

double area = contourArea(contours[t]);

double clen = arcLength(contours[t], true);

Mat result;

approxPolyDP(contours[t], result, 4, true);

printf("corners:%d,contour area:%.2f,contour length:%.2f\n", result.rows, area, clen);

if (result.rows == 6) {

putText(src, "poly", Point(cx, cy - 10), FONT_HERSHEY_PLAIN, 1.0, Scalar(0, 0, 255), 1, 8);

}

if (result.rows == 4) {

putText(src, "rectangle", Point(cx, cy - 10), FONT_HERSHEY_PLAIN, 1.0, Scalar(0, 255, 255), 1, 8);

}

if (result.rows == 3) {

putText(src, "triangle", Point(cx, cy - 10), FONT_HERSHEY_PLAIN, 1.0, Scalar(255, 0, 255), 1, 8);

}

if (result.rows > 10) {

putText(src, "circle", Point(cx, cy - 10), FONT_HERSHEY_PLAIN, 1.0, Scalar(255, 255, 0), 1, 8);

}

}

imshow("find contours demo", src);

*/

waitKey(0);

destroyAllWindows();

return 0;

}

void fit_circle_demo(Mat &image) {

//binarize

GaussianBlur(image, image, Size(3, 3), 0); //denoising with a Gaussian blur before segmentation is recommended

Mat gray, binary;

cvtColor(image, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("otsu binary", binary);

//find contours

vector<vector<Point>> contours;

vector<Vec4i> hirearchy;

//RETR_EXTERNAL retrieves only the outermost contours (contours nested inside them are skipped); RETR_TREE retrieves all contours

findContours(binary, contours, hirearchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point());

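//fit a circle or an ellipse

for (size_t t = 0; t < contours.size(); t++) {

//drawContours(image, contours, t, Scalar(0, 0, 255), 2, 8); //draw the original contour

if (contours[t].size() < 5) continue; //fitEllipse needs at least 5 points

RotatedRect rrt = fitEllipse(contours[t]); //fitted ellipse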

float w = rrt.size.width;

float h = rrt.size.height;

Point center = rrt.center;

circle(image, center, 3, Scalar(255, 0, 0), 2, 8, 0); //draw the center

ellipse(image, rrt, Scalar(0, 255, 0), 2, 8); //draw the fitted ellipse

}

imshow("fit result", image);

}

Results:

1. Polygon approximation

2. Ellipse fitting

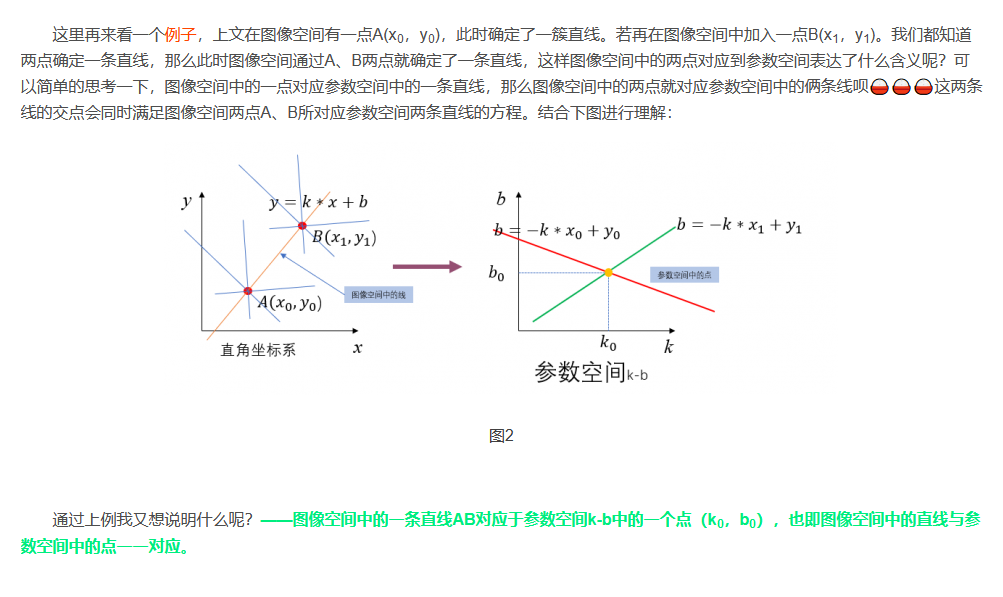

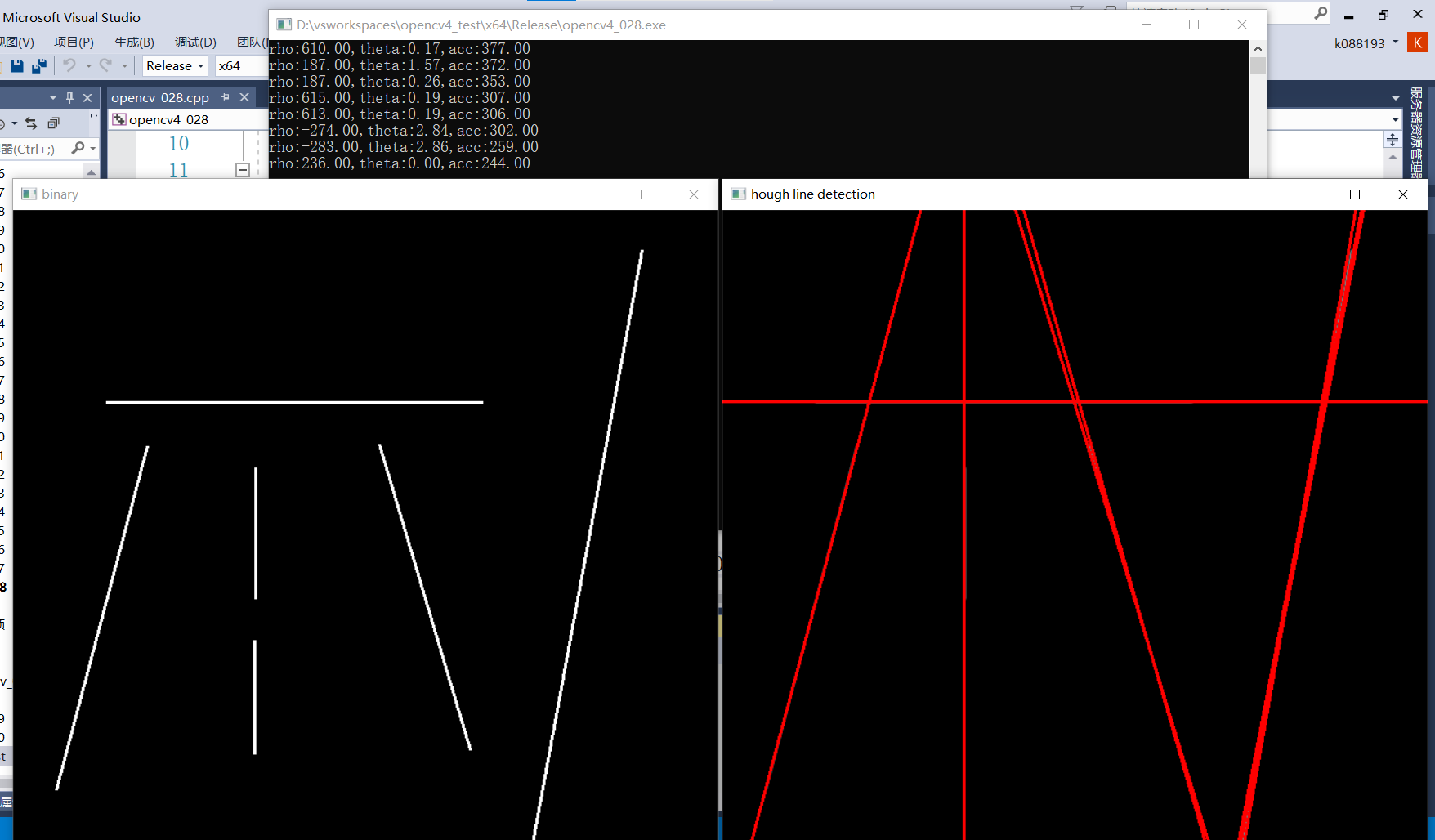

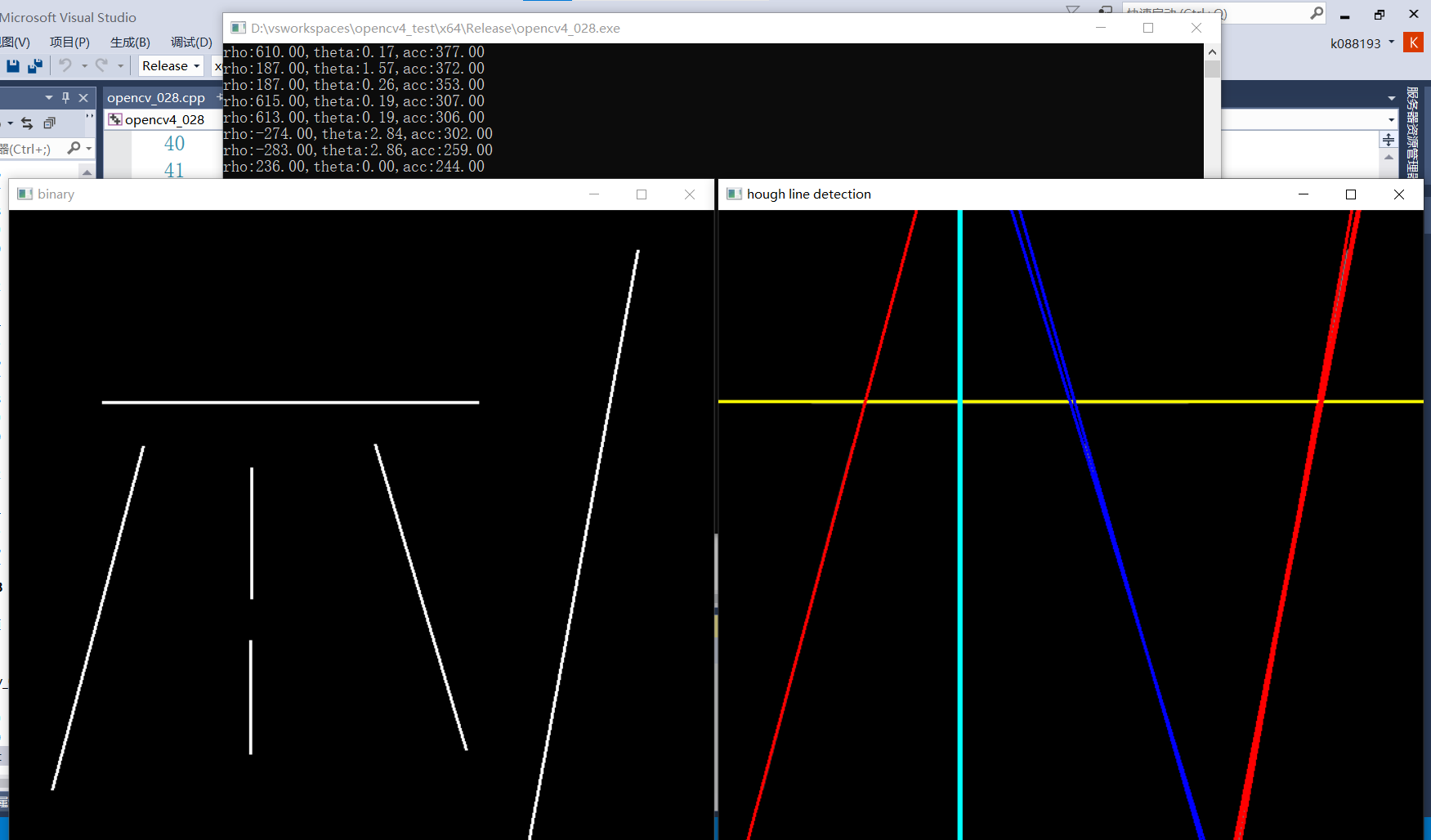

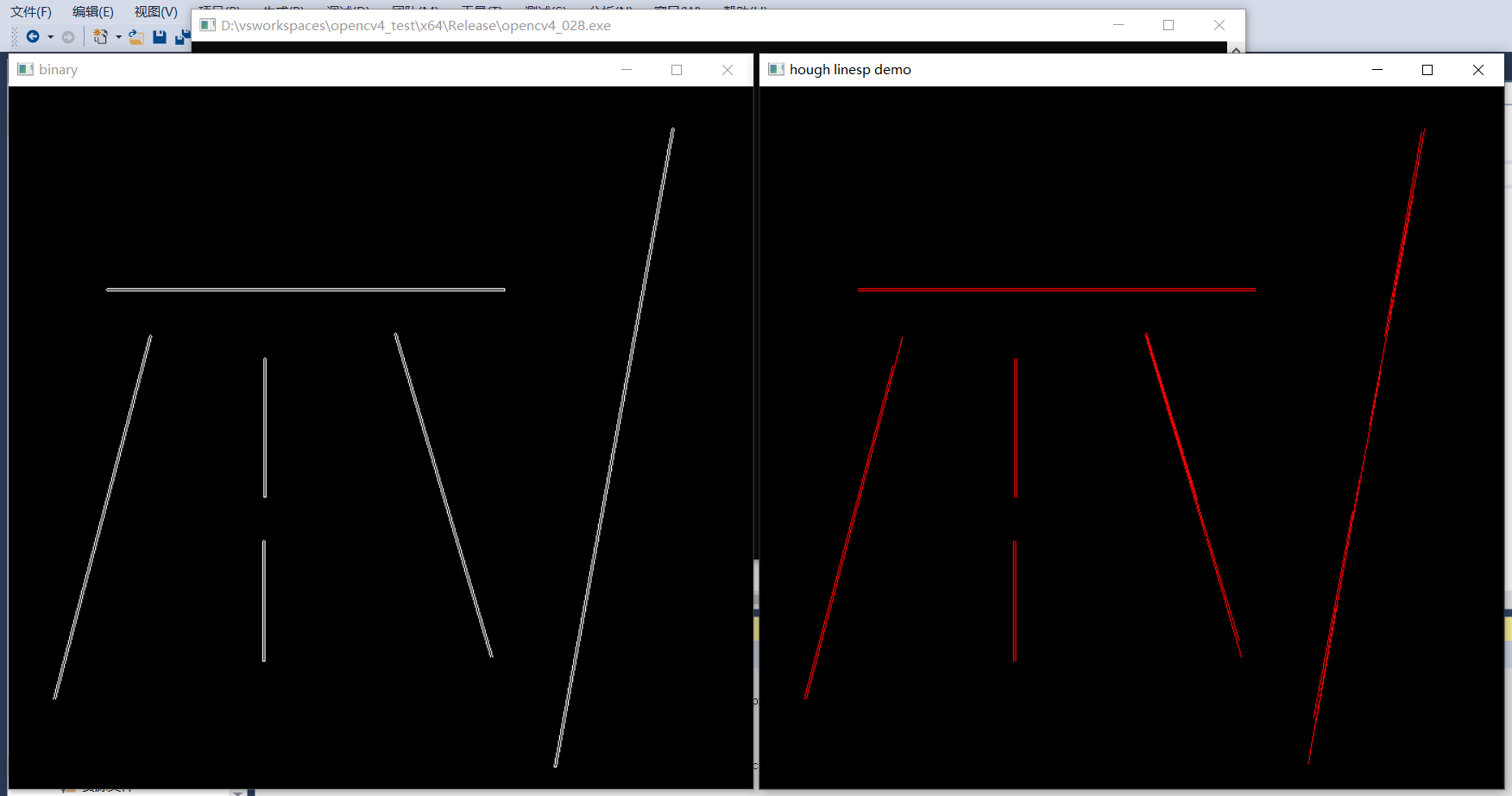

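36. Hough Line Detection (1)

- In Cartesian coordinates a line is determined by two parameters (intercept B, slope K)

- In polar form it is also determined by two parameters (distance R from the origin, angle θ)

- Figure: three curves intersect at one point; the r and θ of that point identify exactly one line.

More on the principle:

- 1. A point in image space x-y corresponds to a line in parameter space k-b.

- 2. A line in image space corresponds to a point in parameter space k-b.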
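The voting idea can be sketched in plain C++ using the polar form ρ = x·cosθ + y·sinθ. The bin sizes (1 pixel, 1 degree), the names `EdgePt`, `LinePeak`, and `hough_strongest_line`, and returning only the single strongest cell are simplifying assumptions of this sketch.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct EdgePt { int x, y; };
struct LinePeak { int rho; int theta_deg; int votes; };

// Vote in (rho, theta) space and return the strongest cell.
// max_rho must be at least the length of the image diagonal.
LinePeak hough_strongest_line(const std::vector<EdgePt>& pts, int max_rho) {
    const double PI = 3.14159265358979323846;
    const int n_rho = 2 * max_rho + 1;  // rho ranges over [-max_rho, max_rho]
    std::vector<int> acc(static_cast<std::size_t>(n_rho) * 180, 0);
    LinePeak best = {0, 0, 0};
    for (std::size_t i = 0; i < pts.size(); ++i)
        for (int t = 0; t < 180; ++t) {
            const double th = t * PI / 180.0;
            // Each point votes for every line (rho, theta) passing through it.
            const int rho = static_cast<int>(
                std::lround(pts[i].x * std::cos(th) + pts[i].y * std::sin(th)));
            if (rho < -max_rho || rho > max_rho) continue;
            int& cell = acc[static_cast<std::size_t>(rho + max_rho) * 180 + t];
            ++cell;
            if (cell > best.votes) {
                best.rho = rho;
                best.theta_deg = t;
                best.votes = cell;
            }
        }
    return best;
}
```

Points lying on one line all vote for the same (ρ, θ) cell, so that cell ends up with the highest count; a horizontal line y = 5 peaks at θ = 90° and ρ = 5.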

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/tline.png");

if (src.empty()) {

printf("could not find image file");

return -1;

}

//binarize

GaussianBlur(src, src, Size(3, 3), 0); //denoising with a Gaussian blur before segmentation is recommended

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

namedWindow("binary", WINDOW_AUTOSIZE);

imshow("binary", binary);

//Hough line detection

vector<Vec3f> lines;

HoughLines(binary, lines, 1, CV_PI / 180.0, 200, 0, 0);

//draw the lines

Point pt1, pt2;

for (size_t i = 0; i < lines.size(); i++) {

float rho = lines[i][0]; //distance from the origin

float theta = lines[i][1]; //angle in radians

float acc = lines[i][2]; //accumulator value (votes)

printf("rho:%.2f,theta:%.2f,acc:%.2f\n", rho, theta, acc);

double a = cos(theta);

double b = sin(theta);

double x0 = a * rho, y0 = b * rho;

pt1.x = cvRound(x0 + 1000 * (-b));

pt1.y = cvRound(y0 + 1000 * (a));

pt2.x = cvRound(x0 - 1000 * (-b));

pt2.y = cvRound(y0 - 1000 * (a));

//line(src, pt1, pt2, Scalar(0, 0, 255), 2, 8, 0);

int angle = round((theta / CV_PI) * 180);

if (rho > 0) { //leaning right

line(src, pt1, pt2, Scalar(0, 0, 255), 2, 8, 0);

if (angle == 90) { //horizontal line

line(src, pt1, pt2, Scalar(0, 255, 255), 2, 8, 0);

}

if (angle < 1) { //vertical line

line(src, pt1, pt2, Scalar(255, 255, 0), 4, 8, 0);

}

}

else { //leaning left

line(src, pt1, pt2, Scalar(255, 0, 0), 2, 8, 0);

}

}

imshow("hough line detection", src);

waitKey(0);

destroyAllWindows();

return 0;

}

Results:

1. Detected lines

2. Lines detected at different angles

37. Line Types and Line Segments (Hough Line Detection 2)

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

void hough_linesp_demo();

int main(int argc, char** argv) {

hough_linesp_demo();

waitKey(0);

destroyAllWindows();

return 0;

}

void hough_linesp_demo() {

Mat src = imread("D:/images/tline.png");

if (src.empty()) {

printf("could not find image file");

return;

}

Mat binary;

Canny(src, binary, 80, 160, 3, false);

imshow("binary", binary);

vector<Vec4i> lines;

HoughLinesP(binary, lines, 1, CV_PI / 180.0, 80, 30, 10);

Mat result = Mat::zeros(src.size(), src.type());

for (int i = 0; i < lines.size(); i++) {

line(result, Point(lines[i][0], lines[i][1]), Point(lines[i][2], lines[i][3]), Scalar(0, 0, 255), 1, 8);

}

imshow("hough linesp demo", result);

}

Results:

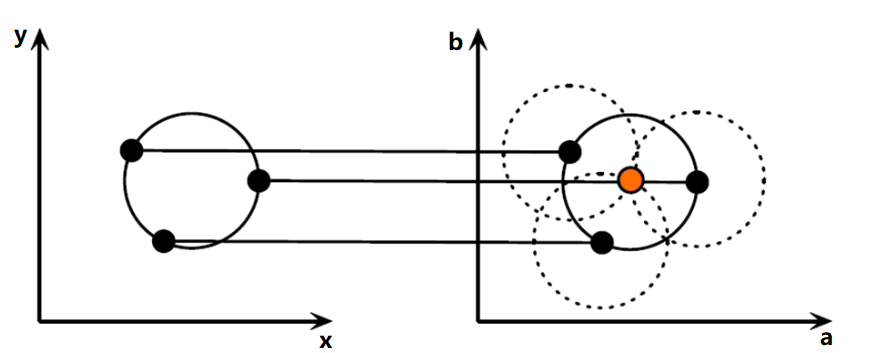

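38. Hough Circle Detection

Principle:

Detecting circles works much like detecting lines. A line y=kx+b has only two free parameters, k and b, so its parameter space is two-dimensional. The general equation of a circle is (x-a)²+(y-b)²=r², which has three free parameters: the center coordinates a, b and the radius r. That means more computation. The HoughCircles() function in OpenCV lets you set a range for the radius r, which acts as a prior: for each r, only a and b have to be searched in a two-dimensional space, which reduces the computation.

The steps are:

Run edge detection on the input image to obtain the boundary points, i.e. the foreground points.

If the image contains a circle, its outline must be among the foreground points (ignoring, for now, how accurate the edge extraction is).

As with the Hough transform for lines, rewrite the circle equation as a change of coordinates from the x-y plane to the a-b plane: (a-x)²+(b-y)²=r². A point on the circle boundary in the x-y plane then maps to a circle in the a-b plane.

A circle boundary in the x-y plane has many points, so it maps to many circles in the a-b plane. Because all these points lie on the same circle in the original image, the true a, b satisfy the equations of all those circles in the a-b plane. Visually, the circles generated by these points all pass through one common point, and that intersection is a candidate for the center (a, b).

Count the number of circles passing through each local intersection and take every local maximum; these give the center coordinates (a, b) of the circles in the original image. Once a circle is detected for a given r, the value of r is determined as well.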

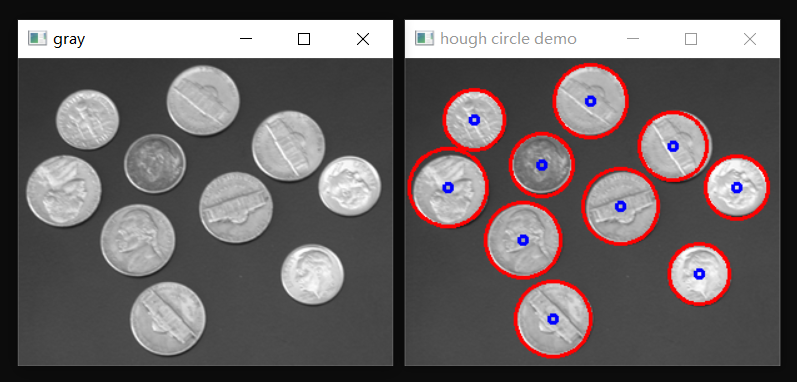
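For one fixed radius, the voting in the a-b plane can be sketched in plain C++ as follows. The names `EdgePoint`, `CenterPeak`, and `hough_circle_center`, the 1-degree angular step, and the rule that each edge point votes at most once per cell are assumptions of this sketch; `HoughCircles` with `HOUGH_GRADIENT` uses gradient information and works differently.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct EdgePoint { int x, y; };
struct CenterPeak { int a, b, votes; };

// For a fixed radius r, every edge point votes for all candidate centers
// (a, b) on a circle of radius r around it; the true center collects one
// vote from every edge point.
CenterPeak hough_circle_center(const std::vector<EdgePoint>& pts, int r, int w, int h) {
    const double PI = 3.14159265358979323846;
    std::vector<int> acc(static_cast<std::size_t>(w) * h, 0);
    std::vector<int> last_voter(acc.size(), -1);  // stops a point voting twice for a cell
    CenterPeak best = {0, 0, 0};
    for (std::size_t i = 0; i < pts.size(); ++i)
        for (int t = 0; t < 360; ++t) {
            const double th = t * PI / 180.0;
            const int a = static_cast<int>(std::lround(pts[i].x - r * std::cos(th)));
            const int b = static_cast<int>(std::lround(pts[i].y - r * std::sin(th)));
            if (a < 0 || b < 0 || a >= w || b >= h) continue;
            const std::size_t cell = static_cast<std::size_t>(b) * w + a;
            if (last_voter[cell] == static_cast<int>(i)) continue;
            last_voter[cell] = static_cast<int>(i);
            ++acc[cell];
            if (acc[cell] > best.votes) {
                best.a = a;
                best.b = b;
                best.votes = acc[cell];
            }
        }
    return best;
}
```

Searching a radius range means repeating this vote for each r, which is why a narrow range between the minimum and maximum radius keeps the cost down.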

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/test_coins.png");

Mat gray;

cvtColor(src, gray, COLOR_BGR2GRAY);

imshow("gray", gray);

GaussianBlur(gray, gray, Size(9, 9), 2, 2); //denoise first: Hough circle detection is sensitive to noise. Favor a high detection rate; surplus circles can be filtered out afterwards

vector<Vec3f> circles;

//tune the radius range and the other parameters to improve the detection rate

double minDist = 10;

double min_radius = 10;

double max_radius = 50;

HoughCircles(gray, circles, HOUGH_GRADIENT, 3, minDist, 100, 100, min_radius, max_radius); //the input is a grayscale image

for (size_t t = 0; t < circles.size(); t++) {

Point center(circles[t][0], circles[t][1]);

int radius = round(circles[t][2]);

//draw the circle

circle(src, center, radius, Scalar(0, 0, 255), 2, 8, 0);

circle(src, center, 3, Scalar(255, 0, 0), 2, 8, 0);

}

imshow("hough circle demo", src);

waitKey(0);

destroyAllWindows();

return 0;

}

Results:

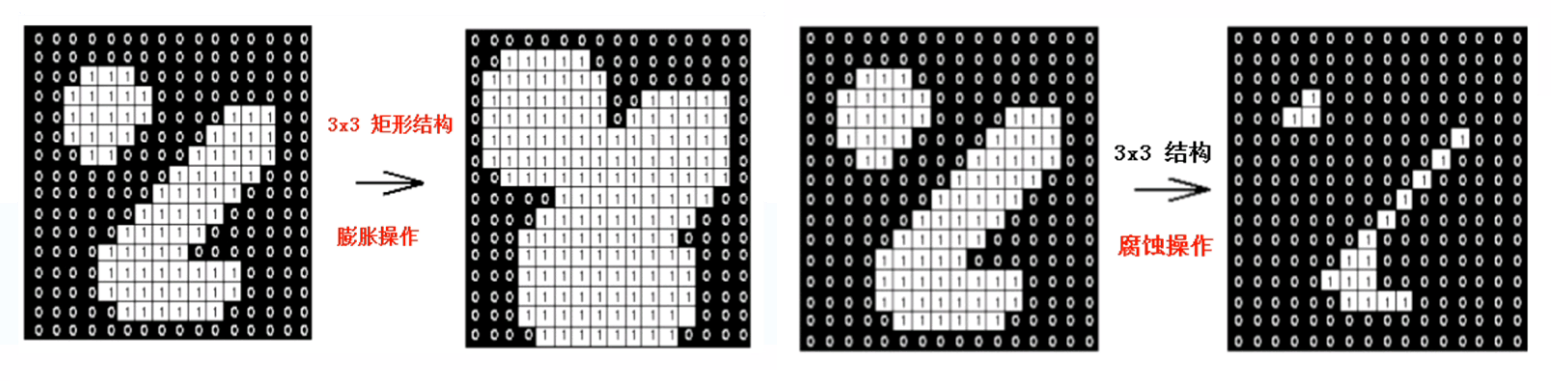

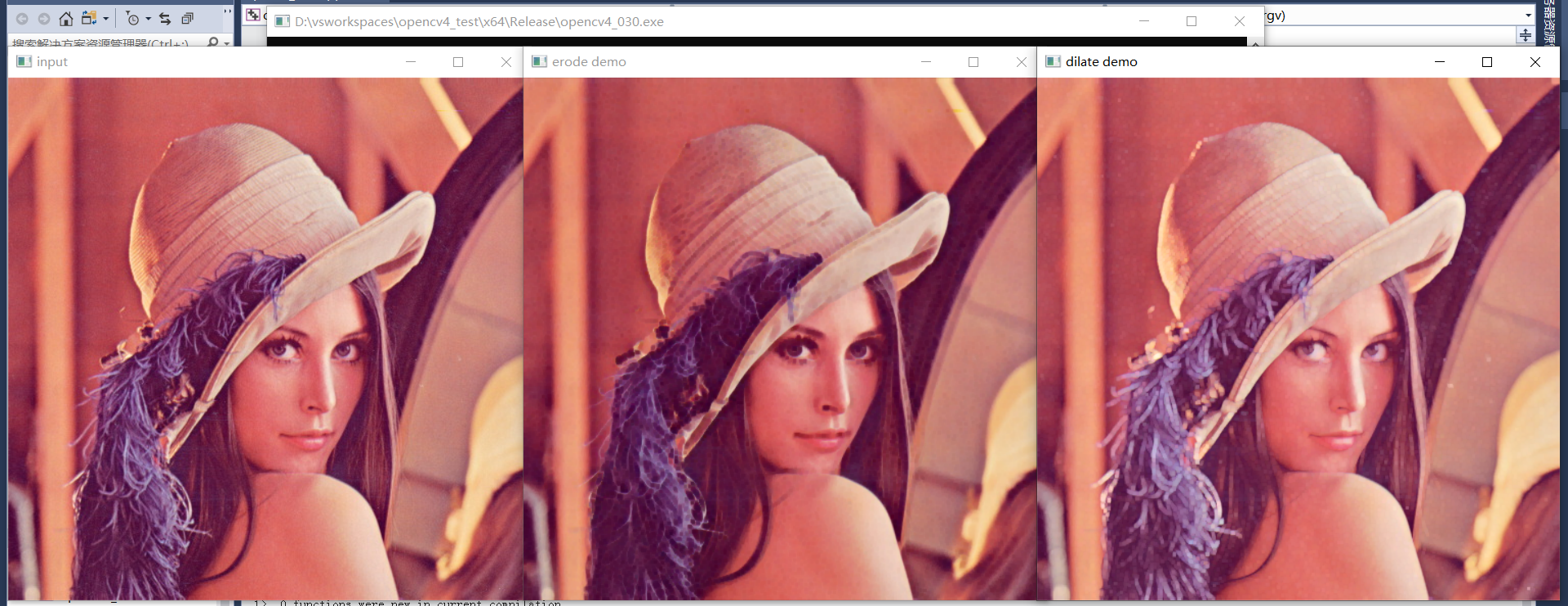

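39. Morphological Operations

About image morphology:

- A separate branch of image processing

- An important tool for processing grayscale and binary images

- Grew out of set theory and related areas of mathematics

- Plays an important role in machine vision, OCR, and similar fields

Erosion and dilation:

- Dilation (replace the center pixel with the maximum under the structuring element)

- Erosion (replace the center pixel with the minimum under the structuring element)

Structuring element shapes:

- Line: horizontal or vertical

- Rectangle: w*h

- Cross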
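The maximum and minimum rules can be sketched in plain C++ for a 3x3 rectangular structuring element. The function names `morph3x3`, `dilate3x3`, and `erode3x3` are hypothetical, and skipping neighbors that fall outside the image is a border-handling assumption of this sketch.

```cpp
#include <algorithm>
#include <cstdint>
#include <vector>

// Shared loop: take the max (dilation) or min (erosion) over a 3x3 window.
static std::vector<std::uint8_t> morph3x3(const std::vector<std::uint8_t>& src,
                                          int w, int h, bool take_max) {
    std::vector<std::uint8_t> dst(src.size());
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x) {
            std::uint8_t v = src[y * w + x];
            for (int dy = -1; dy <= 1; ++dy)
                for (int dx = -1; dx <= 1; ++dx) {
                    const int nx = x + dx, ny = y + dy;
                    if (nx < 0 || ny < 0 || nx >= w || ny >= h) continue;
                    const std::uint8_t n = src[ny * w + nx];
                    v = take_max ? std::max(v, n) : std::min(v, n);
                }
            dst[y * w + x] = v;
        }
    return dst;
}

// Dilation: the window maximum replaces the center pixel.
std::vector<std::uint8_t> dilate3x3(const std::vector<std::uint8_t>& src, int w, int h) {
    return morph3x3(src, w, h, true);
}

// Erosion: the window minimum replaces the center pixel.
std::vector<std::uint8_t> erode3x3(const std::vector<std::uint8_t>& src, int w, int h) {
    return morph3x3(src, w, h, false);
}
```

Applied to a single white pixel, dilation grows it into a 3x3 block while erosion removes it; calling `dilate3x3` on the result of `erode3x3` gives an opening, which also removes it.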

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/morph.png");

imshow("input", src);

/*

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

*/

Mat dst1, dst2;

Mat kernel = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1)); //build the structuring element

erode(src, dst1, kernel);

dilate(src, dst2, kernel);

imshow("erode demo", dst1);

imshow("dilate demo", dst2);

waitKey(0);

destroyAllWindows();

return 0;

}

Results:

1. Erosion and dilation of a binary image

2. Erosion and dilation of a BGR color image

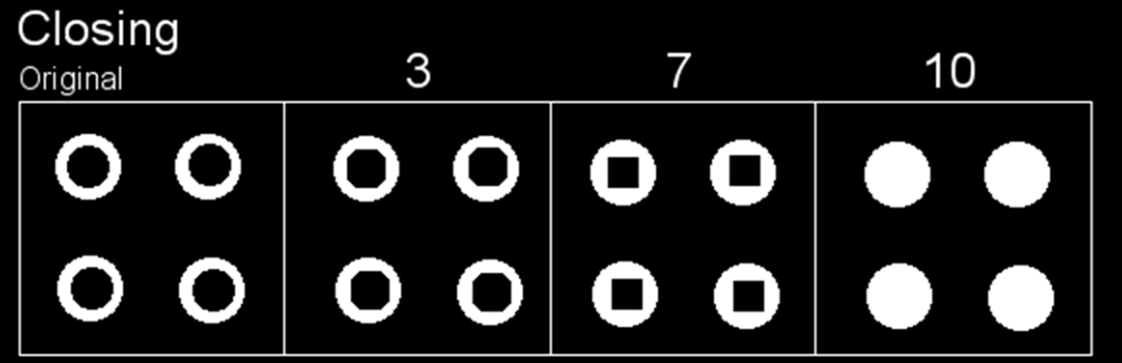

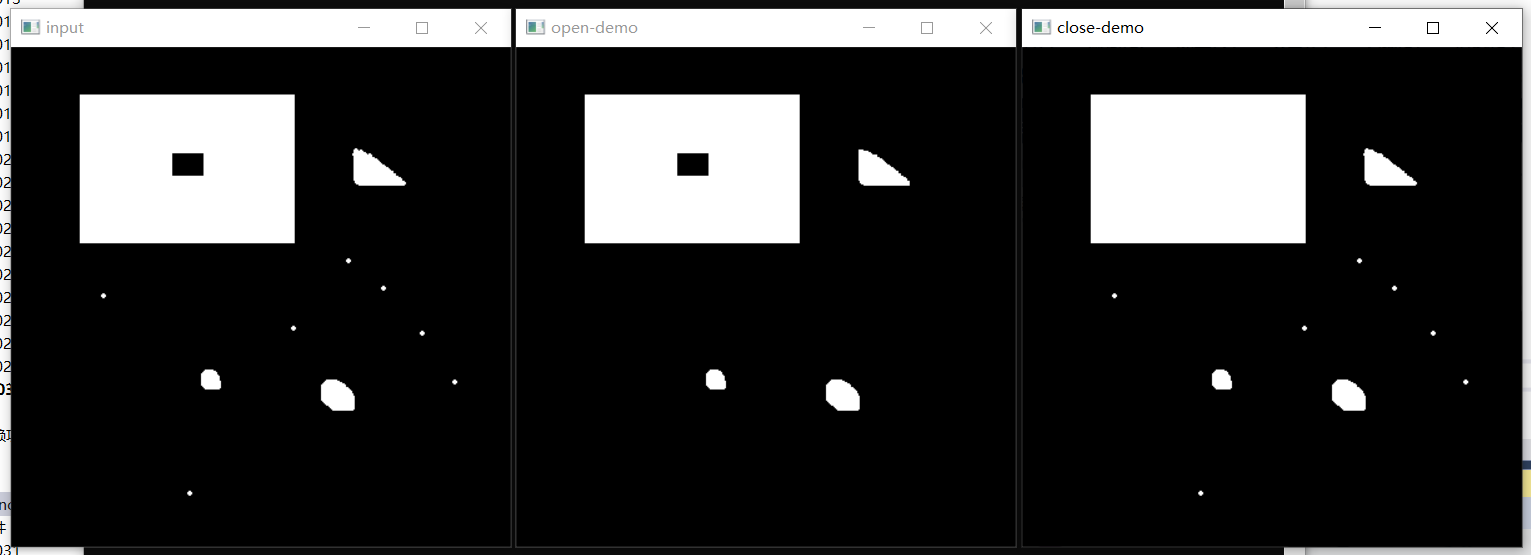

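40. Opening and Closing

Opening:

- Opening = erosion followed by dilation

- Opening → removes small noise blobs

Closing:

- Closing = dilation followed by erosion

- Closing → fills small holes and gaps inside regions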

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

//Mat src = imread("D:/images/morph.png");

//Mat src = imread("D:/images/morph02.png");

Mat src = imread("D:/images/fill.png");

imshow("input", src);

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

Mat dst1, dst2;

//Mat kernel01 = getStructuringElement(MORPH_RECT, Size(25, 25), Point(-1, -1));

Mat kernel01 = getStructuringElement(MORPH_RECT, Size(25, 1), Point(-1, -1)); //horizontal kernel: extracts horizontal lines when opening, bridges horizontal gaps when closing

//Mat kernel01 = getStructuringElement(MORPH_RECT, Size(1, 25), Point(-1, -1)); //vertical kernel: extracts vertical lines when opening, bridges vertical gaps when closing

//Mat kernel01 = getStructuringElement(MORPH_ELLIPSE, Size(25, 25), Point(-1, -1));

//Mat kernel02 = getStructuringElement(MORPH_ELLIPSE, Size(4, 4), Point(-1, -1));

//morphologyEx(binary, dst1, MORPH_OPEN, kernel02, Point(-1, -1), 1);

//morphologyEx(binary, dst2, MORPH_CLOSE, kernel01, Point(-1, -1), 1);

morphologyEx(binary, dst2, MORPH_OPEN, kernel01, Point(-1, -1), 1);

//imshow("open-demo", dst1);

imshow("open-demo", dst2);

waitKey(0);

destroyAllWindows();

return 0;

}

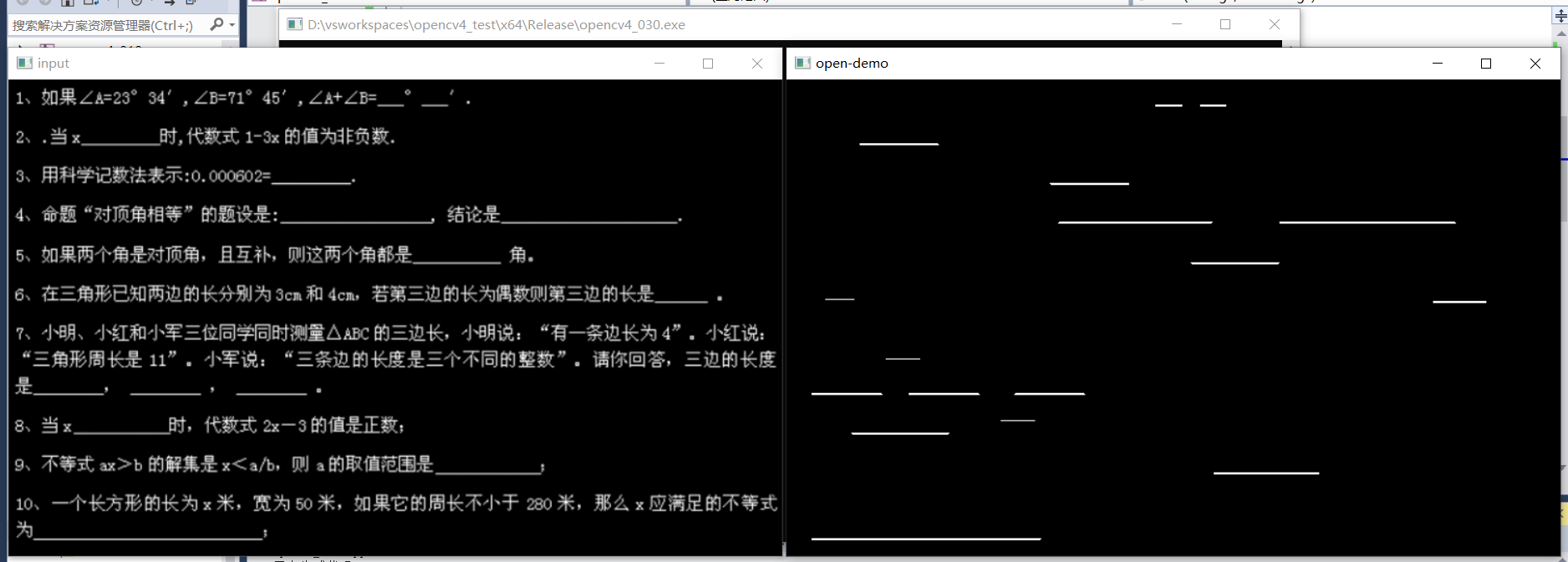

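Results:

1. Opening and closing on simple shapes

2. Closing on circular shapes

3. Opening to remove clutter around text

4. Opening to extract lines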

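41. Morphological Gradient (Outline)

Definitions of the morphological gradient

- Basic gradient: the dilation minus the erosion; this is the morphological gradient OpenCV computes directly (MORPH_GRADIENT)

- Internal gradient: the source image minus its erosion

- External gradient: the dilation minus the source image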

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

//Mat src = imread("D:/images/yuan_test.png");

Mat src = imread("D:/images/building.png");

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

imshow("input", gray);

Mat basic_grad, inter_grad, exter_grad;

Mat kernel = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1));

morphologyEx(gray, basic_grad, MORPH_GRADIENT, kernel, Point(-1, -1), 1);

imshow("basic gradient", basic_grad);

Mat dst1, dst2;

erode(gray, dst1, kernel);

dilate(gray, dst2, kernel);

subtract(gray, dst1, inter_grad);

subtract(dst2, gray, exter_grad);

imshow("internal gradient", inter_grad);

imshow("external gradient", exter_grad);

//compute the basic gradient first, then binarize it

threshold(basic_grad, binary, 0, 255, THRESH_BINARY | THRESH_OTSU); //binarize

imshow("binary", binary);

waitKey(0);

destroyAllWindows();

return 0;

}

Results:

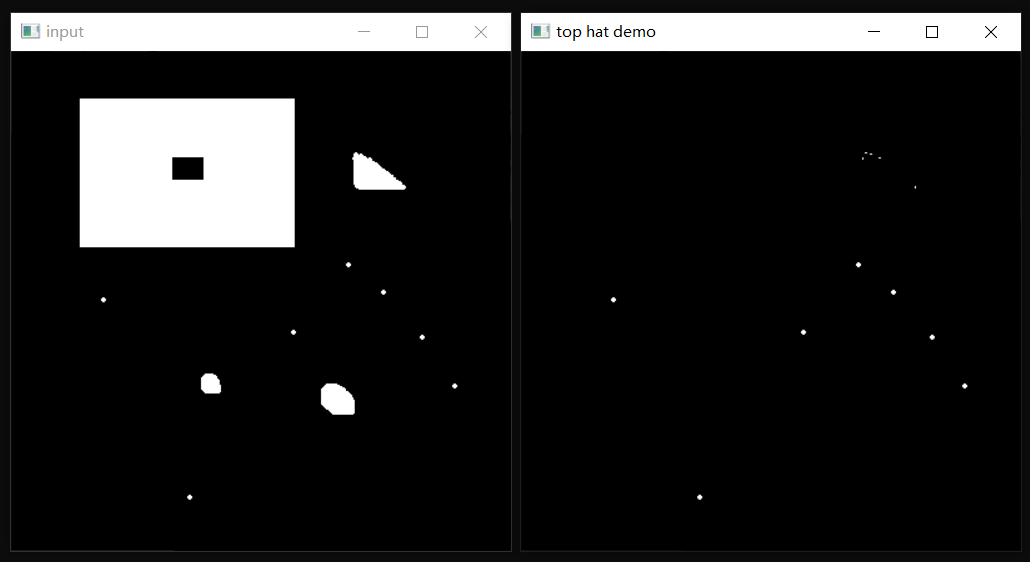

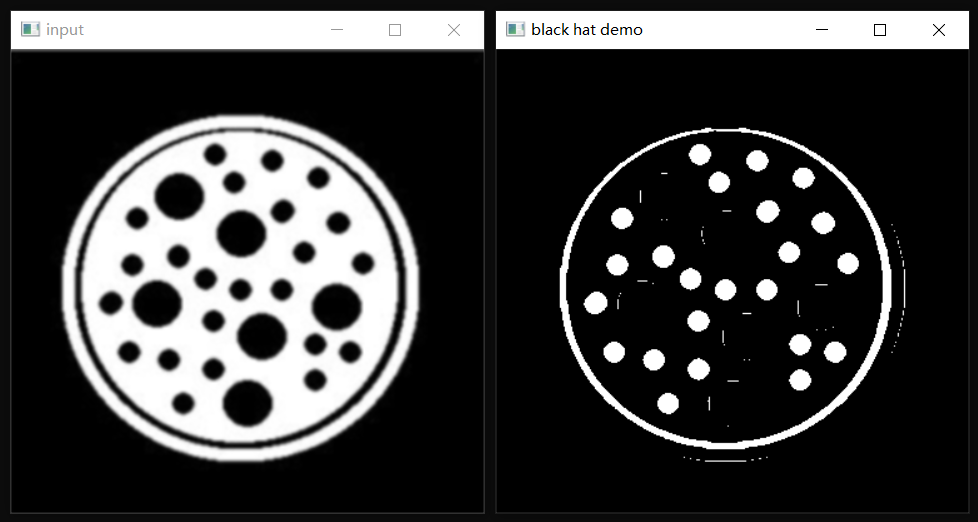

42、更多形态学操作

黑帽与顶帽

- 顶帽:原图减去开操作之后的结果

- 黑帽:闭操作之后的结果减去原图

- 顶帽与黑帽的作用是用来提取图像中微小有用的信息块

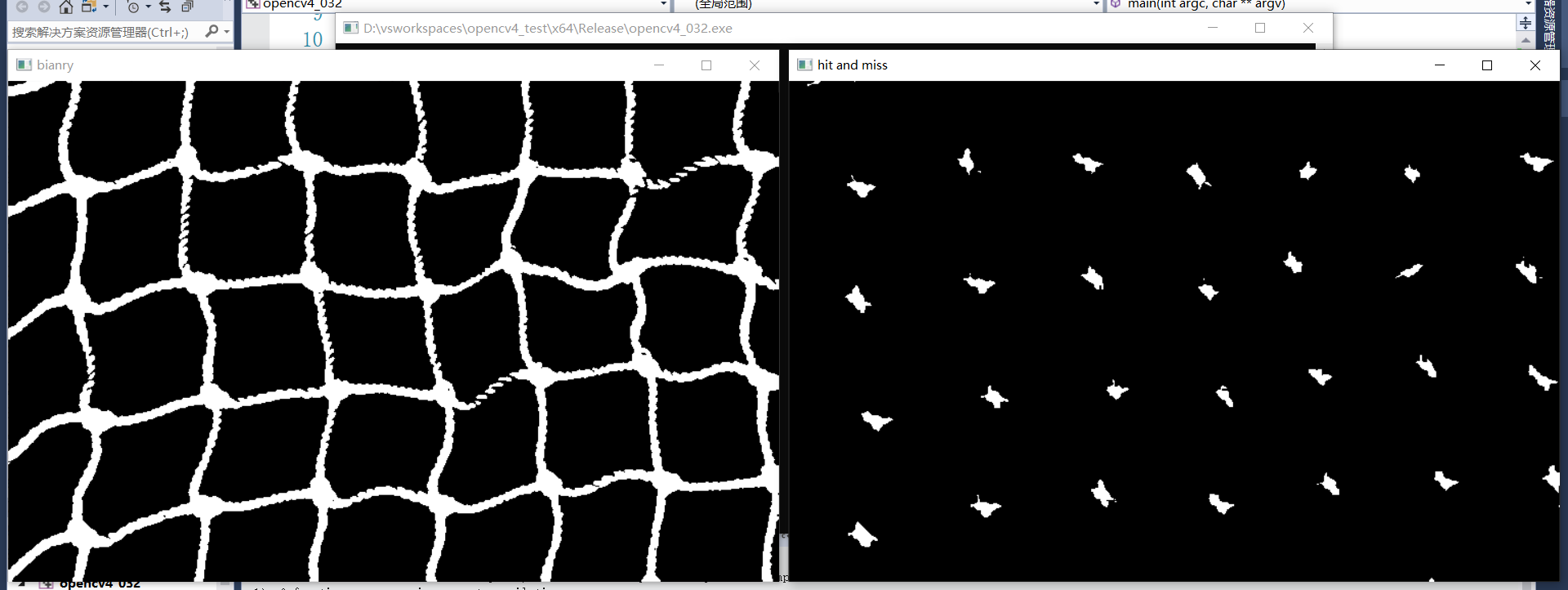

击中击不中(提取与结构元素完全相同的元素)

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

//Mat src = imread("D:/images/morph.png");

//Mat src = imread("D:/images/morph3.png");

Mat src = imread("D:/images/cross.png");

GaussianBlur(src, src, Size(3, 3), 2, 2);

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

imshow("input", gray);

//binarize with an inverted OTSU threshold

threshold(gray, binary, 0, 255, THRESH_BINARY_INV | THRESH_OTSU); //binarize

imshow("binary", binary);

//Mat tophat, blackhat;

//Mat k = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1));

//Mat k = getStructuringElement(MORPH_ELLIPSE, Size(18, 18), Point(-1, -1));

//morphologyEx(binary, tophat, MORPH_TOPHAT, k);

//imshow("top hat demo", tophat);

//morphologyEx(binary, blackhat, MORPH_BLACKHAT, k);

//imshow("black hat demo", blackhat);

Mat hitmiss;

Mat k = getStructuringElement(MORPH_CROSS, Size(15, 15), Point(-1, -1));

morphologyEx(binary, hitmiss, MORPH_HITMISS, k);

imshow("hit and miss", hitmiss);

waitKey(0);

destroyAllWindows();

return 0;

}

Results:

1. Top-hat (the clutter removed by opening)

2. Black-hat (the regions filled in by closing)

3. Hit-or-miss

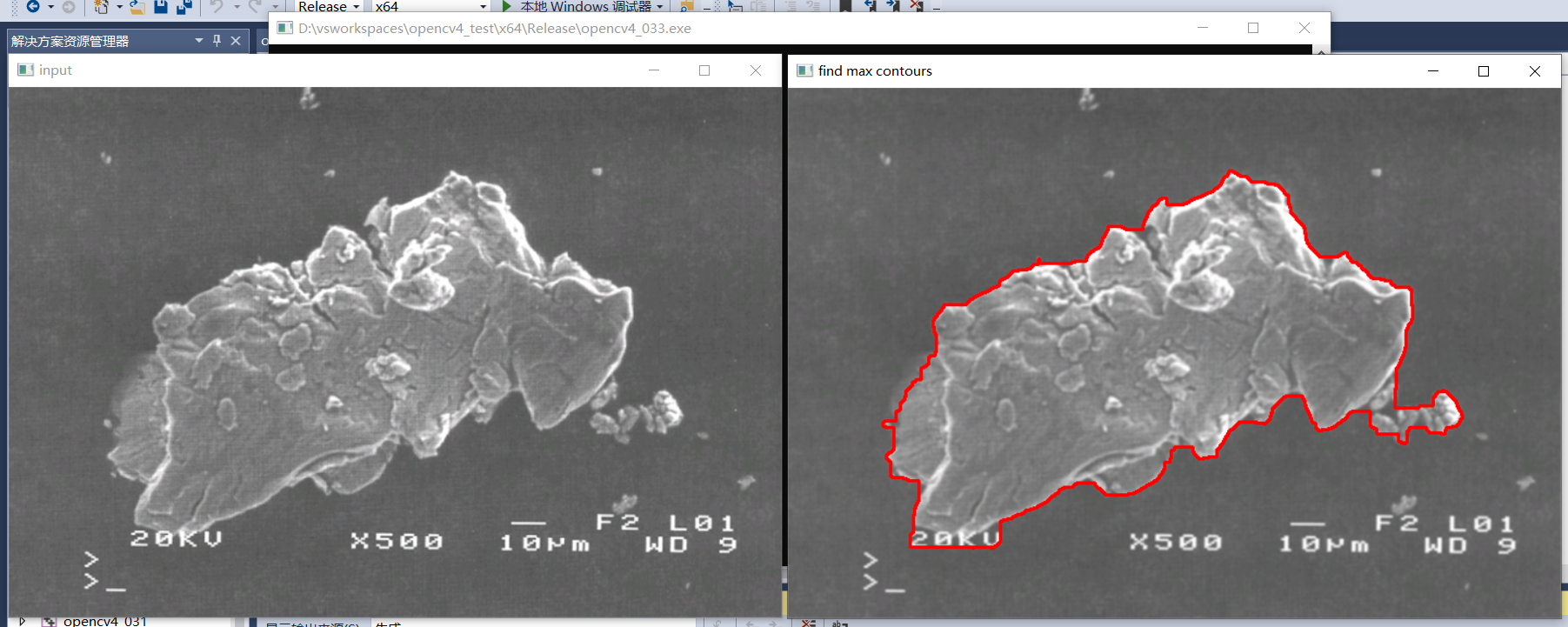

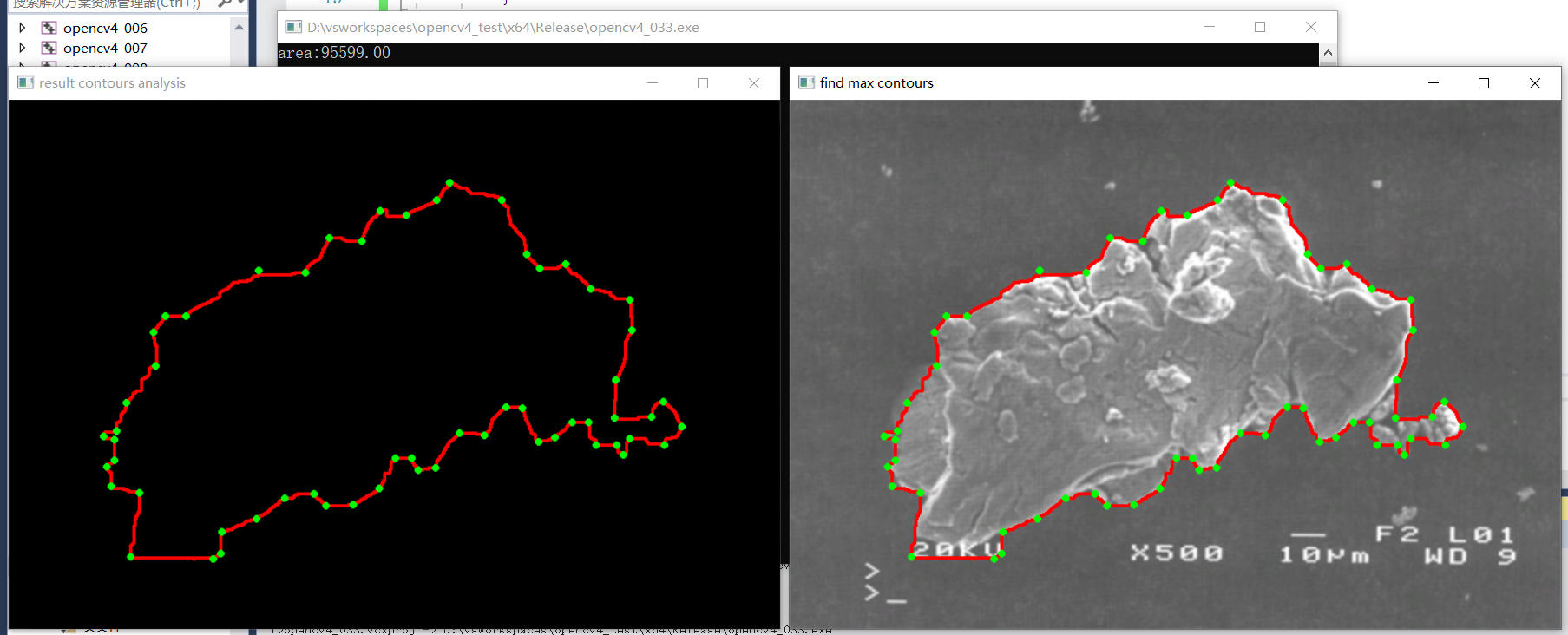

43. Image Analysis: Case Study 1

- Extract the largest contour of the nebula and its area

- Extract the encoded points / extreme points

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

RNG rng(12345);

int main(int argc, char** argv) {

Mat src = imread("D:/images/case6.png");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

//binarize

GaussianBlur(src, src, Size(3, 3), 0); //denoising with a Gaussian blur before segmentation is recommended

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

//closing

Mat se = getStructuringElement(MORPH_RECT, Size(15, 15), Point(-1, -1));

morphologyEx(binary, binary, MORPH_CLOSE, se);

imshow("binary", binary);

//find contours

int height = binary.rows;

int width = binary.cols;

vector<vector<Point>> contours;

vector<Vec4i> hirearchy;

//RETR_EXTERNAL retrieves only the outermost contours (contours nested inside them are skipped); RETR_TREE retrieves all contours

findContours(binary, contours, hirearchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point());

double max_area = -1;

int cindex = -1;

for (size_t t = 0; t < contours.size(); t++) {

Rect rect = boundingRect(contours[t]);

if (rect.height >= height || rect.width >= width) { //skip the contour of the image border

continue;

}

double area = contourArea(contours[t]); //area

double length = arcLength(contours[t], true); //perimeter

if (area > max_area) {

max_area = area;

cindex = t;

}

}

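if (cindex < 0) { //no usable contour: indexing contours[cindex] below would be invalid

printf("no contour found");

return -1;

}

drawContours(src, contours, cindex, Scalar(0, 0, 255), 2, 8);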

Mat pts;

approxPolyDP(contours[cindex], pts, 4, true); //polygon approximation yields the extreme points

Mat result = Mat::zeros(src.size(), src.type());

drawContours(result, contours, cindex, Scalar(0, 0, 255), 2, 8);

for (int i = 0; i < pts.rows; i++) {

Vec2i pt = pts.at<Vec2i>(i, 0);

circle(src, Point(pt[0], pt[1]), 2, Scalar(0, 255, 0), 2, 8, 0);

circle(result, Point(pt[0], pt[1]), 2, Scalar(0, 255, 0), 2, 8, 0);

}

imshow("find max contours", src);

imshow("result contours analysis", result);

printf("area:%.2f\n", max_area);

waitKey(0);

destroyAllWindows();

return 0;

}

Results:

1. Finding and drawing the contour with the largest area

2. Finding and drawing the extreme points of the largest contour

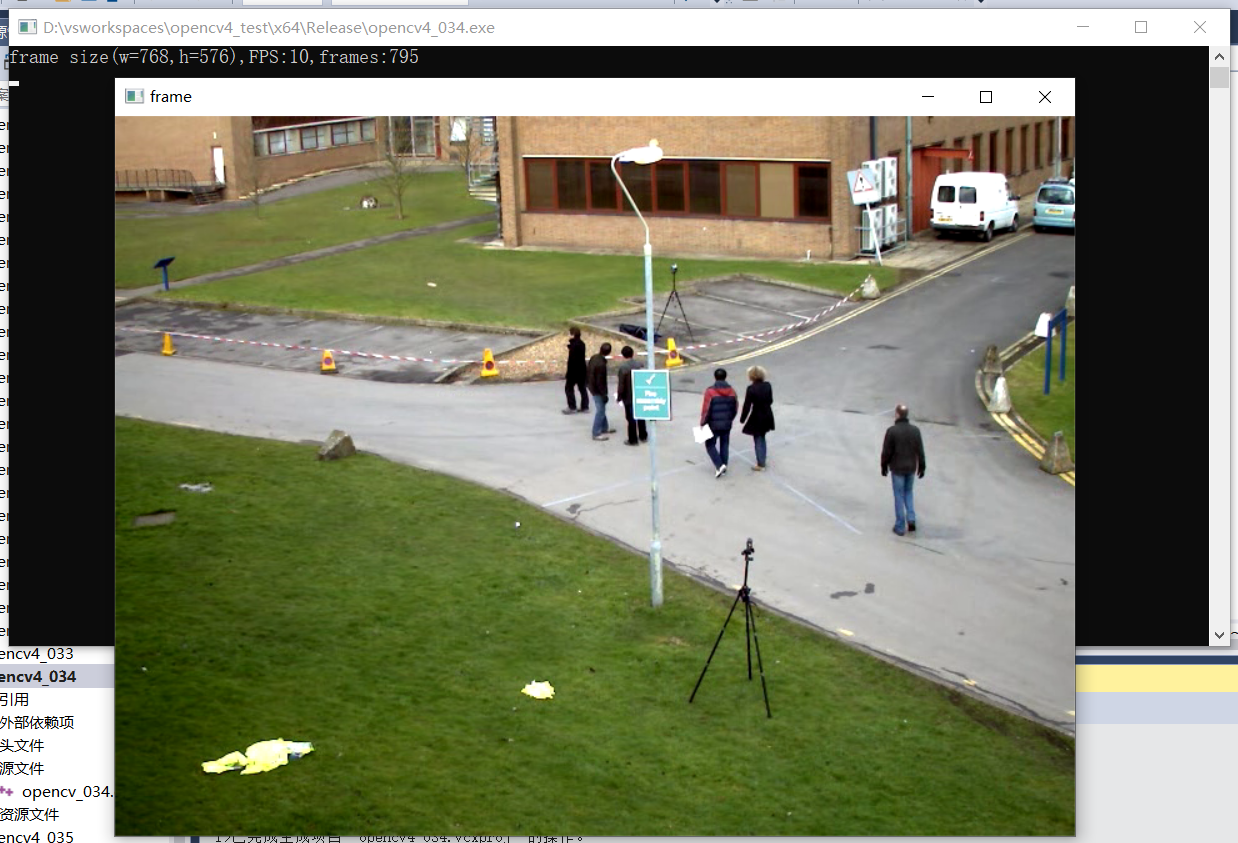

44. Reading and Writing Video

- Video files can be read and written

- Cameras and video streams are supported as sources

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

//VideoCapture capture(0); //open the default camera

//VideoCapture capture("D:/images/vtest.avi"); //open a video file

VideoCapture capture;

capture.open("http://ivi.bupt.edu.cn/hls/cctv6hd.m3u8");

if (!capture.isOpened()) {

printf("could not open the video source...\n");

return -1;

}

namedWindow("frame", WINDOW_AUTOSIZE);

int fps = capture.get(CAP_PROP_FPS);

int width = capture.get(CAP_PROP_FRAME_WIDTH);

int height = capture.get(CAP_PROP_FRAME_HEIGHT);

int num_of_frames = capture.get(CAP_PROP_FRAME_COUNT);

int type = capture.get(CAP_PROP_FOURCC); //codec (FOURCC) of the source video

printf("frame size(w=%d,h=%d),FPS:%d,frames:%d\n", width, height, fps, num_of_frames);

Mat frame;

VideoWriter writer("D:/images/test.mp4", type, fps, Size(width, height), true); //output video: path, codec, frame rate, and frame size

while (true) {

bool ret = capture.read(frame);

if (!ret) break;

imshow("frame", frame);

writer.write(frame); //write each frame

char c = waitKey(50);

if (c == 27) { //ESC

break;

}

}

capture.release(); //release resources once reading and writing are done

writer.release();

waitKey(0);

destroyAllWindows();

}

Results:

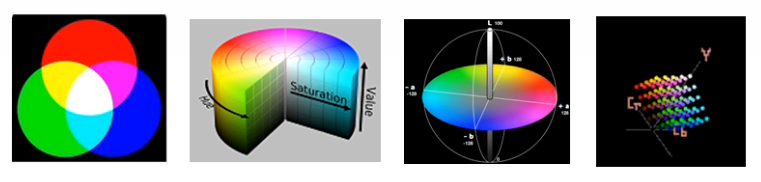

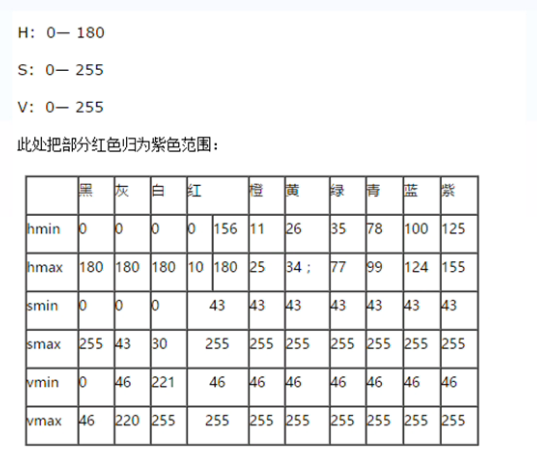

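45. Color Space Conversion

Color spaces

- RGB color space (device dependent: the same RGB triple can look different on different devices)

- HSV color space

- Lab color space

- YCbCr color space

Conversions

- BGR2HSV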
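The BGR to HSV conversion for one 8-bit pixel can be sketched in plain C++. The sketch follows the 8-bit convention where H is stored as degrees divided by 2 (0 to 179) and S and V span 0 to 255; the names `Hsv` and `bgr_to_hsv` are hypothetical, and the rounding is a choice of this sketch that may differ by one unit from `cvtColor`.

```cpp
#include <algorithm>

struct Hsv { int h, s, v; };

// Convert one 8-bit BGR pixel to HSV with H in [0, 180), S and V in [0, 255].
Hsv bgr_to_hsv(int b, int g, int r) {
    const int mx = std::max(b, std::max(g, r));
    const int mn = std::min(b, std::min(g, r));
    const double delta = mx - mn;
    double hue = 0.0;  // in degrees, [0, 360)
    if (delta > 0) {
        if (mx == r)      hue = 60.0 * (g - b) / delta;
        else if (mx == g) hue = 120.0 + 60.0 * (b - r) / delta;
        else              hue = 240.0 + 60.0 * (r - g) / delta;
        if (hue < 0) hue += 360.0;
    }
    Hsv out;
    out.h = static_cast<int>(hue / 2.0 + 0.5) % 180;  // halve so the value fits in 8 bits
    out.s = (mx == 0) ? 0 : static_cast<int>(255.0 * delta / mx + 0.5);
    out.v = mx;
    return out;
}
```

Pure green maps to H = 60, which lies inside the hue range 35 to 77 that the listing below passes to `inRange`.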

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

VideoCapture capture("D:/images/vtest.avi"); //open a video file

if (!capture.isOpened()) {

printf("could not open the video file...\n");

return -1;

}

namedWindow("frame", WINDOW_AUTOSIZE);

Mat frame, hsv, Lab, mask, result;

while (true) {

bool ret = capture.read(frame);

if (!ret) break;

imshow("frame", frame);

cvtColor(frame, hsv, COLOR_BGR2HSV);

//cvtColor(frame, Lab, COLOR_BGR2Lab);

imshow("hsv", hsv);

inRange(hsv, Scalar(35, 43, 46), Scalar(77, 255, 255), mask); //select the pixels inside the given HSV range (green)

bitwise_not(mask, mask); //invert the mask so that everything except the selected pixels is kept

bitwise_and(frame, frame, result, mask);

imshow("mask", mask);

imshow("result", result);

//imshow("Lab", Lab);

char c = waitKey(50);

if (c == 27) { //ESC

break;

}

}

capture.release(); //release resources once reading is done

//writer.release();

waitKey(0);

destroyAllWindows();

}

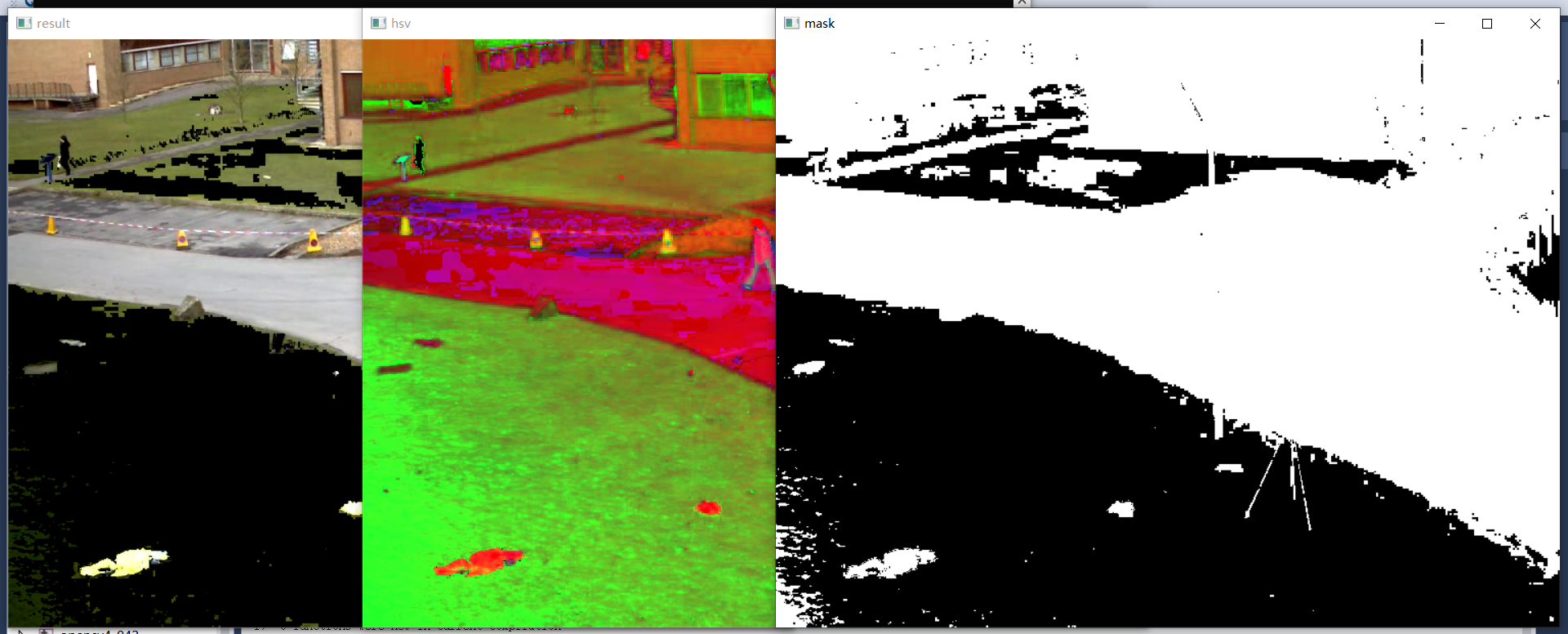

Results:

1. Converting a BGR image to the HSV and Lab color spaces

2. Removing the green parts using the HSV image

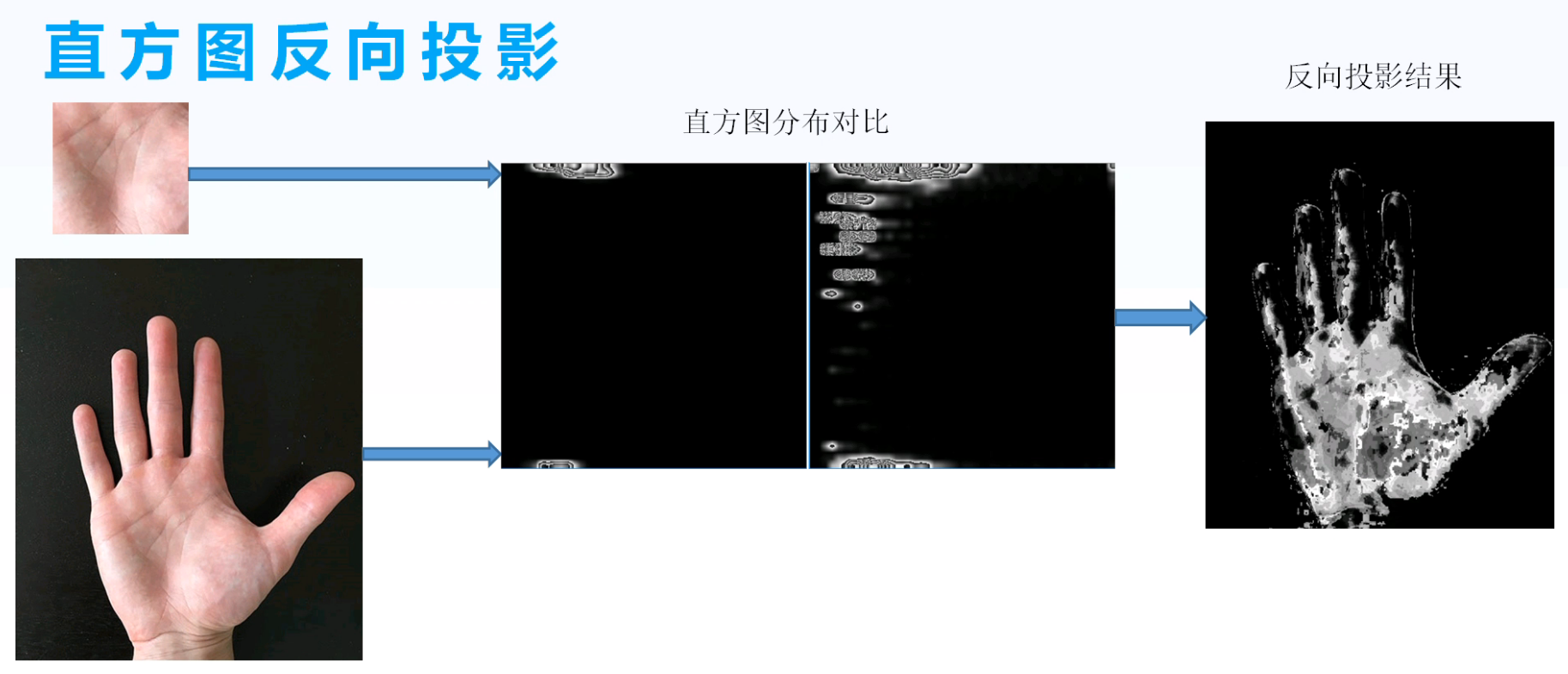

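46. Histogram Back Projection

Basic principle

- Compute the histogram

- Compute the ratio

- Blur by convolution

- Project back to the output

Example of the search process:

Suppose we have a 100x100 input image and a 10x10 template image. The search goes as follows:

(1) Starting from the top-left corner (0,0) of the input image, cut out a temporary patch from (0,0) to (10,10);

(2) Build the histogram of the temporary patch;

(3) Compare the histogram of the patch with the histogram of the template, and call the comparison result c;

(4) The comparison result c becomes the pixel value at (0,0) of the result image;

(5) Cut the patch from (0,1) to (10,11) of the input image, compare histograms, and record the result in the result image;

(6) Repeat steps (1)~(5) until the bottom-right corner of the input image is reached.

The back projection result therefore holds the histogram comparison result for the patch starting at each input pixel. It can be viewed as a two-dimensional floating-point array, a two-dimensional matrix, or a single-channel floating-point image. Note that this describes the patch-based variant; calcBackProject, used in the code below, works per pixel: each pixel is replaced by the value of the model-histogram bin that the pixel falls into.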
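The per-pixel form of back projection can be sketched in plain C++ on a single channel. The function name `back_project`, the single channel, and scaling by the histogram peak (rather than a full min-max normalization) are simplifying assumptions of this sketch; `bins` is assumed to be at least 1.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// 1-D back projection: build the model histogram, scale it to 0..255,
// then replace each image value by the scaled count of the bin it falls in.
std::vector<std::uint8_t> back_project(const std::vector<std::uint8_t>& model,
                                       const std::vector<std::uint8_t>& image,
                                       int bins) {
    std::vector<double> hist(bins, 0.0);
    for (std::size_t i = 0; i < model.size(); ++i)
        hist[model[i] * bins / 256] += 1.0;
    const double peak = *std::max_element(hist.begin(), hist.end());
    std::vector<std::uint8_t> out(image.size(), 0);
    if (peak <= 0.0) return out;  // empty model: nothing to project
    for (std::size_t i = 0; i < image.size(); ++i) {
        const double score = hist[image[i] * bins / 256] * 255.0 / peak;
        out[i] = static_cast<std::uint8_t>(score + 0.5);
    }
    return out;
}
```

Values that are frequent in the model come out bright and values absent from the model come out black, which is what makes the result usable as a likelihood map for the sampled color.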

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat model = imread("D:/images/sample.png");

Mat src = imread("D:/images/target.png");

if (src.empty() || model.empty()) {

printf("could not find image files");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

imshow("sample", model);

Mat model_hsv, image_hsv;

cvtColor(model, model_hsv, COLOR_BGR2HSV);

cvtColor(src, image_hsv, COLOR_BGR2HSV);

int h_bins = 24, s_bins = 24;

int histSize[] = { h_bins,s_bins };

int channels[] = { 0,1 };

Mat roiHist;

float h_range[] = { 0,180 };

float s_range[] = { 0,256 }; //the upper bound of a histogram range is exclusive

const float* ranges[] = { h_range,s_range };

calcHist(&model_hsv, 1, channels, Mat(), roiHist, 2, histSize, ranges, true, false);

normalize(roiHist, roiHist, 0, 255, NORM_MINMAX, -1, Mat()); //normalize to 0-255

MatND backproj;

calcBackProject(&image_hsv, 1, channels, roiHist, backproj, ranges, 1.0);

imshow("back projection demo", backproj);

waitKey(0);

destroyAllWindows();

return 0;

}

Results:

47、Harris角点检测

角点:在x方向和y方向都有梯度变化最大的像素点;边缘:在一个方向上像素梯度变化最大。

Harris角点检测原理:

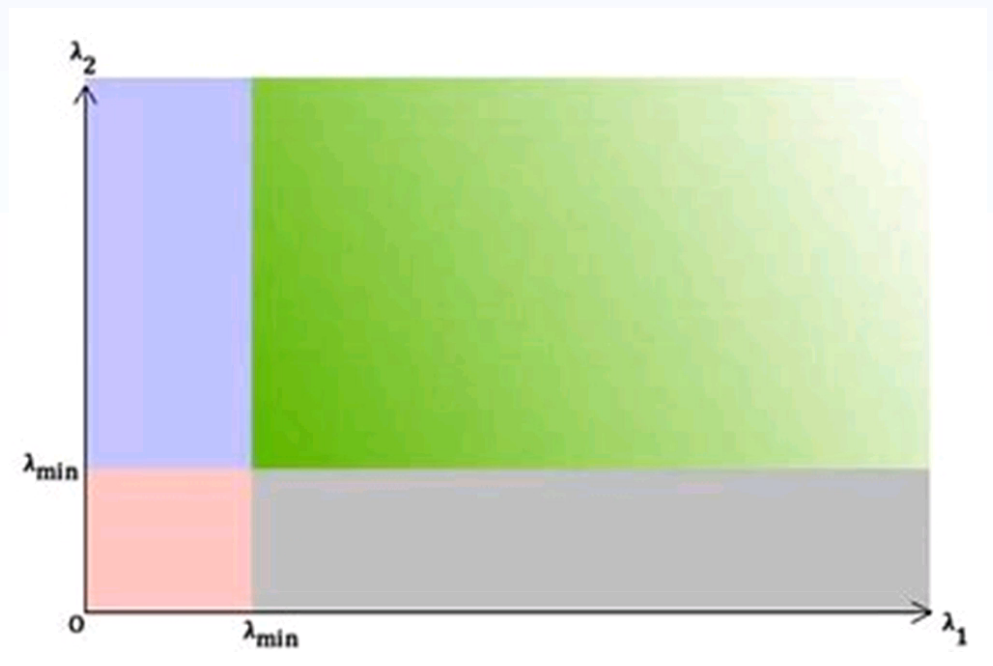

shi-tomasi角点检测:
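Both detectors score a pixel from the 2x2 structure tensor M = [[Sxx, Sxy], [Sxy, Syy]] of summed gradient products in a window. Harris uses R = det(M) - k·trace(M)², and Shi-Tomasi uses the smaller eigenvalue of M. The plain C++ sketch below evaluates both scores for given tensor entries; the function names are hypothetical and the gradient computation itself is left out.

```cpp
#include <cmath>

// Harris response R = det(M) - k * trace(M)^2 for M = [[sxx, sxy], [sxy, syy]].
double harris_response(double sxx, double sxy, double syy, double k) {
    const double det = sxx * syy - sxy * sxy;
    const double trace = sxx + syy;
    return det - k * trace * trace;
}

// Shi-Tomasi response: the smaller eigenvalue of the same matrix.
double shi_tomasi_response(double sxx, double sxy, double syy) {
    const double half_trace = 0.5 * (sxx + syy);
    const double half_diff = 0.5 * (sxx - syy);
    return half_trace - std::sqrt(half_diff * half_diff + sxy * sxy);
}
```

A flat patch gives R = 0, an edge (gradient in one direction only) gives a negative R, and a corner (gradient in both directions) gives a positive R, matching the corner and edge definitions above.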

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

void harris_demo(Mat &image);

int main(int argc, char** argv) {

VideoCapture capture("D:/images/vtest.avi"); //open a video file

if (!capture.isOpened()) {

printf("could not open the video file...\n");

return -1;

}

namedWindow("frame", WINDOW_AUTOSIZE);

Mat frame;

while (true) {

bool ret = capture.read(frame);

if (!ret) break;

imshow("frame", frame);

harris_demo(frame);

imshow("result", frame);

char c = waitKey(50);

if (c == 27) { //ESC

break;

}

}

capture.release(); //release the capture once reading is finished

waitKey(0);

destroyAllWindows();

return 0;

}

void harris_demo(Mat &image) {

Mat gray;

cvtColor(image, gray, COLOR_BGR2GRAY);

Mat dst;

double k = 0.04;

int blocksize = 2; //neighborhood size used to accumulate the gradient matrix

int ksize = 3; //aperture size of the Sobel operator

cornerHarris(gray, dst, blocksize, ksize, k);

Mat dst_norm = Mat::zeros(dst.size(), dst.type());

normalize(dst, dst_norm, 0, 255, NORM_MINMAX, -1, Mat());

convertScaleAbs(dst_norm, dst_norm); //convert to 8-bit unsigned data (CV_8UC1)

//draw corners

RNG rng(12345);

for (int row = 0; row < image.rows; row++) {

for (int col = 0; col < image.cols; col++) {

int rsp = dst_norm.at<uchar>(row, col);

if (rsp > 150) {

int b = rng.uniform(0, 255);

int g = rng.uniform(0, 255);

int r = rng.uniform(0, 255);

circle(image, Point(col, row), 5, Scalar(b, g, r), 2, 8, 0);

}

}

}

}

Result:

48. Shi-Tomasi corner detection

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

void shitomas_demo(Mat &image);

int main(int argc, char** argv) {

VideoCapture capture("D:/images/vtest.avi"); //open a video file (pass a device index such as 0 to use a camera)

if (!capture.isOpened()) {

printf("could not open the video file...\n");

return -1;

}

namedWindow("frame", WINDOW_AUTOSIZE);

Mat frame;

while (true) {

bool ret = capture.read(frame);

if (!ret) break;

imshow("frame", frame);

//harris_demo(frame);

shitomas_demo(frame);

imshow("result", frame);

char c = waitKey(50);

if (c == 27) { //ESC

break;

}

}

capture.release(); //release the capture once reading is finished

waitKey(0);

destroyAllWindows();

return 0;

}

void shitomas_demo(Mat &image) {

Mat gray;

cvtColor(image, gray, COLOR_BGR2GRAY);

vector<Point2f> corners;

double quality_level = 0.01; //minimum accepted corner quality, relative to the strongest corner's score

RNG rng(12345);

goodFeaturesToTrack(gray, corners, 200, quality_level, 3, Mat(), 3, false);

for (size_t i = 0; i < corners.size(); i++) {

int b = rng.uniform(0, 255);

int g = rng.uniform(0, 255);

int r = rng.uniform(0, 255);

circle(image, corners[i], 5, Scalar(b, g, r), 2, 8, 0);

}

}

Result:

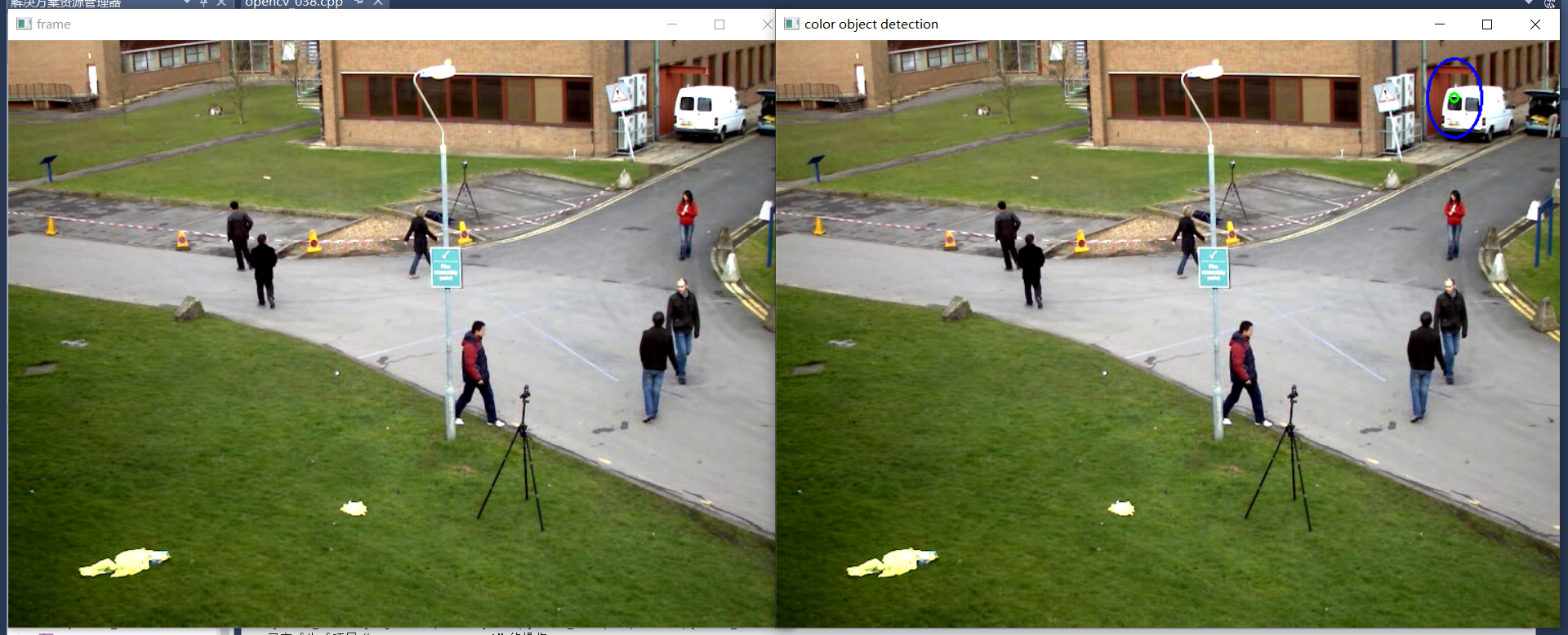

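49. Color-based object tracking

Color analysis and extraction on video frames

- Inspect the pixel value distribution of a frame (for example with Image Watch)

- Choose a suitable range and use it to obtain a mask

- Run contour analysis on the mask image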
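The mask step in the list above boils down to an inclusive per-channel range test. The sketch below shows that rule on a plain list of HSV pixels, without OpenCV. Hsv and range_mask are hypothetical names introduced for illustration, and the 8-bit HSV layout (H in [0, 180), S and V in [0, 255]) is assumed to match what cvtColor produces with COLOR_BGR2HSV.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// One HSV pixel: {H, S, V}, assuming H in [0, 180) and S, V in [0, 255].
using Hsv = std::array<int, 3>;

// Returns 255 where lower <= pixel <= upper holds on every channel, and 0 elsewhere.
std::vector<unsigned char> range_mask(const std::vector<Hsv>& pixels,
                                      const Hsv& lower, const Hsv& upper) {
    for (int ch = 0; ch < 3; ++ch) assert(lower[ch] <= upper[ch]);
    std::vector<unsigned char> mask(pixels.size(), 0);
    for (std::size_t i = 0; i < pixels.size(); ++i) {
        bool inside = true;
        for (int ch = 0; ch < 3; ++ch)
            inside = inside && lower[ch] <= pixels[i][ch] && pixels[i][ch] <= upper[ch];
        mask[i] = inside ? 255 : 0;
    }
    return mask;
}
```

With the bounds used in the listing that follows, {0, 43, 46} to {10, 255, 255}, a pixel such as {5, 200, 200} is kept while {60, 200, 200} is rejected. Red hues near the top of the H range fall outside these bounds, so a second range may be needed if the target contains them.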

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

void process_frame(Mat &image);

int main(int argc, char** argv) {

VideoCapture capture("D:/images/vtest.avi"); //open a video file (pass a device index such as 0 to use a camera)

if (!capture.isOpened()) {

printf("could not open the video file...\n");

return -1;

}

namedWindow("frame", WINDOW_AUTOSIZE);

Mat frame;

while (true) {

bool ret = capture.read(frame);

if (!ret) break;

imshow("frame", frame);

process_frame(frame);

char c = waitKey(50);

if (c == 27) { //ESC

break;

}

}

capture.release(); //release the capture once reading is finished

waitKey(0);

destroyAllWindows();

return 0;

}

void process_frame(Mat &image) {

Mat hsv, mask;

cvtColor(image, hsv, COLOR_BGR2HSV);

inRange(hsv, Scalar(0, 43, 46), Scalar(10, 255, 255), mask);

//imshow("mask", mask);

Mat se = getStructuringElement(MORPH_RECT, Size(3, 3));

morphologyEx(mask, mask, MORPH_OPEN, se);

//imshow("result", mask);

vector<vector<Point>> contours;

vector<Vec4i> hierarchy;

findContours(mask, contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point());

int index = -1;

double max_area = 0;

for (size_t t = 0; t < contours.size(); t++) {

double area = contourArea(contours[t]);

if (area > max_area) {

max_area = area;

index = static_cast<int>(t);

}

}

//fit the largest contour and draw the result

if (index >= 0) {

RotatedRect rrt = minAreaRect(contours[index]);

ellipse(image, rrt, Scalar(255, 0, 0), 2, 8);

circle(image, rrt.center, 4, Scalar(0, 255, 0), 2, 8, 0);

}

imshow("color object detection", image);

}

Result:

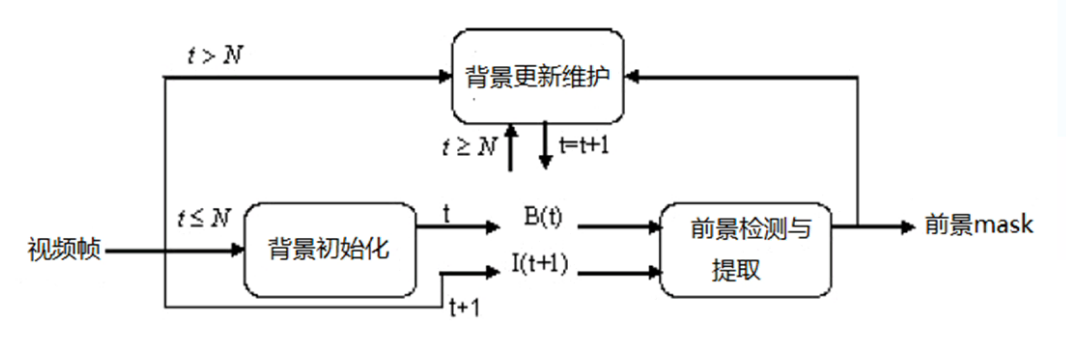

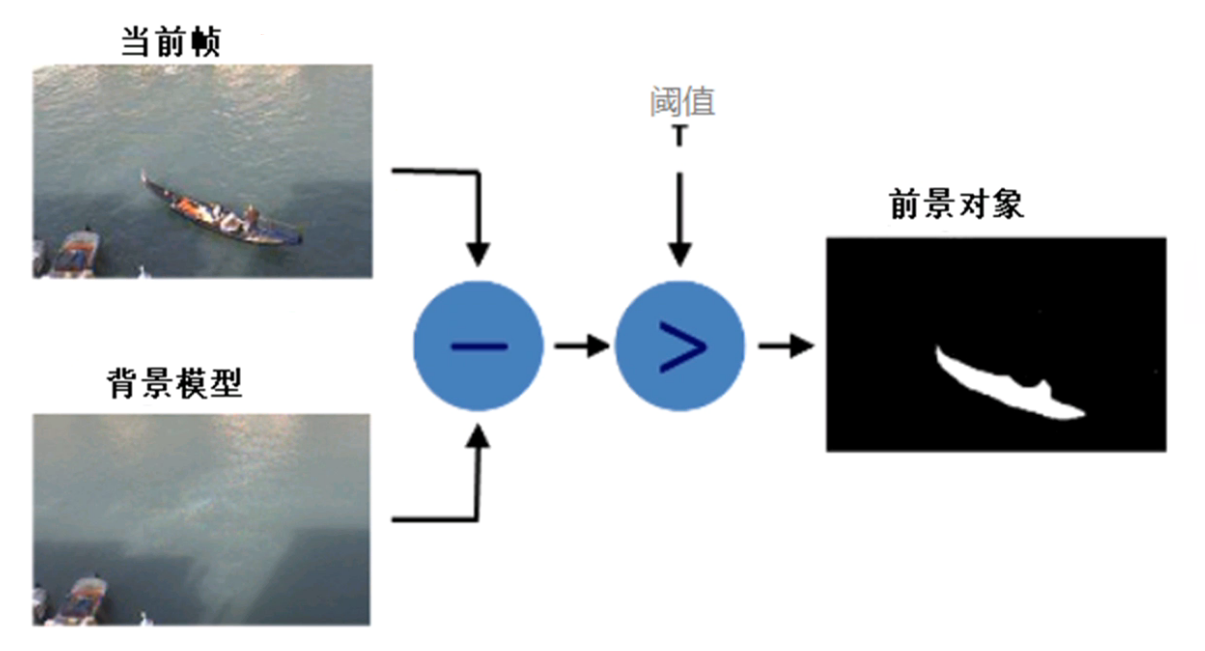

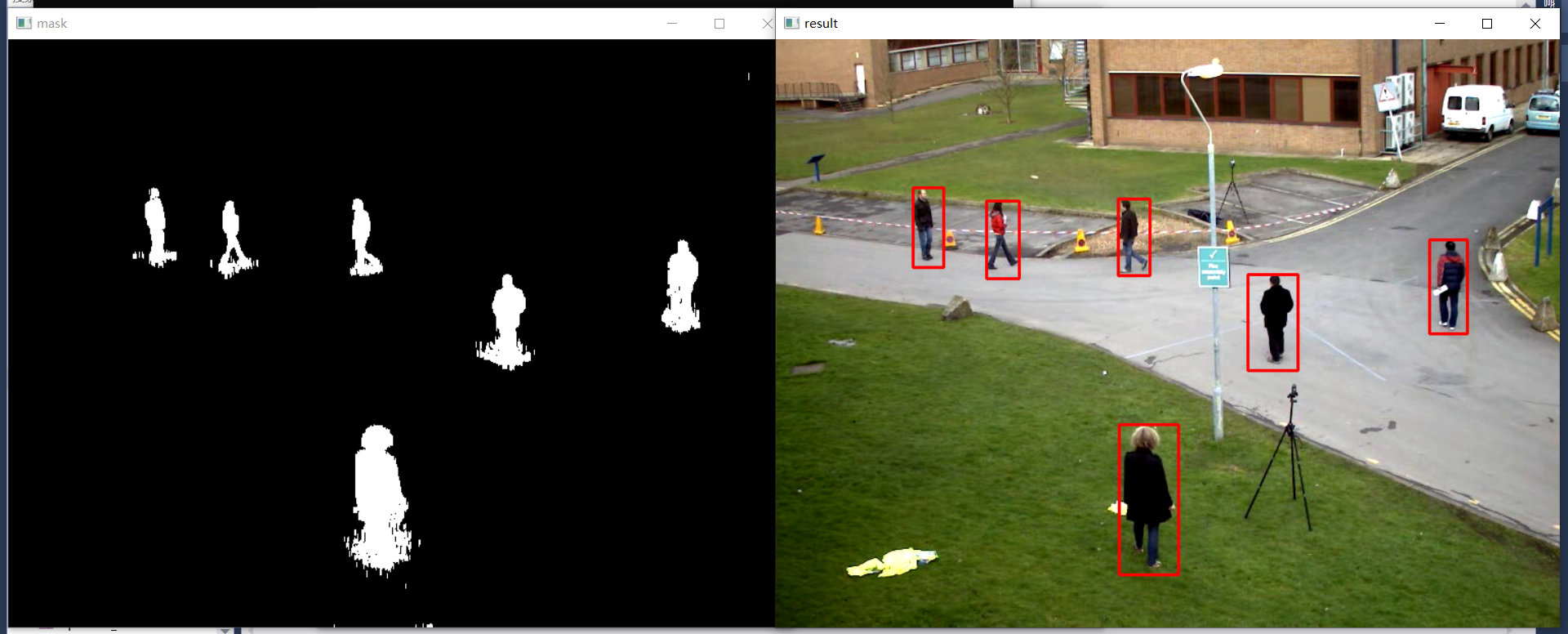

50. Background analysis

Common algorithms

- KNN

- GMM

- Fuzzy Integral

Basic workflow

- Background initialization

- Foreground detection

- Background update/maintenance
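The three workflow stages can be sketched with a much simpler model than KNN or GMM. The example below uses a per-pixel running average, which is a deliberately swapped-in technique for illustration and not what createBackgroundSubtractorMOG2 does internally. The class name RunningAverageBackground and its parameters alpha and threshold are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Minimal running-average background model over a flat array of gray values.
class RunningAverageBackground {
public:
    // alpha: learning rate in (0, 1]; threshold: minimum absolute difference for foreground.
    RunningAverageBackground(double alpha, double threshold)
        : alpha_(alpha), threshold_(threshold) {
        assert(alpha > 0.0 && alpha <= 1.0);
    }

    // Returns a 0/255 foreground mask for the frame and updates the background model.
    std::vector<unsigned char> apply(const std::vector<double>& frame) {
        if (background_.empty()) background_ = frame;  // stage 1: initialize from the first frame
        assert(frame.size() == background_.size());
        std::vector<unsigned char> mask(frame.size(), 0);
        for (std::size_t i = 0; i < frame.size(); ++i) {
            // stage 2: a pixel far from the background estimate is foreground
            const bool fg = std::fabs(frame[i] - background_[i]) > threshold_;
            mask[i] = fg ? 255 : 0;
            // stage 3: blend only background pixels into the model
            if (!fg) background_[i] += alpha_ * (frame[i] - background_[i]);
        }
        return mask;
    }

    const std::vector<double>& background() const { return background_; }

private:
    double alpha_;
    double threshold_;
    std::vector<double> background_;
};
```

Skipping the update for foreground pixels is one design choice among several. It keeps moving objects from bleeding into the background, at the cost of never absorbing an object that stops moving.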

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

void process_frame(Mat &image);

void process2(Mat &image);

auto pMOG2 = createBackgroundSubtractorMOG2(500, 16, false); //history=500, varThreshold=16, detectShadows=false

int main(int argc, char** argv) {

VideoCapture capture("D:/images/vtest.avi"); //open a video file (pass a device index such as 0 to use a camera)

if (!capture.isOpened()) {

printf("could not open the video file...\n");

return -1;

}

namedWindow("frame", WINDOW_AUTOSIZE);

Mat frame;

while (true) {

bool ret = capture.read(frame);

if (!ret) break;

imshow("frame", frame);

//process_frame(frame);

process2(frame);

char c = waitKey(50);

if (c == 27) { //ESC

break;

}

}

capture.release(); //release the capture once reading is finished

waitKey(0);

destroyAllWindows();

return 0;

}

void process2(Mat &image) {

Mat mask;

pMOG2->apply(image, mask); //extract the foreground mask

//morphological opening to remove small noise

Mat se = getStructuringElement(MORPH_RECT, Size(1, 5), Point(-1, -1));

morphologyEx(mask, mask, MORPH_OPEN, se);

imshow("mask", mask);

vector<vector<Point>> contours;

vector<Vec4i> hierarchy;

findContours(mask, contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point());

for (size_t t = 0; t < contours.size(); t++) {

double area = contourArea(contours[t]);

if (area < 200) {

continue;

}

Rect box = boundingRect(contours[t]);

RotatedRect rrt = minAreaRect(contours[t]);

//rectangle(image, box, Scalar(0, 0, 255), 2, 8, 0);

circle(image, rrt.center, 2, Scalar(255, 0, 0), 2, 8);

ellipse(image, rrt, Scalar(0, 0, 255), 2, 8);

}

imshow("result", image);

}

void process_frame(Mat &image) {

Mat mask, bg_image;

pMOG2->apply(image, mask); //get the foreground mask

pMOG2->getBackgroundImage(bg_image);

imshow("mask", mask);

imshow("background", bg_image);

}

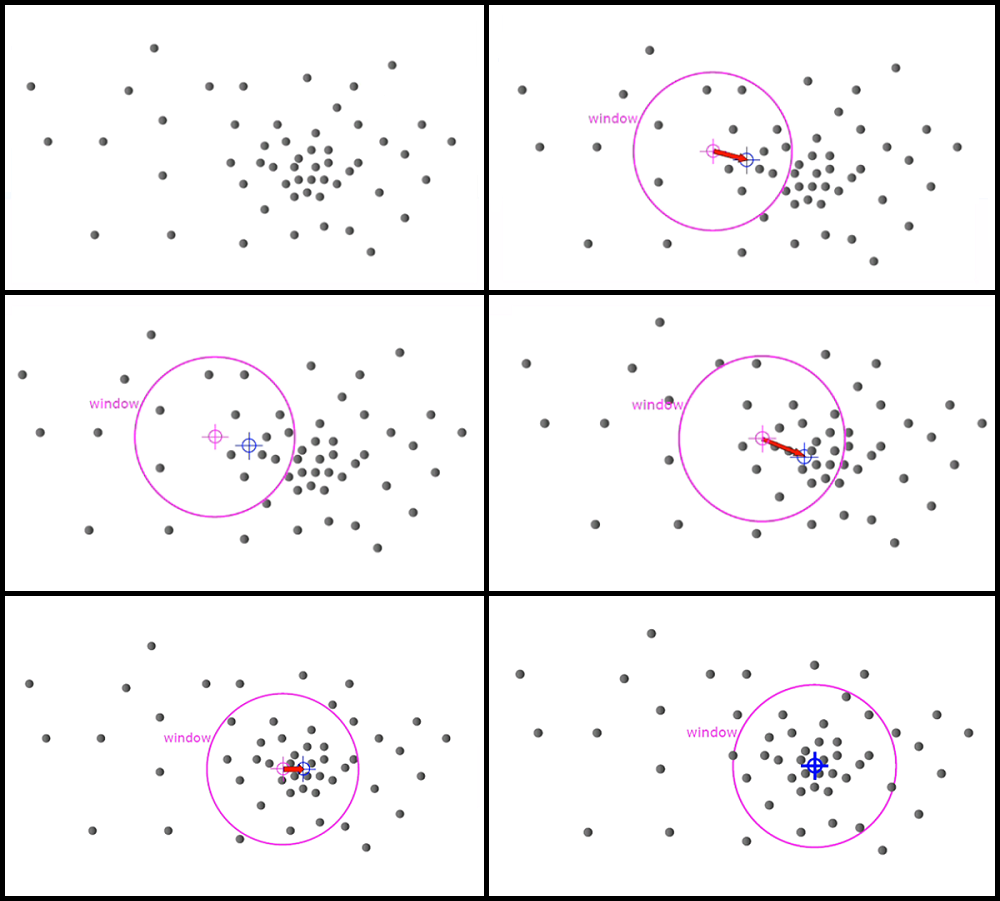

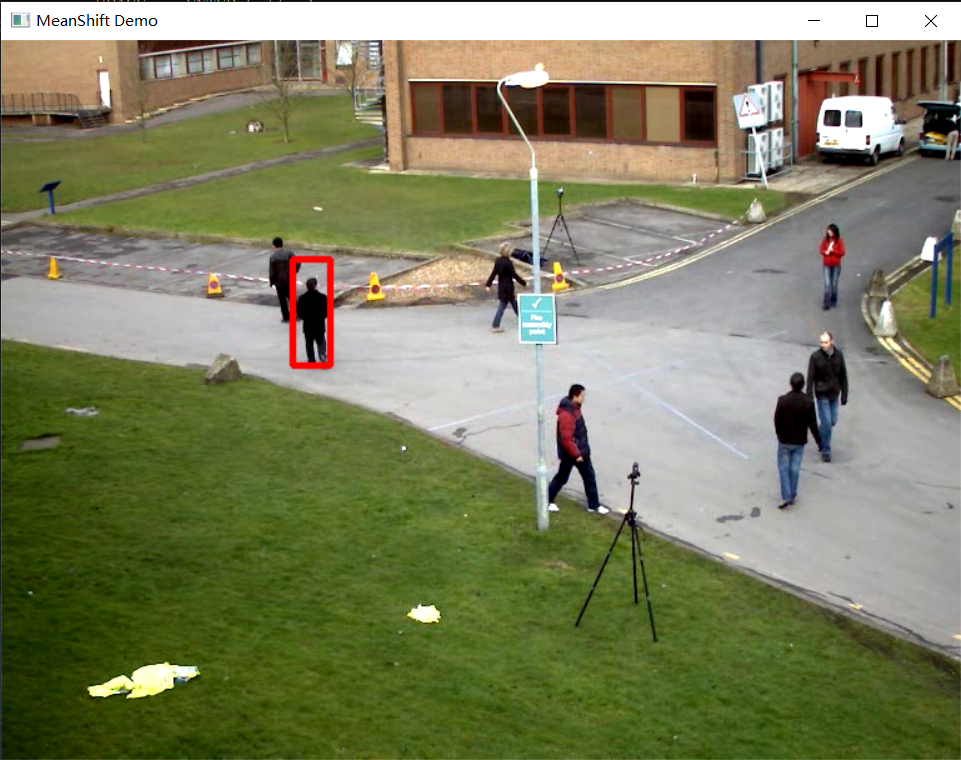

Result: