confluent kafka connect remote debugging

1. Deep inside kafka-connect startup

To begin with, let's take a look at how Kafka Connect starts.

1.1 start command

# background (daemon) mode

cd /home/lenmom/workspace/software/confluent-community-5.1.-2.11/ && ./bin/connect-distributed -daemon ./etc/schema-registry/connect-avro-distributed.properties

# or console mode

cd /home/lenmom/workspace/software/confluent-community-5.1.-2.11/ && ./bin/connect-distributed ./etc/schema-registry/connect-avro-distributed.properties

The start command is connect-distributed, so let's look at the content of that file:

#!/bin/sh

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

if [ $# -lt 1 ];

then

echo "USAGE: $0 [-daemon] connect-distributed.properties"

exit 1

fi

base_dir=$(dirname $0)

###

### Classpath additions for Confluent Platform releases (LSB-style layout)

###

#cd -P deals with symlink from /bin to /usr/bin

java_base_dir=$( cd -P "$base_dir/../share/java" && pwd )

# confluent-common: required by kafka-serde-tools

# kafka-serde-tools (e.g. Avro serializer): bundled with confluent-schema-registry package

for library in "kafka" "confluent-common" "kafka-serde-tools" "monitoring-interceptors"; do

dir="$java_base_dir/$library"

if [ -d "$dir" ]; then

classpath_prefix="$CLASSPATH:"

if [ "x$CLASSPATH" = "x" ]; then

classpath_prefix=""

fi

CLASSPATH="$classpath_prefix$dir/*"

fi

done

if [ "x$KAFKA_LOG4J_OPTS" = "x" ]; then

LOG4J_CONFIG_NORMAL_INSTALL="/etc/kafka/connect-log4j.properties"

LOG4J_CONFIG_ZIP_INSTALL="$base_dir/../etc/kafka/connect-log4j.properties"

if [ -e "$LOG4J_CONFIG_NORMAL_INSTALL" ]; then # Normal install layout

KAFKA_LOG4J_OPTS="-Dlog4j.configuration=file:${LOG4J_CONFIG_NORMAL_INSTALL}"

elif [ -e "${LOG4J_CONFIG_ZIP_INSTALL}" ]; then # Simple zip file layout

KAFKA_LOG4J_OPTS="-Dlog4j.configuration=file:${LOG4J_CONFIG_ZIP_INSTALL}"

else # Fallback to normal default

KAFKA_LOG4J_OPTS="-Dlog4j.configuration=file:$base_dir/../config/connect-log4j.properties"

fi

fi

export KAFKA_LOG4J_OPTS

if [ "x$KAFKA_HEAP_OPTS" = "x" ]; then

export KAFKA_HEAP_OPTS="-Xms256M -Xmx2G"

fi

EXTRA_ARGS=${EXTRA_ARGS-'-name connectDistributed'}

COMMAND=$1

case $COMMAND in

-daemon)

EXTRA_ARGS="-daemon "$EXTRA_ARGS

shift

;;

*)

;;

esac

export CLASSPATH

exec $(dirname $0)/kafka-run-class $EXTRA_ARGS org.apache.kafka.connect.cli.ConnectDistributed "$@"

To start the Kafka Connect process, the script calls another file, kafka-run-class, so let's go to kafka-run-class.
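Before moving on, the wrapper's argument handling can be looked at in isolation. The following is a stripped-down re-creation, not the script itself: show_launch_command is a hypothetical helper that only prints the command the wrapper would exec, so nothing is actually launched.

```shell
# Simplified re-creation of the argument handling in connect-distributed.
# show_launch_command is a made-up name; it prints the command instead of exec'ing it.
show_launch_command() {
  # Same default as the wrapper: used only when EXTRA_ARGS is unset.
  extra_args=${EXTRA_ARGS-'-name connectDistributed'}
  case "${1-}" in
    -daemon)
      # Move the flag in front of the other extra arguments and drop it from "$@".
      extra_args="-daemon $extra_args"
      shift
      ;;
  esac
  echo "kafka-run-class $extra_args org.apache.kafka.connect.cli.ConnectDistributed $*"
}

show_launch_command -daemon ./etc/schema-registry/connect-avro-distributed.properties
show_launch_command ./etc/schema-registry/connect-avro-distributed.properties
```

When -daemon is the first argument, it is moved into EXTRA_ARGS and shifted out of the remaining arguments, so kafka-run-class receives it before the class name and the properties file stays last.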

1.2 kafka-run-class

.

.

.

.

# Launch mode

if [ "x$DAEMON_MODE" = "xtrue" ]; then

nohup $JAVA $KAFKA_HEAP_OPTS $KAFKA_JVM_PERFORMANCE_OPTS $KAFKA_GC_LOG_OPTS $KAFKA_JMX_OPTS $KAFKA_LOG4J_OPTS -cp $CLASSPATH $KAFKA_OPTS "$@" > "$CONSOLE_OUTPUT_FILE" 2>&1 < /dev/null &

else

exec $JAVA $KAFKA_HEAP_OPTS $KAFKA_JVM_PERFORMANCE_OPTS $KAFKA_GC_LOG_OPTS $KAFKA_JMX_OPTS $KAFKA_LOG4J_OPTS -cp $CLASSPATH $KAFKA_OPTS "$@"

fi

At the end of this file, the script launches the Connect process by invoking the java command. This is the place where we can add the options for remote debugging.

2. copy kafka-run-class and rename the copy to kafka-connect-debugging

cp bin/kafka-run-class bin/kafka-connect-debugging

Modify the launch command in kafka-connect-debugging to add Java remote debugging support:

vim bin/kafka-connect-debugging

The launch command becomes:

.

.

.

export JPDA_OPTS="-agentlib:jdwp=transport=dt_socket,address=8888,server=y,suspend=y"

#export JPDA_OPTS=""

# Launch mode

if [ "x$DAEMON_MODE" = "xtrue" ]; then

nohup $JAVA $JPDA_OPTS $KAFKA_HEAP_OPTS $KAFKA_JVM_PERFORMANCE_OPTS $KAFKA_GC_LOG_OPTS $KAFKA_JMX_OPTS $KAFKA_LOG4J_OPTS -cp $CLASSPATH $KAFKA_OPTS "$@" > "$CONSOLE_OUTPUT_FILE" 2>&1 < /dev/null &

else

exec $JAVA $JPDA_OPTS $KAFKA_HEAP_OPTS $KAFKA_JVM_PERFORMANCE_OPTS $KAFKA_GC_LOG_OPTS $KAFKA_JMX_OPTS $KAFKA_LOG4J_OPTS -cp $CLASSPATH $KAFKA_OPTS "$@"

fi

The added option starts Kafka Connect as a debug server listening on port 8888 (server=y, address=8888) and suspends the JVM until a debugging client connects (suspend=y).

If we don't want to run in debug mode, just uncomment the line

#export JPDA_OPTS=""

that is, remove the # symbol at the start of this line, so that the empty value overrides the export above it.
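Instead of editing the script every time, the switch could be driven by environment variables. This is only a sketch of that idea: CONNECT_DEBUG, CONNECT_DEBUG_PORT and CONNECT_DEBUG_SUSPEND are names invented for this example and are not part of Kafka or Confluent, and build_jpda_opts is a hypothetical helper that would sit where the export JPDA_OPTS line is.

```shell
# Hypothetical switches (not defined by Kafka): CONNECT_DEBUG, CONNECT_DEBUG_PORT,
# CONNECT_DEBUG_SUSPEND. Debugging is off unless CONNECT_DEBUG is exactly "true".
build_jpda_opts() {
  if [ "x${CONNECT_DEBUG-}" = "xtrue" ]; then
    # Same JDWP option string as in the post, with port and suspend made configurable.
    echo "-agentlib:jdwp=transport=dt_socket,address=${CONNECT_DEBUG_PORT:-8888},server=y,suspend=${CONNECT_DEBUG_SUSPEND:-y}"
  else
    echo ""
  fi
}

JPDA_OPTS=$(build_jpda_opts)
export JPDA_OPTS
echo "JPDA_OPTS=$JPDA_OPTS"
```

With this in place, a run such as CONNECT_DEBUG=true bin/connect-distributed ... would enable the agent, and a plain run would leave JPDA_OPTS empty. Note that on JDK 9 and later a bare port such as address=8888 binds to localhost only, so attaching from another machine needs address=*:8888.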

3. edit connect-distributed file

cd /home/lenmom/workspace/software/confluent-community-5.1.-2.11/

vim ./bin/connect-distributed

Replace the last line

exec $(dirname $0)/kafka-run-class $EXTRA_ARGS org.apache.kafka.connect.cli.ConnectDistributed "$@"

with

exec $(dirname $0)/kafka-connect-debugging $EXTRA_ARGS org.apache.kafka.connect.cli.ConnectDistributed "$@"

4. debugging

4.1 start kafka-connect

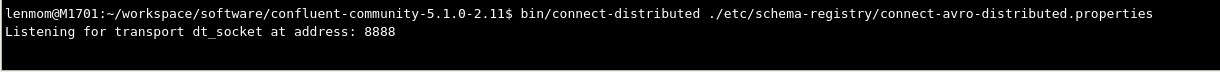

lenmom@M1701:~/workspace/software/confluent-community-5.1.-2.11$ bin/connect-distributed ./etc/schema-registry/connect-avro-distributed.properties

Listening for transport dt_socket at address: 8888

The process is now paused and listening on port 8888 until a debugging client attaches.
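In daemon mode the same banner does not reach the terminal, because the launch command redirects output to "$CONSOLE_OUTPUT_FILE". A small sketch for pulling the port out of such output follows; jdwp_port_from_output is a hypothetical helper, and the actual path of the console output file is whatever kafka-run-class assigns to that variable, which is not assumed here.

```shell
# Print the port from the first JDWP banner line read on stdin.
# jdwp_port_from_output is a made-up helper name for this sketch.
jdwp_port_from_output() {
  sed -n 's/^Listening for transport dt_socket at address: *\([0-9][0-9]*\).*$/\1/p' | head -n 1
}

# Demo on a literal banner line. With a real daemon, feed it the console
# output file instead: jdwp_port_from_output < /path/to/console-output-file
printf '%s\n' 'Listening for transport dt_socket at address: 8888' | jdwp_port_from_output
```

If nothing is printed, the banner has not been written, which usually means the JVM was started without the JDWP agent or has not started at all.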

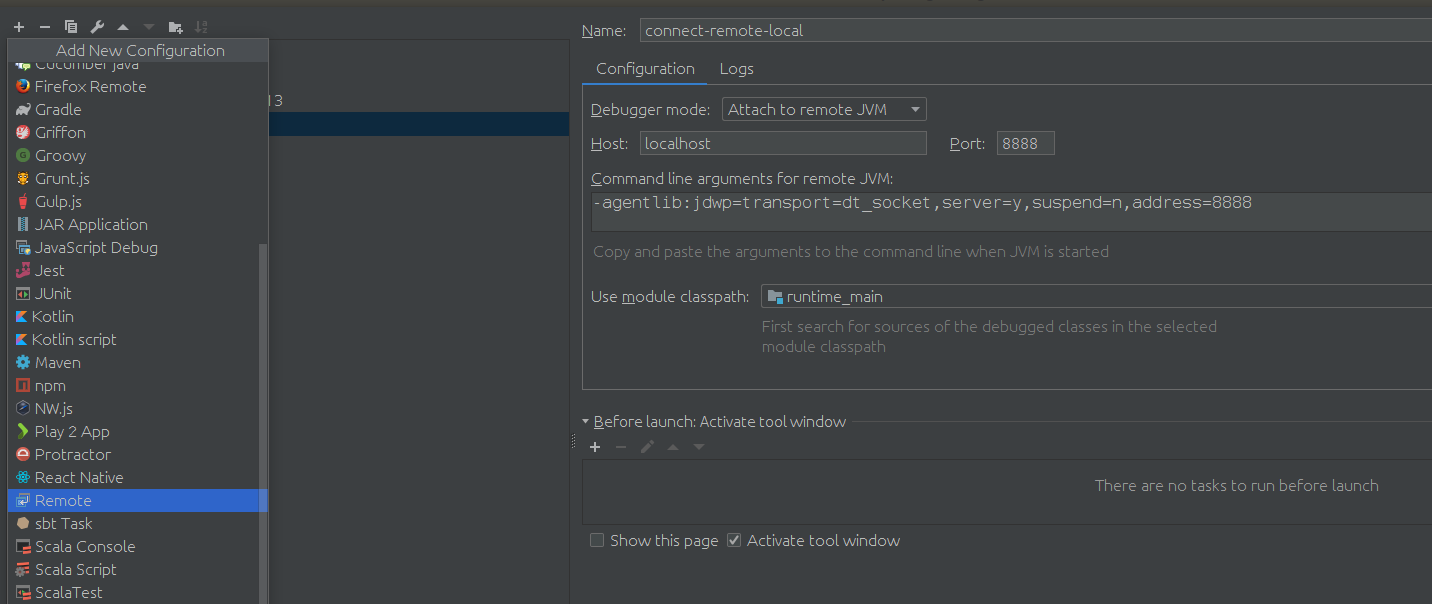

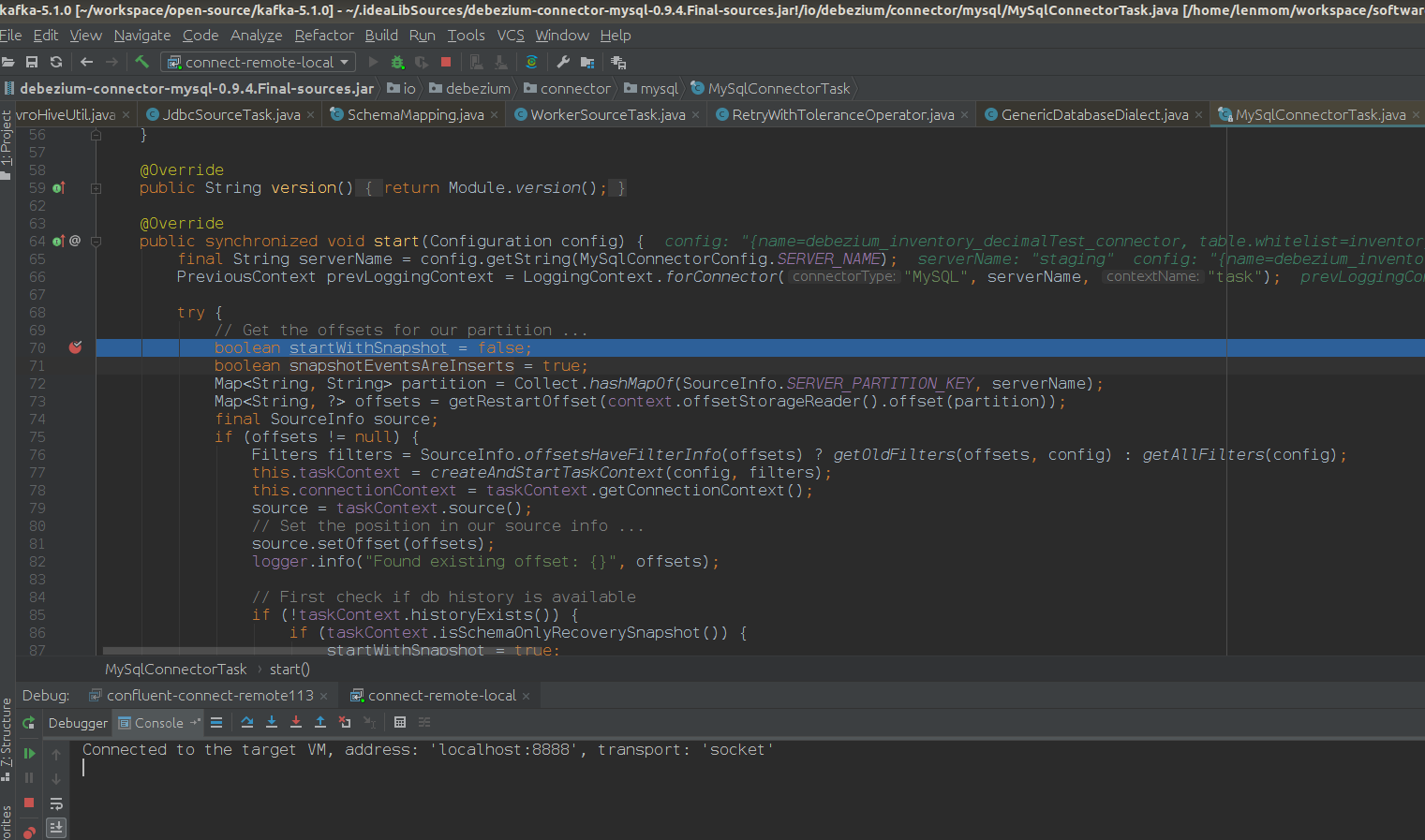

4.2 attach to kafka-connect using IDEA

After setting up the remote debug configuration, just start the debugging client and everything works. The screenshots show my scenario.
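If no IDE is at hand, the listener can also be exercised with jdb, the command-line debugger shipped with the JDK. This is a minimal sketch, assuming jdb is on the PATH; jdb_attach_command is a hypothetical helper that only prints the command, with localhost and 8888 as defaults taken from the setup above.

```shell
# Build a jdb attach command for the given host and port.
# jdb_attach_command is a made-up helper; it prints the command and does not run it.
jdb_attach_command() {
  echo "jdb -attach ${1:-localhost}:${2:-8888}"
}

# Defaults match the JDWP options used in kafka-connect-debugging.
jdb_attach_command
```

Running the printed command while Connect is waiting on the port should open a jdb session against the suspended JVM.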

Have fun!
