Hadoop Ecosystem: Fully Distributed Deployment of Kafka


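Author: Yin Zhengjie (尹正杰)

Copyright notice: this is an original work. Reproduction is not permitted; violators will be held legally liable.

This post walks through setting up a fully distributed Kafka cluster. It builds on the Kafka local-mode setup (https://www.cnblogs.com/yinzhengjie/p/9209058.html) and extends it to a fully distributed deployment.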

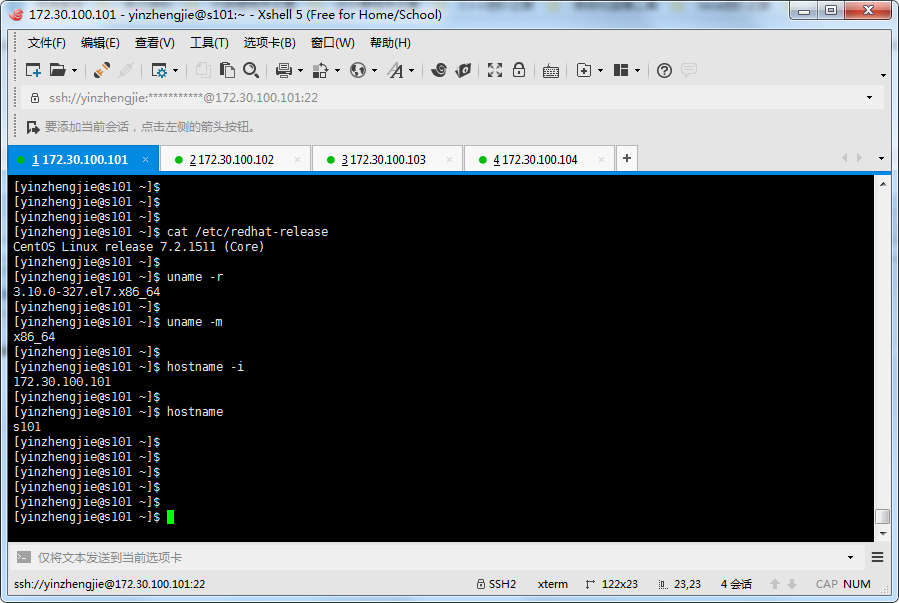

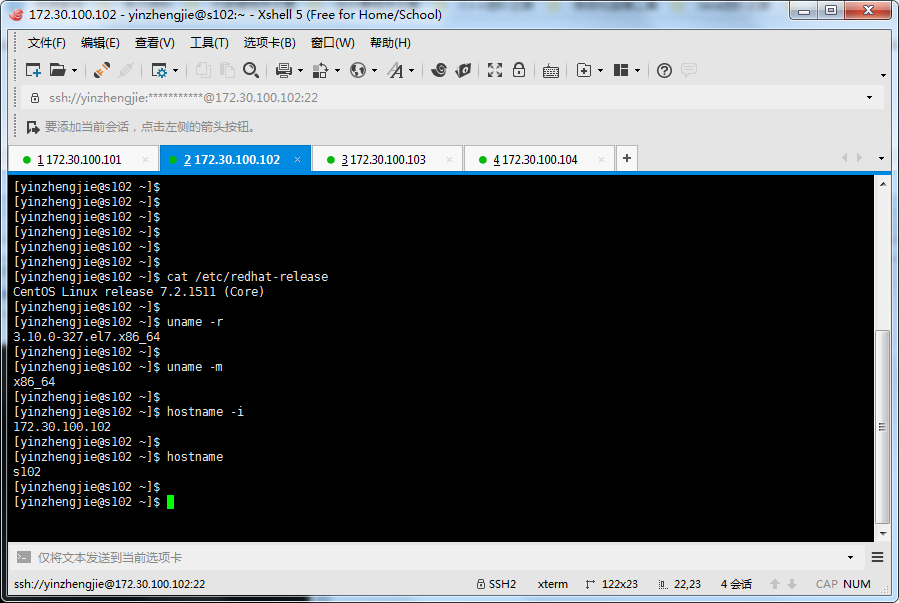

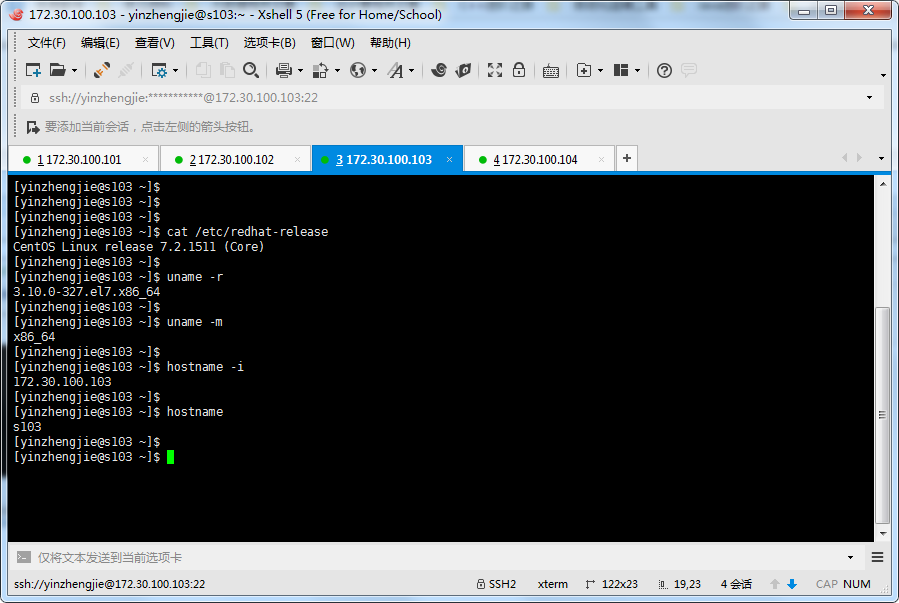

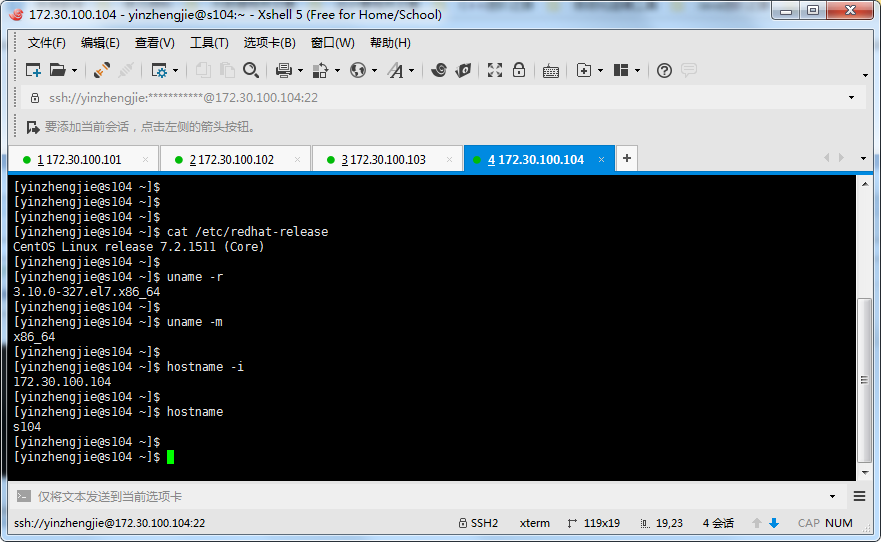

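I. Test environment

The test environment consists of 4 servers:

1>. Management server (s101)

2>. Kafka node 2 (s102, ZooKeeper already deployed)

3>. Kafka node 3 (s103, ZooKeeper already deployed)

4>. Kafka node 4 (s104, ZooKeeper already deployed)

II. Fully distributed Kafka deployment

1>. Send the extracted Kafka installation directory to the other nodes (s102, s103, s104)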

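[yinzhengjie@s101 data]$ more `which xrsync.sh`

#!/bin/bash

#@author :yinzhengjie

#blog:http://www.cnblogs.com/yinzhengjie

#EMAIL:y1053419035@qq.com

#Check whether the user passed an argument

if [ $# -lt 1 ];then

echo "Please supply an argument";

exit 1

fi

#Get the file path

file=$1

#Get the last path component (the file or directory name)

filename=`basename "$file"`

#Get the parent path

dirpath=`dirname "$file"`

#Resolve the full physical path of the parent directory

cd "$dirpath"

fullpath=`pwd -P`

#Sync the file to the DataNode hosts (s102 through s105)

for (( i=102;i<=105;i++ ))

do

#Turn the terminal text green

tput setaf 2

#Note: %file is printed literally, it is not a shell variable

echo =========== s$i %file ===========

#Restore the terminal to its usual light-gray color

tput setaf 7

#Copy to the same path on the remote host (-l keeps symlinks as symlinks, -r recurses)

rsync -lr "$filename" `whoami`@s$i:"$fullpath"

#Check whether the command succeeded

if [ $? -eq 0 ];then

echo "Command succeeded"

fi

done

[yinzhengjie@s101 data]$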


[yinzhengjie@s101 data]$ xrsync.sh /soft/kafka

=========== s102 %file ===========

Command succeeded

=========== s103 %file ===========

Command succeeded

=========== s104 %file ===========

Command succeeded

=========== s105 %file ===========

Command succeeded

[yinzhengjie@s101 data]$

[yinzhengjie@s101 data]$ xrsync.sh /soft/kafka_2.11-1.1.0/

=========== s102 %file ===========

Command succeeded

=========== s103 %file ===========

Command succeeded

=========== s104 %file ===========

Command succeeded

=========== s105 %file ===========

Command succeeded

[yinzhengjie@s101 data]$
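The path handling inside xrsync.sh (basename, dirname, then `pwd -P`) can be tried out on its own. The following is only a minimal sketch: the function name `resolve_sync_target` is hypothetical and is not part of the original script. It prints the physical parent directory and the entry name that would be handed to rsync.

```shell
#!/bin/sh
# Hypothetical helper (not part of the original xrsync.sh): split a path into
# the physical parent directory and the entry name, the same way xrsync.sh
# prepares its rsync arguments.
resolve_sync_target() (
    file=$1
    filename=$(basename "$file")
    dirpath=$(dirname "$file")
    # The function body runs in a subshell, so the caller's working
    # directory is untouched; pwd -P resolves symlinks in the parent path.
    fullpath=$(cd "$dirpath" && pwd -P) || exit 1
    printf '%s %s\n' "$fullpath" "$filename"
)

# Example call; a trailing slash on the argument does not change the result.
resolve_sync_target /tmp/example.txt
```

For `/soft/kafka_2.11-1.1.0/` this would print `/soft` and `kafka_2.11-1.1.0`, provided `/soft` itself is not a symbolic link.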

2>. Distribute the environment variables

[yinzhengjie@s101 data]$ su

Password:

[root@s101 data]# xrsync.sh /etc/profile

=========== s102 %file ===========

Command succeeded

=========== s103 %file ===========

Command succeeded

=========== s104 %file ===========

Command succeeded

=========== s105 %file ===========

Command succeeded

[root@s101 data]# exit

exit

[yinzhengjie@s101 data]$
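The post does not show which lines in /etc/profile matter for Kafka. Since commands such as kafka-server-start.sh are invoked without a path, Kafka's bin directory has to be on PATH. The fragment below is an assumption about what those lines might look like: the variable name `KAFKA_HOME` and the helper `check_on_path` are illustrative, and only the /soft/kafka location comes from the post.

```shell
#!/bin/sh
# Assumed /etc/profile lines (not shown in the original post).
export KAFKA_HOME=/soft/kafka
export PATH="$PATH:$KAFKA_HOME/bin"

# Hypothetical check: succeeds if the given command can be found on PATH.
check_on_path() {
    command -v "$1" >/dev/null 2>&1
}

check_on_path kafka-server-start.sh || echo "kafka-server-start.sh is not on PATH"
```

Running the check on each node after the sync is a quick way to confirm that the profile was picked up.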

3>. Modify the Kafka configuration file on each ZooKeeper node (s102, s103, s104)

Every broker needs its own broker.id, unique within the cluster, and a listeners address that points at its own host.

Configuration on s102 (/soft/kafka/config/server.properties):

[yinzhengjie@s102 ~]$ grep broker.id /soft/kafka/config/server.properties

broker.id=...

[yinzhengjie@s102 ~]$ grep listeners /soft/kafka/config/server.properties | grep -v ^#

listeners=PLAINTEXT://s102:9092

[yinzhengjie@s102 ~]$

Configuration on s103 (/soft/kafka/config/server.properties):

[yinzhengjie@s103 ~]$ grep broker.id /soft/kafka/config/server.properties

broker.id=...

[yinzhengjie@s103 ~]$ grep listeners /soft/kafka/config/server.properties | grep -v ^#

listeners=PLAINTEXT://s103:9092

[yinzhengjie@s103 ~]$

Configuration on s104 (/soft/kafka/config/server.properties):

[yinzhengjie@s104 ~]$ grep broker.id /soft/kafka/config/server.properties

broker.id=...

[yinzhengjie@s104 ~]$ grep listeners /soft/kafka/config/server.properties | grep -v ^#

listeners=PLAINTEXT://s104:9092

[yinzhengjie@s104 ~]$
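The per-node edit can also be scripted. This is a minimal sketch and not the author's method: the helper `configure_broker` is hypothetical, and it assumes the broker.id is taken from the digits of the host name (s102 becomes 102), which the post does not confirm. The listeners value follows the PLAINTEXT://host:9092 pattern shown in the grep output.

```shell
#!/bin/sh
# Hypothetical helper: set broker.id and listeners in a server.properties file.
# Assumption: broker.id is derived from the host name (s102 -> 102).
configure_broker() (
    host=$1
    conf=$2
    id=${host#s}
    tmp=$(mktemp) || exit 1
    # First expression rewrites the broker.id line; the second replaces the
    # listeners line whether or not it is commented out. A line such as
    # "#advertised.listeners=..." is left alone because of the ^ anchor.
    sed -E -e "s|^broker\.id=.*|broker.id=${id}|" \
           -e "s|^#?listeners=.*|listeners=PLAINTEXT://${host}:9092|" \
           "$conf" > "$tmp" && cat "$tmp" > "$conf"
    status=$?
    rm -f "$tmp"
    exit "$status"
)

# Usage on a node, for example on s103:
#   configure_broker s103 /soft/kafka/config/server.properties
```

Writing through a temporary file avoids `sed -i`, whose syntax differs between GNU and BSD sed.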

4>. Open the ZooKeeper client and delete Kafka's znode data from ZooKeeper

[yinzhengjie@s104 ~]$ zkCli.sh

Connecting to localhost:2181

[myid:] - INFO [main:Environment@100] - Client environment:zookeeper.version=3.4.12-e5259e437540f349646870ea94dc2658c4e44b3b

[myid:] - INFO [main:Environment@100] - Client environment:host.name=s104

[myid:] - INFO [main:Environment@100] - Client environment:java.version=1.8.0_131

[myid:] - INFO [main:Environment@100] - Client environment:java.vendor=Oracle Corporation

[myid:] - INFO [main:Environment@100] - Client environment:java.home=/soft/jdk1.8.0_131/jre

[myid:] - INFO [main:Environment@100] - Client environment:java.class.path=/soft/zk/bin/../build/classes:/soft/zk/bin/../build/lib/*.jar:/soft/zk/bin/../lib/slf4j-log4j12-1.7.25.jar:/soft/zk/bin/../lib/slf4j-api-1.7.25.jar:/soft/zk/bin/../lib/netty-3.10.6.Final.jar:/soft/zk/bin/../lib/log4j-1.2.17.jar:/soft/zk/bin/../lib/jline-0.9.94.jar:/soft/zk/bin/../lib/audience-annotations-0.5.0.jar:/soft/zk/bin/../zookeeper-3.4.12.jar:/soft/zk/bin/../src/java/lib/*.jar:/soft/zk/bin/../conf:

2018-06-21 01:23:54,940 [myid:] - INFO [main:Environment@100] - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2018-06-21 01:23:54,940 [myid:] - INFO [main:Environment@100] - Client environment:java.io.tmpdir=/tmp

2018-06-21 01:23:54,940 [myid:] - INFO [main:Environment@100] - Client environment:java.compiler=<NA>

2018-06-21 01:23:54,940 [myid:] - INFO [main:Environment@100] - Client environment:os.name=Linux

2018-06-21 01:23:54,941 [myid:] - INFO [main:Environment@100] - Client environment:os.arch=amd64

2018-06-21 01:23:54,941 [myid:] - INFO [main:Environment@100] - Client environment:os.version=3.10.0-327.el7.x86_64

2018-06-21 01:23:54,941 [myid:] - INFO [main:Environment@100] - Client environment:user.name=yinzhengjie

2018-06-21 01:23:54,941 [myid:] - INFO [main:Environment@100] - Client environment:user.home=/home/yinzhengjie

2018-06-21 01:23:54,941 [myid:] - INFO [main:Environment@100] - Client environment:user.dir=/home/yinzhengjie

2018-06-21 01:23:54,942 [myid:] - INFO [main:ZooKeeper@441] - Initiating client connection, connectString=localhost:2181 sessionTimeout=30000 watcher=org.apache.zookeeper.ZooKeeperMain$MyWatcher@277050dc

Welcome to ZooKeeper!

JLine support is enabled

2018-06-21 01:23:54,973 [myid:] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@1028] - Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

2018-06-21 01:23:55,031 [myid:] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@878] - Socket connection established to localhost/127.0.0.1:2181, initiating session

2018-06-21 01:23:55,049 [myid:] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@1302] - Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0x6800003ae7350004, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0] ls /

[a, cluster, controller, brokers, zookeeper, yarn-leader-election, hadoop-ha, admin, isr_change_notification, log_dir_event_notification, controller_epoch, consumers, latest_producer_id_block, config, hbase]

[zk: localhost:2181(CONNECTED) 1] rmr /controller /brokers /admin /controller_epoch /consumers /latest_producer_id_block /config /isr_change_notification /cluster /log_dir_event_notification

[zk: localhost:2181(CONNECTED) 2]
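In the ZooKeeper 3.4 command-line client the help text lists the command as `rmr path`, so passing several paths in one call may only remove the first one. It is worth running `ls /` again after the cleanup. A cautious alternative is one `rmr` per znode. The sketch below only prints the commands (a dry run) and deletes nothing; the function name `print_cleanup_commands` is hypothetical, and the znode list is copied from the session above.

```shell
#!/bin/sh
# Kafka-related znodes, taken from the rmr command in the session above.
kafka_znodes="/controller /brokers /admin /controller_epoch /consumers /latest_producer_id_block /config /isr_change_notification /cluster /log_dir_event_notification"

# Hypothetical helper: print one zkCli.sh invocation per znode.
# Dry run only: the commands are echoed, not executed.
print_cleanup_commands() (
    server=$1
    for znode in $kafka_znodes; do
        echo "zkCli.sh -server $server rmr $znode"
    done
)

print_cleanup_commands localhost:2181
```

Review the printed commands first, then paste them into a shell on one of the ZooKeeper nodes.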

5>. Start Kafka on each of s102 through s104

[yinzhengjie@s102 ~]$ kafka-server-start.sh -daemon /soft/kafka/config/server.properties

[yinzhengjie@s102 ~]$

[yinzhengjie@s103 ~]$ kafka-server-start.sh -daemon /soft/kafka/config/server.properties

[yinzhengjie@s103 ~]$

[yinzhengjie@s104 ~]$ kafka-server-start.sh -daemon /soft/kafka/config/server.properties

[yinzhengjie@s104 ~]$
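With -daemon the start command returns immediately and prints nothing, so it says little about whether the broker actually came up. One way to check is to probe the listener port. This is a rough sketch under some assumptions: the helper names `port_open` and `check_brokers` are hypothetical, port 9092 comes from the listeners setting shown earlier, and bash plus the coreutils `timeout` command are assumed to be installed.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a TCP connection to host:port can be
# opened within 2 seconds. Relies on bash's /dev/tcp and coreutils timeout.
port_open() {
    timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Hypothetical helper: report the state of port 9092 on each given host.
check_brokers() {
    for host in "$@"; do
        if port_open "$host" 9092; then
            echo "$host: port 9092 is reachable"
        else
            echo "$host: port 9092 is NOT reachable"
        fi
    done
}

# Usage from the management server:
#   check_brokers s102 s103 s104
```

An open port only shows that something is listening; the broker log remains the place to look for startup errors.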

6>. Create a topic

List the existing topics:

[yinzhengjie@s104 ~]$ kafka-topics.sh --zookeeper s102:2181 --list

yinzhengjie

[yinzhengjie@s104 ~]$

Create a topic:

[yinzhengjie@s104 ~]$ kafka-topics.sh --zookeeper s104:2181 --create --partitions 2 --replication-factor 1 --topic yzj

Created topic "yzj".

[yinzhengjie@s104 ~]$
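Kafka rejects a topic whose replication factor is larger than the number of available brokers, so on this three-broker cluster the factor can be at most 3. The sketch below builds the create command after checking that limit. The helper `build_create_topic_cmd` is hypothetical, and it only prints the command rather than running it.

```shell
#!/bin/sh
# Hypothetical helper: print a kafka-topics.sh --create command, refusing
# a replication factor that exceeds the broker count.
# Arguments: zookeeper address, topic, partitions, replication factor, brokers.
build_create_topic_cmd() (
    zk=$1 topic=$2 partitions=$3 replication=$4 brokers=$5
    if [ "$replication" -gt "$brokers" ]; then
        echo "replication factor $replication exceeds broker count $brokers" >&2
        exit 1
    fi
    echo "kafka-topics.sh --zookeeper $zk --create --partitions $partitions --replication-factor $replication --topic $topic"
)

# Reproduces the create command used above (3 brokers: s102, s103, s104).
build_create_topic_cmd s104:2181 yzj 2 1 3
```

After creating the topic, `kafka-topics.sh --zookeeper s102:2181 --describe --topic yzj` shows which broker leads each partition.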

7>. Start a console producer on any ZooKeeper node (for example, on s102)

[yinzhengjie@s102 ~]$ kafka-server-start.sh -daemon /soft/kafka/config/server.properties

[yinzhengjie@s102 ~]$ kafka-console-producer.sh ... --topic yzj

>尹正杰到此一游!

>

8>. Start a console consumer on any ZooKeeper node (for example, on s103)

[yinzhengjie@s103 ~]$ kafka-console-consumer.sh --zookeeper s102:2181 --topic yzj --from-beginning

Using the ConsoleConsumer with old consumer is deprecated and will be removed in a future major release. Consider using the new consumer by passing [bootstrap-server] instead of [zookeeper].

尹正杰到此一游!
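The deprecation warning above suggests passing `--bootstrap-server` instead of `--zookeeper`. A bootstrap list is a comma-separated set of broker host:port pairs. The sketch below assembles one for the three brokers, using port 9092 from the listeners setting, and prints the resulting consumer command. The helper `bootstrap_list` is hypothetical, and the command is only printed, not executed.

```shell
#!/bin/sh
# Hypothetical helper: join host names into a bootstrap list.
# Arguments: port, then one or more host names.
bootstrap_list() (
    port=$1
    shift
    list=""
    for host in "$@"; do
        # Prefix a comma only when the list already has an entry.
        list="${list:+$list,}${host}:${port}"
    done
    echo "$list"
)

servers=$(bootstrap_list 9092 s102 s103 s104)
echo "kafka-console-consumer.sh --bootstrap-server $servers --topic yzj --from-beginning"
```

Listing more than one broker lets the client still connect when a single broker is down.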

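III. Write a Kafka start/stop script ("/usr/local/bin/xkafka.sh"; remember to make it executable, and set up SSH key pairs between the nodes beforehand)

[yinzhengjie@s101 ~]$ more /usr/local/bin/xkafka.sh

#!/bin/bash

#@author :yinzhengjie

#blog:http://www.cnblogs.com/yinzhengjie

#EMAIL:y1053419035@qq.com

#Check whether the user passed an argument

if [ $# -lt 1 ];then

echo "Invalid argument, usage: $0 {start|stop}"

exit 1

fi

#Get the command entered by the user

cmd=$1

#Loop over the Kafka nodes s102 through s104

for (( i=102 ; i<=104 ; i++ )) ; do

#Print the host banner in green, then restore the default color

tput setaf 2

echo ========== s$i $cmd ================

tput setaf 7

case $cmd in

start)

#Load the environment first so the Kafka scripts are on PATH in the non-login ssh session

ssh s$i "source /etc/profile ; kafka-server-start.sh -daemon /soft/kafka/config/server.properties"

echo s$i "service started"

;;

stop)

ssh s$i "source /etc/profile ; kafka-server-stop.sh"

echo s$i "service stopped"

;;

*)

echo "Invalid argument, usage: $0 {start|stop}"

exit 1

;;

esac

done

[yinzhengjie@s101 ~]$ sudo chmod a+x /usr/local/bin/xkafka.sh

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ ll /usr/local/bin/xkafka.sh

-rwxr-xr-x ... root root ... Jun ... /usr/local/bin/xkafka.sh

[yinzhengjie@s101 ~]$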
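One possible refinement of xkafka.sh is to validate the subcommand once, before the loop, and to preview the ssh commands before running them. The following is a sketch of that idea, not a replacement for the script above: the function names `remote_command_for` and `dry_run` are hypothetical, and the host range s102 to s104 is assumed from the node list in section I.

```shell
#!/bin/sh
# Hypothetical helper: map a subcommand to the remote command line used by
# xkafka.sh; fails for anything other than start or stop.
remote_command_for() {
    case $1 in
        start) echo "source /etc/profile ; kafka-server-start.sh -daemon /soft/kafka/config/server.properties" ;;
        stop)  echo "source /etc/profile ; kafka-server-stop.sh" ;;
        *)     return 1 ;;
    esac
}

# Hypothetical helper: print the ssh commands for s102..s104 without
# executing them (dry run).
dry_run() (
    remote=$(remote_command_for "$1") || {
        echo "usage: xkafka.sh {start|stop}" >&2
        exit 1
    }
    i=102
    while [ "$i" -le 104 ]; do
        echo "ssh s$i \"$remote\""
        i=$((i + 1))
    done
)

dry_run start
```

Because the subcommand is checked before the loop, a typo such as `xkafka.sh strat` fails before any host banner is printed.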
