Flink Reading and Writing Redis (Part 3): Reading Data from Redis

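This post implements a custom Flink RedisSource that reads data from Redis. It borrows the design of flink-connector-redis_2.11 and wraps the Redis read logic in the same style. For how flink-connector-redis_2.11 works and how to use it, see the earlier posts below. The project needs the flink-connector-redis_2.11 dependency.

Flink Reading and Writing Redis (Part 1): Writing to Redis

Flink Reading and Writing Redis (Part 2): Studying the flink-redis-connector code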

Abstracting Redis data

Define a MyRedisRecord class that wraps a Redis data type together with the data object.

```java
package com.jike.flink.examples.redis;

import org.apache.flink.streaming.connectors.redis.common.mapper.RedisDataType;

import java.io.Serializable;

public class MyRedisRecord implements Serializable {

    private Object data;

    private RedisDataType redisDataType;

    public MyRedisRecord(Object data, RedisDataType redisDataType) {
        this.data = data;
        this.redisDataType = redisDataType;
    }

    public Object getData() {
        return data;
    }

    public void setData(Object data) {
        this.data = data;
    }

    public RedisDataType getRedisDataType() {
        return redisDataType;
    }

    public void setRedisDataType(RedisDataType redisDataType) {
        this.redisDataType = redisDataType;
    }
}
```
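Because `data` is typed as `Object`, every consumer has to cast it back (the splitter in the usage section casts to `Map<String, String>`). The following is a minimal sketch, not part of the original code, of a generic variant that lets the compiler track the payload type. `TypedRedisRecord` and `SketchDataType` are hypothetical names; `SketchDataType` only stands in for the connector's `RedisDataType` so the sketch compiles without Flink.

```java
import java.io.Serializable;
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for RedisDataType so this sketch compiles without the Flink connector
enum SketchDataType { STRING, HASH, LIST, SET, SORTED_SET }

// Hypothetical generic variant of MyRedisRecord: the payload type is known at compile time
final class TypedRedisRecord<T> implements Serializable {
    private static final long serialVersionUID = 1L;

    private final T data;
    private final SketchDataType dataType;

    TypedRedisRecord(T data, SketchDataType dataType) {
        this.data = data;
        this.dataType = dataType;
    }

    T getData() {
        return data;
    }

    SketchDataType getDataType() {
        return dataType;
    }

    // Convenience factory for hash payloads; copies the map so later changes to the input do not leak in
    static TypedRedisRecord<Map<String, String>> ofHash(Map<String, String> hash) {
        return new TypedRedisRecord<>(new HashMap<>(hash), SketchDataType.HASH);
    }
}
```

With this shape, `TypedRedisRecord.ofHash(map).getData().get("flink")` needs no cast. Whether the extra type parameter is worth it depends on how many record types one source has to emit.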

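Defining the Redis read classes

First, define an interface that declares the Redis read operations. Only a get operation for hashes is declared here; more operations can be added.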

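```java
package com.jike.flink.examples.redis;

import java.io.Serializable;
import java.util.Map;

public interface MyRedisCommandsContainer extends Serializable {

    // Returns all field/value pairs of the hash stored at key
    Map<String, String> hget(String key);

    // Releases the underlying connection pool
    void close();
}
```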
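Because the source only depends on this small interface, the read logic can be exercised without a Redis server. Below is a minimal sketch, assuming an in-memory fake is acceptable for unit tests. `HashReader` is a hypothetical copy of the interface's shape (redeclared so the block is self-contained) and `InMemoryHashReader` is a hypothetical implementation, not part of the connector.

```java
import java.io.Serializable;
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

// Hypothetical mirror of MyRedisCommandsContainer, redeclared so this sketch compiles on its own
interface HashReader extends Serializable {
    Map<String, String> hget(String key);

    void close();
}

// Hypothetical in-memory implementation for tests that should not need a running Redis
final class InMemoryHashReader implements HashReader {
    private static final long serialVersionUID = 1L;

    private final Map<String, Map<String, String>> hashes = new HashMap<>();
    private boolean closed = false;

    void put(String key, String field, String value) {
        hashes.computeIfAbsent(key, k -> new HashMap<>()).put(field, value);
    }

    @Override
    public Map<String, String> hget(String key) {
        if (closed) {
            throw new IllegalStateException("reader is closed");
        }
        // Like Redis HGETALL, a missing key yields an empty map rather than null
        return new HashMap<>(hashes.getOrDefault(key, Collections.emptyMap()));
    }

    @Override
    public void close() {
        closed = true;
    }

    boolean isClosed() {
        return closed;
    }
}
```

Adding a new operation (for example a string GET) would mean adding one method to the interface and one implementation per container class.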

Next, define an implementation class that performs the reads against Redis.

```java
package com.jike.flink.examples.redis;

import org.apache.flink.util.Preconditions;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import redis.clients.jedis.Jedis;
import redis.clients.jedis.JedisPool;
import redis.clients.jedis.JedisSentinelPool;

import java.util.Map;

public class MyRedisContainer implements MyRedisCommandsContainer, Cloneable {

    private static final long serialVersionUID = 1L;

    private static final Logger LOG = LoggerFactory.getLogger(MyRedisContainer.class);

    // Exactly one of the two pools is non-null, depending on which constructor was used
    private final JedisPool jedisPool;

    private final JedisSentinelPool jedisSentinelPool;

    public MyRedisContainer(JedisPool jedisPool) {
        Preconditions.checkNotNull(jedisPool, "Jedis Pool can not be null");
        this.jedisPool = jedisPool;
        this.jedisSentinelPool = null;
    }

    public MyRedisContainer(JedisSentinelPool sentinelPool) {
        Preconditions.checkNotNull(sentinelPool, "Jedis Sentinel Pool can not be null");
        this.jedisPool = null;
        this.jedisSentinelPool = sentinelPool;
    }

    @Override
    public Map<String, String> hget(String key) {
        Jedis jedis = null;
        try {
            jedis = this.getInstance();
            // HGETALL reads the whole hash in one round trip and one consistent snapshot,
            // instead of HKEYS followed by a separate HGET per field
            return jedis.hgetAll(key);
        } catch (Exception e) {
            LOG.error("Cannot get Redis message with command HGETALL to key {} error message {}", key, e.getMessage());
            // Only unchecked Jedis exceptions can reach here, so the rethrow needs no throws clause
            throw e;
        } finally {
            this.releaseInstance(jedis);
        }
    }

    private Jedis getInstance() {
        return this.jedisSentinelPool != null ? this.jedisSentinelPool.getResource() : this.jedisPool.getResource();
    }

    private void releaseInstance(Jedis jedis) {
        if (jedis != null) {
            try {
                // For pooled connections, close() returns the connection to the pool
                jedis.close();
            } catch (Exception e) {
                LOG.error("Failed to close (return) instance to pool", e);
            }
        }
    }

    @Override
    public void close() {
        if (this.jedisPool != null) {
            this.jedisPool.close();
        }
        if (this.jedisSentinelPool != null) {
            this.jedisSentinelPool.close();
        }
    }
}
```
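Reading a hash field by field (one HKEYS, then one HGET per field) costs 1 + N network round trips, and a field deleted between the two steps comes back as `null`; Jedis's `hgetAll` fetches the whole hash with a single HGETALL. The sketch below only counts simulated round trips to make that difference concrete. `CountingHashStore` and `HashReadComparison` are hypothetical helpers, not a Redis client.

```java
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical fake store: every method call counts as one simulated network round trip
final class CountingHashStore {
    private final Map<String, Map<String, String>> data = new HashMap<>();
    private int roundTrips = 0;

    void put(String key, String field, String value) {
        data.computeIfAbsent(key, k -> new HashMap<>()).put(field, value);
    }

    Set<String> hkeys(String key) {
        roundTrips++;
        return new LinkedHashSet<>(data.getOrDefault(key, new HashMap<>()).keySet());
    }

    String hget(String key, String field) {
        roundTrips++;
        Map<String, String> hash = data.get(key);
        return hash == null ? null : hash.get(field);
    }

    Map<String, String> hgetAll(String key) {
        roundTrips++;
        return new HashMap<>(data.getOrDefault(key, new HashMap<>()));
    }

    int roundTrips() {
        return roundTrips;
    }

    void resetCounter() {
        roundTrips = 0;
    }
}

final class HashReadComparison {
    // One HKEYS plus one HGET per field: 1 + N round trips
    static Map<String, String> fieldByField(CountingHashStore store, String key) {
        Map<String, String> result = new HashMap<>();
        for (String field : store.hkeys(key)) {
            result.put(field, store.hget(key, field));
        }
        return result;
    }

    // One HGETALL for the whole hash: a single round trip
    static Map<String, String> allAtOnce(CountingHashStore store, String key) {
        return store.hgetAll(key);
    }
}
```

For a hash with three fields the first approach makes four calls and the second makes one, while both return the same map as long as nothing changes the hash in between.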

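Defining a builder for the Redis read object

This class creates the appropriate object for a given configuration. Two cases are handled here, a direct connection to Redis and Sentinel mode; Redis Cluster support could be added later.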

```java
package com.jike.flink.examples.redis;

import org.apache.commons.pool2.impl.GenericObjectPoolConfig;
import org.apache.flink.streaming.connectors.redis.common.config.FlinkJedisConfigBase;
import org.apache.flink.streaming.connectors.redis.common.config.FlinkJedisPoolConfig;
import org.apache.flink.streaming.connectors.redis.common.config.FlinkJedisSentinelConfig;
import org.apache.flink.util.Preconditions;
import redis.clients.jedis.JedisPool;
import redis.clients.jedis.JedisSentinelPool;

public class MyRedisCommandsContainerBuilder {

    public MyRedisCommandsContainerBuilder() {
    }

    public static MyRedisCommandsContainer build(FlinkJedisConfigBase flinkJedisConfigBase) {
        if (flinkJedisConfigBase instanceof FlinkJedisPoolConfig) {
            FlinkJedisPoolConfig flinkJedisPoolConfig = (FlinkJedisPoolConfig) flinkJedisConfigBase;
            return build(flinkJedisPoolConfig);
        } else if (flinkJedisConfigBase instanceof FlinkJedisSentinelConfig) {
            FlinkJedisSentinelConfig flinkJedisSentinelConfig = (FlinkJedisSentinelConfig) flinkJedisConfigBase;
            return build(flinkJedisSentinelConfig);
        } else {
            throw new IllegalArgumentException("Jedis configuration not found");
        }
    }

    public static MyRedisCommandsContainer build(FlinkJedisPoolConfig jedisPoolConfig) {
        Preconditions.checkNotNull(jedisPoolConfig, "Redis pool config should not be Null");
        GenericObjectPoolConfig genericObjectPoolConfig = new GenericObjectPoolConfig();
        genericObjectPoolConfig.setMaxIdle(jedisPoolConfig.getMaxIdle());
        genericObjectPoolConfig.setMaxTotal(jedisPoolConfig.getMaxTotal());
        genericObjectPoolConfig.setMinIdle(jedisPoolConfig.getMinIdle());
        JedisPool jedisPool = new JedisPool(genericObjectPoolConfig,
                jedisPoolConfig.getHost(), jedisPoolConfig.getPort(),
                jedisPoolConfig.getConnectionTimeout(), jedisPoolConfig.getPassword(),
                jedisPoolConfig.getDatabase());
        return new MyRedisContainer(jedisPool);
    }

    public static MyRedisCommandsContainer build(FlinkJedisSentinelConfig jedisSentinelConfig) {
        Preconditions.checkNotNull(jedisSentinelConfig, "Redis sentinel config should not be Null");
        GenericObjectPoolConfig genericObjectPoolConfig = new GenericObjectPoolConfig();
        genericObjectPoolConfig.setMaxIdle(jedisSentinelConfig.getMaxIdle());
        genericObjectPoolConfig.setMaxTotal(jedisSentinelConfig.getMaxTotal());
        genericObjectPoolConfig.setMinIdle(jedisSentinelConfig.getMinIdle());
        JedisSentinelPool jedisSentinelPool = new JedisSentinelPool(
                jedisSentinelConfig.getMasterName(), jedisSentinelConfig.getSentinels(),
                genericObjectPoolConfig, jedisSentinelConfig.getConnectionTimeout(),
                jedisSentinelConfig.getSoTimeout(), jedisSentinelConfig.getPassword(),
                jedisSentinelConfig.getDatabase());
        return new MyRedisContainer(jedisSentinelPool);
    }
}
```
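The builder picks a connection strategy from the runtime type of the configuration object. The sketch below reproduces only that dispatch shape with plain Java, assuming simplified configs; `SketchConfigBase`, `SketchPoolConfig`, `SketchSentinelConfig` and `SketchContainerBuilder` are hypothetical stand-ins for the connector's config classes and return a description string instead of a real pool. A Redis Cluster config would slot in as one more `instanceof` branch.

```java
import java.util.Collections;
import java.util.LinkedHashSet;
import java.util.Set;

// Hypothetical stand-ins for FlinkJedisConfigBase and its subclasses (the real ones carry more settings)
abstract class SketchConfigBase {
    final int maxTotal;

    SketchConfigBase(int maxTotal) {
        this.maxTotal = maxTotal;
    }
}

final class SketchPoolConfig extends SketchConfigBase {
    final String host;
    final int port;

    SketchPoolConfig(String host, int port, int maxTotal) {
        super(maxTotal);
        this.host = host;
        this.port = port;
    }
}

final class SketchSentinelConfig extends SketchConfigBase {
    final String masterName;
    final Set<String> sentinels;

    SketchSentinelConfig(String masterName, Set<String> sentinels, int maxTotal) {
        super(maxTotal);
        this.masterName = masterName;
        this.sentinels = Collections.unmodifiableSet(new LinkedHashSet<>(sentinels));
    }
}

final class SketchContainerBuilder {
    // Same dispatch shape as MyRedisCommandsContainerBuilder.build(FlinkJedisConfigBase)
    static String describe(SketchConfigBase config) {
        if (config instanceof SketchPoolConfig) {
            SketchPoolConfig c = (SketchPoolConfig) config;
            return "direct " + c.host + ":" + c.port + " (maxTotal=" + c.maxTotal + ")";
        } else if (config instanceof SketchSentinelConfig) {
            SketchSentinelConfig c = (SketchSentinelConfig) config;
            return "sentinel master " + c.masterName + " via " + c.sentinels.size() + " sentinel(s)";
        }
        // Unknown config types fail fast, as in the original builder
        throw new IllegalArgumentException("Jedis configuration not found");
    }
}
```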

Classes describing the Redis operation

```java
package com.jike.flink.examples.redis;

import org.apache.flink.streaming.connectors.redis.common.mapper.RedisDataType;

public enum MyRedisCommand {

    HGET(RedisDataType.HASH);

    private RedisDataType redisDataType;

    private MyRedisCommand(RedisDataType redisDataType) {
        this.redisDataType = redisDataType;
    }

    public RedisDataType getRedisDataType() {
        return this.redisDataType;
    }
}
```

```java
package com.jike.flink.examples.redis;

import org.apache.flink.streaming.connectors.redis.common.mapper.RedisDataType;
import org.apache.flink.util.Preconditions;

import java.io.Serializable;

public class MyRedisCommandDescription implements Serializable {

    private static final long serialVersionUID = 1L;

    private MyRedisCommand redisCommand;

    private String additionalKey;

    public MyRedisCommandDescription(MyRedisCommand redisCommand, String additionalKey) {
        Preconditions.checkNotNull(redisCommand, "Redis command type can not be null");
        this.redisCommand = redisCommand;
        this.additionalKey = additionalKey;
        if ((redisCommand.getRedisDataType() == RedisDataType.HASH
                || redisCommand.getRedisDataType() == RedisDataType.SORTED_SET) && additionalKey == null) {
            throw new IllegalArgumentException("Hash and Sorted Set should have additional key");
        }
    }

    public MyRedisCommandDescription(MyRedisCommand redisCommand) {
        this(redisCommand, (String) null);
    }

    public MyRedisCommand getCommand() {
        return this.redisCommand;
    }

    public String getAdditionalKey() {
        return this.additionalKey;
    }
}
```
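For HGET, the "additional key" is the Redis key of the hash to read (the usage example passes `"flink"`), which is why the description refuses a hash command without one. The sketch below mirrors that validation in plain Java and also shows how the command enum could grow; `SketchType`, `SketchCommand` and `SketchCommandDescription` are hypothetical stand-ins, and the `GET` entry is a hypothetical extra command that the original code does not implement.

```java
// Hypothetical stand-in for RedisDataType (only the values this sketch needs)
enum SketchType { STRING, HASH, SORTED_SET }

// Hypothetical mirror of MyRedisCommand: each command carries the data type it reads
enum SketchCommand {
    HGET(SketchType.HASH),
    // Hypothetical extra command, not present in the original enum
    GET(SketchType.STRING);

    private final SketchType type;

    SketchCommand(SketchType type) {
        this.type = type;
    }

    SketchType getType() {
        return type;
    }
}

// Hypothetical mirror of MyRedisCommandDescription with the same validation rule
final class SketchCommandDescription {
    private final SketchCommand command;
    private final String additionalKey;

    SketchCommandDescription(SketchCommand command, String additionalKey) {
        if (command == null) {
            throw new NullPointerException("Redis command type can not be null");
        }
        SketchType type = command.getType();
        // Hash and sorted-set commands need to know which key to read
        if ((type == SketchType.HASH || type == SketchType.SORTED_SET) && additionalKey == null) {
            throw new IllegalArgumentException("Hash and Sorted Set should have additional key");
        }
        this.command = command;
        this.additionalKey = additionalKey;
    }

    SketchCommandDescription(SketchCommand command) {
        this(command, null);
    }

    SketchCommand getCommand() {
        return command;
    }

    String getAdditionalKey() {
        return additionalKey;
    }
}
```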

RedisSource

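This class implements the Flink Redis source. Its constructor takes two arguments: the Redis connection configuration and a description of the Redis data to read. The open method runs when the source starts and creates the Redis access object. The run method then reads from Redis in a loop, calls the Redis operation that matches the data type, and wraps each result in a MyRedisRecord for downstream processing.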

```java
package com.jike.flink.examples.redis;

import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.functions.source.RichSourceFunction;
import org.apache.flink.streaming.connectors.redis.common.config.FlinkJedisConfigBase;
import org.apache.flink.util.Preconditions;

public class RedisSource extends RichSourceFunction<MyRedisRecord> {

    private static final long serialVersionUID = 1L;

    private String additionalKey;

    private MyRedisCommand redisCommand;

    private FlinkJedisConfigBase flinkJedisConfigBase;

    // Created in open() on the worker, so it does not need to be serialized with the function
    private transient MyRedisCommandsContainer redisCommandsContainer;

    // Written by cancel() and read by run(), which execute on different threads
    private volatile boolean isRunning = true;

    public RedisSource(FlinkJedisConfigBase flinkJedisConfigBase, MyRedisCommandDescription redisCommandDescription) {
        Preconditions.checkNotNull(flinkJedisConfigBase, "Redis connection pool config should not be null");
        Preconditions.checkNotNull(redisCommandDescription, "MyRedisCommandDescription can not be null");
        this.flinkJedisConfigBase = flinkJedisConfigBase;
        this.redisCommand = redisCommandDescription.getCommand();
        this.additionalKey = redisCommandDescription.getAdditionalKey();
    }

    @Override
    public void open(Configuration parameters) throws Exception {
        this.redisCommandsContainer = MyRedisCommandsContainerBuilder.build(this.flinkJedisConfigBase);
    }

    @Override
    public void run(SourceContext<MyRedisRecord> sourceContext) throws Exception {
        // Re-reads the key on every iteration; there is no pause between reads
        while (isRunning) {
            switch (this.redisCommand) {
                case HGET:
                    sourceContext.collect(new MyRedisRecord(
                            this.redisCommandsContainer.hget(this.additionalKey),
                            this.redisCommand.getRedisDataType()));
                    break;
                default:
                    throw new IllegalArgumentException("Cannot process such data type: " + this.redisCommand);
            }
        }
    }

    @Override
    public void cancel() {
        // Only signal the loop to stop; closing the pool here could break a read still in progress
        isRunning = false;
    }

    @Override
    public void close() throws Exception {
        super.close();
        if (this.redisCommandsContainer != null) {
            this.redisCommandsContainer.close();
        }
    }
}
```
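The source follows the usual polling contract: `run()` loops while a `volatile` flag is set, and `cancel()`, which Flink calls from a different thread, flips the flag. The sketch below models that lifecycle without Flink, assuming a fixed pause between reads is acceptable; the original loop has no pause and re-reads Redis as fast as it can. `PollingLoop`, `pauseMillis` and `runFor` are hypothetical names, and the `Supplier` stands in for `redisCommandsContainer.hget(key)`.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;
import java.util.function.Supplier;

// Hypothetical Flink-free model of the RedisSource lifecycle
final class PollingLoop<T> {
    private final Supplier<T> reader;      // stands in for redisCommandsContainer.hget(additionalKey)
    private final long pauseMillis;        // hypothetical pause between reads (the original has none)
    private volatile boolean isRunning = true;

    PollingLoop(Supplier<T> reader, long pauseMillis) {
        this.reader = reader;
        this.pauseMillis = pauseMillis;
    }

    // Counterpart of run(SourceContext): read, emit, optionally pause, repeat until cancelled
    void run(Consumer<T> collector) throws InterruptedException {
        while (isRunning) {
            collector.accept(reader.get());
            if (pauseMillis > 0) {
                Thread.sleep(pauseMillis);
            }
        }
    }

    // Counterpart of cancel(): called from another thread, so it only flips the volatile flag
    void cancel() {
        isRunning = false;
    }

    // Runs the loop on a worker thread for about runMillis, cancels it, and returns what was emitted
    List<T> runFor(long runMillis) throws InterruptedException {
        List<T> collected = new CopyOnWriteArrayList<>();
        Thread worker = new Thread(() -> {
            try {
                run(collected::add);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        worker.start();
        Thread.sleep(runMillis);
        cancel();
        worker.join(1000);
        if (worker.isAlive()) {
            throw new IllegalStateException("loop did not stop after cancel()");
        }
        return collected;
    }
}
```

A pause trades freshness for load on Redis; with the 5-second window used below, reading a few times per window may well be enough, but that depends on how quickly the hash changes.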

Usage

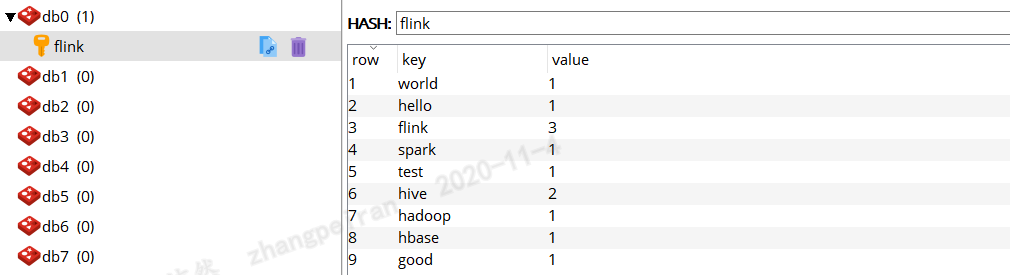

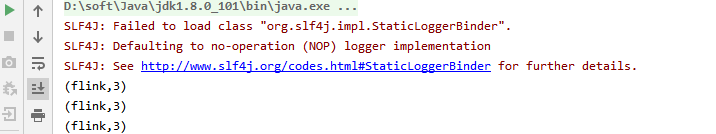

A hash in Redis stores the frequency of each word, and the job finds the word with the highest frequency.

```java
package com.jike.flink.examples.redis;

import org.apache.flink.api.common.functions.FlatMapFunction;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.connectors.redis.common.mapper.RedisDataType;
import org.apache.flink.util.Collector;

import java.util.Map;

public class MyMapRedisRecordSplitter implements FlatMapFunction<MyRedisRecord, Tuple2<String, Integer>> {

    @Override
    @SuppressWarnings("unchecked")
    public void flatMap(MyRedisRecord myRedisRecord, Collector<Tuple2<String, Integer>> collector) throws Exception {
        // Java assertions are only checked when the JVM runs with -ea
        assert myRedisRecord.getRedisDataType() == RedisDataType.HASH;
        // For HASH records the payload is the field -> value map returned by hget
        Map<String, String> map = (Map<String, String>) myRedisRecord.getData();
        for (Map.Entry<String, String> e : map.entrySet()) {
            // Field = word, value = its count stored as a string
            collector.collect(new Tuple2<>(e.getKey(), Integer.valueOf(e.getValue())));
        }
    }
}
```

```java
package com.jike.flink.examples.redis;

import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.streaming.connectors.redis.common.config.FlinkJedisPoolConfig;

public class MaxCount {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment executionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment();

        // "ip" and "passwd" are placeholders for the Redis host and password
        FlinkJedisPoolConfig conf = new FlinkJedisPoolConfig.Builder()
                .setHost("ip").setPort(30420).setPassword("passwd").build();

        // Read the hash stored under the key "flink"
        DataStreamSource<MyRedisRecord> source = executionEnvironment.addSource(
                new RedisSource(conf, new MyRedisCommandDescription(MyRedisCommand.HGET, "flink")));

        // Split each hash into (word, count) pairs and keep the pair with the largest count per 5-second window.
        // timeWindowAll is deprecated since Flink 1.12; there,
        // windowAll(TumblingProcessingTimeWindows.of(Time.seconds(5))) states the processing-time window explicitly.
        DataStream<Tuple2<String, Integer>> max = source
                .flatMap(new MyMapRedisRecordSplitter())
                .timeWindowAll(Time.milliseconds(5000))
                .maxBy(1);

        max.print().setParallelism(1);

        executionEnvironment.execute();
    }
}
```
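The per-window computation is simple enough to check by hand: split the hash into (word, count) pairs, then keep the pair with the largest count, as `maxBy(1)` does (by default it keeps the first element on ties). The sketch below reproduces that logic on a plain `Map`, assuming one window's worth of elements is already in a list; `MaxWordSketch` is a hypothetical helper and `SimpleEntry` stands in for Flink's `Tuple2`. Since the source re-emits the whole hash repeatedly, a window holds many copies of the same pairs, which does not change the maximum.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical plain-Java model of flatMap(new MyMapRedisRecordSplitter()) followed by maxBy(1)
final class MaxWordSketch {
    // Same conversion as the splitter: each hash field becomes (word, count)
    static List<Map.Entry<String, Integer>> split(Map<String, String> hash) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (Map.Entry<String, String> e : hash.entrySet()) {
            out.add(new SimpleEntry<>(e.getKey(), Integer.valueOf(e.getValue())));
        }
        return out;
    }

    // Keeps the first element with the largest count, or null for an empty window
    static Map.Entry<String, Integer> maxByCount(List<Map.Entry<String, Integer>> elements) {
        Map.Entry<String, Integer> best = null;
        for (Map.Entry<String, Integer> e : elements) {
            if (best == null || e.getValue() > best.getValue()) {
                best = e;
            }
        }
        return best;
    }
}
```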

Result
