Implementing Word2vec from Scratch

1. Download the corpus: https://dumps.wikimedia.org/zhwiki/latest/zhwiki-latest-pages-articles.xml.bz2 (Chinese Wikipedia corpus)

2. Corpus processing

(1) Extract the text from the dataset

The downloaded dump cannot be used as-is. The article text has to be extracted from it first.

Install the Python libraries:

```
pip install numpy
pip install scipy
pip install gensim
```

```python
'''
Description: Extract the Chinese corpus
Author: zhangyh
Date: 2024-05-09 21:31:22
LastEditTime: 2024-05-09 22:10:16
LastEditors: zhangyh
'''
import logging
import os.path
import sys
import warnings

warnings.filterwarnings(action='ignore', category=UserWarning, module='gensim')

from gensim.corpora import WikiCorpus

if __name__ == '__main__':
    program = os.path.basename(sys.argv[0])
    logger = logging.getLogger(program)
    logging.basicConfig(format='%(asctime)s: %(levelname)s: %(message)s')
    logging.root.setLevel(level=logging.INFO)
    logger.info("running %s" % ' '.join(sys.argv))

    # Check and process the input arguments
    if len(sys.argv) != 3:
        print("Usage: python process_wiki.py zhwiki-latest-pages-articles.xml.bz2 wiki_zh.text")
        sys.exit(1)
    inp, outp = sys.argv[1:3]

    space = " "
    i = 0
    # Passing dictionary={} skips building a gensim Dictionary; only the texts are needed
    wiki = WikiCorpus(inp, dictionary={})
    with open(outp, 'w', encoding='utf-8') as output:
        # get_texts() yields each article as a list of tokens; write one article per line
        for text in wiki.get_texts():
            output.write(space.join(text) + "\n")
            i = i + 1
            if i % 10000 == 0:
                logger.info("Saved " + str(i) + " articles")
    logger.info("Finished Saved " + str(i) + " articles")
```

Run the script to extract the text:

```
PS C:\Users\zhang\Desktop\nlp 自然语言处理\data> python .\process_wiki.py .\zhwiki-latest-pages-articles.xml.bz2 wiki_zh.text
2024-05-09 21:43:10,036: INFO: running .\process_wiki.py .\zhwiki-latest-pages-articles.xml.bz2 wiki_zh.text
2024-05-09 21:44:02,944: INFO: Saved 10000 articles
2024-05-09 21:44:51,875: INFO: Saved 20000 articles
...
2024-05-09 22:22:34,244: INFO: Saved 460000 articles
2024-05-09 22:23:33,323: INFO: Saved 470000 articles
```

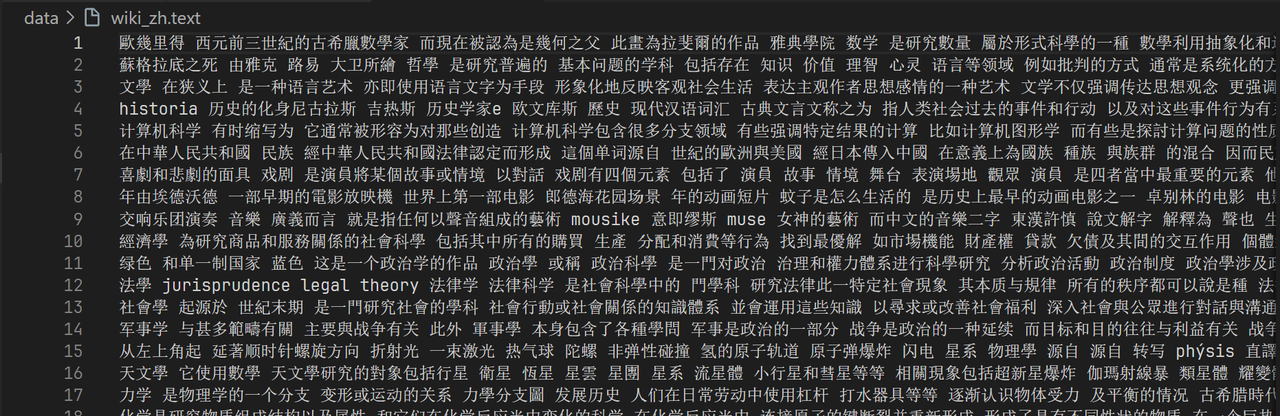

Extracted text (still contains Traditional Chinese characters):

(2) Convert Traditional Chinese to Simplified Chinese

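- Use the OpenCC tool for Traditional-to-Simplified conversion. Download OpenCC: https://bintray.com/package/files/byvoid/opencc/OpenCC (Bintray has since been shut down; OpenCC is maintained on GitHub as BYVoid/OpenCC)

- Run the conversion command:

```
opencc -i wiki_zh.text -o wiki_sample_chinese.text -c "C:\Program Files\OpenCC\build\share\opencc\t2s.json"
```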

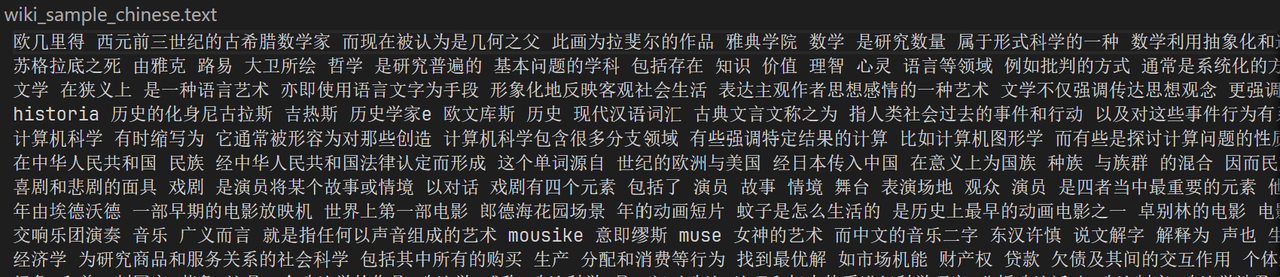

- The converted Simplified Chinese text:

(3) Word segmentation (with jieba)

- Segmentation code:

```python
'''
Description:
Author: zhangyh
Date: 2024-05-10 22:48:45
LastEditTime: 2024-05-10 23:02:57
LastEditors: zhangyh
'''
# Segment the articles into words
import jieba

with open('data\\wiki_sample_chinese.text', 'r', encoding='utf-8') as f, \
        open('data\\wiki_word_cutted_result.text', 'w', encoding='utf-8') as target:
    # One article per line. jieba yields the trailing "\n" as its own token,
    # so each segmented article still ends with a newline.
    for line_num, line in enumerate(f, start=1):
        print('---- processing', line_num, 'article----------------')
        line_seg = " ".join(jieba.cut(line))
        target.write(line_seg)
```

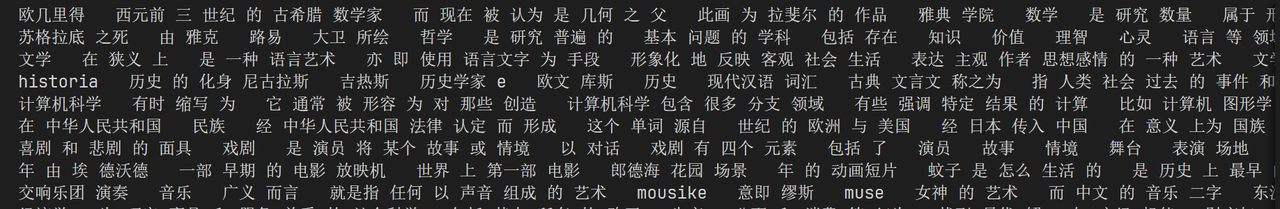

- Segmentation result:
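Both training scripts consume this file the same way: split each line on whitespace, drop stop words, and keep only the first few articles. A minimal sketch of that step as a streaming generator, assuming one article per line as produced above; `iter_tokens` is a hypothetical helper name and the sample lines are made up, not real corpus output. Using `itertools.islice` means only the first `num_lines` lines are ever read, whereas `file.readlines()[:num_lines]` loads the whole multi-gigabyte file first.

```python
from itertools import islice
from typing import Iterable, Iterator, List, Set


def iter_tokens(lines: Iterable[str], stop_words: Set[str], num_lines: int) -> Iterator[List[str]]:
    """Yield the stop-word-filtered tokens of the first num_lines lines.

    Assumes jieba output: one article per line, tokens separated by whitespace.
    An open file object works as `lines`, since it iterates line by line.
    """
    for line in islice(lines, num_lines):
        yield [tok for tok in line.split() if tok not in stop_words]


# Made-up stand-in for the segmented file
sample = ["数学 是 研究 数量 的 学科\n", "雅典 是 希腊 的 首都\n", "第三 行 不会 被 读取\n"]
stop = {"是", "的"}
tokens = [tok for article in iter_tokens(sample, stop, num_lines=2) for tok in article]
print(tokens)
```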

3. Model training
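Both models below end in a softmax over the whole vocabulary trained with cross-entropy, which is why the scripts start backpropagation from `G2 = pre - onehot`. A small numerical check of that gradient, assuming only NumPy; the vocabulary size, target index and random logits are arbitrary values chosen for illustration:

```python
import numpy as np


def softmax(x):
    # Subtract the row maximum for numerical stability
    ex = np.exp(x - np.max(x, axis=1, keepdims=True))
    return ex / np.sum(ex, axis=1, keepdims=True)


def cross_entropy(logits, target_index):
    # loss = -log p[target] for a single (1, V) row of logits
    return -np.log(softmax(logits)[0, target_index])


rng = np.random.default_rng(0)
V, target = 6, 2
logits = rng.normal(size=(1, V))
onehot = np.zeros((1, V))
onehot[0, target] = 1

analytic = softmax(logits) - onehot  # the G2 used in both training scripts

# Central finite differences, one logit at a time
eps = 1e-6
numeric = np.zeros_like(logits)
for k in range(V):
    step = np.zeros_like(logits)
    step[0, k] = eps
    numeric[0, k] = (cross_entropy(logits + step, target)
                     - cross_entropy(logits - step, target)) / (2 * eps)

print(np.max(np.abs(analytic - numeric)))  # should be tiny
```

Because the softmax output and the one-hot vector each sum to 1, the entries of `G2` always sum to zero.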

(1) Skip-gram model
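Skip-gram predicts each neighbouring word from the centre word, so its training examples are (centre, context) pairs taken from a window over the running text. The sketch below, using a hypothetical `skipgram_pairs` helper, builds those pairs with the same slicing as the training loop; the pairs are only meaningful if the token list keeps its original order.

```python
from typing import List, Tuple


def skipgram_pairs(tokens: List[str], window: int) -> List[Tuple[str, str]]:
    """Return (centre, context) pairs with up to `window` words on each side.

    Uses the same slice as the training loop:
    tokens[max(0, i - window): i] + tokens[i + 1: i + window + 1]
    """
    pairs = []
    for i, centre in enumerate(tokens):
        context = tokens[max(0, i - window): i] + tokens[i + 1: i + window + 1]
        pairs.extend((centre, c) for c in context)
    return pairs


pairs = skipgram_pairs(["数学家", "研究", "函数"], window=1)
print(pairs)
```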

```python
'''
Description:
Author: zhangyh
Date: 2024-05-12 21:51:03
LastEditTime: 2024-05-16 11:08:59
LastEditors: zhangyh
'''
import os
import pickle
import sys
from itertools import islice

import numpy as np
from tqdm import tqdm

sys.path.append(os.path.dirname(os.path.dirname(os.path.abspath(__file__))))


def load_stop_words(file="作业-skipgram\\stopwords.txt"):
    with open(file, "r", encoding="utf-8") as f:
        return set(f.read().split("\n"))  # a set makes the membership test O(1)


def load_cutted_data(num_lines: int):
    stop_words = load_stop_words()
    data = []
    # with open('wiki_word_cutted_result.text', mode='r', encoding='utf-8') as file:
    with open('作业-skipgram\\wiki_word_cutted_result.text', mode='r', encoding='utf-8') as file:
        # Read only the first num_lines articles instead of the whole file
        for line in tqdm(islice(file, num_lines)):
            data += [word for word in line.split() if word not in stop_words]
    # Keep the running word order: the context windows below are slices of this list,
    # so de-duplicating it (e.g. with set()) would destroy every context
    return data


def get_dict(data):
    index_2_word = []
    word_2_index = {}
    for word in tqdm(data):
        if word not in word_2_index:
            word_2_index[word] = len(index_2_word)
            index_2_word.append(word)
    word_size = len(word_2_index)
    word_2_onehot = {}
    for word, index in tqdm(word_2_index.items()):
        one_hot = np.zeros((1, word_size))
        one_hot[0, index] = 1
        word_2_onehot[word] = one_hot
    return word_2_index, index_2_word, word_2_onehot


def softmax(x):
    # Subtract the row maximum so np.exp cannot overflow
    ex = np.exp(x - np.max(x, axis=1, keepdims=True))
    return ex / np.sum(ex, axis=1, keepdims=True)


# Negative sampling (not used in this version)
# def negative_sampling(word_2_index, word_count, num_negative_samples):
#     word_probs = [word_count[word]**0.75 for word in word_2_index]
#     word_probs = np.array(word_probs) / sum(word_probs)
#     neg_samples = np.random.choice(len(word_2_index), size=num_negative_samples, replace=True, p=word_probs)
#     return neg_samples

if __name__ == "__main__":
    batch_size = 562  # batch size
    data = load_cutted_data(5)
    word_2_index, index_2_word, word_2_onehot = get_dict(data)
    word_size = len(word_2_index)
    embedding_num = 100
    lr = 0.01
    epochs = 200
    n_gram = 3  # number of context words on each side of the centre word

    # num_negative_samples = 5
    # Word frequencies (for negative sampling)
    # word_count = dict.fromkeys(word_2_index, 0)
    # for word in data:
    #     word_count[word] += 1

    batches = [data[j:j + batch_size] for j in range(0, len(data), batch_size)]
    # Random initialisation in [-1, 1)
    w1 = np.random.uniform(-1, 1, size=(word_size, embedding_num))
    w2 = np.random.uniform(-1, 1, size=(embedding_num, word_size))

    for epoch in range(epochs):
        print(f'-------- epoch {epoch + 1} --------')
        for batch in tqdm(batches):
            for i in range(len(batch)):
                now_word_onehot = word_2_onehot[batch[i]]
                other_words = batch[max(0, i - n_gram): i] + batch[i + 1: min(len(batch), i + n_gram + 1)]
                for other_word in other_words:
                    other_word_onehot = word_2_onehot[other_word]
                    hidden = now_word_onehot @ w1     # (1, E): centre-word embedding
                    pre = softmax(hidden @ w2)        # (1, V): predicted context distribution
                    # For A @ B = C with upstream gradient G = dL/dC:
                    # dL/dA = G @ B.T, dL/dB = A.T @ G
                    G2 = pre - other_word_onehot      # gradient of softmax + cross-entropy
                    delta_w2 = hidden.T @ G2
                    G1 = G2 @ w2.T
                    delta_w1 = now_word_onehot.T @ G1
                    w1 -= lr * delta_w1
                    w2 -= lr * delta_w2

    # with open("word2vec_skipgram.pkl","wb") as f:
    with open("作业-skipgram\\word2vec_skipgram.pkl", "wb") as f:
        pickle.dump([w1, word_2_index, index_2_word, w2], f)
```
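The script keeps a commented-out `negative_sampling` helper that draws negatives in proportion to count^0.75. A minimal sketch of how that distribution would feed a skip-gram-with-negative-sampling update, assuming the same `w1` (V×E) / `w2` (E×V) layout. `negative_sampling_probs`, `sgns_loss` and `sgns_step` are hypothetical names, the toy weights and fixed negatives are made up, and this swaps the full softmax for a per-word logistic loss rather than reproducing the script above.

```python
from collections import Counter

import numpy as np


def negative_sampling_probs(tokens, word_2_index):
    """P(w) proportional to count(w) ** 0.75, in the order of word_2_index."""
    counts = Counter(tokens)
    probs = np.array([counts[w] ** 0.75 for w in word_2_index], dtype=float)
    return probs / probs.sum()


def _sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def sgns_loss(w1, w2, centre, context, negatives):
    """-log sigma(h . u_context) - sum_n log sigma(-h . u_n)"""
    h = w1[centre]
    return (-np.log(_sigmoid(h @ w2[:, context]))
            - np.sum(np.log(_sigmoid(-(h @ w2[:, negatives])))))


def sgns_step(w1, w2, centre, context, negatives, lr=0.01):
    """One in-place SGD step; touches one row of w1 and len(negatives) + 1 columns of w2."""
    h = w1[centre].copy()                  # (E,) centre-word vector
    cols = [context] + list(negatives)
    labels = np.zeros(len(cols))
    labels[0] = 1.0                        # 1 for the true context word, 0 for negatives
    out = w2[:, cols]                      # (E, k + 1) copy of the output vectors
    g = _sigmoid(h @ out) - labels         # dLoss/dscore for each column
    w1[centre] -= lr * (out @ g)
    for j, col in enumerate(cols):         # loop so repeated negatives accumulate
        w2[:, col] -= lr * g[j] * h


# Sampling distribution for a made-up token list
probs = negative_sampling_probs(["数学", "数学", "数学", "数学", "函数"], {"数学": 0, "函数": 1})

# A few updates on random weights with fixed negatives, for reproducibility
rng = np.random.default_rng(0)
w1 = rng.uniform(-0.5, 0.5, size=(10, 4))
w2 = rng.uniform(-0.5, 0.5, size=(4, 10))
loss_before = sgns_loss(w1, w2, 1, 2, [5, 7])
for _ in range(20):
    sgns_step(w1, w2, 1, 2, [5, 7], lr=0.1)
loss_after = sgns_loss(w1, w2, 1, 2, [5, 7])
print(probs, loss_before, loss_after)
```

The 0.75 exponent flattens the distribution: the frequent word gets about 0.74 of the probability mass instead of its raw 0.8 share.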

(2) CBOW model

```python
'''
Description:
Author: zhangyh
Date: 2024-05-13 20:47:57
LastEditTime: 2024-05-16 09:21:40
LastEditors: zhangyh
'''
import os
import pickle
import sys
from itertools import islice

import numpy as np
from tqdm import tqdm

sys.path.append(os.path.dirname(os.path.dirname(os.path.abspath(__file__))))


def load_stop_words(file="stopwords.txt"):
    with open(file, "r", encoding="utf-8") as f:
        return set(f.read().split("\n"))  # a set makes the membership test O(1)


def load_cutted_data(num_lines: int):
    stop_words = load_stop_words()
    data = []
    # with open('作业-CBOW\\wiki_word_cutted_result.text', mode='r', encoding='utf-8') as file:
    with open('wiki_word_cutted_result.text', mode='r', encoding='utf-8') as file:
        # Read only the first num_lines articles instead of the whole file
        for line in tqdm(islice(file, num_lines)):
            data += [word for word in line.split() if word not in stop_words]
    # Keep the running word order: the context windows are slices of this list
    return data


def get_dict(data):
    index_2_word = []
    word_2_index = {}
    for word in tqdm(data):
        if word not in word_2_index:
            word_2_index[word] = len(index_2_word)
            index_2_word.append(word)
    word_size = len(word_2_index)
    word_2_onehot = {}
    for word, index in tqdm(word_2_index.items()):
        one_hot = np.zeros((1, word_size))
        one_hot[0, index] = 1
        word_2_onehot[word] = one_hot
    return word_2_index, index_2_word, word_2_onehot


def softmax(x):
    # Subtract the row maximum so np.exp cannot overflow
    ex = np.exp(x - np.max(x, axis=1, keepdims=True))
    return ex / np.sum(ex, axis=1, keepdims=True)


if __name__ == "__main__":
    batch_size = 562
    data = load_cutted_data(5)
    word_2_index, index_2_word, word_2_onehot = get_dict(data)
    word_size = len(word_2_index)
    embedding_num = 100
    lr = 0.01
    epochs = 200
    context_window = 3

    batches = [data[j:j + batch_size] for j in range(0, len(data), batch_size)]
    # Random initialisation in [-1, 1)
    w1 = np.random.uniform(-1, 1, size=(word_size, embedding_num))
    w2 = np.random.uniform(-1, 1, size=(embedding_num, word_size))

    for epoch in range(epochs):
        print(f'-------- epoch {epoch + 1} --------')
        for batch in tqdm(batches):
            for i in range(len(batch)):
                target_word = batch[i]
                context_words = (batch[max(0, i - context_window): i]
                                 + batch[i + 1: min(len(batch), i + context_window + 1)])
                if not context_words:  # a batch of length 1 has no context
                    continue
                # Input: the average of the context words' one-hot vectors, shape (1, V)
                context_vectors = np.mean([word_2_onehot[w] for w in context_words], axis=0)
                # Forward pass to the output layer
                hidden = context_vectors @ w1   # (1, E)
                pre = softmax(hidden @ w2)      # (1, V): predicted centre-word distribution
                # Cross-entropy loss: loss = -np.log(pre[0, word_2_index[target_word]])
                # Backpropagation and parameter update
                G2 = pre - word_2_onehot[target_word]
                delta_w2 = hidden.T @ G2
                G1 = G2 @ w2.T
                delta_w1 = context_vectors.T @ G1
                w1 -= lr * delta_w1
                w2 -= lr * delta_w2

    # with open("作业-CBOW\\word2vec_cbow.pkl","wb") as f:
    with open("word2vec_cbow.pkl", "wb") as f:
        pickle.dump([w1, word_2_index, index_2_word, w2], f)
```
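In CBOW the hidden layer is the average of the context words' one-hot vectors multiplied by `w1`, which is the same as averaging the corresponding rows of `w1`. The sketch below checks that equivalence on random data and shows that `delta_w1` only touches the context rows; the sizes, indices and the random `G1` are arbitrary stand-ins. This is why larger implementations usually index rows directly instead of building one-hot vectors.

```python
import numpy as np

rng = np.random.default_rng(0)
V, E = 8, 5                          # toy vocabulary and embedding sizes
w1 = rng.normal(size=(V, E))
context_ids = [1, 4, 6]              # indices of the (distinct) context words

# As in the script: mean of the one-hot rows, then a matrix product with w1
context_vectors = np.eye(V)[context_ids].mean(axis=0, keepdims=True)   # (1, V)
hidden_matmul = context_vectors @ w1                                   # (1, E)

# Equivalent embedding lookup: average the selected rows of w1 directly
hidden_lookup = w1[context_ids].mean(axis=0, keepdims=True)            # (1, E)

# The weight gradient is zero except in the context rows,
# each of which receives G1 / len(context_ids)
G1 = rng.normal(size=(1, E))         # stands in for G2 @ w2.T
delta_w1 = context_vectors.T @ G1    # (V, E)

print(np.allclose(hidden_matmul, hidden_lookup))
print(np.flatnonzero(np.abs(delta_w1).sum(axis=1)))  # rows that receive an update
```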

4. Training results

(1) Cosine similarity

```python
'''
Description:
Author: zhangyh
Date: 2024-05-13 20:12:56
LastEditTime: 2024-05-16 21:16:19
LastEditors: zhangyh
'''
import pickle

import numpy as np

# with open('word2vec_cbow.pkl', 'rb') as f:
with open('作业-CBOW\\word2vec_cbow.pkl', 'rb') as f:
    w1, voc_index, index_voc, w2 = pickle.load(f)


def word_voc(word):
    return w1[voc_index[word]]


def voc_sim(word, top_n):
    v_w1 = word_voc(word)
    word_sim = {}
    for i in range(len(voc_index)):
        v_w2 = w1[i]
        # cos(theta) = (a . b) / (|a| |b|)
        theta_sum = np.dot(v_w1, v_w2)
        theta_den = np.linalg.norm(v_w1) * np.linalg.norm(v_w2)
        word_sim[index_voc[i]] = theta_sum / theta_den
    words_sorted = sorted(word_sim.items(), key=lambda kv: kv[1], reverse=True)
    for other, sim in words_sorted[:top_n]:
        # print(f'word: {other}, similarity: {sim}, vector: {w1[voc_index[other]]}')
        print(f'word: {other}, similarity: {sim}')


voc_sim('学院', 20)
```

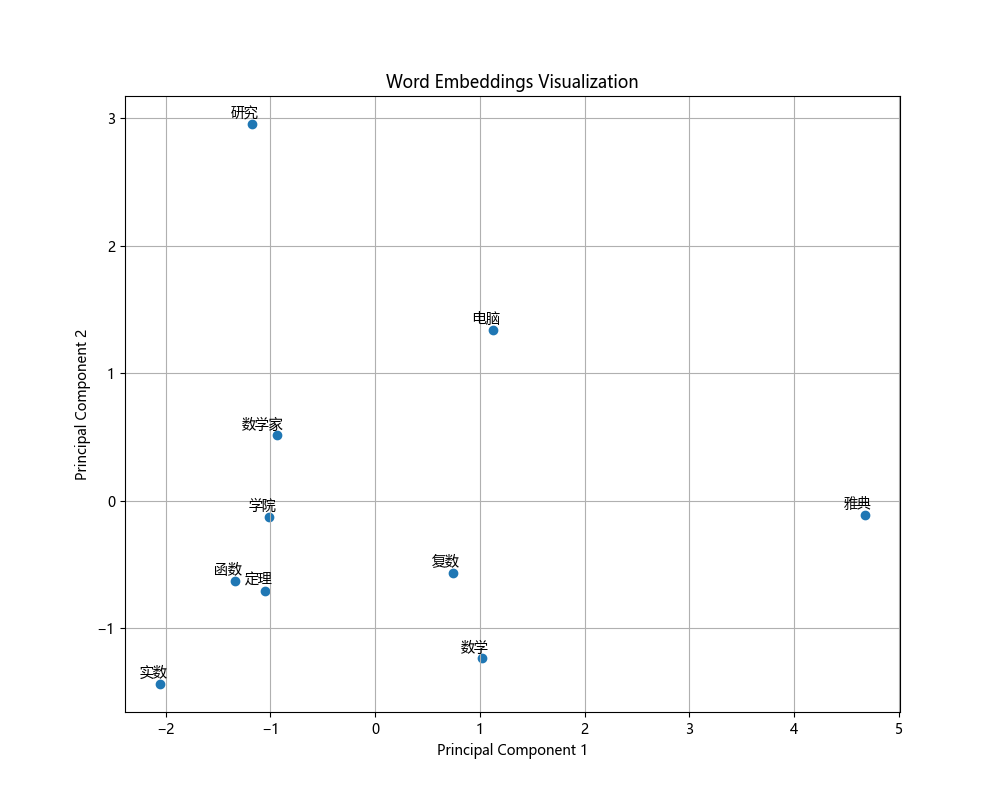
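`voc_sim` computes the cosine one word at a time in a Python loop. A vectorised sketch of the same ranking, assuming the `w1` / `word_2_index` / `index_2_word` layout stored in the pickle; `top_similar` is a hypothetical name and the 2-D vectors are toy values. It also skips the query word, which otherwise always ranks first with cosine 1.

```python
import numpy as np


def top_similar(w1, word_2_index, index_2_word, word, top_n=5):
    """Return the top_n (word, cosine) pairs for `word`, excluding the word itself."""
    norms = np.linalg.norm(w1, axis=1, keepdims=True)
    unit = w1 / np.maximum(norms, 1e-12)      # row-normalise; guard against zero rows
    sims = unit @ unit[word_2_index[word]]    # (V,) cosines in a single matrix product
    sims[word_2_index[word]] = -np.inf        # drop the query word itself
    best = np.argsort(-sims)[:top_n]
    return [(index_2_word[i], float(sims[i])) for i in best]


# Toy 2-D vectors, made up for illustration
index_2_word = ["数学", "数学家", "雅典"]
word_2_index = {w: i for i, w in enumerate(index_2_word)}
w1 = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]])
result = top_similar(w1, word_2_index, index_2_word, "数学", top_n=2)
print(result)
```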

(2) Visualization

```python
'''
Description:
Author: zhangyh
Date: 2024-05-16 21:41:33
LastEditTime: 2024-05-17 23:50:07
LastEditors: zhangyh
'''
import pickle

import matplotlib.pyplot as plt
import numpy as np
from sklearn.decomposition import PCA

# Fonts that can render the Chinese labels
plt.rcParams['font.family'] = ['Microsoft YaHei', 'SimHei', 'sans-serif']

# Load trained word embeddings
with open("word2vec_cbow.pkl", "rb") as f:
    w1, word_2_index, index_2_word, w2 = pickle.load(f)

# Select specific words for visualization
visual_words = ['研究', '电脑', '雅典', '数学', '数学家', '学院', '函数', '定理', '实数', '复数']

# Get the word vectors corresponding to the selected words
subset_vectors = np.array([w1[word_2_index[word]] for word in visual_words])

# Perform PCA for dimensionality reduction
pca = PCA(n_components=2)
reduced_vectors = pca.fit_transform(subset_vectors)

# Visualization
plt.figure(figsize=(10, 8))
plt.scatter(reduced_vectors[:, 0], reduced_vectors[:, 1], marker='o')
for i, word in enumerate(visual_words):
    plt.annotate(word, xy=(reduced_vectors[i, 0], reduced_vectors[i, 1]), xytext=(5, 2),
                 textcoords='offset points', ha='right', va='bottom')
plt.title('Word Embeddings Visualization')
plt.xlabel('Principal Component 1')
plt.ylabel('Principal Component 2')
plt.grid(True)
plt.show()
```
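sklearn's `PCA(n_components=2)` centres the vectors and projects them onto the two directions of largest variance, obtained from a singular value decomposition. A NumPy-only sketch of that projection, where `pca_2d` is a hypothetical name and random vectors stand in for the 10 selected 100-dimensional word vectors; its axes may differ in sign from sklearn's output.

```python
import numpy as np


def pca_2d(x):
    """Project the rows of x onto their top-2 principal components (centre + SVD)."""
    centred = x - x.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing singular value
    _, s, vt = np.linalg.svd(centred, full_matrices=False)
    return centred @ vt[:2].T, s


rng = np.random.default_rng(0)
vectors = rng.normal(size=(10, 100))   # stand-in for the selected word vectors
reduced, s = pca_2d(vectors)
print(reduced.shape)                   # (10, 2)
```

Each projected column has squared length equal to the corresponding squared singular value, so the first axis always carries at least as much variance as the second.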

(3) Analogy experiment (e.g. 王子 - 男 + 女 = 公主, i.e. prince - man + woman = princess)

```python
'''
Description:
Author: zhangyh
Date: 2024-05-16 23:13:21
LastEditTime: 2024-05-19 11:51:53
LastEditors: zhangyh
'''
import pickle

import numpy as np
from sklearn.metrics.pairwise import cosine_similarity

# Load the trained word vectors
with open("word2vec_cbow.pkl", "rb") as f:
    w1, word_2_index, index_2_word, w2 = pickle.load(f)

# Compute the analogy vector: 王子 - 男 + 女
query_words = ["王子", "男", "女"]
v_prince, v_man, v_woman = (w1[word_2_index[w]] for w in query_words)
v_princess = v_prince - v_man + v_woman

# Find the closest word vector, skipping the three query words themselves
similarities = cosine_similarity(v_princess.reshape(1, -1), w1)[0]
for w in query_words:
    similarities[word_2_index[w]] = -np.inf
most_similar_word = index_2_word[int(np.argmax(similarities))]
print("Result:", most_similar_word)
```
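The same analogy search as a reusable function, checked on hand-made 3-dimensional vectors where the answer is known by construction (they are not trained embeddings; `analogy` is a hypothetical name). Excluding the three query words follows the usual convention for analogy queries, since the nearest vector is otherwise often one of the inputs.

```python
import numpy as np


def analogy(w1, word_2_index, index_2_word, a, b, c):
    """Return the word whose vector has the highest cosine with v(a) - v(b) + v(c),
    skipping a, b and c themselves."""
    unit = w1 / np.linalg.norm(w1, axis=1, keepdims=True)
    target = w1[word_2_index[a]] - w1[word_2_index[b]] + w1[word_2_index[c]]
    sims = unit @ (target / np.linalg.norm(target))
    for w in (a, b, c):
        sims[word_2_index[w]] = -np.inf
    return index_2_word[int(np.argmax(sims))]


# Hand-made vectors: axis 0 ~ "royal", axis 1 ~ "male", axis 2 ~ "female"
index_2_word = ["王子", "公主", "男", "女", "雅典"]
word_2_index = {w: i for i, w in enumerate(index_2_word)}
w1 = np.array([
    [1.0, 1.0, 0.0],   # 王子
    [1.0, 0.0, 1.0],   # 公主
    [0.0, 1.0, 0.0],   # 男
    [0.0, 0.0, 1.0],   # 女
    [0.2, 0.5, 0.5],   # 雅典
])
answer = analogy(w1, word_2_index, index_2_word, "王子", "男", "女")
print(answer)
```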


linux文件查找详解 目录 linux文件查找详解 1.linux文件查找工具 1.1 find命令详解 1.1.1 根据文件名查找 1.1.2 根据属主属组查找 1.1.3 根据文件类型查找 1. ...