Fundamentals of Stream Computing

Spark programs are structured around RDDs: they read data from stable storage into the RDD format, perform a number of computations and data transformations on the RDD, and then either write the resulting RDD to stable storage or collect it to the driver. Thus, most of the power of Spark comes from its transformations: operations that are defined on RDDs and return RDDs.
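The read, transform, and collect cycle described above can be sketched in plain Python. This is only an illustration under stated assumptions: `MiniRDD` and its methods are hypothetical names for a single-machine stand-in, not the Spark API, and the "stable storage" is just an in-memory list.

```python
from typing import Callable, Iterable, List


class MiniRDD:
    """Hypothetical single-machine stand-in for an RDD (not the Spark API)."""

    def __init__(self, source: Callable[[], Iterable]):
        # The source is a function, so nothing is read until an action runs.
        self._source = source

    @classmethod
    def from_lines(cls, lines: List[str]) -> "MiniRDD":
        # Stands in for reading data from stable storage.
        return cls(lambda: iter(lines))

    def map(self, fn: Callable) -> "MiniRDD":
        # Transformation: defined on a MiniRDD and returns a new MiniRDD.
        return MiniRDD(lambda: (fn(x) for x in self._source()))

    def filter(self, pred: Callable) -> "MiniRDD":
        # Transformation: keeps only the elements for which pred is true.
        return MiniRDD(lambda: (x for x in self._source() if pred(x)))

    def collect(self) -> list:
        # Action: runs the whole chain and hands the result to the "driver".
        return list(self._source())


lines = MiniRDD.from_lines(["3", "0", "7", "0", "5"])
result = lines.map(int).filter(lambda n: n != 0).map(lambda n: n * 2).collect()
print(result)  # [6, 14, 10]
```

Each transformation returns a new object and does no work on its own; only `collect` triggers the computation, which is the property that lets a real engine plan and distribute the chain.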

1. A core underlying layer is needed as the foundation.

2. That layer exposes an API to the higher levels.

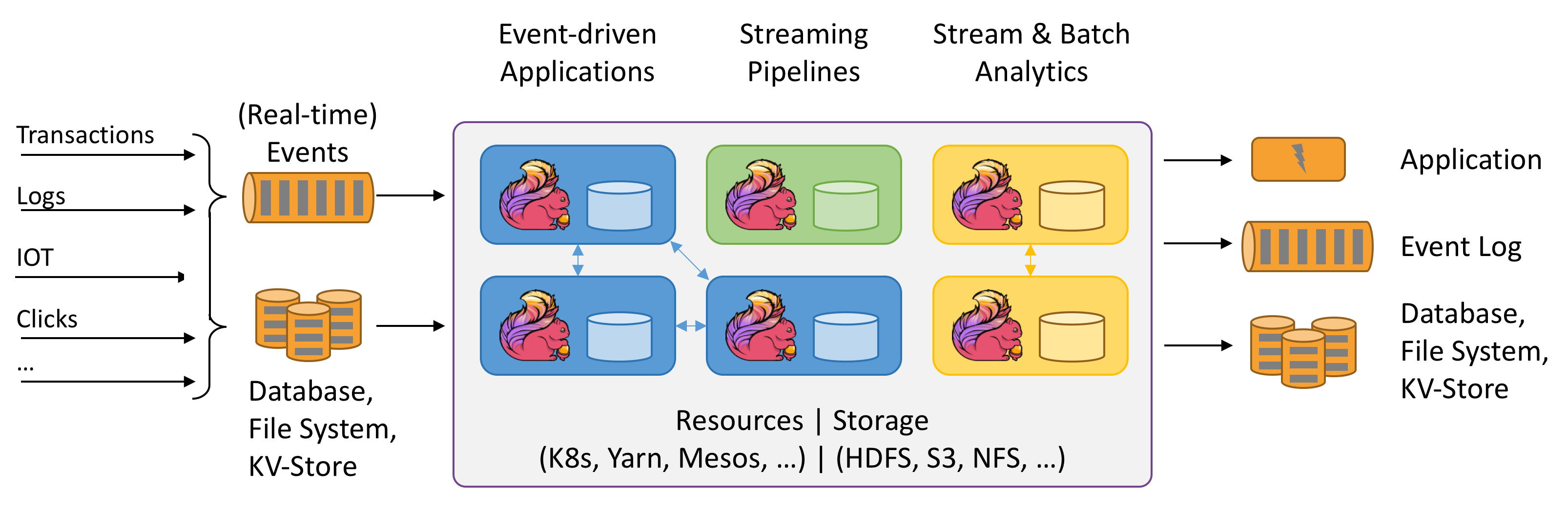

3. Stream computing = core underlying API + distributed RPC + computing templates + a cluster of executors.

4. What is computed, the order of computation, and the definition of the (K, V) pairs are entirely in the hands of users, who express them through the computing templates they define.

5. Distributed computing can therefore be seen as a platform that provides computing templates for operating on user data that is split and distributed across a cluster.

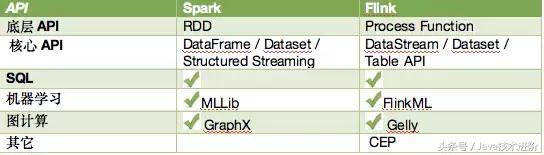

6. Machine learning libraries, big data SQL engines, and similar systems use these stream APIs to do their jobs.

7. Remember that stream computing is a platform, or runtime, for operating on distributed data through computing templates (the transformation API).

8. Stream computing and an operating system have a lot in common: both provide an API, for operations on data in the stream case and on hardware in the OS case.

9. The stream computing runtime offers an API of computing templates (generic computations); the OS offers an API of resource operations on PC hardware.
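The split between platform and user in the points above can be illustrated with a small sketch. Everything here is an assumption made for illustration: `run_template`, `to_kv`, `combine`, and `num_executors` are hypothetical names, and the "cluster" is simulated with in-process lists. The user only supplies the definition of (K, V) and the computation; the template decides how data is partitioned and merged.

```python
from typing import Callable, Dict, Hashable, Iterable, List, Tuple


def run_template(
    records: Iterable,
    to_kv: Callable[[object], Tuple[Hashable, object]],
    combine: Callable[[object, object], object],
    num_executors: int = 3,
) -> Dict[Hashable, object]:
    """Hypothetical computing template: the platform side of the contract."""
    # Platform side: split the (K, V) pairs across executors by key hash,
    # so that all pairs with the same key land on the same executor.
    partitions: List[list] = [[] for _ in range(num_executors)]
    for record in records:
        key, value = to_kv(record)
        partitions[hash(key) % num_executors].append((key, value))

    # Platform side: each executor combines the values of the keys it owns.
    merged: Dict[Hashable, object] = {}
    for partition in partitions:
        for key, value in partition:
            merged[key] = combine(merged[key], value) if key in merged else value
    return merged


# User side: define what (K, V) means and what is computed (a word count).
words = ["a", "b", "a", "c", "b", "a"]
counts = run_template(words, to_kv=lambda w: (w, 1), combine=lambda x, y: x + y)
print(sorted(counts.items()))  # [('a', 3), ('b', 2), ('c', 1)]
```

Swapping in a different `to_kv` and `combine` changes the job without touching the partitioning logic, which is the sense in which the template, rather than the user code, belongs to the platform.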

Operators transform one or more DataStreams into a new DataStream. Programs can combine multiple transformations into sophisticated dataflow topologies.
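Combining transformations into a dataflow can be sketched with Python generators. This is a toy model, not the DataStream API: `map_op`, `flat_map_op`, and `filter_op` are hypothetical helpers, and a "stream" is simply any iterator.

```python
from typing import Callable, Iterable, Iterator


def map_op(fn: Callable, stream: Iterable) -> Iterator:
    # One element in, exactly one element out.
    for element in stream:
        yield fn(element)


def flat_map_op(fn: Callable, stream: Iterable) -> Iterator:
    # One element in, zero or more elements out.
    for element in stream:
        yield from fn(element)


def filter_op(pred: Callable, stream: Iterable) -> Iterator:
    # Keeps only the elements for which the predicate returns true.
    for element in stream:
        if pred(element):
            yield element


# A small topology: split lines into tokens, parse them,
# drop zero values, then double what is left.
lines = iter(["1 0 2", "0 3"])
tokens = flat_map_op(str.split, lines)
numbers = map_op(int, tokens)
non_zero = filter_op(lambda n: n != 0, numbers)
doubled = map_op(lambda n: n * 2, non_zero)

output = list(doubled)
print(output)  # [2, 4, 6]
```

Each operator consumes a stream and returns a new one, so elements flow through the whole chain one at a time and no intermediate collection is built.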

| Transformation | Description |
|---|---|
| Map (DataStream → DataStream) | Takes one element and produces one element. For example, a map function can double the values of the input stream. |
| FlatMap (DataStream → DataStream) | Takes one element and produces zero, one, or more elements. For example, a flatmap function can split sentences into words. |
| Filter (DataStream → DataStream) | Evaluates a boolean function for each element and retains those for which the function returns true. For example, a filter can drop zero values. |
| KeyBy (DataStream → KeyedStream) | Logically partitions a stream into disjoint partitions. All records with the same key are assigned to the same partition. Internally, keyBy() is implemented with hash partitioning. There are different ways to specify keys. This transformation returns a KeyedStream, which is, among other things, required to use keyed state. Note that some types cannot be used as keys. |
| Reduce (KeyedStream → DataStream) | A "rolling" reduce on a keyed data stream. Combines the current element with the last reduced value and emits the new value. For example, a reduce function can create a stream of partial sums. |
| Fold (KeyedStream → DataStream) | A "rolling" fold on a keyed data stream with an initial value. Combines the current element with the last folded value and emits the new value. For example, a fold function applied on the sequence (1,2,3,4,5) emits the sequence "start-1", "start-1-2", "start-1-2-3", ... |
| Aggregations (KeyedStream → DataStream) | Rolling aggregations on a keyed data stream. The difference between min and minBy is that min returns the minimum value, whereas minBy returns the element that has the minimum value in this field (same for max and maxBy). |
| Window (KeyedStream → WindowedStream) | Windows can be defined on already partitioned KeyedStreams. Windows group the data in each key according to some characteristic (e.g., the data that arrived within the last 5 seconds). See the windows documentation for a complete description. |
| WindowAll (DataStream → AllWindowedStream) | Windows can be defined on regular DataStreams. Windows group all the stream events according to some characteristic (e.g., the data that arrived within the last 5 seconds). See the windows documentation for a complete description. WARNING: This is in many cases a non-parallel transformation. All records will be gathered in one task for the windowAll operator. |
| Window Apply (WindowedStream → DataStream, AllWindowedStream → DataStream) | Applies a general function to the window as a whole. For example, a window function can manually sum the elements of a window. Note: If you are using a windowAll transformation, you need to use an AllWindowFunction instead. |
| Window Reduce (WindowedStream → DataStream) | Applies a functional reduce function to the window and returns the reduced value. |
| Window Fold (WindowedStream → DataStream) | Applies a functional fold function to the window and returns the folded value. For example, a fold function applied on the sequence (1,2,3,4,5) folds it into the string "start-1-2-3-4-5". |
| Aggregations on windows (WindowedStream → DataStream) | Aggregates the contents of a window. The difference between min and minBy is that min returns the minimum value, whereas minBy returns the element that has the minimum value in this field (same for max and maxBy). |
| Union (DataStream* → DataStream) | Union of two or more data streams, creating a new stream containing all the elements from all the streams. Note: If you union a data stream with itself, you will get each element twice in the resulting stream. |
| Window Join (DataStream, DataStream → DataStream) | Joins two data streams on a given key and a common window. |
| Interval Join (KeyedStream, KeyedStream → DataStream) | Joins two elements e1 and e2 of two keyed streams with a common key over a given time interval, so that e1.timestamp + lowerBound <= e2.timestamp <= e1.timestamp + upperBound. |
| Window CoGroup (DataStream, DataStream → DataStream) | Cogroups two data streams on a given key and a common window. |
| Connect (DataStream, DataStream → ConnectedStreams) | "Connects" two data streams while retaining their types, allowing for shared state between the two streams. |
| CoMap, CoFlatMap (ConnectedStreams → DataStream) | Similar to map and flatMap, but applied on a connected data stream. |
| Split (DataStream → SplitStream) | Splits the stream into two or more streams according to some criterion. |
| Select (SplitStream → DataStream) | Selects one or more streams from a split stream. |
| Iterate (DataStream → IterativeStream → DataStream) | Creates a "feedback" loop in the flow by redirecting the output of one operator to some previous operator. This is especially useful for defining algorithms that continuously update a model. For example, an iteration can start with a stream and apply the iteration body continuously: elements greater than 0 are sent back through the feedback channel, and the remaining elements are forwarded downstream. See the iterations documentation for a complete description. |
| Extract Timestamps (DataStream → DataStream) | Extracts timestamps from records in order to work with windows that use event time semantics. See Event Time. |
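The keyed operators in the table are easier to follow with a concrete model. The sketch below imitates the described semantics of a rolling reduce, a rolling fold, and the interval join condition in plain Python. It is not the DataStream API: all function names are hypothetical, the keyed state is an ordinary dictionary, and the join runs over finite lists rather than unbounded streams.

```python
from typing import Callable, Hashable, Iterable, Iterator, List, Tuple


def rolling_reduce(stream: Iterable, key_fn: Callable, reduce_fn: Callable) -> Iterator:
    """Per key, combine each element with the last reduced value and emit it."""
    state = {}  # stands in for keyed state: one reduced value per key
    for element in stream:
        key = key_fn(element)
        state[key] = reduce_fn(state[key], element) if key in state else element
        yield state[key]


def rolling_fold(stream: Iterable, key_fn: Callable, initial, fold_fn: Callable) -> Iterator:
    """Like rolling_reduce, but every key starts from an initial value."""
    state = {}
    for element in stream:
        key = key_fn(element)
        state[key] = fold_fn(state.get(key, initial), element)
        yield state[key]


Event = Tuple[Hashable, int, str]  # (key, timestamp, payload), an assumed layout


def interval_join(left: List[Event], right: List[Event],
                  lower_bound: int, upper_bound: int) -> List[Tuple[Event, Event]]:
    """Pair e1 and e2 with equal keys when
    e1.timestamp + lower_bound <= e2.timestamp <= e1.timestamp + upper_bound."""
    return [
        (e1, e2)
        for e1 in left
        for e2 in right
        if e1[0] == e2[0] and e1[1] + lower_bound <= e2[1] <= e1[1] + upper_bound
    ]


# Rolling reduce: a stream of partial sums per key.
events = [("a", 1), ("b", 10), ("a", 2), ("a", 3), ("b", 20)]
sums = list(rolling_reduce(events, lambda e: e[0], lambda acc, e: (acc[0], acc[1] + e[1])))
print(sums)  # [('a', 1), ('b', 10), ('a', 3), ('a', 6), ('b', 30)]

# Rolling fold on (1,2,3,4,5) with a single key and the initial value "start".
folded = list(rolling_fold([1, 2, 3, 4, 5], lambda _: "k", "start", lambda acc, x: f"{acc}-{x}"))
print(folded[:3])  # ['start-1', 'start-1-2', 'start-1-2-3']

# Interval join: e2 must fall within [e1.timestamp - 2, e1.timestamp + 3].
left = [("k", 10, "L1")]
right = [("k", 8, "R1"), ("k", 12, "R2"), ("k", 20, "R3"), ("j", 11, "R4")]
pairs = interval_join(left, right, lower_bound=-2, upper_bound=3)
print([e2[2] for _, e2 in pairs])  # ['R1', 'R2']
```

The dictionary makes the role of keyBy visible: state is looked up by key, so a rolling operator can only run once the stream has been partitioned by that key.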
