spark Mllib SVM实例

Mllib SVM实例

1、数据

数据格式为:标签, 特征1 特征2 特征3……

0 128:51 129:159 130:253 131:159 132:50 155:48 156:238 157:252 158:252 159:252 160:237 182:54 183:227 184:253 185:252 186:239 187:233 188:252 189:57 190:6 208:10 209:60 210:224 211:252 212:253 213:252 214:202 215:84 216:252 217:253 218:122 236:163 237:252 238:252 239:252 240:253 241:252 242:252 243:96 244:189 245:253 246:167 263:51 264:238 265:253 266:253 267:190 268:114 269:253 270:228 271:47 272:79 273:255 274:168 290:48 291:238 292:252 293:252 294:179 295:12 296:75 297:121 298:21 301:253 302:243 303:50 317:38 318:165 319:253 320:233 321:208 322:84 329:253 330:252 331:165 344:7 345:178 346:252 347:240 348:71 349:19 350:28 357:253 358:252 359:195 372:57 373:252 374:252 375:63 385:253 386:252 387:195 400:198 401:253 402:190 413:255 414:253 415:196 427:76 428:246 429:252 430:112 441:253 442:252 443:148 455:85 456:252 457:230 458:25 467:7 468:135 469:253 470:186 471:12 483:85 484:252 485:223 494:7 495:131 496:252 497:225 498:71 511:85 512:252 513:145 521:48 522:165 523:252 524:173 539:86 540:253 541:225 548:114 549:238 550:253 551:162 567:85 568:252 569:249 570:146 571:48 572:29 573:85 574:178 575:225 576:253 577:223 578:167 579:56 595:85 596:252 597:252 598:252 599:229 600:215 601:252 602:252 603:252 604:196 605:130 623:28 624:199 625:252 626:252 627:253 628:252 629:252 630:233 631:145 652:25 653:128 654:252 655:253 656:252 657:141 658:37

1 159:124 160:253 161:255 162:63 186:96 187:244 188:251 189:253 190:62 214:127 215:251 216:251 217:253 218:62 241:68 242:236 243:251 244:211 245:31 246:8 268:60 269:228 270:251 271:251 272:94 296:155 297:253 298:253 299:189 323:20 324:253 325:251 326:235 327:66 350:32 351:205 352:253 353:251 354:126 378:104 379:251 380:253 381:184 382:15 405:80 406:240 407:251 408:193 409:23 432:32 433:253 434:253 435:253 436:159 460:151 461:251 462:251 463:251 464:39 487:48 488:221 489:251 490:251 491:172 515:234 516:251 517:251 518:196 519:12 543:253 544:251 545:251 546:89 570:159 571:255 572:253 573:253 574:31 597:48 598:228 599:253 600:247 601:140 602:8 625:64 626:251 627:253 628:220 653:64 654:251 655:253 656:220 681:24 682:193 683:253 684:220

……

2、代码

//1 读取样本数据 val data_path = "/user/tmp/sample_libsvm_data.txt" val examples = MLUtils.loadLibSVMFile(sc, data_path).cache() //2 样本数据划分训练样本与测试样本 val splits = examples.randomSplit(Array(0.6, 0.4), seed = 11L) val training = splits(0).cache() val test = splits(1) val numTraining = training.count() val numTest = test.count() println(s"Training: $numTraining, test: $numTest.") //3 新建SVM模型,并设置训练参数 val numIterations = 1000 val stepSize = 1 val miniBatchFraction = 1.0 val model = SVMWithSGD.train(training, numIterations, stepSize, miniBatchFraction)

//4 对测试样本进行测试 val prediction = model.predict(test.map(_.features)) val predictionAndLabel = prediction.zip(test.map(_.label)) //5 计算测试误差 val metrics = new MulticlassMetrics(predictionAndLabel) val precision = metrics.precision println("Precision = " + precision)

------------------------------------我叫分隔线------------------------------------

The following code snippet illustrates how to load a sample dataset, execute a training algorithm on this training data using a static method in the algorithm object, and make predictions with the resulting model to compute the training error.

import org.apache.spark.SparkContext

import org.apache.spark.mllib.classification.{SVMModel, SVMWithSGD}

import org.apache.spark.mllib.evaluation.BinaryClassificationMetrics

import org.apache.spark.mllib.regression.LabeledPoint

import org.apache.spark.mllib.linalg.Vectors

import org.apache.spark.mllib.util.MLUtils // Load training data in LIBSVM format.

val data = MLUtils.loadLibSVMFile(sc, "data/mllib/sample_libsvm_data.txt") // Split data into training (60%) and test (40%).

val splits = data.randomSplit(Array(0.6, 0.4), seed = 11L)

val training = splits(0).cache()

val test = splits(1) // Run training algorithm to build the model

val numIterations = 100

val model = SVMWithSGD.train(training, numIterations) // Clear the default threshold.

model.clearThreshold() // Compute raw scores on the test set.

val scoreAndLabels = test.map { point =>

val score = model.predict(point.features)

(score, point.label)

} // Get evaluation metrics.

val metrics = new BinaryClassificationMetrics(scoreAndLabels)

val auROC = metrics.areaUnderROC() println("Area under ROC = " + auROC) // Save and load model

model.save(sc, "myModelPath")

val sameModel = SVMModel.load(sc, "myModelPath")

The SVMWithSGD.train() method by default performs L2 regularization with the regularization parameter set to 1.0. If we want to configure this algorithm, we can customize SVMWithSGD further by creating a new object directly and calling setter methods. All other MLlib algorithms support customization in this way as well. For example, the following code produces an L1 regularized variant of SVMs with regularization parameter set to 0.1, and runs the training algorithm for 200 iterations.

import org.apache.spark.mllib.optimization.L1Updater val svmAlg = new SVMWithSGD()

svmAlg.optimizer.

setNumIterations(200).

setRegParam(0.1).

setUpdater(new L1Updater)

val modelL1 = svmAlg.run(training)

------------------------------------我叫分隔线------------------------------------

1.数据

0 2.857738033247042 0 2.061393766919624 2.619965104088255 0 2.004684436494304 2.000347299268466 2.122974378789621 2.228387042742021 2.228387042742023 0 0 0 0 12.72816758217773 0

1 2.857738033247042 0 2.061393766919624 2.619965104088255 0 2.004684436494304 2.000347299268466 2.122974378789621 2.228387042742021 2.228387042742023 0 0 12.72816758217773 0 0 0

1 2.857738033247042 2.52078447201548 0 2.619965104088255 0 2.004684436494304 0 2.122974378789621 0 0 0 0 0 0 0 0

2.代码

import org.apache.spark.SparkContext

import org.apache.spark.mllib.classification.SVMWithSGD

import org.apache.spark.mllib.linalg.Vectors

import org.apache.spark.mllib.regression.LabeledPoint

// Load and parse the data file

val data = sc.textFile("mllib/data/sample_svm_data.txt")

val parsedData = data.map { line =>

val parts = line.split(' ')

LabeledPoint(parts(0).toDouble, Vectors.dense(parts.tail.map(x => x.toDouble).toArray))

}

// Run training algorithm to build the model

val numIterations = 20

val model = SVMWithSGD.train(parsedData, numIterations)

// Evaluate model on training examples and compute training error

val labelAndPreds = parsedData.map { point =>

val prediction = model.predict(point.features)

(point.label, prediction) }

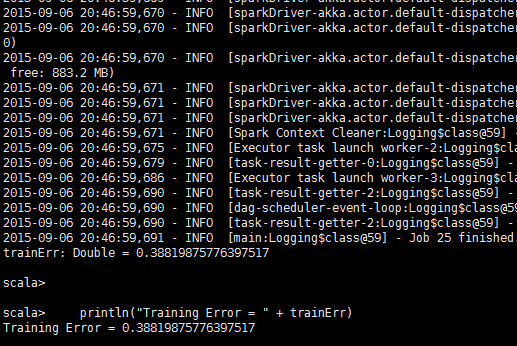

val trainErr = labelAndPreds.filter( r =>

r._1 != r._2).count.toDouble / parsedData.count

println("Training Error = " + trainErr) file:///home/hadoop/suanec/suae/workspace/data/mllib/sample_svm_data.txt

spark Mllib SVM实例的更多相关文章

- 梯度迭代树(GBDT)算法原理及Spark MLlib调用实例(Scala/Java/python)

梯度迭代树(GBDT)算法原理及Spark MLlib调用实例(Scala/Java/python) http://blog.csdn.net/liulingyuan6/article/details ...

- 三种文本特征提取(TF-IDF/Word2Vec/CountVectorizer)及Spark MLlib调用实例(Scala/Java/python)

https://blog.csdn.net/liulingyuan6/article/details/53390949

- Spark MLlib回归算法------线性回归、逻辑回归、SVM和ALS

Spark MLlib回归算法------线性回归.逻辑回归.SVM和ALS 1.线性回归: (1)模型的建立: 回归正则化方法(Lasso,Ridge和ElasticNet)在高维和数据集变量之间多 ...

- spark Mllib基本功系列编程入门之 SVM实现分类

话不多说.直接上代码咯.欢迎交流. /** * Created by whuscalaman on 1/7/16. */import org.apache.spark.{SparkConf, Spar ...

- Spark入门实战系列--8.Spark MLlib(下)--机器学习库SparkMLlib实战

[注]该系列文章以及使用到安装包/测试数据 可以在<倾情大奉送--Spark入门实战系列>获取 .MLlib实例 1.1 聚类实例 1.1.1 算法说明 聚类(Cluster analys ...

- Spark MLlib 机器学习

本章导读 机器学习(machine learning, ML)是一门涉及概率论.统计学.逼近论.凸分析.算法复杂度理论等多领域的交叉学科.ML专注于研究计算机模拟或实现人类的学习行为,以获取新知识.新 ...

- Spark MLlib(下)--机器学习库SparkMLlib实战

1.MLlib实例 1.1 聚类实例 1.1.1 算法说明 聚类(Cluster analysis)有时也被翻译为簇类,其核心任务是:将一组目标object划分为若干个簇,每个簇之间的object尽可 ...

- Spark MLlib聚类KMeans

算法说明 聚类(Cluster analysis)有时也被翻译为簇类,其核心任务是:将一组目标object划分为若干个簇,每个簇之间的object尽可能相似,簇与簇之间的object尽可能相异.聚类算 ...

- 《Spark MLlib机器学习实践》内容简介、目录

http://product.dangdang.com/23829918.html Spark作为新兴的.应用范围最为广泛的大数据处理开源框架引起了广泛的关注,它吸引了大量程序设计和开发人员进行相 ...

随机推荐

- js指定标签的id只能添加不能删除

<body> <form id="form1" runat="server"> <div> <input id=&qu ...

- ecshop设置一个子类对应多个父类并指定跳转url的修改方法

这是一篇记录在日记里面的技术文档,其实是对ecshop的二次开发.主要作用是将一个子类对应多个父类,并指定条跳转url的功能.ecshop是一款在线购物网站,感兴趣的可以下载源码看看.我们看看具体是怎 ...

- iOS block 声明时和定义时的不同格式

今天写程序时,在实现一个block时总提示格式错误,对比api的block参数格式,没发现错误.后来查阅了资料,发现这两个格式是不同的! 具体格式见下方 NSString * (^testBlock) ...

- iOS 和 Android 中的后台运行问题

后台机制的不同,算是iOS 和 Android的一大区别了,最近发布的iOS7又对后台处理做了一定的更改,找时间总结一下编码上的区别,先做个记录. 先看看iOS的把,首先需要仔细阅读一下Apple的官 ...

- Jpush推送模块

此文章已于 14:17:10 2015/3/24 重新发布到 鲸歌 Jpush推送模块 或以上版本的手机系统. SDK集成步骤 .导入 SDK 开发包到你自己的应用程序项目 • 解压 ...

- Codeforces Round #321 (Div. 2)C(tree dfs)

题意:给出一棵树,共有n个节点,其中根节点是Kefa的家,叶子是restaurant,a[i]....a[n]表示i节点是否有猫,问:Kefa要去restaurant并且不能连续经过m个有猫的节点有多 ...

- Hibernate常见问题

问题1,hql条件查询报错 执行Query session.createQuery(hql) 报错误直接跳到finally 解决方案 加入 <prop key="hibernate.q ...

- hrbustoj 1179:下山(DFS+剪枝)

下山Time Limit: 1000 MS Memory Limit: 65536 KTotal Submit: 271(111 users) Total Accepted: 129(101 user ...

- html5 (个人笔记)

妙味 html5 1.0 <!DOCTYPE html> <html> <head lang="en"> <meta charset=& ...

- Sql server之路 (三)添加本地数据库SDF文件

12月25日 今天搞了半天 添加本地数据库Sdf文件到项目里.总是出现问题. 安装环境 Vs2008 没有安装的环境 1.Vs2008 sp1 2. 适用于 Windows 桌面的 Microsoft ...