NEURAL NETWORKS, PART 3: THE NETWORK

We learned about individual neurons in the previous section; now it’s time to put them together to form an actual neural network.

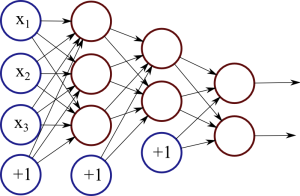

The idea is quite simple – we line multiple neurons up to form a layer, and connect the output of the first layer to the input of the next layer. Here is an illustration:

Figure 1: Neural network with two hidden layers.

Each red circle in the diagram represents a neuron, and the blue circles represent fixed values (the bias units). From left to right, there are four columns: the input layer, two hidden layers, and an output layer. The output of every neuron in one layer is fed into each of the neurons in the next layer.

We have 3 features (vector space dimensions) in the input layer that we use for learning: x1, x2 and x3. The first hidden layer has 3 neurons, the second one has 2 neurons, and the output layer has 2 output values. The size of these layers is up to you – on complex real-world problems we would use hundreds or thousands of neurons in each layer.
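To make the layer sizes concrete, here is a small sketch that counts the weights in a fully connected network like the one in Figure 1. It assumes, as in the diagram, that every layer except the output layer has one extra bias unit with a fixed value of 1, so a layer of n units feeding a layer of m neurons needs (n + 1) × m weights. The class and method names are hypothetical.

```java
// Counts the weights in a fully connected feed-forward network.
// Assumption: every non-output layer has one extra bias unit (fixed at 1.0),
// so a connection from a layer of size n to a layer of size m uses (n + 1) * m weights.
class WeightCount {

    // layerSizes lists the number of units per layer, input first and output last,
    // not counting bias units.
    static int countWeights(int[] layerSizes) {
        int total = 0;
        for (int k = 0; k + 1 < layerSizes.length; k++) {
            int from = layerSizes[k] + 1; // +1 for the bias unit
            int to = layerSizes[k + 1];
            total += from * to;
        }
        return total;
    }

    public static void main(String[] args) {
        // Figure 1: 3 inputs, hidden layers of 3 and 2 neurons, 2 outputs.
        int[] figure1 = {3, 3, 2, 2};
        // (3+1)*3 + (3+1)*2 + (2+1)*2 = 12 + 8 + 6 = 26
        System.out.println("Weights in Figure 1 network: " + countWeights(figure1));
    }
}
```

Because each term is a product of adjacent layer sizes, the number of weights grows quickly once layers contain hundreds of neurons.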

The number of neurons in the output layer depends on the task. For example, if we have a binary classification task (something is true or false), we would only have one neuron. But if we have a large number of possible classes to choose from, our network can have a separate output neuron for each class.
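A minimal sketch of how output values might be turned into a decision, assuming sigmoid outputs between 0 and 1. With a single output neuron it compares against a threshold of 0.5 (an assumption; other thresholds are possible), and with one neuron per class it picks the class whose neuron has the highest output. The names are hypothetical.

```java
// Turning output-layer values into predictions.
// Assumptions: outputs are sigmoid activations in [0, 1]; 0.5 is used as the
// binary decision threshold; for multiple classes the highest output wins.
class OutputDecision {

    // Binary task: a single output neuron.
    static int predictBinary(double output) {
        return output >= 0.5 ? 1 : 0;
    }

    // Multi-class task: one output neuron per class; returns the index of the largest value.
    static int predictClass(double[] outputs) {
        int best = 0;
        for (int c = 1; c < outputs.length; c++) {
            if (outputs[c] > outputs[best]) {
                best = c;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        System.out.println(predictBinary(0.99));                       // prints 1
        System.out.println(predictClass(new double[]{0.1, 0.7, 0.2})); // prints 1
    }
}
```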

The network in Figure 1 is a deep neural network, meaning that it has two or more hidden layers, allowing the network to learn more complicated patterns. Each neuron in the first hidden layer receives the input signals and learns some pattern or regularity. The second hidden layer, in turn, receives the patterns detected by the first layer as its input, allowing it to learn “patterns of patterns” and higher-level regularities. However, adding more layers increases complexity and can reduce the network’s ability to generalise, so finding the right network structure is important.
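The layer-by-layer structure can be sketched as a loop in which each layer’s output becomes the next layer’s input. This is a generic sketch, not the article’s implementation (which has a single hidden layer): the weight layout `weights[k][i][j]`, with row 0 holding the bias weights, is an assumption chosen to mirror how the article’s code keeps the bias at index 0, and the weights in `main` are arbitrary illustrative values.

```java
import java.util.Arrays;

// Forward pass through a fully connected network with any number of layers.
// Assumed convention: weights[k][i][j] connects unit i of layer k to neuron j of
// layer k + 1, where row i = 0 holds the weights of the bias unit (fixed at 1.0).
class DeepForwardPass {

    static double sigmoid(double z) {
        return 1.0 / (1.0 + Math.exp(-z));
    }

    // Computes one layer's activations from the previous layer's activations.
    static double[] layerForward(double[] previous, double[][] w) {
        int outSize = w[0].length;
        double[] next = new double[outSize];
        for (int j = 0; j < outSize; j++) {
            double sum = w[0][j]; // bias unit contributes 1.0 * w[0][j]
            for (int i = 0; i < previous.length; i++) {
                sum += previous[i] * w[i + 1][j];
            }
            next[j] = sigmoid(sum);
        }
        return next;
    }

    // Feeds the input through every layer in turn.
    static double[] forward(double[] input, double[][][] weights) {
        double[] activations = input;
        for (double[][] w : weights) {
            activations = layerForward(activations, w);
        }
        return activations;
    }

    // Builds a rows x cols matrix filled with one value (illustration only).
    static double[][] filled(int rows, int cols, double value) {
        double[][] m = new double[rows][cols];
        for (double[] row : m) {
            Arrays.fill(row, value);
        }
        return m;
    }

    public static void main(String[] args) {
        // Layer sizes from Figure 1: 3 -> 3 -> 2 -> 2.
        double[][][] weights = {
            filled(4, 3, 0.1), // (1 + 3) x 3
            filled(4, 2, 0.1), // (1 + 3) x 2
            filled(3, 2, 0.1)  // (1 + 2) x 2
        };
        double[] out = forward(new double[]{1.0, 0.5, -0.5}, weights);
        System.out.println(Arrays.toString(out));
    }
}
```

Adding another hidden layer only means adding another matrix to `weights`; the loop itself does not change.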

Implementation

I have implemented a very simple neural network for demonstration. You can find the code here: SimpleNeuralNetwork.java

The first important method is initialiseNetwork(), which sets up the necessary structures:

```java
public void initialiseNetwork(){
    input = new double[1 + M]; // 1 is for the bias
    hidden = new double[1 + H];
    weights1 = new double[1 + M][H];
    weights2 = new double[1 + H];

    input[0] = 1.0; // Setting the bias
    hidden[0] = 1.0;
}
```

M is the number of features in the feature vectors, and H is the number of neurons in the hidden layer. We add 1 to each of these to make room for the bias constant, which is stored at index 0.

We represent the input and hidden layer as arrays of doubles. For example, hidden[i] stores the current output value of the i-th neuron in the hidden layer.

The first set of weights, between the input and hidden layers, is stored as a matrix. Each of the (1+M) units in the input layer connects to all H neurons in the hidden layer, giving a total of (1+M)×H weights. We only have one output neuron, so the second set of weights, between the hidden and output layers, is technically a (1+H)×1 matrix, but we can simply represent it as a vector.

The second important method is forwardPass(), which takes the current input vector (stored in input) and computes the output value.

```java
public void forwardPass(){
    // Hidden layer: start at j = 1 so hidden[0] keeps its bias value of 1.0
    for(int j = 1; j < hidden.length; j++){
        hidden[j] = 0.0;
        for(int i = 0; i < input.length; i++){
            hidden[j] += input[i] * weights1[i][j-1];
        }
        hidden[j] = sigmoid(hidden[j]);
    }

    // Output layer: weighted sum of the hidden values (including the bias), then sigmoid
    output = 0.0;
    for(int i = 0; i < hidden.length; i++){
        output += hidden[i] * weights2[i];
    }
    output = sigmoid(output);
}
```

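The first for-loop calculates the values in the hidden layer: for each hidden neuron it takes the dot product of the input vector (including the bias) with the corresponding column of weights1, then applies the sigmoid function. The loop starts at j = 1 so that hidden[0] keeps its bias value of 1.0, which is why the weight column index is j-1. The last part calculates the output value in the same way, by multiplying the hidden values with the second set of weights and applying the sigmoid.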
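The article’s code relies on a sigmoid() helper and on fields it does not show. Below is a self-contained sketch that wraps the two methods in a small class so the forward pass can be run end to end. Written out, each hidden value is h_j = σ(Σ_i x_i·w_ij) with x_0 = 1, and the output is σ(Σ_j h_j·v_j) with h_0 = 1, where σ(z) = 1/(1 + e^(−z)). The constructor, sigmoid(), setInput() and main() are assumptions rather than the original code, and the weights are set by hand for illustration instead of being loaded from a model file.

```java
// A self-contained sketch around the article's initialiseNetwork() and forwardPass().
// Assumptions (not from the original code): the constructor, sigmoid(),
// setInput() and main(); weights are set by hand instead of loaded from a file.
class TinyNetwork {
    final int M; // number of input features
    final int H; // number of hidden neurons
    double[] input, hidden;
    double[][] weights1;
    double[] weights2;
    double output;

    TinyNetwork(int m, int h) {
        M = m;
        H = h;
        initialiseNetwork();
    }

    void initialiseNetwork() {
        input = new double[1 + M];       // index 0 holds the bias
        hidden = new double[1 + H];      // index 0 holds the bias
        weights1 = new double[1 + M][H]; // input -> hidden
        weights2 = new double[1 + H];    // hidden -> single output
        input[0] = 1.0;
        hidden[0] = 1.0;
    }

    // Logistic sigmoid: squashes any real number into (0, 1).
    static double sigmoid(double z) {
        return 1.0 / (1.0 + Math.exp(-z));
    }

    // Copies a feature vector into input[1..M], leaving the bias at input[0].
    void setInput(double[] features) {
        System.arraycopy(features, 0, input, 1, M);
    }

    void forwardPass() {
        // j starts at 1 so that hidden[0] keeps its bias value of 1.0
        for (int j = 1; j < hidden.length; j++) {
            hidden[j] = 0.0;
            for (int i = 0; i < input.length; i++) {
                hidden[j] += input[i] * weights1[i][j - 1];
            }
            hidden[j] = sigmoid(hidden[j]);
        }
        output = 0.0;
        for (int i = 0; i < hidden.length; i++) {
            output += hidden[i] * weights2[i];
        }
        output = sigmoid(output);
    }

    public static void main(String[] args) {
        TinyNetwork net = new TinyNetwork(2, 2);
        // Illustrative weights only; row 0 of weights1 and entry 0 of weights2 are bias weights.
        net.weights1 = new double[][]{{0.0, 0.0}, {1.0, -1.0}, {1.0, -1.0}};
        net.weights2 = new double[]{0.0, 2.0, -2.0};
        net.setInput(new double[]{1.0, 1.0});
        net.forwardPass();
        System.out.printf("Prediction: %.2f%n", net.output);
    }
}
```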

Evaluation

To test out this network, I have created a sample dataset using the database at quandl.com. This dataset contains sociodemographic statistics for 141 countries:

- Population density (per square km)

- Population growth rate (%)

- Urban population (%)

- Life expectancy at birth (years)

- Fertility rate (births per woman)

- Infant mortality (deaths per 1000 births)

- Enrolment in tertiary education (%)

- Unemployment (%)

- Estimated control of corruption (score)

- Estimated government effectiveness (score)

- Internet users (per 100)

Based on this information, we want to train a neural network that predicts whether a country’s GDP per capita is above average (label 1 if it is, 0 if it is not).
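A minimal sketch of how such labels could be derived. It assumes that “average” means the mean GDP per capita over all countries in the dataset, which the article does not spell out, and the GDP values in `main` are made up for illustration. The class name is hypothetical.

```java
import java.util.Arrays;

// Derives binary labels from GDP per capita values.
// Assumption: "average" is the mean over all countries in the dataset.
class GdpLabels {

    static int[] labelAboveAverage(double[] gdpPerCapita) {
        double sum = 0.0;
        for (double g : gdpPerCapita) {
            sum += g;
        }
        double mean = sum / gdpPerCapita.length;

        int[] labels = new int[gdpPerCapita.length];
        for (int k = 0; k < gdpPerCapita.length; k++) {
            labels[k] = gdpPerCapita[k] > mean ? 1 : 0; // 1 = above average
        }
        return labels;
    }

    public static void main(String[] args) {
        // Made-up values for illustration, not taken from the dataset (mean = 4000).
        double[] gdp = {1000.0, 2000.0, 9000.0};
        System.out.println(Arrays.toString(labelAboveAverage(gdp))); // prints [0, 0, 1]
    }
}
```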

I’ve split the dataset into a training set (121 countries) and a test set (40 countries). The values have been normalised by subtracting the mean and dividing by the standard deviation, using a script from a previous article. I’ve also pre-trained a model that we can load into this network and evaluate. You can download these here: original data, training data, test data, pretrained model.
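The normalisation step (often called z-score standardisation) can be sketched as follows. This is an illustration of the technique, not the script the article refers to; using the population standard deviation (dividing by n) and mapping constant features to 0 are assumptions.

```java
import java.util.Arrays;

// Z-score normalisation: for each feature, subtract its mean and divide by its
// standard deviation. Assumptions: population standard deviation (divide by n);
// features with zero deviation are mapped to 0.0.
class Normalise {

    // data[row][feature]; returns a new, normalised copy.
    static double[][] zScore(double[][] data) {
        int n = data.length;
        int d = data[0].length;
        double[][] result = new double[n][d];
        for (int f = 0; f < d; f++) {
            double mean = 0.0;
            for (double[] row : data) {
                mean += row[f];
            }
            mean /= n;

            double variance = 0.0;
            for (double[] row : data) {
                variance += (row[f] - mean) * (row[f] - mean);
            }
            double std = Math.sqrt(variance / n);

            for (int r = 0; r < n; r++) {
                // Guard against constant features, which have zero deviation.
                result[r][f] = std > 0 ? (data[r][f] - mean) / std : 0.0;
            }
        }
        return result;
    }

    public static void main(String[] args) {
        double[][] data = {{1.0, 10.0}, {2.0, 20.0}, {3.0, 30.0}};
        for (double[] row : zScore(data)) {
            System.out.println(Arrays.toString(row));
        }
    }
}
```

After this step every feature has mean 0 and standard deviation 1, so features measured on very different scales (such as population density and unemployment percentage) contribute on comparable terms to the weighted sums in the network.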

You can then run the neural network (remember to compile the code first):

```
java neuralnet.SimpleNeuralNetwork data/model.txt data/countries-classify-gdp-normalised.test.txt
```

The output should be something like this:

```
Label: 0 Prediction: 0.01
Label: 0 Prediction: 0.00
Label: 1 Prediction: 0.99
Label: 0 Prediction: 0.00
...
Label: 0 Prediction: 0.20
Label: 0 Prediction: 0.01
Label: 1 Prediction: 0.99
Label: 0 Prediction: 0.00
Accuracy: 0.9
```

The network is in verbose mode, so it prints the label and prediction for each test item, followed by the overall accuracy. The test data contains 14 positive and 26 negative examples. A system guessing at random would be expected to reach 50% accuracy, whereas a biased system that always predicts the majority class (0) would reach 65% (26 of 40). Our network achieved 90%, which means it has learned some useful patterns in the data.
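The accuracy and the majority-class baseline can be computed as in the sketch below. It assumes a prediction counts as 1 when it is at least 0.5, since the article does not state how predictions are turned into labels; the class and method names are hypothetical. The example predictions are the first four lines of the sample output above.

```java
// Accuracy and a majority-class baseline.
// Assumption: a prediction is treated as label 1 when it is at least 0.5.
class Evaluation {

    static double accuracy(int[] labels, double[] predictions) {
        int correct = 0;
        for (int k = 0; k < labels.length; k++) {
            int predicted = predictions[k] >= 0.5 ? 1 : 0;
            if (predicted == labels[k]) {
                correct++;
            }
        }
        return (double) correct / labels.length;
    }

    // Accuracy of a system that always outputs the most frequent label.
    // (Uniform random guessing is right half the time on average, whatever the class balance.)
    static double majorityBaseline(int positives, int negatives) {
        return (double) Math.max(positives, negatives) / (positives + negatives);
    }

    public static void main(String[] args) {
        // Test set from the article: 14 positive and 26 negative examples.
        System.out.println("Majority baseline: " + majorityBaseline(14, 26));
        // First four lines of the sample output.
        int[] labels = {0, 0, 1, 0};
        double[] predictions = {0.01, 0.00, 0.99, 0.00};
        System.out.println("Accuracy on sample: " + accuracy(labels, predictions));
    }
}
```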

In this case we simply loaded a pre-trained model. In the next section, I will describe how to learn this model from some training data.
