flink Standalone Cluster

Requirements

Software Requirements

Flink runs on all UNIX-like environments, e.g. Linux, Mac OS X, and Cygwin (for Windows) and expects the cluster to consist of one master node and one or more worker nodes. Before you start to setup the system, make sure you have the following software installed on each node:

- Java 1.8.x or higher,

- ssh (sshd must be running to use the Flink scripts that manage remote components)

If your cluster does not fulfill these software requirements you will need to install/upgrade it.

Having passwordless SSH and the same directory structure on all your cluster nodes will allow you to use our scripts to control everything.

ssh免密登录:

在源机器执行

ssh-keygen //一路回车,生成公钥,位置~/.ssh/id_rsa.pub

ssh-copy-id -i ~/.ssh/id_rsa.pub root@目的机器 //将源机器生成的公钥拷贝到目的机器的~/.ssh/authorized_keys

或者 手动将源机器生成的公钥拷贝到目的机器的~/.ssh/authorized_keys。注意:不要有换行

完成!

JAVA_HOME Configuration

系统环境配置了JAVA_HOME即可,无需如下操作

Flink requires the JAVA_HOME environment variable to be set on the master and all worker nodes and point to the directory of your Java installation.

You can set this variable in conf/flink-conf.yaml via the env.java.home key.

Flink Setup

Go to the downloads page and get the ready-to-run package. Make sure to pick the Flink package matching your Hadoop version. If you don’t plan to use Hadoop, pick any version.

After downloading the latest release, copy the archive to your master node and extract it:

tar xzf flink-*.tgz

cd flink-*Configuring Flink (不做任何设置,在单节点上直接运行bin/start-cluster.sh,可在单机启动单个jobmanager和单个taskmanager,方便debug代码)

After having extracted the system files, you need to configure Flink for the cluster by editing conf/flink-conf.yaml.

Set the jobmanager.rpc.address key to point to your master node. You should also define the maximum amount of main memory the JVM is allowed to allocate on each node by setting the jobmanager.heap.mb and taskmanager.heap.mb keys.

These values are given in MB. If some worker nodes have more main memory which you want to allocate to the Flink system you can overwrite the default value by setting the environment variable FLINK_TM_HEAP on those specific nodes.

Finally, you must provide a list of all nodes in your cluster which shall be used as worker nodes. Therefore, similar to the HDFS configuration, edit the file conf/slaves and enter the IP/host name of each worker node. Each worker node will later run a TaskManager.

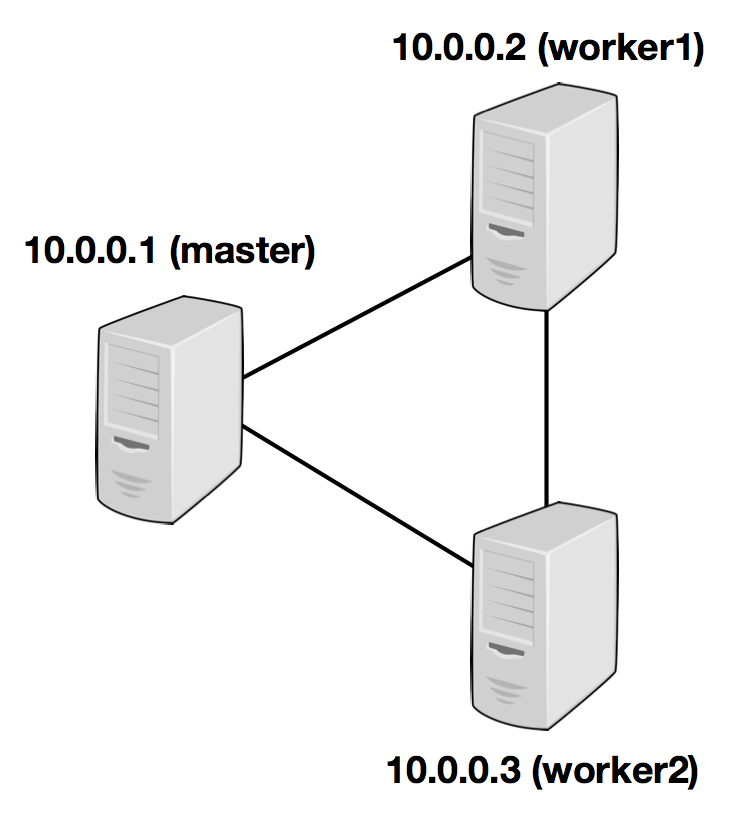

The following example illustrates the setup with three nodes (with IP addresses from 10.0.0.1 to 10.0.0.3 and hostnames master, worker1, worker2) and shows the contents of the configuration files (which need to be accessible at the same path on all machines):

jobmanager.rpc.address: 10.0.0.1

注意:需要在所有需要启动taskmanager的机器进行如上配置

/path/to/flink/conf/slaves

10.0.0.2

10.0.0.3

The Flink directory must be available on every worker under the same path. You can use a shared NFS directory, or copy the entire Flink directory to every worker node.

Please see the configuration page for details and additional configuration options.

In particular,

- the amount of available memory per JobManager (

jobmanager.heap.mb), - the amount of available memory per TaskManager (

taskmanager.heap.mb), - the number of available CPUs per machine (

taskmanager.numberOfTaskSlots), - the total number of CPUs in the cluster (

parallelism.default) and - the temporary directories (

taskmanager.tmp.dirs)

are very important configuration values.

Starting Flink

The following script starts a JobManager on the local node and connects via SSH to all worker nodes listed in the slaves file to start the TaskManager on each node. Now your Flink system is up and running. The JobManager running on the local node will now accept jobs at the configured RPC port.

Assuming that you are on the master node and inside the Flink directory:

bin/start-cluster.shTo stop Flink, there is also a stop-cluster.sh script.

Adding JobManager/TaskManager Instances to a Cluster

You can add both JobManager and TaskManager instances to your running cluster with the bin/jobmanager.sh and bin/taskmanager.shscripts.

Adding a JobManager

bin/jobmanager.sh ((start|start-foreground) cluster)|stop|stop-allAdding a TaskManager

bin/taskmanager.sh start|start-foreground|stop|stop-allMake sure to call these scripts on the hosts on which you want to start/stop the respective instance.

flink Standalone Cluster的更多相关文章

- flink初识及安装flink standalone集群

flink architecture 1.可以看出,flink可以运行在本地,也可以类似spark一样on yarn或者standalone模式(与spark standalone也很相似),此外fl ...

- Apache Spark源码走读之19 -- standalone cluster模式下资源的申请与释放

欢迎转载,转载请注明出处,徽沪一郎. 概要 本文主要讲述在standalone cluster部署模式下,Spark Application在整个运行期间,资源(主要是cpu core和内存)的申请与 ...

- Spark Standalone cluster try

Spark Standalone cluster node*-- stop firewalldsystemctl stop firewalldsystemctl disable firewalld-- ...

- flink部署操作-flink standalone集群安装部署

flink集群安装部署 standalone集群模式 必须依赖 必须的软件 JAVA_HOME配置 flink安装 配置flink 启动flink 添加Jobmanager/taskmanager 实 ...

- Spark运行模式_spark自带cluster manager的standalone cluster模式(集群)

这种运行模式和"Spark自带Cluster Manager的Standalone Client模式(集群)"还是有很大的区别的.使用如下命令执行应用程序(前提是已经启动了spar ...

- Flink standalone模式作业执行流程

宏观流程如下图: client端 生成StreamGraph env.addSource(new SocketTextStreamFunction(...)) .flatMap(new FlatMap ...

- 【转帖】两年Flink迁移之路:从standalone到on yarn,处理能力提升五倍

两年Flink迁移之路:从standalone到on yarn,处理能力提升五倍 https://segmentfault.com/a/1190000020209179 flink 1.7k 次阅读 ...

- Apache Flink 的迁移之路,2 年处理效果提升 5 倍

一.背景与痛点 在 2017 年上半年以前,TalkingData 的 App Analytics 和 Game Analytics 两个产品,流式框架使用的是自研的 td-etl-framework ...

- 重磅!解锁Apache Flink读写Apache Hudi新姿势

感谢阿里云 Blink 团队Danny Chan的投稿及完善Flink与Hudi集成工作. 1. 背景 Apache Hudi 是目前最流行的数据湖解决方案之一,Data Lake Analytics ...

随机推荐

- 免费SSL证书(支持1.0、1.1、1.2)

由于公司要开发微信小程序,而微信小程序的接口需要https协议的,并且要支持TLS1.0.TLS1.1.TLS1.2.如果仅仅是为了开发小程序,安全等级又不用太高,可以选择免费的SSL证书 在这里选择 ...

- C#版(打败97.89%的提交) - Leetcode 202. 快乐数 - 题解

版权声明: 本文为博主Bravo Yeung(知乎UserName同名)的原创文章,欲转载请先私信获博主允许,转载时请附上网址 http://blog.csdn.net/lzuacm. C#版 - L ...

- HTTP 权威指南 详解 ( 一、概述 )

HTTP 权威指南 详解 ( 一.概述 ) 最近在解读 <http权威指南> 这本书.之前对于http 的理解仅限于 知道我需要向服务端发送一个 get or post 请求,然后等待服务 ...

- Linux上安装Zookeeper以及一些注意事项

最近打算出一个系列,介绍Dubbo的使用. 分布式应用现在已经越来越广泛,Spring Could也是一个不错的一站式解决方案,不过据我了解国内目前貌似使用阿里Dubbo的公司比较多,一方面这个框架也 ...

- 消息队列、socket(UDP)实现简易聊天系统

前言: 最近在学进程间通信,所以做了一个小项目练习一下.主要用消息队列和socket(UDP)实现这个系统,并数据库存储数据,对C语言操作数据库不熟悉的可以参照我的这篇博客:https://www.c ...

- 一文看懂https如何保证数据传输的安全性的

通过漫画的形式由浅入深带你读懂htts是如何保证一台主机把数据安全发给另一台主机的 对称加密 一禅:在每次发送真实数据之前,服务器先生成一把密钥,然后先把密钥传输给客户端.之后服务器给客户端发送真实数 ...

- Linux - CentOS 登陆密码找回解决方法

- Android单个控件占父控件宽度一半且水平居中

前些天,在工作中遇到了一个需求:一个“加载上一页”的按钮宽度为父控件宽度一半,且水平居中于父控件中. 在此给出两种思路: 1.直接在Activity代码中获取到当前父控件的宽度,并将此按钮宽度值设置成 ...

- [ SSH框架 ] Spring框架学习之一

一.Spring概述 1.1 什么是Spring Spring是一个开源框架, Spring是于2003年兴起的一个轻量级的Java开发框架,由 Rod Johnson在其著作 Expert One- ...

- Ubuntu18 的超详细常用软件安装

心血来潮,在笔记本安装了Ubuntu 18 用于日常学习,于是有了下面的安装记录. Gnome-Tweak-Tool gnome-tweak-tool可以打开隐藏的设置,可以详细的对系统进行配置,以及 ...