Ansible in Practice: Deploying a Distributed Log System

Contents of this section:

- Background

- Architecture diagram of the distributed log system

- Creating and using roles

- JDK 7 role

- JDK 8 role

- Zookeeper role

- Kafka role

- Elasticsearch role

- MySQL role

- Nginx role

- Redis role

- Hadoop role

- Spark role

一、背景

产品组在开发一个分布式日志系统,用的组件较多,单独手工部署一各个个软件比较繁琐,花的时间比较长,于是就想到了使用ansible playbook + roles进行部署,效率大大提高。

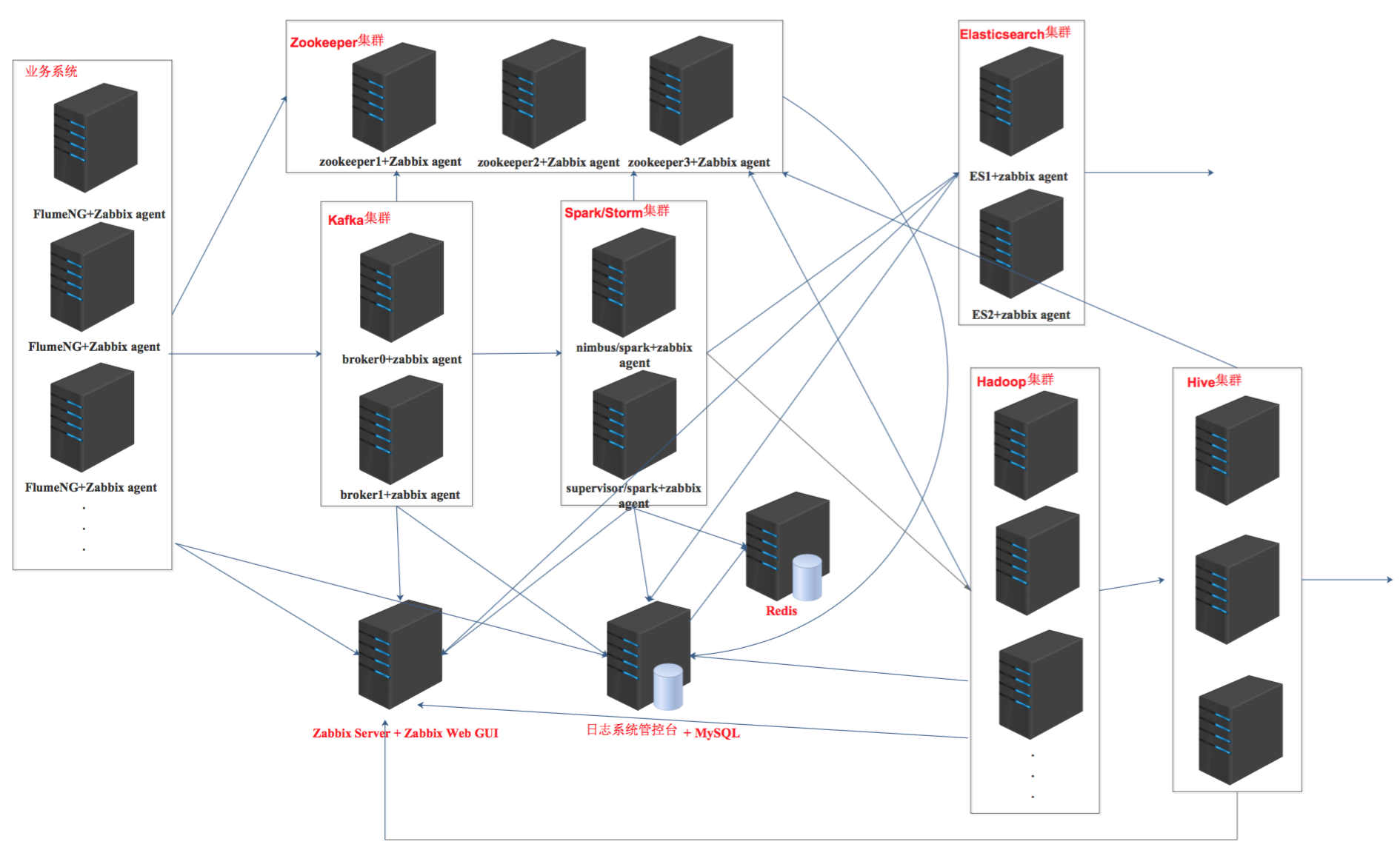

二、分布式日志系统架构图

3. Creating and using roles

Create a separate role for each piece of software or cluster.

[root@node1 ~]# mkdir -pv ansible_playbooks/roles/{db_server,web_server,redis_server,zk_server,kafka_server,es_server,tomcat_server,flume_agent,hadoop,spark,hbase,hive,jdk7,jdk8}/{tasks,files,templates,meta,handlers,vars}

3.1 JDK7 role

[root@node1 jdk7]# pwd

/root/ansible_playbooks/roles/jdk7

[root@node1 jdk7]# ls

files handlers meta tasks templates vars

1. Upload the package

Upload jdk-7u80-linux-x64.gz to the files directory.

2. Write the tasks

[root@node1 jdk7]# vim tasks/main.yml

- name: mkdir necessary directory
  file: path=/usr/java state=directory mode=

- name: copy and unzip jdk
  unarchive: src={{jdk_package_name}} dest=/usr/java/

- name: set env
  lineinfile: dest={{env_file}} insertafter="{{item.position}}" line="{{item.value}}" state=present
  with_items:
    - {position: EOF, value: "\n"}
    - {position: EOF, value: "export JAVA_HOME=/usr/java/{{jdk_version}}"}
    - {position: EOF, value: "export PATH=$JAVA_HOME/bin:$PATH"}
    - {position: EOF, value: "export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar"}

- name: enforce env
  # sourcing only affects this one-off shell; new login shells read /etc/profile on their own
  shell: source {{env_file}}

3. Write the vars

[root@node1 jdk7]# vim vars/main.yml

jdk_package_name: jdk-7u80-linux-x64.gz
env_file: /etc/profile
jdk_version: jdk1.7.0_80

4. Use the role

Next to the roles directory, create a jdk.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim jdk.yml

- hosts: jdk
  remote_user: root
  roles:
    - jdk7

Run the playbook to install JDK 7:

[root@node1 ansible_playbooks]# ansible-playbook jdk.yml

To use the jdk7 role, adapt the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to your environment.
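A more compact variant of the same tasks is sketched below, assuming Ansible 2.3 or later (for the `path` parameter of `blockinfile`). The `creates` argument lets the extraction step be skipped once the JDK directory exists, and `blockinfile` keeps the three export lines together as one marked block in /etc/profile. The marker text is an illustrative choice, not something the original role uses; the mkdir task stays as it is.

```yaml
# Sketch of an alternative roles/jdk7/tasks/main.yml (assumes Ansible >= 2.3).
- name: copy and unzip jdk (skipped once the JDK directory exists)
  unarchive:
    src: "{{ jdk_package_name }}"
    dest: /usr/java/
    creates: "/usr/java/{{ jdk_version }}"

- name: set env as a single managed block in the profile file
  blockinfile:
    path: "{{ env_file }}"
    marker: "# {mark} ANSIBLE MANAGED BLOCK: JDK"   # illustrative marker text
    block: |
      export JAVA_HOME=/usr/java/{{ jdk_version }}
      export PATH=$JAVA_HOME/bin:$PATH
      export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
```

`{mark}` is replaced by BEGIN and END, so a later run can find and update the whole block instead of checking each line separately.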

3.2 JDK8 role

[root@node1 jdk8]# pwd

/root/ansible_playbooks/roles/jdk8

[root@node1 jdk8]# ls

files handlers meta tasks templates vars

1. Upload the package

Upload jdk-8u73-linux-x64.gz to the files directory.

2. Write the tasks

[root@node1 jdk8]# vim tasks/main.yml

- name: mkdir necessary directory
  file: path=/usr/java state=directory mode=

- name: copy and unzip jdk
  unarchive: src={{jdk_package_name}} dest=/usr/java/

- name: set env
  lineinfile: dest={{env_file}} insertafter="{{item.position}}" line="{{item.value}}" state=present
  with_items:
    - {position: EOF, value: "\n"}
    - {position: EOF, value: "export JAVA_HOME=/usr/java/{{jdk_version}}"}
    - {position: EOF, value: "export PATH=$JAVA_HOME/bin:$PATH"}
    - {position: EOF, value: "export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar"}

- name: enforce env
  # sourcing only affects this one-off shell; new login shells read /etc/profile on their own
  shell: source {{env_file}}

3. Write the vars

[root@node1 jdk8]# vim vars/main.yml

jdk_package_name: jdk-8u73-linux-x64.gz
env_file: /etc/profile
jdk_version: jdk1.8.0_73

4. Use the role

Next to the roles directory, create a jdk.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim jdk.yml

- hosts: jdk
  remote_user: root
  roles:
    - jdk8

Run the playbook to install JDK 8:

[root@node1 ansible_playbooks]# ansible-playbook jdk.yml

To use the jdk8 role, adapt the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to your environment.
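The jdk7 and jdk8 task files are identical; only vars/main.yml differs. One role could therefore serve both versions by passing role parameters, which take precedence over a role's vars/main.yml. A sketch, assuming a single hypothetical role named `jdk` that holds the shared tasks and whose files/ directory contains both tarballs:

```yaml
# jdk.yml: "jdk" is a hypothetical merged role, not one of the roles created above.
- hosts: jdk
  remote_user: root
  roles:
    - role: jdk
      jdk_package_name: jdk-8u73-linux-x64.gz
      jdk_version: jdk1.8.0_73
```

Switching to JDK 7 would then only mean changing the two parameters to jdk-7u80-linux-x64.gz and jdk1.7.0_80.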

3.3 Zookeeper role

On every ZooKeeper node, configure /etc/hosts with the hostname-to-IP mapping of all cluster nodes.

[root@node1 zk_server]# pwd

/root/ansible_playbooks/roles/zk_server

[root@node1 zk_server]# ls

files handlers meta tasks templates vars

1. Upload the packages

Upload zookeeper-3.4.6.tar.gz and clean_zklog.sh to the files directory. clean_zklog.sh is a script that cleans up ZooKeeper logs.

2. Write the tasks

[root@node1 zk_server]# vim tasks/main.yml

- name: install zookeeper
  unarchive: src=zookeeper-3.4.6.tar.gz dest=/usr/local/

- name: install configuration file for zookeeper
  template: src=zoo.cfg.j2 dest=/usr/local/zookeeper-3.4.6/conf/zoo.cfg

- name: add myid file
  shell: echo {{ myid }} > /usr/local/zookeeper-3.4.6/dataDir/myid

- name: copy script to clear zookeeper logs.
  copy: src=clean_zklog.sh dest=/usr/local/zookeeper-3.4.6/clean_zklog.sh mode=

- name: crontab task
  cron: name="clear zk logs" weekday="" hour="" minute="" job="/usr/local/zookeeper-3.4.6/clean_zklog.sh"

- name: start zookeeper
  shell: /usr/local/zookeeper-3.4.6/bin/zkServer.sh start
  tags:
    - start
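Two small changes could make the ZooKeeper tasks safer to re-run. The sketch below writes myid with the `copy` module's `content` parameter instead of `echo`, so Ansible only reports a change when the value actually differs, and then waits for the client port to open before moving on. Port 2181 is ZooKeeper's conventional clientPort and is an assumption here, since the zoo.cfg.j2 template is not reproduced in this post.

```yaml
- name: add myid file (idempotent alternative to echo)
  copy:
    content: "{{ myid }}\n"
    dest: /usr/local/zookeeper-3.4.6/dataDir/myid

- name: wait for zookeeper to accept client connections
  wait_for:
    port: 2181      # assumption: must match clientPort in zoo.cfg.j2
    timeout: 60
  tags:
    - start
```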

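3. Write the templates

Copy the default configuration file from the zookeeper-3.4.6.tar.gz package into ../roles/zk_server/templates/, rename it to zoo.cfg.j2, and edit its contents.

[root@node1 ansible_playbooks]# vim roles/zk_server/templates/zoo.cfg.j2

The configuration file is too long to reproduce here; see it on GitHub at https://github.com/jkzhao/ansible-godseye. Its settings are not explained again either, since earlier posts on this blog already cover them.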

4. Write the vars

[root@node1 zk_server]# vim vars/main.yml

server1_hostname: hadoop27

server2_hostname: hadoop28

server3_hostname: hadoop29

The tasks also use a variable {{myid}}. Its value differs from host to host, so it is defined in /etc/ansible/hosts instead:

[zk_servers]

172.16.206.27 myid=

172.16.206.28 myid=

172.16.206.29 myid=

5. Set up the host group

The /etc/ansible/hosts file:

[zk_servers]

172.16.206.27 myid=

172.16.206.28 myid=

172.16.206.29 myid=

6. Use the role

Next to the roles directory, create a zk.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim zk.yml

- hosts: zk_servers
  remote_user: root
  roles:
    - zk_server

Run the playbook to install the ZooKeeper cluster:

[root@node1 ansible_playbooks]# ansible-playbook zk.yml

To use the zk_server role, adapt the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to your environment.

3.4 Kafka role

[root@node1 kafka_server]# pwd

/root/ansible_playbooks/roles/kafka_server

[root@node1 kafka_server]# ls

files handlers meta tasks templates vars

1. Upload the packages

Upload kafka_2.11-0.9.0.1.tgz, kafka-manager-1.3.0.6.zip and clean_kafkalog.sh to the files directory. clean_kafkalog.sh is a script that cleans up Kafka logs.

2. Write the tasks

[root@node1 kafka_server]# vim tasks/main.yml

- name: copy and unzip kafka
  unarchive: src=kafka_2.11-0.9.0.1.tgz dest=/usr/local/

- name: install configuration file for kafka
  template: src=server.properties.j2 dest=/usr/local/kafka_2.11-0.9.0.1/config/server.properties

- name: copy script to clear kafka logs.
  copy: src=clean_kafkalog.sh dest=/usr/local/kafka_2.11-0.9.0.1/clean_kafkalog.sh mode=

- name: crontab task
  cron: name="clear kafka logs" weekday="" hour="" minute="" job="/usr/local/kafka_2.11-0.9.0.1/clean_kafkalog.sh"

- name: start kafka
  shell: JMX_PORT= /usr/local/kafka_2.11-0.9.0.1/bin/kafka-server-start.sh -daemon /usr/local/kafka_2.11-0.9.0.1/config/server.properties &
  tags:
    - start

# kafka-manager is only installed on the host whose IP equals kafka_manager_ip.
# "when" is already a Jinja2 expression, so the variable is referenced without {{ }}.
- name: copy and unzip kafka-manager
  unarchive: src=kafka-manager-1.3.0.6.zip dest=/usr/local/
  when: ansible_default_ipv4['address'] == kafka_manager_ip

- name: install configuration file for kafka-manager
  template: src=application.conf.j2 dest=/usr/local/kafka-manager-1.3.0.6/conf/application.conf
  when: ansible_default_ipv4['address'] == kafka_manager_ip

- name: start kafka-manager
  shell: nohup /usr/local/kafka-manager-1.3.0.6/bin/kafka-manager &
  when: ansible_default_ipv4['address'] == kafka_manager_ip
  tags:
    - kafkaManagerStart
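The role skeleton created at the start includes a handlers directory that none of these roles use. As a sketch, a handler could restart the broker whenever the rendered server.properties changes. The handler name is illustrative, the stop-then-start sequence assumes the stock kafka-server-stop.sh script in Kafka's bin directory, and the JMX_PORT setting from the original start task is left out here.

```yaml
# roles/kafka_server/handlers/main.yml (hypothetical)
- name: restart kafka
  shell: >
    /usr/local/kafka_2.11-0.9.0.1/bin/kafka-server-stop.sh;
    sleep 10;
    /usr/local/kafka_2.11-0.9.0.1/bin/kafka-server-start.sh -daemon
    /usr/local/kafka_2.11-0.9.0.1/config/server.properties

# In roles/kafka_server/tasks/main.yml the template task would then notify it:
- name: install configuration file for kafka
  template: src=server.properties.j2 dest=/usr/local/kafka_2.11-0.9.0.1/config/server.properties
  notify: restart kafka
```

Handlers run once at the end of the play and only if a notifying task reported a change, so an unchanged configuration leaves the running broker alone.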

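3. Write the templates

[root@node1 kafka_server]# vim templates/server.properties.j2

The configuration file is too long to reproduce here; see it on GitHub at https://github.com/jkzhao/ansible-godseye. Its settings are not explained again either, since earlier posts on this blog already cover them.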

4. Write the vars

[root@node1 kafka_server]# vim vars/main.yml

zk_cluster: 172.16.7.151:,172.16.7.152:,172.16.7.153:

kafka_manager_ip: 172.16.7.151

The template also uses a variable {{broker_id}}. Its value differs from host to host, so it is defined in /etc/ansible/hosts instead:

[kafka_servers]

172.16.206.17 broker_id=

172.16.206.31 broker_id=

172.16.206.32 broker_id=

5. Set up the host group

The /etc/ansible/hosts file:

[kafka_servers]

172.16.206.17 broker_id=

172.16.206.31 broker_id=

172.16.206.32 broker_id=

6. Use the role

Next to the roles directory, create a kafka.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim kafka.yml

- hosts: kafka_servers
  remote_user: root
  roles:
    - kafka_server

Run the playbook to install the Kafka cluster:

[root@node1 ansible_playbooks]# ansible-playbook kafka.yml

To use the kafka_server role, adapt the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to your environment.

3.5 Elasticsearch role

[root@node1 es_server]# pwd

/root/ansible_playbooks/roles/es_server

[root@node1 es_server]# ls

files handlers meta tasks templates vars

1. Upload the packages

Upload elasticsearch-2.3.3.tar.gz and elasticsearch-analysis-ik-1.9.3.zip to the files directory.

2. Write the tasks

[root@node1 es_server]# vim tasks/main.yml

- name: create es user
  user: name=es password={{password}}
  vars:
    # created with:
    # python -c 'import crypt; print crypt.crypt("This is my Password", "$1$SomeSalt$")'
    # >>> import crypt
    # >>> crypt.crypt('wisedu123', '$1$bigrandomsalt$')
    # '$1$bigrando$wzfZ2ifoHJPvaMuAelsBq0'
    password: '$1$bigrando$wzfZ2ifoHJPvaMuAelsBq0'

- name: mkdir directory for elasticsearch data
  file: dest=/esdata mode= state=directory owner=es group=es

- name: copy and unzip es
  # unarchive's owner/group options only take effect on the directory itself
  unarchive: src=elasticsearch-2.3.3.tar.gz dest=/usr/local/

- name: install memory configuration file for es
  template: src=elasticsearch.in.sh.j2 dest=/usr/local/elasticsearch-2.3.3/bin/elasticsearch.in.sh owner=es group=es

- name: install configuration file for es
  template: src=elasticsearch.yml.j2 dest=/usr/local/elasticsearch-2.3.3/config/elasticsearch.yml owner=es group=es

- name: mkdir directory for elasticsearch-analysis-ik plugin
  file: dest=/usr/local/elasticsearch-2.3.3/plugins/ik mode= state=directory owner=es group=es

- name: copy and unzip elasticsearch-analysis-ik plugin
  unarchive: src=elasticsearch-analysis-ik-1.9.3.zip dest=/usr/local/elasticsearch-2.3.3/plugins/ik

- name: change owner and group
  # recurse=yes applies the change to every file in the directory
  file: path=/usr/local/elasticsearch-2.3.3 owner=es group=es recurse=yes

- name: start es
  shell: su - es -c '/usr/local/elasticsearch-2.3.3/bin/elasticsearch -d'
  #command: /usr/local/elasticsearch-2.3.3/bin/elasticsearch -d
  #become: true
  #become_method: su
  #become_user: es
  tags:
    - start
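Instead of pasting a pre-computed MD5-crypt hash into the task, Ansible's `password_hash` filter can compute one at run time. A sketch, assuming the plaintext comes from a variable (ideally encrypted with ansible-vault); the variable name `es_user_password` is hypothetical. Because the filter generates a fresh random salt on each run, `update_password: on_create` keeps the task from resetting the password every time the role runs.

```yaml
- name: create es user
  user:
    name: es
    password: "{{ es_user_password | password_hash('sha512') }}"
    update_password: on_create   # only set the password when the user is first created
```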

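3. Write the templates

Put the templates elasticsearch.in.sh.j2 and elasticsearch.yml.j2 into the templates directory.

Note that variable names used in the templates cannot contain a dot. For example, {{node.name}} is not a valid variable name (Jinja2 would read it as the attribute name of a variable called node).

The configuration files are too long to reproduce here; see them on GitHub at https://github.com/jkzhao/ansible-godseye. Their settings are not explained again either, since earlier posts on this blog already cover them.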
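To illustrate the naming rule: dotted keys such as node.name are fine as literal text in elasticsearch.yml.j2; only the Jinja2 variables need underscore names. The fragment below is a sketch, not the author's template. It uses the `cluster_name`, `master_ip` and `node_master` variables defined in the vars and hosts steps of this section plus the `ansible_hostname` fact, and assumes Elasticsearch 2.x setting names and /esdata (created by the tasks above) as the data path.

```yaml
# elasticsearch.yml.j2 fragment (sketch)
cluster.name: {{ cluster_name }}
node.name: {{ ansible_hostname }}
node.master: {{ node_master }}
path.data: /esdata
discovery.zen.ping.unicast.hosts: ["{{ master_ip }}"]
```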

4. Write the vars

[root@node1 es_server]# vim vars/main.yml

ES_MEM: 2g

cluster_name: wisedu

master_ip: 172.16.7.151

The template also uses a variable {{node_master}}. Its value differs from host to host, so it is defined in /etc/ansible/hosts instead:

[es_servers]

172.16.7.151 node_master=true

172.16.7.152 node_master=false

172.16.7.153 node_master=false

5. Set up the host group

The /etc/ansible/hosts file:

[es_servers]

172.16.7.151 node_master=true

172.16.7.152 node_master=false

172.16.7.153 node_master=false

6. Use the role

Next to the roles directory, create an es.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim es.yml

- hosts: es_servers
  remote_user: root
  roles:
    - es_server

Run the playbook to install the Elasticsearch cluster:

[root@node1 ansible_playbooks]# ansible-playbook es.yml

To use the es_server role, adapt the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to your environment.

3.6 MySQL role

[root@node1 db_server]# pwd

/root/ansible_playbooks/roles/db_server

[root@node1 db_server]# ls

files handlers meta tasks templates vars

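1. Upload the package

Put the prebuilt RPM package mysql-5.6.27-1.x86_64.rpm into /root/ansible_playbooks/roles/db_server/files/.

Note: this RPM was packaged in-house; shipping MySQL as an RPM speeds up deployment. For how to build such a package, see the earlier blog post "速成RPM包制作" (a crash course in building RPM packages).

2. Write the tasks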

[root@node1 db_server]# vim tasks/main.yml

- name: install dependency package
  yum: name={{ item }} state=present
  with_items:
    - libaio
    - libaio-devel

- name: copy mysql rpm
  copy: src=mysql-5.6.27-1.x86_64.rpm dest=/tmp/

- name: install mysql
  yum: name=/tmp/mysql-5.6.27-1.x86_64.rpm state=present

- name: start mysql
  shell: /etc/init.d/mysqld start
  tags:
    - start

# An UPDATE on the grant tables only takes effect after FLUSH PRIVILEGES,
# so flush right away; the following tasks already log in with the new password.
- name: set up root password
  shell: /usr/local/mysql/bin/mysql -uroot -e "UPDATE mysql.user SET Password=PASSWORD('wisedu123') WHERE User='root'; FLUSH PRIVILEGES" &>/dev/null

- name: delete anonymous account
  shell: /usr/local/mysql/bin/mysql -uroot -Dmysql -pwisedu123 -e "DROP USER ''@localhost" &>/dev/null

- name: grant root access from remote hosts
  shell: /usr/local/mysql/bin/mysql -uroot -Dmysql -pwisedu123 -e "grant all on *.* to root@'%.%.%.%' identified by 'wisedu123'" &>/dev/null

- name: flush privileges
  shell: /usr/local/mysql/bin/mysql -uroot -Dmysql -pwisedu123 -e "flush privileges" &>/dev/null
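The MySQL tasks hard-code the root password in several places, and it appears in Ansible's output when a task fails. One way to tidy this, sketched below, is to keep the password in a variable (here the hypothetical `mysql_root_password`, defined for example in vars/main.yml and encrypted with ansible-vault) and set `no_log: true` so the command line is not logged. Like the original, this task is not idempotent: on a second run `mysql -uroot` without a password will be refused.

```yaml
- name: set up root password
  shell: >
    /usr/local/mysql/bin/mysql -uroot
    -e "UPDATE mysql.user SET Password=PASSWORD('{{ mysql_root_password }}') WHERE User='root'; FLUSH PRIVILEGES"
  no_log: true   # keeps the password out of Ansible's task output
```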

3. Set up the host group

# vim /etc/ansible/hosts

[db_servers]

172.16.7.152

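4. Use the role

Next to the roles directory, create a db.yml file that defines the playbook. The hosts value must match the inventory group defined above (db_servers).

[root@node1 ansible_playbooks]# vim db.yml

- hosts: db_servers
  remote_user: root
  roles:
    - db_server

Run the playbook to install MySQL:

[root@node1 ansible_playbooks]# ansible-playbook db.yml

To use the db_server role, adapt the hosts defined in /etc/ansible/hosts to your environment.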

3.7 Nginx role

[root@node1 web_server]# pwd

/root/ansible_playbooks/roles/web_server

[root@node1 web_server]# ls

files handlers meta tasks templates vars

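1. Upload the package

Put the prebuilt RPM package openresty-for-godseye-1.9.7.3-1.x86_64.rpm into /root/ansible_playbooks/roles/web_server/files/.

Note: packaging it as an RPM skips compiling nginx during installation, which speeds up deployment. The package also bundles many files specific to our system.

2. Write the tasks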

[root@node1 web_server]# vim tasks/main.yml

- name: install dependency package
  yum: name={{ item }} state=present
  with_items:
    - openssl-devel
    - readline-devel
    - pcre-devel
    - gcc

- name: copy nginx
  copy: src=openresty-for-godseye-1.9.7.3-1.x86_64.rpm dest=/tmp/

- name: install nginx
  yum: name=/tmp/openresty-for-godseye-1.9.7.3-1.x86_64.rpm state=present

- name: install configuration file for nginx
  template: src=nginx.conf.j2 dest=/usr/local/openresty/nginx/conf/nginx.conf

- name: crontab task
  cron: name="clear nginx logs" weekday="" hour="" minute="" job="/usr/local/openresty/clrnginxlog.sh"

- name: start nginx
  shell: systemctl start nginx.service
  tags:
    - start

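3. Write the templates

Put the template nginx.conf.j2 into the templates directory.

The configuration file is too long to reproduce here; see it on GitHub at https://github.com/jkzhao/ansible-godseye. Its settings are not explained again either, since earlier posts on this blog already cover them.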

4. Write the vars

[root@node1 web_server]# vim vars/main.yml

elasticsearch_cluster: server 172.16.7.151:;server 172.16.7.152:;server 172.16.7.153:;

kafka_server1: 172.16.7.151

kafka_server2: 172.16.7.152

kafka_server3: 172.16.7.153

Testing showed that these variable values must not contain commas.
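Keeping the Elasticsearch nodes as a YAML list, instead of one semicolon-separated string, sidesteps the comma problem entirely, because the template then produces the separators itself. A sketch, assuming the upstream block in nginx.conf.j2 currently expands {{ elasticsearch_cluster }}; the variable names `elasticsearch_nodes` and `elasticsearch_port` are hypothetical, and 9200 is Elasticsearch's default HTTP port rather than a value taken from this post.

```yaml
# roles/web_server/vars/main.yml (hypothetical alternative)
elasticsearch_nodes:
  - 172.16.7.151
  - 172.16.7.152
  - 172.16.7.153
elasticsearch_port: 9200   # assumption: Elasticsearch default HTTP port

# nginx.conf.j2 could then build the server lines with a loop, e.g.:
#   {% for host in elasticsearch_nodes %}
#   server {{ host }}:{{ elasticsearch_port }};
#   {% endfor %}
```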

5. Set up the host group

The /etc/ansible/hosts file:

# vim /etc/ansible/hosts

[nginx_servers]

172.16.7.153

6. Use the role

Next to the roles directory, create an nginx.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim nginx.yml

- hosts: nginx_servers
  remote_user: root
  roles:
    - web_server

Run the playbook to install Nginx:

[root@node1 ansible_playbooks]# ansible-playbook nginx.yml

To use the web_server role, adapt the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to your environment.

3.8 Redis role

[root@node1 redis_server]# pwd

/root/ansible_playbooks/roles/redis_server

[root@node1 redis_server]# ls

files handlers meta tasks templates vars

1. Upload the package

Put the prebuilt RPM package redis-3.2.2-1.x86_64.rpm into /root/ansible_playbooks/roles/redis_server/files/.

2. Write the tasks

[root@node1 redis_server]# vim tasks/main.yml

- name: install dependency package
  yum: name={{ item }} state=present
  with_items:
    - openssl-devel
    - readline-devel
    - pcre-devel

- name: copy redis
  copy: src=redis-3.2.2-1.x86_64.rpm dest=/tmp/

- name: install redis
  yum: name=/tmp/redis-3.2.2-1.x86_64.rpm state=present

- name: start redis
  shell: /usr/local/bin/redis-server /etc/redis.conf
  tags:
    - start
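redis-server only detaches from the terminal when `daemonize yes` is set, either in /etc/redis.conf or as a command-line option after the config file. Since the packaged /etc/redis.conf is not shown here, the sketch below forces daemon mode explicitly and then waits for Redis's default port 6379; the port is an assumption and must be adjusted if the config sets a different `port`.

```yaml
- name: start redis
  shell: /usr/local/bin/redis-server /etc/redis.conf --daemonize yes
  tags:
    - start

- name: wait for redis to listen
  wait_for:
    port: 6379      # assumption: Redis default port
    timeout: 30
  tags:
    - start
```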

3. Set up the host group

The /etc/ansible/hosts file:

# vim /etc/ansible/hosts

[redis_servers]

172.16.7.152

4. Use the role

Next to the roles directory, create a redis.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim redis.yml

- hosts: redis_servers
  remote_user: root
  roles:
    - redis_server

Run the playbook to install Redis:

[root@node1 ansible_playbooks]# ansible-playbook redis.yml

To use the redis_server role, adapt the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to your environment.

3.9 Hadoop role

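This is a fully distributed cluster deployment with high availability for both the NameNode and the ResourceManager.

Prepare the following in advance: /etc/hosts on every cluster node, time synchronization across the nodes, and passwordless SSH login from the relevant master nodes to the other nodes.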

[root@node1 hadoop]# pwd

/root/ansible_playbooks/roles/hadoop

[root@node1 hadoop]# ls

files handlers meta tasks templates vars

1. Upload the package

Put hadoop-2.7.2.tar.gz into /root/ansible_playbooks/roles/hadoop/files/.

2. Write the tasks

- name: install dependency package
  yum: name={{ item }} state=present
  with_items:
    - openssh
    - rsync

- name: create hadoop user
  user: name=hadoop password={{password}}
  vars:
    # created with:
    # python -c 'import crypt; print crypt.crypt("This is my Password", "$1$SomeSalt$")'
    # >>> import crypt
    # >>> crypt.crypt('wisedu123', '$1$bigrandomsalt$')
    # '$1$bigrando$wzfZ2ifoHJPvaMuAelsBq0'
    password: '$1$bigrando$wzfZ2ifoHJPvaMuAelsBq0'

- name: copy and unzip hadoop
  # unarchive's owner/group options only take effect on the directory itself
  unarchive: src=hadoop-2.7.2.tar.gz dest=/usr/local/

- name: create hadoop soft link
  file: src=/usr/local/hadoop-2.7.2 dest=/usr/local/hadoop state=link

- name: create hadoop logs directory
  file: dest=/usr/local/hadoop/logs mode= state=directory

- name: change hadoop soft link owner and group
  # recurse=yes applies the change to every file in the directory
  file: path=/usr/local/hadoop owner=hadoop group=hadoop recurse=yes

- name: change hadoop-2.7.2 directory owner and group
  # recurse=yes applies the change to every file in the directory
  file: path=/usr/local/hadoop-2.7.2 owner=hadoop group=hadoop recurse=yes

- name: set hadoop env
  lineinfile: dest={{env_file}} insertafter="{{item.position}}" line="{{item.value}}" state=present
  with_items:
    - {position: EOF, value: "\n"}
    - {position: EOF, value: "# Hadoop environment"}
    - {position: EOF, value: "export HADOOP_HOME=/usr/local/hadoop"}
    - {position: EOF, value: "export PATH=$PATH:${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin"}

- name: enforce env
  # sourcing only affects this one-off shell; new login shells read /etc/profile on their own
  shell: source {{env_file}}

- name: install configuration file hadoop-env.sh.j2 for hadoop
  template: src=hadoop-env.sh.j2 dest=/usr/local/hadoop/etc/hadoop/hadoop-env.sh owner=hadoop group=hadoop

- name: install configuration file core-site.xml.j2 for hadoop
  template: src=core-site.xml.j2 dest=/usr/local/hadoop/etc/hadoop/core-site.xml owner=hadoop group=hadoop

- name: install configuration file hdfs-site.xml.j2 for hadoop
  template: src=hdfs-site.xml.j2 dest=/usr/local/hadoop/etc/hadoop/hdfs-site.xml owner=hadoop group=hadoop

- name: install configuration file mapred-site.xml.j2 for hadoop
  template: src=mapred-site.xml.j2 dest=/usr/local/hadoop/etc/hadoop/mapred-site.xml owner=hadoop group=hadoop

- name: install configuration file yarn-site.xml.j2 for hadoop
  template: src=yarn-site.xml.j2 dest=/usr/local/hadoop/etc/hadoop/yarn-site.xml owner=hadoop group=hadoop

- name: install configuration file slaves.j2 for hadoop
  template: src=slaves.j2 dest=/usr/local/hadoop/etc/hadoop/slaves owner=hadoop group=hadoop

- name: install configuration file hadoop-daemon.sh.j2 for hadoop
  template: src=hadoop-daemon.sh.j2 dest=/usr/local/hadoop/sbin/hadoop-daemon.sh owner=hadoop group=hadoop

- name: install configuration file yarn-daemon.sh.j2 for hadoop
  template: src=yarn-daemon.sh.j2 dest=/usr/local/hadoop/sbin/yarn-daemon.sh owner=hadoop group=hadoop

# make sure ZooKeeper is running before starting Hadoop.

# start journalnode
- name: start journalnode
  shell: /usr/local/hadoop/sbin/hadoop-daemon.sh start journalnode
  become: true
  become_method: su
  become_user: hadoop
  when: datanode == "true"

# format namenode
- name: format active namenode hdfs
  shell: /usr/local/hadoop/bin/hdfs namenode -format
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_active == "true"

- name: start active namenode hdfs
  shell: /usr/local/hadoop/sbin/hadoop-daemon.sh start namenode
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_active == "true"

- name: format standby namenode hdfs
  shell: /usr/local/hadoop/bin/hdfs namenode -bootstrapStandby
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_standby == "true"

- name: stop active namenode hdfs
  shell: /usr/local/hadoop/sbin/hadoop-daemon.sh stop namenode
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_active == "true"

# format ZKFC
- name: format ZKFC
  shell: /usr/local/hadoop/bin/hdfs zkfc -formatZK
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_active == "true"

# start hadoop cluster
- name: start namenode
  shell: /usr/local/hadoop/sbin/start-dfs.sh
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_active == "true"

- name: start yarn
  shell: /usr/local/hadoop/sbin/start-yarn.sh
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_active == "true"

- name: start standby rm
  shell: /usr/local/hadoop/sbin/yarn-daemon.sh start resourcemanager
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_standby == "true"
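`hdfs namenode -format` wipes the NameNode metadata, so re-running this role against a live cluster would be destructive. One possible guard, sketched below, uses the shell module's `creates` argument so the format step is skipped once the name directory has been initialized. The variable `namenode_name_dir` is hypothetical and must match dfs.namenode.name.dir in hdfs-site.xml.j2; `-nonInteractive` makes the command abort instead of prompting if the directory already exists.

```yaml
- name: format active namenode hdfs (only once)
  shell: /usr/local/hadoop/bin/hdfs namenode -format -nonInteractive
  args:
    creates: "{{ namenode_name_dir }}/current/VERSION"   # hypothetical var = dfs.namenode.name.dir
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_active == "true"
```

The same `creates` guard could be applied to the `-bootstrapStandby` task on the standby NameNode.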

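3. Write the templates

Put the templates core-site.xml.j2, hadoop-daemon.sh.j2, hadoop-env.sh.j2, hdfs-site.xml.j2, mapred-site.xml.j2, slaves.j2, yarn-daemon.sh.j2 and yarn-site.xml.j2 into the templates directory.

The configuration files are too long to reproduce here; see them on GitHub at https://github.com/jkzhao/ansible-godseye. Their settings are not explained again either, since earlier posts on this blog already cover them.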

4. Write the vars

[root@node1 hadoop]# vim vars/main.yml

env_file: /etc/profile

# hadoop-env.sh.j2 file variables.
JAVA_HOME: /usr/java/jdk1.8.0_73

# core-site.xml.j2 file variables.
ZK_NODE1: node1:
ZK_NODE2: node2:
ZK_NODE3: node3:

# hdfs-site.xml.j2 file variables.
NAMENODE1_HOSTNAME: node1
NAMENODE2_HOSTNAME: node2
DATANODE1_HOSTNAME: node3
DATANODE2_HOSTNAME: node4
DATANODE3_HOSTNAME: node5

# mapred-site.xml.j2 file variables.
MR_MODE: yarn

# yarn-site.xml.j2 file variables.
RM1_HOSTNAME: node1
RM2_HOSTNAME: node2

5. Set up the host group

The /etc/ansible/hosts file:

# vim /etc/ansible/hosts

[hadoop]

172.16.7.151 namenode_active=true namenode_standby=false datanode=false

172.16.7.152 namenode_active=false namenode_standby=true datanode=false

172.16.7.153 namenode_active=false namenode_standby=false datanode=true

172.16.7.154 namenode_active=false namenode_standby=false datanode=true

172.16.7.155 namenode_active=false namenode_standby=false datanode=true

6. Use the role

Next to the roles directory, create a hadoop.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim hadoop.yml

- hosts: hadoop
  remote_user: root
  roles:
    - jdk8
    - hadoop

Run the playbook to install the Hadoop cluster:

[root@node1 ansible_playbooks]# ansible-playbook hadoop.yml

To use the hadoop role, adapt the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to your environment.
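After the playbook finishes, the HA state of both NameNodes and both ResourceManagers can also be queried through Ansible. A sketch of a small helper playbook; the service IDs nn1/nn2 and rm1/rm2 are assumptions and must match dfs.ha.namenodes.* and yarn.resourcemanager.ha.rm-ids in the templates, which are not reproduced in this post.

```yaml
# check_ha.yml (hypothetical helper playbook)
- hosts: hadoop
  remote_user: root
  tasks:
    - name: query namenode and resourcemanager HA state
      shell: |
        /usr/local/hadoop/bin/hdfs haadmin -getServiceState nn1
        /usr/local/hadoop/bin/hdfs haadmin -getServiceState nn2
        /usr/local/hadoop/bin/yarn rmadmin -getServiceState rm1
        /usr/local/hadoop/bin/yarn rmadmin -getServiceState rm2
      become: true
      become_method: su
      become_user: hadoop
      register: ha_state
      changed_when: false          # read-only query, never reports a change
      when: namenode_active == "true"

    - name: show HA state
      debug:
        var: ha_state.stdout_lines
      when: namenode_active == "true"
```

A healthy cluster should report one active and one standby for each pair.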

3.10 Spark role

Spark is deployed in Standalone mode (no HA).

[root@node1 spark]# pwd

/root/ansible_playbooks/roles/spark

[root@node1 spark]# ls

files handlers meta tasks templates vars

1. Upload the packages

Put scala-2.10.6.tgz and spark-1.6.1-bin-hadoop2.6.tgz into /root/ansible_playbooks/roles/spark/files/.

2. Write the tasks

- name: copy and unzip scala
  unarchive: src=scala-2.10.6.tgz dest=/usr/local/

- name: set scala env
  lineinfile: dest={{env_file}} insertafter="{{item.position}}" line="{{item.value}}" state=present
  with_items:
    - {position: EOF, value: "\n"}
    - {position: EOF, value: "# Scala environment"}
    - {position: EOF, value: "export SCALA_HOME=/usr/local/scala-2.10.6"}
    - {position: EOF, value: "export PATH=$SCALA_HOME/bin:$PATH"}

- name: copy and unzip spark
  unarchive: src=spark-1.6.1-bin-hadoop2.6.tgz dest=/usr/local/

- name: rename spark directory
  command: mv /usr/local/spark-1.6.1-bin-hadoop2.6 /usr/local/spark-1.6.1

- name: set spark env
  lineinfile: dest={{env_file}} insertafter="{{item.position}}" line="{{item.value}}" state=present
  with_items:
    - {position: EOF, value: "\n"}
    - {position: EOF, value: "# Spark environment"}
    - {position: EOF, value: "export SPARK_HOME=/usr/local/spark-1.6.1"}
    - {position: EOF, value: "export PATH=$SPARK_HOME/bin:$PATH"}

- name: enforce env
  # sourcing only affects this one-off shell; new login shells read /etc/profile on their own
  shell: source {{env_file}}

- name: install configuration file slaves for spark
  template: src=slaves.j2 dest=/usr/local/spark-1.6.1/conf/slaves

- name: install configuration file spark-env.sh for spark
  template: src=spark-env.sh.j2 dest=/usr/local/spark-1.6.1/conf/spark-env.sh

- name: start spark cluster
  shell: /usr/local/spark-1.6.1/sbin/start-all.sh
  tags:
    - start
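Two of the Spark tasks behave unexpectedly on a re-run or across hosts. On a second run, `unarchive` extracts the tarball again and `mv` then moves the fresh directory inside the existing /usr/local/spark-1.6.1 rather than renaming it. And because the play targets every host in [spark], start-all.sh would also launch a master on each node. A sketch of these tasks adjusted with `creates` and a `when` guard, assuming each host's short hostname (the `ansible_hostname` fact) matches SPARK_MASTER_HOSTNAME on the master:

```yaml
- name: copy and unzip spark (skipped once the renamed directory exists)
  unarchive: src=spark-1.6.1-bin-hadoop2.6.tgz dest=/usr/local/ creates=/usr/local/spark-1.6.1

- name: rename spark directory
  command: mv /usr/local/spark-1.6.1-bin-hadoop2.6 /usr/local/spark-1.6.1
  args:
    creates: /usr/local/spark-1.6.1

- name: start spark cluster (master node only)
  shell: /usr/local/spark-1.6.1/sbin/start-all.sh
  when: ansible_hostname == SPARK_MASTER_HOSTNAME   # assumes short hostnames match the vars
  tags:
    - start
```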

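3. Write the templates

Put the templates slaves.j2 and spark-env.sh.j2 into /root/ansible_playbooks/roles/spark/templates/.

The configuration files are too long to reproduce here; see them on GitHub at https://github.com/jkzhao/ansible-godseye. Their settings are not explained again either, since earlier posts on this blog already cover them.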

4. Write the vars

[root@node1 spark]# vim vars/main.yml

env_file: /etc/profile

# spark-env.sh.j2 file variables
JAVA_HOME: /usr/java/jdk1.8.0_73
SCALA_HOME: /usr/local/scala-2.10.6
SPARK_MASTER_HOSTNAME: node1
SPARK_HOME: /usr/local/spark-1.6.1
SPARK_WORKER_MEMORY: 256M
HIVE_HOME: /usr/local/apache-hive-2.1.-bin
HADOOP_CONF_DIR: /usr/local/hadoop/etc/hadoop/

# slaves.j2 file variables
SLAVE1_HOSTNAME: node2
SLAVE2_HOSTNAME: node3

5. Set up the host group

The /etc/ansible/hosts file:

# vim /etc/ansible/hosts

[spark]

172.16.7.151

172.16.7.152

172.16.7.153

6. Use the role

Next to the roles directory, create a spark.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim spark.yml

- hosts: spark
  remote_user: root
  roles:
    - spark

Run the playbook to install the Spark cluster:

[root@node1 ansible_playbooks]# ansible-playbook spark.yml

To use the spark role, adapt the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to your environment.

Note: all of the files are on GitHub at https://github.com/jkzhao/ansible-godseye.
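Since each component has its own playbook, they can also be chained into one top-level playbook. A sketch, assuming Ansible 2.4 or later for `import_playbook`; the order shown (JDK first, ZooKeeper before Kafka and Hadoop) follows the dependencies described above but is otherwise an illustrative choice.

```yaml
# site.yml (hypothetical top-level playbook)
- import_playbook: jdk.yml
- import_playbook: zk.yml
- import_playbook: kafka.yml
- import_playbook: es.yml
- import_playbook: db.yml
- import_playbook: redis.yml
- import_playbook: nginx.yml
- import_playbook: hadoop.yml
- import_playbook: spark.yml
```

With this in place, `ansible-playbook site.yml` deploys everything, and `ansible-playbook site.yml --tags start` runs only the tasks tagged `start`.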
