Whitening

The goal of whitening is to make the input less redundant; more formally, our desiderata are that our learning algorithms sees a training input where (i) the features are less correlated with each other, and (ii) the features all have the same variance.

example

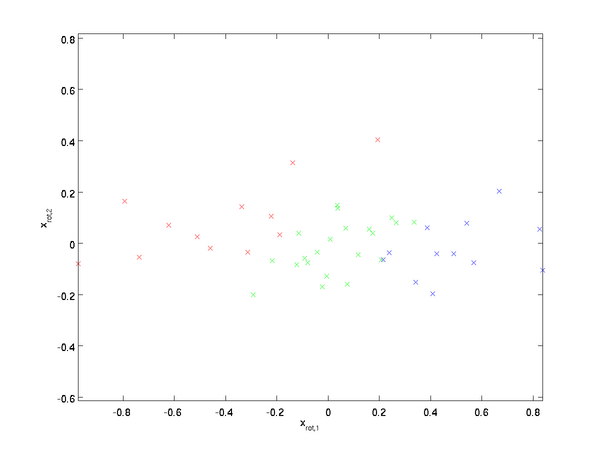

How can we make our input features uncorrelated with each other? We had already done this when computing

. Repeating our previous figure, our plot for

was:

The covariance matrix of this data is given by:

It is no accident that the diagonal values are

and

. Further, the off-diagonal entries are zero; thus,

and

are uncorrelated, satisfying one of our desiderata for whitened data (that the features be less correlated).

To make each of our input features have unit variance, we can simply rescale each feature

by

. Concretely, we define our whitened data

as follows:

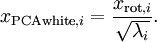

Plotting

, we get:

This data now has covariance equal to the identity matrix

. We say that

is our PCA whitened version of the data: The different components of

are uncorrelated and have unit variance.

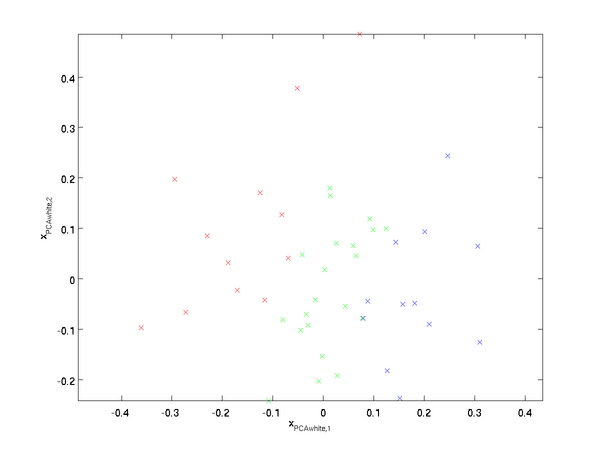

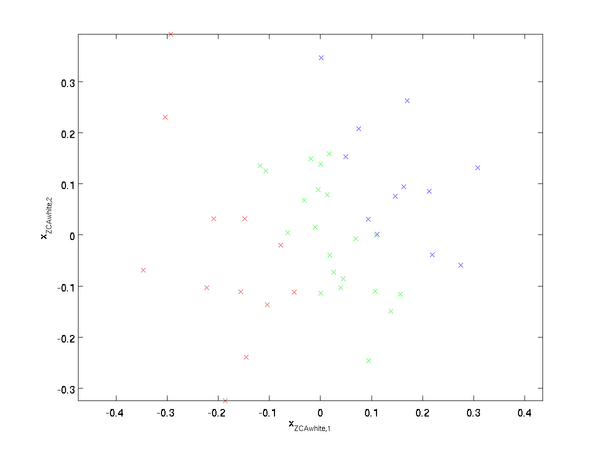

ZCA Whitening

Finally, it turns out that this way of getting the data to have covariance identity

isn't unique. Concretely, if

is any orthogonal matrix, so that it satisfies

(less formally, if

is a rotation/reflection matrix), then

will also have identity covariance. In ZCA whitening, we choose

. We define

Plotting

, we get:

It can be shown that out of all possible choices for

, this choice of rotation causes

to be as close as possible to the original input data

.

When using ZCA whitening (unlike PCA whitening), we usually keep all

dimensions of the data, and do not try to reduce its dimension.

Regularizaton

When implementing PCA whitening or ZCA whitening in practice, sometimes some of the eigenvalues

will be numerically close to 0, and thus the scaling step where we divide by

would involve dividing by a value close to zero; this may cause the data to blow up (take on large values) or otherwise be numerically unstable. In practice, we therefore implement this scaling step using a small amount of regularization, and add a small constant

to the eigenvalues before taking their square root and inverse:

When

takes values around

, a value of

might be typical.

For the case of images, adding

here also has the effect of slightly smoothing (or low-pass filtering) the input image. This also has a desirable effect of removing aliasing artifacts caused by the way pixels are laid out in an image, and can improve the features learned (details are beyond the scope of these notes).

ZCA whitening is a form of pre-processing of the data that maps it from

to

. It turns out that this is also a rough model of how the biological eye (the retina) processes images. Specifically, as your eye perceives images, most adjacent "pixels" in your eye will perceive very similar values, since adjacent parts of an image tend to be highly correlated in intensity. It is thus wasteful for your eye to have to transmit every pixel separately (via your optic nerve) to your brain. Instead, your retina performs a decorrelation operation (this is done via retinal neurons that compute a function called "on center, off surround/off center, on surround") which is similar to that performed by ZCA. This results in a less redundant representation of the input image, which is then transmitted to your brain.

Whitening的更多相关文章

- (六)6.8 Neurons Networks implements of PCA ZCA and whitening

PCA 给定一组二维数据,每列十一组样本,共45个样本点 -6.7644914e-01 -6.3089308e-01 -4.8915202e-01 ... -4.4722050e-01 -7.4 ...

- (六)6.7 Neurons Networks whitening

PCA的过程结束后,还有一个与之相关的预处理步骤,白化(whitening) 对于输入数据之间有很强的相关性,所以用于训练数据是有很大冗余的,白化的作用就是降低输入数据的冗余,通过白化可以达到(1)降 ...

- UFLDL教程之(三)PCA and Whitening exercise

Exercise:PCA and Whitening 第0步:数据准备 UFLDL下载的文件中,包含数据集IMAGES_RAW,它是一个512*512*10的矩阵,也就是10幅512*512的图像 ( ...

- Deep Learning学习随记(二)Vectorized、PCA和Whitening

接着上次的记,前面看了稀疏自编码.按照讲义,接下来是Vectorized, 翻译成向量化?暂且这么认为吧. Vectorized: 这节是老师教我们编程技巧了,这个向量化的意思说白了就是利用已经被优化 ...

- Modeling Filters and Whitening Filters

Colored and White Process White Process White Process,又称为White Noise(白噪声),其中white来源于白光,寓意着PSD的平坦分布,w ...

- 白化(Whitening): PCA 与 ZCA (转)

转自:findbill 本文讨论白化(Whitening),以及白化与 PCA(Principal Component Analysis) 和 ZCA(Zero-phase Component Ana ...

- CS229 6.8 Neurons Networks implements of PCA ZCA and whitening

PCA 给定一组二维数据,每列十一组样本,共45个样本点 -6.7644914e-01 -6.3089308e-01 -4.8915202e-01 ... -4.4722050e-01 -7.4 ...

- CS229 6.7 Neurons Networks whitening

PCA的过程结束后,还有一个与之相关的预处理步骤,白化(whitening) 对于输入数据之间有很强的相关性,所以用于训练数据是有很大冗余的,白化的作用就是降低输入数据的冗余,通过白化可以达到(1)降 ...

- PCA和Whitening

PCA: PCA的具有2个功能,一是维数约简(可以加快算法的训练速度,减小内存消耗等),一是数据的可视化. PCA并不是线性回归,因为线性回归是保证得到的函数是y值方面误差最小,而PCA是保证得到的函 ...

- 【DeepLearning】Exercise:PCA and Whitening

Exercise:PCA and Whitening 习题链接:Exercise:PCA and Whitening pca_gen.m %%============================= ...

随机推荐

- PostgreSQL Replication之第一章 理解复制概念(2)

1.2不同类型的复制 现在,您已经完全地理解了物理和理论的局限性,可以开始学习不同类型的复制了. 1.2.1 同步和异步复制 我们可以做的第一个区分是同步复制和异步复制的区别. 这是什么意思呢?假设我 ...

- button click event in jqxgrid jqwidgets

button click event in jqxgrid jqwidgets http://www.jqwidgets.com/jquery-widgets-demo/demos/jqxgrid/p ...

- css columns 与overflow结合的问题

想实现上面这样分栏,并且溢出滚动的效果.可是自己下面的代码只能得到横向滚动条.觉得出现这个情况觉得还蛮有意思的,特地记录一下. <li v-for="(item,index) in s ...

- 记intel杯比赛中各种bug与debug【其三】:intel chainer的安装与使用

现在在训练模型,闲着来写一篇 顺着这篇文章,顺利安装上intel chainer 再次感谢 大黄老鼠 intel chainer 使用 头一次使用chainer,本以为又入了一个大坑,实际尝试感觉非常 ...

- BZOJ 2141 排队(分块+树状数组)

题意 第一行为一个正整数n,表示小朋友的数量:第二行包含n个由空格分隔的正整数h1,h2,…,hn,依次表示初始队列中小朋友的身高:第三行为一个正整数m,表示交换操作的次数:以下m行每行包含两个正整数 ...

- 解决HMC在IE浏览器无法登录的问题(Java Applet的使用问题)

管理IBM的小型机必须要用到HMC(Hardware Management Console),有时候在使用测试环境使用的时候我们会把HMC装到自己电脑上的虚拟机里面,然后管理小型机,但是在虚拟机里面使 ...

- 今日SGU 6.6

sgu 177 题意:给你一个一开始全是白色的正方形,边长为n,然后问你经过几次染色之后,最后的矩形里面 还剩多少个白色的块 收获:矩形切割,我们可以这么做,离散处理,对于每次染黑的操作,看看后面有没 ...

- ECNUOJ 2615 会议安排

会议安排 Time Limit:1000MS Memory Limit:65536KB Total Submit:451 Accepted:102 Description 科研人员与相关领域的国内外同 ...

- Vs2012在Linux开发中的应用(1):开发环境

在Linux的开发过程中使用过多个IDE.code::blocks.eclipse.source insight.还有嵌入式厂商提供的各种IDE.如VisualDsp等,感觉总是不如vs强大好用.尽管 ...

- sql暂时表的创建

create table #simple /*仅仅对当前用户有效.其它用户无法使用,断掉连接后马上销毁该表*/ ( id int not null ) select * from #simple c ...