8. Linear Transformations

8.1 What Linearity Requires

Key points:

A linear transformation T takes vectors v to vectors T(v). Linearity requires:

\[T(cv + dw) = cT(v) + dT(w)\]

The input vectors v and outputs T(v) can be in \(R^n\), in matrix space, or in function space.

If A is m by n, \(T(x)=Ax\) is linear from the input space \(R^n\) to the output space \(R^m\).
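The linearity rule can be checked numerically for a matrix map. The sketch below is a minimal illustration in plain Python. The matrix A, the vectors v and w, and the scalars c and d are arbitrary values chosen only for the example.

```python
# Minimal sketch: check T(c*v + d*w) == c*T(v) + d*T(w) for T(x) = A x.
# A, v, w, c, d are illustrative values, not taken from the text.

def mat_vec(A, x):
    """Multiply an m-by-n matrix (list of rows) by a length-n vector."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]

def combo(c, v, d, w):
    """Return the linear combination c*v + d*w."""
    return [c * vi + d * wi for vi, wi in zip(v, w)]

A = [[1, 2, 0],
     [0, -1, 3]]        # 2 by 3, so T maps R^3 to R^2
v = [1, 4, -2]
w = [0, 5, 7]
c, d = 3, -2

lhs = mat_vec(A, combo(c, v, d, w))              # T(cv + dw)
rhs = combo(c, mat_vec(A, v), d, mat_vec(A, w))  # cT(v) + dT(w)
print(lhs, rhs, lhs == rhs)  # [7, -62] [7, -62] True
```

A single numeric check does not prove linearity. It only illustrates the identity that matrix multiplication guarantees for every choice of v, w, c, d.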

The derivative \(T(f)=\frac{df}{dx}\) is linear. The integral \(T^+(f)=\int^x_0f(t)\,dt\) is its pseudoinverse.

Derivative: \(1,x,x^2 \rightarrow 1,x\)

\[u = a + bx + cx^2 \\
\Downarrow \\
Au = \left[ \begin{matrix} 0&1&0 \\ 0&0&2 \end{matrix} \right]
\left[ \begin{matrix} a \\ b \\ c \end{matrix} \right]
= \left[ \begin{matrix} b \\ 2c \end{matrix} \right] \\
\Downarrow \\
\frac{du}{dx} = b + 2cx\]

Integration: \(1,x \rightarrow 1,x,x^2\)

\[\int^x_0(D+Et)\,dt = Dx + \frac{1}{2}Ex^2 \\
\Downarrow \\
\text{input } v = D + Ex \\
A^+v = \left[ \begin{matrix} 0&0 \\ 1&0 \\ 0&\frac{1}{2} \end{matrix} \right]
\left[ \begin{matrix} D \\ E \end{matrix} \right]
= \left[ \begin{matrix} 0 \\ D \\ \frac{1}{2}E \end{matrix} \right] \\
\Downarrow \\
T^+(v) = Dx + \frac{1}{2}Ex^2\]

The product ST of two linear transformations is still linear: \((ST)(v)=S(T(v))\).
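The derivative matrix A and the integral matrix \(A^+\) can be applied to coefficient lists directly. Composing the two transformations corresponds to multiplying their matrices. The sketch below is a minimal plain-Python illustration. It stores polynomials as coefficient lists in the bases \(1,x,x^2\) and \(1,x\), and uses `Fraction` so the 1/2 entry stays exact. The sample polynomial \(5 + 3x + 4x^2\) is an arbitrary choice.

```python
from fractions import Fraction

def mat_mul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def mat_vec(X, v):
    """Matrix (list of rows) times a vector."""
    return [sum(x * vi for x, vi in zip(row, v)) for row in X]

half = Fraction(1, 2)
A = [[0, 1, 0],        # d/dx: (a, b, c) for a + bx + cx^2  ->  (b, 2c) for b + 2cx
     [0, 0, 2]]
A_plus = [[0, 0],      # integral from 0 to x: (D, E) for D + Ex  ->  (0, D, E/2)
          [1, 0],
          [0, half]]

du = mat_vec(A, [5, 3, 4])          # u = 5 + 3x + 4x^2  ->  3 + 8x
integral = mat_vec(A_plus, [3, 8])  # v = 3 + 8x  ->  3x + 4x^2

# The matrix of a product ST is the product of the matrices.
A_A_plus = mat_mul(A, A_plus)   # derivative of the integral: identity on 1, x
A_plus_A = mat_mul(A_plus, A)   # integral of the derivative: the constant term is lost
print(du, integral)
print(A_A_plus)
print(A_plus_A)
```

The product \(AA^+\) is the 2 by 2 identity. The product \(A^+A\) is diag(0, 1, 1): integrating a derivative cannot recover the constant term. This is why \(A^+\) is a pseudoinverse rather than an inverse.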

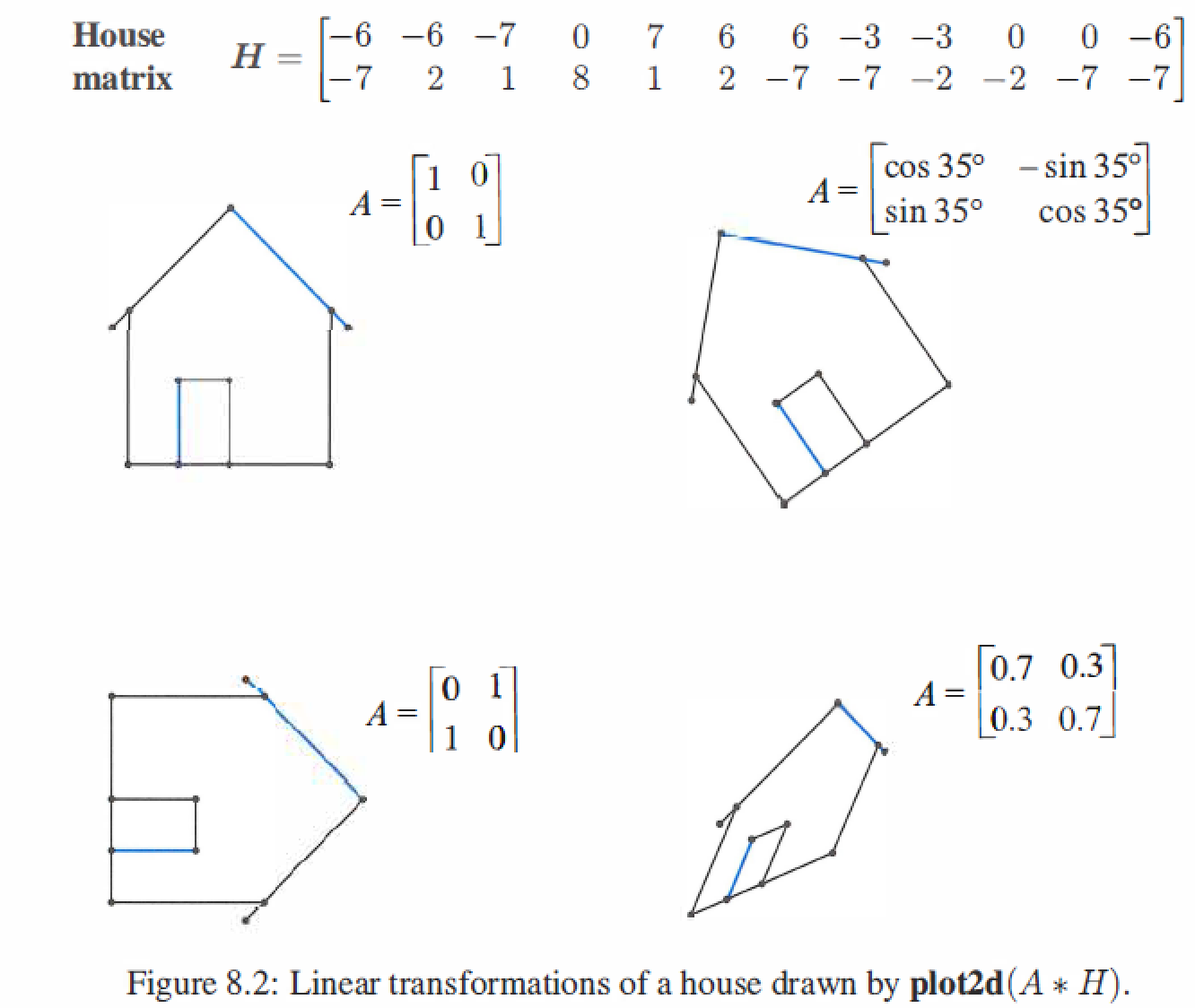

Linear transformations include rotations, stretches, and other linear maps.

8.2 The Matrix of a Linear Transformation

We can assign a matrix A to every linear transformation T.

For ordinary column vectors, the input v is in \(V=R^n\) and the output \(T(v)\) is in \(W=R^m\), so the matrix A for this transformation is m by n. Our choice of bases in V and W decides A.

8.2.1 Change of Basis

If \(T(v) = v\) for every v, then T is the identity transformation.

If the input basis equals the output basis, the matrix for T is \(I\).

If the input basis differs from the output basis, the matrix for T is \(B=W^{-1}V\), where the columns of V hold the input basis and the columns of W hold the output basis.

Example:

\[\text{input basis } [v_1 \ \ v_2] = \left[ \begin{matrix} 3&6 \\ 3&8 \end{matrix} \right] \\
\text{output basis } [w_1 \ \ w_2] = \left[ \begin{matrix} 3&0 \\ 1&2 \end{matrix} \right] \\
\Downarrow \\
v_1 = 1w_1 + 1w_2 \\
v_2 = 2w_1 + 3w_2 \\
\Downarrow \\
[w_1 \ \ w_2] [B] = [v_1 \ \ v_2] \\
\Downarrow \\
\left[ \begin{matrix} 3&0 \\ 1&2 \end{matrix} \right]
\left[ \begin{matrix} 1&2 \\ 1&3 \end{matrix} \right]
= \left[ \begin{matrix} 3&6 \\ 3&8 \end{matrix} \right]\]

When the input basis is in the columns of V and the output basis is in the columns of W, the change of basis matrix for the identity transformation \(T = I\) is \(B=W^{-1}V\).
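The example above can be reproduced by computing \(B = W^{-1}V\) exactly. The sketch below is a minimal plain-Python version for the 2 by 2 case. It inverts W with the adjugate formula and uses `Fraction` to avoid rounding. The helper names are illustrative.

```python
from fractions import Fraction

def inverse_2x2(M):
    """Exact inverse of a 2-by-2 matrix via the adjugate formula."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]

def mat_mul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

V = [[3, 6],
     [3, 8]]   # columns are the input basis v1, v2
W = [[3, 0],
     [1, 2]]   # columns are the output basis w1, w2

B = mat_mul(inverse_2x2(W), V)
print(B == [[1, 2], [1, 3]])  # True: v1 = 1w1 + 1w2, v2 = 2w1 + 3w2
```

For larger bases, one would normally solve \(WB = V\) rather than form \(W^{-1}\) explicitly. The 2 by 2 inverse just keeps the sketch short.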

Suppose the same vector u is written in the input basis of v's and in the output basis of w's:

\[u = c_1v_1 + \cdots + c_nv_n \\
u = d_1w_1 + \cdots + d_nw_n \\
\left[ \begin{matrix} v_1 & \cdots & v_n \end{matrix} \right]
\left[ \begin{matrix} c_1 \\ \vdots \\ c_n \end{matrix} \right]
= \left[ \begin{matrix} w_1 & \cdots & w_n \end{matrix} \right]
\left[ \begin{matrix} d_1 \\ \vdots \\ d_n \end{matrix} \right] \\
Vc = Wd \\
d = W^{-1}Vc = Bc\]

Here c holds the coordinates of u in the input basis, and d holds its coordinates in the output basis.
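Continuing with V, W, and \(B = W^{-1}V\) from the example in 8.2.1, the relation \(d = Bc\) can be checked by rebuilding u from both coordinate vectors. This is a minimal plain-Python sketch. The coordinate vector c = (2, 1) is a hypothetical choice.

```python
def mat_vec(M, x):
    """Matrix (list of rows) times a vector."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

V = [[3, 6], [3, 8]]   # input basis in the columns
W = [[3, 0], [1, 2]]   # output basis in the columns
B = [[1, 2], [1, 3]]   # B = W^{-1} V from the example in 8.2.1

c = [2, 1]             # hypothetical coordinates of u in the v-basis
u = mat_vec(V, c)      # u = 2 v1 + 1 v2
d = mat_vec(B, c)      # coordinates of the same u in the w-basis
print(u, d, mat_vec(W, d) == u)  # [12, 14] [4, 5] True
```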

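8.2.2 Constructing the Matrix

Suppose T transforms the space V to the space W. We choose a basis \(v_1,v_2,\dots,v_n\) for V and a basis \(w_1,w_2,\dots,w_m\) for W. To find column j of A, apply T to the input basis vector \(v_j\) and write the result in the output basis:

\[T(v_j) = a_{1j}w_1 + \cdots + a_{mj}w_m\]

These numbers \(a_{1j},\dots,a_{mj}\) go into column j of A.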

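Example: let T take the derivative of a cubic \(v = c_1 + c_2x + c_3x^2 + c_4x^3\), with input basis \(1,x,x^2,x^3\) and output basis \(1,x,x^2\):

\[T(v) = \frac{dv}{dx} = 1c_2 + 2c_3x + 3c_4x^2 \\
Ac = \left[ \begin{matrix} 0&1&0&0 \\ 0&0&2&0 \\ 0&0&0&3 \end{matrix} \right]
\left[ \begin{matrix} c_1 \\ c_2 \\ c_3 \\ c_4 \end{matrix} \right]
= \left[ \begin{matrix} c_2 \\ 2c_3 \\ 3c_4 \end{matrix} \right]\]

T takes the derivative, so A is the "derivative matrix".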
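The column-by-column rule can be turned into a small routine. It applies T to each input basis vector and stores the output-basis coordinates as column j. The sketch below is a minimal plain-Python illustration. It assumes both bases are monomials \(1, x, x^2, \dots\) and that T acts on coefficient lists. The function names `derivative` and `matrix_of` are hypothetical helpers.

```python
def derivative(coeffs):
    """d/dx of c0 + c1 x + c2 x^2 + ..., returned as a coefficient list."""
    return [k * coeffs[k] for k in range(1, len(coeffs))]

def matrix_of(T, n_in, n_out):
    """Column j of A holds the output-basis coordinates of T(v_j).

    Assumes the input basis is 1, x, ..., x^(n_in - 1), the output basis is
    1, x, ..., x^(n_out - 1), and that T maps coefficient lists to coefficient lists.
    """
    columns = []
    for j in range(n_in):
        v_j = [1 if i == j else 0 for i in range(n_in)]   # basis vector x^j
        t = T(v_j)
        if len(t) > n_out:
            raise ValueError("T(v_j) does not fit in the output basis")
        columns.append(t + [0] * (n_out - len(t)))         # pad to n_out entries
    # Transpose the list of columns into a list of rows.
    return [[columns[j][i] for j in range(n_in)] for i in range(n_out)]

A = matrix_of(derivative, n_in=4, n_out=3)
for row in A:
    print(row)
# [0, 1, 0, 0]
# [0, 0, 2, 0]
# [0, 0, 0, 3]
```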

8.2.3 Choosing the Best Bases

The same T is represented by different matrices when we choose different bases.

Perfect basis

Eigenvectors are the perfect basis vectors. They produce the eigenvalue matrix \(\Lambda = X^{-1}AX\).
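A small case where the eigenvectors are known by inspection shows \(X^{-1}AX\) becoming diagonal. In this minimal plain-Python sketch, the symmetric matrix A = [[2, 1], [1, 2]] is an illustrative choice. Its eigenvectors are (1, 1) with eigenvalue 3 and (1, -1) with eigenvalue 1.

```python
from fractions import Fraction

def inverse_2x2(M):
    """Exact inverse of a 2-by-2 matrix via the adjugate formula."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]

def mat_mul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

A = [[2, 1],
     [1, 2]]
X = [[1, 1],
     [1, -1]]   # columns: eigenvector (1, 1) for lambda = 3, (1, -1) for lambda = 1

Lam = mat_mul(mat_mul(inverse_2x2(X), A), X)
print(Lam == [[3, 0], [0, 1]])  # True
```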

Input basis = output basis

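When the input and output bases are the same basis of b's (the columns of B), the matrix for T in that basis is similar to the matrix A for T in the standard basis:

\[A_{\text{new}} = B^{-1}AB\]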

Different bases

If A is not symmetric, or not even square, we can choose the right singular vectors \(v_1,\dots,v_n\) as the input basis and the left singular vectors \(u_1,\dots,u_m\) as the output basis. Then the matrix for T is the diagonal matrix of singular values:

\[U^{-1}AV = \Sigma\]

\(\Sigma\) is "isometric" to A.

Definition: \(C=Q^{-1}_1AQ_{2}\) is isometric to A if \(Q_1\) and \(Q_2\) are orthogonal.
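To see \(U^{-1}AV = \Sigma\) concretely, here is a minimal plain-Python sketch with a matrix chosen by hand so that its singular vectors can be read off. For A = [[0, 2], [1, 0]] we have \(A v_1 = 2u_1\) with \(v_1 = (0,1)\), \(u_1 = (1,0)\), and \(A v_2 = 1u_2\) with \(v_2 = (1,0)\), \(u_2 = (0,1)\). The example is illustrative and is not a general SVD routine.

```python
def mat_mul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def transpose(M):
    """Transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*M)]

A = [[0, 2],
     [1, 0]]
V = [[0, 1],
     [1, 0]]   # right singular vectors in the columns: v1 = (0, 1), v2 = (1, 0)
U = [[1, 0],
     [0, 1]]   # left singular vectors in the columns: u1 = (1, 0), u2 = (0, 1)

I = [[1, 0], [0, 1]]
assert mat_mul(transpose(U), U) == I and mat_mul(transpose(V), V) == I  # both orthogonal

# For an orthogonal U, the inverse U^{-1} equals the transpose U^T.
Sigma = mat_mul(mat_mul(transpose(U), A), V)
print(Sigma)  # [[2, 0], [0, 1]]
```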

8.2.4 The Search for a Good Basis

Key goals: the basis should be fast to compute with, and few of its vectors should be needed.

- \(B_{in} = B_{out} =\) eigenvector matrix X. Then \(X^{-1}AX\) = eigenvalues in \(\Lambda\).

- \(B_{in} = V,\ B_{out} = U\): singular vectors of A. Then \(U^{-1}AV\) = singular values in \(\Sigma\).

- \(B_{in} = B_{out} =\) generalized eigenvectors of A. Then \(B^{-1}AB\) = Jordan form \(J\).

- \(B_{in} = B_{out} =\) Fourier matrix F. Then \(Fx\) is a Discrete Fourier Transform of x.

- The Fourier basis: \(1, \sin x, \cos x, \sin 2x, \cos 2x, \dots\)

- The Legendre basis: \(1, x, x^2 - \frac{1}{3}, x^3 - \frac{3}{5}x, \dots\)

- The Chebyshev basis: \(1, x, 2x^2 - 1, 4x^3 - 3x, \dots\)

- The wavelet basis.
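Two of the polynomial bases above can be generated mechanically, which is a useful check on the listed terms. Applying Gram-Schmidt to \(1, x, x^2, x^3\) with the inner product \(\int_{-1}^{1} p(x)q(x)\,dx\) gives the Legendre polynomials. The recurrence \(T_{k+1} = 2xT_k - T_{k-1}\) gives the Chebyshev polynomials. This is a minimal plain-Python sketch with polynomials stored as coefficient lists, lowest degree first. The Legendre polynomials come out monic (leading coefficient 1), matching the list. The usual \(P_n\) differ from them by constant factors.

```python
from fractions import Fraction

def inner(p, q):
    """<p, q> = integral of p(x) q(x) over [-1, 1] for coefficient lists p, q."""
    total = Fraction(0)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            if (i + j) % 2 == 0:              # odd powers integrate to 0 on [-1, 1]
                total += a * b * Fraction(2, i + j + 1)
    return total

def gram_schmidt_monomials(n):
    """Orthogonalize 1, x, ..., x^(n-1) on [-1, 1]; each result stays monic."""
    basis = []
    for k in range(n):
        p = [Fraction(0)] * k + [Fraction(1)]   # the monomial x^k
        for q in basis:
            coef = inner(p, q) / inner(q, q)
            # Subtract the projection onto q (q has lower degree than p).
            p = [pi - coef * (q[i] if i < len(q) else 0) for i, pi in enumerate(p)]
        basis.append(p)
    return basis

def chebyshev(n):
    """First n Chebyshev polynomials from T_{k+1} = 2x T_k - T_{k-1}."""
    T = [[1], [0, 1]]
    while len(T) < n:
        prev, cur = T[-2], T[-1]
        nxt = [0] + [2 * c for c in cur]   # 2x T_k
        for i, c in enumerate(prev):
            nxt[i] -= c                    # minus T_{k-1}
        T.append(nxt)
    return T[:n]

legendre = gram_schmidt_monomials(4)
print([[str(c) for c in p] for p in legendre])
# [['1'], ['0', '1'], ['-1/3', '0', '1'], ['0', '-3/5', '0', '1']]
print(chebyshev(4))
# [[1], [0, 1], [-1, 0, 2], [0, -3, 0, 4]]
```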
