TensorFlow example: forward pass, saving a ckpt, viewing it in TensorBoard, converting ckpt to pb, and pb to SNPE DLC

References:

TensorFlow custom model export: converting .ckpt format to .pb format

TensorFlow model saving and restoring


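Log files

Input node, followed by image height, image width, and number of image channels:

input0 6,6,3

Output node:

--out_node add

The frozen graph is then converted to DLC with:

snpe-tensorflow-to-dlc --graph ./simple_snpe_log/model200.pb -i input0 6,6,3 --out_node add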
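When the input size or node names change, the same command can be assembled from its parts. The sketch below is only an illustration: `build_snpe_command` is a hypothetical helper name, it uses only the flags that appear in the command above, and it prints the command rather than running SNPE.

```python
import shlex


def build_snpe_command(graph_path, input_node, input_dims, output_node):
    """Assemble the snpe-tensorflow-to-dlc argument list (hypothetical helper)."""
    # Dimensions are passed as height,width,channels, e.g. (6, 6, 3) -> "6,6,3".
    dims = ",".join(str(d) for d in input_dims)
    return [
        "snpe-tensorflow-to-dlc",
        "--graph", graph_path,
        "-i", input_node, dims,
        "--out_node", output_node,
    ]


args = build_snpe_command("./simple_snpe_log/model200.pb", "input0", (6, 6, 3), "add")
command = " ".join(shlex.quote(a) for a in args)
print(command)
```

The argument list could be handed to `subprocess.run(args)` on a machine where the SNPE tools are on the PATH.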

#coding:utf-8

#http://blog.csdn.net/zhuiqiuk/article/details/53376283

#http://blog.csdn.net/gan_player/article/details/77586489

from __future__ import absolute_import, unicode_literals

import tensorflow as tf

import shutil

import os.path

from tensorflow.python.framework import graph_util

import mxnet as mx

import numpy as np

import random

import cv2

from time import sleep

from easydict import EasyDict as edict

import logging

import math

import tensorflow as tf

import numpy as np def FullyConnected(input, fc_weight, fc_bias, name):

fc = tf.matmul(input, fc_weight) + fc_bias

return fc def inference(body, name_class,outchannel):

wkernel = 3

inchannel = body.get_shape()[3].value

conv_weight = np.arange(wkernel * wkernel * inchannel * outchannel,dtype=np.float32).reshape((outchannel,inchannel,wkernel,wkernel))

conv_weight = conv_weight / (outchannel*inchannel*wkernel*wkernel)

print("conv_weight ", conv_weight)

conv_weight = conv_weight.transpose(2,3,1,0)

conv_weight = tf.Variable(conv_weight, dtype=np.float32, name = "conv_weight")

body = tf.nn.conv2d(body, conv_weight, strides=[1, 1, 1, 1], padding='SAME', name = "conv0")

conv = body

conv_shape = body.get_shape()

dim = conv_shape[1].value * conv_shape[2].value * conv_shape[3].value

body = tf.reshape(body, [1, dim], name = "fc0")

fc_weight = np.ones((dim, name_class))

fc_bias = np.zeros((1, name_class))

fc_weight = tf.Variable(fc_weight, dtype=np.float32, name="fc_weight")

fc_bias = tf.Variable(fc_bias, dtype=np.float32, name="fc_bias")

# tf.constant(100,dtype=np.float32, shape=(body.get_shape()[1] * body.get_shape()[2] * body.get_shape()[3], name_class])

# fc_bias = tf.constant(10, dtype=np.float32, shape=(1, name_class])

body = FullyConnected(body, fc_weight, fc_bias, "fc0")

return conv, body export_dir = "simple_snpe_log"

def saveckpt():

height = 6

width = 6

inchannel = 3

outchannel = 3

graph = tf.get_default_graph()

with tf.Graph().as_default():

input_image = tf.placeholder("float", [1, height, width, inchannel], name = "input0")

conv, logdit = inference(input_image,10,outchannel)

init = tf.global_variables_initializer()

with tf.Session() as sess:

sess.run(init)

img = np.arange(height * width * inchannel, dtype=np.float32).reshape((1,inchannel,height,width)) \

/ (1 * inchannel * height * width) * 255.0 - 127.5

print("img",img)

img = img.transpose(0,2,3,1)

import time

since = time.time()

fc = sess.run(logdit,{input_image:img})

conv = sess.run(conv, {input_image: img})

time_elapsed = time.time() - since

print("tf inference time ", str(time_elapsed))

print("conv", conv.transpose(0, 2, 3, 1))

print("fc", fc)

#np.savetxt("tfconv.txt",fc)

#print( "fc", fc.transpose(0,3,2,1))

#np.savetxt("tfrelu.txt",fc.transpose(0,3,2,1)[0][0]) # #save ckpt

export_dir = "simple_snpe_log"

saver = tf.train.Saver()

step = 200

# if os.path.exists(export_dir):

# os.system("rm -rf " + export_dir)

if not os.path.isdir(export_dir): # Create the log directory if it doesn't exist

os.makedirs(export_dir) checkpoint_file = os.path.join(export_dir, 'model.ckpt')

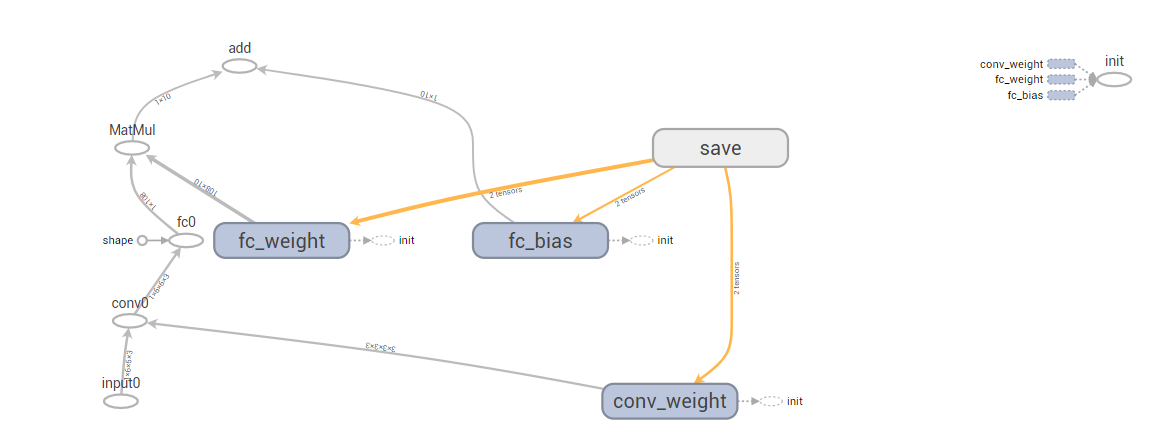

saver.save(sess, checkpoint_file, global_step=step) def LoadModelToTensorBoard():

graph = tf.get_default_graph()

checkpoint_file = os.path.join(export_dir, 'model.ckpt-200.meta')

saver = tf.train.import_meta_graph(checkpoint_file)

print(saver)

summary_write = tf.summary.FileWriter(export_dir , graph)

print(summary_write) def ckptToPb():

checkpoint_file = os.path.join(export_dir, 'model.ckpt-200.meta')

ckpt = tf.train.get_checkpoint_state(export_dir)

print("model ", ckpt.model_checkpoint_path)

saver = tf.train.import_meta_graph(ckpt.model_checkpoint_path +'.meta')

graph = tf.get_default_graph()

with tf.Session() as sess:

saver.restore(sess,ckpt.model_checkpoint_path)

height = 6

width = 6

input_image = tf.get_default_graph().get_tensor_by_name("input0:0")

fc0_output = tf.get_default_graph().get_tensor_by_name("add:0")

sess.run(tf.global_variables_initializer())

output_graph_def = tf.graph_util.convert_variables_to_constants(

sess, graph.as_graph_def(), ['add'])

model_name = os.path.join(export_dir, 'model200.pb')

with tf.gfile.GFile(model_name, "wb") as f:

f.write(output_graph_def.SerializeToString()) def PbTest():

with tf.Graph().as_default():

output_graph_def = tf.GraphDef()

output_graph_path = os.path.join(export_dir,'model200.pb')

with open(output_graph_path, "rb") as f:

output_graph_def.ParseFromString(f.read())

tf.import_graph_def(output_graph_def, name="") with tf.Session() as sess:

tf.initialize_all_variables().run()

height = 6

width = 6

inchannel = 3

outchannel = 3

input_image = tf.get_default_graph().get_tensor_by_name("input0:0")

fc0_output = tf.get_default_graph().get_tensor_by_name("add:0")

conv = tf.get_default_graph().get_tensor_by_name("conv0:0") img = np.arange(height * width * inchannel, dtype=np.float32).reshape((1,inchannel,height,width)) \

/ (1 * inchannel * height * width) * 255.0 - 127.5

print("img",img)

img = img.transpose(0,2,3,1)

import time

since = time.time()

fc0_output = sess.run(fc0_output,{input_image:img})

conv = sess.run(conv, {input_image: img})

time_elapsed = time.time() - since

print("tf inference time ", str(time_elapsed))

print("conv", conv.transpose(0, 2, 3, 1))

print("fc0_output", fc0_output) if __name__ == '__main__': saveckpt() #1

LoadModelToTensorBoard()#2

ckptToPb()#3

PbTest()#
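Because the image and the weights in the script are deterministic, the printed `conv` and `fc` values can be cross-checked without TensorFlow. The following is a minimal NumPy sketch of the same forward pass, not part of the original script: the function names are illustrative, and it assumes that `padding='SAME'` with a 3x3 kernel and stride 1 pads one zero pixel on each side and that the convolution is a cross-correlation (no kernel flip).

```python
import numpy as np

HEIGHT, WIDTH, IN_CH, OUT_CH, NUM_CLASS, K = 6, 6, 3, 3, 10, 3


def make_inputs():
    """Rebuild the script's image (as NHWC) and conv kernel (as HWIO)."""
    n = IN_CH * HEIGHT * WIDTH
    img = np.arange(n, dtype=np.float32).reshape((1, IN_CH, HEIGHT, WIDTH))
    img = img / n * 255.0 - 127.5
    img = img.transpose(0, 2, 3, 1)  # NCHW -> NHWC
    m = OUT_CH * IN_CH * K * K
    w = np.arange(m, dtype=np.float32).reshape((OUT_CH, IN_CH, K, K)) / m
    w = w.transpose(2, 3, 1, 0)  # (out, in, kh, kw) -> (kh, kw, in, out)
    return img, w


def conv2d_same(img, w):
    """Stride-1 cross-correlation with zero padding; NHWC input, HWIO kernel."""
    kh, kw, _, out_ch = w.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((0, 0), (ph, ph), (pw, pw), (0, 0)), mode="constant")
    n, h, wd, _ = img.shape
    out = np.zeros((n, h, wd, out_ch), dtype=np.float32)
    for y in range(h):
        for x in range(wd):
            patch = padded[:, y:y + kh, x:x + kw, :]  # (n, kh, kw, in)
            out[:, y, x, :] = np.tensordot(patch, w, axes=([1, 2, 3], [0, 1, 2]))
    return out


def forward(img, w):
    """Conv, flatten in NHWC order, then a fully connected layer of ones."""
    conv = conv2d_same(img, w)
    flat = conv.reshape(1, -1)
    fc_weight = np.ones((flat.shape[1], NUM_CLASS), dtype=np.float32)
    fc_bias = np.zeros((1, NUM_CLASS), dtype=np.float32)
    return conv, np.dot(flat, fc_weight) + fc_bias


conv, fc = forward(*make_inputs())
print("conv shape", conv.shape)
print("fc", fc)
```

Since the fully connected weights are all ones and the bias is zero, every one of the 10 outputs equals the sum of all convolution outputs, which gives a quick sanity check on the TensorFlow and SNPE results.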
