python机器学习经典算法代码示例及思维导图(数学建模必备)

最近几天学习了机器学习经典算法,通过此次学习入门了机器学习,并将经典算法的代码实现并记录下来,方便后续查找与使用。

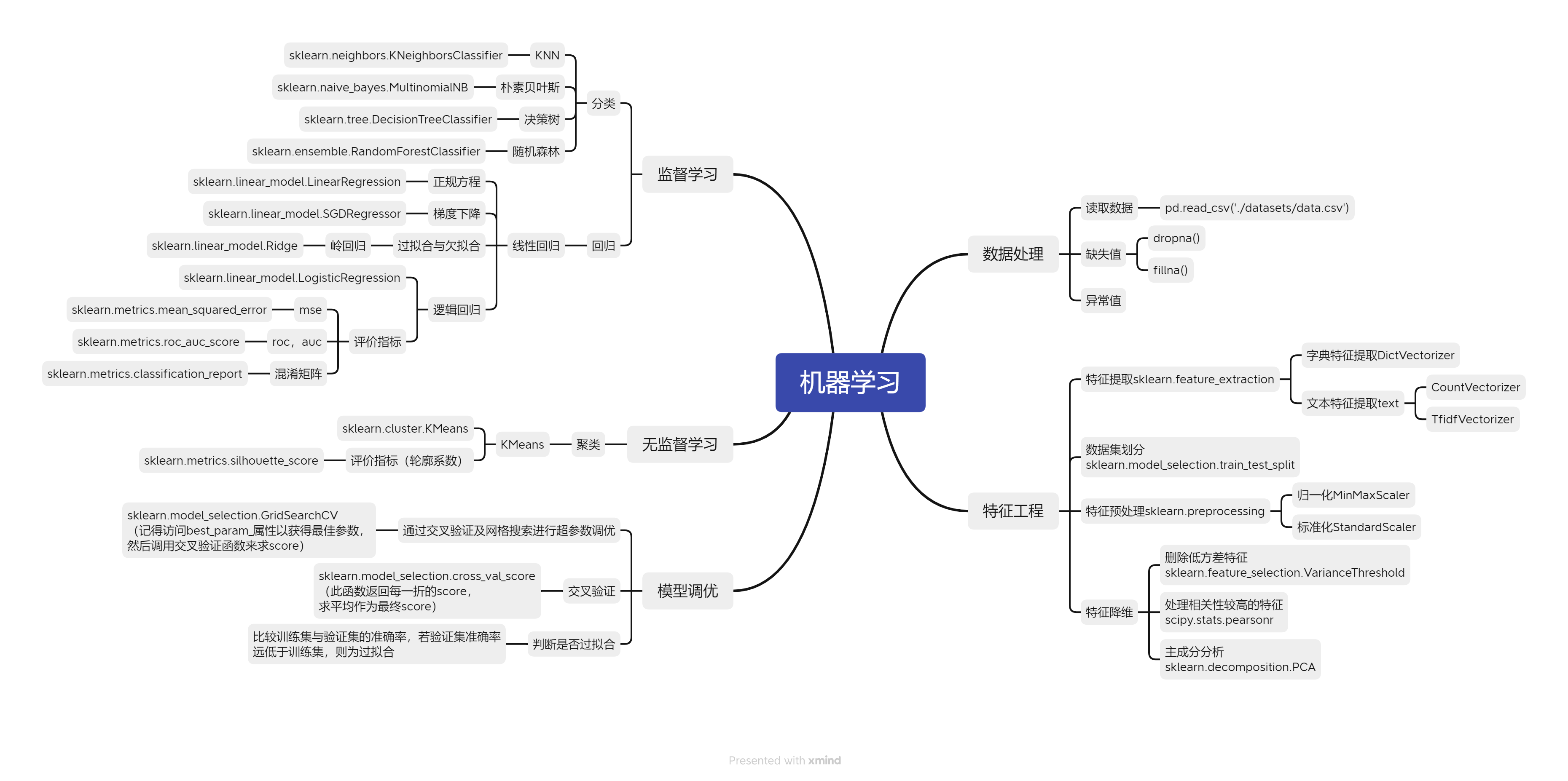

这次记录主要分为两部分:第一部分是机器学习思维导图,以框架的形式描述机器学习开发流程,并附有相关的具体python库,做索引使用;第二部分是相关算法的代码实现(其实就是调包),方便后面使用时直接复制粘贴,改改就可以用,尤其是在数学建模中很实用。

第一部分,思维导图:

第二部分,代码示例:

机器学习代码示例

导包

import numpy as np

import pandas as pd

from matplotlib.pyplot import plot as plt

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.feature_extraction import DictVectorizer

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.preprocessing import MinMaxScaler

from sklearn.preprocessing import StandardScaler

from sklearn.feature_selection import VarianceThreshold

from scipy.stats import pearsonr

from sklearn.model_selection import GridSearchCV

from sklearn.neighbors import KNeighborsClassifier

from sklearn.naive_bayes import MultinomialNB

from sklearn.tree import DecisionTreeClassifier, export_graphviz

from sklearn.ensemble import RandomForestClassifier

from sklearn.linear_model import LinearRegression, SGDRegressor, Ridge, LogisticRegression

from sklearn.metrics import mean_squared_error

from sklearn.metrics import classification_report

from sklearn.metrics import roc_auc_score

from sklearn.cluster import KMeans

from sklearn.metrics import silhouette_score

import joblib

特征工程

特征抽取

def dict_demo():

data = [{'city': '北京', 'temperature': 100}, {'city': '上海', 'temperature': 200},

{'city': '广州', 'temperature': 300}]

transfer = DictVectorizer()

data_new = transfer.fit_transform(data)

data_new = data_new.toarray()

print(data_new)

print(transfer.get_feature_names_out())

# dict_demo()

def count_demo():

data = ["I love love China", "I don't love China"]

transfer = CountVectorizer()

data_new = transfer.fit_transform(data)

data_new = data_new.toarray()

print(data_new)

print(transfer.get_feature_names_out())

# count_demo()

def chinese_demo(d):

tt = " ".join(list(jieba.cut(d)))

return tt

# data = [

# "晚风轻轻飘荡,心事都不去想,那失望也不失望,惆怅也不惆怅,都在风中飞扬",

# "晚风轻轻飘荡,随我迎波逐浪,那欢畅都更欢畅,幻想更幻想,就像 你还在身旁"]

# res = []

# for t in data:

# res.append(chinese_demo(t))

#

# transfer = TfidfVectorizer()

# new_data = transfer.fit_transform(res)

# new_data = new_data.toarray()

# print(new_data)

# print(transfer.get_feature_names_out())

数据预处理

def minmax_demo():

data = pd.read_csv("datasets/dating.txt")

data = data.iloc[:, 0:3]

print(data)

transfer = MinMaxScaler()

data_new = transfer.fit_transform(data)

print(data_new)

return None

# minmax_demo()

def standard_demo():

data = pd.read_csv("datasets/dating.txt")

data = data.iloc[:, 0:3]

print(data)

transfer = StandardScaler()

data_new = transfer.fit_transform(data)

print(data_new)

return None

# standard_demo()

def stats_demo():

data = pd.read_csv("./datasets/factor_returns.csv")

data = data.iloc[:, 1:10]

transfer = VarianceThreshold(threshold=10)

data_new = transfer.fit_transform(data)

print(data_new)

print(data_new.shape)

df = pd.DataFrame(data_new, columns=transfer.get_feature_names_out())

print(df)

# stats_demo()

def pear_demo():

data = pd.read_csv("./datasets/factor_returns.csv")

data = data.iloc[:, 1:10]

print(data.corr(method="pearson"))

# pear_demo()

模型训练

分类算法

KNN

# 读取数据

iris = load_iris()

# 数据集划分

x_train, x_test, y_train, y_test = train_test_split(iris.data, iris.target, test_size=0.3, random_state=42)

# 数据标准化

transfer = StandardScaler()

transfer.fit(x_train)

x_train = transfer.transform(x_train)

x_test = transfer.transform(x_test)

# 模型训练

estimator = KNeighborsClassifier(n_neighbors=i)

estimator.fit(x_train, y_train)

# 模型预测

y_predict = estimator.predict(x_test)

score = estimator.score(x_test, y_test)

print("score:", score)

朴素贝叶斯

new = fetch_20newsgroups(subset="all")

x_train, x_test, y_train, y_test = train_test_split(new.data, new.target, random_state=42)

# 文本特征提取

transfer = TfidfVectorizer()

transfer.fit(x_train)

x_train = transfer.transform(x_train)

x_test = transfer.transform(x_test)

estimator = MultinomialNB()

estimator.fit(x_train, y_train)

score = estimator.score(x_test, y_test)

print(score)

决策树

iris = load_iris()

x_train, x_test, y_train, y_test = train_test_split(iris.data, iris.target, test_size=0.3, random_state=42)

estimator = DecisionTreeClassifier(criterion='gini')

estimator.fit(x_train, y_train)

score = estimator.score(x_test, y_test)

print(score)

# 决策树可视化

export_graphviz(estimator, out_file='tree.dot', feature_names=iris.feature_names)

随机森林

x_train, x_test, y_train, y_test = train_test_split(x, y, train_size=0.7)

estimator = RandomForestClassifier(random_state=42, max_features='sqrt')

param_dict = {'n_estimators': range(10, 50), 'max_depth': range(5, 10)}

estimator = GridSearchCV(estimator=estimator, param_grid=param_dict, cv=3)

estimator.fit(x_train, y_train)

print(estimator.best_score_)

print(estimator.best_estimator_)

print(estimator.best_params_)

回归算法

线性回归

def demo1():

data_url = "http://lib.stat.cmu.edu/datasets/boston"

raw_df = pd.read_csv(data_url, sep="\s+", skiprows=22, header=None)

data = np.hstack([raw_df.values[::2, :], raw_df.values[1::2, :2]])

target = raw_df.values[1::2, 2]

x_train, x_test, y_train, y_test = train_test_split(data, target, train_size=0.7, random_state=42)

transfer = StandardScaler()

transfer.fit(x_train)

x_train = transfer.transform(x_train)

x_test = transfer.transform(x_test)

estimator = LinearRegression()

estimator.fit(x_train, y_train)

y_predict = estimator.predict(x_test)

mse = mean_squared_error(y_test, y_predict)

print("正规方程-", estimator.coef_)

print("正规方程-", estimator.intercept_)

print(mse)

def demo2():

data_url = "http://lib.stat.cmu.edu/datasets/boston"

raw_df = pd.read_csv(data_url, sep="\s+", skiprows=22, header=None)

data = np.hstack([raw_df.values[::2, :], raw_df.values[1::2, :2]])

target = raw_df.values[1::2, 2]

x_train, x_test, y_train, y_test = train_test_split(data, target, train_size=0.7, random_state=42)

transfer = StandardScaler()

transfer.fit(x_train)

x_train = transfer.transform(x_train)

x_test = transfer.transform(x_test)

estimator = SGDRegressor()

estimator.fit(x_train, y_train)

y_predict = estimator.predict(x_test)

mse = mean_squared_error(y_test, y_predict)

print("梯度下降", estimator.coef_)

print("梯度下降", estimator.intercept_)

print(mse)

岭回归

def demo3():

data_url = "http://lib.stat.cmu.edu/datasets/boston"

raw_df = pd.read_csv(data_url, sep="\s+", skiprows=22, header=None)

data = np.hstack([raw_df.values[::2, :], raw_df.values[1::2, :2]])

target = raw_df.values[1::2, 2]

x_train, x_test, y_train, y_test = train_test_split(data, target, train_size=0.7, random_state=42)

transfer = StandardScaler()

transfer.fit(x_train)

x_train = transfer.transform(x_train)

x_test = transfer.transform(x_test)

estimator = Ridge()

estimator.fit(x_train, y_train)

y_predict = estimator.predict(x_test)

mse = mean_squared_error(y_test, y_predict)

print("梯度下降", estimator.coef_)

print("梯度下降", estimator.intercept_)

print(mse)

逻辑回归

def demo4():

data = pd.read_csv("./datasets/breast-cancer-wisconsin.data",

names=['Sample code number', 'Clump Thickness', 'Uniformity of Cell Size',

'Uniformity of Cell Shape',

'Marginal Adhesion', 'Single Epithelial Cell Size', 'Bare Nuclei', 'Bland Chromatin',

' Normal Nucleoli', 'Mitoses', 'Class'])

data.replace(to_replace="?", value=np.nan, inplace=True)

data.dropna(inplace=True)

x = data.iloc[:, 1:-1]

y = data['Class']

x_train, x_test, y_train, y_test = train_test_split(x, y, train_size=0.7, random_state=42)

transfer = StandardScaler()

transfer.fit(x_train)

x_train = transfer.transform(x_train)

x_test = transfer.transform(x_test)

estimator = LogisticRegression()

estimator.fit(x_train, y_train)

# joblib.dump(estimator, 'estimator.pkl')

# estimator = joblib.load('estimator.pkl')

y_predict = estimator.predict(x_test)

print(estimator.coef_)

print(estimator.intercept_)

score = estimator.score(x_test, y_test)

print(score)

report = classification_report(y_test, y_predict, labels=[2, 4], target_names=["良性", "恶性"])

print(report)

auc = roc_auc_score(y_test, y_predict)

print(auc)

聚类算法

KMeans

data = pd.read_csv("./datasets/factor_returns.csv")

data = data.iloc[:, 1:10]

transfer = VarianceThreshold(threshold=10)

data_new = transfer.fit_transform(data)

# df = pd.DataFrame(data_new, columns=transfer.get_feature_names_out())

estimator = KMeans()

estimator.fit(data_new)

y_predict = estimator.predict(data_new)

print(y_predict)

s = silhouette_score(data_new, y_predict)

print(s)

模型调优

# 网格搜索与交叉验证:以KNN为例

iris = load_iris()

x_train, x_test, y_train, y_test = train_test_split(iris.data, iris.target, test_size=0.3, random_state=42)

transfer = StandardScaler()

transfer.fit(x_train)

x_train = transfer.transform(x_train)

x_test = transfer.transform(x_test)

estimator = KNeighborsClassifier()

# 网格搜素设置

para_dict = {"n_neighbors": range(1, 10)}

estimator = GridSearchCV(estimator, para_dict, cv=10)

estimator.fit(x_train, y_train)

# 最佳参数

print("best_score_:", estimator.best_score_)

print("best_estimator_:", estimator.best_estimator_)

print("best_params_:", estimator.best_params_)

本文作者:CodingOrange

本文链接:https://www.cnblogs.com/CodingOrange/p/17642747.html

转载请注明出处!

python机器学习经典算法代码示例及思维导图(数学建模必备)的更多相关文章

- 计算机基础 python安装时的常见致命错误 pycharm 思维导图

计算机基础 1.组成 人 功能 主板:骨架 设备扩展 cpu:大脑 计算 逻辑处理 硬盘: 永久储存 电源:心脏 内存: 临时储存,断电无 操作系统(windonws mac linux): 软件,应 ...

- 第一行代码笔记的思维导图(http://images2015.cnblogs.com/blog/1089110/201704/1089110-20170413160323298-819915364.png)

- python中的内置函数的思维导图

https://mubu.com/doc/taq9-TBNix

- iOS面试准备之思维导图

以思维导图的方式对iOS常见的面试题知识点进行梳理复习,文章xmind点这下载,文章图片太大查看不了也点这下载 你可以在公众号 五分钟学算法 获取数据结构与算法相关的内容,准备算法面试 公众号回复 g ...

- JavaScript如何生成思维导图(mindmap)

JavaScript如何生成思维导图(mindmap) 一.总结 一句话总结:可以直接用gojs gojs 二.一个用JavaScript生成思维导图(mindmap)的github repo(转) ...

- iOS面试准备之思维导图(转)

以思维导图的方式对iOS常见的面试题知识点进行梳理复习. 目录 1.UI视图相关面试问题 2.Runtime相关面试问题 3.内存管理相关面试问题 4.Block相关面试问题 5.多线程相关面试问题 ...

- 一个用JavaScript生成思维导图(mindmap)的github repo

github 地址:https://github.com/dundalek/markmap 作者的readme写得很简单. 今天有同事问作者提供的例子到底怎么跑.这里我就写一个更详细的步骤出来. 首先 ...

- 机器学习经典算法详解及Python实现--基于SMO的SVM分类器

原文:http://blog.csdn.net/suipingsp/article/details/41645779 支持向量机基本上是最好的有监督学习算法,因其英文名为support vector ...

- 机器学习经典算法具体解释及Python实现--线性回归(Linear Regression)算法

(一)认识回归 回归是统计学中最有力的工具之中的一个. 机器学习监督学习算法分为分类算法和回归算法两种,事实上就是依据类别标签分布类型为离散型.连续性而定义的. 顾名思义.分类算法用于离散型分布预測, ...

- 机器学习经典算法具体解释及Python实现--K近邻(KNN)算法

(一)KNN依旧是一种监督学习算法 KNN(K Nearest Neighbors,K近邻 )算法是机器学习全部算法中理论最简单.最好理解的.KNN是一种基于实例的学习,通过计算新数据与训练数据特征值 ...

随机推荐

- 2022-04-12:给定一个字符串形式的数,比如“3421“或者“-8731“, 如果这个数不在-32768~32767范围上,那么返回“NODATA“, 如果这个数在-32768~32767范围上

2022-04-12:给定一个字符串形式的数,比如"3421"或者"-8731", 如果这个数不在-32768~32767范围上,那么返回"NODAT ...

- 2021-10-02:单词搜索。给定一个 m x n 二维字符网格 board 和一个字符串单词 word 。如果 word 存在于网格中,返回 true ;否则,返回 false 。单词必须按照字母

2021-10-02:单词搜索.给定一个 m x n 二维字符网格 board 和一个字符串单词 word .如果 word 存在于网格中,返回 true :否则,返回 false .单词必须按照字母 ...

- docker安装es,单机集群模式.失败。

操作系统:mac系统. docker run -d --name es1 -p 9201:9200 -p 9301:9300 elasticsearch:7.14.0 docker run -d -- ...

- 2021-09-20:给你一个有序数组 nums ,请你 原地 删除重复出现的元素,使每个元素 只出现一次 ,返回删除后数组的新长度。不要使用额外的数组空间,你必须在 原地 修改输入数组 并在使用 O

2021-09-20:给你一个有序数组 nums ,请你 原地 删除重复出现的元素,使每个元素 只出现一次 ,返回删除后数组的新长度.不要使用额外的数组空间,你必须在 原地 修改输入数组 并在使用 O ...

- Selenium - 元素定位(3) - CSS进阶

Selenium - 元素定位 CSS 定位进阶 元素示例 属性定位 # css 通过id属性定位 driver.find_element_by_css_selector("#kw" ...

- MMCM/PLL VCO

输入输出时钟频率,input 322.265625Mhz, output 312.5Mhz 对于使用MMCM与PLL的不同情况,虽然输入输出频率是一样的,但是,分/倍频系数是不同的,不能使用同一套参数 ...

- HNU2019 Summer Training 3 E. Blurred Pictures

E. Blurred Pictures time limit per test 2 seconds memory limit per test 256 megabytes input standard ...

- 【C#/.NET】使用ASP.NET Core对象池

Nuget Microsoft.Extensions.ObjectPool 使用对象池的好处 减少初始化/资源分配,提高性能.这一条与线程池同理,有些对象的初始化或资源分配耗时长,复用这些对象减少初始 ...

- 谈谈ChatGPT是否可以替代人

起初我以为我是搬砖的,最近发现其实只是一块砖,哪里需要哪里搬. 这两天临时被抽去支援跨平台相关软件开发,帮忙画几个界面.有了 ChatGPT 之后就觉得以前面向 Googel 编程会拉低我滴档次和逼格 ...

- 从零玩转系列之微信支付实战PC端接口搭建

一.前言 halo各位大佬很久没更新了最近在搞微信支付,因商户号审核了我半个月和小程序认证也找了资料并且将商户号和小程序进行关联,至此微信支付Native支付完成.此篇文章过长我将分几个阶段的文章发布 ...