Getting Started with Open vSwitch on OpenShift

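A while ago I attended an OpenShift training course. The product team's walkthrough refreshed parts of my understanding of OpenShift, so today I am starting with my weakest area: networking.

Open vSwitch is the core component of the OpenShift SDN. Log in to the cluster and list all pods running on one of the nodes: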

[root@master ~]# oc adm manage-node node1.example.com --list-pods

Listing matched pods on node: node1.example.com

NAMESPACE NAME READY STATUS RESTARTS AGE

default docker-registry--trrdq / Running 16d

default router--6zjmb / Running 16d

openshift-monitoring alertmanager-main- / Running 16d

openshift-monitoring alertmanager-main- / Running 6d

openshift-monitoring alertmanager-main- / Running 6d

openshift-monitoring cluster-monitoring-operator-df6d9f48d-8lzcw / Running 16d

openshift-monitoring grafana-76cc4f64c-m25c4 / Running 16d

openshift-monitoring kube-state-metrics-8db94b768-tgfgq / Running 16d

openshift-monitoring node-exporter-mjclp / Running 164d

openshift-monitoring prometheus-k8s- / Running 6d

openshift-monitoring prometheus-k8s- / Running 6d

openshift-monitoring prometheus-operator-959fc8dfd-ppc78 / Running 16d

openshift-node sync-7mpsc / Running 164d

openshift-sdn ovs-rzc7j / Running 164d

openshift-sdn sdn-77s8t / Running 164d

Sync Pod

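The sync pod is created by the sync DaemonSet. Its main job is to watch /etc/sysconfig/atomic-openshift-node for changes, in particular the BOOTSTRAP_CONFIG_NAME setting.

BOOTSTRAP_CONFIG_NAME is set by the openshift-ansible installer and names a ConfigMap that corresponds to a node configuration group. OpenShift ships with the following default node configuration groups: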

node-config-master

node-config-infra

node-config-compute

node-config-all-in-one

node-config-master-infra

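The sync pod converts the ConfigMap data into kubelet configuration and generates /etc/origin/node/node-config.yaml for the node. If the configuration in that file changes, the kubelet is restarted.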
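The "rewrite the file only when it changed, then restart" behaviour described above can be illustrated with a minimal Python sketch. This is not the sync pod's actual implementation; the function name `sync_node_config` and the idea of comparing SHA-256 digests are assumptions made purely for illustration.

```python
import hashlib


def sync_node_config(rendered: str, path: str) -> bool:
    """Write `rendered` to `path` only if the content differs.

    Returns True when the file was (re)written, i.e. when a kubelet
    restart would be needed in the scenario described in the text.
    Hypothetical helper, not part of OpenShift.
    """
    new_bytes = rendered.encode("utf-8")
    new_digest = hashlib.sha256(new_bytes).hexdigest()
    try:
        with open(path, "rb") as fh:
            old_digest = hashlib.sha256(fh.read()).hexdigest()
    except FileNotFoundError:
        old_digest = None  # first run: no config on disk yet

    if old_digest == new_digest:
        return False  # nothing changed, no restart needed

    with open(path, "wb") as fh:
        fh.write(new_bytes)
    return True
```

Calling the function twice with the same rendered content returns True the first time and False the second, which is the property that avoids needless kubelet restarts.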

0. Introduction to OVS

OVS Pod

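The ovs pod uses Open vSwitch to provide the container network for OpenShift. It is a containerized deployment of Open vSwitch, and its core components are described below.

ovs-vswitchd

The ovs-vswitchd daemon is the core of OVS. Together with the datapath kernel module it implements OVS's flow-based switching. It talks to an upstream OpenFlow controller over the OpenFlow protocol, to ovsdb-server over the OVSDB protocol, and to the datapath kernel module over netlink. At startup ovs-vswitchd reads the configuration held by ovsdb-server and then configures the kernel datapaths and all OVS switches. When the configuration in ovsdb changes (for example through the ovs-vsctl tool), ovs-vswitchd automatically updates its own configuration to stay in sync with the database.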

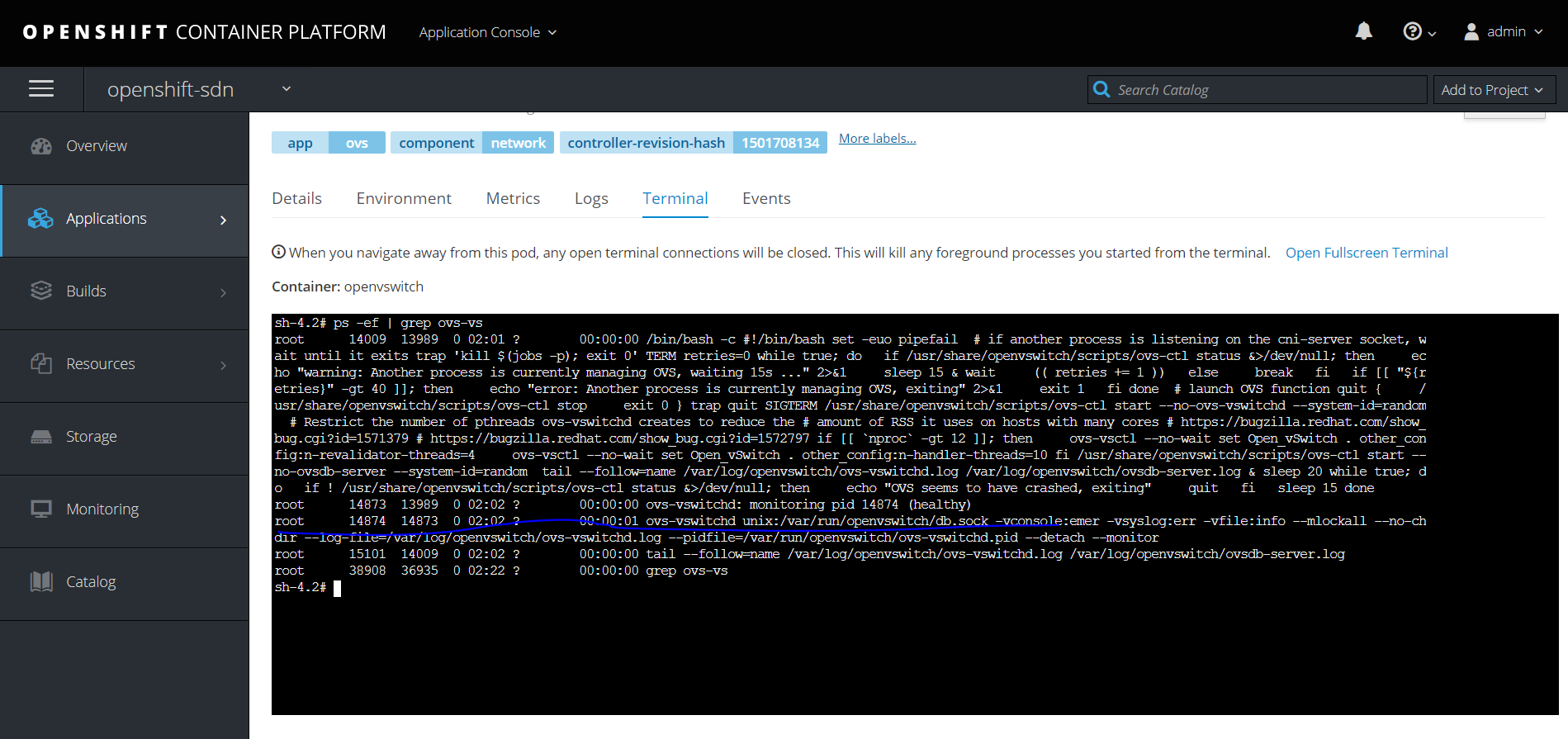

Inside the ovs pod you can see the core process running:

root : ? :: ovs-vswitchd unix:/var/run/openvswitch/db.sock -vconsole:emer -vsyslog:err -vfile:info --mlockall --no-chdir --log-file=/var/log/openvswitch/ovs-vswitchd.log --pidfile=/var/run/openvswitch/ovs-vswitchd.pid --detach --monitor

ovsdb-server

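ovsdb-server is OVS's lightweight database service. It stores the configuration of the whole OVS instance, including interfaces, switching content, and VLANs. The main OVS process, ovs-vswitchd, works from the configuration in this database. The ovsdb-server process details are shown below: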

sh-4.2# ps -ef | grep ovsdb-server

root : ? :: /bin/bash -c #!/bin/bash set -euo pipefail # if another process is listening on the cni-server socket, wait until it exits trap 'kill $(jobs -p); exit 0' TERM retries= while true; do if /usr/share/openvswitch/scripts/ovs-ctl status &>/dev/null; then echo "warning: Another process is currently managing OVS, waiting 15s ..." >& sleep & wait (( retries += )) else break fi if [[ "${retries}" -gt ]]; then echo "error: Another process is currently managing OVS, exiting" >& exit fi done # launch OVS function quit { /usr/share/openvswitch/scripts/ovs-ctl stop exit } trap quit SIGTERM /usr/share/openvswitch/scripts/ovs-ctl start --no-ovs-vswitchd --system-id=random # Restrict the number of pthreads ovs-vswitchd creates to reduce the # amount of RSS it uses on hosts with many cores # https://bugzilla.redhat.com/show_bug.cgi?id=1571379 # https://bugzilla.redhat.com/show_bug.cgi?id=1572797 if [[ `nproc` -gt 12 ]]; then ovs-vsctl --no-wait set Open_vSwitch . other_config:n-revalidator-threads=4 ovs-vsctl --no-wait set Open_vSwitch . other_config:n-handler-threads=10 fi /usr/share/openvswitch/scripts/ovs-ctl start --no-ovsdb-server --system-id=random tail --follow=name /var/log/openvswitch/ovs-vswitchd.log /var/log/openvswitch/ovsdb-server.log & sleep 20 while true; do if ! /usr/share/openvswitch/scripts/ovs-ctl status &>/dev/null; then echo "OVS seems to have crashed, exiting" quit fi sleep 15 done

root : ? :: ovsdb-server: monitoring pid (healthy)

root : ? :: ovsdb-server /etc/openvswitch/conf.db -vconsole:emer -vsyslog:err -vfile:info --remote=punix:/var/run/openvswitch/db.sock --private-key=db:Open_vSwitch,SSL,private_key --certificate=db:Open_vSwitch,SSL,certificate --bootstrap-ca-cert=db:Open_vSwitch,SSL,ca_cert --no-chdir --log-file=/var/log/openvswitch/ovsdb-server.log --pidfile=/var/run/openvswitch/ovsdb-server.pid --detach --monitor

root : ? :: tail --follow=name /var/log/openvswitch/ovs-vswitchd.log /var/log/openvswitch/ovsdb-server.log

root : ? :: grep ovsdb-server

OpenFlow

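OpenFlow is an open protocol for managing switch flow tables. Its place in OVS is shown in the architecture diagram above: it is the protocol spoken between the controller and ovs-vswitchd. Note that OpenFlow is a complete, standalone flow-table protocol that does not depend on OVS; OVS merely supports it. With that support, an OpenFlow controller can manage the flow tables in OVS. OpenFlow is not limited to virtual switches either: some hardware switches support it as well.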

Controller

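Controller here means an OpenFlow controller. It can connect over the OpenFlow protocol to any switch that supports OpenFlow, such as OVS, and it steers traffic by pushing flow-table rules down to the switch. Besides configuring flows through a controller, you can use the ovs-ofctl command that ships with OVS. It connects to OVS over OpenFlow to configure flows, and it can also be used to monitor the running state of OVS.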
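To make the idea of "flow-table rules steering traffic" concrete, here is a toy Python model of a single flow table. It is only a sketch under simplifying assumptions: matching is exact on a few string fields and the highest-priority matching rule wins. Real OpenFlow tables also support masks, multiple chained tables, and counters, none of which are modelled here. The names `Flow` and `lookup` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Flow:
    priority: int            # higher value wins when several flows match
    match: Dict[str, str]    # field name -> required value; empty matches all
    actions: str             # opaque action string, e.g. "drop"


def lookup(table: List[Flow], packet: Dict[str, str]) -> Optional[Flow]:
    """Return the highest-priority flow whose match fields all equal the
    packet's fields, or None if no flow matches."""
    candidates = [
        flow for flow in table
        if all(packet.get(key) == value for key, value in flow.match.items())
    ]
    return max(candidates, key=lambda flow: flow.priority, default=None)


# Illustrative table: values are made up, not taken from a real node.
table = [
    Flow(priority=200, match={"in_port": "1", "proto": "arp"}, actions="goto_table:10"),
    Flow(priority=100, match={"in_port": "1"}, actions="output:2"),
    Flow(priority=0, match={}, actions="drop"),
]
```

A packet arriving on port 1 with `proto` set to `arp` hits the priority-200 rule, other traffic on port 1 falls through to the priority-100 rule, and everything else ends at the catch-all drop rule.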

Kernel Datapath

The datapath is a Linux kernel module that performs the actual packet switching.

1. Network architecture

The diagram below gives a fairly intuitive picture.

Let's look at a real environment first:

[root@node1 ~]# ip addr show

: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN group default qlen

link/loopback ::::: brd :::::

inet 127.0.0.1/ scope host lo

valid_lft forever preferred_lft forever

inet6 ::/ scope host

valid_lft forever preferred_lft forever

: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP group default qlen

link/ether :::dc::1a brd ff:ff:ff:ff:ff:ff

inet 192.168.56.104/ brd 192.168.56.255 scope global noprefixroute enp0s3

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fedc:991a/ scope link tentative dadfailed

valid_lft forever preferred_lft forever

: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu qdisc noqueue state DOWN group default

link/ether ::1e::6a: brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/ scope global docker0

valid_lft forever preferred_lft forever

: ovs-system: <BROADCAST,MULTICAST> mtu qdisc noop state DOWN group default qlen

link/ether :ea::f3:: brd ff:ff:ff:ff:ff:ff

: br0: <BROADCAST,MULTICAST> mtu qdisc noop state DOWN group default qlen

link/ether 8e:be:::b7: brd ff:ff:ff:ff:ff:ff

: vxlan_sys_4789: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc noqueue master ovs-system state UNKNOWN group default qlen

link/ether :e0:7e::e9:5b brd ff:ff:ff:ff:ff:ff

inet6 fe80::54e0:7eff:fe45:e95b/ scope link

valid_lft forever preferred_lft forever

: tun0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN group default qlen

link/ether 1a::ef:7d::2a brd ff:ff:ff:ff:ff:ff

inet 10.130.0.1/ brd 10.130.1.255 scope global tun0

valid_lft forever preferred_lft forever

inet6 fe80:::efff:fe7d:842a/ scope link

valid_lft forever preferred_lft forever

: veth4da99f3a@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc noqueue master ovs-system state UP group default

link/ether 4e:7c::3b:db:3c brd ff:ff:ff:ff:ff:ff link-netnsid

inet6 fe80::4c7c:96ff:fe3b:db3c/ scope link

valid_lft forever preferred_lft forever

: veth4596fc96@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc noqueue master ovs-system state UP group default

link/ether 2a::b7:1b:e0: brd ff:ff:ff:ff:ff:ff link-netnsid

inet6 fe80:::b7ff:fe1b:e081/ scope link

valid_lft forever preferred_lft forever

: veth04caa6b2@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc noqueue master ovs-system state UP group default

link/ether 3a:5b:9a::9c:f4 brd ff:ff:ff:ff:ff:ff link-netnsid

inet6 fe80::385b:9aff:fe62:9cf4/ scope link

valid_lft forever preferred_lft forever

: veth14f14b18@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc noqueue master ovs-system state UP group default

link/ether ca:d2::::be brd ff:ff:ff:ff:ff:ff link-netnsid

inet6 fe80::c8d2:96ff:fe48:84be/ scope link

valid_lft forever preferred_lft forever

: veth31713a78@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc noqueue master ovs-system state UP group default

link/ether ce:c3:8f:3e:b9: brd ff:ff:ff:ff:ff:ff link-netnsid

inet6 fe80::ccc3:8fff:fe3e:b941/ scope link

valid_lft forever preferred_lft forever

: veth9ff2f96a@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc noqueue master ovs-system state UP group default

link/ether :f8::d2::7a brd ff:ff:ff:ff:ff:ff link-netnsid

inet6 fe80::14f8:11ff:fed2:287a/ scope link

valid_lft forever preferred_lft forever

: veth86a4a302@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc noqueue master ovs-system state UP group default

link/ether ::ab:7a:: brd ff:ff:ff:ff:ff:ff link-netnsid

inet6 fe80:::abff:fe7a:/ scope link

valid_lft forever preferred_lft forever

: vethb4141622@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc noqueue master ovs-system state UP group default

link/ether :d1:df::5c:d3 brd ff:ff:ff:ff:ff:ff link-netnsid

inet6 fe80::44d1:dfff:fe02:5cd3/ scope link

valid_lft forever preferred_lft forever

: vethae772509@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc noqueue master ovs-system state UP group default

link/ether c6::af::f0:b7 brd ff:ff:ff:ff:ff:ff link-netnsid

inet6 fe80::c412:afff:fe03:f0b7/ scope link

valid_lft forever preferred_lft forever

: veth4fbfae38@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc noqueue master ovs-system state UP group default

link/ether :f3:e8::9b:e9 brd ff:ff:ff:ff:ff:ff link-netnsid

inet6 fe80::80f3:e8ff:fe87:9be9/ scope link

valid_lft forever preferred_lft forever

That is quite a pile of interfaces. On a node, OpenShift SDN typically creates the following types of interfaces:

- br0: the OVS bridge device that containers are attached to. OpenShift SDN also configures a set of non-subnet-specific flow rules on this bridge.

- tun0: the gateway to external networks. An OVS internal port (port 2 on br0). It is assigned the cluster subnet gateway address and is used for external network access. OpenShift SDN configures netfilter and routing rules to enable access from the cluster subnet to the external network via NAT.

- vxlan_sys_4789: the device for reaching pods on other nodes. The OVS VXLAN device (port 1 on br0), which provides access to containers on remote nodes. Referred to as vxlan0 in the OVS rules.

- vethX (in the main netns): the virtual NIC associated with a pod. A Linux virtual ethernet peer of eth0 in the Docker netns. It is attached to the OVS bridge on one of the other ports.

This description becomes much clearer when read alongside the diagram above.

The brctl command cannot see the OVS bridge; it only shows docker0:
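As a small aid for reading the long `ip addr show` listing, the naming conventions above can be turned into a classifier. This is a sketch based only on the interface names described in this post; the function name `classify_interface` and the role labels are hypothetical.

```python
def classify_interface(name: str) -> str:
    """Map an interface name from `ip addr show` to its OpenShift SDN role.

    Names such as "veth4da99f3a@if3" carry a peer suffix after "@",
    which is dropped before matching.
    """
    base = name.split("@", 1)[0]
    if base == "br0":
        return "ovs-bridge"
    if base == "tun0":
        return "cluster-gateway"
    if base.startswith("vxlan_sys_"):
        return "vxlan-overlay"
    if base.startswith("veth"):
        return "pod-veth"
    return "other"


# Group a few names taken from the listing above by role.
for iface in ["lo", "enp0s3", "br0", "vxlan_sys_4789", "tun0", "veth4da99f3a@if3"]:
    print(f"{iface}: {classify_interface(iface)}")
```

Anything that is not one of the four SDN-related types (for example lo, enp0s3, or docker0) is reported as "other".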

[root@node1 ~]# brctl show

bridge name bridge id STP enabled interfaces

docker0 .02421e326a69 no

To see the br0 bridge and the IP information attached to it, you need to install openvswitch.

2. Installing Open vSwitch

The steps below are based on

https://access.redhat.com/solutions/3710321

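From version 3.10 onward, Open vSwitch runs as a pod (the ovs pod in the openshift-sdn namespace listed earlier) rather than as a host service, so openvswitch is no longer preinstalled on the nodes. If you need it on the host, follow the steps below. Alternatively, you can run the same inspection commands directly inside the ovs pod.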

# subscription-manager register

# subscription-manager list --available

# subscription-manager attach --pool=<pool_id>

# subscription-manager repos --enable=rhel--server-extras-rpms

# subscription-manager repos --enable=rhel--server-optional-rpms

# yum install -y openvswitch

List the bridges:

[root@node1 network-scripts]# ovs-vsctl list-br

br0

List the ports on the bridge:

[root@node1 network-scripts]# ovs-ofctl -O OpenFlow13 dump-ports-desc br0

OFPST_PORT_DESC reply (OF1.) (xid=0x2):

(vxlan0): addr:a2::b9:7d:a6:c3

config:

state: LIVE

speed: Mbps now, Mbps max

(tun0): addr:de::d6:e7:a9:

config:

state: LIVE

speed: Mbps now, Mbps max

(veth04fb5821): addr::::fa:e0:9f

config:

state: LIVE

current: 10GB-FD COPPER

speed: Mbps now, Mbps max

(vethbdebc4f7): addr:d6:f8:::3e:da

config:

state: LIVE

current: 10GB-FD COPPER

speed: Mbps now, Mbps max

(veth9fd3926f): addr:5e:::c8::9e

config:

state: LIVE

current: 10GB-FD COPPER

speed: Mbps now, Mbps max

(veth3b466fa9): addr:ee:3f:1b:cb:cf:9b

config:

state: LIVE

current: 10GB-FD COPPER

speed: Mbps now, Mbps max

(veth866c42b5): addr:be:7e:e6:d2:2f:f1

config:

state: LIVE

current: 10GB-FD COPPER

speed: Mbps now, Mbps max

(veth2446bc66): addr::5b:fe:::

config:

state: LIVE

current: 10GB-FD COPPER

speed: Mbps now, Mbps max

(veth1afcb012): addr::3b:de:9d::8b

config:

state: LIVE

current: 10GB-FD COPPER

speed: Mbps now, Mbps max

(vethd31bb8c7): addr::8f:::ba:

config:

state: LIVE

current: 10GB-FD COPPER

speed: Mbps now, Mbps max

(vethe02a7907): addr::c4:af:c3:c8:

config:

state: LIVE

current: 10GB-FD COPPER

speed: Mbps now, Mbps max

(veth06b13117): addr:0a:::5e::d7

config:

state: LIVE

current: 10GB-FD COPPER

speed: Mbps now, Mbps max

LOCAL(br0): addr:b2:a3:b4::9d:4e

config: PORT_DOWN

state: LINK_DOWN

speed: Mbps now, Mbps max
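When a bridge has many ports, it can help to reduce the `dump-ports-desc` output to a list of port entries. The following Python sketch does that with a regular expression. It assumes each port entry starts with an optional port identifier followed by `(name): addr:<mac>`, as in the listing above. Because the listing in this post has lost its numeric port IDs, the identifier is treated as optional. The names `PORT_LINE` and `parse_ports` are hypothetical.

```python
import re
from typing import List, Tuple

# Optional port id (e.g. "LOCAL"), then "(name): addr:<address>".
PORT_LINE = re.compile(r"^\s*(?P<port>\w*)\((?P<name>[^)]+)\):\s*addr:(?P<addr>\S+)")


def parse_ports(text: str) -> List[Tuple[str, str, str]]:
    """Extract (port_id, interface_name, address) tuples from the text
    printed by `ovs-ofctl dump-ports-desc`. Lines that are not port
    headers (config, state, speed, the reply banner) are skipped."""
    ports = []
    for line in text.splitlines():
        found = PORT_LINE.match(line)
        if found:
            ports.append((found.group("port"), found.group("name"), found.group("addr")))
    return ports
```

Feeding it the text captured from the command yields one tuple per port, which is easier to compare against the veth names seen in `ip addr show`.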

Show the full OVS configuration:

[root@node1 ~]# ovs-vsctl show

c2bad35f---aa1c-b255d0ee7e60

Bridge "br0"

fail_mode: secure

Port "tun0"

Interface "tun0"

type: internal

Port "vethfff599a6"

Interface "vethfff599a6"

Port "br0"

Interface "br0"

type: internal

Port "veth38efc56f"

Interface "veth38efc56f"

Port "veth80b6c074"

Interface "veth80b6c074"

Port "veth4cdb026d"

Interface "veth4cdb026d"

Port "veth42f411d0"

Interface "veth42f411d0"

Port "vxlan0"

Interface "vxlan0"

type: vxlan

options: {dst_port="", key=flow, remote_ip=flow}

Port "veth5bf0c012"

Interface "veth5bf0c012"

Port "vethb80cca24"

Interface "vethb80cca24"

Port "veth2335064f"

Interface "veth2335064f"

Port "vethf857e799"

Interface "vethf857e799"

Port "veth325c8496"

Interface "veth325c8496"

ovs_version: "2.9.0"

Dump the flow table:

[root@node1 network-scripts]# ovs-ofctl -O OpenFlow13 dump-flows br0

OFPST_FLOW reply (OF1.) (xid=0x2):

cookie=0x0, duration=.192s, table=, n_packets=, n_bytes=, priority=,ip,in_port=,nw_dst=224.0.0.0/ actions=drop

cookie=0x0, duration=.192s, table=, n_packets=, n_bytes=, priority=,arp,in_port=,arp_spa=10.128.0.0/,arp_tpa=10.130.0.0/ actions=move:NXM_NX_TUN_ID[..]->NXM_NX_REG0[],goto_table:

cookie=0x0, duration=.192s, table=, n_packets=, n_bytes=, priority=,ip,in_port=,nw_src=10.128.0.0/ actions=move:NXM_NX_TUN_ID[..]->NXM_NX_REG0[],goto_table:

cookie=0x0, duration=.192s, table=, n_packets=, n_bytes=, priority=,ip,in_port=,nw_dst=10.128.0.0/ actions=move:NXM_NX_TUN_ID[..]->NXM_NX_REG0[],goto_table:

cookie=0x0, duration=.192s, table=, n_packets=, n_bytes=, priority=,arp,in_port=,arp_spa=10.130.0.1,arp_tpa=10.128.0.0/ actions=goto_table:
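Each line of the flow dump has the shape `<comma-separated match and metadata fields> actions=<action list>`. A rough Python sketch for splitting such a line into its parts is shown below. It assumes the simple layout seen in the listing above (no commas nested inside match values) and is not a full parser for the ovs-ofctl flow syntax. The function name `parse_flow` is hypothetical.

```python
from typing import Dict, List, Union


def parse_flow(line: str) -> Dict[str, Union[Dict[str, str], List[str], str]]:
    """Split one `ovs-ofctl dump-flows` line into key=value fields,
    bare flags (protocol keywords such as "ip" or "arp"), and the
    action string that follows " actions="."""
    head, _, actions = line.strip().partition(" actions=")
    fields: Dict[str, str] = {}
    flags: List[str] = []
    for token in head.split(","):
        token = token.strip()
        if not token:
            continue
        key, sep, value = token.partition("=")
        if sep:
            fields[key] = value      # e.g. cookie=0x0, nw_dst=...
        else:
            flags.append(token)      # e.g. ip, arp
    return {"fields": fields, "flags": flags, "actions": actions}
```

Splitting on ` actions=` first keeps commas inside the action list (for example `move:...,goto_table:...`) from being mistaken for field separators.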

That is a start, and I will leave it here for now. For more on network diagnostics, see

https://docs.openshift.com/container-platform/3.11/admin_guide/sdn_troubleshooting.html

For background material on Open vSwitch, see

https://opengers.github.io/openstack/openstack-base-use-openvswitch/
