自然语言16_Chunking with NLTK

python机器学习-乳腺癌细胞挖掘(博主亲自录制视频)https://study.163.com/course/introduction.htm?courseId=1005269003&utm_campaign=commission&utm_source=cp-400000000398149&utm_medium=share

Chunking with NLTK

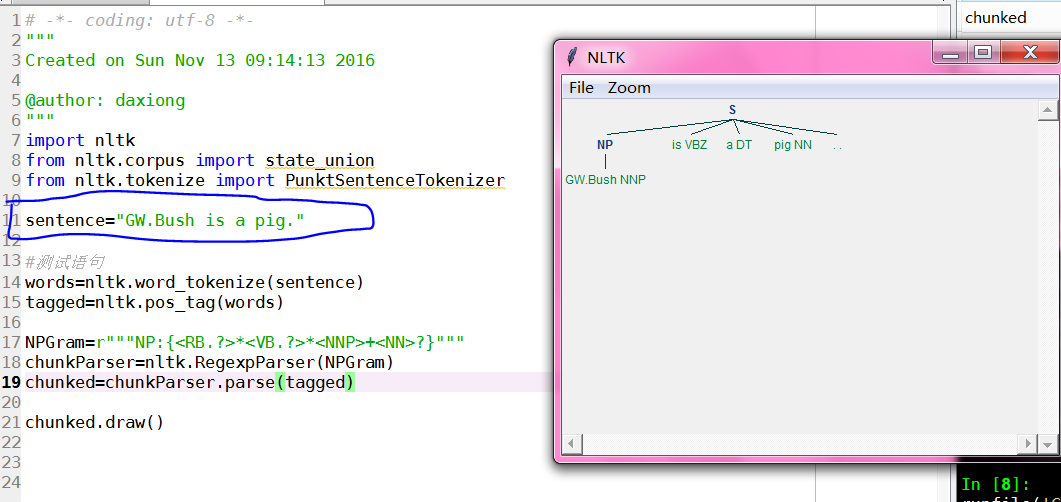

对chunk分类数据结构可以图形化输出,用于分析英语句子主干结构

# -*- coding: utf-8 -*-

"""

Created on Sun Nov 13 09:14:13 2016 @author: daxiong

"""

import nltk

sentence="GW.Bush is a big pig."

#切分单词

words=nltk.word_tokenize(sentence)

#词性标记

tagged=nltk.pos_tag(words)

#正则表达式,定义包含所有名词的re

NPGram=r"""NP:{<NNP>|<NN>|<NNS>|<NNPS>}"""

chunkParser=nltk.RegexpParser(NPGram)

chunked=chunkParser.parse(tagged)

#树状图展示

chunked.draw()

# -*- coding: utf-8 -*-

"""

Created on Sun Nov 13 09:14:13 2016 @author: daxiong

"""

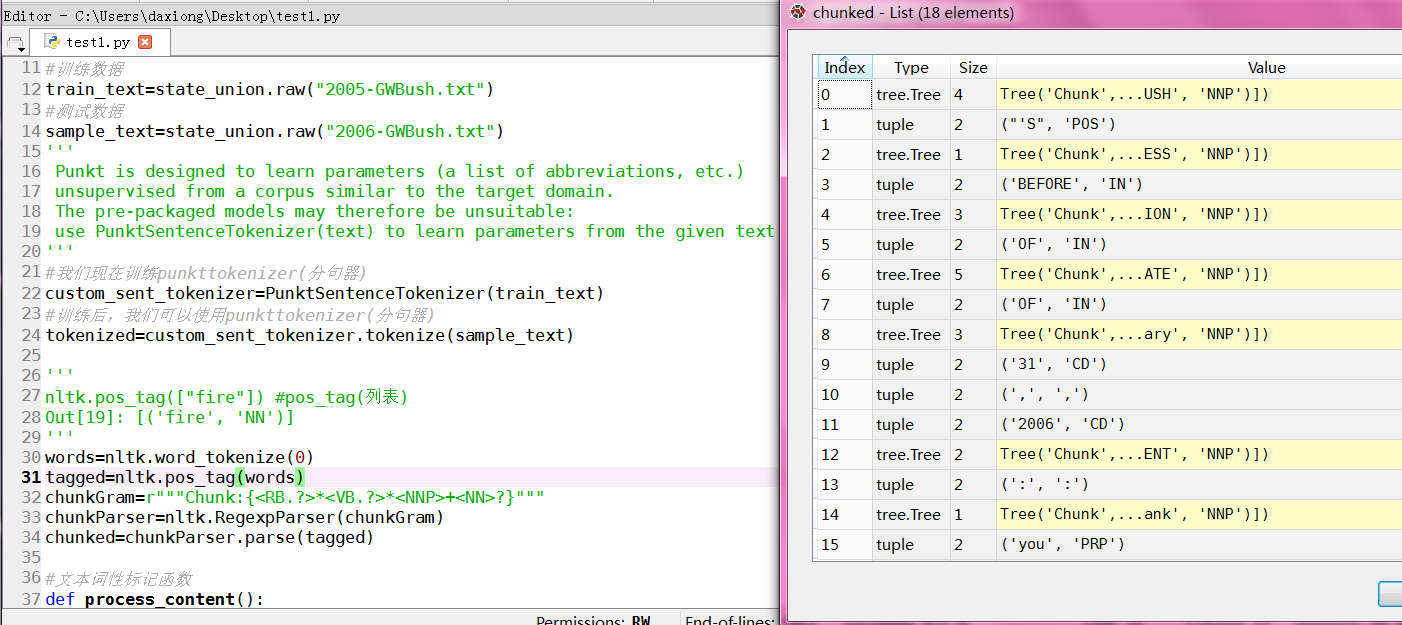

import nltk

from nltk.corpus import state_union

from nltk.tokenize import PunktSentenceTokenizer #训练数据

train_text=state_union.raw("2005-GWBush.txt")

#测试数据

sample_text=state_union.raw("2006-GWBush.txt")

'''

Punkt is designed to learn parameters (a list of abbreviations, etc.)

unsupervised from a corpus similar to the target domain.

The pre-packaged models may therefore be unsuitable:

use PunktSentenceTokenizer(text) to learn parameters from the given text

'''

#我们现在训练punkttokenizer(分句器)

custom_sent_tokenizer=PunktSentenceTokenizer(train_text)

#训练后,我们可以使用punkttokenizer(分句器)

tokenized=custom_sent_tokenizer.tokenize(sample_text) '''

nltk.pos_tag(["fire"]) #pos_tag(列表)

Out[19]: [('fire', 'NN')]

''' words=nltk.word_tokenize(tokenized[0])

tagged=nltk.pos_tag(words)

chunkGram=r"""Chunk:{<RB.?>*<VB.?>*<NNP>+<NN>?}"""

chunkParser=nltk.RegexpParser(chunkGram)

chunked=chunkParser.parse(tagged)

#lambda t:t.label()=='Chunk' 包含Chunk标签的列

for subtree in chunked.subtrees(filter=lambda t:t.label()=='Chunk'):

print(subtree)

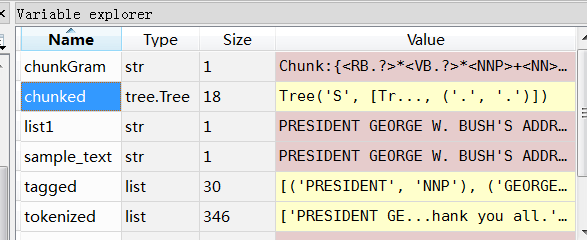

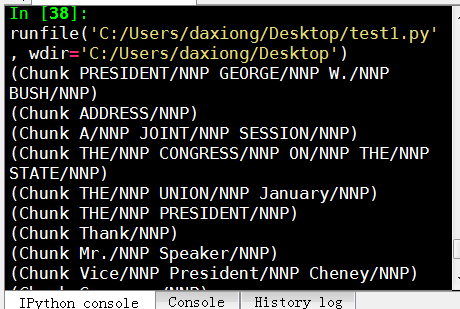

数据类型:chunked 是树结构

#lambda t:t.label()=='Chunk' 包含Chunk标签的列

输出只包含Chunk标签的列

完整代码

# -*- coding: utf-8 -*-

"""

Created on Sun Nov 13 09:14:13 2016 @author: daxiong

"""

import nltk

from nltk.corpus import state_union

from nltk.tokenize import PunktSentenceTokenizer #训练数据

train_text=state_union.raw("2005-GWBush.txt")

#测试数据

sample_text=state_union.raw("2006-GWBush.txt")

'''

Punkt is designed to learn parameters (a list of abbreviations, etc.)

unsupervised from a corpus similar to the target domain.

The pre-packaged models may therefore be unsuitable:

use PunktSentenceTokenizer(text) to learn parameters from the given text

'''

#我们现在训练punkttokenizer(分句器)

custom_sent_tokenizer=PunktSentenceTokenizer(train_text)

#训练后,我们可以使用punkttokenizer(分句器)

tokenized=custom_sent_tokenizer.tokenize(sample_text) '''

nltk.pos_tag(["fire"]) #pos_tag(列表)

Out[19]: [('fire', 'NN')]

'''

'''

#测试语句

words=nltk.word_tokenize(tokenized[0])

tagged=nltk.pos_tag(words)

chunkGram=r"""Chunk:{<RB.?>*<VB.?>*<NNP>+<NN>?}"""

chunkParser=nltk.RegexpParser(chunkGram)

chunked=chunkParser.parse(tagged)

#lambda t:t.label()=='Chunk' 包含Chunk标签的列

for subtree in chunked.subtrees(filter=lambda t:t.label()=='Chunk'):

print(subtree)

''' #文本词性标记函数

def process_content():

try:

for i in tokenized[0:5]:

words=nltk.word_tokenize(i)

tagged=nltk.pos_tag(words)

#RB副词,VB动词,NNP专有名词单数形式,NN单数名词

chunkGram=r"""Chunk:{<RB.?>*<VB.?>*<NNP>+<NN>?}"""

chunkParser=nltk.RegexpParser(chunkGram)

chunked=chunkParser.parse(tagged)

#print(chunked)

for subtree in chunked.subtrees(filter=lambda t:t.label()=='Chunk'):

print(subtree)

#chunked.draw()

except Exception as e:

print(str(e)) process_content()

得到所有名词分类

Now that we know the parts of speech, we can do what is called chunking, and group words into hopefully meaningful chunks. One of the main goals of chunking is to group into what are known as "noun phrases." These are phrases of one or more words that contain a noun, maybe some descriptive words, maybe a verb, and maybe something like an adverb. The idea is to group nouns with the words that are in relation to them.

In order to chunk, we combine the part of speech tags with regular expressions. Mainly from regular expressions, we are going to utilize the following:

+ = match 1 or more

? = match 0 or 1 repetitions.

* = match 0 or MORE repetitions

. = Any character except a new line

See the tutorial linked above if you need help with regular expressions. The last things to note is that the part of speech tags are denoted with the "<" and ">" and we can also place regular expressions within the tags themselves, so account for things like "all nouns" (<N.*>)

import nltk

from nltk.corpus import state_union

from nltk.tokenize import PunktSentenceTokenizer train_text = state_union.raw("2005-GWBush.txt")

sample_text = state_union.raw("2006-GWBush.txt") custom_sent_tokenizer = PunktSentenceTokenizer(train_text) tokenized = custom_sent_tokenizer.tokenize(sample_text) def process_content():

try:

for i in tokenized:

words = nltk.word_tokenize(i)

tagged = nltk.pos_tag(words)

chunkGram = r"""Chunk: {<RB.?>*<VB.?>*<NNP>+<NN>?}"""

chunkParser = nltk.RegexpParser(chunkGram)

chunked = chunkParser.parse(tagged)

chunked.draw() except Exception as e:

print(str(e)) process_content()

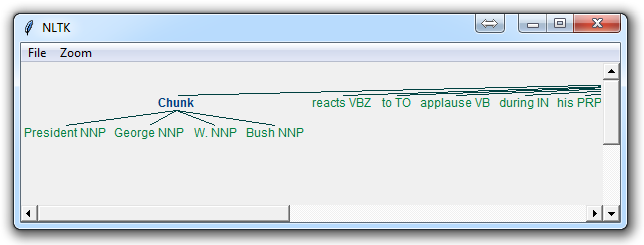

The result of this is something like:

The main line here in question is:

chunkGram = r"""Chunk: {<RB.?>*<VB.?>*<NNP>+<NN>?}"""

This line, broken down:

<RB.?>* = "0 or more of any tense of adverb," followed by:

<VB.?>* = "0 or more of any tense of verb," followed by:

<NNP>+ = "One or more proper nouns," followed by

<NN>? = "zero or one singular noun."

Try playing around with combinations to group various instances until you feel comfortable with chunking.

Not covered in the video, but also a reasonable task is to actually access the chunks specifically. This is something rarely talked about, but can be an essential step depending on what you're doing. Say you print the chunks out, you are going to see output like:

(S

(Chunk PRESIDENT/NNP GEORGE/NNP W./NNP BUSH/NNP)

'S/POS

(Chunk

ADDRESS/NNP

BEFORE/NNP

A/NNP

JOINT/NNP

SESSION/NNP

OF/NNP

THE/NNP

CONGRESS/NNP

ON/NNP

THE/NNP

STATE/NNP

OF/NNP

THE/NNP

UNION/NNP

January/NNP)

31/CD

,/,

2006/CD

THE/DT

(Chunk PRESIDENT/NNP)

:/:

(Chunk Thank/NNP)

you/PRP

all/DT

./.)

Cool, that helps us visually, but what if we want to access this data via our program? Well, what is happening here is our "chunked" variable is an NLTK tree. Each "chunk" and "non chunk" is a "subtree" of the tree. We can reference these by doing something like chunked.subtrees. We can then iterate through these subtrees like so:

for subtree in chunked.subtrees():

print(subtree)

Next, we might be only interested in getting just the chunks, ignoring the rest. We can use the filter parameter in the chunked.subtrees() call.

for subtree in chunked.subtrees(filter=lambda t: t.label() == 'Chunk'):

print(subtree)

Now, we're filtering to only show the subtrees with the label of "Chunk." Keep in mind, this isn't "Chunk" as in the NLTK chunk attribute... this is "Chunk" literally because that's the label we gave it here: chunkGram = r"""Chunk: {<RB.?>*<VB.?>*<NNP>+<NN>?}"""

Had we said instead something like chunkGram = r"""Pythons: {<RB.?>*<VB.?>*<NNP>+<NN>?}""", then we would filter by the label of "Pythons." The result here should be something like:

-

(Chunk PRESIDENT/NNP GEORGE/NNP W./NNP BUSH/NNP)

(Chunk

ADDRESS/NNP

BEFORE/NNP

A/NNP

JOINT/NNP

SESSION/NNP

OF/NNP

THE/NNP

CONGRESS/NNP

ON/NNP

THE/NNP

STATE/NNP

OF/NNP

THE/NNP

UNION/NNP

January/NNP)

(Chunk PRESIDENT/NNP)

(Chunk Thank/NNP)

Full code for this would be:

import nltk

from nltk.corpus import state_union

from nltk.tokenize import PunktSentenceTokenizer train_text = state_union.raw("2005-GWBush.txt")

sample_text = state_union.raw("2006-GWBush.txt") custom_sent_tokenizer = PunktSentenceTokenizer(train_text) tokenized = custom_sent_tokenizer.tokenize(sample_text) def process_content():

try:

for i in tokenized:

words = nltk.word_tokenize(i)

tagged = nltk.pos_tag(words)

chunkGram = r"""Chunk: {<RB.?>*<VB.?>*<NNP>+<NN>?}"""

chunkParser = nltk.RegexpParser(chunkGram)

chunked = chunkParser.parse(tagged) print(chunked)

for subtree in chunked.subtrees(filter=lambda t: t.label() == 'Chunk'):

print(subtree) chunked.draw() except Exception as e:

print(str(e)) process_content()

If you get particular enough, you may find that you may be better off if there was a way to chunk everything, except some stuff. This process is what is known as chinking, and that's what we're going to be covering next.

自然语言16_Chunking with NLTK的更多相关文章

- 转 --自然语言工具包(NLTK)小结

原作者:http://www.cnblogs.com/I-Tegulia/category/706685.html 1.自然语言工具包(NLTK) NLTK 创建于2001 年,最初是宾州大学计算机与 ...

- 自然语言22_Wordnet with NLTK

QQ:231469242 欢迎喜欢nltk朋友交流 https://www.pythonprogramming.net/wordnet-nltk-tutorial/?completed=/nltk-c ...

- 自然语言17_Chinking with NLTK

https://www.pythonprogramming.net/chinking-nltk-tutorial/?completed=/chunking-nltk-tutorial/ 代码 # -* ...

- Python自然语言处理工具NLTK的安装FAQ

1 下载Python 首先去python的主页下载一个python版本http://www.python.org/,一路next下去,安装完毕即可 2 下载nltk包 下载地址:http://www. ...

- Python自然语言工具包(NLTK)入门

在本期文章中,小生向您介绍了自然语言工具包(Natural Language Toolkit),它是一个将学术语言技术应用于文本数据集的 Python 库.称为“文本处理”的程序设计是其基本功能:更深 ...

- Python NLTK 自然语言处理入门与例程(转)

转 https://blog.csdn.net/hzp666/article/details/79373720 Python NLTK 自然语言处理入门与例程 在这篇文章中,我们将基于 Pyt ...

- NLTK在自然语言处理

nltk-data.zip 本文主要是总结最近学习的论文.书籍相关知识,主要是Natural Language Pracessing(自然语言处理,简称NLP)和Python挖掘维基百科Infobox ...

- Python自然语言处理工具小结

Python自然语言处理工具小结 作者:白宁超 2016年11月21日21:45:26 目录 [Python NLP]干货!详述Python NLTK下如何使用stanford NLP工具包(1) [ ...

- 自然语言处理(NLP)入门学习资源清单

Melanie Tosik目前就职于旅游搜索公司WayBlazer,她的工作内容是通过自然语言请求来生产个性化旅游推荐路线.回顾她的学习历程,她为期望入门自然语言处理的初学者列出了一份学习资源清单. ...

随机推荐

- 【BZOJ 2818】gcd 欧拉筛

枚举小于n的质数,然后再枚举小于n/这个质数的Φ的和,乘2再加1即可.乘2是因为xy互换是另一组解,加1是x==y==1时的一组解.至于求和我们只需处理前缀和就可以啦,注意Φ(1)的值不能包含在前缀和 ...

- poj3254 状态压缩dp

题意:给出一个n行m列的草地,1表示肥沃,0表示贫瘠,现在要把一些牛放在肥沃的草地上,但是要求所有牛不能相邻,问你有多少种放法. 分析:假如我们知道第 i-1 行的所有的可以放的情况,那么对于 ...

- python 进程间共享数据 (三)

Python的multiprocessing模块包装了底层的机制,提供了Queue.Pipes等多种方式来交换数据. 我们以Queue为例,在父进程中创建两个子进程,一个往Queue里写数据,一个从Q ...

- 数据库连接池的选择 Druid

我先说说数据库连接 数据库大家都不陌生,从名字就能看出来它是「存放数据的仓库」,那我们怎么去「仓库」取东西呢?当然需要钥匙啦!这就是我们的数据库用户名.密码了,然后我们就可以打开门去任意的存取东西了. ...

- list 集合

1.Model public class ROLE_FUNCTION { //角色集合 public List< ROLE> ROLES { get; set; } //角色权限集合 pu ...

- Web前端性能优化教程08:配置ETag

本文是Web前端性能优化系列文章中的第五篇,主要讲述内容:配置ETag.完整教程可查看:Web前端性能优化 什么是ETag? 实体标签(EntityTag)是唯一标识了一个组件的一个特定版本的字符串, ...

- 快速查找无序数组中的第K大数?

1.题目分析: 查找无序数组中的第K大数,直观感觉便是先排好序再找到下标为K-1的元素,时间复杂度O(NlgN).在此,我们想探索是否存在时间复杂度 < O(NlgN),而且近似等于O(N)的高 ...

- Python 从零学起(纯基础) 笔记 (二)

Day02 自学笔记 1. 对于Python,一切事物都是对象,对象基于类创建,对象具有的功能去类里找 name = ‘Young’ - 对象 Li1 = [11,22,33] ...

- 在VS里配置及查看IL

在VS里配置及查看IL 来源:网络 编辑:admin 在之前的版本VS2010中,在Tools下有IL Disassembler(IL中间语言查看器),但是我想直接集成在VS2012里使用,方法如下: ...

- webapi获取IP的方式

using System.Net.Http; public static class HttpRequestMessageExtensions { private const string HttpC ...