[Linear Algebra] Advanced Matrix Algebra: Matrix Factorization

Matrix factorization

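Introduction: following the previous episode's introduction to matrix algebra, today we move on to the advanced part: matrix factorization. Other episodes can be found in the posts tagged [Linear Algebra]. I'll put together a table of contents when I have time.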

Matrix factorization can be viewed as an application of invertible matrices: in LU factorization, the factor $L$ is an invertible matrix.

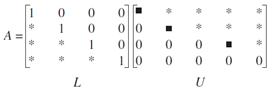

As you may have seen, solving $Ax=b$ for $x$ can be tedious with all the row reduction involved. Here we explore another, more efficient algorithm for finding $x$ in a matrix equation: LU factorization. Suppose we are given $L$ and $U$ in the following form, whose product reconstructs $A$: $L$ is an invertible unit lower triangular $m \times m$ matrix, while $U$ is an $m \times n$ echelon form of $A$. Recall that one way to solve for $x$ is $x=A^{-1}b$, which requires $A$ to be invertible. Since $L$ is invertible, a square $A = LU$ is invertible exactly when $U$ is: a product of invertible matrices is invertible (as proved in the previous article in this series), and conversely $U = L^{-1}A$. The motivation here is that if we need $x$ for many different right-hand sides $b_i$, we have to compute $A^{-1}b_i$ for every single one. That is not desirable, and we should look for a way around it…

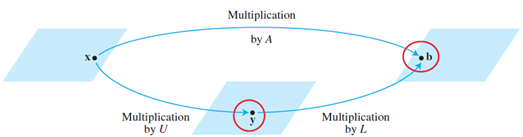
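To make the shapes concrete, here is a small plain-Python sketch. The matrices are made-up illustrative numbers, and `matmul`, `is_unit_lower_triangular` and `is_echelon` are hypothetical helpers written for this example, not library functions. It multiplies a 3×3 unit lower triangular $L$ by a 3×4 echelon-form $U$ and gets back a 3×4 matrix $A$.

```python
def matmul(X, Y):
    """Product of an m x k matrix X and a k x n matrix Y (lists of rows)."""
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def is_unit_lower_triangular(M):
    """True if M is square, has 1s on the diagonal and 0s above it."""
    m = len(M)
    if any(len(row) != m for row in M):
        return False
    return all(M[i][i] == 1 for i in range(m)) and all(
        M[i][j] == 0 for i in range(m) for j in range(i + 1, m)
    )


def is_echelon(M):
    """True if each leading entry sits strictly right of the one above it
    and all zero rows are at the bottom."""
    last_lead = -1
    seen_zero_row = False
    for row in M:
        lead = next((j for j, v in enumerate(row) if v != 0), None)
        if lead is None:
            seen_zero_row = True
        elif seen_zero_row or lead <= last_lead:
            return False
        else:
            last_lead = lead
    return True


L = [[1, 0, 0], [2, 1, 0], [-1, 3, 1]]          # 3 x 3, unit lower triangular
U = [[2, 1, 0, 3], [0, 1, 2, -1], [0, 0, 0, 4]]  # 3 x 4, echelon form
A = matmul(L, U)                                 # 3 x 4
print(A)  # [[2, 1, 0, 3], [4, 3, 2, 5], [-2, 2, 6, -2]]
```

Note that $A$ itself is not in echelon form (`is_echelon(A)` is `False`); only $U$ is.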

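Suppose $L$ and $U$ are already given. Expressing $A = LU$ is only the first step of LU factorization; remember that our goal in factoring the matrix is to solve for $x$ in the matrix equation. So we rely on the following:

$$Ax = b \;\Longleftrightarrow\; L(Ux) = b \;\Longleftrightarrow\; \begin{cases} Ly = b \\ Ux = y \end{cases}$$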

This suggests that we can get $x$ by row-reducing two augmented matrices: first $[L \;\, b]$ to obtain $y$, then $[U \;\, y]$ to obtain $x$. So $y$ serves as the intermediate result on our way to $x$. Note that we still have to solve $Ax=b$ separately for each $b$; with the help of the LU factors, fewer steps are involved each time.
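The two-stage solve can be sketched in plain Python as below. This is a minimal sketch assuming $A$ is square and invertible, so that $U$ is upper triangular with nonzero diagonal entries and $Ux = y$ has a unique solution; the function names and the numbers are illustrative only. Forward substitution handles $Ly = b$ and back substitution handles $Ux = y$, which is what row-reducing $[L \;\, b]$ and $[U \;\, y]$ amounts to.

```python
from fractions import Fraction


def forward_substitution(L, b):
    """Solve L y = b for a unit lower triangular L, top row first.
    Because every L[i][i] is 1, no division is needed."""
    y = []
    for i in range(len(L)):
        y.append(b[i] - sum(L[i][j] * y[j] for j in range(i)))
    return y


def back_substitution(U, y):
    """Solve U x = y for a square upper triangular U with nonzero diagonal,
    bottom row first. Fractions keep the arithmetic exact."""
    n = len(U)
    x = [Fraction(0)] * n
    for i in reversed(range(n)):
        s = y[i] - sum(U[i][j] * x[j] for j in range(i + 1, n))
        x[i] = Fraction(s) / U[i][i]
    return x


def solve_with_lu(L, U, b):
    y = forward_substitution(L, b)  # step 1: L y = b
    return back_substitution(U, y)  # step 2: U x = y


L = [[1, 0, 0], [2, 1, 0], [-1, 3, 1]]
U = [[2, 1, 1], [0, 1, 2], [0, 0, 3]]  # so A = LU = [[2, 1, 1], [4, 3, 4], [-2, 2, 8]]

# The same L and U are reused for every right-hand side b.
for b in ([1, 4, 2], [0, 1, 0]):
    x = solve_with_lu(L, U, b)
    print(b, "->", [str(v) for v in x])
# [1, 4, 2] -> ['-1', '4', '-1']
# [0, 1, 0] -> ['-1', '3', '-1']
```

Once $L$ and $U$ are known, each new $b$ costs only the two triangular solves, with no new elimination on $A$.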

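Since $L$ is a unit lower triangular matrix, every column has a pivot on the diagonal, so its columns are linearly independent. Since $L$ is also square ($m \times m$), it is invertible. This means the following:

$$[\,L \;\; b\,] \sim [\,I_m \;\; y\,], \qquad y = L^{-1}b.$$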

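Indeed, given a unit lower triangular matrix $L$, reducing it to $I_m$ is straightforward: only downward row replacements are needed, and no scaling, because every diagonal entry is already 1. $U$, on the other hand, is an $m \times n$ echelon form of $A$, so reducing $[U \;\, y]$ is not guaranteed to produce an identity matrix: the reduced echelon form of $U$ need not be square.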
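As a small illustration with made-up numbers, take the $L$ below and $b = (1, 4, 2)$. Two downward row replacements in the first column and one in the second turn $L$ into $I_3$, and the last column becomes $y$:

$$
\left[\begin{array}{ccc|c} 1 & 0 & 0 & 1 \\ 2 & 1 & 0 & 4 \\ -1 & 3 & 1 & 2 \end{array}\right]
\xrightarrow[R_3 + R_1]{R_2 - 2R_1}
\left[\begin{array}{ccc|c} 1 & 0 & 0 & 1 \\ 0 & 1 & 0 & 2 \\ 0 & 3 & 1 & 3 \end{array}\right]
\xrightarrow{R_3 - 3R_2}
\left[\begin{array}{ccc|c} 1 & 0 & 0 & 1 \\ 0 & 1 & 0 & 2 \\ 0 & 0 & 1 & -3 \end{array}\right]
$$

so $y = (1, 2, -3)$, and one can check that $Ly = b$.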

The LU factorization algorithm

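The prerequisite for using this algorithm is that the matrix $A$ in $Ax=b$ can be reduced to an echelon form $U$ using only row replacements that add a multiple of one row to a row below it, working from the top down. This requirement is not always met, so it is sometimes relaxed to allow row interchanges in $A$ before the top-down sequence of row replacements. If the requirement is satisfied, we are guaranteed to obtain a unit lower triangular matrix $L$. The proof: if elementary matrices $E_1, \dots, E_p$ carry out the row replacements, then

$$E_p \cdots E_1 A = U \quad\Longrightarrow\quad A = (E_p \cdots E_1)^{-1} U = LU, \qquad L = (E_p \cdots E_1)^{-1}.$$

Each $E_i$ is unit lower triangular, and products and inverses of unit lower triangular matrices are again unit lower triangular, so $L$ is unit lower triangular.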

If we apply the same sequence of elementary matrices to $L$, we restore the identity matrix $I$, as follows:

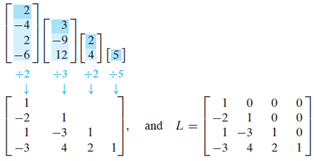
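A worked $3 \times 3$ instance of this argument, with illustrative numbers: reduce the $A$ below with three row replacements, $R_2 - 2R_1$, $R_3 + R_1$ and $R_3 - 3R_2$, encoded by $E_1, E_2, E_3$:

$$
A=\begin{bmatrix}2&1&1\\4&3&4\\-2&2&8\end{bmatrix},\quad
E_1=\begin{bmatrix}1&0&0\\-2&1&0\\0&0&1\end{bmatrix},\quad
E_2=\begin{bmatrix}1&0&0\\0&1&0\\1&0&1\end{bmatrix},\quad
E_3=\begin{bmatrix}1&0&0\\0&1&0\\0&-3&1\end{bmatrix}
$$

$$
E_3E_2E_1A=\begin{bmatrix}2&1&1\\0&1&2\\0&0&3\end{bmatrix}=U,\qquad
L=(E_3E_2E_1)^{-1}=E_1^{-1}E_2^{-1}E_3^{-1}=\begin{bmatrix}1&0&0\\2&1&0\\-1&3&1\end{bmatrix},\qquad
E_3E_2E_1L=I_3.
$$

The multipliers $2$, $-1$ and $3$ land below the diagonal of $L$ in exactly the positions where the corresponding entries of $A$ were eliminated.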

This may still sound a bit abstract. What exactly does this identity give us, and how is it used to find $L$? During the row reduction of $A$ into $U$, the entries below the pivot in each pivot column are zeroed out. Reversing these elementary row operations simply requires gathering each pivot column just before its entries below the pivot are zeroed out (keeping the part from the pivot down), dividing it by its pivot so that the diagonal entry becomes 1, and packing these columns into an $m \times m$ matrix.


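Once all the pivot columns have been gathered this way, $L$ is readily available. If $A$ has fewer than $m$ pivot columns, the remaining columns of $L$ are the corresponding columns of $I_m$.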
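The procedure above can be sketched in Python. This is a minimal sketch, not the author's code: `lu_no_interchanges` is a hypothetical helper name, it assumes no row interchanges are needed (it raises `ValueError` otherwise), and it uses `fractions.Fraction` for exact arithmetic. It walks the columns from left to right; at each pivot it copies the part of the column on and below the pivot, divided by the pivot, into $L$, and then zeroes out the entries below the pivot. Columns of $L$ that never receive a pivot column stay equal to the corresponding columns of $I_m$.

```python
from fractions import Fraction


def lu_no_interchanges(A):
    """Hypothetical helper: factor an m x n matrix A as A = L U using only
    top-down row replacements (no row interchanges).

    Returns L (m x m, unit lower triangular) and U (m x n, echelon form),
    both as lists of Fraction rows. Raises ValueError if a row interchange
    would be needed."""
    m, n = len(A), len(A[0])
    U = [[Fraction(v) for v in row] for row in A]
    # Start L as I_m; columns that never receive a pivot column stay as in I_m.
    L = [[Fraction(int(i == j)) for j in range(m)] for i in range(m)]
    r = 0  # row in which the next pivot must appear
    for c in range(n):
        if r == m:
            break  # every row already has a pivot
        if U[r][c] == 0:
            if any(U[i][c] != 0 for i in range(r + 1, m)):
                raise ValueError(f"row interchange needed at column {c}")
            continue  # column c has no pivot
        pivot = U[r][c]
        # Pivot column, from the pivot down, taken BEFORE it is zeroed out and
        # divided by the pivot, becomes column r of L (L[r][r] is already 1).
        for i in range(r + 1, m):
            L[i][r] = U[i][c] / pivot
        # Top-down row replacements R_i <- R_i - L[i][r] * R_r clear column c below the pivot.
        for i in range(r + 1, m):
            U[i] = [U[i][j] - L[i][r] * U[r][j] for j in range(n)]
        r += 1
    return L, U


A = [[2, 1, 0, 3], [4, 3, 2, 5], [-2, 2, 6, -2]]  # 3 x 4, illustrative numbers
L, U = lu_no_interchanges(A)
# int() is only safe here because every entry happens to be an integer.
print([[int(v) for v in row] for row in L])  # [[1, 0, 0], [2, 1, 0], [-1, 3, 1]]
print([[int(v) for v in row] for row in U])  # [[2, 1, 0, 3], [0, 1, 2, -1], [0, 0, 0, 4]]
```

For a matrix such as `[[1, 2], [2, 4], [3, 6]]`, which has only one pivot column, the sketch returns $L$ with first column $(1, 2, 3)$ and the last two columns taken from $I_3$.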

Examples

Examples will be added from time to time.
