Installing Kafka with Helm

Configuring the Helm repositories

helm repo add stable http://mirror.azure.cn/kubernetes/charts

helm repo add incubator http://mirror.azure.cn/kubernetes/charts-incubator

helm repo list
NAME        URL
stable      http://mirror.azure.cn/kubernetes/charts
local       http://127.0.0.1:8879/charts
incubator   http://mirror.azure.cn/kubernetes/charts-incubator

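Creating Local PVs for Kafka and Zookeeper

Creating the Local PVs for Kafka

The deployment target here is a local test environment, so storage uses Local Persistent Volumes. First, create a StorageClass for local storage on the k8s cluster, local-storage.yaml: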

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Retain

kubectl apply -f local-storage.yaml

storageclass.storage.k8s.io/local-storage created

[root@master home]# kubectl get sc --all-namespaces -o wide
NAME            PROVISIONER                    AGE
local-storage   kubernetes.io/no-provisioner   9s

Three Kafka brokers will run on the three k8s nodes master, slaver1, and slaver2, so first create one Local PV per broker, each pinned to one of these nodes.

kafka-local-pv.yaml:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: datadir-kafka-0
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /home/kafka/data-0
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - master
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: datadir-kafka-1
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /home/kafka/data-1
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - slaver1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: datadir-kafka-2
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /home/kafka/data-2
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - slaver2

kubectl apply -f kafka-local-pv.yaml
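The three PV manifests differ only in the PV name, the host path, and the node name, so they can also be generated instead of written by hand. The following is a minimal sketch and not part of the original setup: gen_local_pv and gen_kafka_pvs are hypothetical helper names, the size and StorageClass are copied from this post, and the PV names follow the datadir-kafka-N names that appear in the kubectl output in this post.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original post): print one Local PV manifest.
# Usage: gen_local_pv <pv-name> <node-hostname> <host-path>
gen_local_pv() {
  local name="$1" node="$2" path="$3"
  cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: ${path}
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - ${node}
EOF
}

# Print the manifests for the three brokers, in the node order used in this post.
gen_kafka_pvs() {
  local i=0 node
  for node in master slaver1 slaver2; do
    gen_local_pv "datadir-kafka-${i}" "${node}" "/home/kafka/data-${i}"
    i=$((i + 1))
  done
}
```

Running gen_kafka_pvs > kafka-local-pv.yaml writes a file equivalent to the hand-written one, and gen_kafka_pvs | kubectl apply -f - applies it directly. The same gen_local_pv function can be reused for the Zookeeper PVs by passing different names and paths.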

To match the Local PVs created above, create the backing directory on each node:

/home/kafka/data-0 on master

/home/kafka/data-1 on slaver1

/home/kafka/data-2 on slaver2

# master

mkdir -p /home/kafka/data-0

# slaver1

mkdir -p /home/kafka/data-1

# slaver2

mkdir -p /home/kafka/data-2

Check the result:

[root@master home]# kubectl get pv,pvc --all-namespaces
NAME                               CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS    REASON   AGE
persistentvolume/datadir-kafka-0   5Gi        RWO            Retain           Available           local-storage            12s
persistentvolume/datadir-kafka-1   5Gi        RWO            Retain           Available           local-storage            12s
persistentvolume/datadir-kafka-2   5Gi        RWO            Retain           Available           local-storage            12s

Creating the Local PVs for Zookeeper

Three Zookeeper nodes will also run on master, slaver1, and slaver2, so create one Local PV per Zookeeper node, each pinned to one of these k8s nodes.

zookeeper-local-pv.yaml:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: data-kafka-zookeeper-0
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /home/kafka/zkdata-0
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - master
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: data-kafka-zookeeper-1
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /home/kafka/zkdata-1
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - slaver1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: data-kafka-zookeeper-2
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /home/kafka/zkdata-2
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - slaver2

kubectl apply -f zookeeper-local-pv.yaml

To match the Local PVs created above, create the backing directory on each node:

/home/kafka/zkdata-0 on master

/home/kafka/zkdata-1 on slaver1

/home/kafka/zkdata-2 on slaver2

# master

mkdir -p /home/kafka/zkdata-0

# slaver1

mkdir -p /home/kafka/zkdata-1

# slaver2

mkdir -p /home/kafka/zkdata-2

Check the result:

[root@master home]# kubectl get pv,pvc --all-namespaces
NAME                                      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS    REASON   AGE
persistentvolume/data-kafka-zookeeper-0   5Gi        RWO            Retain           Available           local-storage            5s
persistentvolume/data-kafka-zookeeper-1   5Gi        RWO            Retain           Available           local-storage            5s
persistentvolume/data-kafka-zookeeper-2   5Gi        RWO            Retain           Available           local-storage            5s
persistentvolume/datadir-kafka-0          5Gi        RWO            Retain           Available           local-storage            116s
persistentvolume/datadir-kafka-1          5Gi        RWO            Retain           Available           local-storage            116s
persistentvolume/datadir-kafka-2          5Gi        RWO            Retain           Available           local-storage            116s

Deploying Kafka

Write the values file for the kafka chart.

kafka-values.yaml:

replicas: 3
tolerations:
  - key: node-role.kubernetes.io/master
    operator: Exists
    effect: NoSchedule
  - key: node-role.kubernetes.io/master
    operator: Exists
    effect: PreferNoSchedule
persistence:
  storageClass: local-storage
  size: 5Gi
zookeeper:
  persistence:
    enabled: true
    storageClass: local-storage
    size: 5Gi
  replicaCount: 3
  tolerations:
    - key: node-role.kubernetes.io/master
      operator: Exists
      effect: NoSchedule
    - key: node-role.kubernetes.io/master
      operator: Exists
      effect: PreferNoSchedule

helm install --name kafka --namespace kafka -f kafka-values.yaml incubator/kafka

Check the result:

[root@master home]# kubectl get po,svc -n kafka -o wide
NAME                    READY   STATUS    RESTARTS   AGE     IP            NODE      NOMINATED NODE   READINESS GATES
pod/kafka-0             1/1     Running   2          5m7s    10.244.1.24   slaver1   <none>           <none>
pod/kafka-1             1/1     Running   0          2m50s   10.244.2.16   slaver2   <none>           <none>
pod/kafka-2             0/1     Running   0          80s     10.244.0.13   master    <none>           <none>
pod/kafka-zookeeper-0   1/1     Running   0          5m7s    10.244.1.23   slaver1   <none>           <none>
pod/kafka-zookeeper-1   1/1     Running   0          4m29s   10.244.2.15   slaver2   <none>           <none>
pod/kafka-zookeeper-2   1/1     Running   0          3m43s   10.244.0.12   master    <none>           <none>

NAME                               TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE    SELECTOR
service/kafka                      ClusterIP   10.101.224.127   <none>        9092/TCP                     5m7s   app=kafka,release=kafka
service/kafka-headless             ClusterIP   None             <none>        9092/TCP                     5m7s   app=kafka,release=kafka
service/kafka-zookeeper            ClusterIP   10.97.247.79     <none>        2181/TCP                     5m7s   app=zookeeper,release=kafka
service/kafka-zookeeper-headless   ClusterIP   None             <none>        2181/TCP,3888/TCP,2888/TCP   5m7s   app=zookeeper,release=kafka

[root@master home]# kubectl get pv,pvc --all-namespaces
NAME                                      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                          STORAGECLASS    REASON   AGE
persistentvolume/data-kafka-zookeeper-0   5Gi        RWO            Retain           Bound    kafka/datadir-kafka-2          local-storage            130m
persistentvolume/data-kafka-zookeeper-1   5Gi        RWO            Retain           Bound    kafka/data-kafka-zookeeper-0   local-storage            130m
persistentvolume/data-kafka-zookeeper-2   5Gi        RWO            Retain           Bound    kafka/data-kafka-zookeeper-1   local-storage            130m
persistentvolume/datadir-kafka-0          5Gi        RWO            Retain           Bound    kafka/data-kafka-zookeeper-2   local-storage            132m
persistentvolume/datadir-kafka-1          5Gi        RWO            Retain           Bound    kafka/datadir-kafka-0          local-storage            132m
persistentvolume/datadir-kafka-2          5Gi        RWO            Retain           Bound    kafka/datadir-kafka-1          local-storage            132m

NAMESPACE   NAME                                           STATUS   VOLUME                   CAPACITY   ACCESS MODES   STORAGECLASS    AGE
kafka       persistentvolumeclaim/data-kafka-zookeeper-0   Bound    data-kafka-zookeeper-1   5Gi        RWO            local-storage   129m
kafka       persistentvolumeclaim/data-kafka-zookeeper-1   Bound    data-kafka-zookeeper-2   5Gi        RWO            local-storage   4m36s
kafka       persistentvolumeclaim/data-kafka-zookeeper-2   Bound    datadir-kafka-0          5Gi        RWO            local-storage   3m50s
kafka       persistentvolumeclaim/datadir-kafka-0          Bound    datadir-kafka-1          5Gi        RWO            local-storage   129m
kafka       persistentvolumeclaim/datadir-kafka-1          Bound    datadir-kafka-2          5Gi        RWO            local-storage   2m57s
kafka       persistentvolumeclaim/datadir-kafka-2          Bound    data-kafka-zookeeper-0   5Gi        RWO            local-storage   87s

[root@master home]# kubectl get statefulset -n kafka
NAME              READY   AGE
kafka             3/3     25m
kafka-zookeeper   3/3     25m

Testing after installation

Open a shell in one of the broker containers and look around:

[root@master /]# kubectl -n kafka exec -it kafka-0 -- sh

# ls /usr/bin |grep kafka

kafka-acls

kafka-broker-api-versions

kafka-configs

kafka-console-consumer

kafka-console-producer

kafka-consumer-groups

kafka-consumer-perf-test

kafka-delegation-tokens

kafka-delete-records

kafka-dump-log

kafka-log-dirs

kafka-mirror-maker

kafka-preferred-replica-election

kafka-producer-perf-test

kafka-reassign-partitions

kafka-replica-verification

kafka-run-class

kafka-server-start

kafka-server-stop

kafka-streams-application-reset

kafka-topics

kafka-verifiable-consumer

kafka-verifiable-producer

# ls /usr/share/java/kafka | grep kafka

kafka-clients-2.0.1-cp1.jar

kafka-log4j-appender-2.0.1-cp1.jar

kafka-streams-2.0.1-cp1.jar

kafka-streams-examples-2.0.1-cp1.jar

kafka-streams-scala_2.11-2.0.1-cp1.jar

kafka-streams-test-utils-2.0.1-cp1.jar

kafka-tools-2.0.1-cp1.jar

kafka.jar

kafka_2.11-2.0.1-cp1-javadoc.jar

kafka_2.11-2.0.1-cp1-scaladoc.jar

kafka_2.11-2.0.1-cp1-sources.jar

kafka_2.11-2.0.1-cp1-test-sources.jar

kafka_2.11-2.0.1-cp1-test.jar

kafka_2.11-2.0.1-cp1.jar

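The jar names show the version 2.11-2.0.1. The leading 2.11 is the Scala version the broker was built with (Kafka's server-side code is written in Scala), and the trailing 2.0.1 is the Kafka version. In other words, CP Kafka 5.0.1 is based on Apache Kafka 2.0.1.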
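Listing the binaries only confirms that the CLI tools are present. A quick end-to-end check could look like the sketch below. It assumes the service names kafka:9092 and kafka-zookeeper:2181 from the kubectl output earlier in this post; the function names and the topic name are made up for illustration, and the --zookeeper flag is the one used by the Kafka 2.0.1 tools found in this image.

```shell
#!/usr/bin/env bash
# Sketch only: NAMESPACE and POD default to the values used in this post.
NAMESPACE="${NAMESPACE:-kafka}"
POD="${POD:-kafka-0}"

# Run a command inside the broker container.
in_broker() {
  kubectl -n "${NAMESPACE}" exec -i "${POD}" -- "$@"
}

# Create a topic with 3 partitions, replicated to all 3 brokers.
create_topic() {
  in_broker kafka-topics --zookeeper kafka-zookeeper:2181 \
    --create --topic "$1" --partitions 3 --replication-factor 3
}

# Show partition leaders and replicas for the topic.
describe_topic() {
  in_broker kafka-topics --zookeeper kafka-zookeeper:2181 --describe --topic "$1"
}

# Send a single message ($2) to the topic ($1).
produce_one() {
  printf '%s\n' "$2" |
    in_broker kafka-console-producer --broker-list kafka:9092 --topic "$1"
}

# Read one message from the beginning of the topic, then exit.
consume_one() {
  in_broker kafka-console-consumer --bootstrap-server kafka:9092 \
    --topic "$1" --from-beginning --max-messages 1
}
```

A typical run would call create_topic smoke-test, describe_topic smoke-test, produce_one smoke-test "hello kafka", and finally consume_one smoke-test. If describe_topic lists replicas on all three brokers and the consumer prints the message back, the cluster is working end to end.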

Installing Kafka Manager

The Helm stable repo already provides a chart for Kafka Manager.

Create kafka-manager-values.yaml:

image:
  repository: zenko/kafka-manager
  tag: 1.3.3.22
zkHosts: kafka-zookeeper:2181
basicAuth:
  enabled: true
  username: admin
  password: admin
ingress:
  enabled: true
  hosts:
    - km.hongda.com
  tls:
    - secretName: hongda-com-tls-secret
      hosts:
        - km.hongda.com

Deploy kafka-manager with helm:

helm install --name kafka-manager --namespace kafka -f kafka-manager-values.yaml stable/kafka-manager

After the installation, confirm that the kafka-manager Pod has started:

[root@master home]# kubectl get pod -n kafka -l app=kafka-manager
NAME                             READY   STATUS    RESTARTS   AGE
kafka-manager-5d974b7844-f4bz2   1/1     Running   0          6m41s

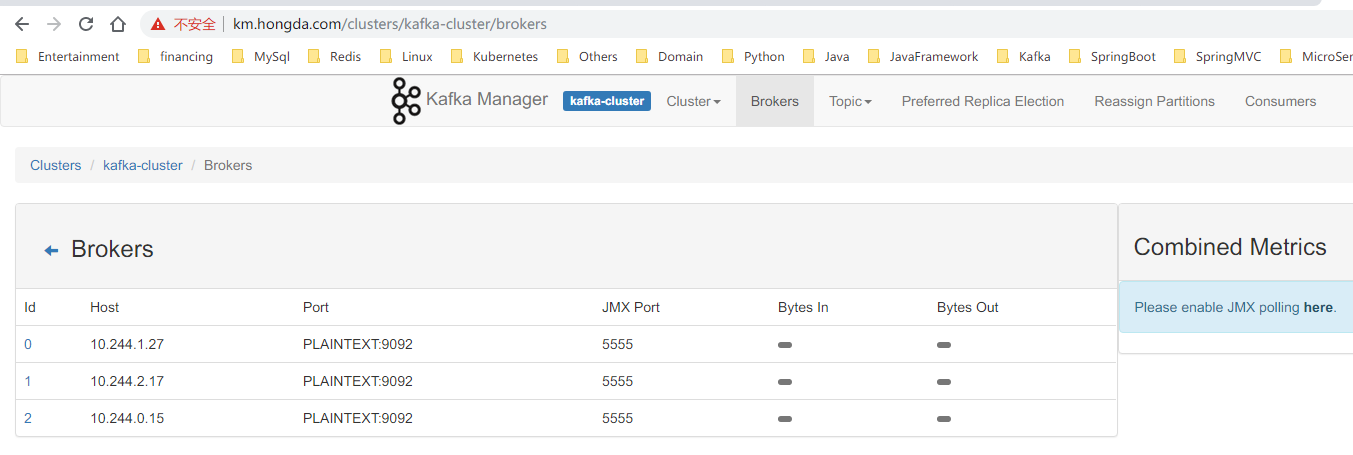

In the Kafka Manager UI, add a cluster and set Cluster Zookeeper Hosts to kafka-zookeeper:2181. The Kafka cluster deployed above is then managed by kafka-manager.

Check the pods and services in the kafka namespace:

[root@master home]# kubectl get po,svc -n kafka -o wide
NAME                                 READY   STATUS    RESTARTS   AGE   IP            NODE      NOMINATED NODE   READINESS GATES
pod/kafka-0                          1/1     Running   4          12d   10.244.1.27   slaver1   <none>           <none>
pod/kafka-1                          1/1     Running   2          12d   10.244.2.17   slaver2   <none>           <none>
pod/kafka-2                          1/1     Running   2          12d   10.244.0.15   master    <none>           <none>
pod/kafka-manager-5d974b7844-f4bz2   1/1     Running   0          43m   10.244.2.21   slaver2   <none>           <none>
pod/kafka-zookeeper-0                1/1     Running   1          12d   10.244.1.26   slaver1   <none>           <none>
pod/kafka-zookeeper-1                1/1     Running   1          12d   10.244.2.20   slaver2   <none>           <none>
pod/kafka-zookeeper-2                1/1     Running   1          12d   10.244.0.16   master    <none>           <none>

NAME                               TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE   SELECTOR
service/kafka                      ClusterIP   10.101.224.127   <none>        9092/TCP                     12d   app=kafka,release=kafka
service/kafka-headless             ClusterIP   None             <none>        9092/TCP                     12d   app=kafka,release=kafka
service/kafka-manager              ClusterIP   10.102.125.67    <none>        9000/TCP                     43m   app=kafka-manager,release=kafka-manager
service/kafka-zookeeper            ClusterIP   10.97.247.79     <none>        2181/TCP                     12d   app=zookeeper,release=kafka
service/kafka-zookeeper-headless   ClusterIP   None             <none>        2181/TCP,3888/TCP,2888/TCP   12d   app=zookeeper,release=kafka

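Problems

Pulling the latest gcr.io/google_samples/k8szk fails

The latest k8szk version is 3.5.5, and it cannot be pulled from gcr.io.

Workaround: pull the image from a mirror and retag it.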

docker pull bairuijie/k8szk:3.5.5

docker tag bairuijie/k8szk:3.5.5 gcr.io/google_samples/k8szk:3.5.5

docker rmi bairuijie/k8szk:3.5.5
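The three docker commands above follow a pull, retag, clean-up pattern that can be wrapped in a small function. This is only a sketch; mirror_image is a hypothetical helper name. Since docker pull only fills the local image cache of the node it runs on, the commands presumably need to be repeated on every node that may run a zookeeper pod.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pull an image from a reachable mirror, retag it under
# the name the chart expects, then remove the mirror tag.
# Usage: mirror_image <mirror-image> <expected-image>
mirror_image() {
  local src="$1" dst="$2"
  docker pull "${src}" &&
    docker tag "${src}" "${dst}" &&
    docker rmi "${src}"
}
```

For the image in this post the call would be mirror_image bairuijie/k8szk:3.5.5 gcr.io/google_samples/k8szk:3.5.5. The && chain stops at the first failing step, so a failed pull never removes or retags anything.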

Alternatively, change the image version to k8szk:v3:

kubectl edit pod kafka-zookeeper-0 -n kafka

With the k8szk image, zookeeper stays in CrashLoopBackOff

[root@master home]# kubectl get po -n kafka -o wide
NAMESPACE   NAME                    READY   STATUS             RESTARTS   AGE   IP            NODE      NOMINATED NODE   READINESS GATES
kafka       pod/kafka-0             0/1     Running            7          15m   10.244.2.15   slaver2   <none>           <none>
kafka       pod/kafka-zookeeper-0   0/1     CrashLoopBackOff   7          15m   10.244.1.11   slaver1   <none>           <none>

[root@master home]# kubectl logs kafka-zookeeper-0 -n kafka

Error from server: Get https://18.16.202.227:10250/containerLogs/kafka/kafka-zookeeper-0/zookeeper: proxyconnect tcp: net/http: TLS handshake timeout

kubectl logs fails here, so go to the slaver1 node and read the docker container logs directly:

[root@slaver1 ~]# docker ps -a
CONTAINER ID   IMAGE          COMMAND                  CREATED              STATUS                          PORTS   NAMES
51eb5e6e0640   b3a008535ed2   "/bin/bash -xec /con…"   About a minute ago   Exited (1) About a minute ago           k8s_zookeeper_kafka-zookeeper-0_kafka_4448f944-b1cd-4415-8abd-5cee39699b51_8
...

[root@slaver1 ~]# docker logs 51eb5e6e0640

+ /config-scripts/run

/config-scripts/run: line 63: /conf/zoo.cfg: No such file or directory

/config-scripts/run: line 68: /conf/log4j.properties: No such file or directory

/config-scripts/run: line 69: /conf/log4j.properties: No such file or directory

/config-scripts/run: line 70: $LOGGER_PROPERS_FILE: ambiguous redirect

/config-scripts/run: line 71: /conf/log4j.properties: No such file or directory

/config-scripts/run: line 81: /conf/log4j.properties: No such file or directory

+ exec java -cp '/apache-zookeeper-*/lib/*:/apache-zookeeper-*/*jar:/conf:' -Xmx1G -Xms1G org.apache.zookeeper.server.quorum.QuorumPeerMain /conf/zoo.cfg

Error: Could not find or load main class org.apache.zookeeper.server.quorum.QuorumPeerMain

Inspect the chart that helm pulls:

helm fetch incubator/kafka

ll

-rw-r--r-- 1 root root 30429 8月 23 14:47 kafka-0.17.0.tgz

After downloading and unpacking it, the chart turns out to depend on the zookeeper image and no longer uses k8szk.

The old kafka-values.yaml:

replicas: 3
persistence:
  storageClass: local-storage
  size: 5Gi
zookeeper:
  persistence:
    enabled: true
    storageClass: local-storage
    size: 5Gi
  replicaCount: 3
  image:
    repository: gcr.io/google_samples/k8szk

Removing the image override fixes the problem:

replicas: 3
persistence:
  storageClass: local-storage
  size: 5Gi
zookeeper:
  persistence:
    enabled: true
    storageClass: local-storage
    size: 5Gi
  replicaCount: 3

Pods cannot be scheduled on the master node

Inspect the master node:

[root@master home]# kubectl describe node master
Name:               master
Roles:              edge,master
Labels:             beta.kubernetes.io/arch=amd64
                    beta.kubernetes.io/os=linux
                    kubernetes.io/arch=amd64
                    kubernetes.io/hostname=master
                    kubernetes.io/os=linux
                    node-role.kubernetes.io/edge=
                    node-role.kubernetes.io/master=
Annotations:        flannel.alpha.coreos.com/backend-data: {"VtepMAC":"aa:85:ea:b3:54:07"}
                    flannel.alpha.coreos.com/backend-type: vxlan
                    flannel.alpha.coreos.com/kube-subnet-manager: true
                    flannel.alpha.coreos.com/public-ip: 192.168.236.130
                    kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock
                    node.alpha.kubernetes.io/ttl: 0
                    volumes.kubernetes.io/controller-managed-attach-detach: true
CreationTimestamp:  Tue, 20 Aug 2019 23:03:57 +0800
Taints:             node-role.kubernetes.io/master:PreferNoSchedule

The Taints line shows that the master node is tainted, which keeps ordinary pods off it.

Remove the taint:

kubectl taint nodes master node-role.kubernetes.io/master-

The argument after nodes is the node name.

The taint can also be removed from all nodes at once:

kubectl taint nodes --all node-role.kubernetes.io/master-

To put the taint back on master:

kubectl taint nodes master node-role.kubernetes.io/master=:NoSchedule

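Note: in the taint set on master here, node-role.kubernetes.io/master is the key, the value is empty, and the effect is NoSchedule.

If you drop the = sign when typing the command and write node-role.kubernetes.io/master:NoSchedule instead, kubectl in this environment reports error: at least one taint update is required.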
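To make the key=value:effect shape explicit, the taint argument can be assembled by a small function. This is a sketch; taint_arg is a hypothetical helper name, and the three accepted effects are the taint effects Kubernetes defines.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the <key>=<value>:<effect> argument for kubectl taint.
# The '=' is always emitted, even when the value is empty.
taint_arg() {
  local key="$1" value="$2" effect="$3"
  case "${effect}" in
    NoSchedule | PreferNoSchedule | NoExecute) ;;
    *)
      echo "unknown taint effect: ${effect}" >&2
      return 1
      ;;
  esac
  printf '%s=%s:%s\n' "${key}" "${value}" "${effect}"
}
```

With an empty value, taint_arg node-role.kubernetes.io/master "" NoSchedule yields the same argument as the command above, so kubectl taint nodes master "$(taint_arg node-role.kubernetes.io/master "" NoSchedule)" is equivalent to typing it by hand without the risk of losing the = sign.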

Reference:

kubernetes (k8s): installing kafka and zookeeper with helm
