[Part 2] How to Find the Latest Multi-Agent Reinforcement Learning Papers {Top Conferences: AAAI, ICML}

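Related articles:

[Part 2] How to Find the Latest Multi-Agent Reinforcement Learning Papers {Top Conferences: AAAI, ICML}

[Part 3] Overview of Recent Multi-Agent Reinforcement Learning (MARL) Research {Analysis of emergent behaviors, Learning communication}

[Part 4] Overview of Recent Multi-Agent Reinforcement Learning (MARL) Research {Learning cooperation, Agents modeling agents}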

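1. China Computer Federation (CCF) Recommended International Conferences and Journals

CCF recommended international conferences (reference link: click the link to view the detailed categories)

The categories are: computer systems and high-performance computing; computer networks; network and information security; software engineering; system software and programming languages; databases, data mining and content retrieval; theoretical computer science; computer graphics and multimedia; artificial intelligence and pattern recognition; human-computer interaction and ubiquitous computing; frontier, interdisciplinary and comprehensive.

Summary of multi-agent reinforcement learning papers at ICML 2021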

| Category | Count |
|---|---|
| Submissions | 5513 |
| Accepted papers | 1184 |
| Reinforcement learning papers | 163 |
| Of which multi-agent reinforcement learning papers | 15 |
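The proportions implied by these counts can be worked out in a few lines of Python. This is only an illustrative sketch: the constant and function names are made up for this example, and the figures are simply copied from the table.

```python
# Figures copied from the ICML 2021 table in this article.
SUBMISSIONS = 5513
ACCEPTED = 1184
RL_PAPERS = 163
MARL_PAPERS = 15


def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage, rounded to two decimals."""
    return round(100.0 * part / whole, 2)


acceptance_rate = share(ACCEPTED, SUBMISSIONS)      # accepted / submitted
rl_share_of_accepted = share(RL_PAPERS, ACCEPTED)   # RL papers / accepted
marl_share_of_rl = share(MARL_PAPERS, RL_PAPERS)    # MARL papers / RL papers

print(f"Acceptance rate:            {acceptance_rate}%")
print(f"RL share of accepted:       {rl_share_of_accepted}%")
print(f"MARL share of RL papers:    {marl_share_of_rl}%")
```

With these numbers the acceptance rate comes out at roughly 21.5%, reinforcement learning accounts for about 13.8% of accepted papers, and multi-agent work makes up about 9.2% of the reinforcement learning papers.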

ICML's standing:

1.1 CCF Recommended International Conferences

(Artificial Intelligence and Pattern Recognition)

1.1.1 Class A

| No. | Abbreviation | Full name | Publisher | URL |
|---|---|---|---|---|
| 1 | AAAI | AAAI Conference on Artificial Intelligence | AAAI | |
| 2 | CVPR | IEEE Conference on Computer Vision and | IEEE | |
| 3 | ICCV | International Conference on Computer | IEEE | |
| 4 | ICML | International Conference on Machine | ACM | |
| 5 | IJCAI | International Joint Conference on Artificial | Morgan Kaufmann | |

1.1.2 Class B

| No. | Abbreviation | Full name | Publisher | URL |
|---|---|---|---|---|
| 1 | COLT | Annual Conference on Computational | Springer | |
| 2 | NIPS | Annual Conference on Neural Information | MIT Press | |

1.1.3 See the appendix for more Class B and Class C conferences

2. Recommended Deep Reinforcement Learning Lab and Links

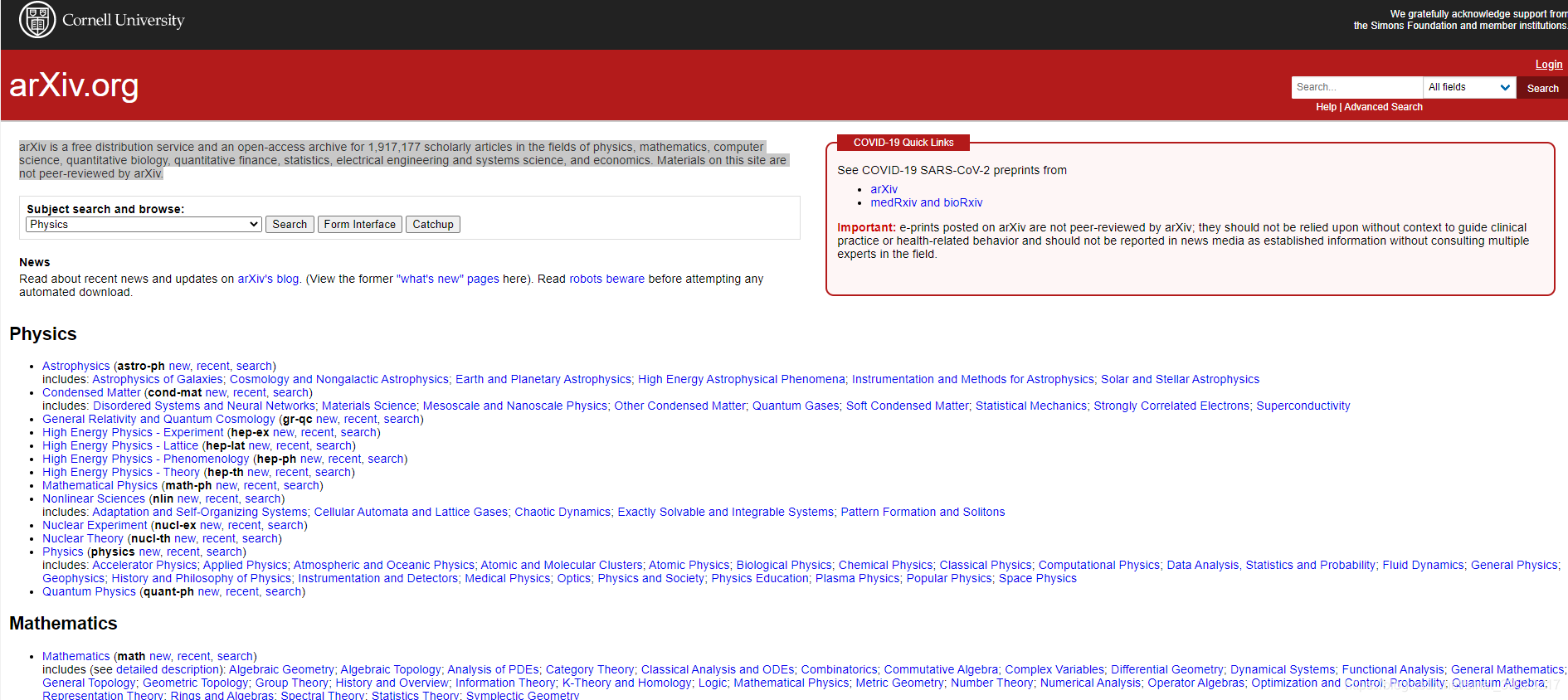

2.1 arXiv

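arXiv is a free distribution service and open-access archive that, at the time of writing, held 1,917,177 scholarly articles in physics, mathematics, computer science, quantitative biology, quantitative finance, statistics, electrical engineering and systems science, and economics. Material on arXiv is not peer-reviewed by arXiv.

2.2 Deep Reinforcement Learning Lab

DeepRL GitHub: https://github.com/neurondance

WeChat official account: Deep-RL

Official website: http://www.neurondance.com/

Forum: http://deeprl.neurondance.com/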

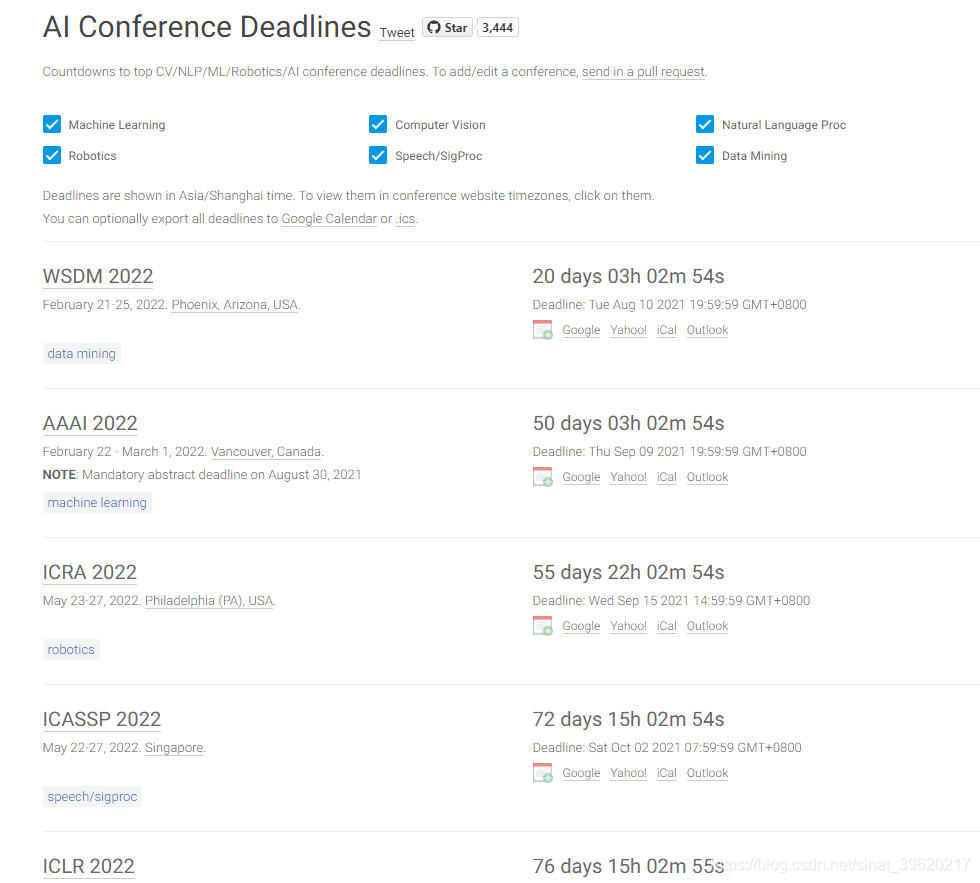

2.3 AI Conference Deadlines

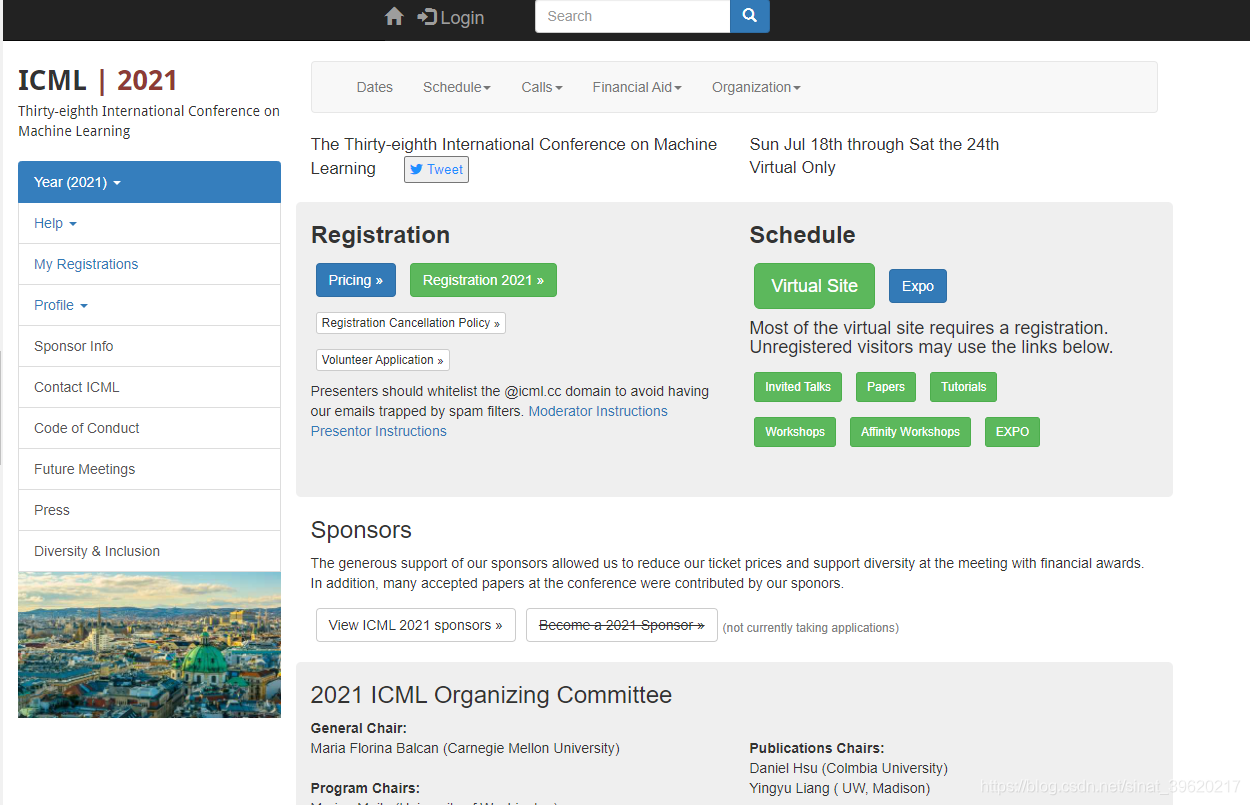

2.4 ICML official website:

3. Latest Multi-Agent Reinforcement Learning Papers

3.1 ICML International Conference on Machine Learning

[1]. Randomized Entity-wise Factorization for Multi-Agent Reinforcement Learning

Authors: Shariq Iqbal (University of Southern California) · Christian Schroeder (University of Oxford) · Bei Peng (University of Oxford) · Wendelin Boehmer (Delft University of Technology) · Shimon Whiteson (University of Oxford) · Fei Sha (Google Research)

[2]. UneVEn: Universal Value Exploration for Multi-Agent Reinforcement Learning

Authors: Tarun Gupta (University of Oxford) · Anuj Mahajan (Dept. of Computer Science, University of Oxford) · Bei Peng (University of Oxford) · Wendelin Boehmer (Delft University of Technology) · Shimon Whiteson (University of Oxford)

[3]. Emergent Social Learning via Multi-agent Reinforcement Learning

Authors: Kamal Ndousse (OpenAI) · Douglas Eck (Google Brain) · Sergey Levine (UC Berkeley) · Natasha Jaques (Google Brain, UC Berkeley)

[4]. DFAC Framework: Factorizing the Value Function via Quantile Mixture for Multi-Agent Distributional Q-Learning

Authors: Wei-Fang Sun (National Tsing Hua University) · Cheng-Kuang Lee (NVIDIA Corporation) · Chun-Yi Lee (National Tsing Hua University)

[5]. Cooperative Exploration for Multi-Agent Deep Reinforcement Learning

Authors: Iou-Jen Liu (University of Illinois at Urbana-Champaign) · Unnat Jain (UIUC) · Raymond Yeh (University of Illinois at Urbana-Champaign) · Alexander Schwing (UIUC)

[6]. Large-Scale Multi-Agent Deep FBSDEs

Authors: Tianrong Chen (Georgia Institute of Technology) · Ziyi Wang (Georgia Institute of Technology) · Ioannis Exarchos (Stanford University) · Evangelos Theodorou (Georgia Tech)

[7]. Tesseract: Tensorised Actors for Multi-Agent Reinforcement Learning

Authors: Anuj Mahajan (Dept. of Computer Science, University of Oxford) · Mikayel Samvelyan (University College London) · Lei Mao (NVIDIA) · Viktor Makoviychuk (NVIDIA) · Animesh Garg (University of Toronto, Vector Institute, Nvidia) · Jean Kossaifi (NVIDIA) · Shimon Whiteson (University of Oxford) · Yuke Zhu (University of Texas - Austin) · Anima Anandkumar (Caltech and NVIDIA)

[8]. Scaling Multi-Agent Reinforcement Learning with Selective Parameter Sharing

Authors: Filippos Christianos (University of Edinburgh) · Georgios Papoudakis (The University of Edinburgh) · Muhammad Arrasy Rahman (The University of Edinburgh) · Stefano Albrecht (University of Edinburgh)

[9]. Parallel Droplet Control in MEDA Biochips using Multi-Agent Reinforcement Learning

Authors: Tung-Che Liang (Duke University) · Jin Zhou (Duke University) · Yun-Sheng Chan (National Chiao Tung University) · Tsung-Yi Ho (National Tsing Hua University) · Krishnendu Chakrabarty (Duke University) · Cy Lee (National Chiao Tung University)

[10]. A Policy Gradient Algorithm for Learning to Learn in Multiagent Reinforcement Learning

Authors: Dong Ki Kim (MIT) · Miao Liu (IBM) · Matthew Riemer (IBM Research) · Chuangchuang Sun (MIT) · Marwa Abdulhai (MIT) · Golnaz Habibi (MIT) · Sebastian Lopez-Cot (MIT) · Gerald Tesauro (IBM Research) · Jonathan How (MIT)

[11]. Scalable Evaluation of Multi-Agent Reinforcement Learning with Melting Pot

Authors: Joel Z Leibo (DeepMind) · Edgar Duenez-Guzman (DeepMind) · Alexander Vezhnevets (DeepMind) · John Agapiou (DeepMind) · Peter Sunehag · Raphael Koster (DeepMind) · Jayd Matyas (DeepMind) · Charles Beattie (DeepMind Technologies Limited) · Igor Mordatch (Google Brain) · Thore Graepel (DeepMind)

[12]. Multi-Agent Training beyond Zero-Sum with Correlated Equilibrium Meta-Solvers

Authors: Luke Marris (DeepMind) · Paul Muller (DeepMind) · Marc Lanctot (DeepMind) · Karl Tuyls (DeepMind) · Thore Graepel (DeepMind)

[13]. Coach-Player Multi-agent Reinforcement Learning for Dynamic Team Composition

Authors: Bo Liu (University of Texas, Austin) · Qiang Liu (UT Austin) · Peter Stone (University of Texas at Austin) · Animesh Garg (University of Toronto, Vector Institute, Nvidia) · Yuke Zhu (University of Texas - Austin) · Anima Anandkumar (California Institute of Technology)

[14]. Learning Fair Policies in Decentralized Cooperative Multi-Agent Reinforcement Learning

Authors: Matthieu Zimmer (Shanghai Jiao Tong University) · Claire Glanois (Shanghai Jiao Tong University) · Umer Siddique (Shanghai Jiao Tong University) · Paul Weng (Shanghai Jiao Tong University)

[15]. FOP: Factorizing Optimal Joint Policy of Maximum-Entropy Multi-Agent Reinforcement Learning

Authors: Tianhao Zhang (Peking University) · Yueheng Li (Peking University) · Chen Wang (Peking University) · Zongqing Lu (Peking University) · Guangming Xie (1. State Key Laboratory for Turbulence and Complex Systems, College of Engineering, Peking University; 2. Institute of Ocean Research, Peking University)
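When working from a conference's full accepted-paper list, one simple way to narrow it down to multi-agent work is to match keywords in the titles. The sketch below is only an assumption about how such a filter might look, not the method used to compile the list above; `MARL_PATTERN`, `filter_marl_titles`, and the last sample title are hypothetical names and data introduced for illustration.

```python
import re

# Matches the spelling variants seen in the titles above:
# "Multi-Agent", "Multi-agent", "Multiagent" (and also "multi agent").
MARL_PATTERN = re.compile(r"multi[-\s]?agent", re.IGNORECASE)


def filter_marl_titles(titles):
    """Keep only the titles that mention multi-agent in some spelling."""
    return [title for title in titles if MARL_PATTERN.search(title)]


sample_titles = [
    "UneVEn: Universal Value Exploration for Multi-Agent Reinforcement Learning",
    "A Policy Gradient Algorithm for Learning to Learn in Multiagent Reinforcement Learning",
    "Emergent Social Learning via Multi-agent Reinforcement Learning",
    "A Made-Up Title About Single-Agent Exploration",  # hypothetical, should be dropped
]

marl_titles = filter_marl_titles(sample_titles)
for title in marl_titles:
    print(title)
```

Title matching is a coarse filter: a paper that studies multi-agent problems without saying so in its title would be missed, so the result is best treated as a starting point for manual review.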

3.2 AAAI Conference on Artificial Intelligence

Key conference dates

- August 15 – August 30, 2020: Authors register on the AAAI web site

- September 1, 2020: Electronic abstracts due at 11:59 PM UTC-12 (anywhere on earth)

- September 9, 2020: Electronic papers due at 11:59 PM UTC-12 (anywhere on earth)

- September 29, 2020: Abstracts AND full papers due for revisions of rejected NeurIPS/EMNLP submissions by 11:59 PM UTC-12 (anywhere on earth)

- AAAI-21 Reviewing Process: Two-Phase Reviewing and NeurIPS/EMNLP Fast Track Submissions

- November 3-5, 2020: Author Feedback Window (anywhere on earth)

- December 1, 2020: Notification of acceptance or rejection

For the specific papers, see: http://deeprl.neurondance.com/d/191-82aaai2021

List of accepted papers (84 in total)

4. Appendix

4.1 Class B

| No. | Abbreviation | Full name | Publisher | URL |
|---|---|---|---|---|
| 1 | COLT | Annual Conference on Computational | Springer | |
| 2 | NIPS | Annual Conference on Neural Information | MIT Press | |
| 3 | ACL | Annual Meeting of the Association for | ACL | |
| 4 | EMNLP | Conference on Empirical Methods in Natural | ACL | |
| 5 | ECAI | European Conference on Artificial | IOS Press | |
| 6 | ECCV | European Conference on Computer Vision | Springer | |
| 7 | ICRA | IEEE International Conference on Robotics | IEEE | |
| 8 | ICAPS | International Conference on Automated | AAAI | |
| 9 | ICCBR | International Conference on Case-Based | Springer | |
| 10 | COLING | International Conference on Computational | ACM | |
| 11 | KR | International Conference on Principles of | Morgan Kaufmann | |
| 12 | UAI | International Conference on Uncertainty | AUAI | |
| 13 | AAMAS | International Joint Conference | Springer | |

4.2 Class C

| No. | Abbreviation | Full name | Publisher | URL |
|---|---|---|---|---|
| 1 | ACCV | Asian Conference on Computer Vision | Springer | |
| 2 | CoNLL | Conference on Natural Language Learning | CoNLL | |
| 3 | GECCO | Genetic and Evolutionary Computation | ACM | |
| 4 | ICTAI | IEEE International Conference on Tools with | IEEE | |
| 5 | ALT | International Conference on Algorithmic | Springer | |
| 6 | ICANN | International Conference on Artificial Neural | Springer | |
| 7 | FGR | International Conference on Automatic Face | IEEE | |
| 8 | ICDAR | International Conference on Document | IEEE | |
| 9 | ILP | International Conference on Inductive Logic | Springer | |
| 10 | KSEM | International Conference on Knowledge | Springer | |
| 11 | ICONIP | International Conference on Neural | Springer | |
| 12 | ICPR | International Conference on Pattern | IEEE | |
| 13 | ICB | International Joint Conference on Biometrics | IEEE | |
| 14 | IJCNN | International Joint Conference on Neural | IEEE | |
| 15 | PRICAI | Pacific Rim International Conference on | Springer | |
| 16 | NAACL | The Annual Conference of the North | NAACL | |
| 17 | BMVC | British Machine Vision Conference | British Machine | |
