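HBase HA Distributed Cluster Deployment (for 3- or 5-Node Clusters)

This post covers:

- Installing HBase in distributed mode (3 or 5 nodes)

- Starting HBase in distributed mode (3 or 5 nodes)

- Installing the HBase HA distributed cluster

- Starting the HBase HA distributed cluster

- HBase HA failover

HBase HA distributed cluster setup: cluster architecture

HBase HA distributed cluster setup: installation steps

Installing HBase in distributed mode (3 or 5 nodes)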

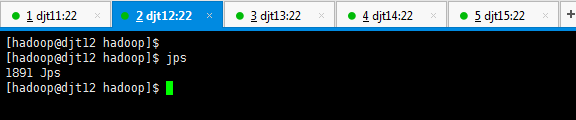

1. On djt11, djt12, djt13, djt14, and djt15, stop everything so that no daemon processes are left running.

[hadoop@djt11 hadoop]$ pwd

[hadoop@djt11 hadoop]$ jps

[hadoop@djt12 hadoop]$ jps

[hadoop@djt13 hadoop]$ jps

[hadoop@djt14 hadoop]$ jps

[hadoop@djt15 hadoop]$ jps
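Checking five nodes by eye gets tedious, so here is a minimal sketch of a filter for `jps` output. The function name `leftover_daemons` is made up for illustration. It assumes the usual `jps` format of one `PID ClassName` pair per line.

```shell
#!/usr/bin/env bash
# Print the name of every JVM process in `jps` output except jps itself.
# An empty result means the node has no leftover daemons.
leftover_daemons() {
  awk 'NF >= 2 && $2 != "Jps" { print $2 }'
}

# Demo on captured output; on a real node run: jps | leftover_daemons
printf '2101 NameNode\n2544 Jps\n' | leftover_daemons
```

If the function prints anything on a node, stop those processes before continuing.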

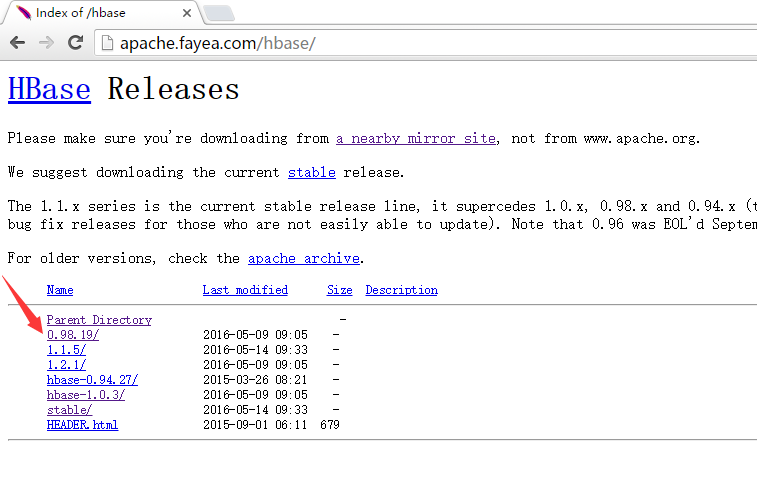

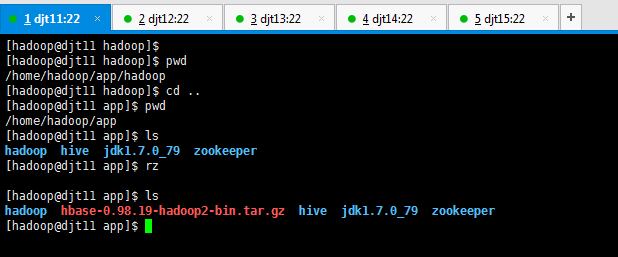

2. Switch to the app installation directory

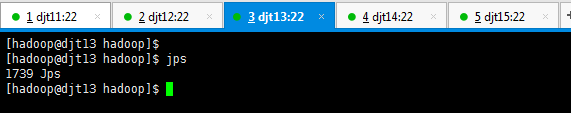

Download the HBase tarball and upload it to the server (here with rz)

[hadoop@djt11 hadoop]$ pwd

[hadoop@djt11 hadoop]$ cd ..

[hadoop@djt11 app]$ pwd

[hadoop@djt11 app]$ ls

[hadoop@djt11 app]$ rz

[hadoop@djt11 app]$ ls

[hadoop@djt11 app]$ tar -zxvf hbase-0.98.19-hadoop2-bin.tar.gz

[hadoop@djt11 app]$ pwd

[hadoop@djt11 app]$ ls

[hadoop@djt11 app]$ mv hbase-0.98.19-hadoop2 hbase

[hadoop@djt11 app]$ rm -rf hbase-0.98.19-hadoop2-bin.tar.gz

[hadoop@djt11 app]$ ls

[hadoop@djt11 app]$ pwd

[hadoop@djt11 app]$

[hadoop@djt11 app]$ ls

[hadoop@djt11 app]$ cd hbase/

[hadoop@djt11 hbase]$ ls

[hadoop@djt11 hbase]$ cd conf/

[hadoop@djt11 conf]$ pwd

[hadoop@djt11 conf]$ ls

[hadoop@djt11 conf]$ vi regionservers

djt13

djt14

djt15

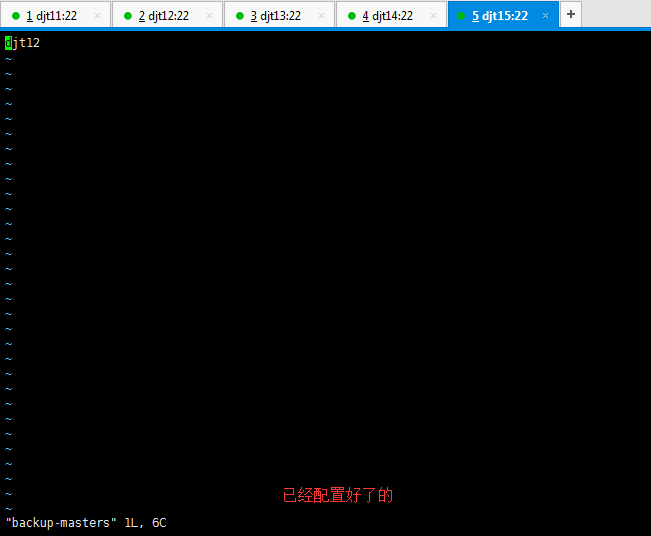

[hadoop@djt11 conf]$ vi backup-masters

djt12
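The two host files can also be written without opening an editor. The sketch below uses the host names from this post; the function name `write_hbase_host_files` is a hypothetical helper, not something HBase ships.

```shell
#!/usr/bin/env bash
# Write regionservers and backup-masters into the given conf directory.
# Host names follow this post: djt13-djt15 are RegionServers,
# djt12 is the backup master.
write_hbase_host_files() {
  local conf_dir="$1"
  printf '%s\n' djt13 djt14 djt15 > "$conf_dir/regionservers"
  printf '%s\n' djt12 > "$conf_dir/backup-masters"
}

# Usage on djt11 (path as used in this post):
#   write_hbase_host_files /home/hadoop/app/hbase/conf
```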

[hadoop@djt11 conf]$ cp /home/hadoop/app/hadoop/etc/hadoop/core-site.xml ./

[hadoop@djt11 conf]$ cp /home/hadoop/app/hadoop/etc/hadoop/hdfs-site.xml ./

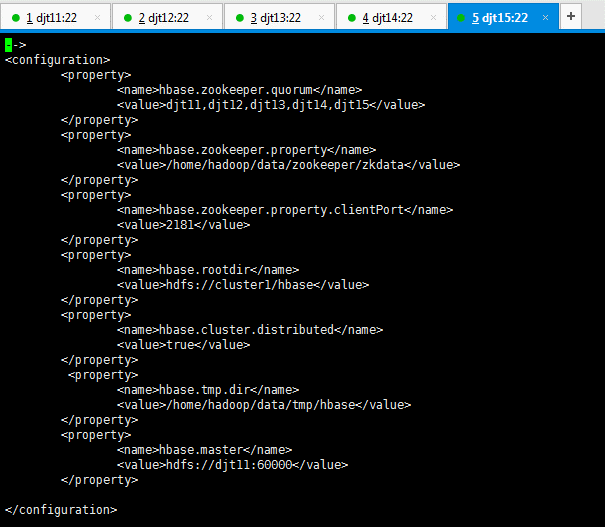

[hadoop@djt11 conf]$ vi hbase-site.xml

<configuration>

<property>

<name>hbase.zookeeper.quorum</name>

<value>djt11,djt12,djt13,djt14,djt15</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/home/hadoop/data/zookeeper/zkdata</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>hdfs://cluster1/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.tmp.dir</name>

<value>/home/hadoop/data/tmp/hbase</value>

</property>

<property>

<name>hbase.master</name>

<value>hdfs://djt11:60000</value>

</property>

</configuration>
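A mistyped property name in hbase-site.xml is easy to miss because HBase silently ignores names it does not recognise. Here is a minimal sketch of a check that lists expected names that are absent from a file. Treating these five names as required is an assumption made for this post's setup, and `missing_hbase_props` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print each expected property name that does not appear, spelled exactly,
# as a <name>...</name> element in the given hbase-site.xml.
missing_hbase_props() {
  local file="$1" name
  for name in \
    hbase.zookeeper.quorum \
    hbase.zookeeper.property.dataDir \
    hbase.zookeeper.property.clientPort \
    hbase.rootdir \
    hbase.cluster.distributed
  do
    grep -qF "<name>${name}</name>" "$file" || echo "$name"
  done
}

# Usage (path as used in this post):
#   missing_hbase_props /home/hadoop/app/hbase/conf/hbase-site.xml
```

No output means every expected name is present. A name written with a wrong character or a missing `.dataDir` suffix shows up as missing.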

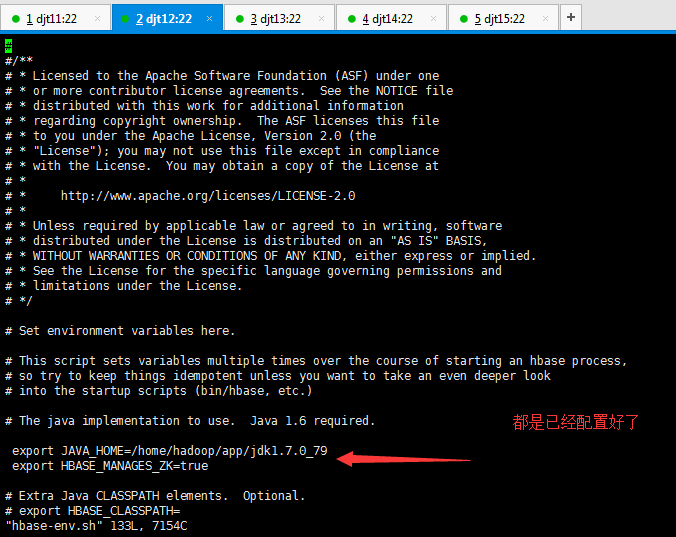

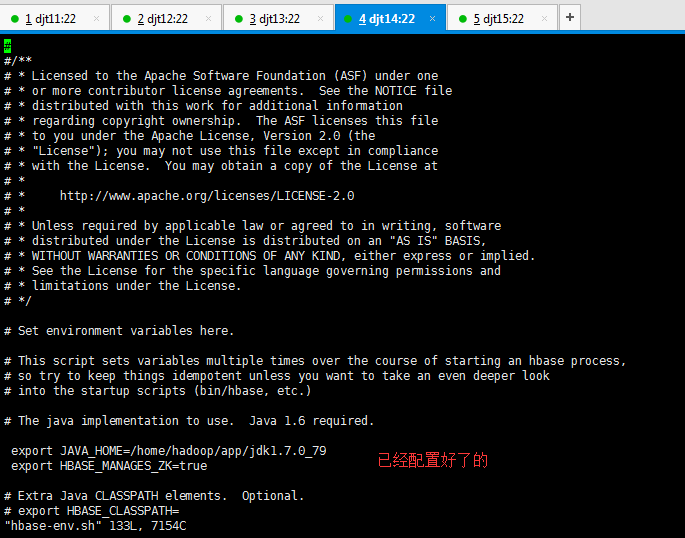

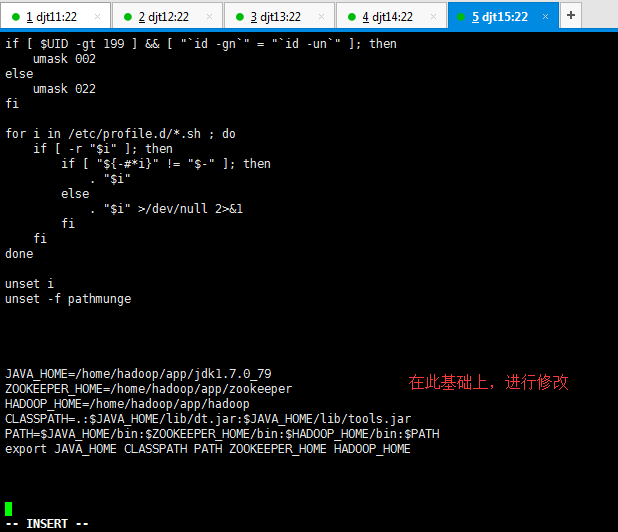

vi hbase-env.sh

#export JAVA_HOME=/usr/java/jdk1.6.0/

Change it to:

export JAVA_HOME=/home/hadoop/app/jdk1.7.0_79

export HBASE_MANAGES_ZK=true

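There is one point worth knowing here.

The HQuorumPeer process: with HBASE_MANAGES_ZK=true, HBase runs ZooKeeper as part of itself when HBase starts.

The QuorumPeerMain process: with HBASE_MANAGES_ZK=false, you start ZooKeeper manually first and then start HBase.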
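The process name in `jps` therefore tells you which mode a node is running in. Here is a minimal sketch of that check. The function name `zk_mode` and the three labels it prints are made up for illustration.

```shell
#!/usr/bin/env bash
# Classify ZooKeeper ownership from `jps` output read on stdin.
#   HQuorumPeer    -> ZooKeeper started by HBase (HBASE_MANAGES_ZK=true)
#   QuorumPeerMain -> ZooKeeper started on its own (HBASE_MANAGES_ZK=false)
zk_mode() {
  awk '
    $2 == "HQuorumPeer"    { mode = "managed-by-hbase" }
    $2 == "QuorumPeerMain" { mode = "standalone" }
    END { print (mode == "" ? "not-running" : mode) }
  '
}

# Usage on a node: jps | zk_mode
```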

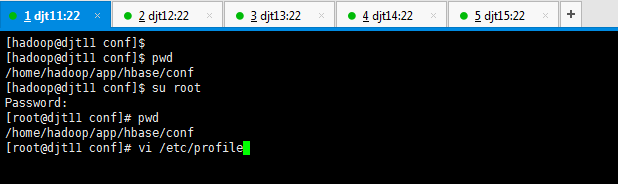

[hadoop@djt11 conf]$ pwd

[hadoop@djt11 conf]$ su root

[root@djt11 conf]# pwd

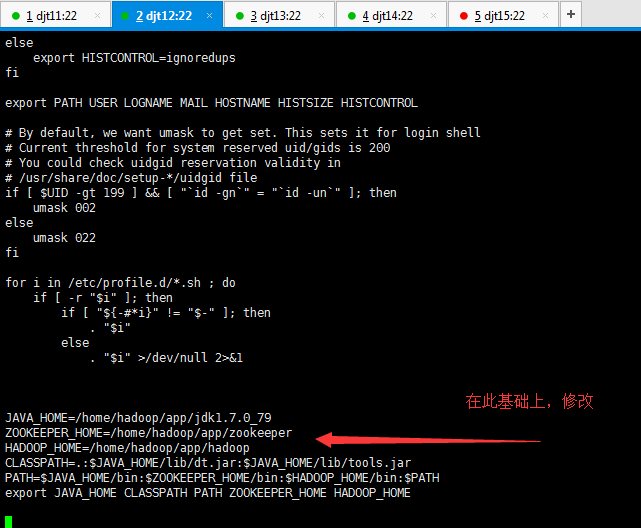

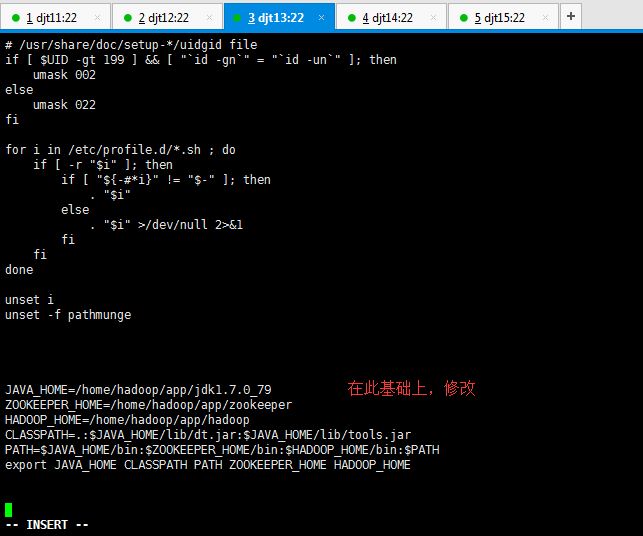

[root@djt11 conf]# vi /etc/profile

JAVA_HOME=/home/hadoop/app/jdk1.7.0_79

ZOOKEEPER_HOME=/home/hadoop/app/zookeeper

HADOOP_HOME=/home/hadoop/app/hadoop

HIVE_HOME=/home/hadoop/app/hive

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

PATH=$JAVA_HOME/bin:$ZOOKEEPER_HOME/bin:$HADOOP_HOME/bin:$HIVE_HOME/bin:/home/hadoop/tools:$PATH

export JAVA_HOME CLASSPATH PATH ZOOKEEPER_HOME HADOOP_HOME HIVE_HOME

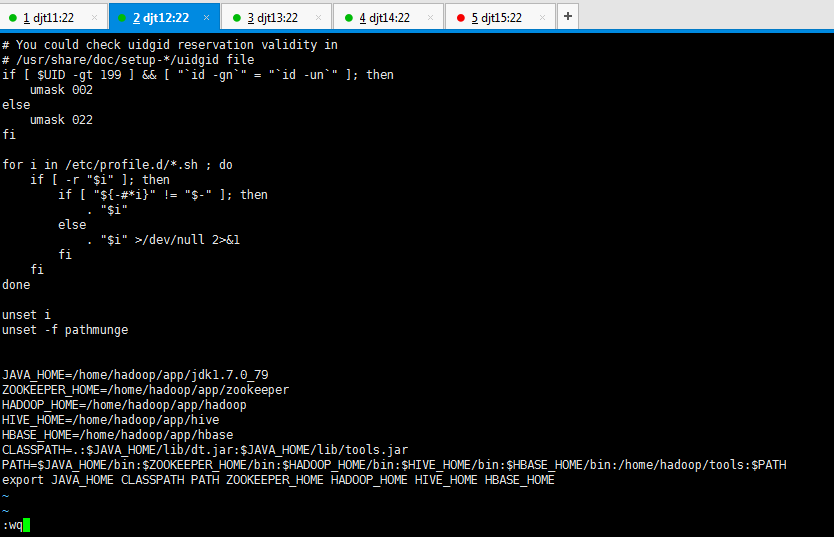

JAVA_HOME=/home/hadoop/app/jdk1.7.0_79

ZOOKEEPER_HOME=/home/hadoop/app/zookeeper

HADOOP_HOME=/home/hadoop/app/hadoop

HIVE_HOME=/home/hadoop/app/hive

HBASE_HOME=/home/hadoop/app/hbase

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

PATH=$JAVA_HOME/bin:$ZOOKEEPER_HOME/bin:$HADOOP_HOME/bin:$HIVE_HOME/bin:$HBASE_HOME/bin:/home/hadoop/tools:$PATH

export JAVA_HOME CLASSPATH PATH ZOOKEEPER_HOME HADOOP_HOME HIVE_HOME HBASE_HOME

[root@djt11 conf]# source /etc/profile

[root@djt11 conf]# su hadoop

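This script (deploy.sh, used below) was written and tested earlier, when we set up the 5-node Hadoop cluster. Here we only look at it again as a refresher and leave it unchanged.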

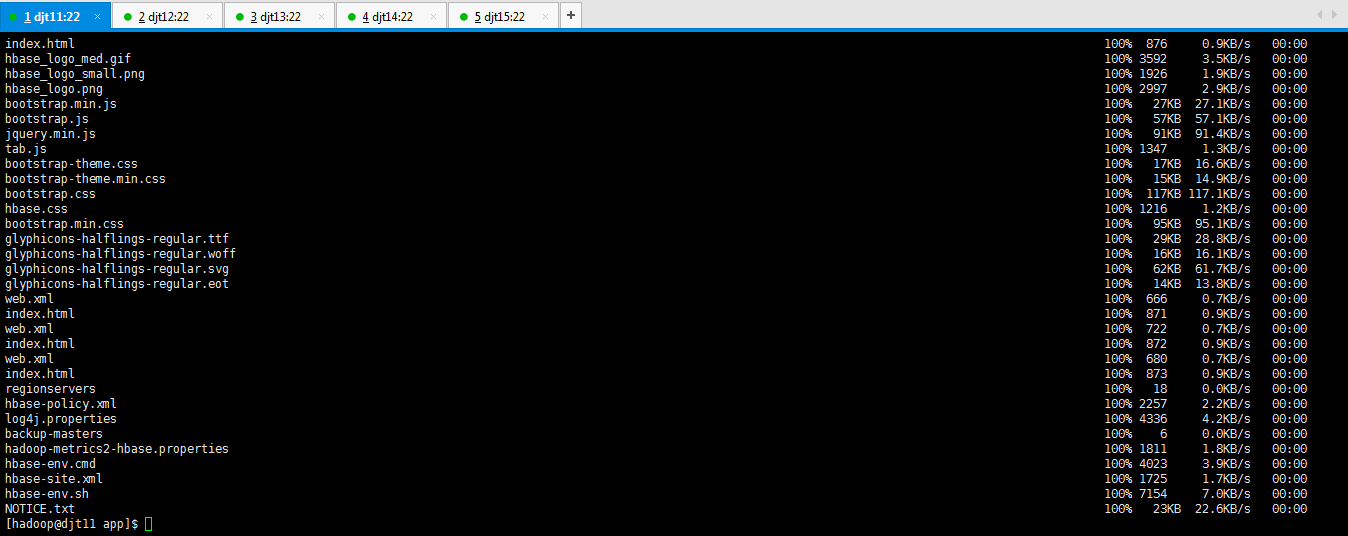

Distribute the hbase directory from djt11 to the slave group, that is, djt12, djt13, djt14, and djt15

[hadoop@djt11 tools]$ pwd

[hadoop@djt11 tools]$ cd /home/hadoop/app/

[hadoop@djt11 app]$ ls

[hadoop@djt11 app]$ deploy.sh hbase /home/hadoop/app/ slave
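The body of deploy.sh is not reproduced in this post. For readers without it, the sketch below shows one way to plan the same distribution with plain `scp`. It is not the author's script: the function name `plan_distribution` is hypothetical, and it only prints the commands rather than running them.

```shell
#!/usr/bin/env bash
# Dry run: print one scp command per target host. Nothing is copied.
# Arguments: source path, destination directory, then the host names.
plan_distribution() {
  local src="$1" dest="$2" host
  shift 2
  for host in "$@"; do
    echo "scp -r $src hadoop@$host:$dest"
  done
}

plan_distribution hbase /home/hadoop/app/ djt12 djt13 djt14 djt15
```

Review the printed commands first, then run them from /home/hadoop/app on djt11 (for example by piping the output to `sh`).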

Check the result of the distribution

This shows that the distribution succeeded!

Next, configure djt12, djt13, djt14, and djt15 the same way as djt11.

Configuring djt12:

[hadoop@djt12 hbase]$ pwd

[hadoop@djt12 hbase]$ ls

[hadoop@djt12 hbase]$ cd conf/

[hadoop@djt12 conf]$ pwd

[hadoop@djt12 conf]$ ls

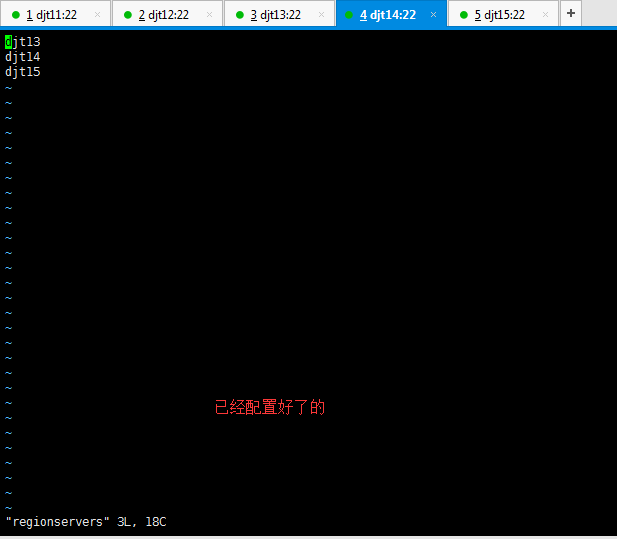

[hadoop@djt12 conf]$ vi regionservers

These are all already configured.

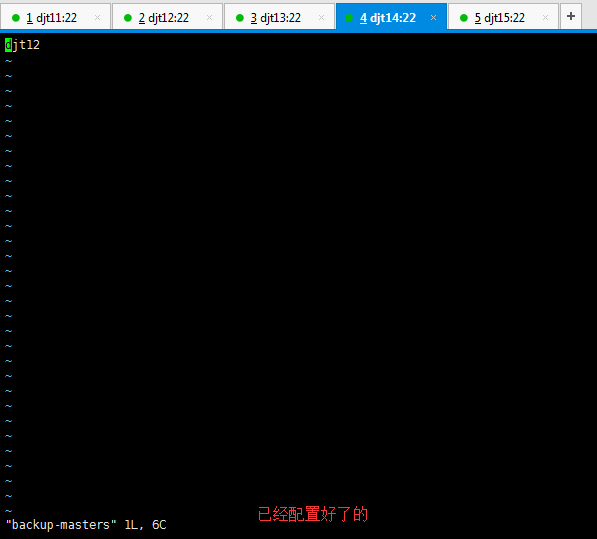

[hadoop@djt12 conf]$ vi backup-masters

These were also configured earlier.

[hadoop@djt12 conf]$ cp /home/hadoop/app/hadoop/etc/hadoop/core-site.xml ./

[hadoop@djt12 conf]$ cp /home/hadoop/app/hadoop/etc/hadoop/hdfs-site.xml ./

[hadoop@djt12 conf]$ vi hbase-site.xml

<configuration>

<property>

<name>hbase.zookeeper.quorum</name>

<value>djt11,djt12,djt13,djt14,djt15</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/home/hadoop/data/zookeeper/zkdata</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>hdfs://cluster1/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.master</name>

<value>hdfs://djt11:60000</value>

</property>

</configuration>

[hadoop@djt12 conf]$ vi hbase-env.sh

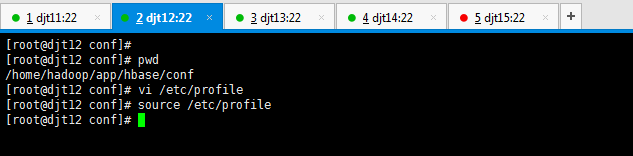

[hadoop@djt12 conf]$ pwd

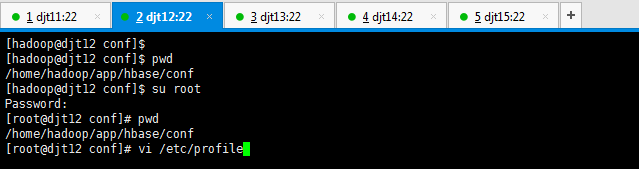

[hadoop@djt12 conf]$ su root

[root@djt12 conf]# pwd

[root@djt12 conf]# vi /etc/profile

[root@djt12 conf]# cd ..

[root@djt12 hbase]# pwd

[root@djt12 hbase]# su hadoop

[hadoop@djt12 hbase]$ pwd

[hadoop@djt12 hbase]$ ls

[hadoop@djt12 hbase]$

Configuring djt13

[hadoop@djt13 app]$ pwd

[hadoop@djt13 app]$ ls

[hadoop@djt13 app]$ cd hbase/

[hadoop@djt13 hbase]$ ls

[hadoop@djt13 hbase]$ cd conf/

[hadoop@djt13 conf]$ ls

[hadoop@djt13 conf]$ vi regionservers

[hadoop@djt13 conf]$ vi backup-masters

[hadoop@djt13 conf]$ cp /home/hadoop/app/hadoop/etc/hadoop/core-site.xml ./

[hadoop@djt13 conf]$ cp /home/hadoop/app/hadoop/etc/hadoop/hdfs-site.xml ./

[hadoop@djt13 conf]$ vi hbase-site.xml

<configuration>

<property>

<name>hbase.zookeeper.quorum</name>

<value>djt11,djt12,djt13,djt14,djt15</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/home/hadoop/data/zookeeper/zkdata</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>hdfs://cluster1/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.master</name>

<value>hdfs://cluster1:60000</value>

</property>

<!-- Note: my screenshot is wrong here! Because high availability is enabled, the value is cluster1 rather than djt11 alone. cluster1 covers both djt11 and djt12. -->

</configuration>

[hadoop@djt13 conf]$ pwd

[hadoop@djt13 conf]$ su root

[root@djt13 conf]# pwd

[root@djt13 conf]# vi /etc/profile

JAVA_HOME=/home/hadoop/app/jdk1.7.0_79

ZOOKEEPER_HOME=/home/hadoop/app/zookeeper

HADOOP_HOME=/home/hadoop/app/hadoop

HIVE_HOME=/home/hadoop/app/hive

HBASE_HOME=/home/hadoop/app/hbase

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

PATH=$JAVA_HOME/bin:$ZOOKEEPER_HOME/bin:$HADOOP_HOME/bin:$HIVE_HOME/bin:$HBASE_HOME/bin:/home/hadoop/tools:$PATH

export JAVA_HOME CLASSPATH PATH ZOOKEEPER_HOME HADOOP_HOME HIVE_HOME HBASE_HOME

[root@djt13 conf]# source /etc/profile

[root@djt13 conf]# cd ..

[root@djt13 hbase]# pwd

[root@djt13 hbase]# su hadoop

[hadoop@djt13 hbase]$ pwd

[hadoop@djt13 hbase]$ ls

[hadoop@djt13 hbase]$

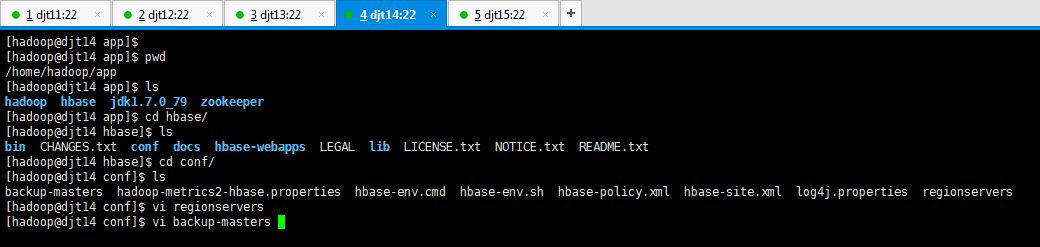

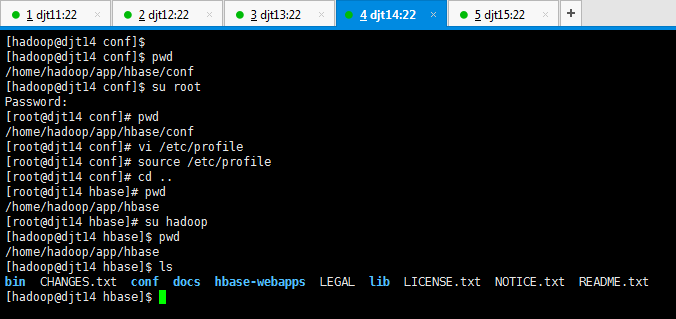

Configuring djt14

[hadoop@djt14 app]$ pwd

[hadoop@djt14 app]$ ls

[hadoop@djt14 app]$ cd hbase/

[hadoop@djt14 hbase]$ ls

[hadoop@djt14 hbase]$ cd conf/

[hadoop@djt14 conf]$ ls

[hadoop@djt14 conf]$ vi regionservers

[hadoop@djt14 conf]$ vi backup-masters

[hadoop@djt14 conf]$ cp /home/hadoop/app/hadoop/etc/hadoop/core-site.xml ./

[hadoop@djt14 conf]$ cp /home/hadoop/app/hadoop/etc/hadoop/hdfs-site.xml ./

[hadoop@djt14 conf]$ vi hbase-site.xml

<configuration>

<property>

<name>hbase.zookeeper.quorum</name>

<value>djt11,djt12,djt13,djt14,djt15</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/home/hadoop/data/zookeeper/zkdata</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>hdfs://cluster1/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.master</name>

<value>hdfs://djt11:60000</value>

</property>

</configuration>

[hadoop@djt14 conf]$ vi hbase-env.sh

[hadoop@djt14 conf]$ pwd

[hadoop@djt14 conf]$ su root

[root@djt14 conf]# pwd

[root@djt14 conf]# vi /etc/profile

[root@djt14 conf]# source /etc/profile

[root@djt14 conf]# cd ..

[root@djt14 hbase]# pwd

[root@djt14 hbase]# su hadoop

[hadoop@djt14 hbase]$ pwd

[hadoop@djt14 hbase]$ ls

[hadoop@djt14 hbase]$

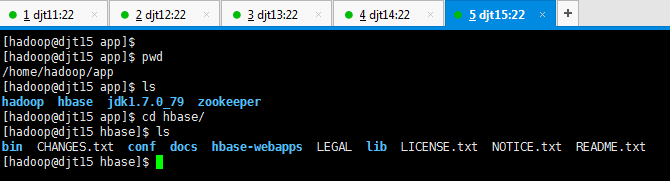

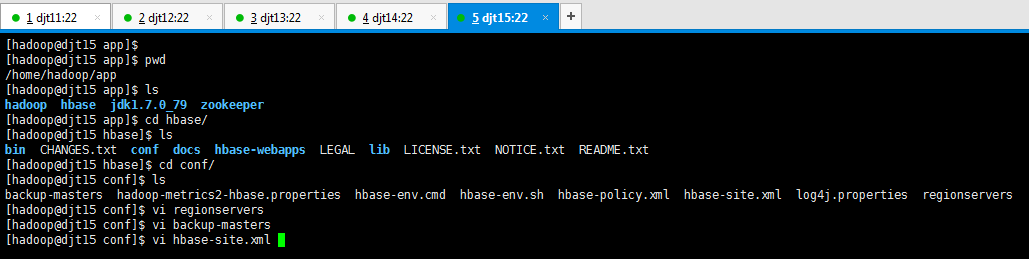

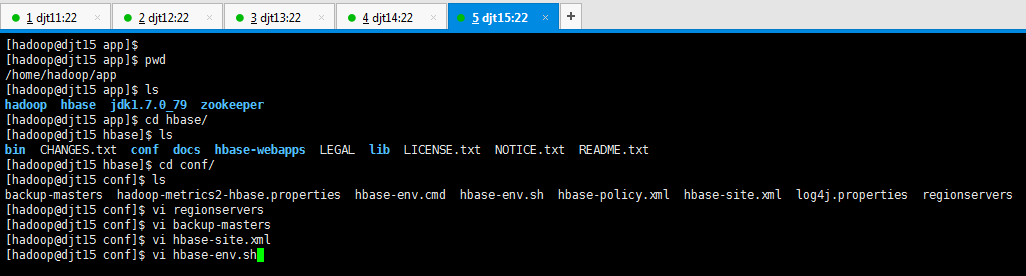

Configuring djt15

[hadoop@djt15 app]$ pwd

[hadoop@djt15 app]$ ls

[hadoop@djt15 app]$ cd hbase/

[hadoop@djt15 hbase]$ ls

[hadoop@djt15 hbase]$ cd conf/

[hadoop@djt15 conf]$ ls

[hadoop@djt15 conf]$ vi regionservers

[hadoop@djt15 conf]$ vi backup-masters

[hadoop@djt15 conf]$ cp /home/hadoop/app/hadoop/etc/hadoop/core-site.xml ./

[hadoop@djt15 conf]$ cp /home/hadoop/app/hadoop/etc/hadoop/hdfs-site.xml ./

[hadoop@djt15 conf]$ vi hbase-site.xml

<configuration>

<property>

<name>hbase.zookeeper.quorum</name>

<value>djt11,djt12,djt13,djt14,djt15</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/home/hadoop/data/zookeeper/zkdata</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>hdfs://cluster1/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.master</name>

<value>hdfs://djt11:60000</value>

</property>

</configuration>

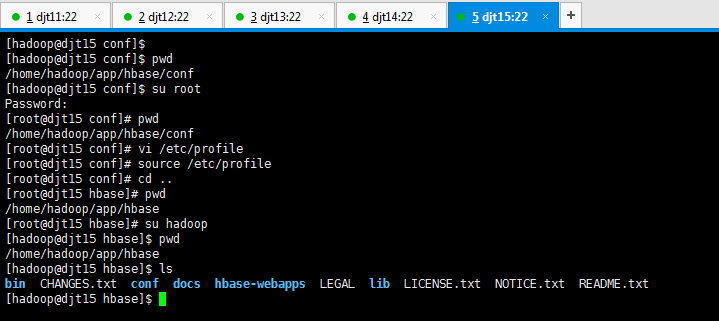

[hadoop@djt15 conf]$ vi hbase-env.sh

[hadoop@djt15 conf]$ pwd

[hadoop@djt15 conf]$ su root

[root@djt15 conf]# pwd

[root@djt15 conf]# vi /etc/profile

[root@djt15 conf]# source /etc/profile

[root@djt15 conf]# cd ..

[root@djt15 hbase]# pwd

[root@djt15 hbase]# su hadoop

[hadoop@djt15 hbase]$ pwd

[hadoop@djt15 hbase]$ ls

[hadoop@djt15 hbase]$

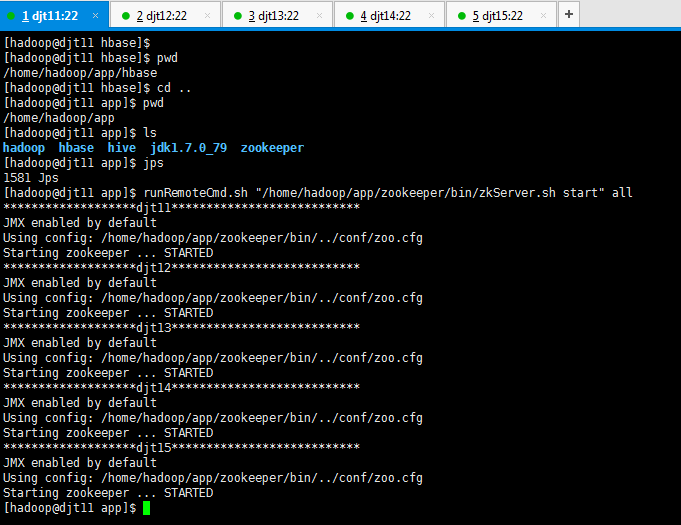

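Starting HBase in distributed mode (3 or 5 nodes)

Here, only sbin/start-dfs.sh needs to be run.

sbin/start-all.sh is not needed (it runs both sbin/start-dfs.sh and sbin/start-yarn.sh).

ZooKeeper has to be running because HBase is built on top of it for coordination. The data itself is stored in HDFS.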

[hadoop@djt11 app]$ jps

[hadoop@djt11 app]$ ls

[hadoop@djt11 app]$ cd hadoop/

[hadoop@djt11 hadoop]$ ls

[hadoop@djt11 hadoop]$ sbin/start-dfs.sh

[hadoop@djt11 hadoop]$ jps

[hadoop@djt12 hadoop]$ jps

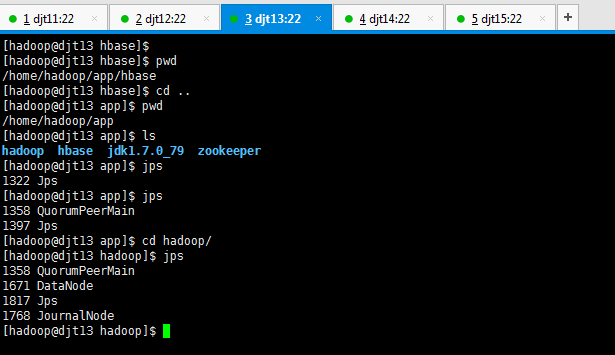

[hadoop@djt13 app]$ cd hadoop/

[hadoop@djt13 hadoop]$ jps

[hadoop@djt14 app]$ cd hadoop/

[hadoop@djt14 hadoop]$ pwd

[hadoop@djt14 hadoop]$ jps

[hadoop@djt15 app]$ cd hadoop/

[hadoop@djt15 hadoop]$ pwd

[hadoop@djt15 hadoop]$ jps

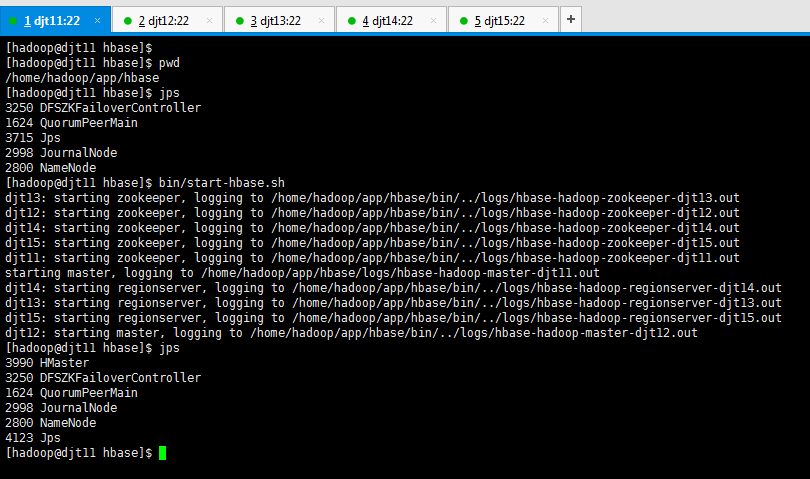

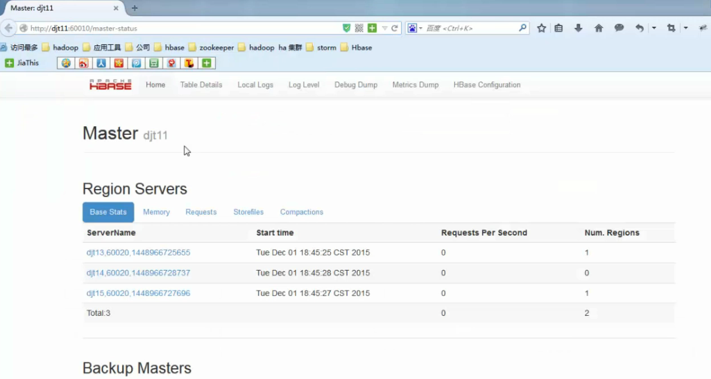

[hadoop@djt11 hbase]$ bin/start-hbase.sh

[hadoop@djt11 hbase]$ jps

[hadoop@djt12 hbase]$ cd ..

[hadoop@djt12 app]$ ls

[hadoop@djt12 app]$ cd hbase/

[hadoop@djt12 hbase]$ pwd

[hadoop@djt12 hbase]$ jps

[hadoop@djt13 hbase]$ cd ..

[hadoop@djt13 app]$ ls

[hadoop@djt13 app]$ cd hbase/

[hadoop@djt13 hbase]$ pwd

[hadoop@djt13 hbase]$ jps

[hadoop@djt14 hbase]$ cd ..

[hadoop@djt14 app]$ ls

[hadoop@djt14 app]$ cd hbase/

[hadoop@djt14 hbase]$ pwd

[hadoop@djt14 hbase]$ jps

[hadoop@djt15 hbase]$ cd ..

[hadoop@djt15 app]$ ls

[hadoop@djt15 app]$ cd hbase/

[hadoop@djt15 hbase]$ pwd

[hadoop@djt15 hbase]$ jps

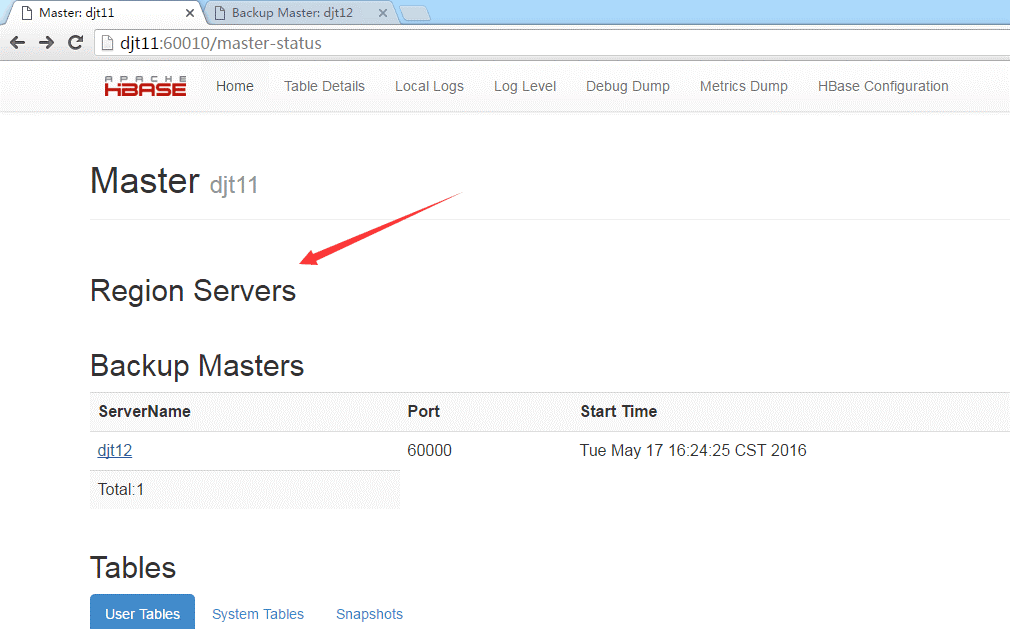

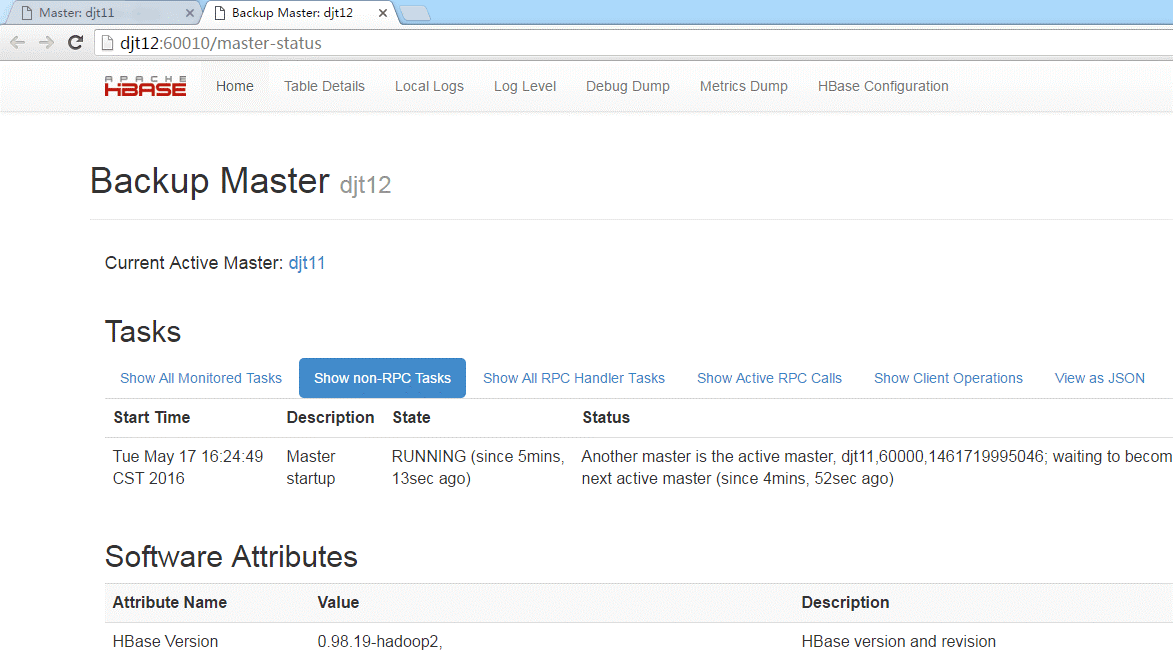

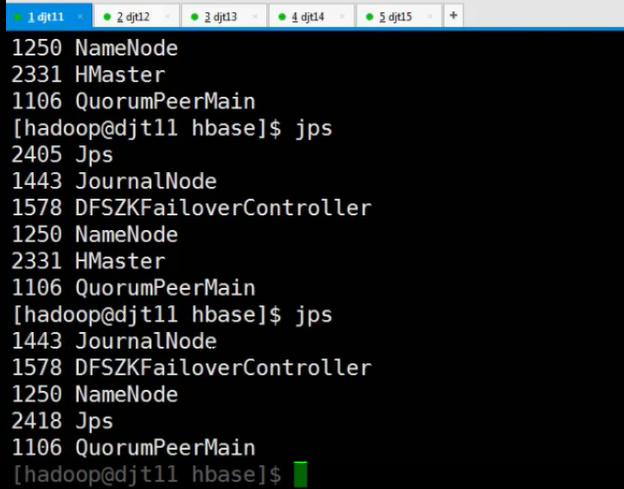

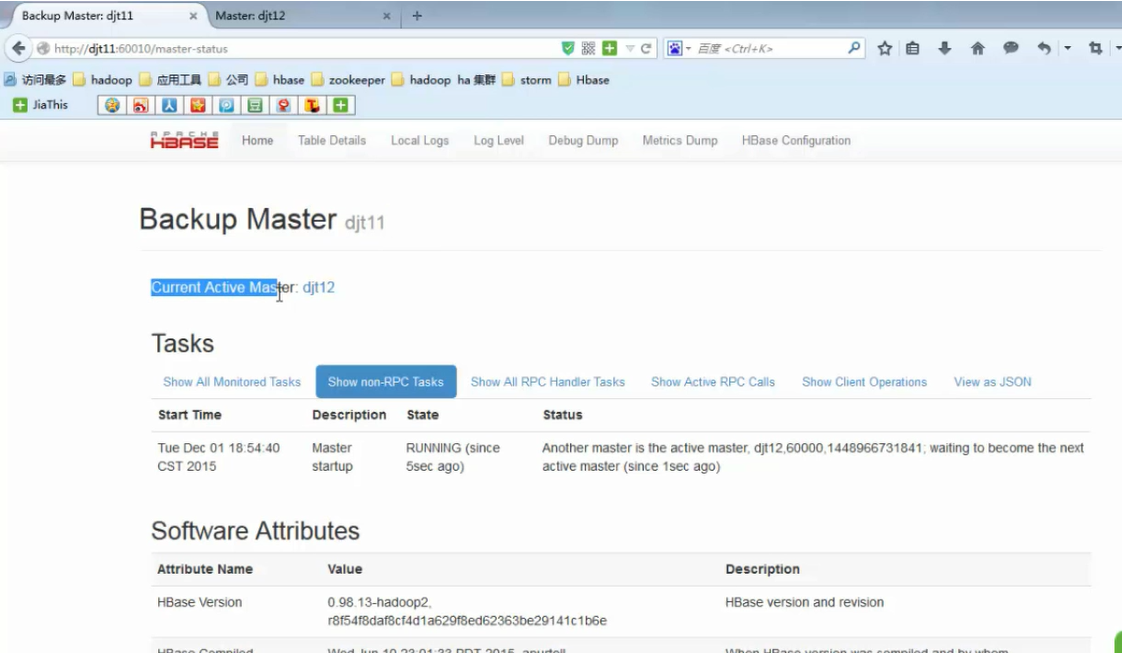

那么,djt11的master被杀死掉,则访问不到了。

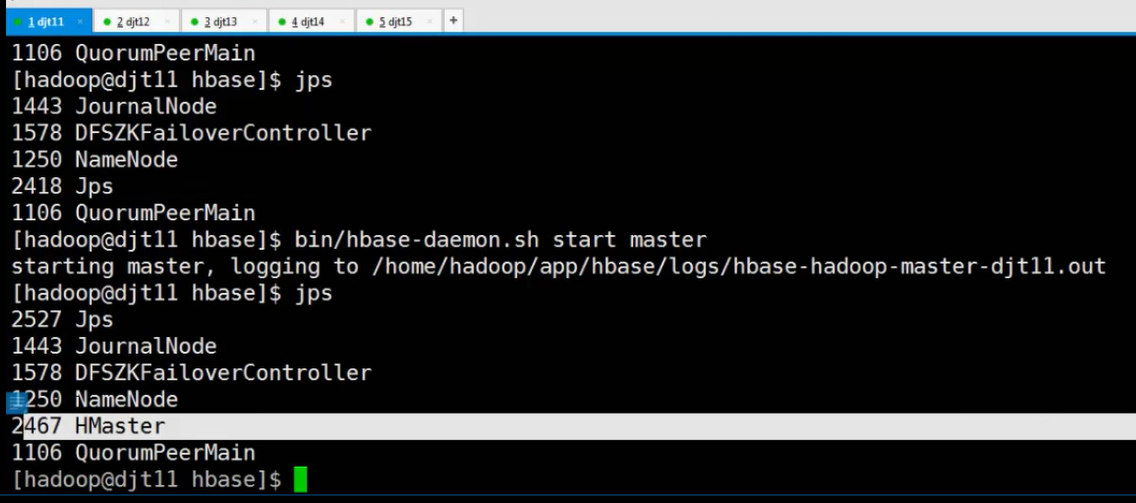

然后,我们再把djt11的master启起来,

则,djt11由不可访问,变成备用的master了。djt12依然还是主用的master

成功!
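The failover test above can be reproduced from the command line. Here is a minimal sketch that finds the HMaster process in `jps` output. The function name `hmaster_pid` is hypothetical, while `bin/hbase-daemon.sh start master` is the standard HBase script for starting a single master.

```shell
#!/usr/bin/env bash
# Extract the PID of the HMaster process from `jps` output on stdin.
hmaster_pid() {
  awk '$2 == "HMaster" { print $1 }'
}

# On the active master (djt11 in this post), simulate a crash:
#   kill -9 "$(jps | hmaster_pid)"
# Then bring it back from /home/hadoop/app/hbase; it rejoins as the backup:
#   bin/hbase-daemon.sh start master
```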
