2019-ICCV-PDARTS-Progressive Differentiable Architecture Search Bridging the Depth Gap Between Search and Evaluation-论文阅读

P-DARTS

2019-ICCV-Progressive Differentiable Architecture Search Bridging the Depth Gap Between Search and Evaluation

- Tongji University && Huawei

- GitHub: 200+ stars

- Citation:49

Motivation

Question:

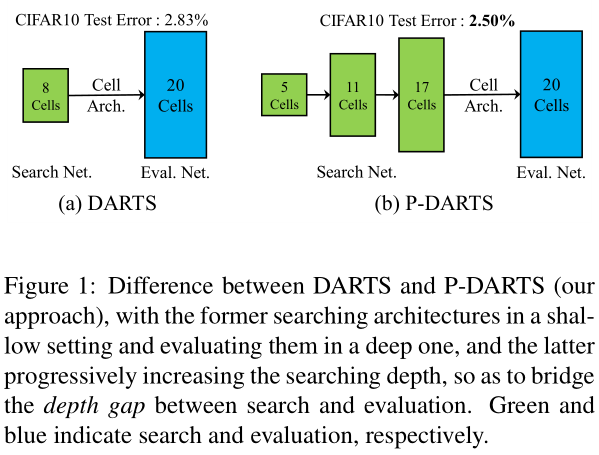

DARTS has to search the architecture in a shallow network while evaluate in a deeper one.

DARTS在浅层网络上搜索,在深层网络上评估(cifar search in 8-depth, eval in 20-depth)。

This brings an issue named the depth gap (see Figure 1(a)), which means that the search stage finds some operations that work well in a shallow architecture, but the evaluation stage actually prefers other operations that fit a deep architecture better.

Such gap hinders these approaches in their application to more complex visual recognition tasks.

Contribution

propose Progressive DARTS (P-DARTS), a novel and efficient algorithm to bridge the depth gap.

Bring two questions:

Q1: While a deeper architecture requires heavier computational overhead

we propose search space approximation which, as the depth increases, reduces the number of candidates (operations) according to their scores in the elapsed search process.

Q2:Another issue, lack of stability, emerges with searching over a deep architecture, in which the algorithm can be biased heavily towards skip-connect as it often leads to rapidest error decay during optimization, but, actually, a better option often resides in learnable operations such as convolution.

we propose search space regularization, which (i) introduces operation-level Dropout [25] to alleviate the dominance of skip-connect during training, and (ii) controls the appearance of skip-connect during evaluation.

Method

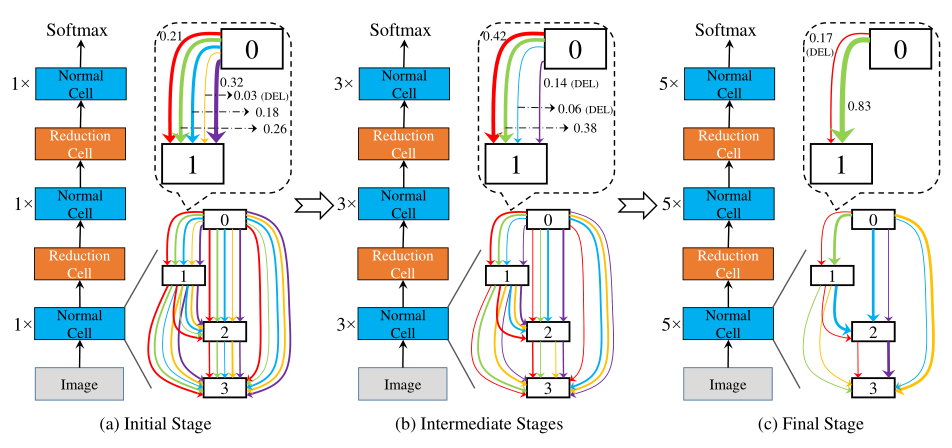

search space approximation

在初始阶段,搜索网络相对较浅,但是cell中每条边上的候选操作最多(所有操作)。在阶段 \(S_{k-1}\)中,根据学习到的网络结构参数(权值)来排序并筛选出权值(重要性)较高的 \(O_k\) 个操作,并由此搭建一个拥有 \(L_k\) 个cell的搜索网络用于下一阶段的搜索,其中, \(L_k > L_{k-1} , O_k < O_{k-1}\) .

这个过程可以渐进而持续地增加搜索网络的深度,直到足够接近测试网络深度。

search space regularization

we observe that information prefers to flow through skip-connect instead of convolution or pooling, which is arguably due to the reason that skip-connect often leads to rapid gradient descent.

实验结果表明在本文采用的框架下,信息往往倾向于通过skip-connect流动,而不是卷积。这是因为skip-connect通常处在梯度下降最速的路径上。

the search process tends to generate architectures with many skip-connect operations, which limits the number of learnable parameters and thus produces unsatisfying performance at the evaluation stage.

在这种情况下,最终搜索得到的结构往往包含大量的skip-connect操作,可训练参数较少,从而使得性能下降。

We address this problem by search space regularization, which consists of two parts.

First, we insert operation-level Dropout [25] after each skip-connect operation, so as to partially ‘cut off’ the straightforward path through skip-connect, and facilitate the algorithm to explore other operations.

作者采用搜索空间正则来解决这个问题。一方面,作者在skip-connect操作后添加Operations层面的随机Dropout来部分切断skip-connect的连接,迫使算法去探索其他的操作。

However, if we constantly block the path through skip-connect, the algorithm will drop them by assigning low weights to them, which is harmful to the final performance.

然而,持续地阻断这些路径的话会导致在最终生成结构的时候skip-connect操作仍然受到抑制,可能会影响最终性能。

we gradually decay the Dropout rate during the training process in each search stage, thus the straightforward path through skip-connect is blocked at the beginning and treated equally afterward when parameters of other operations are well learned, leaving the algorithm itself to make the decision.

因此,作者在训练的过程中逐渐地衰减Dropout的概率,在训练初期施加较强的Dropout,在训练后期将其衰减到很轻微的程度,使其不影响最终的网络结构参数的学习。

Despite the use of Dropout, we still observe that skip-connect, as a special kind of operation, has a significant impact on recognition accuracy at the evaluation stage.

另一方面,尽管使用了Operations层面的Dropout,作者依然观察到了skip-connect操作对实验性能的强烈影响。

This motivates us to design the second regularization rule, architecture refinement, which simply controls the number of preserved skip-connects, after the final search stage, to be a constant M.

因此,作者提出第二个搜索空间正则方法,即在最终生成的网络结构中,保留固定数量的skip-connect操作。具体的,作者根据最终阶段的结构参数,只保留权值最大的M个skip-connect操作,这一正则方法保证了搜索过程的稳定性。在本文中, M=2 .

We emphasize that the second regularization technique must be applied on top of the first one, otherwise, in the situations without operation-level Dropout, the search process is producing low-qualityarchitectureweights, basedon which we could not build up a powerful architecture even with a fixed number of skip-connects.

需要强调的是,第二种搜索空间正则是建立在第一种搜索空间正则的基础上的。在没有执行第一种正则的情况下,即使执行第二种正则,算法依旧会生成低质量的网络结构。

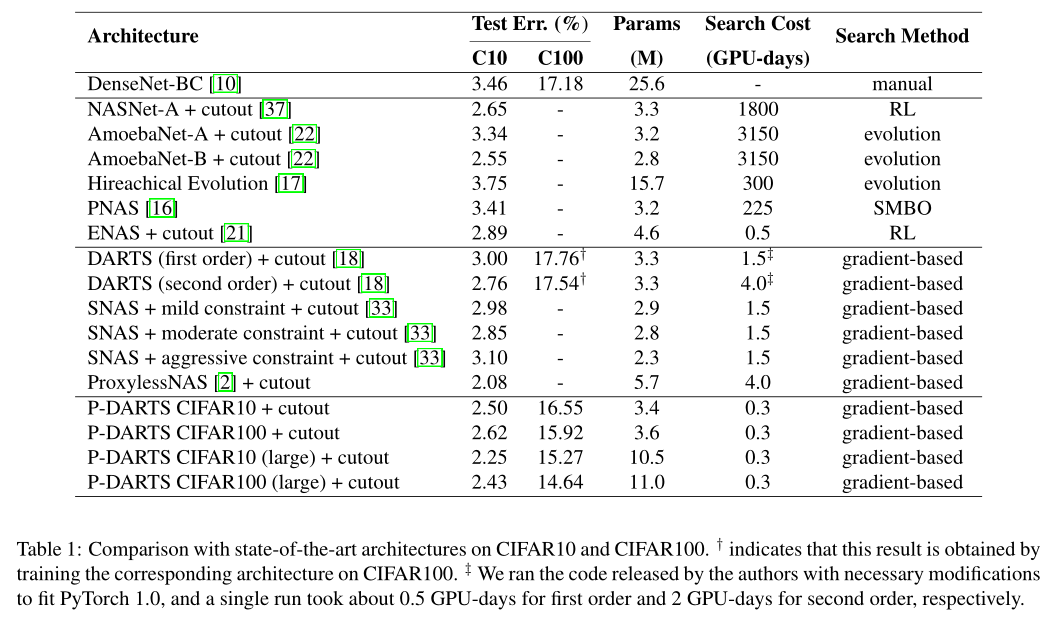

Experiments

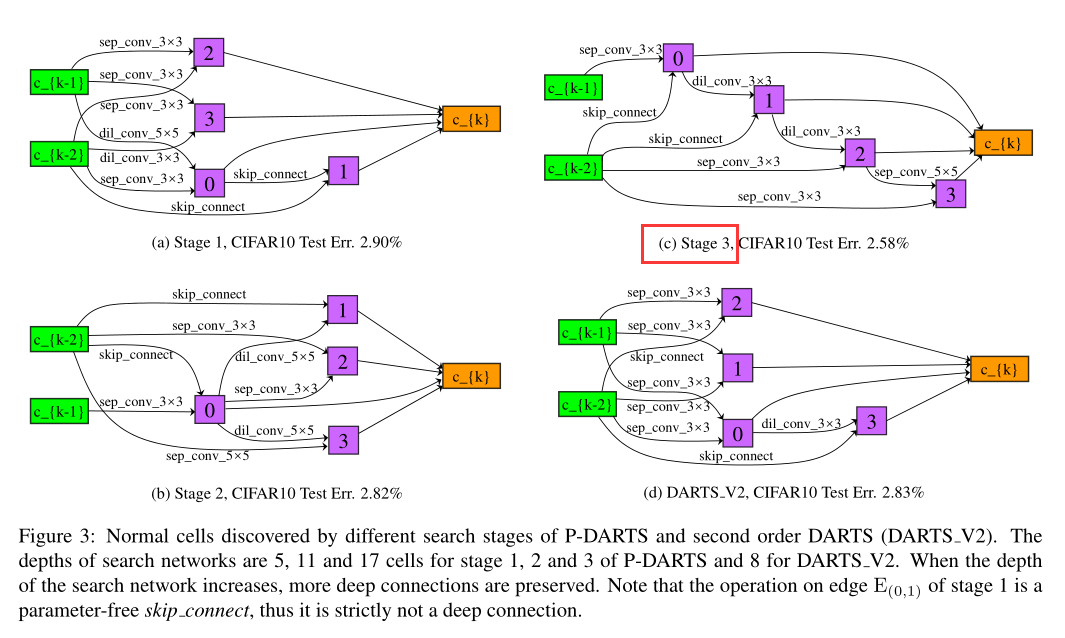

Cell arch in different Search Stage

cifar10

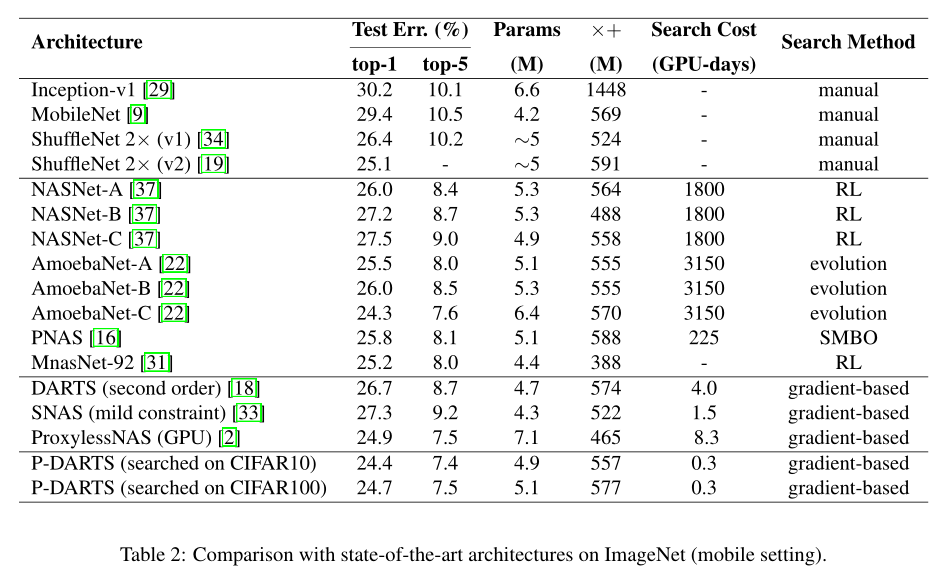

ImageNet

·

·

Conclusion

we propose a progressive version of differentiable architecture search to bridge the depth gap between search and evaluation scenarios.

The core idea is to gradually increase the depth of candidate architectures during the search process.

- 2Q: computational overhead and instability

Search space approximate and Search space regularize

Our research defends the importance of depth in differentiable architecture search, depth is still the dominant factor in exploring the architecture space.

2019-ICCV-PDARTS-Progressive Differentiable Architecture Search Bridging the Depth Gap Between Search and Evaluation-论文阅读的更多相关文章

- 论文笔记:Progressive Differentiable Architecture Search:Bridging the Depth Gap between Search and Evaluation

Progressive Differentiable Architecture Search:Bridging the Depth Gap between Search and Evaluation ...

- 论文笔记:DARTS: Differentiable Architecture Search

DARTS: Differentiable Architecture Search 2019-03-19 10:04:26accepted by ICLR 2019 Paper:https://arx ...

- 论文笔记:Progressive Neural Architecture Search

Progressive Neural Architecture Search 2019-03-18 20:28:13 Paper:http://openaccess.thecvf.com/conten ...

- (转)Illustrated: Efficient Neural Architecture Search ---Guide on macro and micro search strategies in ENAS

Illustrated: Efficient Neural Architecture Search --- Guide on macro and micro search strategies in ...

- 2019-ICLR-DARTS: Differentiable Architecture Search-论文阅读

DARTS 2019-ICLR-DARTS Differentiable Architecture Search Hanxiao Liu.Karen Simonyan.Yiming Yang GitH ...

- 2019 ICCV、CVPR、ICLR之视频预测读书笔记

2019 ICCV.CVPR.ICLR之视频预测读书笔记 作者 | 文永亮 学校 | 哈尔滨工业大学(深圳) 研究方向 | 视频预测.时空序列预测 ICCV 2019 CVP github地址:htt ...

- 微软的一篇ctr预估的论文:Web-Scale Bayesian Click-Through Rate Prediction for Sponsored Search Advertising in Microsoft’s Bing Search Engine。

周末看了一下这篇论文,觉得挺难的,后来想想是ICML的论文,也就明白为什么了. 先简单记录下来,以后会继续添加内容. 主要参考了论文Web-Scale Bayesian Click-Through R ...

- LeetCode 33 Search in Rotated Sorted Array [binary search] <c++>

LeetCode 33 Search in Rotated Sorted Array [binary search] <c++> 给出排序好的一维无重复元素的数组,随机取一个位置断开,把前 ...

- 33. Search in Rotated Sorted Array & 81. Search in Rotated Sorted Array II

33. Search in Rotated Sorted Array Suppose an array sorted in ascending order is rotated at some piv ...

随机推荐

- codingame

无聊登了一下condingame,通知说有本周谜题,正好刚撸完bfs,想尝试下. 题目链接:https://www.codingame.com/ide/17558874463b39b9ce6d4207 ...

- Mysql 常用函数(19)- mod 函数

Mysql常用函数的汇总,可看下面系列文章 https://www.cnblogs.com/poloyy/category/1765164.html mod 的作用 求余数,和%一样 mod的语法格式 ...

- Windows 系统如何安装 Docker

1 docker 是基于 unix 开发的系列工具,所以在 windows 上安装 docker 非常容易出现环境不兼容的问题. 如果 windows 版本是 pro,一般是可以直接安装 docker ...

- 【雕爷学编程】Arduino动手做(44)---类比霍尔传感器

37款传感器与模块的提法,在网络上广泛流传,其实Arduino能够兼容的传感器模块肯定是不止37种的.鉴于本人手头积累了一些传感器和模块,依照实践出真知(一定要动手做)的理念,以学习和交流为目的,这里 ...

- 关于react的一些总结

之前为了学习redux买了一本<深入浅出react和redux>,只看了redux部分.最近重新一遍,还是很有收获,这里结合阅读文档时的一些理解,记下一些初学者可能不太注意的东西. 原则: ...

- JS的函数和对象一

1.递归 在函数的内部调用自身,默认是一个无限循环. 2.匿名函数 没有名称的函数 function(){ } (1)创建函数 函数声明 function fn1(){ } 函数表达式 va ...

- django 中CBV和FBV 路由写法的区别

使用视图函数时, FBV: django完成URL解析之后,会直接把request对象以及URL解析器捕获的参数(比如re_path中正则表达捕获的位置参数或关键字参数)丢给视图函数 CBV: 这些参 ...

- Spring IOC 容器 简介

Spring 容器是 Spring 框架的核心.容器将创建对象,把它们连接在一起,配置它们,并管理他们的整个生命周期从创建到销毁. Spring 容器使用依赖注入(DI)来管理组成一个应用程序的组件. ...

- A == B ?(hdu2054)

输入格式:直接循环,同时输入两个不带空格未知长度的字符串. 思考:不带空格未知长度且同时输入,用两个char s[maxsize]定义两个字符数组,再用scanf_s()函数同时输入两个字符串. 注意 ...

- JUC(4)---java线程池原理及源码分析

线程池,既然是个池子里面肯定就装很多线程. 如果并发的请求数量非常多,但每个线程执行的时间很短,这样就会频繁的创建和销毁 线程,如此一来会大大降低系统的效率.可能出现服务器在为每个请求创建新线程和销毁 ...