Eigenvectors (Eigenvalues_and_eigenvectors#Graphs) and Linear Transformations

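Summary:

1. A linear transformation preserves the operations of addition and scalar multiplication.

2. An eigenvector keeps its direction when the linear transformation is applied to it.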

https://en.wikipedia.org/wiki/Linear_map

Examples of linear transformation matrices

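In two-dimensional space $\mathbb{R}^2$, linear maps are described by 2 × 2 real matrices. These are some examples:

- rotation

  - by 90 degrees counterclockwise: $\begin{pmatrix} 0 & -1 \\ 1 & 0 \end{pmatrix}$

  - by an angle θ counterclockwise: $\begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix}$

- reflection

  - about the x axis: $\begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}$

  - about the y axis: $\begin{pmatrix} -1 & 0 \\ 0 & 1 \end{pmatrix}$

- scaling by 2 in all directions: $\begin{pmatrix} 2 & 0 \\ 0 & 2 \end{pmatrix}$

- horizontal shear mapping: $\begin{pmatrix} 1 & m \\ 0 & 1 \end{pmatrix}$

- squeeze mapping: $\begin{pmatrix} k & 0 \\ 0 & 1/k \end{pmatrix}$

- projection onto the y axis: $\begin{pmatrix} 0 & 0 \\ 0 & 1 \end{pmatrix}$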
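The examples above can be tried out on a concrete vector. The following is a minimal sketch in plain Python, not taken from the quoted article: the helper names (`apply`, `rotation`, `shear`, `squeeze`) and the test vector `(1, 2)` are assumptions made for illustration, and matrices are stored as nested tuples.

```python
import math

def apply(matrix, vector):
    """Multiply a 2x2 matrix (nested tuples) by a 2-vector."""
    (a, b), (c, d) = matrix
    x, y = vector
    return (a * x + b * y, c * x + d * y)

def rotation(theta):
    """Counterclockwise rotation by theta radians."""
    return ((math.cos(theta), -math.sin(theta)),
            (math.sin(theta), math.cos(theta)))

def shear(m):
    """Horizontal shear with shear factor m."""
    return ((1, m), (0, 1))

def squeeze(k):
    """Squeeze mapping: stretch x by k, shrink y by 1/k."""
    return ((k, 0), (0, 1 / k))

ROTATE_90 = ((0, -1), (1, 0))   # 90 degrees counterclockwise
REFLECT_X = ((1, 0), (0, -1))   # reflection about the x axis
REFLECT_Y = ((-1, 0), (0, 1))   # reflection about the y axis
SCALE_2 = ((2, 0), (0, 2))      # scaling by 2 in all directions
PROJECT_Y = ((0, 0), (0, 1))    # projection onto the y axis

v = (1, 2)
print(apply(ROTATE_90, v))   # (-2, 1)
print(apply(REFLECT_X, v))   # (1, -2)
print(apply(REFLECT_Y, v))   # (-1, 2)
print(apply(SCALE_2, v))     # (2, 4)
print(apply(shear(3), v))    # (7, 2)
print(apply(squeeze(2), v))  # (2, 1.0)
print(apply(PROJECT_Y, v))   # (0, 2)
```

`rotation(math.pi / 2)` agrees with `ROTATE_90` only up to floating-point rounding, so comparisons against it should use a tolerance.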

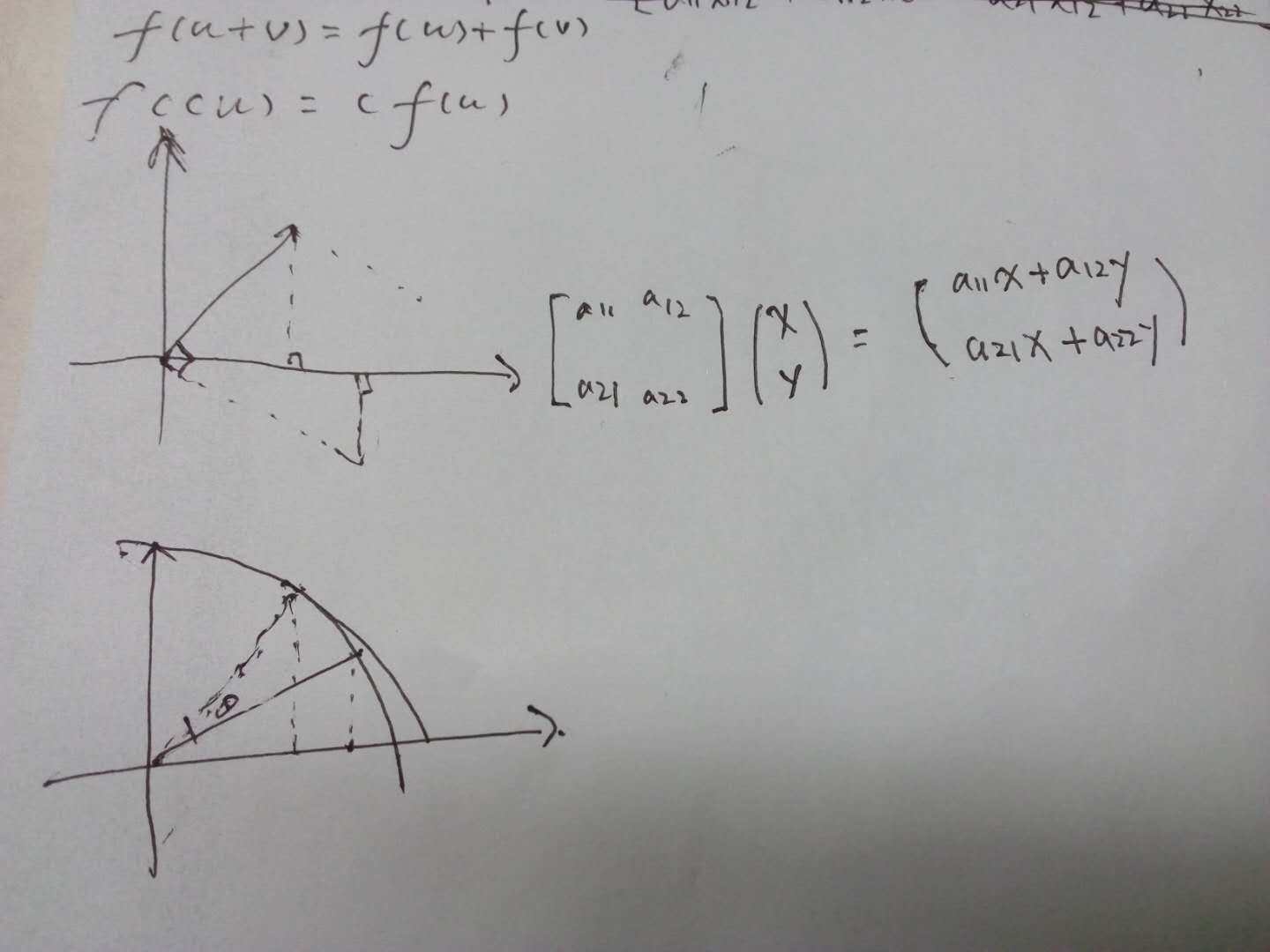

In mathematics, a linear map (also called a linear mapping, linear transformation or, in some contexts, linear function) is a mapping V → W between two modules (including vector spaces) that preserves (in the sense defined below) the operations of addition and scalar multiplication.

An important special case is when V = W, in which case the map is called a linear operator,[1] or an endomorphism of V. Sometimes the term linear function has the same meaning as linear map, while in analytic geometry it does not.

A linear map always maps linear subspaces onto linear subspaces (possibly of a lower dimension);[2] for instance it maps a plane through the origin to a plane, straight line or point. Linear maps can often be represented as matrices, and simple examples include rotation and reflection linear transformations.

In the language of abstract algebra, a linear map is a module homomorphism. In the language of category theory it is a morphism in the category of modules over a given ring.

Definition and first consequences

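Let $V$ and $W$ be vector spaces over the same field $K$. A function $f : V \to W$ is said to be a linear map if for any two vectors $\mathbf{u}, \mathbf{v} \in V$ and any scalar $c \in K$ the following two conditions are satisfied:

- $f(\mathbf{u} + \mathbf{v}) = f(\mathbf{u}) + f(\mathbf{v})$ (additivity / operation of addition)

- $f(c\mathbf{u}) = c f(\mathbf{u})$ (homogeneity of degree 1 / operation of scalar multiplication)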

Thus, a linear map is said to be operation preserving. In other words, it does not matter whether you apply the linear map before or after the operations of addition and scalar multiplication.
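To make "operation preserving" concrete, here is a small sketch that tests additivity and homogeneity on sample inputs. The function names (`is_linear_on`, `shear`, `shift`) and the sample vectors are hypothetical choices for illustration. Passing on a few samples does not prove that a map is linear, but a single failure is enough to show that it is not.

```python
def add(u, v):
    """Component-wise sum of two 2-vectors."""
    return (u[0] + v[0], u[1] + v[1])

def scale(c, v):
    """Scalar multiple of a 2-vector."""
    return (c * v[0], c * v[1])

def close(u, v, tol=1e-9):
    """True when two 2-vectors agree within a tolerance."""
    return abs(u[0] - v[0]) <= tol and abs(u[1] - v[1]) <= tol

def is_linear_on(f, u, v, c):
    """Check additivity and homogeneity of f on the given samples."""
    additive = close(f(add(u, v)), add(f(u), f(v)))
    homogeneous = close(f(scale(c, u)), scale(c, f(u)))
    return additive and homogeneous

def shear(v):
    """Horizontal shear with factor 3: a linear map."""
    return (v[0] + 3 * v[1], v[1])

def shift(v):
    """Translation by (1, 0): not linear, since it moves the origin."""
    return (v[0] + 1, v[1])

u, v, c = (1, 2), (3, -1), 2.5
print(is_linear_on(shear, u, v, c))  # True
print(is_linear_on(shift, u, v, c))  # False
```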

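This is equivalent to requiring the same for any linear combination of vectors, i.e. that for any vectors $\mathbf{x}_1, \ldots, \mathbf{x}_m \in V$ and scalars $a_1, \ldots, a_m \in K$, the following equality holds: $f(a_1\mathbf{x}_1 + \cdots + a_m\mathbf{x}_m) = a_1 f(\mathbf{x}_1) + \cdots + a_m f(\mathbf{x}_m)$.

Denoting the zero elements of the vector spaces $V$ and $W$ by $\mathbf{0}_V$ and $\mathbf{0}_W$ respectively, it follows that $f(\mathbf{0}_V) = \mathbf{0}_W$: setting $c = 0$ in the homogeneity condition gives $f(\mathbf{0}_V) = f(0\mathbf{v}) = 0 f(\mathbf{v}) = \mathbf{0}_W$.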

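Occasionally, $V$ and $W$ can be considered to be vector spaces over different fields. It is then necessary to specify which of these ground fields is used in the definition of "linear". For example, complex conjugation is an $\mathbb{R}$-linear map $\mathbb{C} \to \mathbb{C}$, but it is not $\mathbb{C}$-linear.

A linear map $V \to K$, with $K$ viewed as a one-dimensional vector space over itself, is called a linear functional.

These statements generalize to any left-module over a ring $R$ without modification, and to any right-module upon reversing the scalar multiplication.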

https://en.wikipedia.org/wiki/Eigenvalues_and_eigenvectors#Graphs


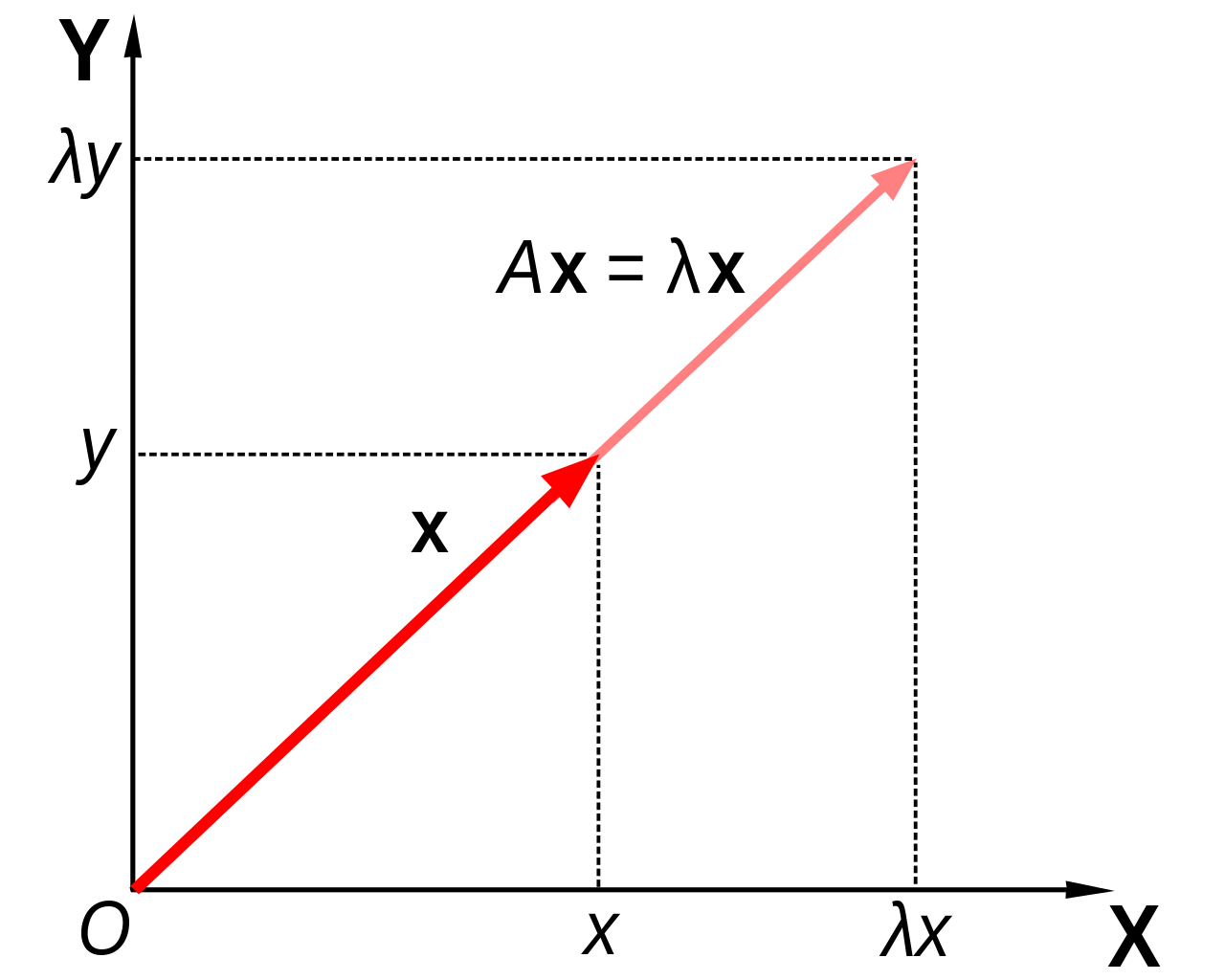

In linear algebra, an eigenvector or characteristic vector of a linear transformation is a non-zero vector that does not change its direction when that linear transformation is applied to it. More formally, if T is a linear transformation from a vector space V over a field F into itself and v is a vector in V that is not the zero vector, then v is an eigenvector of T if T(v) is a scalar multiple of v. This condition can be written as the equation

$T(\mathbf{v}) = \lambda \mathbf{v},$

where λ is a scalar in the field F, known as the eigenvalue, characteristic value, or characteristic root associated with the eigenvector v.

If the vector space V is finite-dimensional, then the linear transformation T can be represented as a square matrix A, and the vector v by a column vector, rendering the above mapping as a matrix multiplication on the left hand side and a scaling of the column vector on the right hand side in the equation

$A\mathbf{v} = \lambda \mathbf{v}.$

There is a correspondence between n by n square matrices and linear transformations from an n-dimensional vector space to itself. For this reason, it is equivalent to define eigenvalues and eigenvectors using either the language of matrices or the language of linear transformations.[1][2]
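One direction of this correspondence can be sketched in code: the matrix of a linear map on $\mathbb{R}^2$ has the images of the standard basis vectors as its columns. The names `matrix_of`, `apply` and `reflect_then_scale` below are hypothetical, and the example map is an assumption chosen for illustration.

```python
def matrix_of(f):
    """Build the 2x2 matrix of a linear map f on R^2.

    The columns are the images of the standard basis vectors
    e1 = (1, 0) and e2 = (0, 1).
    """
    c1 = f((1, 0))
    c2 = f((0, 1))
    return ((c1[0], c2[0]), (c1[1], c2[1]))

def apply(matrix, vector):
    """Multiply a 2x2 matrix (nested tuples) by a 2-vector."""
    (a, b), (c, d) = matrix
    x, y = vector
    return (a * x + b * y, c * x + d * y)

def reflect_then_scale(v):
    """Reflect about the x axis, then scale by 2 (a linear map)."""
    x, y = v
    return (2 * x, -2 * y)

A = matrix_of(reflect_then_scale)
print(A)                            # ((2, 0), (0, -2))
print(apply(A, (3, 5)))             # (6, -10)
print(reflect_then_scale((3, 5)))   # (6, -10)
```

Because the map is linear, multiplying by `A` and calling the function directly agree on every vector, not only on the basis vectors used to build `A`.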

Geometrically an eigenvector, corresponding to a real nonzero eigenvalue, points in a direction that is stretched by the transformation and the eigenvalue is the factor by which it is stretched. If the eigenvalue is negative, the direction is reversed.[3]
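For a 2 × 2 real matrix, the real eigenvalues are the real roots of the characteristic polynomial $\lambda^2 - \operatorname{tr}(A)\lambda + \det(A) = 0$. The sketch below uses that formula to check the equation $A\mathbf{v} = \lambda\mathbf{v}$ numerically. The function name `eigenvalues_2x2` and the example matrices are assumptions made for illustration.

```python
import math

def apply(matrix, vector):
    """Multiply a 2x2 matrix (nested tuples) by a 2-vector."""
    (a, b), (c, d) = matrix
    x, y = vector
    return (a * x + b * y, c * x + d * y)

def eigenvalues_2x2(matrix):
    """Real eigenvalues of a 2x2 matrix, largest first.

    Solves lambda^2 - trace * lambda + det = 0 and returns an empty
    tuple when the roots are not real.
    """
    (a, b), (c, d) = matrix
    trace = a + d
    det = a * d - b * c
    disc = trace * trace - 4 * det
    if disc < 0:
        return ()
    root = math.sqrt(disc)
    return ((trace + root) / 2, (trace - root) / 2)

A = ((2, 1), (1, 2))
print(eigenvalues_2x2(A))    # (3.0, 1.0)
print(apply(A, (1, 1)))      # (3, 3): stretched by the eigenvalue 3
print(apply(A, (1, -1)))     # (1, -1): unchanged, eigenvalue 1

REFLECT_X = ((1, 0), (0, -1))
print(eigenvalues_2x2(REFLECT_X))  # (1.0, -1.0)
print(apply(REFLECT_X, (0, 1)))    # (0, -1): direction reversed, eigenvalue -1

ROTATE_90 = ((0, -1), (1, 0))
print(eigenvalues_2x2(ROTATE_90))  # (): no real eigenvalue
```

The rotation by 90 degrees has no real eigenvalue, which matches the geometric picture: no nonzero vector in the plane keeps its direction under that rotation.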

math.mit.edu/~gs/linearalgebra/ila0601.pdf

$A^{100}$ was found by using the eigenvalues of $A$, not by multiplying 100 matrices.

$Av = \lambda v$, where $\lambda$ is the eigenvalue of $A$ associated with the eigenvector $v$.
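The idea of computing a high matrix power from eigenvalues can be sketched as follows. If $A = X \Lambda X^{-1}$, with the eigenvectors as the columns of $X$ and the eigenvalues on the diagonal of $\Lambda$, then $A^k = X \Lambda^k X^{-1}$. The matrix `A` below, its eigenvalues 1 and 0.5, and its eigenvectors (0.6, 0.4) and (1, -1) are an assumed illustrative example, and the function names are hypothetical.

```python
def matmul(p, q):
    """Product of two 2x2 matrices stored as nested tuples."""
    return tuple(
        tuple(sum(p[i][k] * q[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def power_by_multiplication(a, k):
    """Compute a^k with k - 1 matrix products (k >= 1)."""
    result = a
    for _ in range(k - 1):
        result = matmul(result, a)
    return result

def power_by_eigen(k):
    """Compute A^k as X * diag(l1^k, l2^k) * X^-1 for the assumed A."""
    l1, l2 = 1.0, 0.5                      # eigenvalues of A
    X = ((0.6, 1.0), (0.4, -1.0))          # eigenvectors as columns
    det = X[0][0] * X[1][1] - X[0][1] * X[1][0]
    X_inv = ((X[1][1] / det, -X[0][1] / det),
             (-X[1][0] / det, X[0][0] / det))
    D = ((l1 ** k, 0.0), (0.0, l2 ** k))   # only the eigenvalues are powered
    return matmul(matmul(X, D), X_inv)

A = ((0.8, 0.3), (0.2, 0.7))

fast = power_by_eigen(100)
slow = power_by_multiplication(A, 100)
print(fast)  # close to ((0.6, 0.6), (0.4, 0.4))
print(all(abs(fast[i][j] - slow[i][j]) < 1e-9
          for i in range(2) for j in range(2)))  # True
```

Since $0.5^{100}$ is negligible, only the eigenvector for the eigenvalue 1 survives, so both columns of $A^{100}$ are approximately (0.6, 0.4).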

特征向量-Eigenvalues_and_eigenvectors#Graphs 线性变换的更多相关文章

- 特征向量-Eigenvalues_and_eigenvectors#Graphs

https://en.wikipedia.org/wiki/Eigenvalues_and_eigenvectors#Graphs A {\displaystyle A} ...

- 知识图谱顶刊综述 - (2021年4月) A Survey on Knowledge Graphs: Representation, Acquisition, and Applications

知识图谱综述(2021.4) 论文地址:A Survey on Knowledge Graphs: Representation, Acquisition, and Applications 目录 知 ...

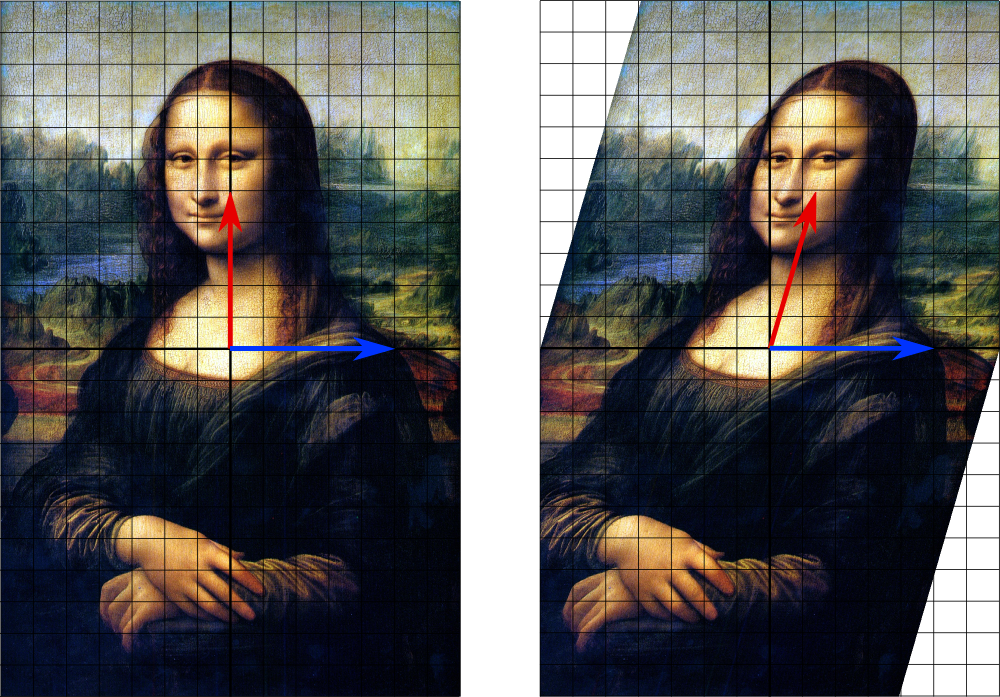

- paper 128:奇异值分解(SVD) --- 线性变换几何意义[转]

PS:一直以来对SVD分解似懂非懂,此文为译文,原文以细致的分析+大量的可视化图形演示了SVD的几何意义.能在有限的篇幅把这个问题讲解的如此清晰,实属不易.原文举了一个简单的图像处理问题,简单形象,真 ...

- 转载:奇异值分解(SVD) --- 线性变换几何意义(下)

本文转载自他人: PS:一直以来对SVD分解似懂非懂,此文为译文,原文以细致的分析+大量的可视化图形演示了SVD的几何意义.能在有限的篇幅把这个问题讲解的如此清晰,实属不易.原文举了一个简单的图像处理 ...

- 转载:奇异值分解(SVD) --- 线性变换几何意义(上)

本文转载自他人: PS:一直以来对SVD分解似懂非懂,此文为译文,原文以细致的分析+大量的可视化图形演示了SVD的几何意义.能在有限的篇幅把这个问题讲解的如此清晰,实属不易.原文举了一个简单的图像处理 ...

- Convolutional Neural Networks on Graphs with Fast Localized Spectral Filtering

Defferrard, Michaël, Xavier Bresson, and Pierre Vandergheynst. "Convolutional neural networks o ...

- 论文阅读:Learning Attention-based Embeddings for Relation Prediction in Knowledge Graphs(2019 ACL)

基于Attention的知识图谱关系预测 论文地址 Abstract 关于知识库完成的研究(也称为关系预测)的任务越来越受关注.多项最新研究表明,基于卷积神经网络(CNN)的模型会生成更丰富,更具表达 ...

- 论文解读二代GCN《Convolutional Neural Networks on Graphs with Fast Localized Spectral Filtering》

Paper Information Title:Convolutional Neural Networks on Graphs with Fast Localized Spectral Filteri ...

- 论文解读《The Emerging Field of Signal Processing on Graphs》

感悟 看完图卷积一代.二代,深感图卷积的强大,刚开始接触图卷积的时候完全不懂为什么要使用拉普拉斯矩阵( $L=D-W$),主要是其背后的物理意义.通过借鉴前辈们的论文.博客.评论逐渐对图卷积有了一定的 ...

随机推荐

- 使用HttpClient访问url的工具类

maven依赖jar包配置: <dependency> <groupId>org.apache.httpcomponents</groupId> <artif ...

- ArcGIS应用

1.ArcGIS Server发布资源报错:网络资源问题 有可能是跟网络相关的服务没有开启,开启相关服务器后有可能可以解决此问题. 还有可能通过此法解决:开始--控制面板--网络和共享中心--高级共享 ...

- Selenium 基本用法

如下,使用 Selenium 打开淘宝首页并获取页面源代码: from selenium import webdriver browser = webdriver.Chrome() # 声明一个浏览器 ...

- Unity 蓝牙插件

1.新建一个Unity5.6.2f1工程,导入正版Bluetooth LE for iOS tvOS and Android.unitypackage2.用JD-GUI反编译工具查看unityandr ...

- QT显示中文 连接上文

1.首先是建立Linux开发环境1.1.在windowsXP下安装博创公司提供的虚拟机软件VMware Workstation,版本为VMware-workstation-full-7.0.1-227 ...

- codeforces水题100道 第二十一题 Codeforces Beta Round #65 (Div. 2) A. Way Too Long Words (strings)

题目链接:http://www.codeforces.com/problemset/problem/71/A题意:将长字符串改成简写格式.C++代码: #include <string> ...

- 剑指offer面试题7:用两个栈实现队列

题目1:用两个栈来实现一个队列,完成队列的Push和Pop操作. 队列中的元素为int类型. 代码实现: public class Solution07 { Stack<Integer> ...

- 【LeetCode OJ】Two Sum

题目:Given an array of integers, find two numbers such that they add up to a specific target number. T ...

- Linux设备驱动剖析之SPI(四)

781行之前没什么好说的,直接看783行,将work投入到工作队列里,然后就返回,在这里就可以回答之前为什么是异步的问题.以后在某个合适的时间里CPU会执行这个work指定的函数,这里是s3c64xx ...

- web应用安全防范(1)—为什么要重视web应用安全漏洞

现在几乎所有的平台都是依赖于互联网构建核心业务的. 自从XP年代开始windows自带防火墙后,传统的缓冲器溢出等攻击失去了原有威力,黑客们也把更多的目光放在了WEB方面,直到进入WEB2.0后,WE ...