An Introduction to Lock-Free Programming

Lock-free programming is a challenge, not just because of the complexity of the task itself, but because of how difficult it can be to penetrate the subject in the first place.

I was fortunate in that my first introduction to lock-free (also known as lockless) programming was Bruce Dawson’s excellent and comprehensive white paper, Lockless Programming Considerations. And like many, I’ve had the occasion to put Bruce’s advice into practice developing and debugging lock-free code on platforms such as the Xbox 360.

Since then, a lot of good material has been written, ranging from abstract theory and proofs of correctness to practical examples and hardware details. I’ll leave a list of references in the footnotes. At times, the information in one source may appear orthogonal to other sources: For instance, some material assumes sequential consistency, and thus sidesteps the memory ordering issues which typically plague lock-free C/C++ code. The new C++11 atomic library standard throws another wrench into the works, challenging the way many of us express lock-free algorithms.

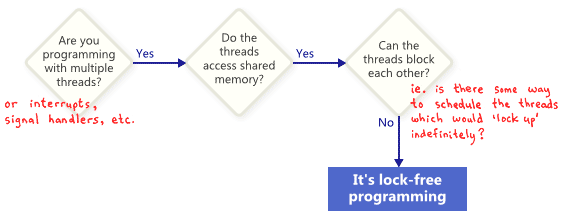

In this post, I’d like to re-introduce lock-free programming, first by defining it, then by distilling most of the information down to a few key concepts. I’ll show how those concepts relate to one another using flowcharts, then we’ll dip our toes into the details a little bit. At a minimum, any programmer who dives into lock-free programming should already understand how to write correct multithreaded code using mutexes, and other high-level synchronization objects such as semaphores and events.

What Is It?

People often describe lock-free programming as programming without mutexes, which are also referred to as locks. That’s true, but it’s only part of the story. The generally accepted definition, based on academic literature, is a bit broader. At its essence, lock-free is a property used to describe some code, without saying too much about how that code was actually written.

Basically, if some part of your program satisfies the following conditions, then that part can rightfully be considered lock-free: it runs on multiple threads, those threads access shared memory, and the threads cannot block each other. Conversely, if a given part of your code doesn’t satisfy these conditions, then that part is not lock-free.

In this sense, the lock in lock-free does not refer directly to mutexes, but rather to the possibility of “locking up” the entire application in some way, whether it’s deadlock, livelock – or even due to hypothetical thread scheduling decisions made by your worst enemy. That last point sounds funny, but it’s key. Shared mutexes are ruled out trivially, because as soon as one thread obtains the mutex, your worst enemy could simply never schedule that thread again. Of course, real operating systems don’t work that way – we’re merely defining terms.

Here’s a simple example of an operation which contains no mutexes, but is still not lock-free. Initially, X = 0. As an exercise for the reader, consider how two threads could be scheduled in a way such that neither thread exits the loop.

    while (X == 0)
    {
        X = 1 - X;
    }
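One possible answer to the exercise can be checked with a small single-threaded simulation. This is only a sketch: `LoopThread`, `step` and `bothStillLooping` are hypothetical names, and each step models either one evaluation of the loop condition or one execution of the loop body, which is just one of the interleavings a hostile scheduler could choose.

```cpp
#include <cstddef>

// Hypothetical model of one thread running: while (X == 0) { X = 1 - X; }
// Each call to step() performs either one loop-condition check or one
// execution of the loop body.
struct LoopThread {
    enum class State { Check, Flip, Done };
    State state = State::Check;

    void step(int& X) {
        switch (state) {
        case State::Check:   // evaluate the loop condition
            state = (X == 0) ? State::Flip : State::Done;
            break;
        case State::Flip:    // execute the loop body
            X = 1 - X;
            state = State::Check;
            break;
        case State::Done:    // the thread has left the loop
            break;
        }
    }

    bool exited() const { return state == State::Done; }
};

// Replays an adversarial schedule for `rounds` rounds and reports whether
// both simulated threads are still stuck inside their loops afterwards.
bool bothStillLooping(std::size_t rounds) {
    int X = 0;
    LoopThread a, b;
    for (std::size_t i = 0; i < rounds; ++i) {
        a.step(X);  // A sees X == 0 and enters the body
        b.step(X);  // B sees X == 0 and enters the body
        a.step(X);  // A writes X = 1
        b.step(X);  // B writes X = 0, undoing A's progress
    }
    return !a.exited() && !b.exited();
}
```

Under this schedule, `bothStillLooping(n)` stays true for any number of rounds, which is exactly why the operation does not qualify as lock-free.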

Nobody expects a large application to be entirely lock-free. Typically, we identify a specific set of lock-free operations out of the whole codebase. For example, in a lock-free queue, there might be a handful of lock-free operations such as push, pop, perhaps isEmpty, and so on.

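Herlihy & Shavit, authors of The Art of Multiprocessor Programming, tend to express such operations as class methods, and offer a succinct definition of lock-free: in an infinite execution, infinitely often some method call finishes.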

One important consequence of lock-free programming is that if you suspend a single thread, it will never prevent other threads from making progress, as a group, through their own lock-free operations. This hints at the value of lock-free programming when writing interrupt handlers and real-time systems, where certain tasks must complete within a certain time limit, no matter what state the rest of the program is in.

One final clarification: Operations that are designed to block do not disqualify the algorithm. For example, a queue’s pop operation may intentionally block when the queue is empty. The remaining codepaths can still be considered lock-free.

Lock-Free Programming Techniques

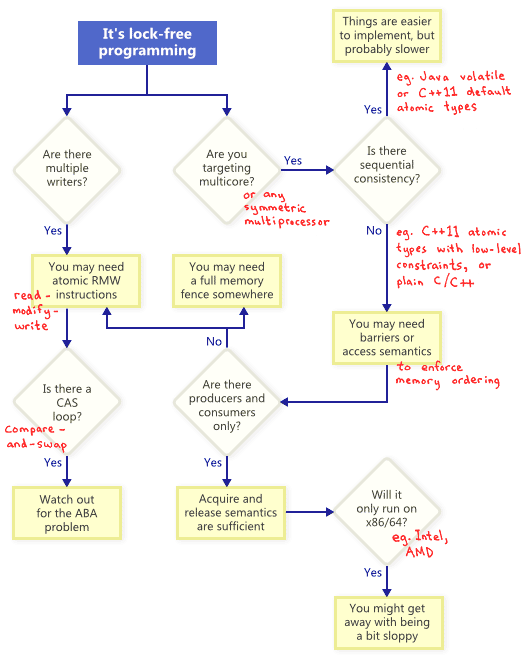

It turns out that when you attempt to satisfy the non-blocking condition of lock-free programming, a whole family of techniques fall out: atomic operations, memory barriers, avoiding the ABA problem, to name a few. This is where things quickly become diabolical.

So how do these techniques relate to one another? To illustrate, I’ve put together the following flowchart. I’ll elaborate on each one below.

Atomic Read-Modify-Write Operations

Atomic operations are ones which manipulate memory in a way that appears indivisible: No thread can observe the operation half-complete. On modern processors, lots of operations are already atomic. For example, aligned reads and writes of simple types are usually atomic.

Read-modify-write (RMW) operations go a step further, allowing you to perform more complex transactions atomically. They’re especially useful when a lock-free algorithm must support multiple writers, because when multiple threads attempt an RMW on the same address, they’ll effectively line up in a row and execute those operations one-at-a-time. I’ve already touched upon RMW operations in this blog, such as when implementing a lightweight mutex, a recursive mutex and a lightweight logging system.

Examples of RMW operations include _InterlockedIncrement on Win32, OSAtomicAdd32 on iOS, and std::atomic<int>::fetch_add in C++11. Be aware that the C++11 atomic standard does not guarantee that the implementation will be lock-free on every platform, so it’s best to know the capabilities of your platform and toolchain. You can call std::atomic<>::is_lock_free to make sure.
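As a minimal sketch of the C++11 flavor, the following increments a shared counter from several threads using `fetch_add`, and queries `is_lock_free` on the same type. The helper names `countWithFetchAdd` and `atomicIntIsLockFree` are made up for illustration.

```cpp
#include <atomic>
#include <thread>
#include <vector>

// Hypothetical helper: each of `numThreads` threads increments a shared
// counter `perThread` times using an atomic read-modify-write.
int countWithFetchAdd(int numThreads, int perThread) {
    std::atomic<int> counter(0);
    std::vector<std::thread> threads;
    for (int t = 0; t < numThreads; ++t) {
        threads.emplace_back([&counter, perThread] {
            for (int i = 0; i < perThread; ++i)
                counter.fetch_add(1);  // indivisible increment
        });
    }
    for (auto& th : threads)
        th.join();
    return counter.load();
}

// Reports whether std::atomic<int> is implemented without a hidden lock
// on the current platform and toolchain.
bool atomicIntIsLockFree() {
    std::atomic<int> probe(0);
    return probe.is_lock_free();
}
```

Because every increment is a single RMW, no updates are lost: `countWithFetchAdd(4, 10000)` returns 40000. The result of `atomicIntIsLockFree()` depends on the platform, so it is worth checking rather than assuming.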

Different CPU families support RMW in different ways. Processors such as PowerPC and ARM expose load-link/store-conditional instructions, which effectively allow you to implement your own RMW primitive at a low level, though this is not often done. The common RMW operations are usually sufficient.

As illustrated by the flowchart, atomic RMWs are a necessary part of lock-free programming even on single-processor systems. Without atomicity, a thread could be interrupted halfway through the transaction, possibly leading to an inconsistent state.

Compare-And-Swap Loops

Perhaps the most often-discussed RMW operation is compare-and-swap (CAS). On Win32, CAS is provided via a family of intrinsics such as _InterlockedCompareExchange. Often, programmers perform compare-and-swap in a loop to repeatedly attempt a transaction. This pattern typically involves copying a shared variable to a local variable, performing some speculative work, and attempting to publish the changes using CAS:

    void LockFreeQueue::push(Node* newHead)
    {
        for (;;)
        {
            // Copy a shared variable (m_Head) to a local.
            Node* oldHead = m_Head;

            // Do some speculative work, not yet visible to other threads.
            newHead->next = oldHead;

            // Next, attempt to publish our changes to the shared variable.
            // If the shared variable hasn't changed, the CAS succeeds and we return.
            // Otherwise, repeat.
            if (_InterlockedCompareExchange(&m_Head, newHead, oldHead) == oldHead)
                return;
        }
    }

Such loops still qualify as lock-free, because if the test fails for one thread, it means it must have succeeded for another – though some architectures offer a weaker variant of CAS where that’s not necessarily true. Whenever implementing a CAS loop, special care must be taken to avoid the ABA problem.
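For comparison, here is a rough portable sketch of the same CAS-loop pattern using C++11 atomics instead of the Win32 intrinsic. `Node`, `LockFreeStack` and `pushFromThreads` are hypothetical names, and `compare_exchange_weak` is the kind of weaker CAS that may fail spuriously, which is why it sits in a loop.

```cpp
#include <atomic>
#include <thread>
#include <vector>

struct Node {
    int value;
    Node* next;
};

// Hypothetical minimal container supporting only a lock-free push.
class LockFreeStack {
public:
    void push(Node* newHead) {
        // Copy the shared head to a local.
        Node* oldHead = m_head.load();
        do {
            // Speculative work, not yet visible to other threads.
            newHead->next = oldHead;
            // On failure, compare_exchange_weak reloads oldHead with the
            // current head, so the loop retries with fresh data.
        } while (!m_head.compare_exchange_weak(oldHead, newHead));
    }

    // Not thread-safe; intended for inspection after all pushes finish.
    int size() const {
        int n = 0;
        for (Node* p = m_head.load(); p != nullptr; p = p->next)
            ++n;
        return n;
    }

private:
    std::atomic<Node*> m_head{nullptr};
};

// Pushes numThreads * perThread preallocated nodes concurrently and
// returns how many ended up linked into the stack.
int pushFromThreads(int numThreads, int perThread) {
    std::vector<Node> nodes(numThreads * perThread);
    LockFreeStack stack;
    std::vector<std::thread> threads;
    for (int t = 0; t < numThreads; ++t) {
        threads.emplace_back([&stack, &nodes, t, perThread] {
            for (int i = 0; i < perThread; ++i)
                stack.push(&nodes[t * perThread + i]);
        });
    }
    for (auto& th : threads)
        th.join();
    return stack.size();
}
```

This sketch only pushes, so it never reuses a node address while another thread holds a stale copy of the head. Adding a pop operation is where the ABA problem would need to be addressed.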

Sequential Consistency

Sequential consistency means that all threads agree on the order in which memory operations occurred, and that order is consistent with the order of operations in the program source code. Under sequential consistency, it’s impossible to experience memory reordering shenanigans like the one I demonstrated in a previous post.

A simple (but obviously impractical) way to achieve sequential consistency is to disable compiler optimizations and force all your threads to run on a single processor. A processor never sees its own memory effects out of order, even when threads are pre-empted and scheduled at arbitrary times.

Some programming languages offer sequential consistency even for optimized code running in a multiprocessor environment. In C++11, you can declare all shared variables as C++11 atomic types with default memory ordering constraints. In Java, you can mark all shared variables as volatile. Here’s the example from my previous post, rewritten in C++11 style:

    std::atomic<int> X(0), Y(0);
    int r1, r2;

    void thread1()
    {
        X.store(1);
        r1 = Y.load();
    }

    void thread2()
    {
        Y.store(1);
        r2 = X.load();
    }

Because the C++11 atomic types guarantee sequential consistency, the outcome r1 = r2 = 0 is impossible. To achieve this, the compiler outputs additional instructions behind the scenes – typically memory fences and/or RMW operations. Those additional instructions may make the implementation less efficient compared to one where the programmer has dealt with memory ordering directly.
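One way to try this out is a small harness that repeats the experiment many times and counts how often both reads return 0. This is only a sketch, and `countBothZero` is a hypothetical name. A run that finds no such outcome does not prove anything by itself, but it is consistent with the guarantee.

```cpp
#include <atomic>
#include <thread>

// Runs the two-thread experiment `iterations` times and returns how many
// times both threads read 0. With the default (sequentially consistent)
// ordering, that outcome is not allowed, so the count should stay at zero.
int countBothZero(int iterations) {
    int bothZero = 0;
    for (int i = 0; i < iterations; ++i) {
        std::atomic<int> X(0), Y(0);
        int r1 = -1, r2 = -1;

        std::thread t1([&] { X.store(1); r1 = Y.load(); });
        std::thread t2([&] { Y.store(1); r2 = X.load(); });
        t1.join();  // join() makes r1 and r2 safe to read afterwards
        t2.join();

        if (r1 == 0 && r2 == 0)
            ++bothZero;
    }
    return bothZero;
}
```

Spawning two fresh threads per iteration keeps the code short but limits how often the two stores actually race. A more aggressive test would reuse long-lived threads and synchronize their start.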

Memory Ordering

As the flowchart suggests, any time you do lock-free programming for multicore (or any symmetric multiprocessor), and your environment does not guarantee sequential consistency, you must consider how to prevent memory reordering.

On today’s architectures, the tools to enforce correct memory ordering generally fall into three categories, which prevent both compiler reordering and processor reordering:

- A lightweight sync or fence instruction, which I’ll talk about in future posts;

- A full memory fence instruction, which I’ve demonstrated previously;

- Memory operations which provide acquire or release semantics.
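As a rough illustration of the full-fence category, the store/load experiment can be written with relaxed atomic operations and an explicit `std::atomic_thread_fence` between them. The function name `countBothZeroWithFences` is hypothetical, and the instruction the compiler emits for the fence varies by platform.

```cpp
#include <atomic>
#include <thread>

// Same experiment as a store followed by a load in each thread, but the
// ordering now comes from an explicit full fence rather than from the
// atomic operations themselves. Returns how often both threads read 0.
int countBothZeroWithFences(int iterations) {
    int bothZero = 0;
    for (int i = 0; i < iterations; ++i) {
        std::atomic<int> X(0), Y(0);
        int r1 = -1, r2 = -1;

        std::thread t1([&] {
            X.store(1, std::memory_order_relaxed);
            // Full fence: keeps the store above from being reordered
            // with the load below.
            std::atomic_thread_fence(std::memory_order_seq_cst);
            r1 = Y.load(std::memory_order_relaxed);
        });
        std::thread t2([&] {
            Y.store(1, std::memory_order_relaxed);
            std::atomic_thread_fence(std::memory_order_seq_cst);
            r2 = X.load(std::memory_order_relaxed);
        });
        t1.join();
        t2.join();

        if (r1 == 0 && r2 == 0)
            ++bothZero;
    }
    return bothZero;
}
```

The two fences are totally ordered with respect to each other, so at least one thread must observe the other's store, and the count stays at zero.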

Acquire semantics prevent an operation from being reordered with the memory operations which follow it in program order, and release semantics prevent an operation from being reordered with those preceding it. These semantics are particularly suitable in cases when there’s a producer/consumer relationship, where one thread publishes some information and the other reads it. I’ll also talk about this more in a future post.
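The producer/consumer case can be sketched in C++11 as follows. This is a minimal example under assumed names (`publishAndConsume`, `payload`, `ready`): the producer writes ordinary data and then sets a flag with release semantics, and the consumer reads the flag with acquire semantics before touching the data.

```cpp
#include <atomic>
#include <thread>

// Hypothetical one-shot message passing between two threads.
// Returns the value the consumer observed.
int publishAndConsume(int value) {
    int payload = 0;                 // plain, non-atomic data
    std::atomic<bool> ready(false);  // guard flag
    int received = -1;

    std::thread producer([&] {
        payload = value;  // write the data first
        // Release: the write to payload cannot move below this store.
        ready.store(true, std::memory_order_release);
    });

    std::thread consumer([&] {
        // Acquire: the read of payload cannot move above this load.
        while (!ready.load(std::memory_order_acquire))
            std::this_thread::yield();
        received = payload;  // guaranteed to see the producer's write
    });

    producer.join();
    consumer.join();
    return received;
}
```

The consumer here spins until the flag is set, which keeps the example short. The point is the pairing: once the acquire load sees the value written by the release store, everything the producer wrote beforehand is visible.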

Different Processors Have Different Memory Models

Different CPU families have different habits when it comes to memory reordering. The rules are documented by each CPU vendor and followed strictly by the hardware. For instance, PowerPC and ARM processors can reorder memory stores relative to the order of the instructions themselves, but normally, the x86/64 family of processors from Intel and AMD do not. We say the former processors have a more relaxed memory model.

There’s a temptation to abstract away such platform-specific details, especially with C++11 offering us a standard way to write portable lock-free code. But currently, I think most lock-free programmers have at least some appreciation of platform differences. If there’s one key difference to remember, it’s that at the x86/64 instruction level, every load from memory comes with acquire semantics, and every store to memory provides release semantics – at least for non-SSE instructions and non-write-combined memory. As a result, it’s been common in the past to write lock-free code which works on x86/64, but fails on other processors.

If you’re interested in the hardware details of how and why processors perform memory reordering, I’d recommend Appendix C of Is Parallel Programming Hard. In any case, keep in mind that memory reordering can also occur due to compiler reordering of instructions.

In this post, I haven’t said much about the practical side of lock-free programming, such as: When do we do it? How much do we really need? I also haven’t mentioned the importance of validating your lock-free algorithms. Nonetheless, I hope for some readers, this introduction has provided a basic familiarity with lock-free concepts, so you can proceed into the additional reading without feeling too bewildered. As usual, if you spot any inaccuracies, let me know in the comments.

Additional References

- Anthony Williams’ blog and his book, C++ Concurrency in Action

- Dmitriy V’jukov’s website and various forum discussions

- Bartosz Milewski’s blog

- Charles Bloom’s Low-Level Threading series on his blog

- Doug Lea’s JSR-133 Cookbook

- Howells and McKenney’s memory-barriers.txt document

- Hans Boehm’s collection of links about the C++11 memory model

- Herb Sutter’s Effective Concurrency series
