HiBench成长笔记——(4) HiBench测试Spark SQL

很多内容之前的博客已经提过,这里不再赘述,详细内容参照本系列前面的博客:https://www.cnblogs.com/ratels/p/10970905.html 和 https://www.cnblogs.com/ratels/p/10976060.html

执行脚本

bin/workloads/sql/scan/prepare/prepare.sh

返回信息

[root@node1 prepare]# ./prepare.sh patching args= Parsing conf: /home/cf/app/HiBench-master/conf/hadoop.conf Parsing conf: /home/cf/app/HiBench-master/conf/hibench.conf Parsing conf: /home/cf/app/HiBench-master/conf/spark.conf Parsing conf: /home/cf/app/HiBench-master/conf/workloads/sql/scan.conf probe -.cdh5./lib/hadoop/../../jars/hadoop-mapreduce-client-jobclient--cdh5.14.2-tests.jar start HadoopPrepareScan bench hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -rm -r -skipTrash hdfs://node1:8020/HiBench/Scan/Input rm: `hdfs://node1:8020/HiBench/Scan/Input': No such file or directory Pages:, USERVISITS: Submit MapReduce Job: /opt/cloudera/parcels/CDH--.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn jar /home/cf/app/HiBench-master/autogen/target/autogen-7.1-SNAPSHOT-jar-with-dependencies.jar HiBench.DataGen -t hive -b hdfs://node1:8020/HiBench/Scan -n Input -m 8 -r 8 -p 120 -v 1000 -o sequence // :: INFO HiBench.HiveData: Closing hive data generator... finish HadoopPrepareScan bench

执行脚本

bin/workloads/sql/scan/spark/run.sh

返回信息

[root@node1 spark]# ./run.sh patching args= Parsing conf: /home/cf/app/HiBench-master/conf/hadoop.conf Parsing conf: /home/cf/app/HiBench-master/conf/hibench.conf Parsing conf: /home/cf/app/HiBench-master/conf/spark.conf Parsing conf: /home/cf/app/HiBench-master/conf/workloads/sql/scan.conf probe -.cdh5./lib/hadoop/../../jars/hadoop-mapreduce-client-jobclient--cdh5.14.2-tests.jar start ScalaSparkScan bench hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -rm -r -skipTrash hdfs://node1:8020/HiBench/Scan/Output rm: `hdfs://node1:8020/HiBench/Scan/Output': No such file or directory Export env: SPARKBENCH_PROPERTIES_FILES=/home/cf/app/HiBench-master/report/scan/spark/conf/sparkbench/sparkbench.conf Export env: HADOOP_CONF_DIR=/etc/hadoop/conf.cloudera.yarn Submit Spark job: /opt/cloudera/parcels/CDH--.cdh5./lib/spark/bin/spark-submit --properties- --executor-cores --executor-memory 4g /home/cf/app/HiBench-master/sparkbench/assembly/target/sparkbench-assembly-7.1-SNAPSHOT-dist.jar ScalaScan /home/cf/app/HiBench-master/report/scan/spark/conf/../rankings_uservisits_scan.hive // :: INFO CuratorFrameworkSingleton: Closing ZooKeeper client. hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -du -s hdfs://node1:8020/HiBench/Scan/Output finish ScalaSparkScan bench

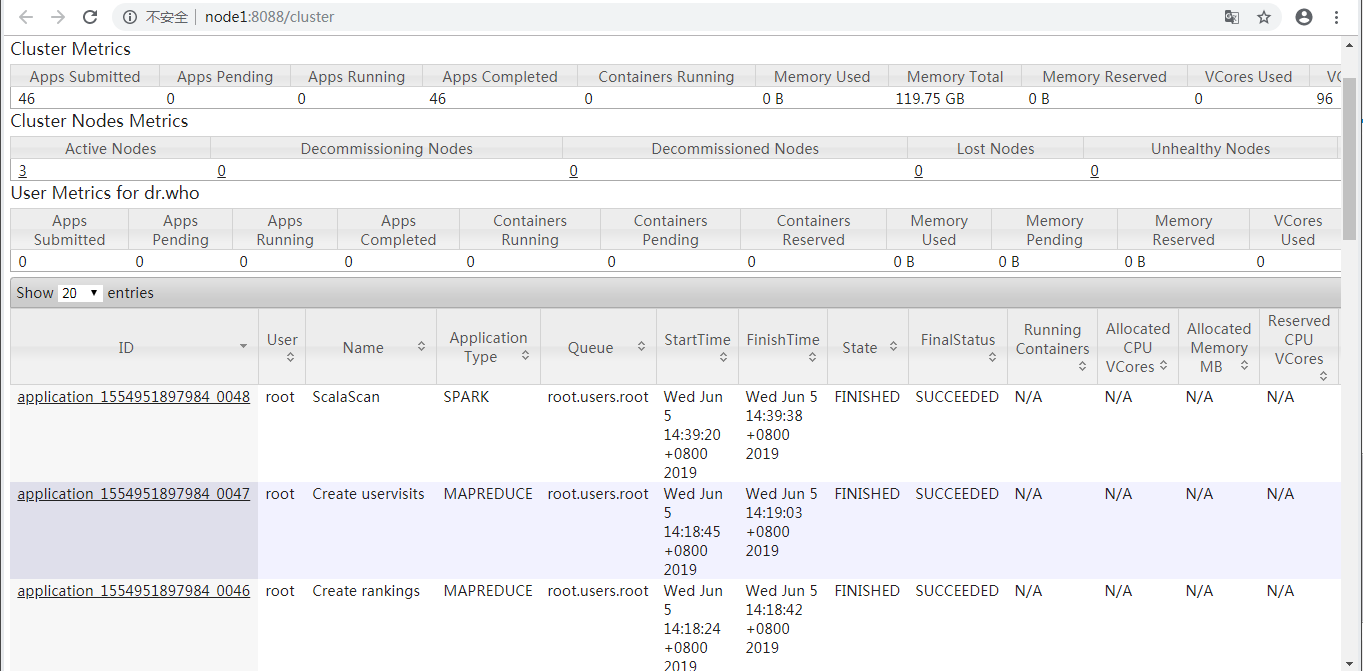

查看ResourceManager Web UI

prepare.sh启动了application_1554951897984_0047和application_1554951897984_0046两个MAPREDUCE任务,run.sh启动了application_1554951897984_0048这个Spark任务。

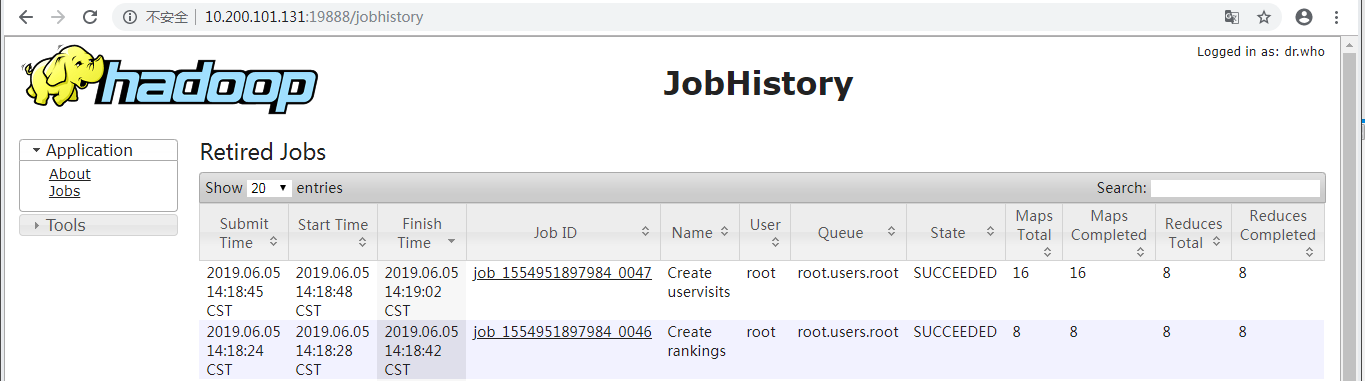

查看(Hadoop)HistoryServer Web UI

显示了prepare.sh启动的application_1554951897984_0047和application_1554951897984_0046两个MAPREDUCE任务。

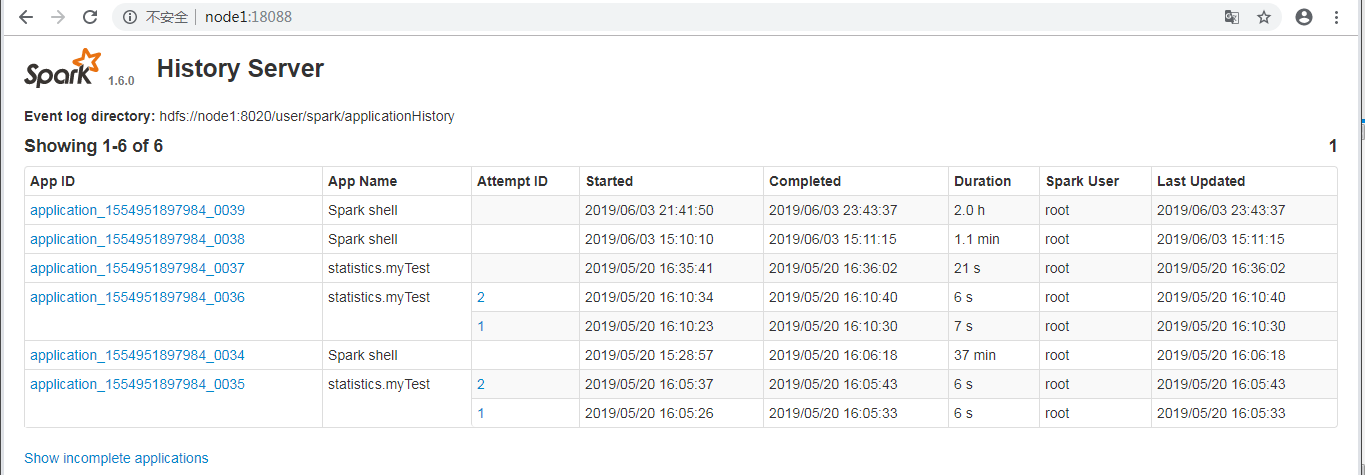

查看(Spark) History Server Web UI

并未显示run.sh启动的application_1554951897984_0048这个Spark任务。

执行脚本

bin/workloads/sql/join/prepare/prepare.sh

返回信息

[root@node1 prepare]# ./prepare.sh patching args= Parsing conf: /home/cf/app/HiBench-master/conf/hadoop.conf Parsing conf: /home/cf/app/HiBench-master/conf/hibench.conf Parsing conf: /home/cf/app/HiBench-master/conf/spark.conf Parsing conf: /home/cf/app/HiBench-master/conf/workloads/sql/join.conf probe -.cdh5./lib/hadoop/../../jars/hadoop-mapreduce-client-jobclient--cdh5.14.2-tests.jar start HadoopPrepareJoin bench hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -rm -r -skipTrash hdfs://node1:8020/HiBench/Join/Input rm: `hdfs://node1:8020/HiBench/Join/Input': No such file or directory Pages:, USERVISITS: Submit MapReduce Job: /opt/cloudera/parcels/CDH--.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn jar /home/cf/app/HiBench-master/autogen/target/autogen-7.1-SNAPSHOT-jar-with-dependencies.jar HiBench.DataGen -t hive -b hdfs://node1:8020/HiBench/Join -n Input -m 8 -r 8 -p 120 -v 1000 -o sequence // :: INFO HiBench.HiveData: Closing hive data generator... finish HadoopPrepareJoin bench

执行脚本

bin/workloads/sql/join/spark/run.sh

返回信息

[root@node1 spark]# ./run.sh patching args= Parsing conf: /home/cf/app/HiBench-master/conf/hadoop.conf Parsing conf: /home/cf/app/HiBench-master/conf/hibench.conf Parsing conf: /home/cf/app/HiBench-master/conf/spark.conf Parsing conf: /home/cf/app/HiBench-master/conf/workloads/sql/join.conf probe -.cdh5./lib/hadoop/../../jars/hadoop-mapreduce-client-jobclient--cdh5.14.2-tests.jar start ScalaSparkJoin bench hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -du -s hdfs://node1:8020/HiBench/Join/Input hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -rm -r -skipTrash hdfs://node1:8020/HiBench/Join/Output rm: `hdfs://node1:8020/HiBench/Join/Output': No such file or directory Export env: SPARKBENCH_PROPERTIES_FILES=/home/cf/app/HiBench-master/report/join/spark/conf/sparkbench/sparkbench.conf Export env: HADOOP_CONF_DIR=/etc/hadoop/conf.cloudera.yarn Submit Spark job: /opt/cloudera/parcels/CDH--.cdh5./lib/spark/bin/spark-submit --properties- --executor-cores --executor-memory 4g /home/cf/app/HiBench-master/sparkbench/assembly/target/sparkbench-assembly-7.1-SNAPSHOT-dist.jar ScalaJoin /home/cf/app/HiBench-master/report/join/spark/conf/../rankings_uservisits_join.hive // :: INFO remote.RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports. finish ScalaSparkJoin bench

执行脚本

bin/workloads/sql/aggregation/prepare/prepare.sh

返回信息

[root@node1 prepare]# ./prepare.sh patching args= Parsing conf: /home/cf/app/HiBench-master/conf/hadoop.conf Parsing conf: /home/cf/app/HiBench-master/conf/hibench.conf Parsing conf: /home/cf/app/HiBench-master/conf/spark.conf Parsing conf: /home/cf/app/HiBench-master/conf/workloads/sql/aggregation.conf probe -.cdh5./lib/hadoop/../../jars/hadoop-mapreduce-client-jobclient--cdh5.14.2-tests.jar start HadoopPrepareAggregation bench hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -rm -r -skipTrash hdfs://node1:8020/HiBench/Aggregation/Input rm: `hdfs://node1:8020/HiBench/Aggregation/Input': No such file or directory Pages:, USERVISITS: Submit MapReduce Job: /opt/cloudera/parcels/CDH--.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn jar /home/cf/app/HiBench-master/autogen/target/autogen-7.1-SNAPSHOT-jar-with-dependencies.jar HiBench.DataGen -t hive -b hdfs://node1:8020/HiBench/Aggregation -n Input -m 8 -r 8 -p 120 -v 1000 -o sequence // :: INFO HiBench.HiveData: Closing hive data generator... finish HadoopPrepareAggregation bench

执行脚本

bin/workloads/sql/aggregation/spark/run.sh

返回信息

[root@node1 spark]# ./run.sh patching args= Parsing conf: /home/cf/app/HiBench-master/conf/hadoop.conf Parsing conf: /home/cf/app/HiBench-master/conf/hibench.conf Parsing conf: /home/cf/app/HiBench-master/conf/spark.conf Parsing conf: /home/cf/app/HiBench-master/conf/workloads/sql/aggregation.conf probe -.cdh5./lib/hadoop/../../jars/hadoop-mapreduce-client-jobclient--cdh5.14.2-tests.jar start ScalaSparkAggregation bench hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -rm -r -skipTrash hdfs://node1:8020/HiBench/Aggregation/Output rm: `hdfs://node1:8020/HiBench/Aggregation/Output': No such file or directory Export env: SPARKBENCH_PROPERTIES_FILES=/home/cf/app/HiBench-master/report/aggregation/spark/conf/sparkbench/sparkbench.conf Export env: HADOOP_CONF_DIR=/etc/hadoop/conf.cloudera.yarn Submit Spark job: /opt/cloudera/parcels/CDH--.cdh5./lib/spark/bin/spark-submit --properties- --executor-cores --executor-memory 4g /home/cf/app/HiBench-master/sparkbench/assembly/target/sparkbench-assembly-7.1-SNAPSHOT-dist.jar ScalaAggregation /home/cf/app/HiBench-master/report/aggregation/spark/conf/../uservisits_aggre.hive // :: INFO remote.RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports. hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -du -s hdfs://node1:8020/HiBench/Aggregation/Output finish ScalaSparkAggregation bench

参考:https://www.cnblogs.com/barneywill/p/10436299.html

HiBench成长笔记——(4) HiBench测试Spark SQL的更多相关文章

- HiBench成长笔记——(1) HiBench概述

测试分类 HiBench共计19个测试方向,可大致分为6个测试类别:分别是micro,ml(机器学习),sql,graph,websearch和streaming. 2.1 micro Benchma ...

- HiBench成长笔记——(3) HiBench测试Spark

很多内容之前的博客已经提过,这里不再赘述,详细内容参照本系列前面的博客:https://www.cnblogs.com/ratels/p/10970905.html 创建并修改配置文件conf/spa ...

- HiBench成长笔记——(6) HiBench测试结果分析

Scan Join Aggregation Scan Join Aggregation Scan Join Aggregation Scan Join Aggregation Scan Join Ag ...

- HiBench成长笔记——(5) HiBench-Spark-SQL-Scan源码分析

run.sh #!/bin/bash # Licensed to the Apache Software Foundation (ASF) under one or more # contributo ...

- HiBench成长笔记——(2) CentOS部署安装HiBench

安装Scala 使用spark-shell命令进入shell模式,查看spark版本和Scala版本: 下载Scala2.10.5 wget https://downloads.lightbend.c ...

- HiBench成长笔记——(8) 分析源码workload_functions.sh

workload_functions.sh 是测试程序的入口,粘连了监控程序 monitor.py 和 主运行程序: #!/bin/bash # Licensed to the Apache Soft ...

- HiBench成长笔记——(7) 阅读《The HiBench Benchmark Suite: Characterization of the MapReduce-Based Data Analysis》

<The HiBench Benchmark Suite: Characterization of the MapReduce-Based Data Analysis>内容精选 We th ...

- HiBench成长笔记——(10) 分析源码execute_with_log.py

#!/usr/bin/env python2 # Licensed to the Apache Software Foundation (ASF) under one or more # contri ...

- HiBench成长笔记——(9) 分析源码monitor.py

monitor.py 是主监控程序,将监控数据写入日志,并统计监控数据生成HTML统计展示页面: #!/usr/bin/env python2 # Licensed to the Apache Sof ...

随机推荐

- Python 爬取 热词并进行分类数据分析-[解释修复+热词引用]

日期:2020.02.02 博客期:141 星期日 [本博客的代码如若要使用,请在下方评论区留言,之后再用(就是跟我说一声)] 所有相关跳转: a.[简单准备] b.[云图制作+数据导入] c.[拓扑 ...

- 为小学生出四则运算题目.java

import java.util.Scanner; import java.util.Random; public class test{ public static int s1 = new Ran ...

- python 中的生成器(generator)

生成器不会吧结果保存在一个系列里,而是保存生成器的状态,在每次进行迭代时返回一个值,直到遇到StopTteration异常结束 1.生成器语法: 生成器表达式:通列表解析语法,只不过把列表解析的[] ...

- PAT T1012 Greedy Snake

直接暴力枚举,注意每次深搜完状态的还原~ #include<bits/stdc++.h> using namespace std; ; int visit[maxn][maxn]; int ...

- Spring Boot 概括

Spring Boot 简介 简化Spring应用开发的一个框架: 整个Spring技术栈的一个大整合: J2EE开发的一站式解决方案: 微服务 2014,martin fowler 微服务:架构风格 ...

- SpringCloud入门——(1)创建Eureka项目

Eureka是Spring Cloud Netflix微服务套件中的一部分,可以与Springboot构建的微服务很容易的整合起来.Eureka包含了服务器端和客户端组件.服务器端,也被称作是服务注册 ...

- Redis的C++与JavaScript访问操作

上篇简单介绍了Redis及其安装部署,这篇记录一下如何用C++语言和JavaScript语言访问操作Redis 1. Redis的接口访问方式(通用接口或者语言接口) 很多语言都包含Redis支持,R ...

- Array数组的方法总结

1.检测数组 自从ECMAScript3作出规定后,就出现了确定某个对象是不是数组的经典问题.对于一个网页,或者一个全局作用域而言,使用instanceof操作符就能得到满意结果. if (value ...

- pygame学习的第一天

pygame最小开发框架: import pygame, sys pygame.init() screen = pygame.display.set_mode((600, 480)) pygame.d ...

- 启动storm任务时,异常提示

启动storm任务时,异常提示: 14182 [NIOServerCxn.Factory:0.0.0.0/0.0.0.0:2000] WARN o.a.s.s.o.a.z.s.NIOServerCnx ...