python--Web Crawler Basics (7): A First Look at the urllib Library and a Discussion of Chinese Encoding Issues

All posts in the python series are based on Python 3.4

---------@_@? --------------------------------------------------------------------

- Problem: how do we simply fetch the source code of a web page?

- Solution: use the urllib library to fetch the page's source code

------------------------------------------------------------------------------------

- Code example

#python3.4

import urllib.request

response = urllib.request.urlopen("http://zzk.cnblogs.com/b")

print(response.read())

- Output

b'\n<!DOCTYPE html>\n<html>\n<head>\n <meta charset="utf-8"/>\n <title>\xe6\x89\xbe\xe6\x89\xbe\xe7\x9c\x8b - \xe5\x8d\x9a\xe5\xae\xa2\xe5\x9b\xad</title> \n <link rel="shortcut icon" href="/Content/Images/favicon.ico" type="image/x-icon"/>\n <meta content="\xe6\x8a\x80\xe6\x9c\xaf\xe6\x90\x9c\xe7\xb4\xa2,IT\xe6\x90\x9c\xe7\xb4\xa2,\xe7\xa8\x8b\xe5\xba\x8f\xe6\x90\x9c\xe7\xb4\xa2,\xe4\xbb\xa3\xe7\xa0\x81\xe6\x90\x9c\xe7\xb4\xa2,\xe7\xa8\x8b\xe5\xba\x8f\xe5\x91\x98\xe6\x90\x9c\xe7\xb4\xa2\xe5\xbc\x95\xe6\x93\x8e" name="keywords" />\n <meta content="\xe9\x9d\xa2\xe5\x90\x91\xe7\xa8\x8b\xe5\xba\x8f\xe5\x91\x98\xe7\x9a\x84\xe4\xb8\x93\xe4\xb8\x9a\xe6\x90\x9c\xe7\xb4\xa2\xe5\xbc\x95\xe6\x93\x8e\xe3\x80\x82\xe9\x81\x87\xe5\x88\xb0\xe6\x8a\x80\xe6\x9c\xaf\xe9\x97\xae\xe9\xa2\x98\xe6\x80\x8e\xe4\xb9\x88\xe5\x8a\x9e\xef\xbc\x8c\xe5\x88\xb0\xe5\x8d\x9a\xe5\xae\xa2\xe5\x9b\xad\xe6\x89\xbe\xe6\x89\xbe\xe7\x9c\x8b..." name="description" />\n <link type="text/css" href="/Content/Style.css" rel="stylesheet" />\n <script src="http://common.cnblogs.com/script/jquery.js" type="text/javascript"></script>\n <script src="/Scripts/Common.js" type="text/javascript"></script>\n <script src="/Scripts/Home.js" type="text/javascript"></script>\n</head>\n<body>\n <div class="top">\n \n <div class="top_tabs">\n <a href="http://www.cnblogs.com">\xc2\xab \xe5\x8d\x9a\xe5\xae\xa2\xe5\x9b\xad\xe9\xa6\x96\xe9\xa1\xb5 </a>\n </div>\n <div id="span_userinfo" class="top_links">\n </div>\n </div>\n <div style="clear: both">\n </div>\n <center>\n <div id="main">\n <div class="logo_index">\n <a href="http://zzk.cnblogs.com">\n <img alt="\xe6\x89\xbe\xe6\x89\xbe\xe7\x9c\x8blogo" src="/images/logo.gif" /></a>\n </div>\n <div class="index_sozone">\n <div class="index_tab">\n <a href="/n" onclick="return channelSwitch('n');">\xe6\x96\xb0\xe9\x97\xbb</a>\n<a class="tab_selected" href="/b" onclick="return channelSwitch('b');">\xe5\x8d\x9a\xe5\xae\xa2</a> <a href="/k" onclick="return 
channelSwitch('k');">\xe7\x9f\xa5\xe8\xaf\x86\xe5\xba\x93</a>\n <a href="/q" onclick="return channelSwitch('q');">\xe5\x8d\x9a\xe9\x97\xae</a>\n </div>\n <div class="search_block">\n <div class="index_btn">\n <input type="button" class="btn_so_index" onclick="Search();" value=" \xe6\x89\xbe\xe4\xb8\x80\xe4\xb8\x8b " />\n <span class="help_link"><a target="_blank" href="/help">\xe5\xb8\xae\xe5\x8a\xa9</a></span>\n </div>\n <input type="text" onkeydown="searchEnter(event);" class="input_index" name="w" id="w" />\n </div>\n </div>\n </div>\n <div class="footer">\n ©2004-2016 <a href="http://www.cnblogs.com">\xe5\x8d\x9a\xe5\xae\xa2\xe5\x9b\xad</a>\n </div>\n </center>\n</body>\n</html>\n'

- For comparison, here is the Python 2.7 version:

#python2.7

import urllib2

response = urllib2.urlopen("http://zzk.cnblogs.com/b")

print response.read()

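- As you can see, the Python 3.4 and Python 2.7 code differ: in Python 3, urllib2 was merged into urllib.request, and print is a function rather than a statement.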
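If one script has to run under both versions, a common pattern (a small sketch, not something from the original post) is to try the Python 3 import first and fall back to urllib2 when it is missing:

```python
try:
    # Python 3: urlopen lives in urllib.request
    from urllib.request import urlopen
except ImportError:
    # Python 2: the same function lives in urllib2
    from urllib2 import urlopen

# Either way, the rest of the script can simply call urlopen(url)
print(urlopen.__module__)
```

On Python 3 this prints `urllib.request`; on Python 2 it would print `urllib2`.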

----------@_@? A problem appears!----------------------------------------------------------------------

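- Spotting the problem: in the output above, the Chinese text is not displayed properly. It shows up as escape sequences such as \xe6\x89\xbe, because in Python 3 response.read() returns raw bytes (bytes), not decoded text (str).

- Solving it: handle the Chinese encoding by decoding those bytes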

--------------------------------------------------------------------------------------------------
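The bytes-versus-text distinction can be checked offline with a fragment copied from the output above (the page `<title>`); the variable names are only illustrative:

```python
# A fragment of the raw bytes printed above: the contents of <title>
raw = b'\xe6\x89\xbe\xe6\x89\xbe\xe7\x9c\x8b - \xe5\x8d\x9a\xe5\xae\xa2\xe5\x9b\xad'

# The page declares <meta charset="utf-8"/>, so decode the bytes as UTF-8
text = raw.decode('utf-8')

print(type(raw).__name__, type(text).__name__)  # bytes str
print(text)
```

The second line printed is the same title that appears in the decoded output below.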

- Handling the Chinese in the page source!!!

- Modify the code as follows:

#python3.4

import urllib.request

response = urllib.request.urlopen("http://zzk.cnblogs.com/b")

print(response.read().decode('UTF-8'))

- Run it; the output is:

C:\Python34\python.exe E:/pythone_workspace/mydemo/spider/demo.py <!DOCTYPE html>

<html>

<head>

<meta charset="utf-8"/>

<title>找找看 - 博客园</title>

<link rel="shortcut icon" href="/Content/Images/favicon.ico" type="image/x-icon"/>

<meta content="技术搜索,IT搜索,程序搜索,代码搜索,程序员搜索引擎" name="keywords" />

<meta content="面向程序员的专业搜索引擎。遇到技术问题怎么办,到博客园找找看..." name="description" />

<link type="text/css" href="/Content/Style.css" rel="stylesheet" />

<script src="http://common.cnblogs.com/script/jquery.js" type="text/javascript"></script>

<script src="/Scripts/Common.js" type="text/javascript"></script>

<script src="/Scripts/Home.js" type="text/javascript"></script>

</head>

<body>

<div class="top"> <div class="top_tabs">

<a href="http://www.cnblogs.com">« 博客园首页 </a>

</div>

<div id="span_userinfo" class="top_links">

</div>

</div>

<div style="clear: both">

</div>

<center>

<div id="main">

<div class="logo_index">

<a href="http://zzk.cnblogs.com">

<img alt="找找看logo" src="/images/logo.gif" /></a>

</div>

<div class="index_sozone">

<div class="index_tab">

<a href="/n" onclick="return channelSwitch('n');">新闻</a>

<a class="tab_selected" href="/b" onclick="return channelSwitch('b');">博客</a> <a href="/k" onclick="return channelSwitch('k');">知识库</a>

<a href="/q" onclick="return channelSwitch('q');">博问</a>

</div>

<div class="search_block">

<div class="index_btn">

<input type="button" class="btn_so_index" onclick="Search();" value=" 找一下 " />

<span class="help_link"><a target="_blank" href="/help">帮助</a></span>

</div>

<input type="text" onkeydown="searchEnter(event);" class="input_index" name="w" id="w" />

</div>

</div>

</div>

<div class="footer">

©2004-2016 <a href="http://www.cnblogs.com">博客园</a>

</div>

</center>

</body>

</html> Process finished with exit code 0

- Result: once the bytes are decoded, the Chinese in the page source displays correctly
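Hard-coding `'UTF-8'` works for this page because it declares `charset="utf-8"`, but other sites may use GBK or another encoding. A minimal sketch, assuming UTF-8 is an acceptable fallback, reads the charset from the response's Content-Type header instead; `fetch_html` is a hypothetical helper name, and the demo uses a `data:` URL only so it runs without network access:

```python
from urllib.request import urlopen


def fetch_html(url, default_charset="utf-8", timeout=10):
    """Download url and decode it with the charset the server declares.

    Falls back to default_charset (assumed UTF-8 here) when the
    Content-Type header carries no charset parameter.
    """
    with urlopen(url, timeout=timeout) as resp:
        raw = resp.read()  # always bytes in Python 3
        charset = resp.headers.get_content_charset() or default_charset
    return raw.decode(charset)


if __name__ == "__main__":
    # Offline check with a data: URL; for the real page you would call
    # fetch_html("http://zzk.cnblogs.com/b") instead.
    print(fetch_html("data:text/html;charset=utf-8,%E6%89%BE%E6%89%BE%E7%9C%8B"))
```

Servers do not always send a charset in the header; a fuller version might also look at the `<meta charset>` tag, which this sketch does not do.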

-----------@_@! Exploring a new Chinese encoding problem ----------------------------------------------------------

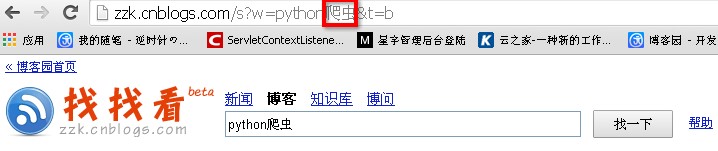

Question: "If the URL contains Chinese characters, how should we handle it?"

For example: url = "http://zzk.cnblogs.com/s?w=python爬虫&t=b"

-----------------------------------------------------------------------------------------------------

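- Next, let's solve the problem of Chinese characters in the URL!!!

(1) Test 1: keep the URL as-is and request it directly, without any processing

- Code example: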

#python3.4

import urllib.request

url = "http://zzk.cnblogs.com/s?w=python爬虫&t=b"

resp = urllib.request.urlopen(url)

print(resp.read().decode('UTF-8'))

- Output:

C:\Python34\python.exe E:/pythone_workspace/mydemo/spider/demo.py

Traceback (most recent call last):

File "E:/pythone_workspace/mydemo/spider/demo.py", line , in <module>

response = urllib.request.urlopen(url)

File "C:\Python34\lib\urllib\request.py", line , in urlopen

return opener.open(url, data, timeout)

File "C:\Python34\lib\urllib\request.py", line , in open

response = self._open(req, data)

File "C:\Python34\lib\urllib\request.py", line , in _open

'_open', req)

File "C:\Python34\lib\urllib\request.py", line , in _call_chain

result = func(*args)

File "C:\Python34\lib\urllib\request.py", line , in http_open

return self.do_open(http.client.HTTPConnection, req)

File "C:\Python34\lib\urllib\request.py", line , in do_open

h.request(req.get_method(), req.selector, req.data, headers)

File "C:\Python34\lib\http\client.py", line , in request

self._send_request(method, url, body, headers)

File "C:\Python34\lib\http\client.py", line , in _send_request

self.putrequest(method, url, **skips)

File "C:\Python34\lib\http\client.py", line , in putrequest

self._output(request.encode('ascii'))

UnicodeEncodeError: 'ascii' codec can't encode characters in position 15-16: ordinal not in range(128) Process finished with exit code

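As expected, it fails!!! The last frames show why: http.client encodes the request line with request.encode('ascii'), and the Chinese characters at positions 15-16 of that line fall outside the ASCII range.

(2) Test 2: percent-encode the Chinese part separately with urllib.parse.quote

- Code example: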
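Both the failure and the fix can be reproduced offline. The sketch below uses only `urllib.parse`; the `urlencode` call is an alternative to the string concatenation used in Test 2, not what the original post does:

```python
from urllib.parse import quote, unquote, urlencode

keyword = "python爬虫"

# http.client encodes the request line as ASCII, which is what fails in Test 1
try:
    keyword.encode("ascii")
except UnicodeEncodeError as exc:
    print("ascii fails:", exc.reason)

# quote() UTF-8-encodes the text and percent-escapes every non-ASCII byte
print(quote("爬虫"))  # %E7%88%AC%E8%99%AB

# urlencode() escapes every value of a whole query string in one call
query = urlencode([("w", keyword), ("t", "b")])
url = "http://zzk.cnblogs.com/s?" + query
print(url)  # http://zzk.cnblogs.com/s?w=python%E7%88%AC%E8%99%AB&t=b

# unquote() reverses it, e.g. for the pager links in the search results page
print(unquote("/s?w=python%e7%88%ac%e8%99%ab&t=b"))  # /s?w=python爬虫&t=b
```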

import urllib.request

import urllib.parse

url = "http://zzk.cnblogs.com/s?w=python" + urllib.parse.quote("爬虫") + "&t=b"

resp = urllib.request.urlopen(url)

print(resp.read().decode('utf-8'))

- Output:

C:\Python34\python.exe E:/pythone_workspace/mydemo/spider/demo.py

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8" />

<title>python爬虫-博客园找找看</title>

<link rel="shortcut icon" href="/Content/Images/favicon.ico" type="image/x-icon"/>

<link href="/Content/so.css?id=20140908" rel="stylesheet" type="text/css" />

<link href="/Content/jquery-ui-1.8.21.custom.css" rel="stylesheet" type="text/css" />

<script src="http://common.cnblogs.com/script/jquery.js" type="text/javascript"></script>

<script src="/Scripts/jquery-ui-1.8.11.min.js" type="text/javascript"></script>

<script src="/Scripts/Common.js" type="text/javascript"></script>

<script src="/Scripts/Search.js" type="text/javascript"></script>

<script src="/Scripts/jquery.ui.datepicker-zh-CN.js" type="text/javascript"></script>

</head>

<body>

<div class="top_bar">

<div class="top_tabs">

<a href="http://www.cnblogs.com">« 博客园首页 </a>

</div>

<div id="span_userinfo">

</div>

</div>

<div id="header"> <div id="headerMain">

<a id="logo" href="/"></a>

<div id="searchBox">

<div id="searchRangeList">

<ul>

<li><a href="/s?t=n" onclick="return channelSwitch('n');">新闻</a></li>

<li><a class="tab_selected" href="/s?t=b" onclick="return channelSwitch('b');">博客</a></li> <li><a href="/s?t=k" onclick="return channelSwitch('k');">知识库</a></li>

<li><a href="/s?t=q" onclick="return channelSwitch('q');">博问</a></li>

</ul>

</div>

<!--end: searchRangeList -->

<div class="seachInput">

<input type="text" onchange="ShowtFilter(this, false);" onkeypress="return searchEnter(event);"

value="python爬虫" name="w" id="w" maxlength="" title="博客园 找找看" class="txtSeach" />

<input type="button" value="找一下" class="btnSearch" onclick="Search();" />

<span class="help_link"><a target="_blank" href="/help">帮助</a></span>

<br />

</div>

<!--end: seachInput -->

</div>

<!--end: searchBox --> </div> <div style="clear: both">

</div>

<!--end: headerMain -->

<div id="searchInfo">

<span style="float: left; margin-left: 15px;"></span>博客园找找看,找到相关内容<b id="CountOfResults"></b>篇,用时132毫秒

</div>

<!--end: searchInfo -->

</div>

<!--end: header -->

<div id="main">

<div id="searchResult">

<div style="clear: both">

</div>

<div class="forflow"> <div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/hearzeus/p/5238867.html"><strong>Python 爬虫</strong>入门——小项目实战(自动私信博客园某篇博客下的评论人,随机发送一条笑话,完整代码在博文最后)</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

<strong>python, 爬虫</strong>, 之前写的都是针对<strong>爬虫</strong>过程中遇到问题... <strong>python</strong>代码如下: def getCo...通过关键特征告诉<strong>爬虫</strong>,已经遍历结束了。我用的特征代码如下: ...定时器 <strong>python</strong>定时器,代码示例: impor

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/hearzeus/" target="_blank">不剃头的一休哥</a>

</span><span class="searchItemInfo-publishDate">--</span>

<span class="searchItemInfo-good">推荐()</span>

<span class="searchItemInfo-comments">评论()</span>

<span class="searchItemInfo-views">浏览()</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/hearzeus/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/hearzeus/p/5151449.html"><strong>Python 爬虫</strong>入门(一)</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

<strong>python, 爬虫</strong>, 毕设是做<strong>爬虫</strong>相关的,本来想的是用j...太满意。之前听说<strong>Python</strong>这方面比较强,就想用<strong>Python</strong>...至此,一个简单的<strong>爬虫</strong>就完成了。之后是针对反<strong>爬虫</strong>的一些策略,比...a写,也写了几个<strong>爬虫</strong>,其中一个是爬网易云音乐的用户信息,爬了

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/hearzeus/" target="_blank">不剃头的一休哥</a>

</span><span class="searchItemInfo-publishDate">--</span>

<span class="searchItemInfo-good">推荐()</span>

<span class="searchItemInfo-comments">评论()</span>

<span class="searchItemInfo-views">浏览()</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/hearzeus/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/xueweihan/p/4592212.html">[<strong>Python</strong>]新手写<strong>爬虫</strong>全过程(已完成)</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

hool.cc/<strong>python</strong>/<strong>python</strong>-files-io...<strong>python, 爬虫</strong>,今天早上起来,第一件事情就是理一理今天...任务,写一个只用<strong>python</strong>字符串内建函数的<strong>爬虫</strong>,定义为v1...实主要的不是学习<strong>爬虫</strong>,而是依照这个需求锻炼下自己的编程能力,

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/xueweihan/" target="_blank">削微寒</a>

</span><span class="searchItemInfo-publishDate">--</span>

<span class="searchItemInfo-good">推荐()</span>

<span class="searchItemInfo-comments">评论()</span>

<span class="searchItemInfo-views">浏览()</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/xueweihan/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/hearzeus/p/5157016.html"><strong>Python 爬虫</strong>入门(二)—— IP代理使用</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

的代理。 在<strong>爬虫</strong>中,有些网站可能为了防止<strong>爬虫</strong>或者DDOS...<strong>python, 爬虫</strong>, 上一节,大概讲述了Python 爬...所以,我们可以用<strong>爬虫</strong>爬那么IP。用上一节的代码,完全可以做到...(;;)这样的。<strong>python</strong>中的for循环,in 表示X的取

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/hearzeus/" target="_blank">不剃头的一休哥</a>

</span><span class="searchItemInfo-publishDate">--</span>

<span class="searchItemInfo-good">推荐()</span>

<span class="searchItemInfo-comments">评论()</span>

<span class="searchItemInfo-views">浏览()</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/hearzeus/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/ruthon/p/4638262.html">《零基础写<strong>Python爬虫</strong>》系列技术文章整理收藏</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

<strong>Python</strong>,《零基础写<strong>Python爬虫</strong>》系列技术文章整理收... 1零基础写<strong>python爬虫</strong>之<strong>爬虫</strong>的定义及URL构成ht...ml 8零基础写<strong>python爬虫</strong>之<strong>爬虫</strong>编写全记录http:/...ml 9零基础写<strong>python爬虫</strong>之<strong>爬虫</strong>框架Scrapy安装配

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/ruthon/" target="_blank">豆芽ruthon</a>

</span><span class="searchItemInfo-publishDate">--</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/ruthon/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/wenjianmuran/p/5049966.html"><strong>Python爬虫</strong>入门案例:获取百词斩已学单词列表</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

记不住。我们来用<strong>Python</strong>来爬取这些信息,同时学习<strong>Python爬虫</strong>基础。 首先...<strong>Python</strong>, 案例, 百词斩是一款很不错的单词记忆APP,在学习过程中,它会记录你所学的每...n) 如果要在<strong>Python</strong>中解析json,我们需要json库。我们打印下前两页

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/wenjianmuran/" target="_blank">文剑木然</a>

</span><span class="searchItemInfo-publishDate">--</span>

<span class="searchItemInfo-good">推荐()</span>

<span class="searchItemInfo-comments">评论()</span>

<span class="searchItemInfo-views">浏览()</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/wenjianmuran/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/cs-player1/p/5169307.html"><strong>python爬虫</strong>之初体验</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

<strong>python, 爬虫</strong>,上网简单看了几篇博客自己试了试简单的<strong>爬虫</strong>哎呦喂很有感觉蛮好玩的 之前写博客 有点感觉是在写教程啊什么的写的很别扭 各种复制粘贴写得很不舒服 以后还是怎么舒服怎么写把每天的练习所得写上来就好了本来就是个菜鸟不断学习 不断debug就好 直接

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/cs-player1/" target="_blank">cs-player1</a>

</span><span class="searchItemInfo-publishDate">--</span>

<span class="searchItemInfo-good">推荐()</span>

<span class="searchItemInfo-comments">评论()</span>

<span class="searchItemInfo-views">浏览()</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/cs-player1/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/hearzeus/p/5226546.html"><strong>Python 爬虫</strong>入门(四)—— 验证码下篇(破解简单的验证码)</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

<strong>python, 爬虫</strong>, 年前写了验证码上篇,本来很早前就想写下篇来着,只是过年比较忙,还有就是验证码破解比较繁杂,方法不同,正确率也会有差...码(这里我用的是<strong>python</strong>的"PIL"图像处理库) a.)转为灰度图 PIL 在这方面也提供了极完备的支

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/hearzeus/" target="_blank">不剃头的一休哥</a>

</span><span class="searchItemInfo-publishDate">--</span>

<span class="searchItemInfo-good">推荐()</span>

<span class="searchItemInfo-comments">评论()</span>

<span class="searchItemInfo-views">浏览()</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/hearzeus/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/xin-xin/p/4297852.html">《<strong>Python爬虫</strong>学习系列教程》学习笔记</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

家的交流。 一、<strong>Python</strong>入门 . <strong>Python爬虫</strong>入门...一之综述 . <strong>Python爬虫</strong>入门二之<strong>爬虫</strong>基础了解 . ... <strong>Python爬虫</strong>入门七之正则表达式 二、<strong>Python</strong>实战 ...on进阶 . <strong>Python爬虫</strong>进阶一之<strong>爬虫</strong>框架Scrapy

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/xin-xin/" target="_blank">心_心</a>

</span><span class="searchItemInfo-publishDate">--</span>

<span class="searchItemInfo-good">推荐()</span>

<span class="searchItemInfo-comments">评论()</span>

<span class="searchItemInfo-views">浏览()</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/xin-xin/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/nishuihan/p/4754622.html">PHP, <strong>Python</strong>, Node.js 哪个比较适合写<strong>爬虫</strong>?</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

子,做一个简单的<strong>爬虫</strong>容易,但要做一个完备的<strong>爬虫</strong>挺难的。像我搭...path的类库/<strong>爬虫</strong>库后,就会发现此种方式虽然入门门槛低,但...荐采用一些现成的<strong>爬虫</strong>库,诸如xpath、多线程支持还是必须考...以考虑。、如果<strong>爬虫</strong>是涉及大规模网站爬取,效率、扩展性、可维

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/nishuihan/" target="_blank">技术宅小牛牛</a>

</span><span class="searchItemInfo-publishDate">--</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/nishuihan/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/nishuihan/p/4815930.html">PHP, <strong>Python</strong>, Node.js 哪个比较适合写<strong>爬虫</strong>?</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

子,做一个简单的<strong>爬虫</strong>容易,但要做一个完备的<strong>爬虫</strong>挺难的。像我搭...主要看你定义的“<strong>爬虫</strong>”干什么用。、如果是定向爬取几个页面,...path的类库/<strong>爬虫</strong>库后,就会发现此种方式虽然入门门槛低,但...荐采用一些现成的<strong>爬虫</strong>库,诸如xpath、多线程支持还是必须考

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/nishuihan/" target="_blank">技术宅小牛牛</a>

</span><span class="searchItemInfo-publishDate">--</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/nishuihan/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/rwxwsblog/p/4557123.html">安装<strong>python爬虫</strong>scrapy踩过的那些坑和编程外的思考</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

了一下开源的<strong>爬虫</strong>资料,看了许多对于开源<strong>爬虫</strong>的比较发现开源<strong>爬虫</strong>...没办法,只能升级<strong>python</strong>的版本了。 、升级<strong>python</strong>...s://www.<strong>python</strong>.org/ftp/<strong>python</strong>/...n 检查<strong>python</strong>版本 <strong>python</strong> --ve

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/rwxwsblog/" target="_blank">秋楓</a>

</span><span class="searchItemInfo-publishDate">--</span>

<span class="searchItemInfo-good">推荐()</span>

<span class="searchItemInfo-comments">评论()</span>

<span class="searchItemInfo-views">浏览()</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/rwxwsblog/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/maybe2030/p/4555382.html">[<strong>Python</strong>] 网络<strong>爬虫</strong>和正则表达式学习总结</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

有的网站为了防止<strong>爬虫</strong>,可能会拒绝<strong>爬虫</strong>的请求,这就需要我们来修...,正则表达式不是<strong>Python</strong>的语法,并不属于<strong>Python</strong>,其...\d" 2.2 <strong>Python</strong>的re模块 <strong>Python</strong>通过... 实例描述 <strong>python</strong> 匹配 "<strong>python</strong>".

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/maybe2030/" target="_blank">poll的笔记</a>

</span><span class="searchItemInfo-publishDate">--</span>

<span class="searchItemInfo-good">推荐()</span>

<span class="searchItemInfo-comments">评论()</span>

<span class="searchItemInfo-views">浏览()</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/maybe2030/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/mr-zys/p/5059451.html">一个简单的多线程<strong>Python爬虫</strong>(一)</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

一个简单的多线程<strong>Python爬虫</strong> 最近想要抓取[拉勾网](h...自己写一个简单的<strong>Python爬虫</strong>的想法。 本文中的部分链接.../<strong>python</strong>-threading-how-d.../<strong>python</strong>-threading-how-do-i-lock-a-thread) ## 一个<strong>爬虫</strong>

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/mr-zys/" target="_blank">mr_zys</a>

</span><span class="searchItemInfo-publishDate">--</span>

<span class="searchItemInfo-good">推荐()</span>

<span class="searchItemInfo-comments">评论()</span>

<span class="searchItemInfo-views">浏览()</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/mr-zys/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/jixin/p/5145813.html">自学<strong>Python</strong>十一 <strong>Python爬虫</strong>总结</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

Demo <strong>爬虫</strong>就靠一段落吧,更深入的<strong>爬虫</strong>框架以及htm...学习与尝试逐渐对<strong>python爬虫</strong>有了一些小小的心得,我们渐渐...尝试着去总结一下<strong>爬虫</strong>的共性,试着去写个helper类以避免重...。 参考:用<strong>python爬虫</strong>抓站的一些技巧总结 zz

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/jixin/" target="_blank">我的代码会飞</a>

</span><span class="searchItemInfo-publishDate">--</span>

<span class="searchItemInfo-good">推荐()</span>

<span class="searchItemInfo-comments">评论()</span>

<span class="searchItemInfo-views">浏览()</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/jixin/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/hearzeus/p/5162691.html"><strong>Python 爬虫</strong>入门(三)—— 寻找合适的爬取策略</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

<strong>python, 爬虫</strong>, 写<strong>爬虫</strong>之前,首先要明确爬取的数据。...怎么寻找一个好的<strong>爬虫</strong>策略。(代码仅供学习交流,切勿用作商业或...(这个也是我们用<strong>爬虫</strong>发请求的结果),如图所示 很庆...).顺便说一句,<strong>python</strong>有json解析模块,可以用。 下面附上蝉游记的<strong>爬虫</strong>

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/hearzeus/" target="_blank">不剃头的一休哥</a>

</span><span class="searchItemInfo-publishDate">--</span>

<span class="searchItemInfo-good">推荐()</span>

<span class="searchItemInfo-comments">评论()</span>

<span class="searchItemInfo-views">浏览()</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/hearzeus/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/ybjourney/p/5304501.html"><strong>python</strong>简单<strong>爬虫</strong></a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

<strong>爬虫</strong>真是一件有意思的事儿啊,之前写过<strong>爬虫</strong>,用的是urll...Soup实现简单<strong>爬虫</strong>,scrapy也有实现过。最近想更好的学...习<strong>爬虫</strong>,那么就尽可能的做记录吧。这篇博客就我今天的一个学习过...的语法规则,我在<strong>爬虫</strong>中常用的有: . 匹配任意字符(换

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/ybjourney/" target="_blank">oyabea</a>

</span><span class="searchItemInfo-publishDate">--</span>

<span class="searchItemInfo-good">推荐()</span>

<span class="searchItemInfo-comments">评论()</span>

<span class="searchItemInfo-views">浏览()</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/ybjourney/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/hippieZhou/p/4967075.html"><strong>Python</strong>带你轻松进行网页<strong>爬虫</strong></a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

,所以就打算自学<strong>Python</strong>。在还没有学它的时候就听说用它来进行网页<strong>爬虫</strong>....0这次的网络<strong>爬虫</strong>需求背景我打算延续DotNet开源大本营...例。.实战网页<strong>爬虫</strong>:2.1.获取城市列表:首先,我们需要获...行速度,那么可能<strong>Python</strong>还是挺适合的,毕竟可以通过它写更

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/hippiezhou/" target="_blank">hippiezhou</a>

</span><span class="searchItemInfo-publishDate">--</span>

<span class="searchItemInfo-good">推荐()</span>

<span class="searchItemInfo-comments">评论()</span>

<span class="searchItemInfo-views">浏览()</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/hippieZhou/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/mfryf/p/3695844.html">开发记录_自学<strong>Python</strong>写<strong>爬虫</strong>程序爬取csdn个人博客信息</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

.3_开工 据说<strong>Python</strong>并不难,看过了<strong>python</strong>的代码...lecd这 个半<strong>爬虫</strong>半网站的项目, 累积不少<strong>爬虫</strong>抓站的经验,... 某些网站反感<strong>爬虫</strong>的到访,于是对<strong>爬虫</strong>一律拒绝请求 ...模仿了一个自己的<strong>Python爬虫</strong>。 [<strong>python</strong>]

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/mfryf/" target="_blank">知识天地</a>

</span><span class="searchItemInfo-publishDate">--</span>

<span class="searchItemInfo-good">推荐()</span>

<span class="searchItemInfo-comments">评论()</span>

<span class="searchItemInfo-views">浏览()</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/mfryf/p/.html</span>

</div>

<!--end: searchURL -->

</div>

<div class="searchItem">

<h3 class="searchItemTitle">

<a target="_blank" href="http://www.cnblogs.com/coltfoal/archive/2012/10/06/2713348.html"><strong>Python</strong>天气预报采集器(网页<strong>爬虫</strong>)</a>

</h3>

<!--end: searchItemTitle -->

<span class="searchCon">

的。 补充上<strong>爬虫</strong>结果的截图: <strong>python</strong>的使...编程, <strong>Python</strong>, python是一门很强大的语言,在...以就算了。 <strong>爬虫</strong>简单说来包括两个步骤:获得网页文本、过滤...ml文本。 <strong>python</strong>在获取html方面十分方便,寥寥

</span>

<!--end: searchCon -->

<div class="searchItemInfo">

<span class="searchItemInfo-userName">

<a href="http://www.cnblogs.com/coltfoal/" target="_blank">coltfoal</a>

</span><span class="searchItemInfo-publishDate">--</span>

<span class="searchItemInfo-good">推荐()</span>

<span class="searchItemInfo-comments">评论()</span>

<span class="searchItemInfo-views">浏览()</span>

</div>

<div class="searchItemInfo">

<span class="searchURL">www.cnblogs.com/coltfoal/archive////.html</span>

</div>

<!--end: searchURL -->

</div>

<div id="paging_block"><div class="pager"><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=1" class="p_1 current" onclick="Return true;;buildPaging(1);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=2" class="p_2" onclick="Return true;;buildPaging(2);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=3" class="p_3" onclick="Return true;;buildPaging(3);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=4" class="p_4" onclick="Return true;;buildPaging(4);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=5" class="p_5" onclick="Return true;;buildPaging(5);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=6" class="p_6" onclick="Return true;;buildPaging(6);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=7" class="p_7" onclick="Return true;;buildPaging(7);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=8" class="p_8" onclick="Return true;;buildPaging(8);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=9" class="p_9" onclick="Return true;;buildPaging(9);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=10" class="p_10" onclick="Return true;;buildPaging(10);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=11" class="p_11" onclick="Return true;;buildPaging(11);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=12" class="p_12" onclick="Return true;;buildPaging(12);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=13" class="p_13" onclick="Return true;;buildPaging(13);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=14" class="p_14" onclick="Return true;;buildPaging(14);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=15" class="p_15" onclick="Return true;;buildPaging(15);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=16" class="p_16" onclick="Return true;;buildPaging(16);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=17" class="p_17" 
onclick="Return true;;buildPaging(17);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=18" class="p_18" onclick="Return true;;buildPaging(18);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=19" class="p_19" onclick="Return true;;buildPaging(19);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=20" class="p_20" onclick="Return true;;buildPaging(20);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=21" class="p_21" onclick="Return true;;buildPaging(21);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=22" class="p_22" onclick="Return true;;buildPaging(22);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=23" class="p_23" onclick="Return true;;buildPaging(23);return false;"></a>···<a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=75" class="p_75" onclick="Return true;;buildPaging(75);return false;"></a><a href="/s?w=python%e7%88%ac%e8%99%ab&t=b&p=2" onclick="Return true;;buildPaging(2);return false;">Next ></a></div></div><script type="text/javascript">var pagingBuider={"OnlyLinkText":false,"TotalCount":,"PageIndex":,"PageSize":,"ShowPageCount":,"SkipCount":,"UrlFormat":"/s?w=python%e7%88%ac%e8%99%ab&t=b&p={0}","OnlickJsFunc":"Return true;","FirstPageLink":"/s?w=python%e7%88%ac%e8%99%ab&t=b&p=1","AjaxUrl":"/","AjaxCallbak":null,"TopPagerId":"pager_top","IsRenderScript":true};function buildPaging(pageIndex){pagingBuider.PageIndex=pageIndex;$.ajax({url:pagingBuider.AjaxUrl,data:JSON.stringify(pagingBuider),type:'post',dataType:'text',contentType:'application/json; charset=utf-8',success:function (data) { $('#paging_block').html(data); var pagerTop=$('#pager_top');if(pageIndex>){$(pagerTop).html(data).show();}else{$(pagerTop).hide();}}});}</script> </div>

</div>

<div class="forflow" id="sidebar">

<div class="s_google">

用 <a href="javascript:void(0);" title="Google站内搜索" onclick="return google_search()">Google</a> 找一下<br/>

</div> <div style="clear: both;">

</div> <div style="clear: both;">

</div>

<div class="sideRightWidget">

<b>按浏览数筛选</b><br /> <ol id="viewsRange">

<li class="ui-selected"

><a href="javascript:void(0);" onclick="Views(0);redirect();">全部</a></li>

<li ><a href="javascript:void(0);" onclick="Views(200);redirect();">200以上</a></li>

<li ><a href="javascript:void(0);" onclick="Views(500);redirect();">500以上</a></li>

<li ><a href="javascript:void(0);" onclick="Views(1000);redirect();">1000以上</a></li>

</ol>

</div> <div style="clear: both;">

</div> <div class="sideRightWidget">

<b>按时间筛选</b><br />

<ol id="dateRange">

<li class="ui-selected"

><a href="javascript:void(0);" onclick="clearDate();dateRange(null);redirect();">全部</a></li>

<li ><a href="javascript:void(0);" onclick="dateRange('One-Week');redirect();">

一周内</a></li>

<li ><a href="javascript:void(0);" onclick="dateRange('One-Month');redirect();">

一月内</a></li>

<li ><a href="javascript:void(0);" onclick="dateRange('Three-Month');redirect();">

三月内</a></li>

<li ><a href="javascript:void(0);" onclick="dateRange('One-Year');redirect();">

一年内</a></li>

</ol>

<p id="datepicker">

自定义: <input type="text" id="dateMin"

class="datepicker"/>-<input type="text" id="dateMax" class="datepicker"

/>

</p>

</div> <div style="clear: both;">

</div>

<div class="sideRightWidget">

» 去“<a title="博问是博客园提供的问答系统" href="http://q.cnblogs.com/">博问</a>”问一下?

<br />

» 搜索“<a href="http://job.cnblogs.com/search/">招聘职位</a>”

<br />

» 我有<a href="http://space.cnblogs.com/forum/public">反馈或建议</a>

</div>

<div id="siderigt_ad">

<script type='text/javascript'>

var googletag = googletag || {};

googletag.cmd = googletag.cmd || [];

(function () {

var gads = document.createElement('script');

gads.async = true;

gads.type = 'text/javascript';

var useSSL = 'https:' == document.location.protocol;

gads.src = (useSSL ? 'https:' : 'http:') +

'//www.googletagservices.com/tag/js/gpt.js';

var node = document.getElementsByTagName('script')[];

node.parentNode.insertBefore(gads, node);

})();

</script>

<script type='text/javascript'>

googletag.cmd.push(function () {

googletag.defineSlot('/1090369/cnblogs_zzk_Z1', [, ], 'div-gpt-ad-1410172170550-0').addService(googletag.pubads());

googletag.pubads().enableSingleRequest();

googletag.enableServices();

});

</script>

<!-- cnblogs_zzk_Z1 -->

<div id='div-gpt-ad-1410172170550-0' style='width:300px; height:250px;'>

<script type='text/javascript'>

googletag.cmd.push(function () { googletag.display('div-gpt-ad-1410172170550-0'); });

</script>

</div>

</div>

</div>

</div>

<div style="clear: both;">

</div> <div id="footer">

© - <a title="开发者的网上家园" href="http://www.cnblogs.com">博客园</a>

</div>

<script type="text/javascript"> var _gaq = _gaq || [];

_gaq.push(['_setAccount', 'UA-476124-10']);

_gaq.push(['_trackPageview']); (function () {

var ga = document.createElement('script'); ga.type = 'text/javascript'; ga.async = true;

ga.src = ('https:' == document.location.protocol ? 'https://ssl' : 'http://www') + '.google-analytics.com/ga.js';

var s = document.getElementsByTagName('script')[]; s.parentNode.insertBefore(ga, s);

})(); </script> <!--end: footer -->

</body>

</html>

Process finished with exit code

Run result

- Result: once the Chinese part of the URL is encoded on its own, the page at that URL is fetched normally.
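The approach that worked, encoding only the Chinese part, can be sketched like this. It assumes the same search URL as above and only builds the URL string, so nothing is fetched; the variable names are illustrative.

```python
import urllib.parse

# Base URL and query parameters taken from the search used in this post
base = "http://zzk.cnblogs.com/s?w="
keyword = "python爬虫"

# Percent-encode only the keyword: "python" stays as-is,
# each Chinese character becomes its UTF-8 bytes in %XX form
encoded = urllib.parse.quote(keyword)
url = base + encoded + "&t=b"

print(encoded)  # python%E7%88%AC%E8%99%AB
print(url)      # http://zzk.cnblogs.com/s?w=python%E7%88%AC%E8%99%AB&t=b

# unquote() reverses the encoding
assert urllib.parse.unquote(encoded) == keyword
```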

------@_@! Another new question-----------------------------------------------------------

- Question: what if the Chinese and English parts of the URL are encoded together? Can the page still be fetched?

----------------------------------------------------------------------------------------

(3) So here comes Test 3! Test 3: encode the Chinese and English parts of the URL together

- Code example:

#python3.4

import urllib.request

import urllib.parse

url = urllib.parse.quote("http://zzk.cnblogs.com/s?w=python爬虫&t=b")

resp = urllib.request.urlopen(url)

print(resp.read().decode('utf-8'))

- Run result:

C:\Python34\python.exe E:/pythone_workspace/mydemo/spider/demo.py

Traceback (most recent call last):

File "E:/pythone_workspace/mydemo/spider/demo.py", line , in <module>

resp = urllib.request.urlopen(url)

File "C:\Python34\lib\urllib\request.py", line , in urlopen

return opener.open(url, data, timeout)

File "C:\Python34\lib\urllib\request.py", line , in open

req = Request(fullurl, data)

File "C:\Python34\lib\urllib\request.py", line , in __init__

self.full_url = url

File "C:\Python34\lib\urllib\request.py", line , in full_url

self._parse()

File "C:\Python34\lib\urllib\request.py", line , in _parse

raise ValueError("unknown url type: %r" % self.full_url)

ValueError: unknown url type: 'http%3A//zzk.cnblogs.com/s%3Fw%3Dpython%E7%88%AC%E8%99%AB%26t%3Db'

Process finished with exit code

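- Result: ValueError! The page could not be fetched. With its default arguments, quote() treats only "/" as safe, so besides the Chinese characters it also escapes the ":" after "http" (to %3A) and the "?", "=" and "&" of the query string. urlopen() then cannot recognize the scheme, hence "unknown url type".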
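The failure can be reproduced offline with the sketch below. Creating a urllib.request.Request runs the same URL check that urlopen() performs (note the Request(fullurl, data) frame in the traceback), so no network access is needed; the variable names are illustrative.

```python
import urllib.parse
import urllib.request

raw = "http://zzk.cnblogs.com/s?w=python爬虫&t=b"

# Default safe="/": ':', '?', '=' and '&' are escaped along with the Chinese characters
bad = urllib.parse.quote(raw)
print(bad)
# http%3A//zzk.cnblogs.com/s%3Fw%3Dpython%E7%88%AC%E8%99%AB%26t%3Db

error = None
try:
    # Request() parses the URL as soon as it is created, before any connection is made;
    # with no literal ':' left, it finds no scheme and raises ValueError
    urllib.request.Request(bad)
except ValueError as exc:
    error = exc
    print("ValueError:", exc)
```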

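- Putting Tests 1, 2 and 3 together gives the following:

(1) In Python 3.4, if a URL contains Chinese characters, they can be handled with urllib.parse.quote("爬虫").

(2) The Chinese part of the URL has to be encoded on its own; passing the whole URL, Chinese and English together, to quote() with its default arguments does not work.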
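Going a step beyond the three tests, the standard library offers two ways to encode a whole URL in one pass. The sketch below assumes the same search URL as above: either tell quote() which delimiter characters to leave alone through its safe parameter, or build the query string with urllib.parse.urlencode(). A list of pairs is used rather than a dict so the parameter order stays fixed on Python 3.4, where dicts do not preserve insertion order.

```python
import urllib.parse

raw = "http://zzk.cnblogs.com/s?w=python爬虫&t=b"

# Option 1: keep the URL delimiters unescaped, percent-encode everything else
url1 = urllib.parse.quote(raw, safe=":/?=&")

# Option 2: encode each key and value, then join them with '&'
query = urllib.parse.urlencode([("w", "python爬虫"), ("t", "b")])
url2 = "http://zzk.cnblogs.com/s?" + query

print(url1)
print(url2)
# Both print http://zzk.cnblogs.com/s?w=python%E7%88%AC%E8%99%AB&t=b
```

Option 1 leaves "&" and "=" unescaped everywhere, so it would break if a query value itself contained one of them; urlencode() escapes those characters inside values, which makes it the safer choice for query strings.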

- Tip: to find out which parameters a function takes, call help() on it

#python3.4

import urllib.request

help(urllib.request.urlopen)

- Running the code above prints the following to the console:

C:\Python34\python.exe E:/pythone_workspace/mydemo/spider/demo.py

Help on function urlopen in module urllib.request:

urlopen(url, data=None, timeout=<object object at 0x00A50490>, *, cafile=None, capath=None, cadefault=False, context=None)

Process finished with exit code
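When only the parameter list is needed, inspect.signature() returns it as an object you can iterate over, without the rest of the help() text. The exact parameters depend on the Python version (cafile, capath and cadefault, for example, have been deprecated since Python 3.6 and are gone from newer releases), so the output will not always match the 3.4 listing above.

```python
import inspect
import urllib.request

sig = inspect.signature(urllib.request.urlopen)
print(sig)

# Walk the parameters: name, kind and default value ("-" if there is none)
for name, param in sig.parameters.items():
    default = "-" if param.default is inspect.Parameter.empty else param.default
    print(name, param.kind, default)
```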

@_@)Y That's all for this post. To be continued~
