【转载】 日内瓦大学 & NeurIPS 2020 | 在强化学习中动态分配有限的内存资源

原文地址:

https://hub.baai.ac.cn/view/4029

========================================================

【论文标题】Dynamic allocation of limited memory resources in reinforcement learning

【作者团队】Nisheet Patel, Luigi Acerbi, Alexandre Pouget

【发表时间】2020/11/12

【论文链接】https://proceedings.neurips.cc//paper/2020/file/c4fac8fb3c9e17a2f4553a001f631975-Paper.pdf

【论文代码】https://github.com/nisheetpatel/DynamicResourceAllocator

【推荐理由】本文收录于NeurIPS 2020,来自日内瓦大学的研究人员研究强化学习与神经科学两个方面,提出了动态框架来对资源进行分配。

生物大脑固有的处理和存储信息的能力受到限制,但是仍然能够轻松地解决复杂的任务。

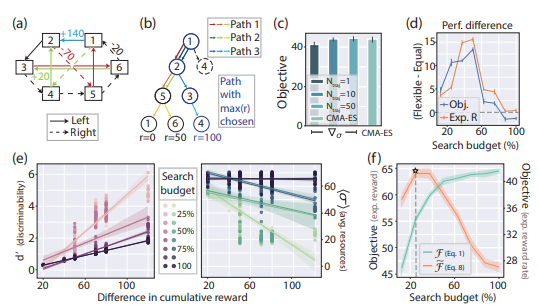

在本文中,研究人员提出了动态资源分配器(DRA),将其应用于强化学习中的两个标准任务和一个基于模型的计划任务,

发现它将更多资源分配给对内存有更高影响的项目。

此外,DRA从更高的资源预算开始学习时比为更好地完成任务而分配的学习速度要快,

这可以解释为什么生物大脑的额叶皮层区域在适应较低的渐近活动水平之前似乎更多地参与了学习的早期阶段。

本文的工作为学习如何将昂贵的资源分配给不确定的内存集合以适应环境变化的方式提供了一个规范性的解决方案。

代码地址:

https://github.com/nisheetpatel/DynamicResourceAllocator

======================================================

论文官方地址:

https://archive-ouverte.unige.ch/unige:149081

============================================

论文评审意见:

https://proceedings.neurips.cc/paper/2020/file/c4fac8fb3c9e17a2f4553a001f631975-MetaReview.html

Dynamic allocation of limited memory resources in reinforcement learning

Meta Review

This paper nicely bridges between neuroscience and RL, and considers the important topic of limited memory resources in RL agents. The topic is well-suited for NeurIPS (R2) as it has broader applicability toward e.g. model-based RL and planning, although this is not extensively discussed or shown in the paper itself. All reviewers agreed that it is well-motivated and written (R1, R2, R3, R4), although R3 did ask for a bit more explanation on some methodological details. It is also appropriately situated with respect to related work (R1, R2, R3) although R2 suggests a separate related works section, and R4 wanted to see more discussion of work outside of neuroscience, focused on optimizing RL with limited capacity. R1 pointed out that perhaps there’s a bit of confusion between memory precision and use of memory resources, as the former is more accurate for agents, the latter perhaps for real brains - ie more precise representations require more resources to encode in the brain, but this seems to be a minor point. R1 also asked to include standard baseline implementations to test for issues such how their model scales compared to other methods. R4 was the least positive, expressing that the contribution to AI is unclear, that the tasks are too easy and wouldn’t be expected to challenge memory resources. Also the connection to neuroscience is a bit tenuous as the implementation doesn’t seem particularly biologically plausible. In the rebuttal, authors argue that this approach will allow them to generate testable predictions regarding neural representations during learning, some of which are already included in the discussion. I find this adequate, but these predictions should maybe be foregrounded more so as to make clearer the neuroscientific contribution. I’m overall quite impressed with how responsive the authors were in their response, including almost all of the requested analyses. I think the final paper, with all of these changes incorporated, is likely to be much stronger, and so I recommend accept.

======================================

论文的视频讲解:(外网)

https://www.youtube.com/watch?v=1MJJkJd_umA

===================================

【转载】 日内瓦大学 & NeurIPS 2020 | 在强化学习中动态分配有限的内存资源的更多相关文章

- 深度强化学习中稀疏奖励问题Sparse Reward

Sparse Reward 推荐资料 <深度强化学习中稀疏奖励问题研究综述>1 李宏毅深度强化学习Sparse Reward4 强化学习算法在被引入深度神经网络后,对大量样本的需求更加 ...

- 强化学习中的无模型 基于值函数的 Q-Learning 和 Sarsa 学习

强化学习基础: 注: 在强化学习中 奖励函数和状态转移函数都是未知的,之所以有已知模型的强化学习解法是指使用采样估计的方式估计出奖励函数和状态转移函数,然后将强化学习问题转换为可以使用动态规划求解的 ...

- 强化学习中REIINFORCE算法和AC算法在算法理论和实际代码设计中的区别

背景就不介绍了,REINFORCE算法和AC算法是强化学习中基于策略这类的基础算法,这两个算法的算法描述(伪代码)参见Sutton的reinforcement introduction(2nd). A ...

- SpiningUP 强化学习 中文文档

2020 OpenAI 全面拥抱PyTorch, 全新版强化学习教程已发布. 全网第一个中文译本新鲜出炉:http://studyai.com/course/detail/ba8e572a 个人认为 ...

- 强化学习中的经验回放(The Experience Replay in Reinforcement Learning)

一.Play it again: reactivation of waking experience and memory(Trends in Neurosciences 2010) SWR发放模式不 ...

- 【转载】 “强化学习之父”萨顿:预测学习马上要火,AI将帮我们理解人类意识

原文地址: https://yq.aliyun.com/articles/400366 本文来自AI新媒体量子位(QbitAI) ------------------------------- ...

- (转) 深度强化学习综述:从AlphaGo背后的力量到学习资源分享(附论文)

本文转自:http://mp.weixin.qq.com/s/aAHbybdbs_GtY8OyU6h5WA 专题 | 深度强化学习综述:从AlphaGo背后的力量到学习资源分享(附论文) 原创 201 ...

- temporal credit assignment in reinforcement learning 【强化学习 经典论文】

Sutton 出版论文的主页: http://incompleteideas.net/publications.html Phd 论文: temporal credit assignment i ...

- ICML 2018 | 从强化学习到生成模型:40篇值得一读的论文

https://blog.csdn.net/y80gDg1/article/details/81463731 感谢阅读腾讯AI Lab微信号第34篇文章.当地时间 7 月 10-15 日,第 35 届 ...

- 【强化学习】1-1-2 “探索”(Exploration)还是“ 利用”(Exploitation)都要“面向目标”(Goal-Direct)

title: [强化学习]1-1-2 "探索"(Exploration)还是" 利用"(Exploitation)都要"面向目标"(Goal ...

随机推荐

- .net framework 使用Apollo 配置中心

参照了:https://www.cnblogs.com/xichji/p/11324893.html Apollo默认有一个"SampleApp"应用,"DEV" ...

- 架构与思维:了解Http 和 Https的区别(图文详解)

1 介绍 随着 HTTPS 的不断普及和使用成本的下降,现阶段大部分的系统都已经开始用上 HTTPS 协议. HTTPS 与 HTTP 相比, 主打的就是安全概念,相关的知识如 SSL .非对称加密. ...

- word文档生成视频,自动配音、背景音乐、自动字幕,另类创作工具

简介 不同于别的视频创作工具,这个工具创作视频只需要在word文档中打字,插入图片即可.完事后就能获得一个带有配音.字幕.背景音乐.视频特效滤镜的优美作品. 这种不要门槛,没有技术难度的视频创作工具, ...

- SOP页面跳转设计 RAS AES加密算法应用跨服务免登陆接口设计

SOP页面跳转设计 RAS AES加密算法应用跨服务免登陆接口设计 SOP,是 Standard Operating Procedure三个单词中首字母的大写 ,即标准作业程序,指将某一事件的标准操作 ...

- k8s使用rbd作为存储

k8s使用rbd作为存储 如果需要使用rbd作为后端存储的话,需要先安装ceph-common 1. ceph集群创建rbd 需要提前在ceph集群上创建pool,然后创建image [root@ce ...

- 看李沐的 ViT 串讲

ViT 概括 论文题目:AN IMAGE IS WORTH 16X16 WORDS: TRANSFORMERS FOR IMAGE RECOGNITION AT SCALE 论文地址:https:// ...

- 记录一次学习mongodb的20个常用语句

// 查询当前数据库 db // // 查看所有数据库 show dbs// 创建数据库 use db_name// 删除数据库 db.dropDatabase()// 创建集合 db.createC ...

- SNAT,DNAT以及REDIRECT转发详解

最近负责的其中一个项目的服务器集群出现了点网络方面的问题,在处理过程当中又涉及到了防火墙相关的知识和命令,想着有一段时间没有复习这部分内容了,于是借着此次机会复写了下顺便将本次复习的一些内容以博客的形 ...

- P9576 题解

赛时没仔细想,赛后才发现并不难. 将 \(l,r\) 与 \(l',r'\) 是否相交分开讨论. 假若不相交,那么 \(l',r' < l\) 或者 \(l',r' > r\) 并且 \( ...

- 用python处理html代码的转义与还原-转

本篇博客来源: 用python处理html代码的转义与还原 'tag>aaa</tag> # 转义还原 str_out = html.unescape(str_out) print( ...