5 Things You Should Know About the New Maxwell GPU Architecture

The introduction this week of NVIDIA’s first-generation “Maxwell” GPUs is a very exciting moment for GPU computing. These first Maxwell products, such as the GeForce GTX 750 Ti, are based on the GM107 GPU and are designed for use in low-power environments such as notebooks and small form factor computers. What is exciting about this announcement for HPC and other GPU computing developers is the great leap in energy efficiency that Maxwell provides: nearly twice that of the Kepler GPU architecture.

This post will tell you five things that you need to know about Maxwell as a GPU computing programmer, including high-level benefits of the architecture, specifics of the new Maxwell multiprocessor, guidance on tuning and pointers to more resources.

1. The Heart of Maxwell: More Efficient Multiprocessors

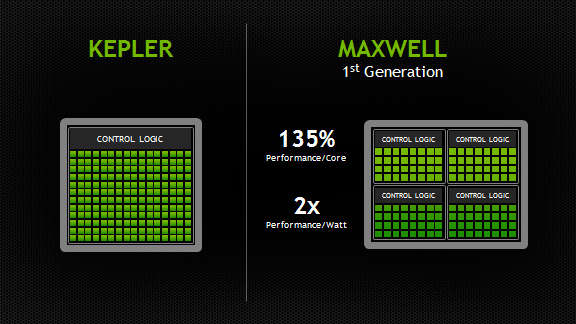

Maxwell introduces an all-new design for the Streaming Multiprocessor (SM) that dramatically improves power efficiency. Although the Kepler SMX design was extremely efficient for its generation, through its development NVIDIA’s GPU architects saw an opportunity for another big leap forward in architectural efficiency; the Maxwell SM is the realization of that vision. Improvements to control logic partitioning, workload balancing, clock-gating granularity, instruction scheduling, number of instructions issued per clock cycle, and many other enhancements allow the Maxwell SM (also called “SMM”) to far exceed Kepler SMX efficiency. The new Maxwell SM architecture enabled us to increase the number of SMs to five in GM107, compared to two in GK107, with only a 25% increase in die area.

Improved Instruction Scheduling

The number of CUDA Cores per SM has been reduced to a power of two (128 per SMM, down from 192 per SMX). However, with Maxwell’s improved execution efficiency, performance per SM is usually within 10% of Kepler performance, and the improved area efficiency of the SM means that CUDA Cores per GPU will be substantially higher than in comparable Fermi or Kepler chips. The Maxwell SM retains the same number of instruction issue slots per clock and reduces arithmetic latencies compared to the Kepler design.

As with SMX, each SMM has four warp schedulers, but unlike SMX, all core SMM functional units are assigned to a particular scheduler, with no shared units. The power-of-two number of CUDA Cores per partition simplifies scheduling, as each of SMM’s warp schedulers issues to a dedicated set of CUDA Cores equal to the warp width. Each warp scheduler still has the flexibility to dual-issue (such as issuing a math operation to a CUDA Core in the same cycle as a memory operation to a load/store unit), but single-issue is now sufficient to fully utilize all CUDA Cores.

Increased Occupancy for Existing Code

In terms of CUDA compute capability, Maxwell’s SM is CC 5.0. SMM is similar in many respects to the Kepler architecture’s SMX, with key enhancements geared toward improving efficiency without requiring significant increases in available parallelism per SM from the application. The register file size and the maximum number of concurrent warps in SMM are the same as in SMX (64K 32-bit registers and 64 warps, respectively), as is the maximum number of registers per thread (255). However, the maximum number of active thread blocks per multiprocessor has been doubled over SMX to 32, which should result in an automatic occupancy improvement for kernels that use small thread blocks of 64 or fewer threads (assuming available registers and shared memory are not the occupancy limiter). Table 1 provides a comparison between key characteristics of Maxwell GM107 and its predecessor Kepler GK107.
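
The occupancy effect of the higher block limit can be worked through with a little host-side arithmetic. The following is a minimal sketch, not a full occupancy calculator: the `SmLimits` struct and the `theoreticalOccupancy` function are illustrative names, the limits are the ones quoted above, and registers and shared memory are assumed not to be the limiter.

```cuda
#include <algorithm>

// Per-SM limits quoted in the text. The struct and names are illustrative.
struct SmLimits {
    int maxWarps;   // maximum concurrent warps per SM
    int maxBlocks;  // maximum active thread blocks per SM
};

constexpr SmLimits kSmx{64, 16};  // Kepler SMX
constexpr SmLimits kSmm{64, 32};  // Maxwell SMM

// Theoretical occupancy = resident warps / maximum warps.
// Assumption: registers and shared memory are not the limiter.
inline double theoreticalOccupancy(const SmLimits& sm, int threadsPerBlock) {
    const int warpSize = 32;
    int warpsPerBlock = (threadsPerBlock + warpSize - 1) / warpSize;
    int blocksByWarps = sm.maxWarps / warpsPerBlock;          // limit from the warp count
    int residentBlocks = std::min(sm.maxBlocks, blocksByWarps);  // limit from the block count
    return static_cast<double>(residentBlocks * warpsPerBlock) / sm.maxWarps;
}
```

Under these assumptions, a kernel launched with 64-thread blocks reaches 50% theoretical occupancy on SMX (16 blocks of 2 warps) and 100% on SMM (32 blocks of 2 warps). For real kernels, the CUDA runtime's `cudaOccupancyMaxActiveBlocksPerMultiprocessor` accounts for register and shared memory usage as well.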

Reduced Arithmetic Instruction Latency

Another major improvement of SMM is that dependent arithmetic instruction latencies have been significantly reduced. Because occupancy (which translates to available warp-level parallelism) is the same or better on SMM than on SMX, these reduced latencies improve utilization and throughput.

Table 1. Key characteristics of Kepler GK107 and Maxwell GM107.

| GPU | GK107 (Kepler) | GM107 (Maxwell) |
| --- | --- | --- |
| CUDA Cores | 384 | 640 |
| Base Clock | 1058 MHz | 1020 MHz |
| GPU Boost Clock | N/A | 1085 MHz |
| GFLOP/s | 812.5 | 1305.6 |
| Compute Capability | 3.0 | 5.0 |
| Shared Memory / SM | 16 KB / 48 KB | 64 KB |
| Register File Size / SM | 256 KB | 256 KB |
| Active Blocks / SM | 16 | 32 |
| Memory Clock | 5000 MHz | 5400 MHz |
| Memory Bandwidth | 80 GB/s | 86.4 GB/s |
| L2 Cache Size | 256 KB | 2048 KB |
| TDP | 64 W | 60 W |
| Transistors | 1.3 Billion | 1.87 Billion |
| Die Size | 118 mm² | 148 mm² |
| Manufacturing Process | 28 nm | 28 nm |
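
The GFLOP/s row can be reproduced from the CUDA Core count and the base clock. The helper below is a small sketch of that arithmetic; `peakGflops` is an illustrative name, and it assumes that each CUDA Core retires one single-precision fused multiply-add (counted as two floating-point operations) per clock.

```cuda
// Peak single-precision GFLOP/s from core count and clock.
// Assumption: one fused multiply-add (2 FLOPs) per CUDA Core per clock.
inline double peakGflops(int cudaCores, double clockMHz) {
    const double flopsPerCorePerClock = 2.0;
    // cores * FLOPs/clock * MHz gives MFLOP/s; divide by 1000 for GFLOP/s.
    return cudaCores * flopsPerCorePerClock * clockMHz / 1000.0;
}
```

With the base clocks from the table, `peakGflops(384, 1058.0)` gives roughly 812.5 for GK107 and `peakGflops(640, 1020.0)` gives 1305.6 for GM107.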

2. Larger, Dedicated Shared Memory

A significant improvement in SMM is that it provides 64 KB of dedicated shared memory per SM, unlike Fermi and Kepler, which partitioned the 64 KB of memory between L1 cache and shared memory. The per-thread-block limit remains 48 KB on Maxwell, but the increase in total available shared memory can lead to occupancy improvements. Dedicated shared memory is made possible in Maxwell by combining the functionality of the L1 and texture caches into a single unit.
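
To see how the larger pool can raise occupancy even though the per-block limit is unchanged, consider how many blocks fit on one SM when shared memory is the only limiter. This is a rough sketch: `blocksLimitedBySharedMem` is an illustrative helper, and it ignores allocation granularity and every other resource limit.

```cuda
#include <cstddef>

constexpr std::size_t kKB = 1024;
constexpr std::size_t kMaxSharedPerBlock = 48 * kKB;  // per-block limit quoted in the text

// Number of thread blocks that fit on one SM if shared memory is the only limiter.
// Returns 0 when a single block requests more than the per-block limit. It also
// returns 0 for a request of 0 bytes (avoiding a division by zero); such a kernel
// is not limited by shared memory at all.
inline int blocksLimitedBySharedMem(std::size_t sharedPerSM, std::size_t sharedPerBlock) {
    if (sharedPerBlock == 0 || sharedPerBlock > kMaxSharedPerBlock) return 0;
    return static_cast<int>(sharedPerSM / sharedPerBlock);
}
```

For a kernel that uses 16 KB of shared memory per block, this gives 3 resident blocks with Kepler's 48 KB configuration and 4 with Maxwell's 64 KB; a block that needs the full 48 KB still fits only once per SM on either architecture.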

3. Fast Shared Memory Atomics

Maxwell provides native shared memory atomic operations for 32-bit integers and native shared memory 32-bit and 64-bit compare-and-swap (CAS), which can be used to implement other atomic functions. In contrast, the Fermi and Kepler architectures implemented shared memory atomics using a lock/update/unlock pattern that could be expensive in the presence of high contention for updates to particular locations in shared memory.
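
A histogram is a typical case of contended shared memory updates. The sketch below is an illustrative example rather than code from the tuning guide: the kernel name `histogram256`, the host helper `histogramOnGpu`, the 256-bin layout, and the launch configuration are all assumptions chosen for brevity.

```cuda
#include <cuda_runtime.h>
#include <cstddef>

constexpr int kNumBins = 256;  // one bin per byte value (illustrative choice)

// Each block accumulates a private histogram in shared memory using 32-bit
// integer atomics, then merges it into the global histogram.
__global__ void histogram256(const unsigned char* data, size_t n, unsigned int* bins) {
    __shared__ unsigned int localBins[kNumBins];
    for (int i = threadIdx.x; i < kNumBins; i += blockDim.x) localBins[i] = 0;
    __syncthreads();

    // Grid-stride loop over the input.
    size_t stride = static_cast<size_t>(blockDim.x) * gridDim.x;
    for (size_t i = static_cast<size_t>(blockIdx.x) * blockDim.x + threadIdx.x; i < n; i += stride)
        atomicAdd(&localBins[data[i]], 1u);  // shared memory atomic
    __syncthreads();

    for (int i = threadIdx.x; i < kNumBins; i += blockDim.x)
        atomicAdd(&bins[i], localBins[i]);   // global atomic, once per bin per block
}

// Hypothetical host helper: copies the input to the device, runs the kernel,
// and copies the kNumBins counters back into hostBins.
cudaError_t histogramOnGpu(const unsigned char* hostData, size_t n, unsigned int* hostBins) {
    unsigned char* dData = nullptr;
    unsigned int* dBins = nullptr;
    const size_t binBytes = kNumBins * sizeof(unsigned int);

    cudaError_t err = cudaMalloc(&dData, n);
    if (err == cudaSuccess) err = cudaMalloc(&dBins, binBytes);
    if (err == cudaSuccess) err = cudaMemcpy(dData, hostData, n, cudaMemcpyHostToDevice);
    if (err == cudaSuccess) err = cudaMemset(dBins, 0, binBytes);
    if (err == cudaSuccess) {
        histogram256<<<64, 256>>>(dData, n, dBins);  // arbitrary launch configuration
        err = cudaGetLastError();
    }
    if (err == cudaSuccess) err = cudaMemcpy(hostBins, dBins, binBytes, cudaMemcpyDeviceToHost);

    cudaFree(dData);
    cudaFree(dBins);
    return err;
}
```

The same source compiles for Fermi and Kepler; the difference described above lies in how the shared memory `atomicAdd` is carried out by the hardware, so inputs that concentrate on a few bins are where Maxwell's native atomics should matter most.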

4. Support for Dynamic Parallelism

Kepler GK110 introduced a new architectural feature called Dynamic Parallelism, which allows the GPU to create additional work for itself. A programming model enhancement leveraging this feature was introduced in CUDA 5.0 to enable threads running on GK110 to launch additional kernels onto the same GPU.

SMM brings Dynamic Parallelism into the mainstream by supporting it across the product line, even in lower-power chips such as GM107. This will benefit developers, because it means that applications will no longer need special-case algorithm implementations for high-end GPUs that differ from those usable in more power-constrained environments.
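
As a rough illustration of what a device-side launch looks like, the sketch below has each parent thread launch a child grid sized to one variable-length segment of an array. The kernel and helper names (`childScale`, `parentLaunch`, `scaleSegmentsOnGpu`) and the segment layout are hypothetical. It assumes compilation with relocatable device code and the device runtime library, for example `nvcc -arch=sm_50 -rdc=true example.cu -lcudadevrt`.

```cuda
#include <cuda_runtime.h>
#include <cstddef>

// Child kernel: scales one segment of the array.
__global__ void childScale(float* data, int n, float factor) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] *= factor;
}

// Parent kernel: one thread per segment; each thread launches a child grid
// sized to its own segment. segOffsets has numSegs + 1 entries.
__global__ void parentLaunch(float* data, const int* segOffsets, int numSegs, float factor) {
    int s = blockIdx.x * blockDim.x + threadIdx.x;
    if (s >= numSegs) return;
    int begin = segOffsets[s];
    int len = segOffsets[s + 1] - begin;
    if (len > 0)
        childScale<<<(len + 127) / 128, 128>>>(data + begin, len, factor);  // device-side launch
}

// Hypothetical host helper: uploads the data, runs the parent kernel, downloads the result.
cudaError_t scaleSegmentsOnGpu(float* hostData, int n, const int* hostOffsets,
                               int numSegs, float factor) {
    float* dData = nullptr;
    int* dOffsets = nullptr;
    const size_t dataBytes = static_cast<size_t>(n) * sizeof(float);
    const size_t offBytes = static_cast<size_t>(numSegs + 1) * sizeof(int);

    cudaError_t err = cudaMalloc(&dData, dataBytes);
    if (err == cudaSuccess) err = cudaMalloc(&dOffsets, offBytes);
    if (err == cudaSuccess) err = cudaMemcpy(dData, hostData, dataBytes, cudaMemcpyHostToDevice);
    if (err == cudaSuccess) err = cudaMemcpy(dOffsets, hostOffsets, offBytes, cudaMemcpyHostToDevice);
    if (err == cudaSuccess) {
        parentLaunch<<<(numSegs + 127) / 128, 128>>>(dData, dOffsets, numSegs, factor);
        err = cudaGetLastError();
    }
    // A parent grid is not complete until all of its child grids have completed,
    // so a host-side synchronize covers the children too.
    if (err == cudaSuccess) err = cudaDeviceSynchronize();
    if (err == cudaSuccess) err = cudaMemcpy(hostData, dData, dataBytes, cudaMemcpyDeviceToHost);

    cudaFree(dData);
    cudaFree(dOffsets);
    return err;
}
```

Because GM107 supports Dynamic Parallelism, a code path like this one does not need a separate fallback for lower-power Maxwell parts.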

5. Learn More about Programming Maxwell

For more architecture details and guidance on optimizing your code for Maxwell, I encourage you to check out the Maxwell Tuning Guide and Maxwell Compatibility Guide, which are available now to CUDA Registered Developers.
