Setting up a Spark Java development environment in Eclipse on Windows

Environment:

Windows 10

JDK 1.8

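Take the Spark package you previously installed on your virtual machine or cluster and extract it to a local directory of your choice. Mine, for example, is D:\Hadoop\spark-1.6.0-bin-hadoop2.6.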

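Note: the Spark version I had installed on Linux was spark-2.2.0-bin-hadoop2.6. When I set up the Eclipse development environment, however, I found that the extracted spark-2.2.0-bin-hadoop2.6 has no lib directory, and therefore no assembly jar like the key spark-assembly-1.6.0-hadoop2.6.0.jar. I wasn't sure how Spark 2.2.0 and later support development in Eclipse, so I switched to spark-1.6.0. If anyone knows, please leave a comment below.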

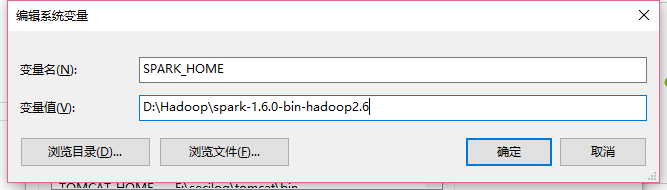

The rest is straightforward. First configure the Spark environment variables, starting with a new SPARK_HOME variable:

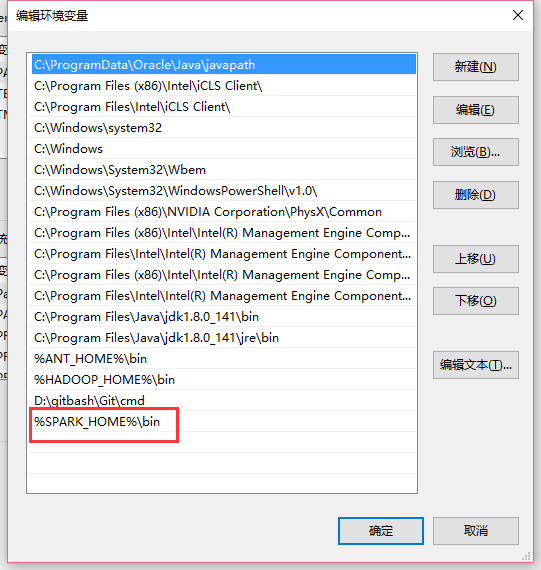

Then add SPARK_HOME to Path:

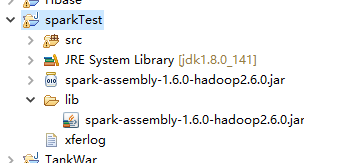
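Before creating the project, you may want to confirm that SPARK_HOME points at a distribution that actually contains the assembly jar used in the next step. The sketch below is a hypothetical helper (SparkHomeCheck and findAssemblyJar are names made up for illustration). It assumes the Spark 1.x layout, where the jar sits in the lib directory as spark-assembly-*.jar; Spark 2.x distributions ship individual jars in a jars directory instead, so the check finds nothing for them.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Iterator;
import java.util.Optional;

public class SparkHomeCheck {

    // Returns the first lib/spark-assembly-*.jar under sparkHome (Spark 1.x layout), if any.
    static Optional<Path> findAssemblyJar(Path sparkHome) throws IOException {
        Path lib = sparkHome.resolve("lib");
        if (!Files.isDirectory(lib)) {
            return Optional.empty(); // no lib directory, e.g. a Spark 2.x distribution
        }
        // Glob filter: only file names matching spark-assembly-*.jar are listed
        try (DirectoryStream<Path> jars = Files.newDirectoryStream(lib, "spark-assembly-*.jar")) {
            Iterator<Path> it = jars.iterator();
            if (it.hasNext()) {
                return Optional.of(it.next());
            }
            return Optional.empty();
        }
    }

    public static void main(String[] args) throws IOException {
        String sparkHome = System.getenv("SPARK_HOME");
        if (sparkHome == null || sparkHome.isEmpty()) {
            System.out.println("SPARK_HOME is not set");
            return;
        }
        Optional<Path> jar = findAssemblyJar(Paths.get(sparkHome));
        if (jar.isPresent()) {
            System.out.println("Found assembly jar: " + jar.get());
        } else {
            System.out.println("No spark-assembly-*.jar in " + Paths.get(sparkHome, "lib"));
        }
    }
}
```

A program started from an Eclipse instance that was already running when SPARK_HOME was added will not see the new variable, so restart Eclipse before running a check like this.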

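That completes the environment setup. Next, create an ordinary Java project in Eclipse, copy spark-assembly-1.6.0-hadoop2.6.0.jar into the project and add it to the build path, as shown in the figure below.

You can now develop Spark programs. Here is a small test program: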

    package cn.spark.test;

    import java.util.Arrays;

    import org.apache.spark.SparkConf;
    import org.apache.spark.api.java.JavaPairRDD;
    import org.apache.spark.api.java.JavaRDD;
    import org.apache.spark.api.java.JavaSparkContext;
    import org.apache.spark.api.java.function.FlatMapFunction;
    import org.apache.spark.api.java.function.Function2;
    import org.apache.spark.api.java.function.PairFunction;
    import org.apache.spark.api.java.function.VoidFunction;

    import scala.Tuple2;

    public class WordCount {

        public static void main(String[] args) {
            // Run locally with a single worker thread; the application name is "wc"
            SparkConf conf = new SparkConf().setMaster("local").setAppName("wc");
            JavaSparkContext sc = new JavaSparkContext(conf);

            // Read the input file from HDFS, one RDD element per line
            JavaRDD<String> text = sc.textFile("hdfs://master:9000/user/hadoop/input/test");

            // Split each line into words (Spark 1.x signature: call returns an Iterable;
            // in Spark 2.x it returns an Iterator instead)
            JavaRDD<String> words = text.flatMap(new FlatMapFunction<String, String>() {
                private static final long serialVersionUID = 1L;

                @Override
                public Iterable<String> call(String line) throws Exception {
                    return Arrays.asList(line.split(" ")); // convert the word array into a List
                }
            });

            // Map each word to a (word, 1) pair
            JavaPairRDD<String, Integer> pairs = words.mapToPair(new PairFunction<String, String, Integer>() {
                private static final long serialVersionUID = 1L;

                @Override
                public Tuple2<String, Integer> call(String word) throws Exception {
                    return new Tuple2<String, Integer>(word, 1);
                }
            });

            // Sum the counts for each word
            JavaPairRDD<String, Integer> results = pairs.reduceByKey(new Function2<Integer, Integer, Integer>() {
                private static final long serialVersionUID = 1L;

                @Override
                public Integer call(Integer value1, Integer value2) throws Exception {
                    return value1 + value2;
                }
            });

            // Swap to (count, word) so the pairs can be sorted by count
            JavaPairRDD<Integer, String> temp = results.mapToPair(new PairFunction<Tuple2<String, Integer>, Integer, String>() {
                private static final long serialVersionUID = 1L;

                @Override
                public Tuple2<Integer, String> call(Tuple2<String, Integer> tuple) throws Exception {
                    return new Tuple2<Integer, String>(tuple._2, tuple._1);
                }
            });

            // Sort by count in descending order, then swap back to (word, count)
            JavaPairRDD<String, Integer> sorted = temp.sortByKey(false).mapToPair(new PairFunction<Tuple2<Integer, String>, String, Integer>() {
                private static final long serialVersionUID = 1L;

                @Override
                public Tuple2<String, Integer> call(Tuple2<Integer, String> tuple) throws Exception {
                    return new Tuple2<String, Integer>(tuple._2, tuple._1);
                }
            });

            // Print each result (with master "local" the output appears in the Eclipse console)
            sorted.foreach(new VoidFunction<Tuple2<String, Integer>>() {
                private static final long serialVersionUID = 1L;

                @Override
                public void call(Tuple2<String, Integer> tuple) throws Exception {
                    System.out.println("word:" + tuple._1 + " count:" + tuple._2);
                }
            });

            sc.close();
        }
    }
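To see what the pipeline computes without a cluster, the following plain-Java sketch (no Spark involved; LocalWordCount and countWords are hypothetical names) applies the same steps to an in-memory list of lines: split on spaces, pair each word with 1, sum per word, then order by count descending. It only illustrates the logic and does not replace the Spark job. One difference: ties keep their first-seen order here, whereas Spark's sortByKey makes no such guarantee.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class LocalWordCount {

    // Same steps as the Spark job, applied to an in-memory list of lines
    static List<Map.Entry<String, Integer>> countWords(List<String> lines) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String line : lines) {                   // textFile: one element per line
            for (String word : line.split(" ")) {     // flatMap: split on single spaces
                counts.merge(word, 1, Integer::sum);  // mapToPair (word, 1) + reduceByKey(+)
            }
        }
        // sortByKey(false) on (count, word): order by count, descending (stable for ties)
        List<Map.Entry<String, Integer>> sorted = new ArrayList<>(counts.entrySet());
        sorted.sort((a, b) -> Integer.compare(b.getValue(), a.getValue()));
        return sorted;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("the old maid", "the old story");
        for (Map.Entry<String, Integer> e : countWords(lines)) {
            System.out.println("word:" + e.getKey() + " count:" + e.getValue());
        }
    }
}
```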

The file being counted contains the following text:

    Look! at the window there leans an old maid. She plucks the
    withered leaf from the balsam, and looks at the grass-covered rampart,
    on which many children are playing. What is the old maid thinking
    of? A whole life drama is unfolding itself before her inward gaze.
    "The poor little children, how happy they are- how merrily they
    play and romp together! What red cheeks and what angels' eyes! but
    they have no shoes nor stockings. They dance on the green rampart,
    just on the place where, according to the old story, the ground always
    sank in, and where a sportive, frolicsome child had been lured by
    means of flowers, toys and sweetmeats into an open grave ready dug for
    it, and which was afterwards closed over the child; and from that
    moment, the old story says, the ground gave way no longer, the mound
    remained firm and fast, and was quickly covered with the green turf.
    The little people who now play on that spot know nothing of the old
    tale, else would they fancy they heard a child crying deep below the
    earth, and the dewdrops on each blade of grass would be to them
    tears of woe. Nor do they know anything of the Danish King who here,
    in the face of the coming foe, took an oath before all his trembling
    courtiers that he would hold out with the citizens of his capital, and
    die here in his nest; they know nothing of the men who have fought
    here, or of the women who from here have drenched with boiling water
    the enemy, clad in white, and 'biding in the snow to surprise the
    city.

Of course, you can also package the project as a jar and run it on the Spark cluster. For example, if the jar is named WordCount.jar, run:

    /usr/local/spark/bin/spark-submit --master local --class cn.spark.test.WordCount /home/hadoop/Desktop/WordCount.jar
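One thing to watch when the same class is run both from Eclipse and through spark-submit: the example hardcodes setMaster("local") and the HDFS input path, and properties set directly on a SparkConf take precedence over spark-submit flags, so a different --master (for example a cluster URL) would be ignored. Below is a minimal sketch of a hypothetical helper (JobSettings and its methods are made up for illustration) that takes the input path from the first program argument and falls back to "local" only when no master was supplied. It assumes spark-submit hands --master to the driver as the spark.master system property, which new SparkConf() reads.

```java
import java.util.Properties;

public class JobSettings {

    // Input path used in the example when no argument is given
    static final String DEFAULT_INPUT = "hdfs://master:9000/user/hadoop/input/test";

    // The first command-line argument, if present, overrides the default input path
    static String inputPath(String[] args) {
        return args.length > 0 ? args[0] : DEFAULT_INPUT;
    }

    // Use the master supplied by the launcher (spark.master), else "local" for Eclipse runs
    static String master(Properties sysProps) {
        return sysProps.getProperty("spark.master", "local");
    }

    public static void main(String[] args) {
        System.out.println("master=" + master(System.getProperties()) + ", input=" + inputPath(args));
    }
}
```

In WordCount this would become `new SparkConf().setAppName("wc").setMaster(JobSettings.master(System.getProperties()))` and `sc.textFile(JobSettings.inputPath(args))`, and the spark-submit command could then pass an input path after the jar name.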
