Two kinds of item classification model architecture

Introduction:

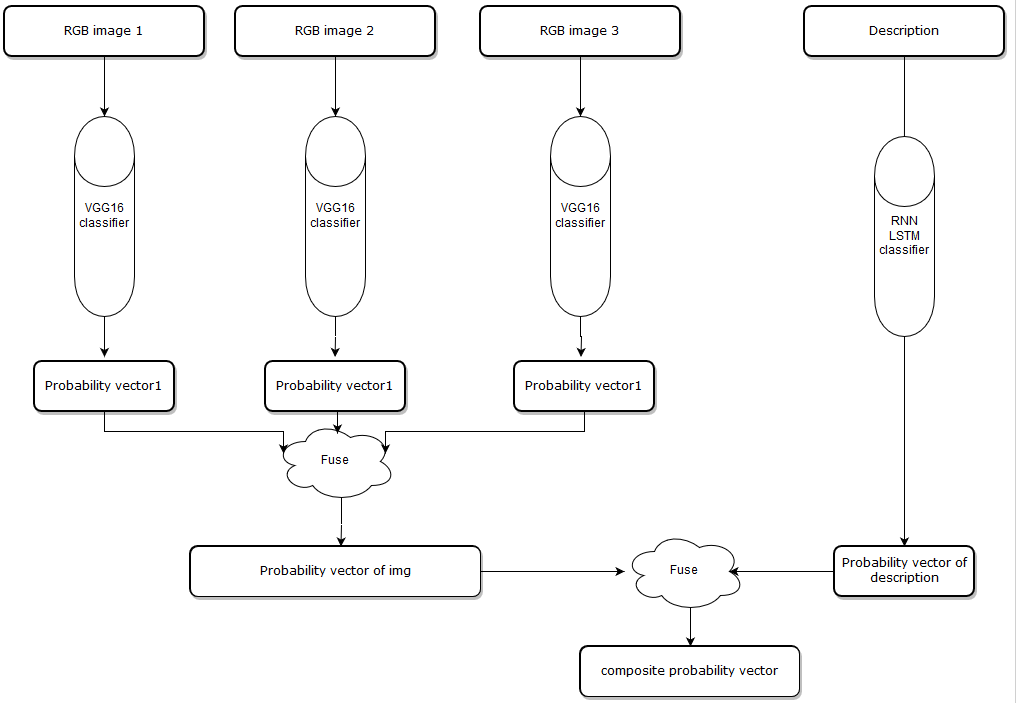

Introduction to Fusing-Probability model:

The input has two parts: the item images (anywhere from 1 to N per item) and the item description, which consists of several words. The main idea of the Fusing-Probability model is to predict on each of these inputs separately, using two classifiers. On the image side, we use VGG16, a convolutional neural network architecture, to produce a probability vector for each image. On the description side, we train an LSTM network to classify the text features. I then use an algorithm to combine all of the probability vectors into a single composite probability vector. More about the algorithm can be found here: https://github.com/solitaire2015/Machine-Learning-kernels/blob/master/composite-score/Composite_Score_Solution.ipynb.
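To make the fusing step concrete, here is a minimal sketch of one generic late-fusion rule: a normalized product of the per-input probabilities, computed in log space. This is an assumption for illustration only and is not the composite-score algorithm from the notebook linked above. The function name `fuse_probabilities`, the `eps` floor, and the example probabilities are all hypothetical.

```python
import math


def fuse_probabilities(vectors, eps=1e-9):
    """Fuse per-input probability vectors with a normalized product rule.

    Generic late-fusion rule for illustration only; it is NOT the
    composite-score algorithm from the linked notebook.
    """
    if not vectors:
        raise ValueError("need at least one probability vector")
    n_classes = len(vectors[0])
    if any(len(v) != n_classes for v in vectors):
        raise ValueError("all vectors must have the same length")
    # Product rule in log space: sum the log-probabilities per class.
    # eps keeps log() finite when a classifier outputs exactly 0.
    log_scores = [
        sum(math.log(max(v[c], eps)) for v in vectors)
        for c in range(n_classes)
    ]
    # Renormalize so the fused scores sum to 1 (shift by max for stability).
    top = max(log_scores)
    weights = [math.exp(s - top) for s in log_scores]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical classifier outputs for one item over 3 classes.
image_probs = [[0.6, 0.3, 0.1], [0.5, 0.4, 0.1]]  # one vector per image
text_probs = [0.7, 0.2, 0.1]                      # one vector for the description
fused = fuse_probabilities(image_probs + [text_probs])
predicted_class = max(range(len(fused)), key=fused.__getitem__)
```

Because the rule only sums over the input vectors, it accepts any number of images and does not depend on their order, which are the two properties the Fusing-Probability design relies on.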

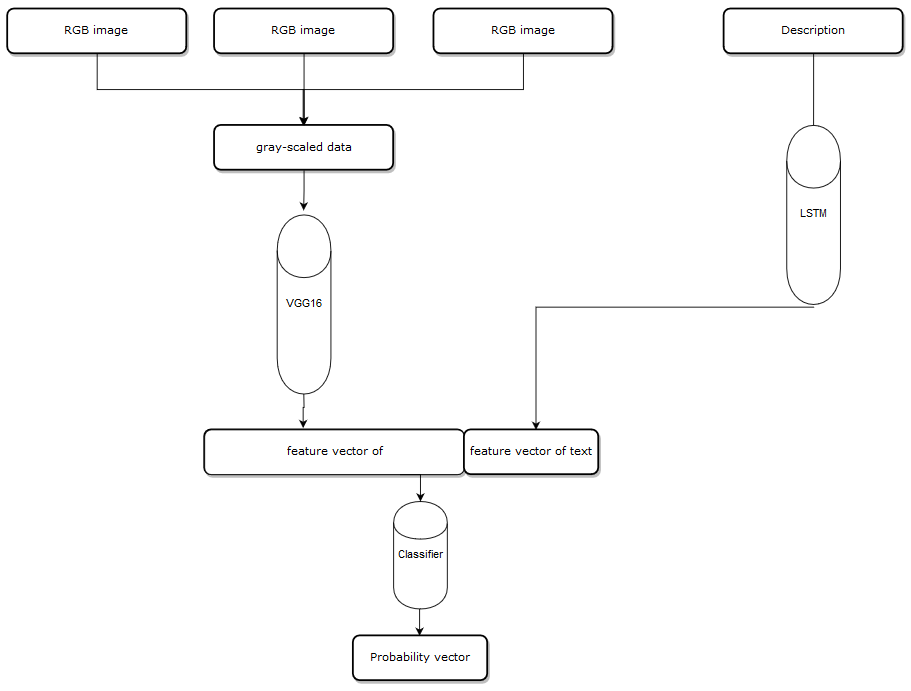

Introduction to Multi-Task model:

The main difference between the two models is that the Multi-Task model combines all features first and feeds them into a single classifier. First, I take the first 3 images and convert them to gray-scale. An RGB image has shape (width, height, 3) because it has three channels, while a gray-scale image has shape (width, height) because it has only one channel. I stack the three gray-scale images into one data block of shape (width, height, 3). We then feed this block into the VGG16 model, but instead of taking a probability vector we take the feature vector after the last pooling layer, which gives us the image features. On the description side, as before, we feed the description into the LSTM model, but we take the text feature vector from before the point where the model produces a probability vector. Finally, we combine the two feature vectors and pass the result to a classifier to get the final probability vector.
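The image preparation step can be sketched in plain Python as follows. Several details here are assumptions, since the post does not specify them: the gray-scale conversion uses the common BT.601 luma weights, items with fewer than three images are padded with all-zero channels, and images are represented as nested lists of `(r, g, b)` pixels stored row by row. The helper names `to_gray` and `stack_first_three` are hypothetical. In practice this would normally be done with array operations in a library such as NumPy.

```python
def to_gray(rgb_image):
    """Convert rows of (r, g, b) pixels into rows of single gray values."""
    # Assumption: BT.601 luma weights; the post does not name a conversion.
    return [
        [0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
        for row in rgb_image
    ]


def stack_first_three(rgb_images):
    """Stack the first three images, as gray-scale, into one 3-channel block."""
    grays = [to_gray(img) for img in rgb_images[:3]]
    if not grays:
        raise ValueError("need at least one image")
    height, width = len(grays[0]), len(grays[0][0])
    if any(len(g) != height or len(g[0]) != width for g in grays):
        raise ValueError("all images must have the same size")
    # Assumption: pad with all-zero channels when fewer than 3 images exist.
    while len(grays) < 3:
        grays.append([[0.0] * width for _ in range(height)])
    # block[y][x] holds one gray value per source image, so channel i
    # of the block is the gray-scale version of image i.
    return [
        [[g[y][x] for g in grays] for x in range(width)]
        for y in range(height)
    ]


# Two tiny hypothetical 2x2 images: one pure red, one pure white.
red = [[(255, 0, 0)] * 2 for _ in range(2)]
white = [[(255, 255, 255)] * 2 for _ in range(2)]
block = stack_first_three([red, white])
```

The channel order follows the image order, which is why this architecture is sensitive to which image comes first.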

Multi-Task model architecture:

Fusing-Probability model architecture:

Validation:

I tested the two architectures on the same data set, which has 10 classes:

'Desktop_CPU',

'Server_CPU',

'Desktop_Memory',

'Server_Memory',

'AllInOneComputer',

'CellPhone',

'USBFlashDrive',

'Keyboard',

'Mouse',

'NoteBooks',

The data set contains 5487 items. The Fusing-Probability model reaches 93.87% accuracy and the Multi-Task model reaches 93.38% accuracy. The figures below show the differences between the two architectures.

1. Items that the model predicts correctly, and the probability distribution.

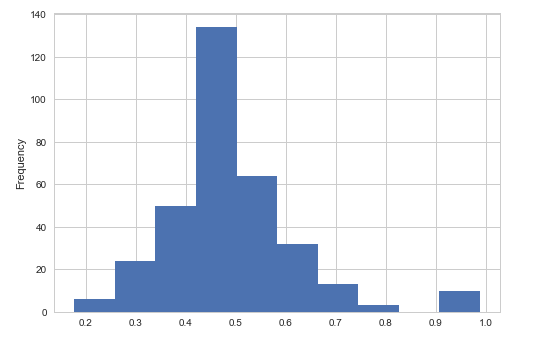

Fusing-Probability Multi-Task

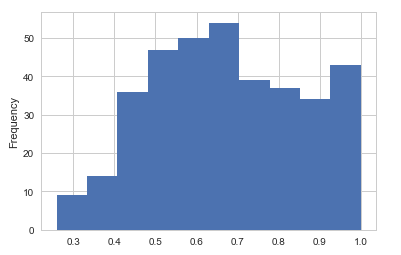

2. Items that the model predicts incorrectly, and the probability distribution.

Fusing-Probability Multi-Task

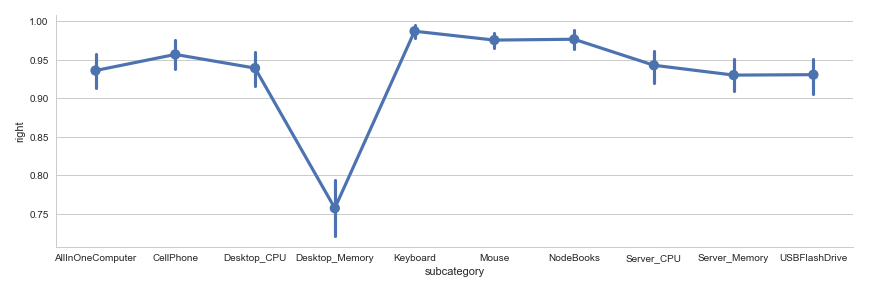

3. Fraction map of the accuracy on each class.

Fusing-Probability Multi-Task

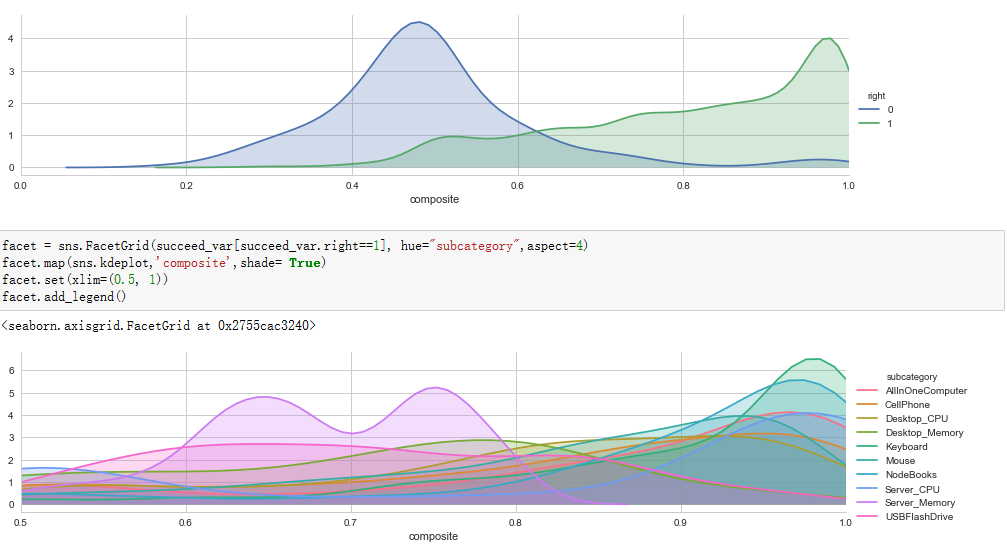

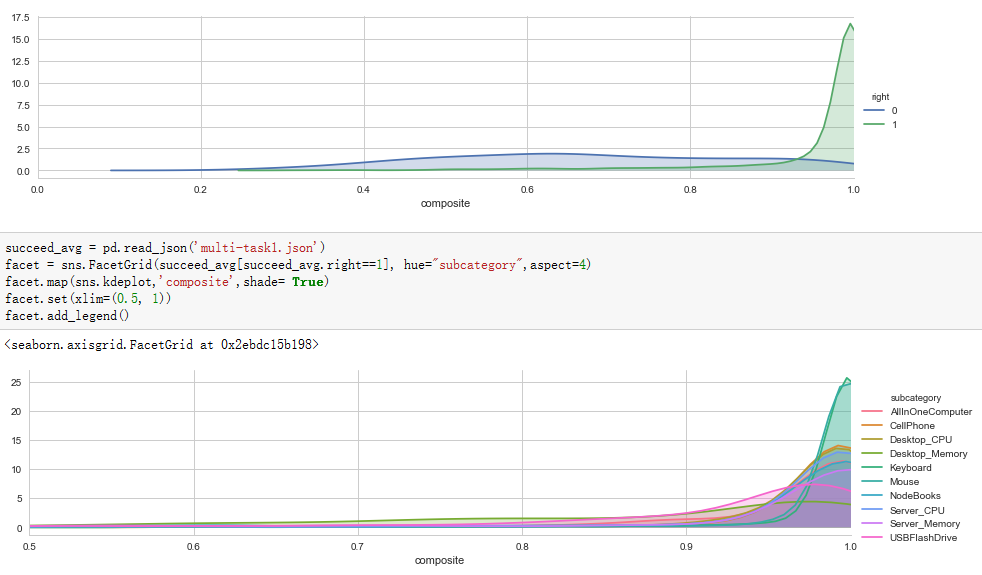

4. Curve figure.

Fusing-Probability Multi-Task

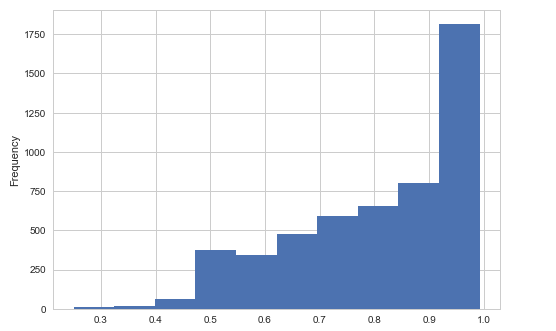

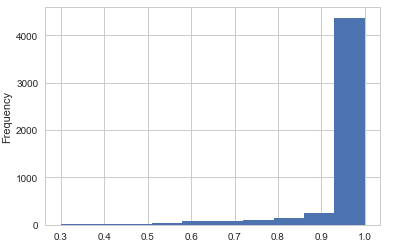

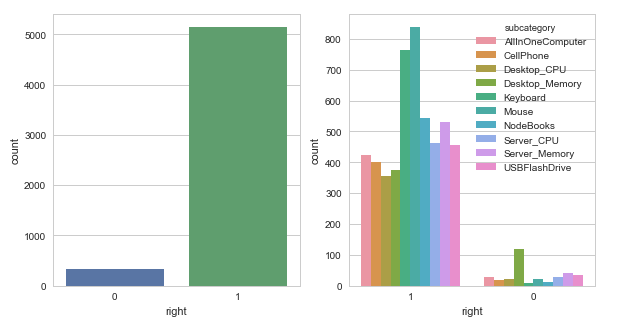

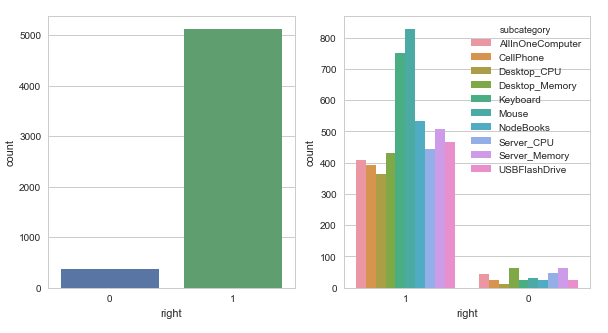

5. Histogram.

Fusing-Probability

Multi-Task

Analysis of the figures:

First, let's look at the first pair of figures. The left panel is the histogram of the probabilities of the Fusing-Probability model's correct predictions, and the right panel is the same histogram for the Multi-Task model. Most correct answers have a probability greater than 0.9 in the Multi-Task model, but in the Fusing-Probability model some correct answers have a lower probability. From this point of view, the Multi-Task model is better.

Second, the second pair of figures shows the histogram of the probabilities of the wrong predictions. For the Fusing-Probability model, the probabilities are concentrated around 0.5. I should mention that some wrong results get a high probability, greater than 0.9. These are counted as wrong, but the data is not very clean and contains some misclassified items. In the right panel, we can't find any such pattern. So the Fusing-Probability model is better.

Third, the fraction map shows each model's accuracy on each class. Desktop memory has the lowest accuracy in both models, because for some items it is really hard to say whether they are desktop memory or server memory, even for a person. On the other classes, the Fusing-Probability model has higher accuracy than the Multi-Task model, so Fusing-Probability is better.
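For reference, per-class accuracy of the kind plotted in the fraction map can be computed as in the sketch below. The function name `per_class_accuracy` and the example labels are hypothetical and are not taken from the actual data set.

```python
from collections import Counter


def per_class_accuracy(true_labels, predicted_labels):
    """Return {class: fraction of that class's items predicted correctly}."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    totals = Counter(true_labels)
    correct = Counter(
        t for t, p in zip(true_labels, predicted_labels) if t == p
    )
    # Counter returns 0 for classes that were never predicted correctly.
    return {label: correct[label] / totals[label] for label in totals}


# Hypothetical labels, only to show the shape of the result.
y_true = ["Mouse", "Mouse", "Desktop_Memory", "Desktop_Memory", "Keyboard"]
y_pred = ["Mouse", "Mouse", "Server_Memory", "Desktop_Memory", "Keyboard"]
accuracy_by_class = per_class_accuracy(y_true, y_pred)
```

Here the made-up desktop-memory item that is predicted as server memory lowers only that class's accuracy, which mirrors the kind of confusion described above.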

Fourth, the pair of curve figures shows the correct and wrong predictions. We can easily find a reasonable threshold from the left panel: because most wrong predictions have a probability of about 0.5, we can use a threshold of 0.63 to divide the model's predictions into a "certain" group and a "not sure" group. For the Multi-Task model, we can't find a very meaningful threshold. So the Fusing-Probability model is better.
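Applying such a threshold is straightforward. The sketch below splits predictions at 0.63 into the two groups. The function name `split_by_confidence`, the use of `>=` at the boundary, and the example predictions are assumptions for illustration.

```python
def split_by_confidence(predictions, threshold=0.63):
    """Split (label, probability) pairs into certain and not-sure groups."""
    certain, not_sure = [], []
    for label, prob in predictions:
        # Assumption: a probability exactly at the threshold counts as certain.
        if prob >= threshold:
            certain.append((label, prob))
        else:
            not_sure.append((label, prob))
    return certain, not_sure


# Hypothetical top-1 predictions with their fused probabilities.
preds = [("Mouse", 0.97), ("Desktop_Memory", 0.52), ("Keyboard", 0.63)]
certain, not_sure = split_by_confidence(preds)
```

Items in the not-sure group could then be routed to manual review, while the certain group is accepted automatically.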

Fifth, the pair of histograms shows that the Fusing-Probability model gets higher accuracy. Apart from the desktop-memory class, the model works fine. The Mouse and Keyboard classes have the most correct predictions because we have more samples for these two classes.

Overall, the Fusing-Probability model works better than the Multi-Task model.

Pros & cons:

Multi-Task model:

Easy to train, and it gives a higher probability when it predicts correctly. But when we have a very limited data set, we can't do data augmentation on it, because there is no good way to produce more text data. If we only augment the images, the text becomes more important and the images become useless. The model overfits easily. The image input is a set of images, so their order should not influence the result, but this model treats the first image as more important. We can also use at most 3 images, so we lose some information if an item has more than 3. Finally, because we convert the RGB images to gray-scale, we may miss some important features.

Fusing-Probability model:

We can do data augmentation separately, because we train different models on the image input and the text input. We can find a more reasonable threshold for each class. Based on the probability-fusing algorithm, we can use any number of images, and the model doesn't consider the order of the images. The model is more flexible.
