Spark + Kafka: a small example

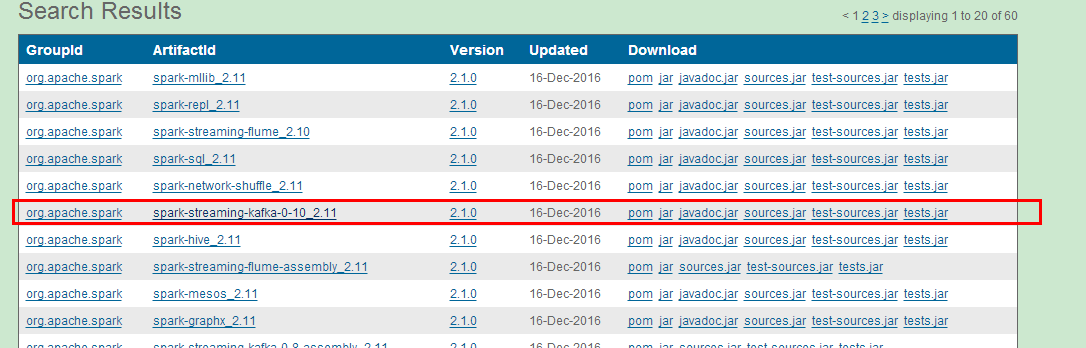

(1) Download the Kafka jar

package com.sparkstreaming

import org.apache.spark.SparkConf
import org.apache.spark.streaming.Seconds
import org.apache.spark.streaming.StreamingContext
import org.apache.spark.streaming.kafka010.KafkaUtils
import org.apache.spark.streaming.kafka010.LocationStrategies.PreferConsistent
import org.apache.spark.streaming.kafka010.ConsumerStrategies.Subscribe
import org.apache.kafka.common.serialization.StringDeserializer

object SparkStreamKaflaWordCount {

  def main(args: Array[String]): Unit = {
    // Create the StreamingContext
    val conf = new SparkConf().setMaster("spark://192.168.177.120:7077")
      .setAppName("SparkStreamKaflaWordCount Demo")
    val ssc = new StreamingContext(conf, Seconds())

    // Topics to subscribe to
    //var topic=Map{"test" -> 1}
    val topic = Array("test")

    // Specify ZooKeeper
    // Create the consumer group
    val group = "con-consumer-group"

    // Consumer configuration
    val kafkaParam = Map[String, Object](
      // Addresses used for the initial connection to the cluster
      "bootstrap.servers" -> "192.168.177.120:9092,anotherhost:9092",
      "key.deserializer" -> classOf[StringDeserializer],
      "value.deserializer" -> classOf[StringDeserializer],
      // Identifies the consumer group this consumer belongs to
      "group.id" -> group,
      // Applies when there is no initial offset, or the current offset no longer
      // exists on any server; "latest" resets the offset to the newest one
      "auto.offset.reset" -> "latest",
      // If true, this consumer's offsets are committed automatically in the background
      "enable.auto.commit" -> (false: java.lang.Boolean)
    )

    // Create the DStream that returns the received input data
    val stream = KafkaUtils.createDirectStream[String, String](
      ssc, PreferConsistent, Subscribe[String, String](topic, kafkaParam))

    // Each element of the stream is a ConsumerRecord
    stream.map(s => (s.key(), s.value())).print()

    ssc.start()
    ssc.awaitTermination()
  }
}
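The object is named as a word count, but the job above only prints each record's key and value. A minimal sketch of the counting step follows, written against plain Scala collections so it runs without a cluster. `WordCountSketch` and `countWords` are hypothetical names that do not appear in the original. On the stream itself, the same shape would presumably be `stream.map(_.value).flatMap(_.split(" ")).map((_, 1)).reduceByKey(_ + _).print()`.

```scala
object WordCountSketch {
  // Count words across the message values of one batch.
  // Mirrors flatMap -> (word, 1) -> reduce by key, but on a local Seq.
  def countWords(values: Seq[String]): Map[String, Int] =
    values
      .flatMap(_.split("\\s+"))   // split each message on whitespace
      .filter(_.nonEmpty)         // drop empty tokens (e.g. from blank messages)
      .groupBy(identity)          // group identical words together
      .map { case (word, occurrences) => word -> occurrences.size }

  def main(args: Array[String]): Unit = {
    // Sample values standing in for ConsumerRecord.value() of one batch
    val batch = Seq("zhang xing sheng", "zhang xing")
    countWords(batch).toSeq.sortBy(_._1).foreach(println)
  }
}
```

Keeping the counting logic in a plain function makes it easy to check locally before wiring it into the streaming job.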

# The number of milliseconds of each tick

tickTime=

# The number of ticks that the initial

# synchronization phase can take

initLimit=

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=

# the directory where the snapshot is stored.

dataDir=/home/zhangxs/datainfo/developmentData/zookeeper/zkdata1

# the port at which the clients will connect

clientPort=

server.=zhangxs::

zkServer.sh start zoo1.cfg

[bin/kafka-server-start.sh config/server.properties]

[root@zhangxs kafka_2.]# bin/kafka-server-start.sh config/server.properties

[-- ::,] INFO KafkaConfig values:

advertised.host.name = null

advertised.listeners = null

advertised.port = null

authorizer.class.name =

auto.create.topics.enable = true

auto.leader.rebalance.enable = true

background.threads =

broker.id =

broker.id.generation.enable = true

broker.rack = null

compression.type = producer

connections.max.idle.ms =

controlled.shutdown.enable = true

controlled.shutdown.max.retries =

controlled.shutdown.retry.backoff.ms =

controller.socket.timeout.ms =

create.topic.policy.class.name = null

default.replication.factor =

delete.topic.enable = false

fetch.purgatory.purge.interval.requests =

group.max.session.timeout.ms =

group.min.session.timeout.ms =

host.name =

inter.broker.listener.name = null

inter.broker.protocol.version = 0.10.-IV0

leader.imbalance.check.interval.seconds =

[root@zhangxs kafka_2.]# bin/kafka-console-producer.sh --broker-list 192.168.177.120: --topic test

./spark-submit --class com.sparkstreaming.SparkStreamKaflaWordCount /usr/local/development/spark-2.0/jars/streamkafkademo.jar

zhang xing sheng

// :: INFO cluster.CoarseGrainedSchedulerBackend$DriverEndpoint: Launching task on executor id: hostname: 192.168.177.120.

// :: INFO storage.BlockManagerInfo: Added broadcast_99_piece0 in memory on 192.168.177.120: (size: 1913.0 B, free: 366.3 MB)

// :: INFO scheduler.TaskSetManager: Finished task 0.0 in stage 99.0 (TID ) in ms on 192.168.177.120 (/)

// :: INFO scheduler.TaskSchedulerImpl: Removed TaskSet 99.0, whose tasks have all completed, from pool

// :: INFO scheduler.DAGScheduler: ResultStage (print at SparkStreamKaflaWordCount.scala:) finished in 0.019 s

// :: INFO scheduler.DAGScheduler: Job finished: print at SparkStreamKaflaWordCount.scala:, took 0.023450 s

-------------------------------------------

Time: ms

-------------------------------------------

(null,zhang xing sheng)
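The key prints as `null` because `kafka-console-producer.sh` sends records without a key unless it is configured to parse one. Below is a small sketch of guarding against that before further processing. `NullKeySketch`, `describe`, and the `<no key>` placeholder are illustrative choices, not part of the original job.

```scala
object NullKeySketch {
  // Render a (key, value) pair, treating a null key as absent.
  def describe(key: String, value: String): String = {
    val safeKey = Option(key).getOrElse("<no key>") // Option(null) is None
    s"($safeKey,$value)"
  }

  def main(args: Array[String]): Unit = {
    println(describe(null, "zhang xing sheng"))
    println(describe("k1", "zhang xing sheng"))
  }
}
```

In the streaming job this could be applied as `stream.map(r => NullKeySketch.describe(r.key, r.value)).print()`, assuming the helper is packaged in the same application jar.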

<dependency>
  <groupId>org.apache.spark</groupId>
  <artifactId>spark-streaming_2.11</artifactId>
  <version>2.1.0</version>
</dependency>

(2) Submitting the Spark application throws class-not-found errors

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/kafka/common/serialization/StringDeserializer

at com.sparkstreaming.SparkStreamKaflaWordCount$.main(SparkStreamKaflaWordCount.scala:)

at com.sparkstreaming.SparkStreamKaflaWordCount.main(SparkStreamKaflaWordCount.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

------------------------------------------------------------------------

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/spark/streaming/kafka010/KafkaUtils$

at com.sparkstreaming.SparkStreamKaflaWordCount$.main(SparkStreamKaflaWordCount.scala:)

at com.sparkstreaming.SparkStreamKaflaWordCount.main(SparkStreamKaflaWordCount.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
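Both traces name classes that live outside the `spark-streaming` artifact: `KafkaUtils` comes from `spark-streaming-kafka-0-10` and `StringDeserializer` from `kafka-clients`. A likely cause, stated here as an assumption, is that those jars were not on the driver's classpath at submit time. Bundling them into the application jar or passing them with `spark-submit --jars` are the usual remedies. The sketch below is a hypothetical pre-flight check (`ClasspathCheck` and `missingClasses` are not in the original) that reports which of the required classes cannot be loaded.

```scala
import scala.util.Try

object ClasspathCheck {
  // Classes named in the two stack traces above
  val required: Seq[String] = Seq(
    "org.apache.kafka.common.serialization.StringDeserializer",
    "org.apache.spark.streaming.kafka010.KafkaUtils$"
  )

  // Return the names that cannot be loaded from the current classpath.
  // initialize = false loads the class without running its static initializers;
  // an absent class raises ClassNotFoundException, which Try captures.
  def missingClasses(names: Seq[String]): Seq[String] =
    names.filter { name =>
      Try(Class.forName(name, false, getClass.getClassLoader)).isFailure
    }

  def main(args: Array[String]): Unit = {
    val missing = missingClasses(required)
    if (missing.isEmpty) println("all required classes found")
    else missing.foreach(n => println(s"missing: $n"))
  }
}
```

Running this with the same classpath as the application shows, before the job starts, whether the Kafka integration jars still need to be added.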
