Multi-Dimensional Recurrent Neural Networks

Multi-Dimensional Recurrent Neural Networks

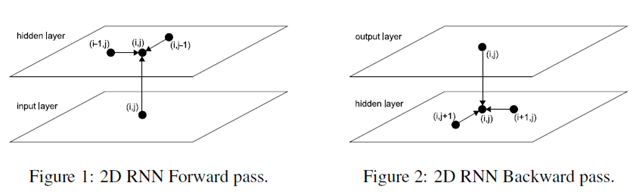

The basic idea of MDRNNs is to replace the single recurrent connection found in standard RNNs with as many recurrent connections as there are dimensions in the data. During the forward pass, at each point in the data sequence, the hidden layer of the network receives both an external input and its own activations from one step back along all dimensions.

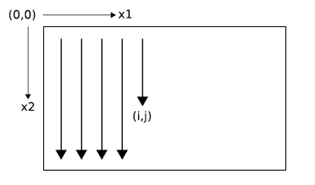

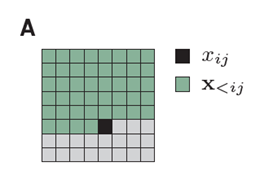

Clearly, the data must be processed in such a way that when the network reaches a poin in an n-dimensional sequence, it has already passed through all the points from which it will rechive its previous activations.

2D sequence ordering. The MDRNN forword pass starts at the origin and follows the direction of the arrows. The point(I,j) is never reached before both (i-1,j) and (i,j-1)

The forward pass of an MDRNN can then be carried out by feeding forward the input and the n previous hidden layer activations at each point in the ordered input sequence, and storing the resulting hidden layer activations at each point in the ordered input sequence, and storing the resulting hidden layer activations. Care must be taken at the sequence boundaries not to feed forward activations from points outside the sequence.

Note that each 'point' in the input sequence will in general be a multivalued vector. For example, in a two dimensional color image, the inputs could be single pixels represented by RGB triples, or blocks of pixels, or the outputs of a preprocessing method such as a discrete cosine transform.

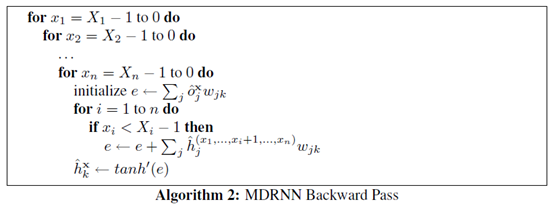

The error gradient of an MDRNN(that is, the derivative of some objective function with respect to the network weights) can be calculated with an n-dimensional extension of the backpropagation through time(BPTT) algorithm. As with one dimensional BPTT, the sequence is processed in the reverse order of the forward pass. At each timestep, the hidden layer receives both the output error derivatives and its own n 'future' derivatives. Figure 2 illustrates the BPTT backword pass for two dimensions. Again, care must be taken at the sequence boundaries.

At a point in an n-dimensional sequence, define and respectively as the activations of the input unit and the hidden unit. Define as the weight of the connection going from unit j to unit k. Then for an n-dimensional MDRNN whose hidden layer consists of summation units with the tanh activation function, the forward pass for a sequence with dimensions can be sumarised as follows:

Defining and respectively as the derivatives of the objective function with respect to the activations of the output unit and the hidden unit at point x, the backward pass is:

Since the forward and backward pass require one pass each through the data sequence, the overall complexity of MDRNN training is linear in the number of data points and the unmber of network weights.

Multi-directional MDRNNs

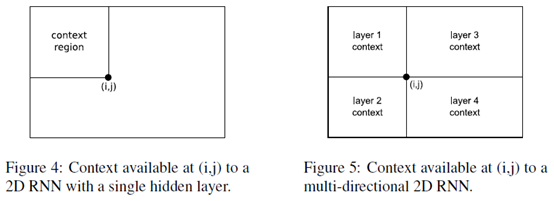

For one dimensional RNNs, the problem of multi-directional context was solved in 1997 by the introduction of bidirectional recurrent neural networks (BRNNs). BRNNs contain two separate hidden layers that process the input sequence in the forward and reverse directions. The two hidden layers are connected to a single output layer, thereby providing the network with access to both past and future context.

BRNNs can be extended to n-dimensional data by using separate hidden layers, each of which processes the sequence using the ordering defined above, but with a different choice of axes.

More specifically, the axes are chosen so that their origins lie on the the vertices of the sequence. The 2 dimensional case is illustrated in Figure 6.

As before, the hidden layers are connected to a single output layer, which now has access to all surrounding context.

If the size of the hidden layers is held constan, multi-direcitonal MDRNNs scales as O() for n-dimensional data. In practive however, we have found that using small layers gives better results than 1 large layer with the same overall number of weights, presumably because the data processing is shared between the hidden layers. This also holds in one dimension, as previous experiments have demonstrated. In any case the complexity of the algorithm remains linear in the number of data points and the number of parameters, and the number of parameters is independent of the data dimensionality.

For a multi-directional MDRNN, the forward and backward passes through an n-dimensional sequence can be summarized as follows:

MCGSM

We factorize the distribution of images such that the prediction of a pixel(black) may depend on any pixel in the upper-left green region.

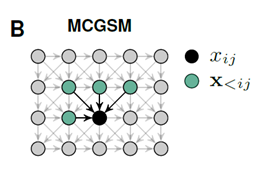

A graphical model representation of an MCGSM with a causal neighborhood limited to a small region.

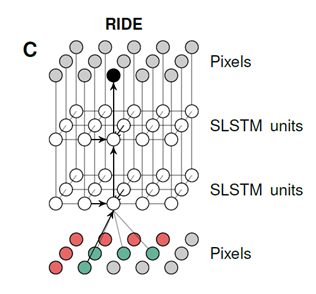

A visualization of our recurrent iamge model with two layers of spatial LSTMs. The pixels of the image are represented twice and some arrows are omitted for clarity. Through feedforward connections, the prediction of a pixel depends directly on its neighborhood(gre-e),but through recurrent connections it hs access to the information in a much larger region(r-ed).

Multi-Dimensional Recurrent Neural Networks的更多相关文章

- The Unreasonable Effectiveness of Recurrent Neural Networks (RNN)

http://karpathy.github.io/2015/05/21/rnn-effectiveness/ There’s something magical about Recurrent Ne ...

- 循环神经网络(RNN, Recurrent Neural Networks)介绍(转载)

循环神经网络(RNN, Recurrent Neural Networks)介绍 这篇文章很多内容是参考:http://www.wildml.com/2015/09/recurrent-neur ...

- Attention and Augmented Recurrent Neural Networks

Attention and Augmented Recurrent Neural Networks CHRIS OLAHGoogle Brain SHAN CARTERGoogle Brain Sep ...

- cs231n spring 2017 lecture10 Recurrent Neural Networks 听课笔记

(没太听明白,下次重新听一遍) 1. Recurrent Neural Networks

- 第十四章——循环神经网络(Recurrent Neural Networks)(第一部分)

由于本章过长,分为两个部分,这是第一部分. 这几年提到RNN,一般指Recurrent Neural Networks,至于翻译成循环神经网络还是递归神经网络都可以.wiki上面把Recurrent ...

- 第十四章——循环神经网络(Recurrent Neural Networks)(第二部分)

本章共两部分,这是第二部分: 第十四章--循环神经网络(Recurrent Neural Networks)(第一部分) 第十四章--循环神经网络(Recurrent Neural Networks) ...

- Pixel Recurrent Neural Networks翻译

Pixel Recurrent Neural Networks 目前主要在用的文档存放: https://www.yuque.com/lart/papers/prnn github存档: https: ...

- 循环神经网络(Recurrent Neural Networks, RNN)介绍

目录 1 什么是RNNs 2 RNNs能干什么 2.1 语言模型与文本生成Language Modeling and Generating Text 2.2 机器翻译Machine Translati ...

- 《转》循环神经网络(RNN, Recurrent Neural Networks)学习笔记:基础理论

转自 http://blog.csdn.net/xingzhedai/article/details/53144126 更多参考:http://blog.csdn.net/mafeiyu80/arti ...

随机推荐

- servlet config

<webapp> <!--servlet是指编写的Servlet的路径,以及定义别名--> <servlet> <servlet-name>test&l ...

- PHP中调用SVN命令更新网站方法(解决文件名包含中文更新失败的问题)

想说写一个通过网页就可以执行 SVN 升级的程序,结果并不是我想得那样简单,有一些眉角需要注意的说. 先以 Apache 的用户帐号执行 SVN checkout,这样 Apache 才有 SVN 的 ...

- 工作方法-scrum+番茄工作法

1.产品和开发团队近期的工作分析和安排,使用scrum. 产品的工作:通过product backlog来列出 开发团队近期的工作安排:通过sprint backlog来列出,由个人认领,并估算(优先 ...

- python+selenium之断言Assertion

一.断言方法 断言是对自动化测试异常情况的判断. # -*- coding: utf-8 -*- from selenium import webdriver import unittest impo ...

- 【LeetCode】9 Palindrome Number 回文数判定

题目: Determine whether an integer is a palindrome. Do this without extra space. Some hints: Could neg ...

- Java 守护线程(Daemon) 例子

当我们在Java中创建一个线程,缺省状态下它是一个User线程,如果该线程运行,JVM不会终结该程序.当一个线被标记为守护线程,JVM不会等待其结束,只要所有用户(User)线程都结束,JVM将终结程 ...

- POJ 4020 NEERC John's inversion 贪心+归并求逆序对

题意:给你n张卡,每张卡上有蓝色和红色的两种数字,求一种排列使得对应颜色数字之间形成的逆序对总数最小 题解:贪心,先按蓝色排序,数字相同再按红色排,那么蓝色数字的逆序总数为0,考虑交换红色的数字消除逆 ...

- cv2.solvepnp 相机的位姿估计

预备知识 图像坐标系: 理想的图像坐标系原点O1和真实的O0有一定的偏差,由此我们建立了等式(1)和(2),可以用矩阵形式(3)表示. 相机坐标系(C)和世界坐标系(W): 通过相机与图像的投 ...

- [CV笔记]图像特征提取三大法宝:HOG特征,LBP特征,Haar特征

(一)HOG特征 1.HOG特征: 方向梯度直方图(Histogram of Oriented Gradient, HOG)特征是一种在计算机视觉和图像处理中用来进行物体检测的特征描述子.它通过计算和 ...

- VS开发软winform软件的更改用户使用权限

在使用软件的过程中,我们经常需要使用的软件拥有管理员权限,在开发的过程中,本人就遇到了应为权限不足的问题导致软件不能正常使用的状况. 在此我来记录我遇到的问题. 为开发的软件赋予管理员权限 https ...