Installing and Configuring Kafka: Single Node and Cluster

I. Single node

1. Upload the Kafka installation package to the Linux system (CentOS 7 in this walkthrough).

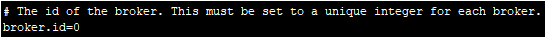

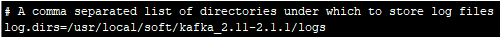

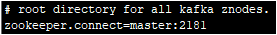

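2. Extract the archive and edit config/server.properties.

2.1 Set broker.id (a unique integer ID for this broker)

2.2 Set log.dirs (the directory where Kafka stores its data files)

2.3 Set zookeeper.connect (the host:port connection string for ZooKeeper)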

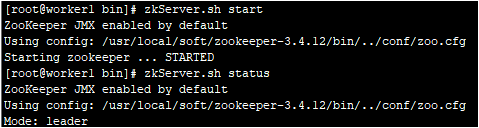
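The three settings above can also be applied from the shell. The following is only a minimal sketch: `set_prop` is a hypothetical helper, not part of Kafka, and the values in the usage comment (broker id `0`, a placeholder log path, `master:2181`) are assumptions that need to be adapted to your environment.

```shell
#!/bin/sh
# set_prop FILE KEY VALUE (hypothetical helper, not part of Kafka):
# drop any existing "KEY=..." line from FILE, then append "KEY=VALUE" at the end.
set_prop() {
    file=$1 key=$2 value=$3
    tmp="$file.tmp.$$"
    # Keep every line that does not start with "KEY=" (commented lines are kept).
    awk -v k="$key" 'index($0, k "=") != 1 { print }' "$file" > "$tmp"
    # Append the new definition.
    printf '%s=%s\n' "$key" "$value" >> "$tmp"
    mv "$tmp" "$file"
}

# Example usage (all values are assumptions):
#   set_prop config/server.properties broker.id 0
#   set_prop config/server.properties log.dirs /path/to/kafka/logs
#   set_prop config/server.properties zookeeper.connect master:2181
```

Matching on the exact prefix `KEY=` means that setting `broker.id` leaves a line such as `broker.id.generation.enable=true` untouched.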

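3. Start the ZooKeeper cluster

Note: while the ZooKeeper cluster is starting, the nodes started first may report "not running" because too few nodes are up to form a quorum. This is normal, and the message goes away once the remaining nodes have been started.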
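To check whether a node has joined the quorum, you can look at the output of `zkServer.sh status`. The sketch below is a hypothetical helper (`zk_mode`) that assumes the status output contains a `Mode: ...` line once the node is serving, and no such line while it is still "not running". The exact wording of the status output may differ between ZooKeeper versions.

```shell
#!/bin/sh
# zk_mode (hypothetical helper): read `zkServer.sh status` output on stdin and
# print the node's mode, or "not-running" if no "Mode:" line is present.
zk_mode() {
    mode=$(sed -n 's/^Mode: *//p' | head -n 1)
    if [ -n "$mode" ]; then
        printf '%s\n' "$mode"
    else
        printf 'not-running\n'
    fi
}

# Example usage on each ZooKeeper node, after the other nodes have been started:
#   zkServer.sh status 2>&1 | zk_mode
```

Running this on every node after startup should show one leader and the rest as followers. A node that still prints `not-running` at that point is worth investigating.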

3. Start the Kafka service

Run: ./kafka-server-start.sh ../config/server.properties &
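With a trailing `&` the broker stays attached to the current shell and its log is printed straight into the terminal. As an alternative, the start script accepts a `-daemon` option that runs the broker in the background. The wrapper below is only a sketch: `start_kafka` is a hypothetical name and the install path is a placeholder.

```shell
#!/bin/sh
# start_kafka KAFKA_HOME (hypothetical wrapper): start the broker detached from
# the terminal using the start script's -daemon option instead of a trailing "&".
start_kafka() {
    kafka_home=$1
    "$kafka_home/bin/kafka-server-start.sh" -daemon "$kafka_home/config/server.properties"
}

# Example usage (the path is a placeholder):
#   start_kafka /path/to/kafka
```

In daemon mode the startup messages go to the broker's log files rather than the terminal, so check there if the broker does not come up.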

Startup log:

[root@master bin]# ./kafka-server-start.sh ../config/server.properties &

[]

[root@master bin]# [-- ::,] INFO Registered kafka:type=kafka.Log4jController MBean (kafka.utils.Log4jControllerRegistration$)

[-- ::,] INFO starting (kafka.server.KafkaServer)

[-- ::,] INFO Connecting to zookeeper on master: (kafka.server.KafkaServer)

[-- ::,] INFO [ZooKeeperClient] Initializing a new session to master:. (kafka.zookeeper.ZooKeeperClient)

[-- ::,] INFO Client environment:zookeeper.version=3.4.-2d71af4dbe22557fda74f9a9b4309b15a7487f03, built on // : GMT (org.apache.zookeeper.ZooKeeper)

[-- ::,] INFO Client environment:host.name=master (org.apache.zookeeper.ZooKeeper)

[-- ::,] INFO Client environment:java.version=1.8.0_172 (org.apache.zookeeper.ZooKeeper)

[-- ::,] INFO Client environment:java.vendor=Oracle Corporation (org.apache.zookeeper.ZooKeeper)

[-- ::,] INFO Client environment:java.home=/usr/local/soft/jdk1..0_172/jre (org.apache.zookeeper.ZooKeeper)

[-- ::,] INFO Client environment:java.class.path=.:/usr/local/soft/jdk1..0_172/jre/lib/rt.jar:/usr/local/soft/jdk1..0_172/lib/dt.jar:/usr/local/soft/jdk1..0_172/lib/tools.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/activation-1.1..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/aopalliance-repackaged-2.5.-b42.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/argparse4j-0.7..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/audience-annotations-0.5..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/commons-lang3-3.8..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/connect-api-2.1..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/connect-basic-auth-extension-2.1..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/connect-file-2.1..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/connect-json-2.1..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/connect-runtime-2.1..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/connect-transforms-2.1..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/guava-20.0.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/hk2-api-2.5.-b42.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/hk2-locator-2.5.-b42.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/hk2-utils-2.5.-b42.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jackson-annotations-2.9..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jackson-core-2.9..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jackson-databind-2.9..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jackson-jaxrs-base-2.9..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jackson-jaxrs-json-provider-2.9..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jackson-module-jaxb-annotations-2.9..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/javassist-3.22.-CR2.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/javax.annotation-api-1.2.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/javax.inject-.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/javax.inject-2.5.-b42.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/javax.servlet-api-3.1..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/javax.ws.rs-api
-2.1..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/javax.ws.rs-api-2.1.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jaxb-api-2.3..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jersey-client-2.27.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jersey-common-2.27.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jersey-container-servlet-2.27.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jersey-container-servlet-core-2.27.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jersey-hk2-2.27.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jersey-media-jaxb-2.27.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jersey-server-2.27.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jetty-client-9.4..v20180830.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jetty-continuation-9.4..v20180830.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jetty-http-9.4..v20180830.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jetty-io-9.4..v20180830.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jetty-security-9.4..v20180830.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jetty-server-9.4..v20180830.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jetty-servlet-9.4..v20180830.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jetty-servlets-9.4..v20180830.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jetty-util-9.4..v20180830.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/jopt-simple-5.0..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/kafka_2.-2.1..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/kafka_2.-2.1.-sources.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/kafka-clients-2.1..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/kafka-log4j-appender-2.1..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/kafka-streams-2.1..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/kafka-streams-examples-2.1..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/kafka-streams-scala_2.-2.1..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/kafka-streams-test-utils-2.1..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/kafka-tools-2.1..jar:/usr/local/soft/kafka_2.-2.1./bi
n/../libs/log4j-1.2..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/lz4-java-1.5..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/maven-artifact-3.6..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/metrics-core-2.2..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/osgi-resource-locator-1.0..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/plexus-utils-3.1..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/reflections-0.9..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/rocksdbjni-5.14..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/scala-library-2.11..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/scala-logging_2.-3.9..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/scala-reflect-2.11..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/slf4j-api-1.7..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/slf4j-log4j12-1.7..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/snappy-java-1.1.7.2.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/validation-api-1.1..Final.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/zkclient-0.11.jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/zookeeper-3.4..jar:/usr/local/soft/kafka_2.-2.1./bin/../libs/zstd-jni-1.3.-.jar (org.apache.zookeeper.ZooKeeper)

[-- ::,] INFO Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib (org.apache.zookeeper.ZooKeeper)

[-- ::,] INFO Client environment:java.io.tmpdir=/tmp (org.apache.zookeeper.ZooKeeper)

[-- ::,] INFO Client environment:java.compiler=<NA> (org.apache.zookeeper.ZooKeeper)

[-- ::,] INFO Client environment:os.name=Linux (org.apache.zookeeper.ZooKeeper)

[-- ::,] INFO Client environment:os.arch=amd64 (org.apache.zookeeper.ZooKeeper)

[-- ::,] INFO Client environment:os.version=3.10.-.el7.x86_64 (org.apache.zookeeper.ZooKeeper)

[-- ::,] INFO Client environment:user.name=root (org.apache.zookeeper.ZooKeeper)

[-- ::,] INFO Client environment:user.home=/root (org.apache.zookeeper.ZooKeeper)

[-- ::,] INFO Client environment:user.dir=/usr/local/soft/kafka_2.-2.1./bin (org.apache.zookeeper.ZooKeeper)

[-- ::,] INFO Initiating client connection, connectString=master: sessionTimeout= watcher=kafka.zookeeper.ZooKeeperClient$ZooKeeperClientWatcher$@351d00c0 (org.apache.zookeeper.ZooKeeper)

[-- ::,] INFO Opening socket connection to server master/192.168.245.136:. Will not attempt to authenticate using SASL (unknown error) (org.apache.zookeeper.ClientCnxn)

[-- ::,] INFO [ZooKeeperClient] Waiting until connected. (kafka.zookeeper.ZooKeeperClient)

[-- ::,] INFO Socket connection established to master/192.168.245.136:, initiating session (org.apache.zookeeper.ClientCnxn)

[-- ::,] INFO Session establishment complete on server master/192.168.245.136:, sessionid = 0x1000023437d0000, negotiated timeout = (org.apache.zookeeper.ClientCnxn)

[-- ::,] INFO [ZooKeeperClient] Connected. (kafka.zookeeper.ZooKeeperClient)

[-- ::,] INFO Cluster ID = 1AkrnNRhRiW9PWHA77R9lA (kafka.server.KafkaServer)

[-- ::,] WARN No meta.properties file under dir /usr/local/soft/kafka_2.-2.1./logs/meta.properties (kafka.server.BrokerMetadataCheckpoint)

[-- ::,] INFO KafkaConfig values:

advertised.host.name = null

advertised.listeners = null

advertised.port = null

alter.config.policy.class.name = null

alter.log.dirs.replication.quota.window.num =

alter.log.dirs.replication.quota.window.size.seconds =

authorizer.class.name =

auto.create.topics.enable = true

auto.leader.rebalance.enable = true

background.threads =

broker.id =

broker.id.generation.enable = true

broker.rack = null

client.quota.callback.class = null

compression.type = producer

connection.failed.authentication.delay.ms =

connections.max.idle.ms =

controlled.shutdown.enable = true

controlled.shutdown.max.retries =

controlled.shutdown.retry.backoff.ms =

controller.socket.timeout.ms =

create.topic.policy.class.name = null

default.replication.factor =

delegation.token.expiry.check.interval.ms =

delegation.token.expiry.time.ms =

delegation.token.master.key = null

delegation.token.max.lifetime.ms =

delete.records.purgatory.purge.interval.requests =

delete.topic.enable = true

fetch.purgatory.purge.interval.requests =

group.initial.rebalance.delay.ms =

group.max.session.timeout.ms =

group.min.session.timeout.ms =

host.name =

inter.broker.listener.name = null

inter.broker.protocol.version = 2.1-IV2

kafka.metrics.polling.interval.secs =

kafka.metrics.reporters = []

leader.imbalance.check.interval.seconds =

leader.imbalance.per.broker.percentage =

listener.security.protocol.map = PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

listeners = null

log.cleaner.backoff.ms =

log.cleaner.dedupe.buffer.size =

log.cleaner.delete.retention.ms =

log.cleaner.enable = true

log.cleaner.io.buffer.load.factor = 0.9

log.cleaner.io.buffer.size =

log.cleaner.io.max.bytes.per.second = 1.7976931348623157E308

log.cleaner.min.cleanable.ratio = 0.5

log.cleaner.min.compaction.lag.ms =

log.cleaner.threads =

log.cleanup.policy = [delete]

log.dir = /tmp/kafka-logs

log.dirs = /usr/local/soft/kafka_2.-2.1./logs

log.flush.interval.messages =

log.flush.interval.ms = null

log.flush.offset.checkpoint.interval.ms =

log.flush.scheduler.interval.ms =

log.flush.start.offset.checkpoint.interval.ms =

log.index.interval.bytes =

log.index.size.max.bytes =

log.message.downconversion.enable = true

log.message.format.version = 2.1-IV2

log.message.timestamp.difference.max.ms =

log.message.timestamp.type = CreateTime

log.preallocate = false

log.retention.bytes = -

log.retention.check.interval.ms =

log.retention.hours =

log.retention.minutes = null

log.retention.ms = null

log.roll.hours =

log.roll.jitter.hours =

log.roll.jitter.ms = null

log.roll.ms = null

log.segment.bytes =

log.segment.delete.delay.ms =

max.connections.per.ip =

max.connections.per.ip.overrides =

max.incremental.fetch.session.cache.slots =

message.max.bytes =

metric.reporters = []

metrics.num.samples =

metrics.recording.level = INFO

metrics.sample.window.ms =

min.insync.replicas =

num.io.threads =

num.network.threads =

num.partitions =

num.recovery.threads.per.data.dir =

num.replica.alter.log.dirs.threads = null

num.replica.fetchers =

offset.metadata.max.bytes =

offsets.commit.required.acks = -

offsets.commit.timeout.ms =

offsets.load.buffer.size =

offsets.retention.check.interval.ms =

offsets.retention.minutes =

offsets.topic.compression.codec =

offsets.topic.num.partitions =

offsets.topic.replication.factor =

offsets.topic.segment.bytes =

password.encoder.cipher.algorithm = AES/CBC/PKCS5Padding

password.encoder.iterations =

password.encoder.key.length =

password.encoder.keyfactory.algorithm = null

password.encoder.old.secret = null

password.encoder.secret = null

port =

principal.builder.class = null

producer.purgatory.purge.interval.requests =

queued.max.request.bytes = -

queued.max.requests =

quota.consumer.default =

quota.producer.default =

quota.window.num =

quota.window.size.seconds =

replica.fetch.backoff.ms =

replica.fetch.max.bytes =

replica.fetch.min.bytes =

replica.fetch.response.max.bytes =

replica.fetch.wait.max.ms =

replica.high.watermark.checkpoint.interval.ms =

replica.lag.time.max.ms =

replica.socket.receive.buffer.bytes =

replica.socket.timeout.ms =

replication.quota.window.num =

replication.quota.window.size.seconds =

request.timeout.ms =

reserved.broker.max.id =

sasl.client.callback.handler.class = null

sasl.enabled.mechanisms = [GSSAPI]

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin =

sasl.kerberos.principal.to.local.rules = [DEFAULT]

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.login.callback.handler.class = null

sasl.login.class = null

sasl.login.refresh.buffer.seconds =

sasl.login.refresh.min.period.seconds =

sasl.login.refresh.window.factor = 0.8

sasl.login.refresh.window.jitter = 0.05

sasl.mechanism.inter.broker.protocol = GSSAPI

sasl.server.callback.handler.class = null

security.inter.broker.protocol = PLAINTEXT

socket.receive.buffer.bytes =

socket.request.max.bytes =

socket.send.buffer.bytes =

ssl.cipher.suites = []

ssl.client.auth = none

ssl.enabled.protocols = [TLSv1., TLSv1., TLSv1]

ssl.endpoint.identification.algorithm = https

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLS

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

transaction.abort.timed.out.transaction.cleanup.interval.ms =

transaction.max.timeout.ms =

transaction.remove.expired.transaction.cleanup.interval.ms =

transaction.state.log.load.buffer.size =

transaction.state.log.min.isr =

transaction.state.log.num.partitions =

transaction.state.log.replication.factor =

transaction.state.log.segment.bytes =

transactional.id.expiration.ms =

unclean.leader.election.enable = false

zookeeper.connect = master:

zookeeper.connection.timeout.ms =

zookeeper.max.in.flight.requests =

zookeeper.session.timeout.ms =

zookeeper.set.acl = false

zookeeper.sync.time.ms =

(kafka.server.KafkaConfig)

[-- ::,] INFO [ThrottledChannelReaper-Fetch]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[-- ::,] INFO [ThrottledChannelReaper-Produce]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[-- ::,] INFO [ThrottledChannelReaper-Request]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[-- ::,] INFO Loading logs. (kafka.log.LogManager)

[-- ::,] INFO Logs loading complete in ms. (kafka.log.LogManager)

[-- ::,] INFO Starting log cleanup with a period of ms. (kafka.log.LogManager)

[-- ::,] INFO Starting log flusher with a default period of ms. (kafka.log.LogManager)

[-- ::,] INFO Awaiting socket connections on 0.0.0.0:. (kafka.network.Acceptor)

[-- ::,] INFO [SocketServer brokerId=] Started acceptor threads (kafka.network.SocketServer)

[-- ::,] INFO [ExpirationReaper--Produce]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[-- ::,] INFO [ExpirationReaper--Fetch]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[-- ::,] INFO [ExpirationReaper--DeleteRecords]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[-- ::,] INFO [LogDirFailureHandler]: Starting (kafka.server.ReplicaManager$LogDirFailureHandler)

[-- ::,] INFO Creating /brokers/ids/ (is it secure? false) (kafka.zk.KafkaZkClient)

[-- ::,] INFO Result of znode creation at /brokers/ids/ is: OK (kafka.zk.KafkaZkClient)

[-- ::,] INFO Registered broker at path /brokers/ids/ with addresses: ArrayBuffer(EndPoint(master,,ListenerName(PLAINTEXT),PLAINTEXT)) (kafka.zk.KafkaZkClient)

[-- ::,] WARN No meta.properties file under dir /usr/local/soft/kafka_2.-2.1./logs/meta.properties (kafka.server.BrokerMetadataCheckpoint)

[-- ::,] INFO [ExpirationReaper--topic]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[-- ::,] INFO [ExpirationReaper--Heartbeat]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[-- ::,] INFO [ExpirationReaper--Rebalance]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[-- ::,] INFO Successfully created /controller_epoch with initial epoch (kafka.zk.KafkaZkClient)

[-- ::,] INFO [GroupCoordinator ]: Starting up. (kafka.coordinator.group.GroupCoordinator)

[-- ::,] INFO [GroupCoordinator ]: Startup complete. (kafka.coordinator.group.GroupCoordinator)

[-- ::,] INFO [GroupMetadataManager brokerId=] Removed expired offsets in milliseconds. (kafka.coordinator.group.GroupMetadataManager)

[-- ::,] INFO [ProducerId Manager ]: Acquired new producerId block (brokerId:,blockStartProducerId:,blockEndProducerId:) by writing to Zk with path version (kafka.coordinator.transaction.ProducerIdManager)

[-- ::,] INFO [TransactionCoordinator id=] Starting up. (kafka.coordinator.transaction.TransactionCoordinator)

[-- ::,] INFO [TransactionCoordinator id=] Startup complete. (kafka.coordinator.transaction.TransactionCoordinator)

[-- ::,] INFO [Transaction Marker Channel Manager ]: Starting (kafka.coordinator.transaction.TransactionMarkerChannelManager)

[-- ::,] INFO [/config/changes-event-process-thread]: Starting (kafka.common.ZkNodeChangeNotificationListener$ChangeEventProcessThread)

[-- ::,] INFO [SocketServer brokerId=] Started processors for acceptors (kafka.network.SocketServer)

[-- ::,] INFO Kafka version : 2.1. (org.apache.kafka.common.utils.AppInfoParser)

[-- ::,] INFO Kafka commitId : 21234bee31165527 (org.apache.kafka.common.utils.AppInfoParser)

[-- ::,] INFO [KafkaServer id=] started (kafka.server.KafkaServer)

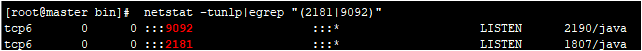

Port check:

Port 9092 is Kafka's listening port, and 2181 is the port used by ZooKeeper.
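The port check can be scripted by filtering the output of `ss -ltn` (or `netstat -tln`). This is a minimal sketch under the assumption that the tool prints the local address in the fourth column, as both of these do. `is_listening` is a hypothetical helper name.

```shell
#!/bin/sh
# is_listening PORT (hypothetical helper): read `ss -ltn` or `netstat -tln`
# output on stdin and succeed if some socket is listening on PORT.
# Both tools print the local address (for example 0.0.0.0:9092) in column 4.
is_listening() {
    awk -v suffix=":$1" '
        # Compare the tail of column 4 with ":PORT" so that 19092 does not match 9092.
        substr($4, length($4) - length(suffix) + 1) == suffix { found = 1 }
        END { exit !found }
    '
}

# Example usage:
#   ss -ltn | is_listening 9092 && echo "Kafka is listening"
#   ss -ltn | is_listening 2181 && echo "ZooKeeper is listening"
```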

4. Single-node connectivity test

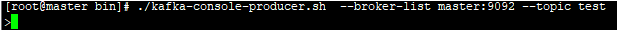

4.1 Start the producer

4.2 Start the consumer

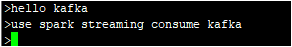

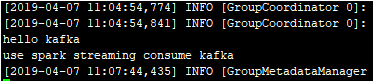

4.3 Test

The producer produces data:

The consumer consumes data:
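The producer and consumer test can also be run non-interactively with the console tools shipped in Kafka's bin directory. The sketch below makes several assumptions: `round_trip` is a hypothetical helper, the topic name `demo` is made up, the broker listens on port 9092 as noted above, and the topic is created automatically on first use (the startup log shows `auto.create.topics.enable = true`). The options used are the ones the Kafka 2.1 console tools accept. Newer releases may differ.

```shell
#!/bin/sh
# round_trip BIN_DIR HOST TOPIC MESSAGE (hypothetical helper): send one message
# with the console producer, then read one message back with the console consumer.
round_trip() {
    bin=$1 host=$2 topic=$3 msg=$4
    # Producer: reads lines from stdin and sends each line as one message.
    printf '%s\n' "$msg" |
        "$bin/kafka-console-producer.sh" --broker-list "$host:9092" --topic "$topic"
    # Consumer: read from the start of the topic and stop after one message.
    "$bin/kafka-console-consumer.sh" --bootstrap-server "$host:9092" \
        --topic "$topic" --from-beginning --max-messages 1
}

# Example usage (path, host, and topic are assumptions):
#   round_trip /path/to/kafka/bin master demo "hello kafka"
```

If the message printed by the consumer matches the one that was sent, the single-node broker is accepting and serving data.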

II. Kafka cluster setup

To be continued...
